Fast interpolation of multivariate polynomials with sparse exponents

Preliminary version of May 2, 2024

This work has been partly supported by the French ANR-22-CE48-0016 NODE project.


This article has been written using GNU TeXmacs [24].


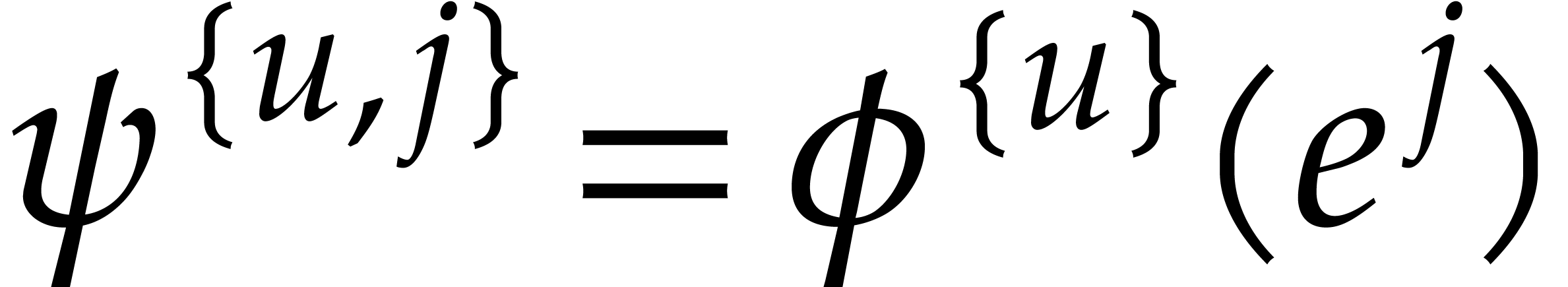

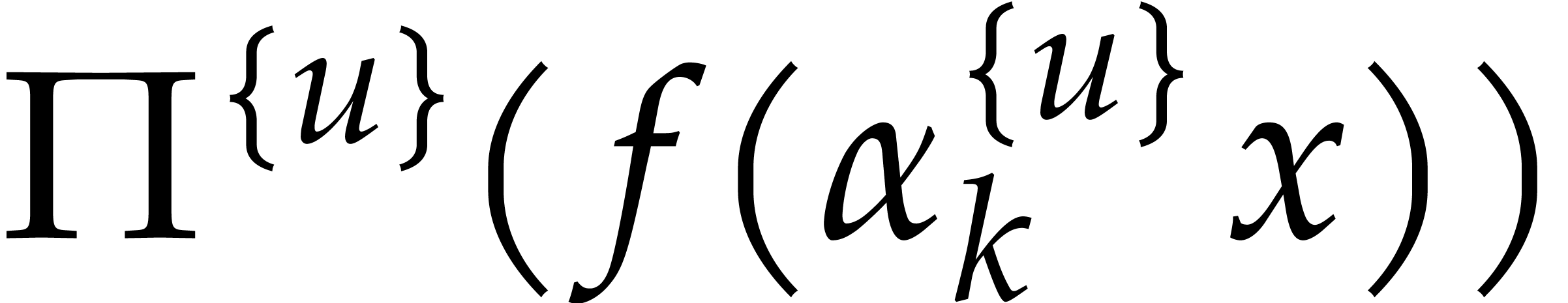

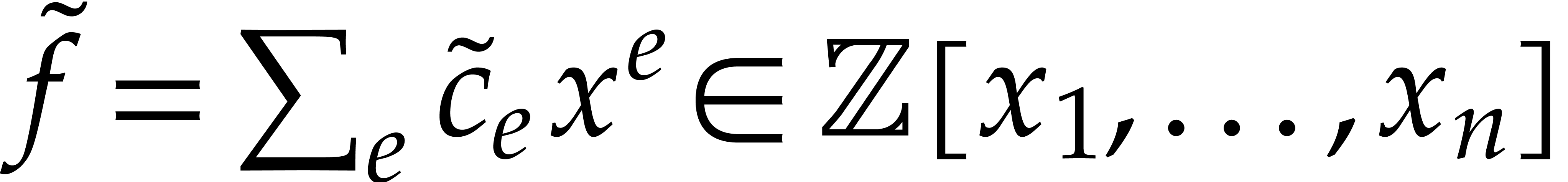


Consider a multivariate integer polynomial $f \in \mathbb{Z}[x_1, \ldots, x_n]$.

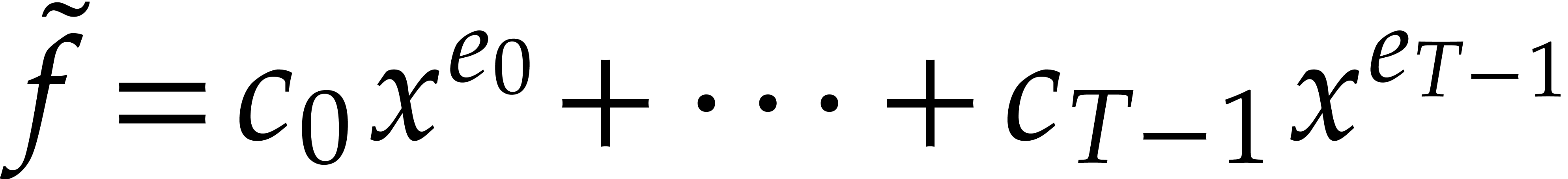

Then $f$ can uniquely be written as a sum

$$f = c_1 x^{e_1} + \cdots + c_t x^{e_t}, \qquad (1.1)$$

where $c_1, \ldots, c_t \in \mathbb{Z} \setminus \{0\}$ and $e_1, \ldots, e_t \in \mathbb{N}^n$ are pairwise distinct. Here we understand that $x^{e_k} = x_1^{e_{k,1}} \cdots x_n^{e_{k,n}}$ for any $e_k = (e_{k,1}, \ldots, e_{k,n}) \in \mathbb{N}^n$. We call (1.1) the sparse representation of $f$.

In this paper, we assume that $f$ is not explicitly given through its sparse representation and that we only have a program for evaluating $f$. The goal of sparse interpolation is to recover the sparse representation of $f$.

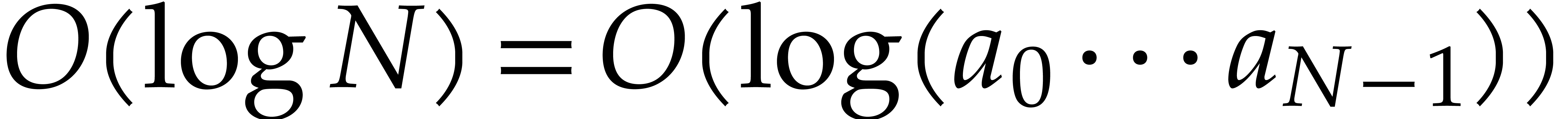

Theoretically speaking, we could simply evaluate $f$ at a single point  with  for some sufficiently large positive integer  . Then the sparse representation of $f$ can directly be read off from the binary digits of  . However, the bit-complexity of this method is terrible, since the bit-size of  typically becomes huge.
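To make the single-point idea concrete, here is a minimal sketch under simplifying assumptions of our own, since the actual evaluation point is lost in this copy: all coefficients are non-negative and below $2^B$, every exponent entry is below $D$, and $f$ is evaluated at $x_i = 2^{B D^{i-1}}$. Each monomial then occupies its own $B$-bit slot, so the terms can be read off the binary digits without carries. The names `pack_eval` and `unpack` are hypothetical.

```python
def pack_eval(terms, B, D):
    """Evaluate f at x_i = 2**(B * D**(i-1)) exactly, using Python big integers.

    terms maps exponent tuples (entries in [0, D)) to coefficients in [0, 2**B).
    """
    value = 0
    for e, c in terms.items():
        # x^e equals 2**(B*k) with slot index k = sum_i e_i * D**(i-1)
        k = sum(ei * D**i for i, ei in enumerate(e))
        value += c << (B * k)
    return value


def unpack(value, n, B, D):
    """Read the terms back off the B-bit digits of value (no carries occur)."""
    terms = {}
    mask = (1 << B) - 1
    k = 0
    while value:
        c = value & mask
        if c:
            # Recover e from the base-D digits of the slot index k
            e, r = [], k
            for _ in range(n):
                r, d = divmod(r, D)
                e.append(d)
            terms[tuple(e)] = c
        value >>= B
        k += 1
    return terms


f = {(2, 1): 3, (0, 3): 5}  # 3*x1^2*x2 + 5*x2^3
v = pack_eval(f, B=3, D=4)
assert unpack(v, n=2, B=3, D=4) == f
print(v.bit_length())  # 39
```

Even for this tiny $f$ with two 3-bit coefficients the evaluation needs 39 bits, and the size grows like $B \cdot D^{n}$ in general, which is the blow-up mentioned above.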

In order to get a better grip on the bit-complexity of evaluating and then interpolating $f$, we will assume that we actually have a program to evaluate $f$ modulo  for any positive integer modulus  . We denote by  the cost to evaluate $f$ for a modulus with  , and we assume that the average cost per bit  is a non-decreasing function that grows not too fast as a function of  . Since the blackbox function should at least read its $n$ input values, we also assume that  .

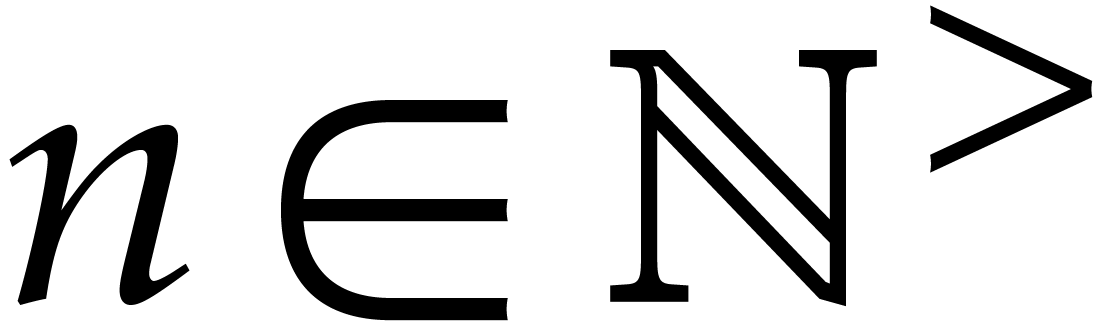

As in [15], for complexity estimates we use a random access memory (RAM) machine over a finite alphabet along with the soft-Oh notation:  means that  . The machine is expected to have an instruction for generating a random bit in constant time (see section 2 for the precise computational model and hypotheses that we use). Assuming that we are given a bound  for the number of terms of $f$ and a bound  for the bit-size of the terms of $f$, the main result of this paper is the following:

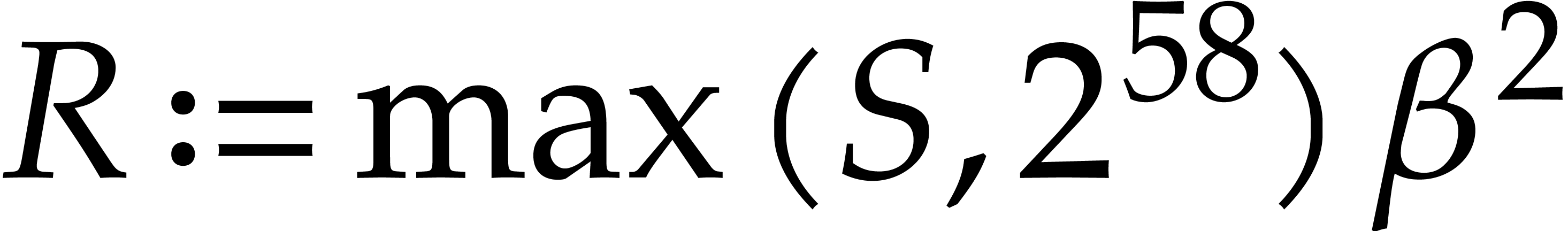

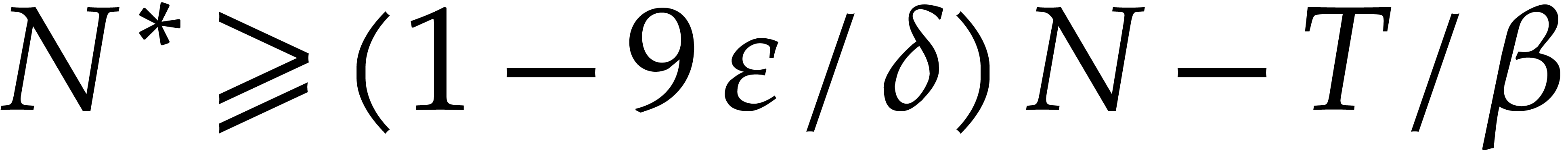

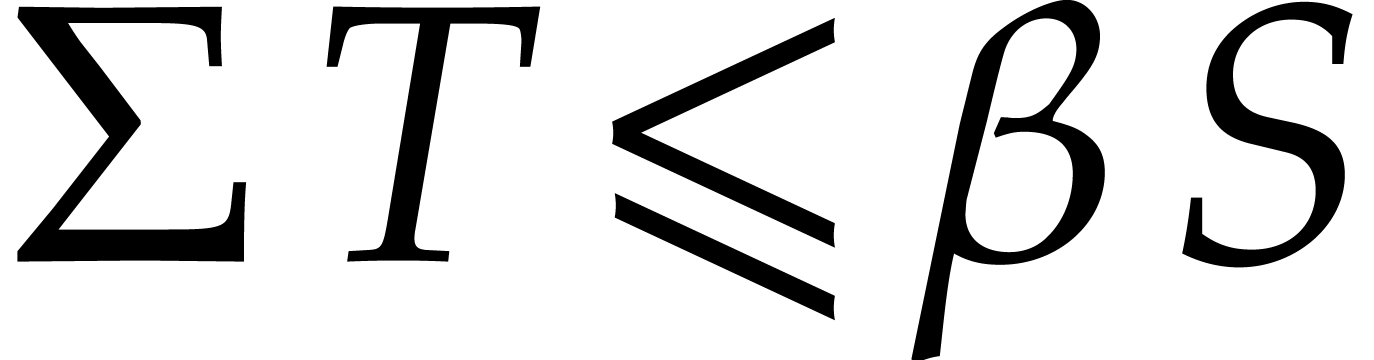

of  terms of bit-size  as input and that interpolates $f$ in time  with a probability of success at least  .

If the $n$ variables all appear in the sparse representation of $f$, then  and  , so the bound further simplifies into  . In addition, detecting useless variables can be done fast, e.g. using the heuristic method from [27, section 7.4].


The problem of sparse interpolation has a long history and goes back to work by Prony in the 18th century [41]. The first modern fast algorithm is due to Ben-Or and Tiwari [6]. Their work spawned a new area of research in computer algebra together with early implementations [11, 13, 20, 31, 34, 35, 38, 45]. We refer to [42] and [40, section 3] for nice surveys.

Modern research on sparse interpolation has developed in two directions. The first line of research is theoretical and has focused on rigorous and general complexity bounds [2, 3, 4, 14, 17, 32]. The second direction concerns implementations, practical efficiency, and applications of sparse interpolation [5, 19, 21, 23, 26, 27, 30, 33, 36, 37].

The present paper mainly falls in the first line of research, although we will briefly discuss practical aspects in section 5. The proof of our main theorem relies on some number theoretical facts about prime numbers that will be recalled in section 2.3. There is actually a big discrepancy between empirical observations about prime numbers and hard theorems that one is able to prove. Because of this, our algorithms involve constant factors that are far more pessimistic than the ones that can be used in practice. Our algorithms also involve a few technical complications in order to cover all possible cases, including very large exponents that are unlikely to occur in practice.

Our paper borrows many techniques from [17, 40] that deal with the particular case when $f$ is a univariate polynomial. In principle, the multivariate case can be reduced to this case: setting  for a sufficiently large  , the interpolation of $f$ reduces to the interpolation of  . However, this reduction is optimal only if the entries of the exponent vectors $e_1, \ldots, e_t$ are all approximately of the same bit-size. One interesting case that is not well covered by this reduction is when the number of variables $n$ is large and when the exponent vectors $e_1, \ldots, e_t$ are themselves sparse, in the sense that only a few of their entries are non-zero.

The main contribution of the present paper is the introduction of a quasi-optimal technique to address this problem.

Another case that is not well covered by [17, 40] is when the bit-sizes of the coefficients or exponents vary wildly. Recent progress on this issue has been made in [18] and it is plausible that these improvements can be extended to our multivariate setting (see also Remark 4.11). Theorem 4.9 also provides a quasi-optimal solution for an approximation of the sparse interpolation problem: for a fixed constant  , we only require the determination of at least  correct terms of (1.1).

In order to cover sparse exponent vectors in a more efficient way, we will introduce a new technique in section 3. The idea is to compress such exponent vectors using random projections. With high probability, it will be possible to reconstruct the actual exponent vectors from their projections. We regard this section as the central technical contribution of our paper. Let us further mention that random projections of the exponents were previously used in [4] in order to reduce the multivariate case to the univariate one: monomial collisions were avoided in this way, but the reconstruction of the exponents needed linear algebra and could not really handle sparse or unbalanced exponents. The proof of Theorem 1.1 will be completed at the end of section 4. Section 5 will address the practical aspects of our new method. An important inspiration behind the techniques from section 3 and their practical variants is the mystery ball game from [23]; this connection will also be discussed in section 5.

Our paper focuses on the case when $f$ has integer coefficients, but our algorithm can easily be adapted to rational coefficients as well, essentially by appealing to rational reconstruction [15, Chapter 5, section 5.10] during the proof of Lemma 4.8. However, when $f$ has rational coefficients, its blackbox might include divisions and therefore occasionally raise “division by zero” errors. This makes the probability analysis and the worst case complexity bounds more difficult to establish, so we preferred to postpone this study to another paper.

Our algorithm should also be applicable to various other coefficient rings of characteristic zero. However, it remains an open challenge to develop similar algorithms for coefficient rings of small positive characteristic.

For any  , we also define

We may use both  and  as sets of canonical representatives modulo  . Given  and depending on the context, we write  for the unique  or  with  .

This section presents sparse data structures, computational models, and quantitative results about prime distributions. At the end, an elementary fast algorithm is presented for testing the divisibility of several integers by several prime numbers.

We order  lexicographically by  . Given formal indeterminates $x_1, \ldots, x_n$ and an exponent $e = (e_1, \ldots, e_n)$, we define $x^e := x_1^{e_1} \cdots x_n^{e_n}$. We define the bit-size of an integer  as  . In particular,  ,  ,  , etc. We define the bit-size of an exponent tuple  by  .

We extend these definitions to the cases when  and  by setting  and  , where  .

Now consider a multivariate polynomial $f$. Then $f$ can uniquely be written as a sum

$$f = c_1 x^{e_1} + \cdots + c_t x^{e_t},$$

where  and  are such that  . We call this the sparse representation of $f$. We call $e_1, \ldots, e_t$ the exponents of $f$ and $c_1, \ldots, c_t$ the corresponding coefficients. We also say that $c_1 x^{e_1}, \ldots, c_t x^{e_t}$ are the terms of $f$ and we call $\{e_1, \ldots, e_t\}$ the support of $f$. Any non-zero  with  and  is called an individual exponent of $f$. We define  to be the bit-size of $f$.

Remark  then becomes

then becomes  . For

. For  ,

we also define

,

we also define  . Exponents

. Exponents

can be stored by appending the representations

of

can be stored by appending the representations

of  , but this is suboptimal

in the case when only a few entries of

, but this is suboptimal

in the case when only a few entries of  are

non-zero. For such “sparse exponents”, one prefers to store

the pairs

are

non-zero. For such “sparse exponents”, one prefers to store

the pairs  for which

for which  , again using suitable markers. For this reason, the

Turing bit-size of

, again using suitable markers. For this reason, the

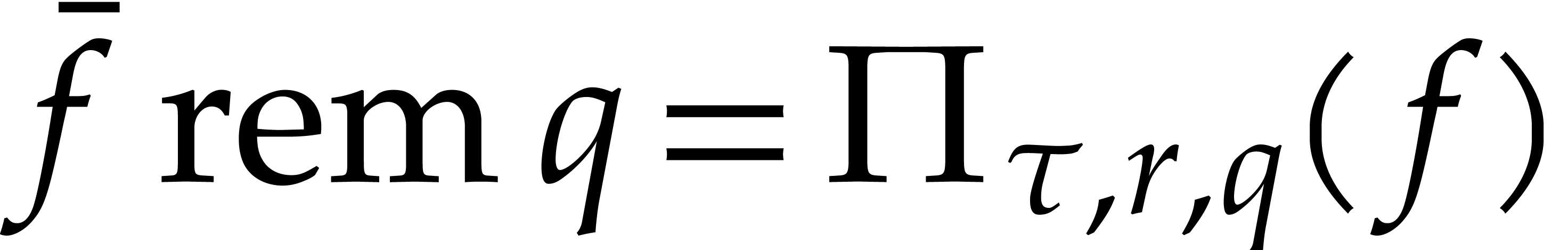

Turing bit-size of  becomes

becomes  .

.
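The following minimal sketch contrasts the two storage schemes for an exponent vector. It uses Python's `int.bit_length` as a stand-in for the bit-size (the exact definition is lost in this copy) and ignores the markers entirely; the helper names are hypothetical.

```python
def to_sparse(e):
    """Keep only the pairs (i, e_i) with e_i != 0, using 1-based indices."""
    return [(i, ei) for i, ei in enumerate(e, start=1) if ei != 0]


def to_dense(pairs, n):
    """Rebuild the full exponent vector of length n from its non-zero pairs."""
    e = [0] * n
    for i, ei in pairs:
        e[i - 1] = ei
    return tuple(e)


def dense_bits(e):
    # One field per entry; a zero entry still costs at least one bit.
    return sum(max(abs(ei).bit_length(), 1) for ei in e)


def sparse_bits(e):
    # An index field and a value field for every non-zero entry.
    return sum(i.bit_length() + abs(ei).bit_length() for i, ei in to_sparse(e))


n = 1000
e = [0] * n
e[2], e[699] = 5, 2  # e_3 = 5 and e_700 = 2
e = tuple(e)
assert to_dense(to_sparse(e), n) == e
print(dense_bits(e), sparse_bits(e))  # 1003 17
```

With two non-zero entries out of 1000, the dense layout pays for every zero, whereas the sparse layout only pays for the two index/value pairs.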

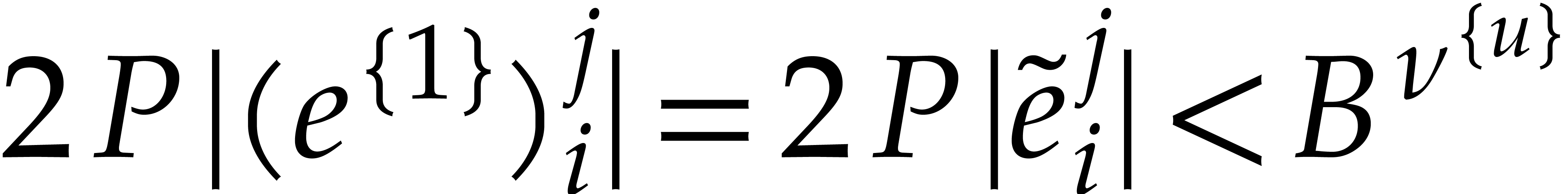

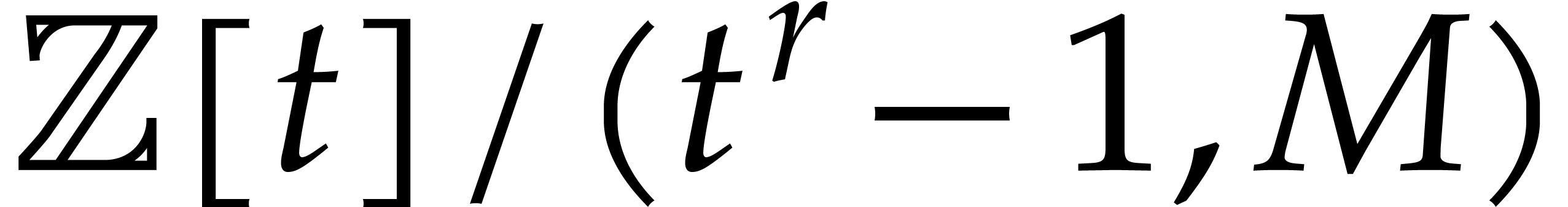

Throughout this paper, we will analyze bit complexities in the RAM model as in [15]. In this model, it is known [22] that two $\ell$-bit integers can be multiplied in time $O(\ell \log \ell)$. As a consequence, given an $\ell$-bit modulus $m$, the ring operations in $\mathbb{Z}/m\mathbb{Z}$ can be done with the same complexity [9, 15]. Inverses can be computed in time $O(\ell \log^2 \ell)$, whenever they exist [9, 15]. For randomized algorithms, we assume that we have an instruction for generating a random bit in constant time.

Consider a polynomial $f \in \mathbb{Z}[x_1, \ldots, x_n]$. A modular blackbox representation for $f$ is a program that takes a modulus $m$ and $n$ integers $a_1, \ldots, a_n$ as input, and that returns $f(a_1, \ldots, a_n) \bmod m$. A modular blackbox polynomial is a polynomial $f$ that is represented in this way. The cost (or, better, a cost function) of such a polynomial is a function  such that  yields an upper bound for the running time if $m$ has bit-size  .

It will be convenient to always assume that the average cost  per bit of the modulus is non-decreasing and that  for any  . Since the blackbox should at least read its $n$ input values, we also assume that  .

Remark  that only uses ring operations, the above average cost function usually becomes  , for some fixed constant  that does not depend on  .

If the SLP also allows for divisions, then we rather obtain  , but this is out of the scope of this paper, due to the “division by zero” issue. In fact, computation trees [10] are more suitable than SLPs in this context. For instance, the computation of determinants using Gaussian elimination naturally fits in this setting, since the chosen computation path may then depend on the modulus  .

However, although these bounds “usually” hold (i.e. for all common algebraic algorithms that we are aware of, including in the RAM model), they may fail in pathological cases when the SLP randomly accesses data that are stored at very distant locations on the Turing tape. For this reason, the blackbox cost model may be preferred in order to study bit complexities. In this model, a suitable replacement for the length  of an SLP is the average cost function  , which typically involves only a logarithmic overhead in the bit-length  .
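As an illustration of the definition of a modular blackbox, the following sketch (our own construction, not taken from the paper) turns a division-free SLP into a program that maps a modulus $m$ and $n$ integers to $f(a_1, \ldots, a_n) \bmod m$. Each instruction costs one ring operation modulo $m$, so the running time is roughly the SLP length times the cost of one such operation, in line with the remark above. The instruction format and the name `make_modular_blackbox` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical SLP format: instruction (op, i, j) appends r_i op r_j to the
# register list, where r_0, ..., r_{n-1} hold the inputs and op is +, - or *.
Instr = Tuple[str, int, int]


def make_modular_blackbox(n: int, slp: List[Instr]) -> Callable[[int, Sequence[int]], int]:
    """Turn a division-free SLP into a program (m, a) -> f(a) mod m."""

    def blackbox(m: int, a: Sequence[int]) -> int:
        if m <= 0 or len(a) != n:
            raise ValueError("expected a positive modulus and n inputs")
        regs = [x % m for x in a]  # read the n inputs and reduce them mod m
        for op, i, j in slp:  # one ring operation modulo m per instruction
            if op == "+":
                regs.append((regs[i] + regs[j]) % m)
            elif op == "-":
                regs.append((regs[i] - regs[j]) % m)
            elif op == "*":
                regs.append((regs[i] * regs[j]) % m)
            else:
                raise ValueError(f"unsupported operation {op!r}")
        return regs[-1]  # the last register holds the result

    return blackbox


# f = x1^2 * x2 - x2 as the SLP r2 = r0*r0, r3 = r2*r1, r4 = r3 - r1
f = make_modular_blackbox(2, [("*", 0, 0), ("*", 2, 1), ("-", 3, 1)])
print(f(7, [3, 4]), f(10**30, [3, 4]))  # 4 32
```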

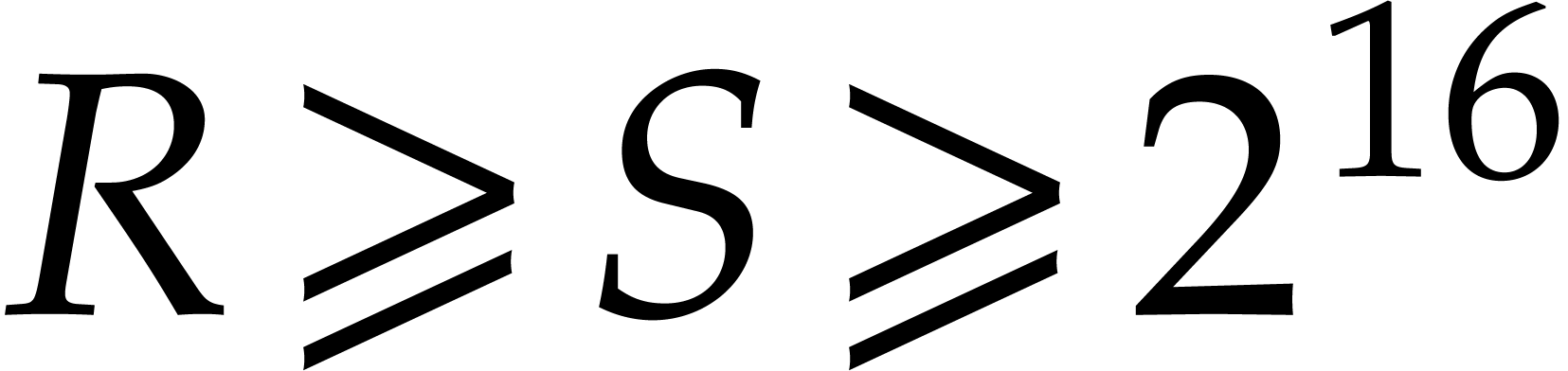

All along this paper  (resp.  ) will bound the bit-size (resp. number of terms) of the polynomial to be interpolated, and  and  will denote random prime numbers that satisfy:  ,  , and  .

The construction of  and  will rely on the following number theoretic theorems, where  stands for the natural logarithm, that is  . We will also use  .

, there exist at least  distinct prime numbers in the open interval  .

Proof. The function  is increasing for  and  . So it is always non-decreasing and continuous. The number of prime divisors of any  is at most  . Let  and  respectively be the number of divisors and prime divisors of  . Then clearly  . Now for all  we know from [39] that

We will need the following slightly modified version of [16, Theorem 2.1], which is itself based on a result from [44].

and  , produces a triple  that has the following properties with probability at least  , and returns fail otherwise:

(a)  is uniformly distributed amongst the primes of  ;

(b) there are at least  primes in  and  is uniformly distributed amongst them;

(c)  is a primitive  -th root of unity in  .

Its worst-case bit complexity is  .

Proof. In [16, Theorem 2.1], statement (b) is replaced by the simpler condition that  . But by looking at step 2 of the algorithm on page 4 of [44], we observe that  is actually uniformly distributed amongst the primes of  and that there are at least  such primes with high probability  .
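The following sketch only illustrates property (c): for a given prime $\lambda$ it produces a prime $q$ with $\lambda \mid q - 1$ and an element of order exactly $\lambda$ modulo $q$. It is a naive deterministic search for the smallest such $q$ (with trial division, suitable only for small sizes), so it does not reproduce the uniformity properties (a) and (b) or the complexity bound of the algorithm from [16, 44]. The names `lam`, `q`, `omega`, `root_of_unity_triple`, and the assumption that $\lambda$ is prime are ours.

```python
import random


def is_prime_small(x: int) -> bool:
    """Trial division; only meant for the small sizes of this illustration."""
    if x < 2:
        return False
    d = 2
    while d * d <= x:
        if x % d == 0:
            return False
        d += 1
    return True


def root_of_unity_triple(lam: int, rng: random.Random, max_k: int = 10**5):
    """Return (lam, q, omega) with q = k*lam + 1 prime and omega of order lam mod q.

    Assumes lam is prime: then omega != 1 and omega**lam == 1 mod q force
    omega to be a primitive lam-th root of unity modulo q.
    """
    for k in range(1, max_k):
        q = k * lam + 1
        if not is_prime_small(q):
            continue
        while True:
            # g**((q-1)/lam) lies in the subgroup of order lam; it equals 1
            # for a fraction 1/lam of the choices of g only.
            omega = pow(rng.randrange(1, q), k, q)
            if omega != 1:
                return lam, q, omega
    raise ValueError("no suitable prime q found; increase max_k")


lam, q, omega = root_of_unity_triple(7, random.Random(1))
print(q)  # 29
assert pow(omega, lam, q) == 1 and omega != 1
```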

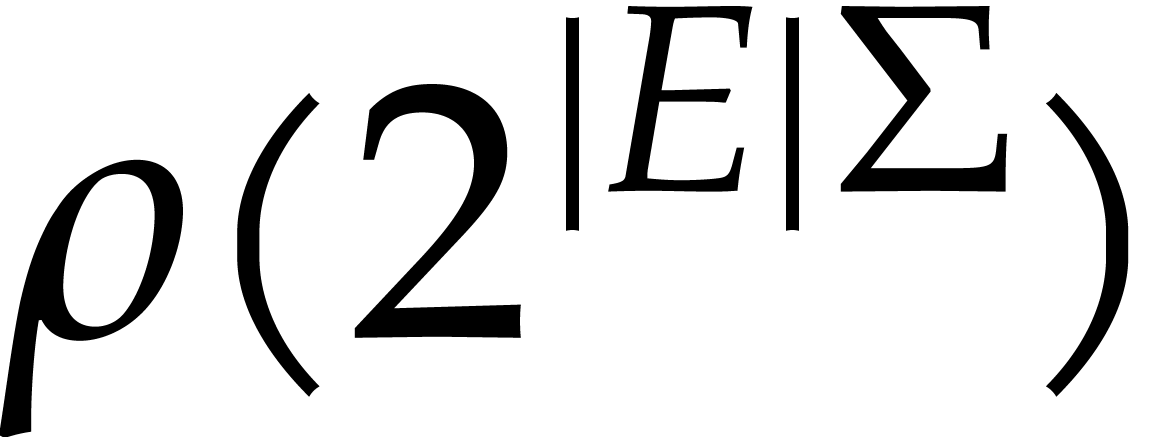

be a real number in  and let  be such that  . There exists a Monte Carlo algorithm that computes distinct random prime numbers  in  in time  with a probability of success of at least  .

Proof. Theorem 2.3 asserts that there are at least  primes in the interval  . The probability to fetch a prime number in  while avoiding at most  fixed numbers is at least

The probability of failure after  trials is at most  . By using the AKS algorithm [1], each primality test takes time  . The probability of success for picking  distinct prime numbers in this way is at least

In order to guarantee this probability of success to be at least  , it suffices to take

The concavity of the  function yields  for  , whence

and consequently,

On the other hand we have  . It therefore suffices to take
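The sampling procedure of this proof can be sketched as follows: draw random integers in the open interval, keep the primes that were not found yet, and give up after a fixed number of trials. As a swapped-in primality test we use Miller-Rabin with the first twelve prime bases, which is deterministic for integers below $2^{64}$, instead of the AKS algorithm used in the proof. The function names and parameters are hypothetical, and the trial budget is left to the caller rather than derived from the bounds above.

```python
import random


def is_probable_prime(x: int) -> bool:
    """Miller-Rabin with the first 12 prime bases (deterministic for x < 2**64)."""
    if x < 2:
        return False
    bases = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)
    for p in bases:
        if x % p == 0:
            return x == p
    d, s = x - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for a in bases:
        y = pow(a, d, x)
        if y in (1, x - 1):
            continue
        for _ in range(s - 1):
            y = y * y % x
            if y == x - 1:
                break
        else:
            return False  # a is a witness of compositeness
    return True


def random_distinct_primes(s, lo, hi, max_trials, rng):
    """Pick s distinct random primes in the open interval (lo, hi).

    Rejection sampling: draw integers, keep new primes, and return None
    after max_trials draws (the Monte Carlo failure case).
    """
    found = set()
    for _ in range(max_trials):
        x = rng.randrange(lo + 1, hi)  # uniform in lo+1, ..., hi-1
        if x not in found and is_probable_prime(x):
            found.add(x)
            if len(found) == s:
                return sorted(found)
    return None


print(random_distinct_primes(5, 100, 200, 10_000, random.Random(0)))
```

By symmetry, a successful run returns a uniformly random set of $s$ primes from the interval; the failure probability after a given number of trials is what the estimates in the proof control.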

Let  be prime numbers and let  be strictly positive integers. The aim of this subsection is to show that the set of pairs  can be computed in quasi-linear time using the following algorithm named divisors.

Algorithm divisors

Input: non-empty subsets  and  .

Output: the set  .

1. If  , then return  .

2. Let  , let  be a subset of  of cardinality  , and let  .

3. Compute  and  .

4. Compute  and  .

5. Return  .

Proof. Let  and  . Step 1 costs  by using fast multi-remaindering [15, Chapter 10]. Using fast sub-product trees [15, Chapter 10], step 3 takes  . By means of fast multi-remaindering again, step 4 takes  .

Since the  are distinct prime numbers, when entering step 5 we have

Let  denote the cost of the recursive calls occurring during the execution of the algorithm. So far we have shown that

Unrolling this inequality and taking into account that the depth of the recursion is  , we deduce that  . Finally, the total cost of the algorithm is obtained by adding the cost of the top level call, that is
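The recursion of algorithm divisors can be sketched as follows, as we read it from the proof above; several formulas of the algorithm are lost in this copy, so the details are our assumptions. The integers are split into two halves, a prime is passed on to a half only if it divides the product of that half, and the base case handles a single integer against the remaining primes. Plain Python products and remainders replace the sub-product trees and fast multi-remaindering, so this sketch does not attain the quasi-linear bound; the function name and index conventions are hypothetical.

```python
from math import prod


def divisors(primes, values):
    """Return all pairs (i, j) such that primes[i] divides values[j].

    Assumes pairwise distinct primes and positive integers in values.
    """

    def rec(P, A):
        # P: indices of candidate primes, A: non-empty list of value indices
        if not P:
            return set()
        if len(A) == 1:
            # Base case: one integer against all remaining primes
            # (a fast version would use multi-remaindering here).
            j = A[0]
            return {(i, j) for i in P if values[j] % primes[i] == 0}
        h = len(A) // 2
        A1, A2 = A[:h], A[h:]
        # Products of both halves (a fast version would use a sub-product tree).
        alpha1 = prod(values[j] for j in A1)
        alpha2 = prod(values[j] for j in A2)
        # A prime divides some value of a half iff it divides that half's product.
        P1 = [i for i in P if alpha1 % primes[i] == 0]
        P2 = [i for i in P if alpha2 % primes[i] == 0]
        return rec(P1, A1) | rec(P2, A2)

    if not values:
        return set()
    return rec(list(range(len(primes))), list(range(len(values))))


print(sorted(divisors([2, 3, 5, 7], [6, 35, 11, 10])))
# [(0, 0), (0, 3), (1, 0), (2, 1), (2, 3), (3, 1)]
```

The pruning is what keeps the work small: since the primes are distinct, the primes sent to a half have a product bounded by that half's product, which matches the size argument used when entering step 5.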

Consider a sparse polynomial $f$ that we wish to interpolate. In the next section, we will describe a method that allows us to efficiently compute most of the exponents  in an encoded form  . The simplest codes  are of the form

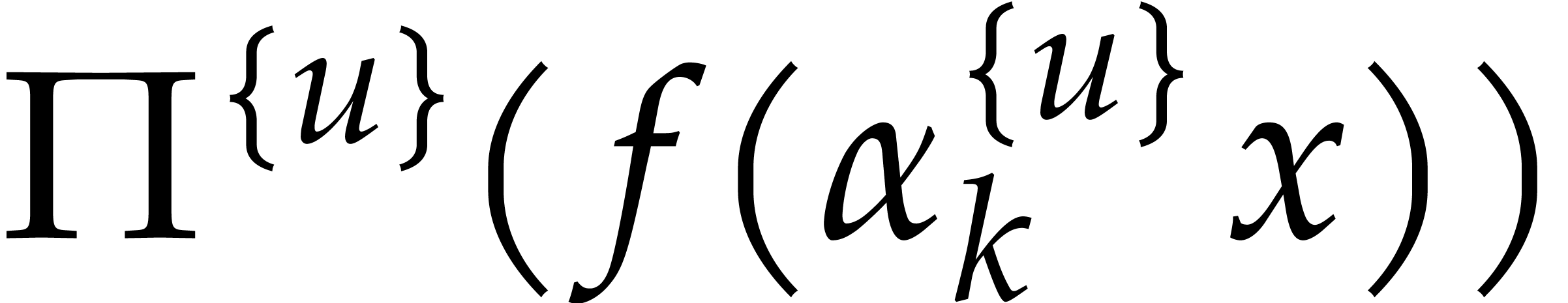

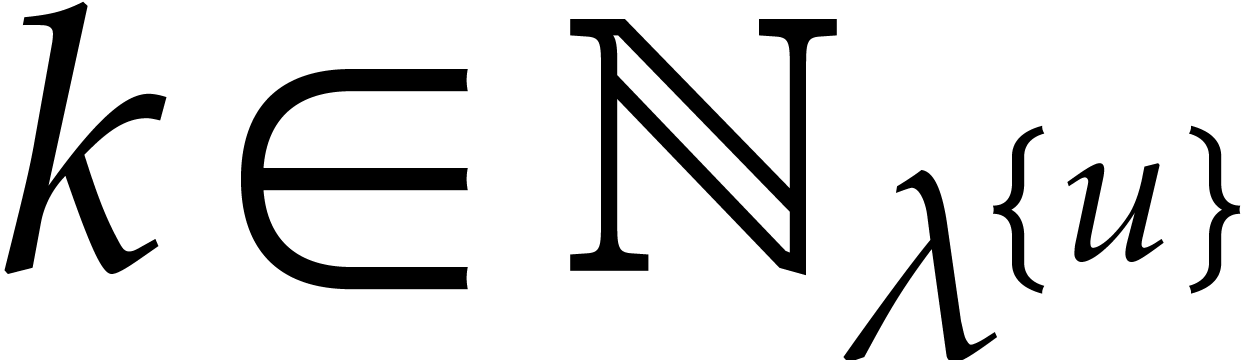

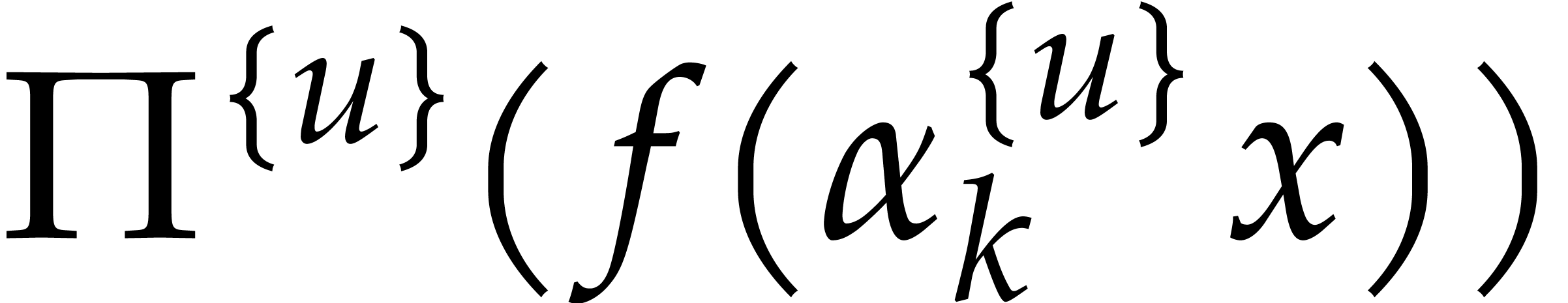

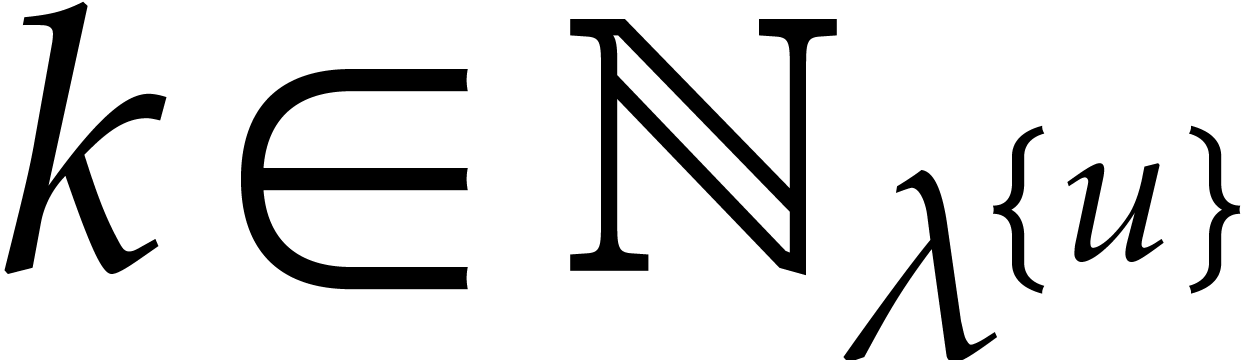

(3.1)

When  , the most common such encoding is the Kronecker encoding, with  for all  . However, this encoding may incur a large bit-size  with respect to the bit-size of  .
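For comparison, here is a minimal sketch of the Kronecker encoding, assuming the usual choice $\lambda_i = D^{i-1}$ in (3.1) for exponents with entries in $\{0, \ldots, D-1\}$; the symbols of (3.1) are lost in this copy, so this notation and the function names are ours. It shows the bit-size problem on a sparse exponent: a single non-zero entry in the last of 100 variables yields a code of 991 bits.

```python
def kronecker_encode(e, D):
    """Code sum_i e_i * D**(i-1) of an exponent e with entries in [0, D)."""
    if any(not 0 <= ei < D for ei in e):
        raise ValueError("entries must lie in [0, D)")
    return sum(ei * D**i for i, ei in enumerate(e))


def kronecker_decode(code, n, D):
    """Recover the n entries as the base-D digits of the code."""
    e = []
    for _ in range(n):
        code, digit = divmod(code, D)
        e.append(digit)
    return tuple(e)


n, D = 100, 2**10
e = (0,) * (n - 1) + (1,)  # only e_100 = 1 is non-zero
code = kronecker_encode(e, D)
assert kronecker_decode(code, n, D) == e
print(code.bit_length())  # 991
```

The sparse pair representation of the same exponent only needs the index 100 and the value 1, which is the gap that the more compact codes of this section aim to close.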

The aim of this section is to introduce more compact codes  . These codes will be “probabilistic” in the sense that we will only be able to recover  from  with high probability, under suitable assumptions on  . Moreover, the recovery algorithm is only efficient if we wish to simultaneously “bulk recover”  exponents from their codes.

Throughout this section, the number of variables $n$ and the target number of exponents  are assumed to be given. We allow exponents to be vectors of arbitrary integers in $\mathbb{Z}^n$. Actual computations on exponents will be done modulo  for a fixed odd base  and a flexible  -adic precision  .

We also fix a constant  such that

(3.2)

and we note that this assumption implies

(3.3)

and

(3.4)

We finally assume  to be a parameter that will be specified in section 4. The exponent encoding will depend on one more parameter

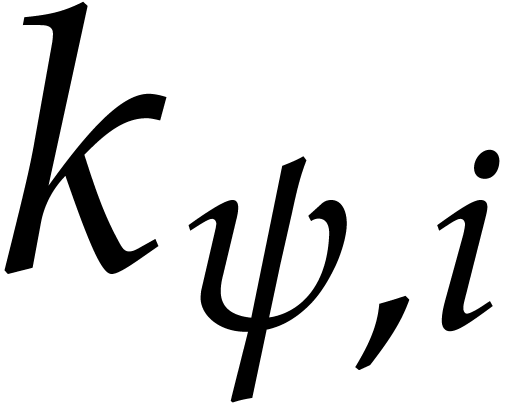

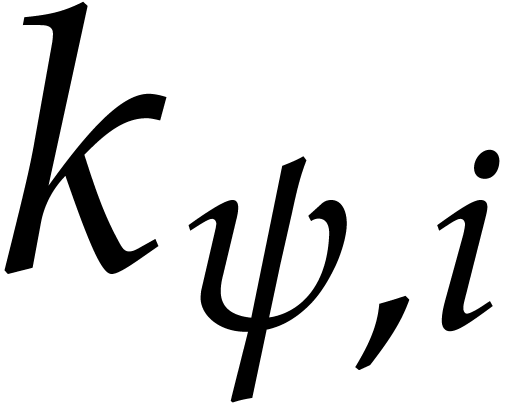

that will be fixed in section 3.2 and flexible in section 3.3. We define

Our encoding also depends on the following random parameters:

For each  , let  be random elements in  and  .

Let  be pairwise distinct random prime numbers in the interval  ; such primes do exist thanks to Lemma 2.6 and (3.3).

Now consider an exponent  . We encode  as

We will sometimes write  and  instead of  and  in order to make the dependence on  ,  and  explicit.

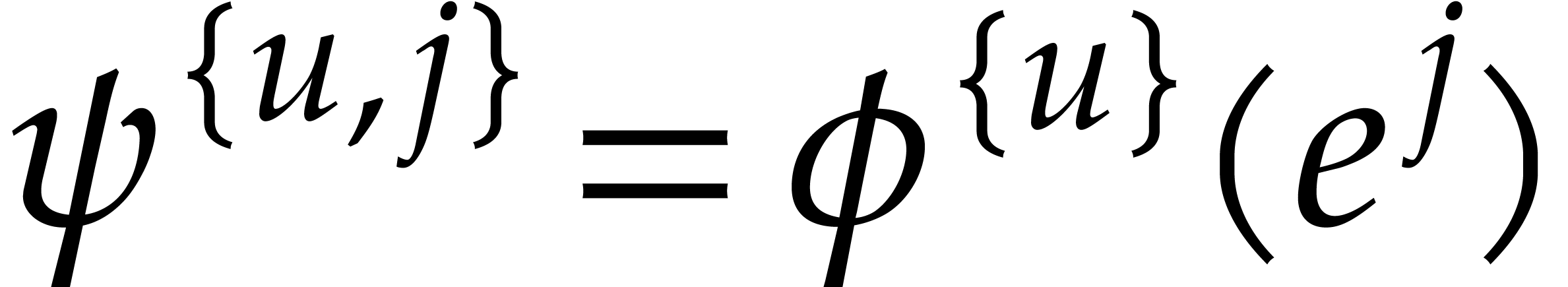

Given an exponent  of  , our first aim is to determine the individual exponents  of small size. More precisely, assuming that

we wish to determine all  with  .

We say that  is transparent if for each  with  , there exists a  such that  . This property only depends on the random choices of the  .

. Then, for random choices of  , the probability that  is transparent is at least  .

Proof. Let  and  . Given  and  , the probability that  is

The probability that  for some  is therefore at least

We conclude that the probability that this holds for all  is at least  .

We say that  is faithful if for every  and  such that  and  , we have

Proof. Let  be the set of all primes strictly between  and  . Let  be the set of all  such that  are pairwise distinct. Let  be the subset of  of all choices of  for which  is not faithful.

Let  ,  , and  be such that  ,  and  . Let  . For each  , let  and  , so that  . For each  , using  , we observe that  necessarily holds with  .

Now consider the set  of  such that  is divisible by  . Any such  is a divisor of either  ,  , or  . Since  is odd we have

and therefore

It follows that  .

.

Consequently,

|

|

|

|

|

|

||

|

|

||

|

|

(by (3.2)) | |

|

|

Now let

so that  . By what precedes, we have

whence

From  we deduce that

From Theorem 2.3 we know that  . This yields  , as well as  , thanks to (3.3). We conclude that

Given  and  , let  be the smallest index such that  and  . If no such  exists, then we let  . We define  .

Assume that  is transparent and faithful for some  . Given  , let  if  and  otherwise. Then the condition  implies that  always holds. Moreover, if  , then the definitions of “transparent” and “faithful” imply that  . In other words, these  can efficiently be recovered from  and  .

Proof. Note that the hypotheses  and  imply that  . Using Lemma 2.7, we can compute all triples  with  in time

thanks to (3.2). Using  further operations, we can filter out the triples  for which  . We next sort the resulting triples for the lexicographical ordering, which can again be done in time  . For each pair  , we finally only retain the first triple of the form  . This can once more be done in time  . Then the remaining triples are precisely those  with  .
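The final step of this proof, which keeps only the first triple of each pair after a lexicographic sort, can be sketched as below. We assume that the pair consists of the first two entries of each triple, so that the retained triple is the one with the smallest third entry; the function name is hypothetical, and Python's comparison sort stands in for the sorting routine of the proof.

```python
from itertools import groupby
from operator import itemgetter


def first_per_pair(triples):
    """Sort the triples lexicographically and keep, for each pair (a, b),
    only the first triple (a, b, c), i.e. the one with the smallest c."""
    ordered = sorted(triples)
    return [next(group) for _, group in groupby(ordered, key=itemgetter(0, 1))]


print(first_per_pair([(2, 1, 5), (1, 3, 4), (2, 1, 3), (1, 3, 9), (0, 0, 7)]))
# [(0, 0, 7), (1, 3, 4), (2, 1, 3)]
```

After sorting, all triples sharing a pair are consecutive, so a single linear scan suffices, which is why this step costs no more than the sort itself.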

The following combination of the preceding lemmas will be used in the sequel:

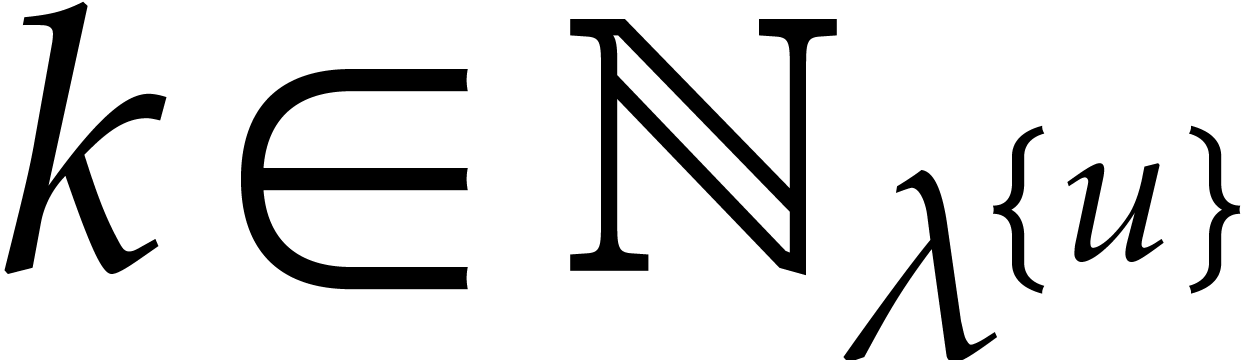

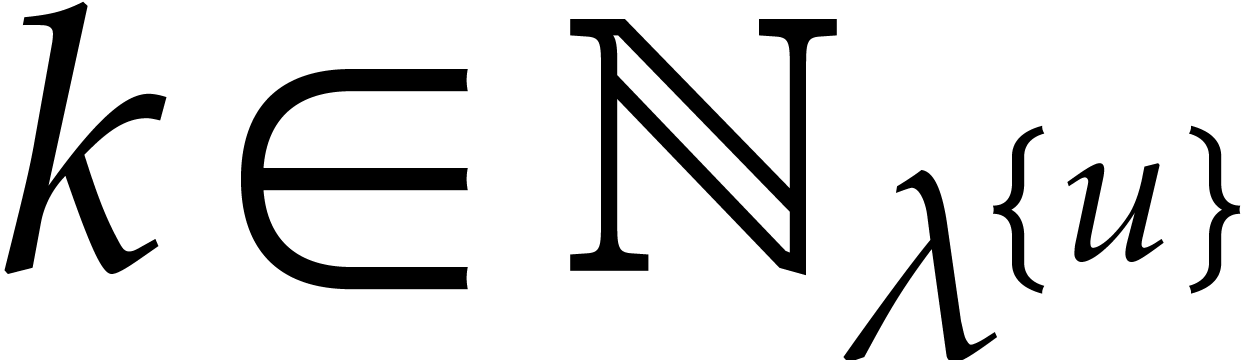

and let  be the corresponding values as above. Let  be the set of indices  such that  and  . Then there exists an algorithm that takes  as input and that computes  such that  for all  and  for all  with  . The algorithm runs in time  and succeeds with probability at least

Proof. Lemmas 3.1 and 3.2 bound the probability of failure for the transparency and the faithfulness of the random choices for each of the  terms. The complexity bound follows from Lemma 3.3.

Our next aim is to determine all exponents  with  , for some fixed threshold  . For this, we will apply Lemma 3.4 several times, for different choices of  . Let

For  , let

We also choose  and  independently at random as explained in section 3.1, with  ,  , and  in the roles of  ,  , and  . We finally define

for  .

Note that the above definitions imply

(3.5)

and  . The inequality  implies

(3.6)

If  , then  , so, in general,

(3.7)

By (3.5) we have  , whence

(3.8)

Proof. From  , we get

(3.9)

Now

which concludes the proof.

and let  for  be as above. Assume that  . Let  be the set of indices  such that  and

for all  . Then there exists an algorithm that takes  as input and that computes  such that  for all  and  for all  . The algorithm runs in time  and succeeds with probability at least

Proof. We compute successive approximations  of  as follows. We start with  . Assuming that  is known, we compute

for all  , and then apply the algorithm underlying Lemma 3.4 to  and  . Note that for all  , the equality  implies

Our choice of  and  , the inequality (3.5), and the assumption  successively ensure that

so we can actually apply Lemma 3.4.

Let  be the set of indices  such that  and  . Lemma 3.4 provides us with  such that  for all  . In addition,  holds whenever  and  , with probability at least

Now we set

(3.10)

At the end, we return  as our guess for  . For the analysis of this algorithm, we first assume that all applications of Lemma 3.4 succeed. Let us show by induction (and with the above definitions of  and  ) that, for all  ,  , and  , we have:

If  and  , then (i) clearly holds. If  and  , then (3.5) and (3.6) imply  . Since  , we have  . Now we clearly have  and  , so (i) holds.

If  , then let  be such that  , so we have  . The induction hypothesis (iii) and (3.4) yield

whence  . Consequently,

Hence the total bit-size of all  such that  is at least  and at most  by definition of  .

This concludes the proof of (i). Now if  , then  , so Lemma 3.4 and (i) imply that  . In other words, we obtain an approximation  of  such that  for all  , and

holds whenever  .

Let us prove (ii). If  , then  . If  , then (3.10) yields

As to (iii), assume that  . If  , then  is immediate. If  , then the induction hypothesis (ii) yields  , whence

(by (3.8))

We deduce that  holds, hence (3.10) implies (iii). This completes our induction.

At the end of the induction, we have  and, for all  ,

By (iii) and (3.4), this implies the correctness of the overall algorithm.

As to the complexity bound, Lemma 3.5 shows that  when applying Lemma 3.4. Consequently, the cost of all these applications for  is bounded by

since  . The cost of the evaluations of  and of all further additions, subtractions, and modular reductions is no higher.

The bound for the probability of success follows by induction from Lemmas 3.4 and 3.5, using the fact that all  and  are chosen independently.

Throughout this section, we assume that  is a modular blackbox polynomial in  with at most  terms and of bit-size at most  . In order to simplify the cost analyses, it will be convenient to assume that

Our main goal is to interpolate  . From now on,  will stand for the actual number of non-zero terms in the sparse representation of  .

Our strategy is based on the computation of increasingly good approximations of the interpolation of  , as in [3], for instance. The idea is to determine an approximation  of  such that  contains roughly half as many terms as  , and then to recursively run the same algorithm for  instead of  . Our first approximation will only contain terms of small bit-size. During later iterations, we will include terms of larger and larger bit-sizes.

Throughout this section, we set

(4.1)

so that at most  of the terms of  have size  . Our main technical aim will be to determine, with high probability, at least  terms of  of size  .

Our interpolation algorithm is based on an extension of the univariate approach from [17]. A first key ingredient is to homomorphically project the polynomial  to an element of  for a suitable cycle length  and a suitable modulus  (of the form  , where  is as in the previous section and  ).

More precisely, we fix

(4.2)

and compute  as in Theorem 2.5. Now let  be random elements of  , and consider the map

We call  a cyclic projection.

Proof. Given  , let  and  . Note that  and  for any  . The first inequality yields  , whereas the second one implies  .

Given a modulus  , we also define

and call  a cyclic modular projection.
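The two projections just defined can be tried out on an explicitly known sparse polynomial. The sketch below is a minimal illustration that rests on an assumption: π substitutes x^(β_i) for x_i and reduces the result modulo x^r − 1, which is the form suggested by the evaluation scheme in the proof of Lemma 4.2. A polynomial is stored as a dict mapping exponent tuples to integer coefficients. The function name `cyclic_projection` is hypothetical.

```python
def cyclic_projection(f, beta, r, q=None):
    """Coefficients of f(x^beta_1, ..., x^beta_n) rem (x^r - 1), optionally reduced mod q.

    f is a dict {exponent tuple: integer coefficient}; the result is a list of
    length r whose entry d is the coefficient of x^d.
    Assumption: this is the form of the cyclic projection used in the text.
    """
    out = [0] * r
    for e, c in f.items():
        # exponent of the image term: <beta, e> reduced modulo the cycle length r
        d = sum(b * ei for b, ei in zip(beta, e)) % r
        out[d] += c
    if q is not None:
        out = [c % q for c in out]  # cyclic modular projection
    return out


f = {(2, 0): 3, (0, 1): 5, (1, 1): -2}
print(cyclic_projection(f, (1, 3), 4))       # [-2, 0, 3, 5]
print(cyclic_projection(f, (1, 3), 4, q=7))  # [5, 0, 3, 5]
```

In this example, the terms x_1^2 and x_2 land on x^2 and x^3, while x_1 x_2 wraps around to x^0 because 1 + 3 ≡ 0 (mod 4).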

If  , then we say that a term  of  and the corresponding exponent  are  -faithful if there is no other term  of  such that  . If  , then we define  -faithfulness in the same way, while requiring in addition that  be invertible modulo  . For any  , we note that  is  -faithful if and only if  is  -faithful.

We say that  is  -faithful if all its terms are  -faithful. In a similar way, we say that  is  -faithful if  is invertible for any non-zero term  of  .
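The faithfulness conditions above translate directly into a test on an explicitly known sparse polynomial. The following sketch makes the same representation assumption as before: a dict from exponent tuples to coefficients, with x_i mapped to x^(β_i) modulo x^r − 1. A term is kept when no other term shares its projected exponent and, if a modulus q is given, when its coefficient is a unit modulo q. The name `faithful_exponents` is hypothetical.

```python
from collections import Counter
from math import gcd


def faithful_exponents(f, beta, r, q=None):
    """Exponents e of f whose terms are faithful for the cyclic projection.

    A term is faithful if no other term has the same projected exponent
    <beta, e> mod r; when a modulus q is given, its coefficient must in
    addition be invertible modulo q.
    """
    image = {e: sum(b * ei for b, ei in zip(beta, e)) % r for e in f}
    multiplicity = Counter(image.values())
    faithful = set()
    for e, c in f.items():
        if multiplicity[image[e]] > 1:
            continue  # collides with another term after projection
        if q is not None and gcd(c, q) != 1:
            continue  # coefficient is not a unit modulo q
        faithful.add(e)
    return faithful


f = {(2, 0): 3, (0, 1): 5, (1, 1): -2}
print(sorted(faithful_exponents(f, (1, 3), 2)))       # [(0, 1)]
print(sorted(faithful_exponents(f, (1, 3), 4, q=5)))  # [(1, 1), (2, 0)]
```

With r = 2, the terms x_1^2 and x_1 x_2 collide on x^0. With r = 4 and q = 5, there are no collisions, but the coefficient 5 is not invertible modulo 5.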

The first step of our interpolation algorithm is similar to the one from [17] and consists of determining the exponents of  . Let  be a prime number. If  is  -faithful, then the exponents of  are precisely those of  .

Proof. We first precompute  in time  . We compute  by evaluating  for  . This takes  bit-operations. We next retrieve  from these values using an inverse discrete Fourier transform (DFT) of order  . This takes  further operations in  , using Bluestein's method [7].
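The proof above evaluates the blackbox at r points and then applies an inverse DFT of order r, with Bluestein's method handling orders that are not powers of two. The following sketch uses hypothetical function names. It shows Bluestein's reduction of a DFT over Z/p to a single correlation via the identity jk = C(j+k, 2) − C(j, 2) − C(k, 2), so no square root of ω is needed. The correlation is computed naively here, whereas an actual implementation would use fast polynomial multiplication. The dict `f` stands in for the blackbox, the projection is assumed to map x_i to x^(β_i) modulo x^r − 1, and p = 97, r = 12 are illustrative values, not the parameters of Theorem 2.5.

```python
def dft(a, w, p):
    """Naive DFT over Z/p: A_k = sum_j a_j w^(j k), used here only as a reference."""
    n = len(a)
    return [sum(a[j] * pow(w, j * k, p) for j in range(n)) % p for k in range(n)]


def bluestein_dft(a, w, p):
    """DFT of order n = len(a) over Z/p, reduced to a single correlation.

    Uses j k = C(j + k, 2) - C(j, 2) - C(k, 2), so no square root of w is needed.
    """
    n = len(a)
    w_inv = pow(w, -1, p)

    def c2(m):
        return m * (m - 1) // 2

    u = [a[j] * pow(w_inv, c2(j), p) % p for j in range(n)]  # chirp-weighted input
    v = [pow(w, c2(m), p) for m in range(2 * n - 1)]          # chirp w^C(m, 2)
    # s_k = sum_j u_j v_(j+k): one polynomial product once u is reversed;
    # it is computed naively here for clarity.
    s = [sum(u[j] * v[j + k] for j in range(n)) % p for k in range(n)]
    return [s[k] * pow(w_inv, c2(k), p) % p for k in range(n)]


def inverse_dft(A, w, p):
    """Inverse DFT: a_j = n^(-1) sum_k A_k w^(-j k)."""
    n = len(A)
    n_inv = pow(n, -1, p)
    return [x * n_inv % p for x in bluestein_dft(A, pow(w, -1, p), p)]


# Lemma 4.2 in miniature: the values of a sparse f at the points (w^(k beta_i))_i,
# k = 0..r-1, form the DFT of its cyclic projection; an inverse DFT recovers it.
p, r = 97, 12
w = pow(5, (p - 1) // r, p)  # 5 generates (Z/97)^*, so w has order exactly 12
f = {(2, 0): 3, (0, 1): 5, (1, 1): -2}
beta = (1, 3)
values = [sum(c * pow(w, k * sum(b * x for b, x in zip(beta, e)), p) for e, c in f.items()) % p
          for k in range(r)]
print(inverse_dft(values, w, p))  # [0, 0, 3, 5, 95, 0, 0, 0, 0, 0, 0, 0]
```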

Assuming that  is  -faithful and that we know  , consider the computation of  for higher precisions  . Now  is also  -faithful, so the exponents of  and  coincide. One crucial idea from [17] is to compute  using only  instead of  evaluations of  modulo  . This is the purpose of the next lemma.

is  -faithful and that  is known. Let  and let  , where  is such that  for all  . Then we may compute  in time

Proof. We first Hensel lift the primitive  -th root of unity  in  to a principal  -th root of unity  in  in time  , as detailed in [17, section 2.2]. We next compute  in time  , using binary powering. We continue with the evaluations  for  . This can be done in time

Now the exponents  of  are known, since they coincide with those of  , and we have the relation

where  denotes the coefficient of  in  , for  . It is well known that this linear system can be solved in quasi-linear time  : in fact, this problem reduces to the usual interpolation problem thanks to the transposition principle [8, 35]; see for instance [25, section 5.1].
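Two subroutines from this proof can be sketched concretely. The first is the Hensel lifting of an r-th root of unity from Z/p to Z/p^λ, done here by Newton iteration on x^r − 1, which is valid because r is invertible modulo p. The second is the solution of the transposed Vandermonde system that links the computed values to the unknown coefficients. The solver below is the classical O(t^2) method based on the master polynomial ∏(z − u_j). It is not the quasi-linear algorithm via the transposition principle cited above. Function names and the parameters p = 97, r = 12, λ = 4 are illustrative assumptions.

```python
def hensel_lift_root(w, r, p, lam):
    """Lift w with w^r = 1 mod p (r invertible mod p) to a root of x^r - 1 mod p^lam."""
    target, m = p ** lam, p
    while m < target:
        m = min(m * m, target)  # each Newton step doubles the p-adic precision
        fx = (pow(w, r, m) - 1) % m
        dfx = r * pow(w, r - 1, m) % m
        w = (w - fx * pow(dfx, -1, m)) % m
    return w


def solve_transposed_vandermonde(u, v, m):
    """Solve sum_i c_i u_i^k = v_k (k = 0..t-1) modulo m in O(t^2) operations.

    Assumes all differences u_i - u_j (i != j) are invertible modulo m.
    """
    t = len(u)
    Q = [1]  # master polynomial Q(z) = prod_j (z - u_j), low degree first
    for a in u:
        Q = [(-a * Q[0]) % m] + [(Q[i - 1] - a * Q[i]) % m for i in range(1, len(Q))] + [Q[-1]]
    c = []
    for ui in u:
        # Q_i(z) = Q(z) / (z - u_i) by synthetic division
        q = [0] * t
        q[t - 1] = Q[t]
        for k in range(t - 1, 0, -1):
            q[k - 1] = (Q[k] + ui * q[k]) % m
        # sum_k q_k v_k = c_i Q_i(u_i), since Q_i vanishes at every u_j with j != i
        num = sum(qk * vk for qk, vk in zip(q, v)) % m
        den = sum(qk * pow(ui, k, m) for k, qk in enumerate(q)) % m
        c.append(num * pow(den, -1, m) % m)
    return c


p, r, lam = 97, 12, 4
m = p ** lam
W = hensel_lift_root(pow(5, (p - 1) // r, p), r, p, lam)
exps, coeffs = [2, 3, 7], [123456, 42, 80000000]  # known exponents, unknown coefficients
u = [pow(W, e, m) for e in exps]
v = [sum(c * pow(x, k, m) for x, c in zip(u, coeffs)) % m for k in range(len(u))]
print(solve_transposed_vandermonde(u, v, m) == coeffs)  # True
```

Only t = 3 values are needed to recover the three coefficients modulo p^4, which mirrors the use of  instead of  evaluations in the lemma.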

The next lemma bounds the probability of picking random prime moduli that yield  -faithful projections.

(resp.  ) be the number of terms (resp.  -faithful terms) of  of size  . Let the cycle length  and the modulus  be given as described in Theorem 2.5. If the  are uniformly and independently taken at random  , then, with probability  , the projection  is  -faithful and

Proof. We let  and  as in (4.2), which allows us to apply Theorem 2.5. Let  and  . For any  , let

Given  , consider  and note that  . We say that  is admissible if  and  for all  . This is the case if and only if  is not divisible by  . Now

is divisible by at most  distinct prime numbers, by Theorem 2.4. Since there are at least

prime numbers in  , by Theorem 2.3, the probability that  is divisible by  is at most

From (4.1) we obtain  , whence  . It follows that

since  from (4.1). Now consider two admissible exponents  in  and let  with  . For fixed values of  with  , there is a single choice of  with  . Hence the probability that this happens with random  is  . Consequently, for fixed  , the probability that  for some  is at most

(4.4)

thanks to (4.1).

Assuming now that  is fixed, let us examine the probability that  is  -faithful. Let  be the product of all non-zero coefficients of  . Then  is  -faithful if and only if  does not divide  . Now the bit-size of the product  is bounded by  , by Lemma 4.1. Hence  is divisible by at most

prime numbers, by Theorem 2.4 and our assumption that  . With probability at least

(4.5)

there are at least  prime numbers amongst which  is chosen at random, by Theorem 2.5(b). Assuming this,  is not  -faithful with probability at most

(4.6)

since  , by (4.2).

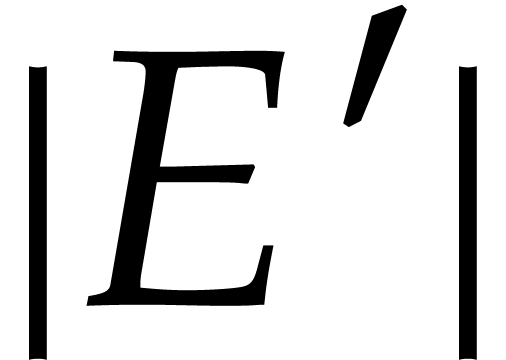

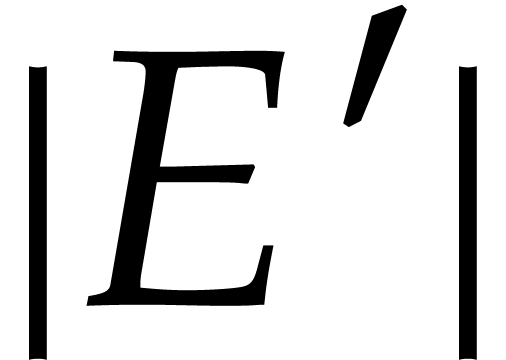

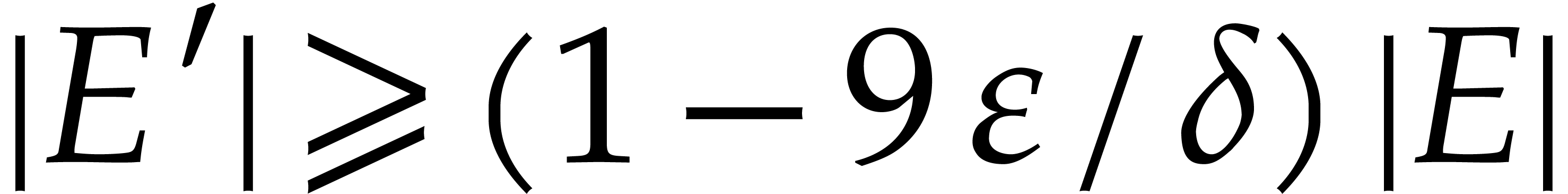

Let  be the set of

be the set of  such

that

such

that  and

and  for all

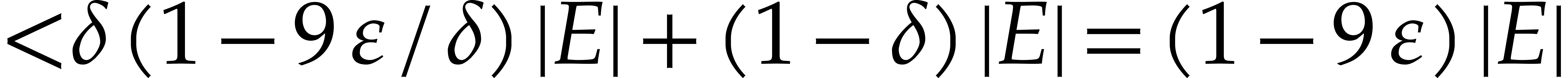

for all  . Altogether, the bounds (4.3),

(4.4), (4.5), and (4.6) imply

that the probability that a given

. Altogether, the bounds (4.3),

(4.4), (4.5), and (4.6) imply

that the probability that a given  belongs to

belongs to

is at least

is at least  .

It follows that the expectation of  is at least  . For  , this further implies that the probability that  cannot exceed  : otherwise the expectation of  would be  .
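The step above is an averaging (reverse Markov) argument. In generic form, and with symbols X, T, ε, θ of our own choosing rather than the paper's lost notation: if X is a random variable with 0 ≤ X ≤ T and E[X] ≥ (1 − ε)T, then for any 0 < θ < 1,

```latex
\begin{aligned}
\mathbb{E}[X] &\le \theta T\,\Pr[X < \theta T] + T\,\bigl(1 - \Pr[X < \theta T]\bigr)
              = T - (1-\theta)\,T\,\Pr[X < \theta T],\\
\Pr[X < \theta T] &\le \frac{T - \mathbb{E}[X]}{(1-\theta)\,T} \le \frac{\varepsilon}{1-\theta}.
\end{aligned}
```

For instance, θ = 1/2 shows that the probability of X falling below half of its maximum is at most 2ε.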

We finally observe that  is  -faithful whenever  for all  such that  . Now for every  with  , there is at most one  with  : if  for  , then  , whence  . By (4.1), there are at most  exponents  with  . We conclude that  whenever  .

Lemma 4.3 allows us to compute the coefficients of  with good complexity. In the univariate case, it turns out that the exponents of  -faithful terms of  can be recovered as quotients of matching terms of  and  by taking  sufficiently large.

In the multivariate case, this idea still works, for a suitable Kronecker-style choice of  . However, this only yields a suboptimal complexity when the exponents of  are themselves sparse and/or of unequal magnitudes. The remedy is to generalize the “quotient trick” from the univariate case by combining it with the algorithms from section 3: the quotients will now yield the exponents in encoded form.
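For comparison, a plain Kronecker substitution packs an exponent vector into a single integer, using mixed radices given by degree bounds. A minimal sketch (the names and degree bounds are our own illustrative assumptions) suggests why this is wasteful for sparse or unbalanced exponents: the packed integer has bit-size of about Σ log2 D_i, regardless of how small most entries of e actually are.

```python
def kronecker_encode(e, bounds):
    """Pack e (with 0 <= e_i < bounds[i]) into sum_i e_i * prod_{j<i} bounds[j]."""
    k, radix = 0, 1
    for ei, bi in zip(e, bounds):
        if not 0 <= ei < bi:
            raise ValueError("exponent exceeds its degree bound")
        k += ei * radix
        radix *= bi
    return k


def kronecker_decode(k, bounds):
    """Inverse of kronecker_encode: peel off the mixed-radix digits."""
    e = []
    for bi in bounds:
        k, ei = divmod(k, bi)
        e.append(ei)
    return tuple(e)


bounds = (4, 10, 3)
print(kronecker_encode((3, 7, 2), bounds))  # 111
print(kronecker_decode(111, bounds))        # (3, 7, 2)
# One large degree bound inflates the encoding of every vector, even tiny ones:
sparse_bounds = (2**64, 2, 2, 2)
print(kronecker_encode((0, 1, 0, 1), sparse_bounds).bit_length())  # 67
```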

Let us now specify the remaining parameters from section 3. First of all, we take  , where

Consequently,

(4.7)

We also take  and  . Since  is odd, the inequalities (3.2) hold. We compute  and  for  as in section 3.3.

For  ,  , and  , let

For any term  with  and  , note that

Whenever  , it follows that  can be read off modulo  from the quotient of  and  .

,  ,  ,  be as in  , and let  be as in Theorem 2.5. Then we can compute the random parameters  ,  (  ,  ) and  in time  and with probability of success  .

Proof. The cost to generate  random elements  in  is

since we assumed  . The generation of  can be done in time

For  , we compute  using Lemma 2.6 with  . The computation of a single  takes time

and succeeds with probability at least  . The probability of success for all  is at least  , because

We conclude with the observation that  .

is  -faithful and that  is known. Let  . Then we can compute  and  for all  and  in time

Proof. For a fixed  , we can compute  and  for all  in time

by Lemma 4.3. From Lemma 3.5 and  , we get

By definition of  and  , we have  . By (4.1) we also have  . Hence the cost for computing  and  simplifies to

(by (4.1))

(since  )

Since  , the total computation time for  is also bounded by  .

Consider a family of numbers  , where  ,  , and  . We say that  is a faithful exponent encoding for  if we have  whenever  is a  -faithful exponent of  with  . We require nothing for the remaining numbers  , which should be considered as garbage.

is  -faithful and that  is known. Then we may compute a faithful exponent encoding for  in time  .

Proof. We compute all  and  using Lemma 4.6. Let  and  be the coefficients of  in  and  . Then we take  if  is divisible by  , and  if not. For a fixed  , all these divisions take  bit-operations, by reasoning similar to that in the proof of Lemma 4.6. Since  , the result follows.
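The divisibility test in this proof can be illustrated with the classical univariate form of the quotient trick. The setting below is a hypothetical simplification, not the exact parameters of Lemma 4.6. The second coefficient is assumed to equal c·(1+q)^m modulo q^2 for some unknown integer m. Since (1+q)^m ≡ 1 + mq (mod q^2) by the binomial theorem, m mod q can be read off from the quotient of the two coefficients, and a failed divisibility test flags a garbage entry. The name `read_exponent` is ours.

```python
def read_exponent(c, c_shift, q):
    """Recover m mod q from c and c_shift = c * (1 + q)^m mod q^2.

    Assumes gcd(c, q) = 1. Returns None when c_shift - c is not divisible
    by q, i.e. when the pair cannot come from a single term (garbage).
    """
    diff = (c_shift - c) % (q * q)
    if diff % q != 0:
        return None
    # c_shift - c = c * m * q (mod q^2), hence (c_shift - c) / q = c * m (mod q)
    return (diff // q) * pow(c, -1, q) % q


q, c, m = 101, 37, 5000
c_shift = c * pow(1 + q, m, q * q) % (q * q)
print(read_exponent(c, c_shift, q), m % q)  # 51 51
print(read_exponent(37, 38, 101))           # None
```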

Let  . We say that  is a  -approximation of  if  and  .

be as in Theorem 2.5, with  as in (4.2). There is a Monte Carlo algorithm that computes a  -approximation of  in time  and which succeeds with probability at least  .

Proof. We first compute the required random parameters as in Lemma 4.5. This takes time  and succeeds with probability at least  . We next compute  using Lemma 4.2, which can be done in time

We next apply Corollary 4.7 and compute a faithful exponent encoding for  , in time  . By Lemma 4.4, this computation fails with probability at most  . Moreover, in case of success, we have  , still with the notation of Lemma 4.4.

From (4.1) and  , we get  . This allows us to apply Theorem 3.6 to the faithful exponent encoding for  . Let  be the exponents of  . Consider  such that there exists a  -faithful term  of  with  and  . Theorem 3.6 produces a guess for  for every such  . With probability at least

these guesses are all correct. The running time of this step is bounded by

since  ,  , and (4.7) imply  .

Below we will show that

(4.8)

Let  be the smallest integer such that  . We finally compute  using Lemma 4.3, which can be done in time

Let  be such that

For every  -faithful term  of  with  and  , we have

so we can recover  from  .

With probability at least  , all the above steps succeed. In that case, we clearly have  . Let  , where  (resp.  ) is the sum of the terms of bit-size  (resp.  ), so that  . Then  and

Consequently,

(4.9)

which means that  is a  -approximation of  .

In order to conclude, it remains to prove the claimed inequality (4.8). Using the definition of  and the inequalities  ,  ,  , we have

(4.10)

From (4.7) we have  and therefore  . So the inequality  yields

(4.11)

Let us analyze the right-hand side of (4.11). Without further mention, we will frequently use that  . First of all, we have

It follows that

(4.12)

Now the function  is decreasing for  . Consequently,

Similarly,  . Hence,

(by (4.7))

This yields

(4.13)

Combining (4.12), (4.13), and (4.7), we deduce that

(4.14)

The inequalities (4.10), (4.11), and (4.14) finally yield the claimed bound:

Instead of just half of the coefficients, it is possible to compute any constant fraction of the terms with the same complexity. More precisely, given  , we say that  is a  -approximation of  if  and  .

be as in Theorem 2.5, with  as in (4.2). Given  , there is a Monte Carlo algorithm that computes a  -approximation of  in time  and which succeeds with probability at least  .

Proof. When  , we have  , so (4.9) becomes  . In addition, we have  whenever  . In other words, if  is sufficiently large, then the proof of Lemma 4.8 actually yields a  -approximation of  .

Once an approximation  of the sparse interpolation of  is known, we wish to obtain better approximations by applying the same algorithm with  in the role of  . This requires an efficient algorithm to evaluate  . In fact, we only need to compute projections of  as in Lemma 4.2 and to evaluate  at geometric sequences as in Lemma 4.3. Building a blackbox for  turns out to be suboptimal for these tasks. Instead, it is better to use the following lemma.

where  and  , let  , let  be as in Theorem 2.5, and let  be in  .

(i) We can compute  in time

(ii) Given  ,  , an element  of order  , and  , we can compute  for  in time

Proof. Given  , the projections  can be computed in time  . The projections  can be obtained using  further operations. Adding up these projections for  takes  operations. Altogether, this yields (i).

As for part (ii), we follow the proof of Lemma 4.3. We first compute  with  by binary powering. The reductions  of exponents are obtained in time  . Then, the powers  and  can be deduced in time  .

Let  . Then we have

By the transposition principle, this matrix-vector product can be computed at the same cost as multi-point evaluation of a univariate polynomial. We conclude that  can be computed in time  .
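Part (ii) can be mirrored by a small sketch, again assuming that the projection maps x_i to x^(β_i) modulo x^r − 1. The steps are: reduce the exponents ⟨β, e⟩ modulo r, obtain the points ω^d by binary powering, and form the transposed Vandermonde product. Here the product is computed naively in O(tN) operations, whereas the lemma relies on the transposition principle. The function names and test parameters (ω = 6 of order 12 modulo 97) are hypothetical.

```python
def binary_pow(a, n, m):
    """Left-to-right binary powering: a^n mod m with O(log n) multiplications."""
    result = 1
    for bit in bin(n)[2:]:
        result = result * result % m
        if bit == "1":
            result = result * a % m
    return result


def eval_projection_geometric(g, beta, r, w, N, m):
    """Values at w^0, ..., w^(N-1) of the cyclic projection of an explicit sparse g, mod m.

    Assumes w^r = 1 mod m, so reducing exponents modulo r changes nothing.
    """
    reduced = {}
    for e, c in g.items():
        d = sum(b * ei for b, ei in zip(beta, e)) % r
        reduced[d] = (reduced.get(d, 0) + c) % m
    points = {d: binary_pow(w, d, m) for d in reduced}
    # transposed Vandermonde product: v_k = sum_d c_d * (w^d)^k
    return [sum(c * binary_pow(points[d], k, m) for d, c in reduced.items()) % m
            for k in range(N)]


g = {(2, 0): 3, (0, 1): 5, (1, 1): -2}
print(eval_projection_geometric(g, (1, 3), 12, 6, 4, 97))  # [6, 51, 75, 79]
```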

We are now ready to complete the proof of our main result.

Proof of Theorem 1.1. We set  and take  ,  as in (4.2). Thanks to Theorem 2.5, we may compute a suitable triple  in time  , with probability of success at least  , where

Let  . Let  and  for  . Starting with  , we compute a sequence  of successive approximations of  as follows. Assuming that  is known for some  , we apply Lemma 4.8 with  and  in the roles of  and  . With high probability, this yields a  -approximation  of  , and we set  .

Taking  into account in Lemma 4.8 requires the following changes in the complexity analysis:

Since  has  terms of size  , part (i) of Lemma 4.10 implies that the complexity bound stated in Lemma 4.2 becomes

Part (ii) of Lemma 4.10 implies that the complexity bound stated in Lemma 4.3 becomes

It follows that the complexity bounds of Lemmas 4.6, 4.8, and Corollary 4.7 remain unchanged. The total running time is therefore bounded by

Using the inequalities  and  , the probability of failure is bounded by

If none of the steps fails, then  for  , by induction. In particular,  , so  .
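The structure of this proof is an outer loop that repeatedly approximates the residual f − g_k and adds the result to g_k. In the toy sketch below, the hypothetical function `half_oracle` stands in for Lemma 4.8. It simply returns about half of the terms of the residual, which is computed from an explicitly known f purely for illustration. It ignores bit-sizes and failure probabilities. When every round at least halves the number of missing terms, ⌈log2(t + 1)⌉ rounds suffice.

```python
def add_sparse(f, g, sign=1):
    """Return f + sign * g for sparse polynomials stored as {exponent: coefficient}."""
    h = dict(f)
    for e, c in g.items():
        h[e] = h.get(e, 0) + sign * c
        if h[e] == 0:
            del h[e]
    return h


def outer_loop(approximate_residual, rounds):
    """Successive approximations g_0 = 0, g_(k+1) = g_k + (approximation of f - g_k)."""
    g = {}
    for _ in range(rounds):
        g = add_sparse(g, approximate_residual(g))
    return g


f = {(i, 2 * i): i + 1 for i in range(10)}  # a toy polynomial with t = 10 terms


def half_oracle(g):
    """Hypothetical stand-in for Lemma 4.8: returns ceil(s/2) of the s residual terms."""
    residual = sorted(add_sparse(f, g, sign=-1).items())
    return dict(residual[: (len(residual) + 1) // 2])


print(outer_loop(half_oracle, 4) == f)  # True: 10 -> 5 -> 2 -> 1 -> 0 missing terms
```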

Remark  , where  is a given bound on the bit-size of  . This was erroneously asserted in a prior version of this paper [28], and such a bound would be more efficient in cases where the sizes of the coefficients and exponents of  vary widely. Here, the first difficulty appears when a first approximation  of  has  terms with small coefficients and  has just a few terms but huge coefficients of bit-size  : the evaluation cost of  in part (ii) of Lemma 4.10 would need to remain in  . Recent advances on this problem can be found in [18] for the univariate case. Note that the bound  is still achieved in the approximate version (Theorem 4.9) of our main theorem.

For practical purposes, the choice of  is not realistic. The high constant  is due to the fact that we rely on [44] for unconditional proofs of the existence of prime numbers with the desirable properties from Theorem 2.5. Such unconditional number-theoretic proofs are typically very hard and lead to pessimistic constants. Numerical evidence shows that a much smaller constant would do in practice: see [16, section 4] and [40, section 2.2.2]. For the univariate case, the complexity of the sparse interpolation algorithm in [40] is made precise in terms of the logarithmic factors.

The exposition so far has also been optimized for simplicity of presentation rather than practical efficiency: some of the other constant factors might be sharpened further and some of the logarithmic factors in the complexity bounds might be removed. In practical implementations, one may simply squeeze all thresholds until the error rate becomes unacceptably high. Here one may exploit the “auto-correcting” properties of the algorithm. For instance, although the  -approximation  from the proof of Theorem 1.1 may contain incorrect terms, most of these terms will be removed at the next iteration.

Our exposition so far has also been optimized for full generality. For applications, a high number of variables, say  , may be useful, but the bit-size of individual exponents rarely exceeds the machine precision. In fact, most multivariate polynomials of practical interest have low or moderately large total degree. Many of the technical difficulties from the previous sections disappear in that case. In this section, we describe some practical variants of our sparse interpolation algorithm, with a main focus on this special case.

In practice, the evaluation of our modular blackbox polynomial is typically an order of magnitude faster if the modulus fits into a double precision number (e.g.  bits if we rely on floating point arithmetic and  bits when using integer arithmetic). In this subsection, we describe some techniques that can be used to minimize the use of multiple precision arithmetic.

are small, but its coefficients are allowed to be large, then it is classical to proceed in two phases. We first determine the exponents using double precision arithmetic only. Knowing these exponents, we next determine the coefficients using modular arithmetic and the Chinese remainder theorem: modulo any small prime  , we may efficiently compute  using only  evaluations of  modulo  on a geometric progression, followed by the method from [25, section 5.1]. Doing this for enough small primes, we may then reconstruct the coefficients of  using Chinese remaindering. Only this Chinese remaindering step involves a limited but unavoidable amount of multiple precision arithmetic. By determining only the coefficients of size  , where  is repeatedly doubled until we reach  , the whole second phase can be accomplished in time  .
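The coefficient phase described above can be illustrated with a minimal Python sketch. It rests on explicit assumptions and is not the authors' implementation: the exponents are already known from the first phase; `example_blackbox(point, p)` is a hypothetical blackbox returning the polynomial's value modulo p; the base point `omega` is assumed to make the values ω^(e_i) pairwise distinct modulo every prime used; and a naive Gauss-Jordan solve of the transposed Vandermonde system (cubic in the number of terms) stands in for the fast method cited from [25, section 5.1]. A known coefficient bound replaces the doubling strategy, and the primes just below 2^20 are an arbitrary choice.

```python
from math import prod

def is_prime(n):
    """Trial division; adequate for the small primes used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def small_primes(bound):
    """Yield primes in decreasing order, starting just below `bound`."""
    n = bound - 1
    while True:
        if is_prime(n):
            yield n
        n -= 1

def solve_mod(A, b, p):
    """Solve A x = b over Z/pZ by Gauss-Jordan elimination (p prime, A invertible)."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if M[r][col] % p)
        M[col], M[piv] = M[piv], M[col]
        inv = pow(M[col][col], -1, p)
        M[col] = [v * inv % p for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] % p:
                f = M[r][col]
                M[r] = [(vr - f * vc) % p for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

def coeffs_mod_p(blackbox, exps, omega, p):
    """Coefficients mod p from t evaluations on the progression omega^k, k < t."""
    t = len(exps)
    w = [prod(pow(o, e, p) for o, e in zip(omega, ei)) % p for ei in exps]
    values = [blackbox([pow(o, k, p) for o in omega], p) for k in range(t)]
    A = [[pow(wi, k, p) for wi in w] for k in range(t)]  # transposed Vandermonde
    return solve_mod(A, values, p)

def crt_symmetric(residues, moduli):
    """Chinese remaindering, result mapped to the symmetric range (-M/2, M/2]."""
    x, M = 0, 1
    for r, m in zip(residues, moduli):
        k = (r - x) * pow(M, -1, m) % m  # lift x mod M to x mod M*m
        x, M = x + M * k, M * m
    return x - M if x > M // 2 else x

def sparse_coefficients(blackbox, exps, omega, bound):
    """Recover integer coefficients with |c| <= bound, exponents known."""
    primes, modulus = [], 1
    for p in small_primes(1 << 20):
        primes.append(p)
        modulus *= p
        if modulus > 2 * bound:
            break
    residues = [coeffs_mod_p(blackbox, exps, omega, p) for p in primes]
    return [crt_symmetric([r[i] for r in residues], primes)
            for i in range(len(exps))]

# Hypothetical blackbox for 12345678901234567890 x^2 y z - 98765432109876543210 x y^3 + 7 z^2
def example_blackbox(point, p):
    x, y, z = point
    return (12345678901234567890 * x * x * y * z
            - 98765432109876543210 * x * y**3 + 7 * z * z) % p

EXPS = [(2, 1, 1), (1, 3, 0), (0, 0, 2)]
print(sparse_coefficients(example_blackbox, EXPS, (2, 3, 5), 10**20))
# expected: [12345678901234567890, -98765432109876543210, 7]
```

Here ω = (2, 3, 5) maps the three terms to 60, 54 and 25, which are distinct modulo any prime above 60, and four primes below 2^20 already give a product exceeding 2·10^20. Only `crt_symmetric` manipulates integers larger than a machine word, mirroring the remark that Chinese remaindering is the sole multiple precision step.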

inside an integer  modulo  of doubled precision  instead of  . In practice, this often leads us to exceed the machine precision. An alternative approach (which is reminiscent of the ones from [19, 32]) is to work with tangent numbers: let us now take

where  is extended to  and

where  . Then, for any term  , we have

so we may again obtain  from  and  using one division. Of course, this requires our ability to evaluate  at elements of  , which is indeed the case if  is given by an SLP. Note that arithmetic in  is about three times as expensive as arithmetic in  .
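To make the tangent number idea concrete, here is a minimal Python sketch. The evaluation point x_i = ω_i(1 + w_i ε) with ε² = 0, the prime P, the weights w_i and all function names are assumptions chosen for illustration, not taken from the text. For an isolated term c·x^e one gets c·ω^e·(1 + (w·e)ε), so the weighted exponent sum w·e is recovered modulo P by dividing the ε-part by the value part. A product of two tangent numbers costs three multiplications in Z/PZ, consistent with the estimate that tangent arithmetic is about three times as expensive. The sketch does not show how individual terms are isolated in a sum.

```python
from dataclasses import dataclass

P = 1_000_003  # hypothetical small prime modulus (10**6 + 3)

@dataclass(frozen=True)
class Tangent:
    """Tangent number a + b*eps over Z/PZ, with eps**2 = 0."""
    a: int
    b: int

    def __add__(self, other):
        return Tangent((self.a + other.a) % P, (self.b + other.b) % P)

    def __mul__(self, other):
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps: three base multiplications
        return Tangent(self.a * other.a % P,
                       (self.a * other.b + self.b * other.a) % P)

def tpow(x, e):
    """Binary powering in Z/PZ[eps]/(eps^2)."""
    result = Tangent(1, 0)
    while e:
        if e & 1:
            result = result * x
        x = x * x
        e >>= 1
    return result

def tangent_point(omegas, weights):
    """Hypothetical evaluation point x_i = omega_i * (1 + w_i eps)."""
    return [Tangent(o % P, o * w % P) for o, w in zip(omegas, weights)]

def eval_term(c, exps, point):
    """Evaluate the single term c * x_1^e_1 * ... * x_n^e_n at a tangent point."""
    value = Tangent(c % P, 0)
    for x, e in zip(point, exps):
        value = value * tpow(x, e)
    return value

def encoded_exponent(v):
    """Return sum_i w_i * e_i mod P using one modular division b / a."""
    return v.b * pow(v.a, -1, P) % P

point = tangent_point((2, 3), (1, 1000))
v = eval_term(7, (5, 12), point)
print(divmod(encoded_exponent(v), 1000))  # (12, 5): e_2 = 12, e_1 = 5
```

With weights (1, B), the recovered value e_1 + B·e_2 decodes uniquely as long as 0 ≤ e_1 < B and the weighted sum stays below P; the value part must also be invertible modulo P, which holds here since 2, 3 and 7 are coprime to P.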

from section 2.4 is asymptotically efficient, it relies heavily on multiple precision arithmetic. If all  and  fit within machine numbers and  is not too large, then we expect it to be more efficient to simply compute all remainders

is not too