Factoring sparse polynomials fast

December 28, 2023

Final version of February 23, 2025

This work has partly been supported by the French ANR-22-CE48-0016 NODE project.

This article has been written using GNU TeXmacs [47].

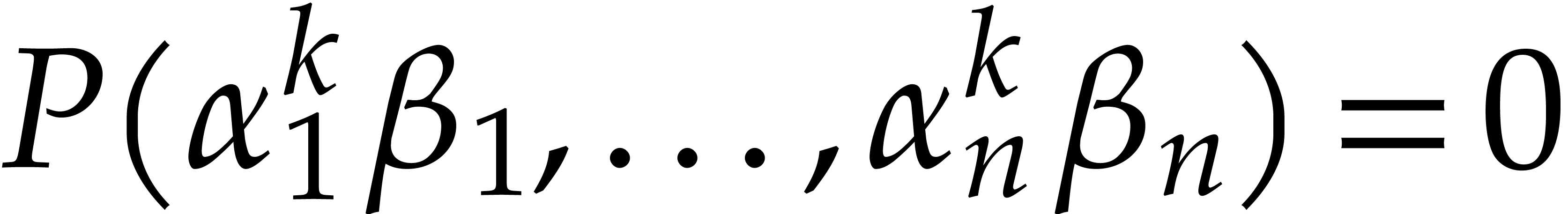

Consider a sparse polynomial in several variables given explicitly as a sum of non-zero terms with coefficients in an effective field. In this paper, we present several algorithms for factoring such polynomials and related tasks (such as gcd computation, square-free factorization, content-free factorization, and root extraction). Our methods are all based on sparse interpolation, but follow two main lines of attack: iteration on the number of variables and more direct reductions to the univariate or bivariate case. We present detailed probabilistic complexity bounds in terms of the complexity of sparse interpolation and evaluation.

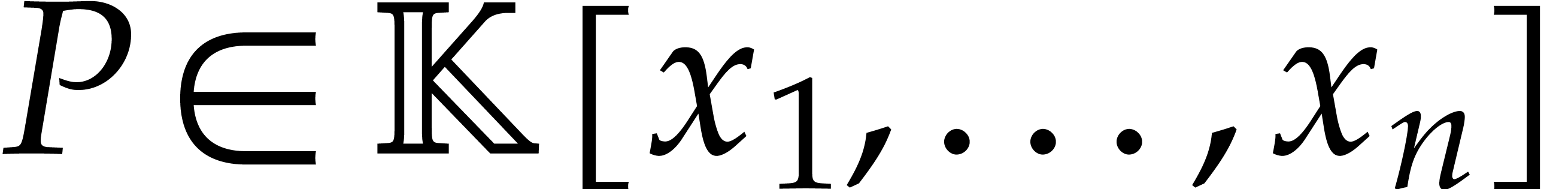

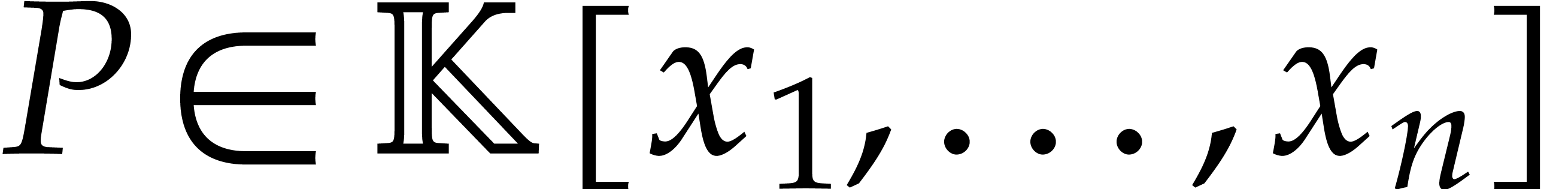

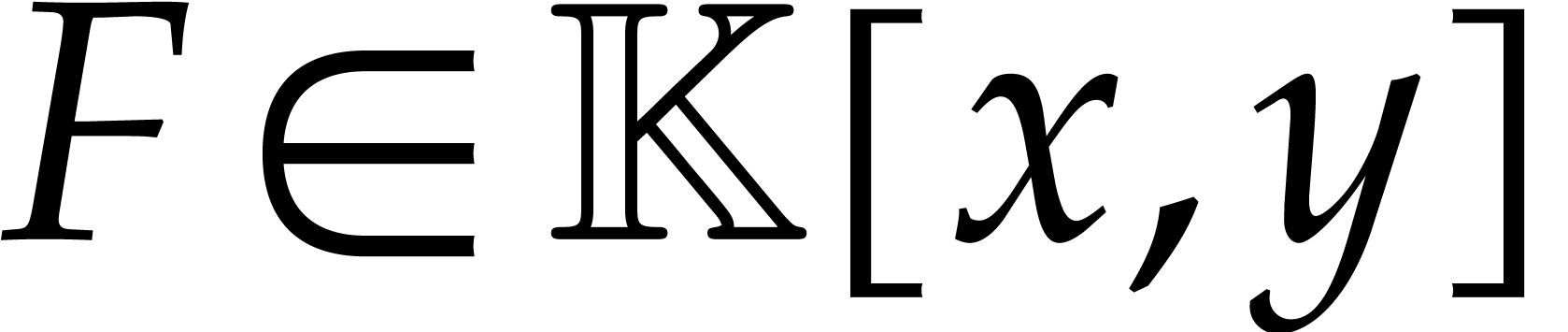

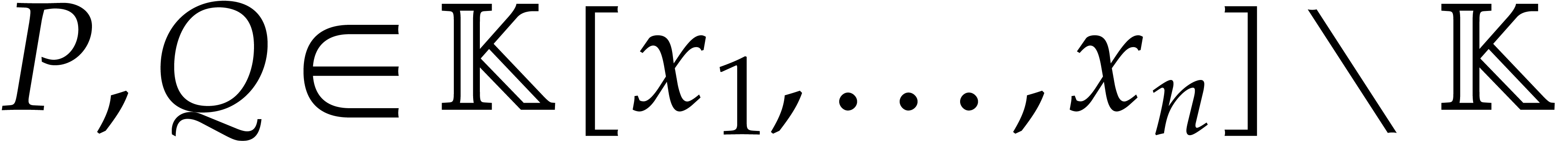

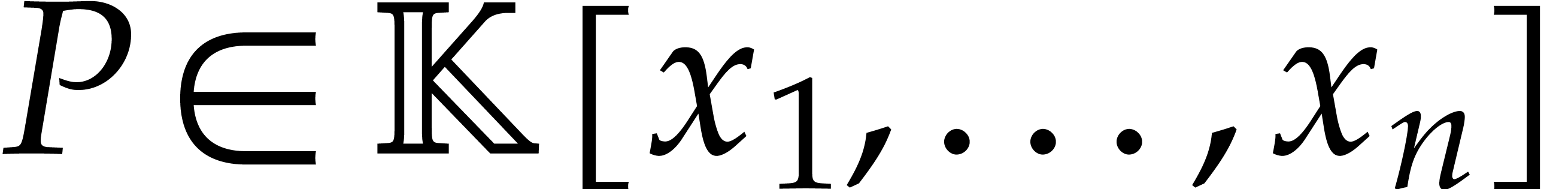

Let $\mathbb{K}$ be an effective field. Consider a sparse polynomial $f \in \mathbb{K}[x_1, \ldots, x_n]$, represented as

$$f = c_1 x^{e_1} + \cdots + c_t x^{e_t}, \tag{1.1}$$

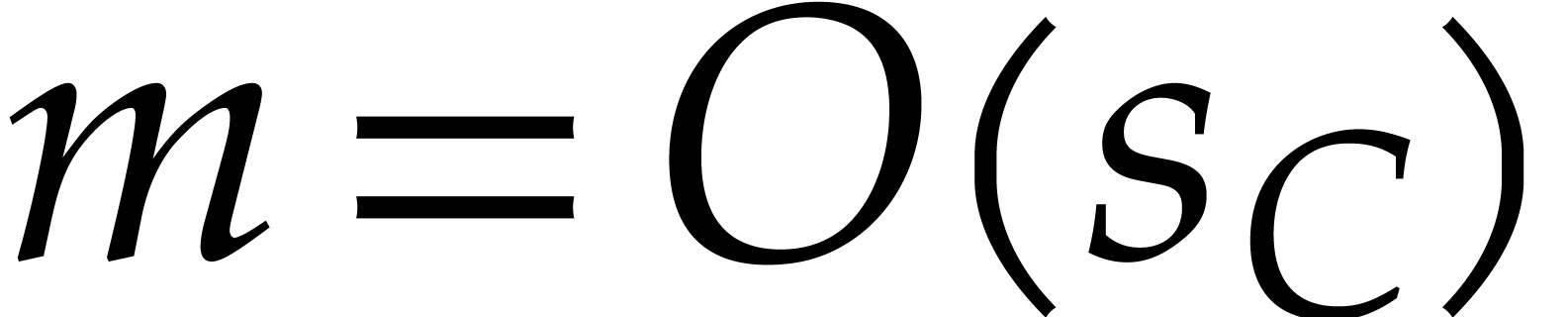

where $c_1, \ldots, c_t \in \mathbb{K} \setminus \{0\}$, $e_1, \ldots, e_t \in \mathbb{N}^n$, and $x^{e_i} = x_1^{e_{i,1}} \cdots x_n^{e_{i,n}}$ for any $i \in \{1, \ldots, t\}$. We call $t$ the size of $f$ and $\{e_1, \ldots, e_t\}$ its support. The aim of this paper is to factor $f$ into a product of irreducible sparse polynomials.

All algorithms that we will present are based on the approach of sparse evaluation and interpolation. Instead of directly working with sparse representations (1.1), the idea is to evaluate input polynomials at a sequence of well-chosen points, do the actual work on these evaluations, and then recover the output polynomials using sparse interpolation. The evaluation-interpolation approach leads to very efficient algorithms for many tasks, such as multiplication [48, 46], division, gcd computations [51], etc. In this paper, we investigate the complexity of factorization in this light.

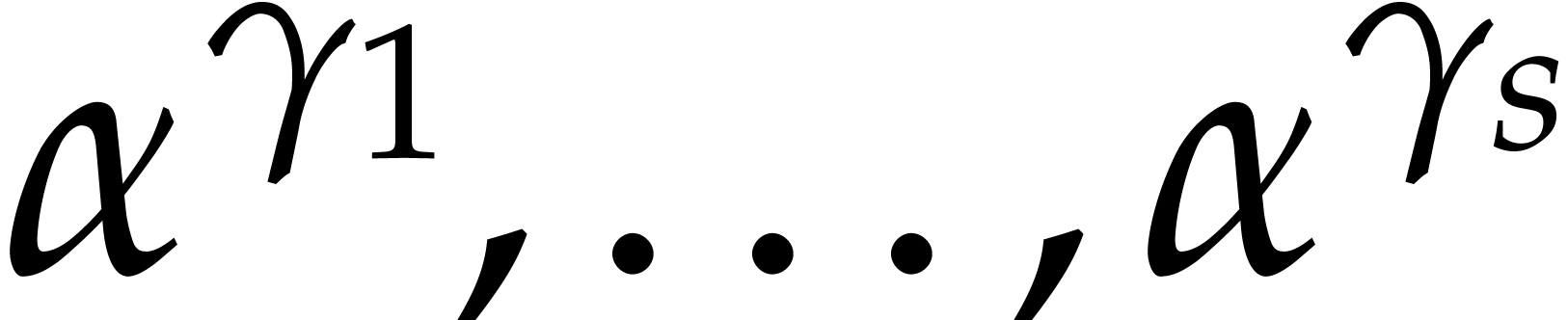

One particularly good way to choose the evaluation points is to take them in a geometric progression: for a fixed $\alpha = (\alpha_1, \ldots, \alpha_n) \in \mathbb{K}^n$, we evaluate at $\alpha^0, \alpha^1, \ldots, \alpha^{T-1}$, where $\alpha^k = (\alpha_1^k, \ldots, \alpha_n^k)$. This idea goes back to Prony [91] and was rediscovered, extended, and popularized by Ben-Or and Tiwari [5]. We refer to [92] for a nice survey. If $\mathbb{K}$ is a finite field, then a further refinement is to use suitable roots of unity, in which case both sparse evaluation and interpolation essentially reduce to discrete Fourier transforms [52, 46].
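To make the geometric-progression idea concrete, here is a minimal Python sketch over a prime field. The modulus `P`, the dictionary representation of sparse polynomials, and all function names are illustrative assumptions, not something fixed by the paper. The sketch relies on the identity $f(\alpha^k) = \sum_i c_i \beta_i^k$ with $\beta_i = \alpha^{e_i}$, so that all evaluations can be obtained from the $t$ values $\beta_i$ alone.

```python
# Sketch: evaluate a sparse polynomial at the points alpha^0, ..., alpha^(T-1).
# Assumptions (not from the paper): coefficients live in the prime field F_P,
# and a polynomial is stored as a dict {exponent tuple: nonzero coefficient}.

P = 1_000_003  # illustrative prime modulus


def monomial_values(poly, alpha):
    """Return the pairs (c_i, beta_i) with beta_i = alpha^(e_i) mod P."""
    pairs = []
    for exps, coeff in poly.items():
        beta = 1
        for a, e in zip(alpha, exps):
            beta = beta * pow(a, e, P) % P
        pairs.append((coeff % P, beta))
    return pairs


def eval_geometric(poly, alpha, T):
    """Return [f(alpha^0), ..., f(alpha^(T-1))] via f(alpha^k) = sum_i c_i * beta_i^k."""
    pairs = monomial_values(poly, alpha)
    powers = [1] * len(pairs)  # current values beta_i^k, starting with k = 0
    values = []
    for _ in range(T):
        values.append(sum(c * b for (c, _), b in zip(pairs, powers)) % P)
        powers = [b * beta % P for (_, beta), b in zip(pairs, powers)]
    return values


def eval_direct(poly, point):
    """Naive evaluation at a single point, used only as a cross-check."""
    total = 0
    for exps, coeff in poly.items():
        term = coeff % P
        for x, e in zip(point, exps):
            term = term * pow(x, e, P) % P
        total = (total + term) % P
    return total


# f = 3*x1^2*x2 + 5*x2^3 + 7, evaluated at (2^k, 3^k) for k = 0, 1, 2, 3
f = {(2, 1): 3, (0, 3): 5, (0, 0): 7}
alpha = (2, 3)
vals = eval_geometric(f, alpha, 4)
```

This naive loop uses about $tT$ field operations. It only illustrates the structure of the problem (a transposed Vandermonde product); it is not one of the quasi-linear algorithms that the complexity bounds refer to.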

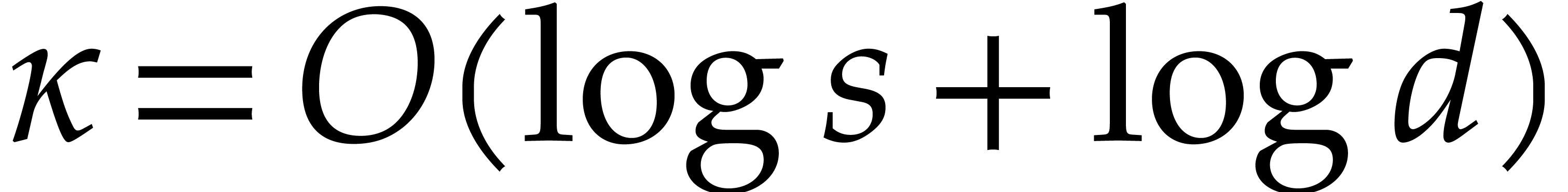

In this paper, we do not specify the precise algorithms that should be used for sparse evaluation and interpolation, but we will always assume that the evaluation points form geometric progressions. Then the cost $\mathsf{S}(T)$ of sparse evaluation or interpolation at $T$ such points is quasi-linear in $T$. We refer to Sections 2.1, 2.3, and 2.4 for more details on this cost function $\mathsf{S}$ and the algebraic complexity model that we assume.

One important consequence of relying on geometric progressions is that this constrains the type of factorization algorithms that will be efficient. For instance, several existing methods start with the application of random shifts $x_i \mapsto x_i + a_i$ for one or more variables $x_i$. Since such shifts do not preserve geometric progressions, this is a technique that we must avoid. On the other hand, monomial transformations do preserve geometric progressions and we will see how to make use of this fact.
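The contrast between shifts and monomial transformations can be checked numerically. In the following sketch, the exponent matrix `M`, the shift vector, and the helper names are hypothetical choices made for illustration only. Exact integers are used so that the comparison does not depend on a particular field.

```python
# Sketch: monomial maps send geometric progressions to geometric progressions,
# whereas shifts do not. Plain Python integers keep the check exact.

def geometric_point(alpha, k):
    """Return alpha^k = (alpha_1^k, ..., alpha_n^k)."""
    return tuple(a ** k for a in alpha)


def monomial_map(point, M):
    """Send x to the tuple whose i-th entry is the monomial prod_j x_j^M[i][j]."""
    image = []
    for row in M:
        value = 1
        for x, m in zip(point, row):
            value *= x ** m
        image.append(value)
    return tuple(image)


def shift_map(point, a):
    """Send x to x + a coordinate-wise."""
    return tuple(x + ai for x, ai in zip(point, a))


def is_geometric(points):
    """Check that points[k] equals points[1]^k coordinate-wise for every k."""
    base = points[1]
    return all(p == geometric_point(base, k) for k, p in enumerate(points))


alpha = (2, 3)
M = [[1, 0], [2, 1]]  # hypothetical map: x1 -> x1, x2 -> x1^2 * x2
progression = [geometric_point(alpha, k) for k in range(5)]

after_monomial = [monomial_map(p, M) for p in progression]  # points (2^k, 12^k)
after_shift = [shift_map(p, (1, 1)) for p in progression]   # points (2^k + 1, 3^k + 1)
```

Under the monomial map, the image of $\alpha^k$ is again the $k$-th power of a fixed point, here $(2, 12)$, so evaluation at a geometric progression remains available after the change of variables. The shifted points have no such structure.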

The main goal of this paper is to develop fast algorithms for factoring sparse polynomials under these constraints. Besides the top-level problem of factorization into irreducibles, we also consider several interesting subtasks, such as gcd computations, Hensel lifting, content-free and square-free factorization, and the extraction of multiple roots. While relying on known techniques, we shall show how to reconcile them with the above constraints.

Our complexity bounds are expressed in terms of the maximal size and total degree of the input and output polynomials. In practical applications, total degrees often remain reasonably small, so we typically allow for a polynomial dependence on the total degree times the required number of evaluation/interpolation points. In this particular asymptotic regime, our complexity bounds are very sharp and they improve on the bounds from the existing literature.

Concerning the top-level problem of decomposing sparse polynomials into irreducible factors, we develop two main approaches: a recursive one on the dimension and a more direct one based on simultaneous lifting with respect to all but one of the variables. We will present precise complexity bounds and examples of particularly difficult cases.

The factorization of polynomials is a fundamental problem in computer algebra. Since we are relying on sparse interpolation techniques, it is also natural to focus exclusively on randomized algorithms of Monte Carlo type. For some deterministic algorithms, we refer to [61, 101, 72].

Before considering multivariate polynomials, we need an algorithm for factoring univariate polynomials. Throughout this paper, we assume that we have an algorithm for this task (it can be shown that the mere assumption of $\mathbb{K}$ being effective is not sufficient [27, 28]).

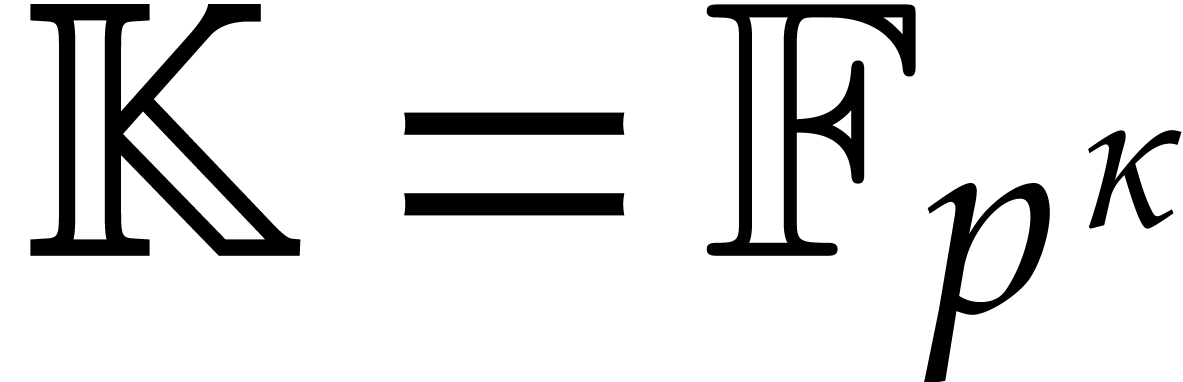

In practice, we typically have $\mathbb{K} = \mathbb{Q}$, $\mathbb{C}$, $\mathbb{Q}_p$, or $\mathbb{F}_{p^k}$ for some prime $p$ and $k \geq 1$. Most basic is the case when $\mathbb{K}$ is a finite field, and various efficient probabilistic methods have been proposed for this case. An early such method is due to Berlekamp [6, 7]. A very efficient algorithm that is also convenient to implement is due to Cantor and Zassenhaus [16]. Asymptotically more efficient methods have been developed since [67, 70] as well as specific improvements for the case when the extension degree $k$ is composite [53]. See also [31, Chapter 14] and [65].

Rational numbers can either be regarded as a subfield of $\mathbb{C}$ or $\mathbb{Q}_p$. For asymptotically efficient algorithms for the approximate factorizations of univariate polynomials over $\mathbb{C}$, we refer to [98, 87, 83]. When reducing a polynomial in $\mathbb{Z}[x]$ modulo $p$ for a sufficiently large random prime, factorization over $\mathbb{Q}_p$ reduces to factorization over $\mathbb{F}_p$ via Hensel lifting [97, 42, 107]. For more general factorization methods over $\mathbb{Q}_p$, we refer to [26, 20, 82, 39, 3].

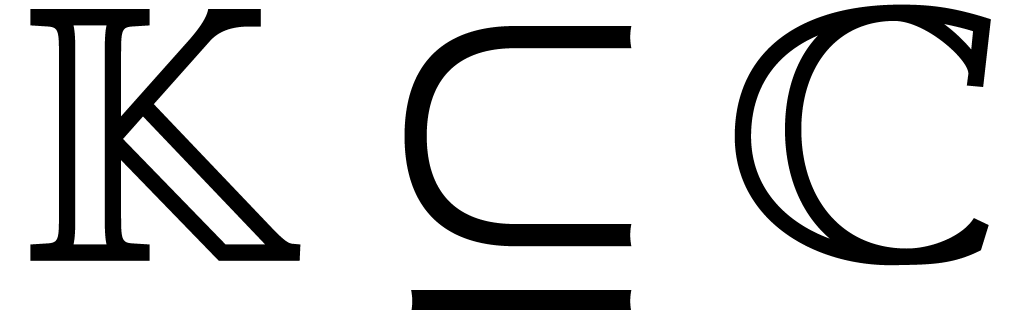

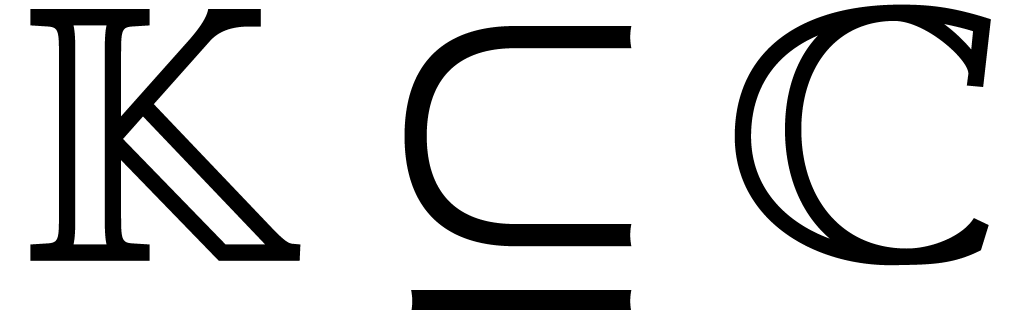

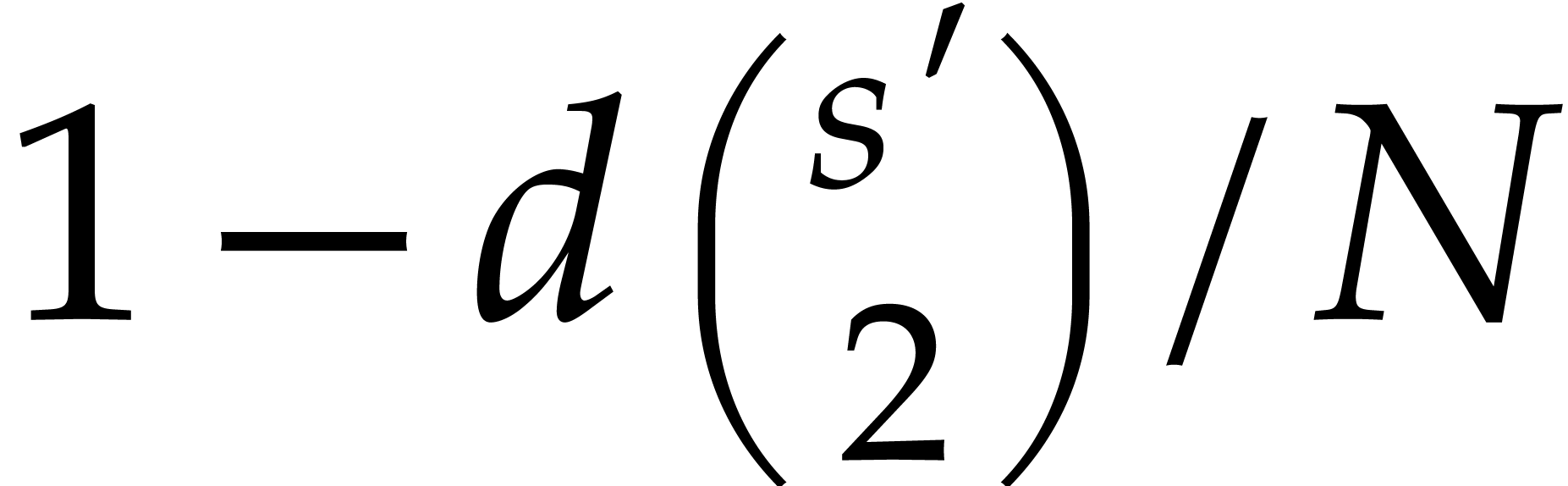

Now let $f \in \mathbb{Q}[x]$ and assume that we have an irreducible factorization $f = f_1 \cdots f_r$ with $f_1, \ldots, f_r \in \mathbb{K}[x]$ for $\mathbb{K} = \mathbb{C}$ or $\mathbb{K} = \mathbb{Q}_p$. (In practice, we require that $f_1, \ldots, f_r$ are known with sufficient precision.) In order to turn this into a factorization over $\mathbb{Q}$, we need a way to recombine the factors, i.e., to find the subsets $I \subseteq \{1, \ldots, r\}$ for which $\prod_{i \in I} f_i \in \mathbb{Q}[x]$. Indeed, if $f$ is irreducible in $\mathbb{Q}[x]$ and $\deg f \geq 2$, then $f$ is never irreducible in $\mathbb{C}[x]$ and irreducible with probability  in $\mathbb{Q}_p[x]$ for a large random prime $p$. The first recombination method that runs in polynomial time is due to Lenstra, Lenstra, and Lovász [77]. Subsequently, many improvements and variants of this LLL-algorithm have been developed [44, 4, 85, 86, 94].

The problem of factoring a bivariate polynomial $f \in \mathbb{K}[x_1, x_2]$ over $\mathbb{K}$ is similar in many regards to factoring polynomials with rational coefficients. Indeed, for a suitable random prime $p$, we have seen above that the latter problem reduces to univariate factorization over $\mathbb{F}_p$, Hensel lifting, and factor recombination. In a similar way, after factoring $f(x_1, c)$ for a sufficiently random $c \in \mathbb{K}$ (possibly in an extension field of $\mathbb{K}$, whenever $\mathbb{K}$ is a small finite field), we may use Hensel lifting to obtain a factorization in $\mathbb{K}[[x_2 - c]][x_1]$, and finally recombine the factors. The precise algorithms for factor recombination are slightly different in this context [29, 71, 4, 74] (see also [93, 95] for earlier related work), although they rely on similar ideas.

Hensel lifting naturally generalizes to polynomials in three or more variables $x_1, \ldots, x_n$. Many algorithms for multivariate polynomial factorization rely on it [84, 104, 103, 109, 60, 32, 33, 62, 61, 8, 72, 80, 81, 17], as well as many implementations in computer algebra systems. One important property of higher dimensional Hensel lifting is that factor recombination can generally be avoided with high probability, contrary to what we saw for $p$-adic and bivariate Hensel lifting. This is due to Hilbert and Bertini's irreducibility theorems [43, 10]:

Theorem 1.1. Assume that $f \in \mathbb{K}[x_1, \ldots, x_n]$ is irreducible and of total degree $d$. Let $S$ be the set of points $(a_1, \ldots, a_n, b_1, \ldots, b_n, c_1, \ldots, c_n) \in \mathbb{K}^{3n}$ for which

$$f(a_1 u + b_1 v + c_1, \ldots, a_n u + b_n v + c_n) \tag{1.2}$$

is irreducible in $\mathbb{K}[u, v]$. Then $S$ is a Zariski open subset of $\mathbb{K}^{3n}$, which is dense over the algebraic closure of $\mathbb{K}$.

Effective versions of these theorems can be used to directly reduce the factorization problem in dimension $n$ to a bivariate problem (and even to a univariate problem if $\mathbb{K} = \mathbb{Q}$, using similar ideas). We refer to [74] and [75, Chapitre 6] for a recent presentation of how to do this and to [100, Section 6.1] for the mathematical background.

In order to analyze the computational complexity of factorization, we first need to specify the precise way we represent our polynomials. When using a dense representation (e.g. storing all monomials of total degree $\leq d$ with their (possibly zero) coefficients), Theorem 1.1 allows us to directly reduce to the bivariate case (if $\mathbb{K}$ is very small, then one may need to replace $\mathbb{K}$ by a suitable algebraic extension). The first polynomial time reduction of this kind was proposed by Kaltofen [60]. More recent bounds that exploit fast dense polynomial arithmetic can be found in [72].

Another popular representation is the “black box representation”, in which case we are only given an algorithm for the evaluation of our polynomial $f$. Often this algorithm is actually a straight-line program (SLP) [14], which corresponds to the “SLP representation”. In these cases, the relevant complexity measure is the length of the SLP or the maximal number of steps that are needed to compute one black box evaluation. Consequently, affine changes of variables (1.2) only marginally increase the program size. This has been exploited in order to derive polynomial time complexity bounds for factoring in this model [63, 68]; see also [30, 17, 18]. Here we stress that the output factors are also given in black box or SLP representation.

Plausibly the most common representation for multivariate polynomials in computer algebra is the sparse one (1.1). Any sparse polynomial naturally gives rise to an SLP of roughly the same size. Sparse interpolation also provides a way to convert in the opposite direction. However, for an SLP of length $L$, recovering its sparse representation $f$ requires on the order of $t$ evaluations of the SLP, each of cost $L$, where $t$ is the number of terms of $f$. A priori, the back and forth conversion therefore takes quadratic time. While it is theoretically possible to factor sparse polynomials using the above black box methods, this is suboptimal from a complexity perspective.

Unfortunately, general affine transformations (1.2) destroy sparsity, so additional ideas are needed for the design of efficient factorization methods based on Hilbert-Bertini-like irreducibility theorems. Dedicated algorithms for the sparse model have been developed in [32, 8, 79, 81].

There are two ways to counter the loss of sparsity under affine transformations, both of which will be considered in the present paper. One possibility is to use Hensel lifting successively, with respect to one variable at a time. Another approach is to use a more particular type of change of variables. However, both approaches require $f$ to be of a suitable form to allow for Hensel lifting. Some references for Hensel lifting in the context of sparse polynomials are [62, 8, 78, 80, 81, 17].

For most applications in computer algebra, the total degree of large sparse polynomials is typically much smaller than the number of terms. The works in the above references mainly target this asymptotic regime. The factorization of “supersparse” polynomials has also been considered in [40, 37]. The survey talk [96] discusses the complexity of polynomial factorizations for yet other polynomial representations.

The theory of polynomial factorization involves several other basic algorithmic tasks that are interesting for their own sake. We already mentioned the importance of Hensel lifting. Other fundamental operations are gcd computations, multiple root extractions, square-free factorizations, and determining the content of a polynomial. We refer to [31] for classical univariate algorithms. As to sparse multivariate polynomials, there exist many approaches for gcd computations [21, 108, 68, 66, 59, 22, 56, 76, 51, 58].

Whenever convenient, we will assume that the characteristic of $\mathbb{K}$ is either zero or sufficiently large. This will allow us to avoid technical non-separability issues; we refer to [34, 102, 73] for algorithms to deal with such issues. A survey of multivariate polynomial factorization over finite fields (including small characteristic) can be found in [65]. Throughout this paper, factorizations will be done over the ground field $\mathbb{K}$. Some algorithms for “absolute factorization” over the algebraic closure $\bar{\mathbb{K}}$ of $\mathbb{K}$ can be found in [64, 19, 23]; alternatively, one may mimic computations in $\bar{\mathbb{K}}$ using dynamic evaluation [24, 49].

The goal of this paper is to redevelop the theory of sparse polynomial factorization, by taking advantage as fully as possible of evaluation-interpolation techniques at geometric sequences. The basic background material is recalled in Section 2. We recall that almost all algorithms in the paper are randomized, of Monte Carlo type. We also note that the correctness of a factorization can easily be verified, either directly or with high probability, by evaluating the polynomial and the product of the potential factors at a random point. (On the other hand, this approach does not yield a probabilistic irreducibility test.)
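The probabilistic verification mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions: the prime `P`, the dictionary representation of sparse polynomials, and the names `evaluate` and `probably_equal` are hypothetical, and the coefficients are assumed to lie in the prime field with `P` elements.

```python
# Sketch: Monte Carlo check that a sparse polynomial equals a product of
# candidate factors, by comparing values at random points of F_P^n.
import random

P = 2**61 - 1  # illustrative large prime modulus (an assumption, not from the paper)


def evaluate(poly, point):
    """Evaluate a sparse polynomial {exponent tuple: coefficient} at a point mod P."""
    total = 0
    for exps, coeff in poly.items():
        term = coeff % P
        for x, e in zip(point, exps):
            term = term * pow(x, e, P) % P
        total = (total + term) % P
    return total


def probably_equal(poly, factors, nvars, trials=3, rng=random):
    """Return False if a mismatch is found; True means equality with high probability."""
    for _ in range(trials):
        point = tuple(rng.randrange(P) for _ in range(nvars))
        product = 1
        for g in factors:
            product = product * evaluate(g, point) % P
        if evaluate(poly, point) != product:
            return False  # a single mismatch disproves the factorization
    return True


# f = x1^2 - x2^2 = (x1 - x2) * (x1 + x2)
f = {(2, 0): 1, (0, 2): -1}
g1 = {(1, 0): 1, (0, 1): -1}
g2 = {(1, 0): 1, (0, 1): 1}
bad = {(1, 0): 1, (0, 1): 2}  # x1 + 2*x2, not a factor of f
```

A `False` answer is always correct, whereas a `True` answer can be wrong with a small probability that decreases with the number of trials and with the size of the field. As noted above, such a check only confirms the product; it says nothing about the irreducibility of the factors.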

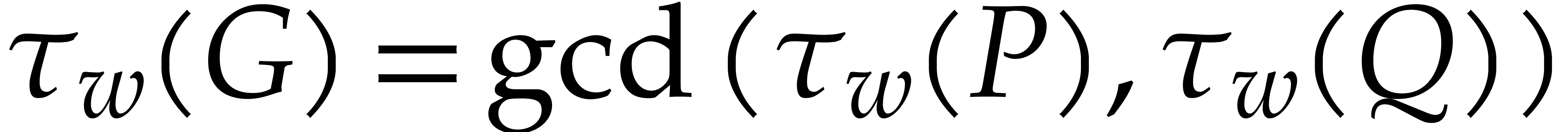

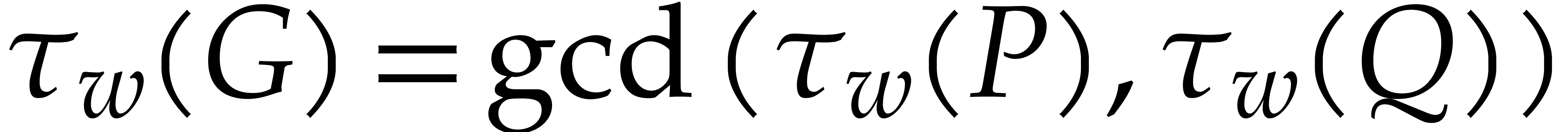

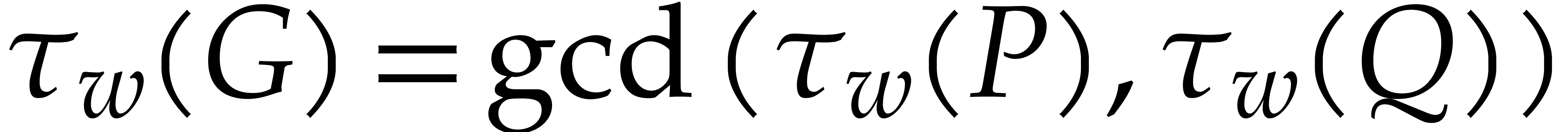

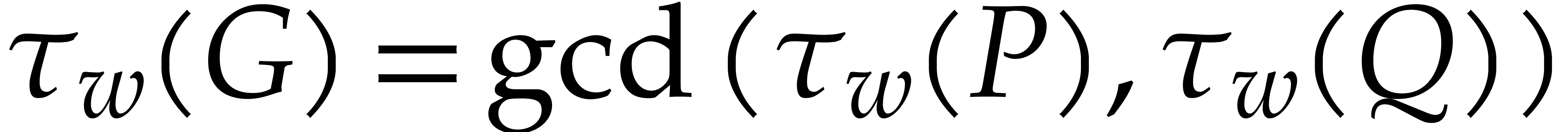

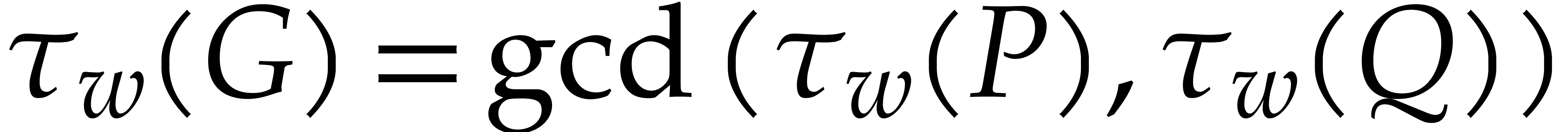

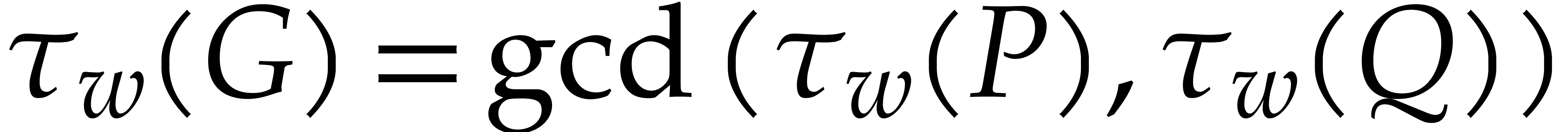

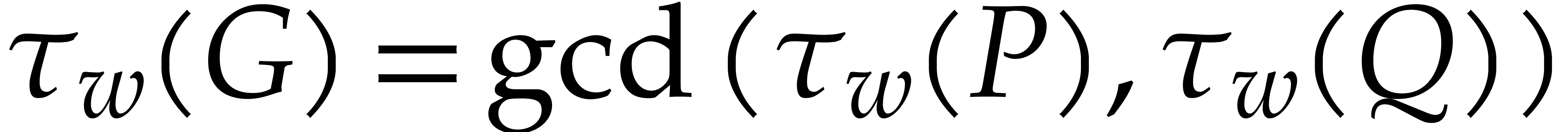

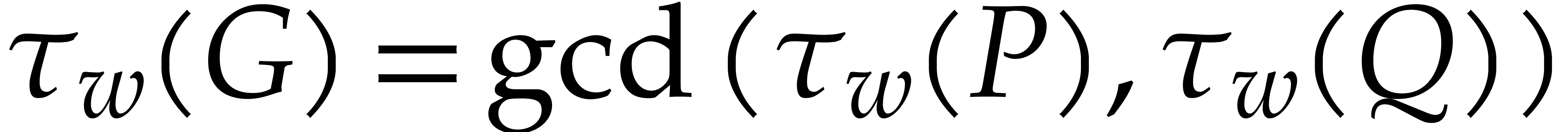

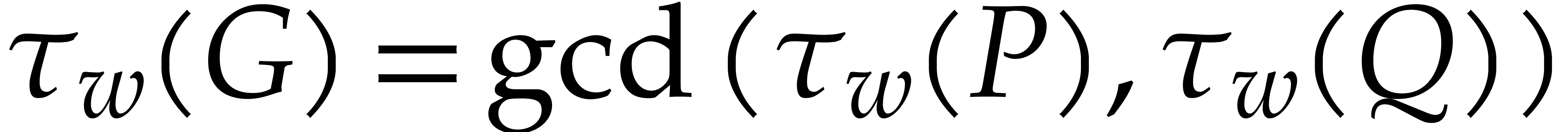

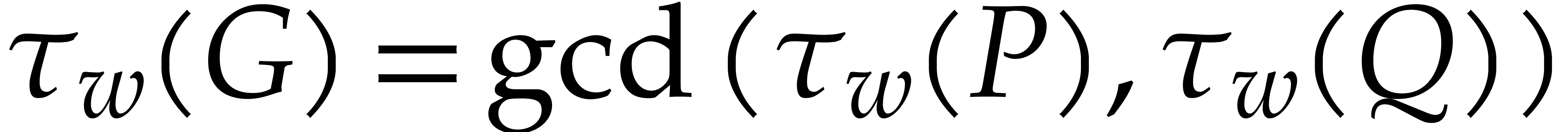

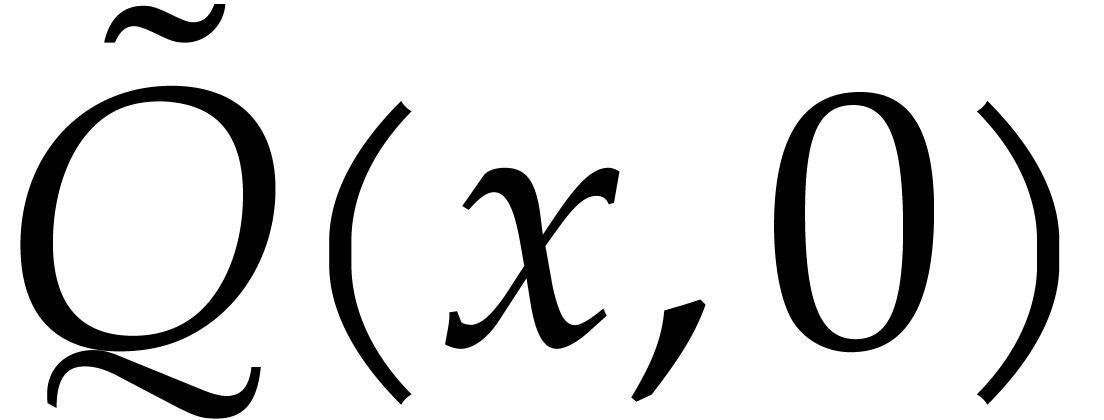

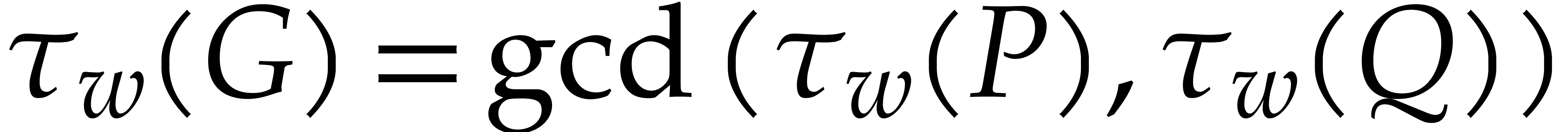

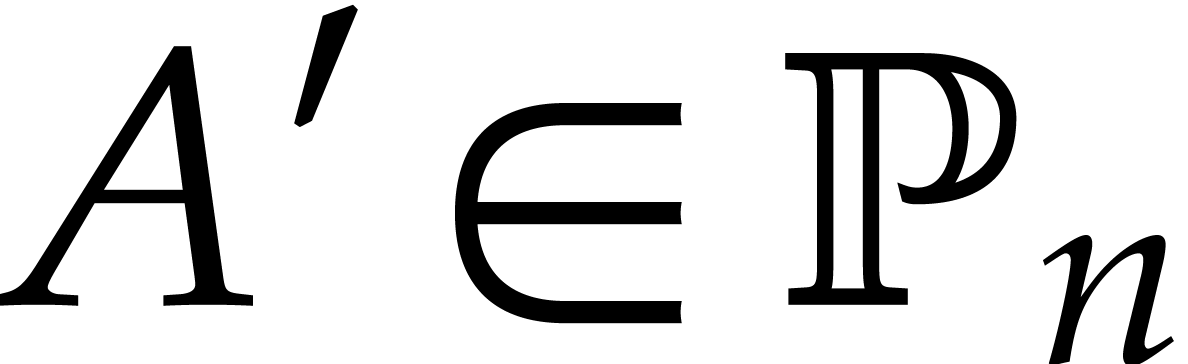

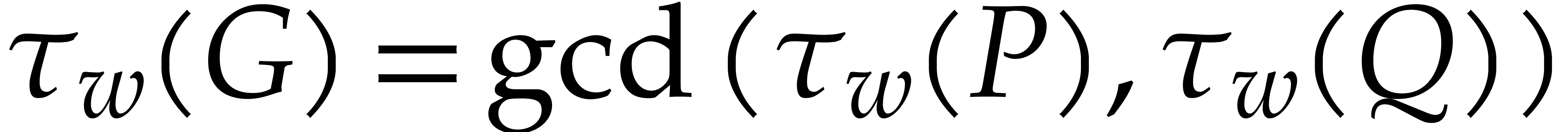

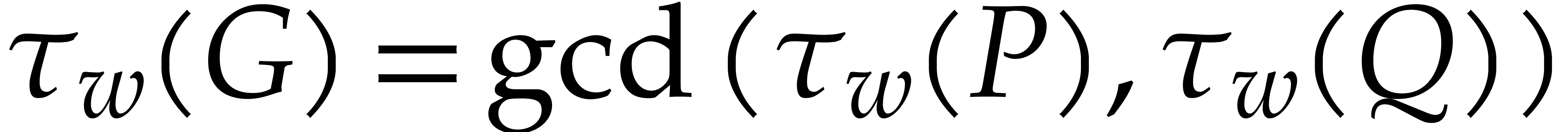

As an appetizer, we start in Section 3 with the problem of

multivariate gcd computation. This provides a nice introduction for the

main two approaches used later in the paper: induction on dimension and

direct reduction to the univariate (or bivariate or low dimensional)

case. It also illustrates the kind of complexity bounds that we are

aiming for. Consider the computation of the gcd  of two sparse polynomials

of two sparse polynomials  .

Ideally speaking, setting

.

Ideally speaking, setting  ,

,

,

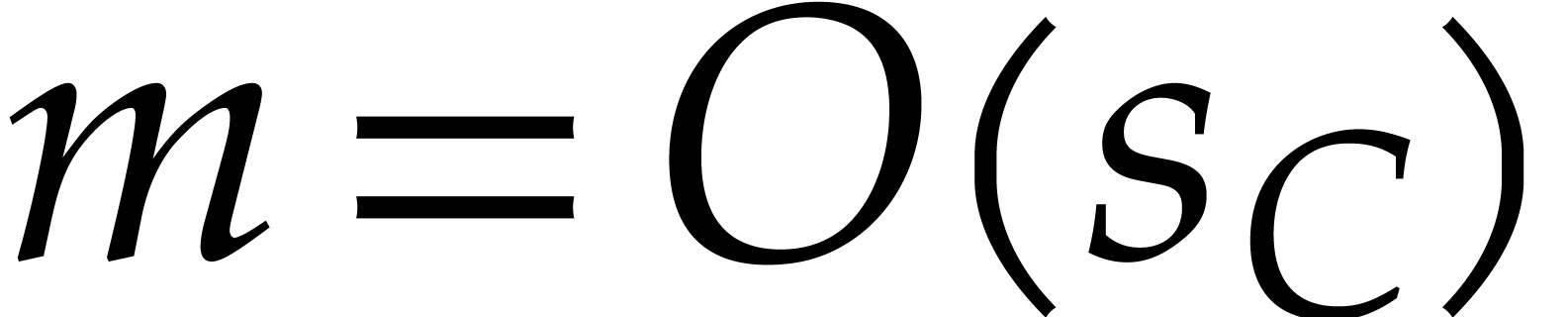

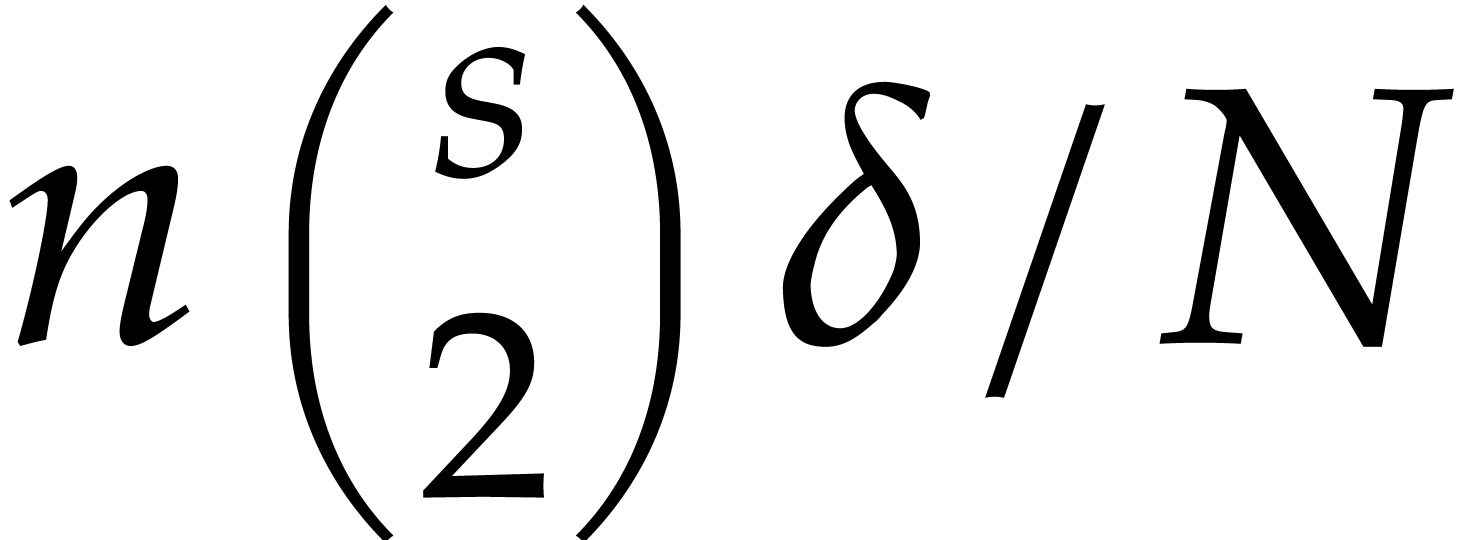

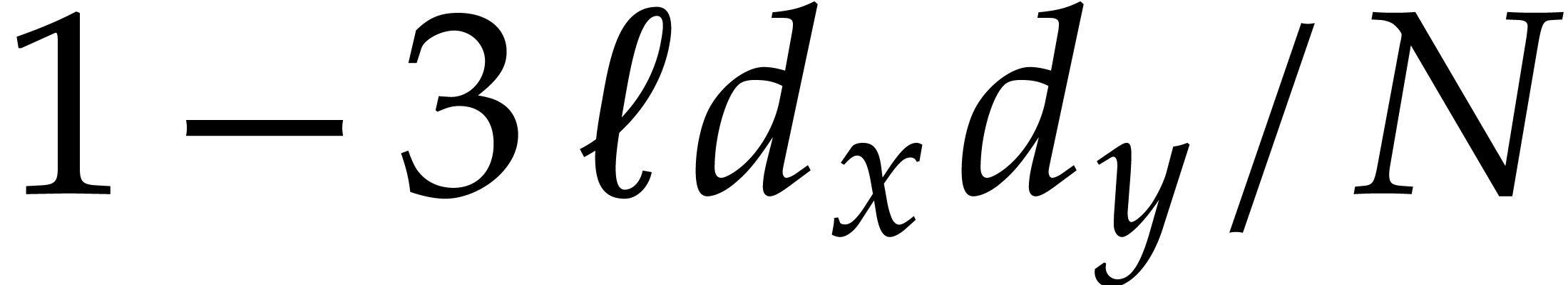

,  , we are aiming for a complexity bound of the form

, we are aiming for a complexity bound of the form

|

(1.3) |

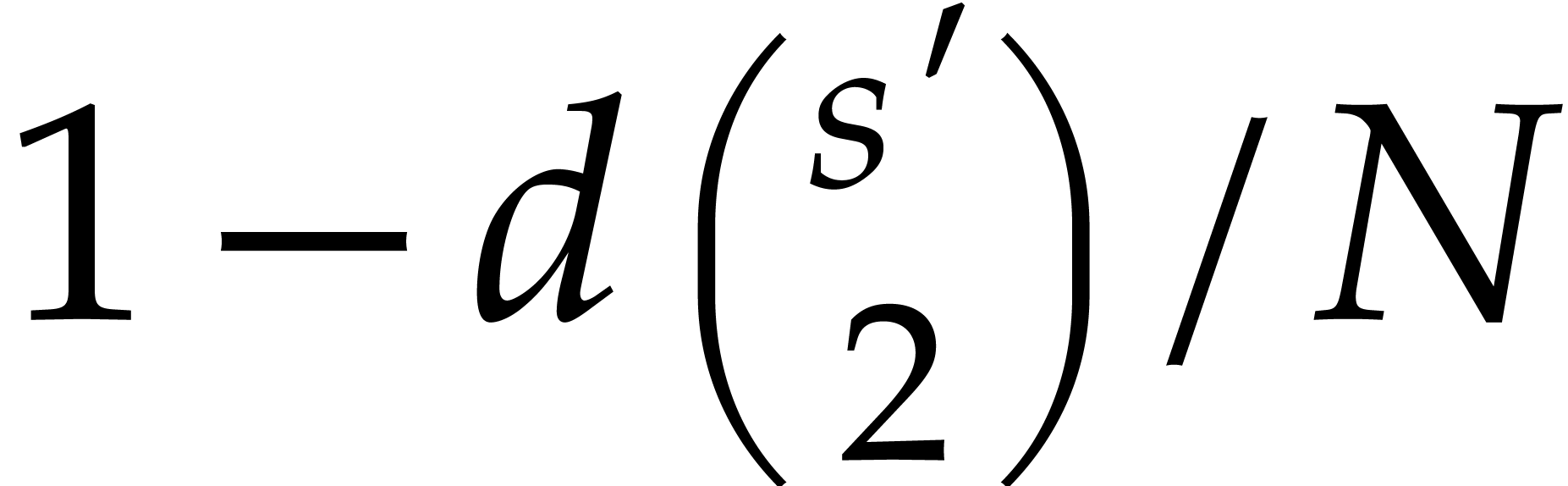

where  is a constant. Since  is typically much smaller than  , we can afford the non-trivial overhead with respect to  in the term  . The inductive approach on the dimension $n$ achieves the complexity (1.3) with  , but its worst case complexity is  . This algorithm seems to be new. The second approach uses a direct reduction to univariate gcd computations via so-called “regularizing weights” and achieves the complexity (1.3) with  , and even  for some practical examples (e.g., when the gcd is monic in one of the variables). This algorithm is a sharpened version of the algorithm from [51, Section 4.3].

Most existing algorithms for multivariate polynomial factorization reduce to the univariate or bivariate case. Direct reduction to the univariate case is only possible for certain types of coefficients, such as integers, rational numbers, or algebraic numbers. Reduction to the bivariate case works in general, thanks to Theorem 1.1, and this is even interesting when $\mathbb{K} = \mathbb{Q}$: first reduce modulo a suitable prime $p$, then factor over $\mathbb{F}_p$, and finally Hensel lift to obtain a factorization over $\mathbb{Q}$. In this paper, we will systematically opt for the bivariate reduction strategy. For this reason, we have included a separate Section 4 on bivariate factorization and related problems. This material is classical, but is recalled to keep the paper self-contained and for the convenience of the reader.

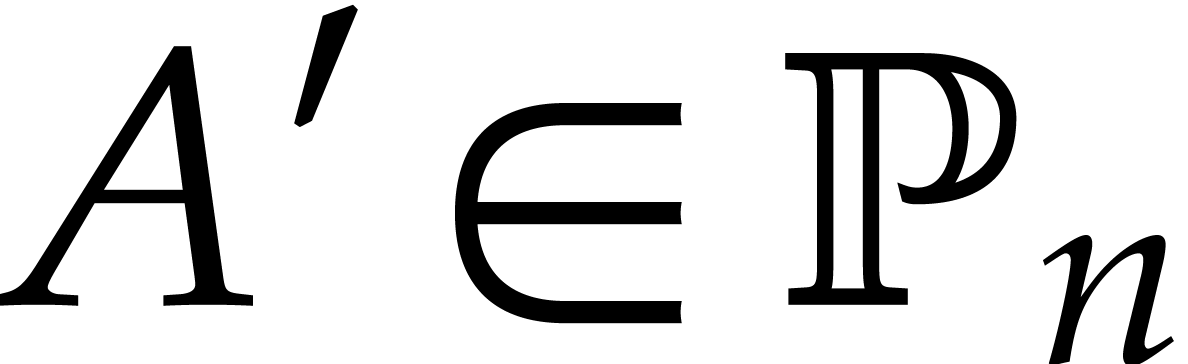

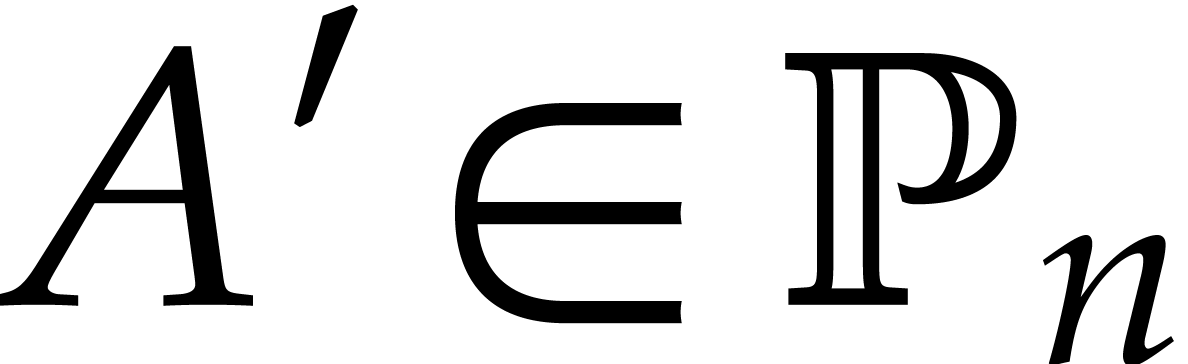

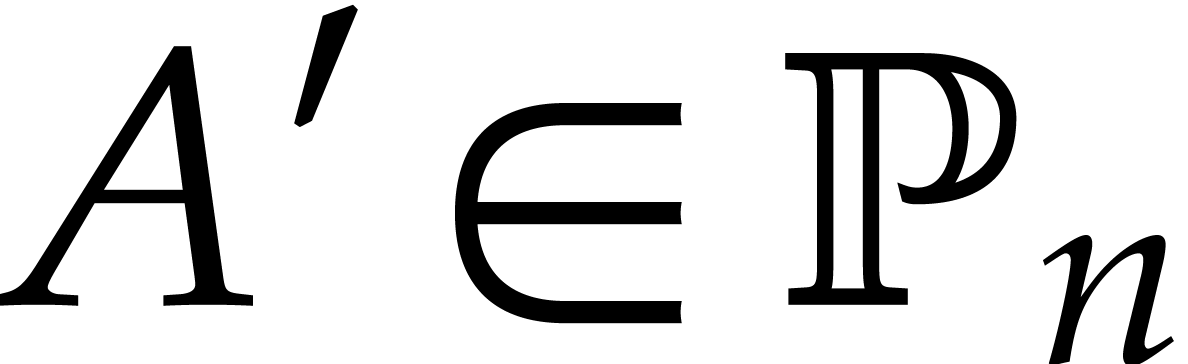

If $\mathbb{K}$ is a finite field, then we already noted that multivariate factorization does not directly reduce to the univariate case. Nevertheless, such direct reductions are possible for some special cases of interest: content-free factorization, extraction of multiple roots, and square-free factorization. In Section 5, we present efficient algorithms for these tasks, following the “regularizing weights” approach that was introduced in Section 3.2 for gcd computations. All complexity bounds are of the form (1.3) for the relevant notions of output size, input-output size, and total degree.

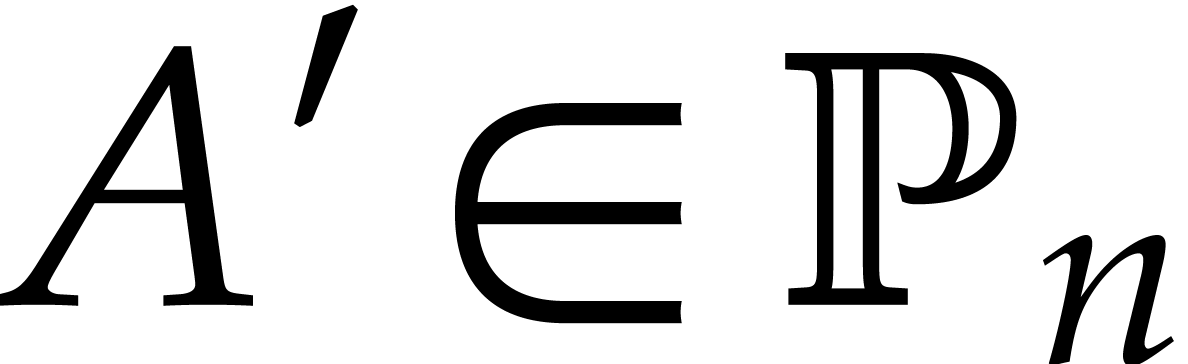

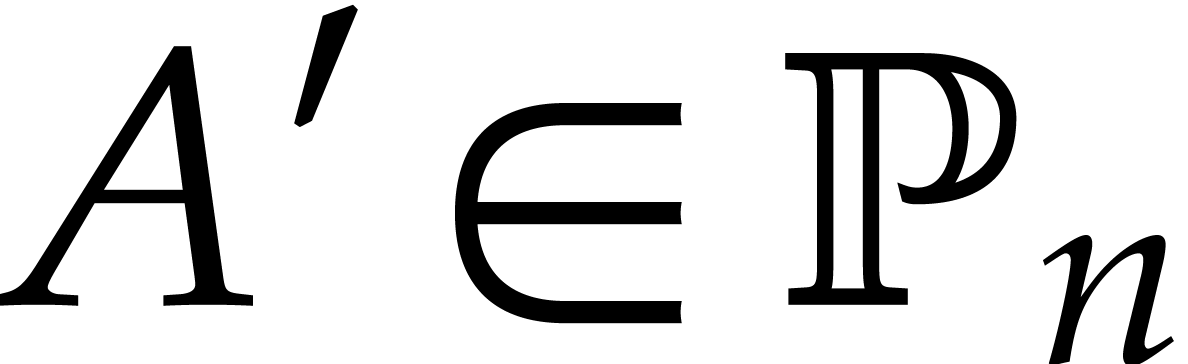

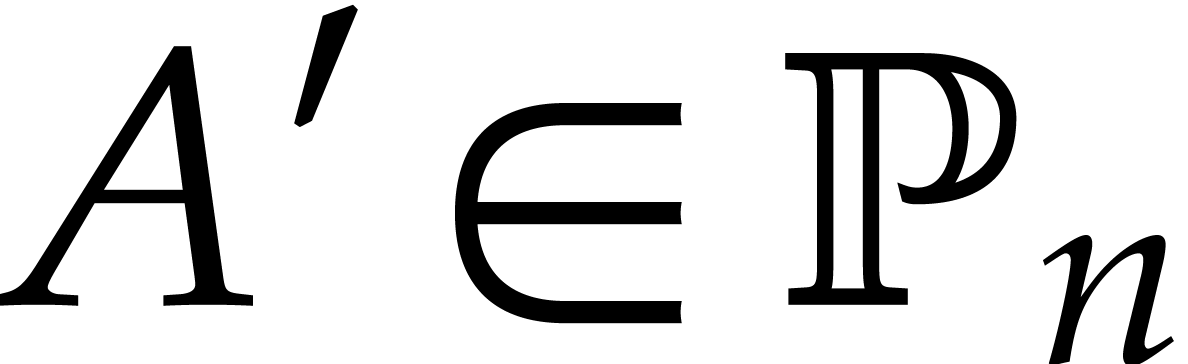

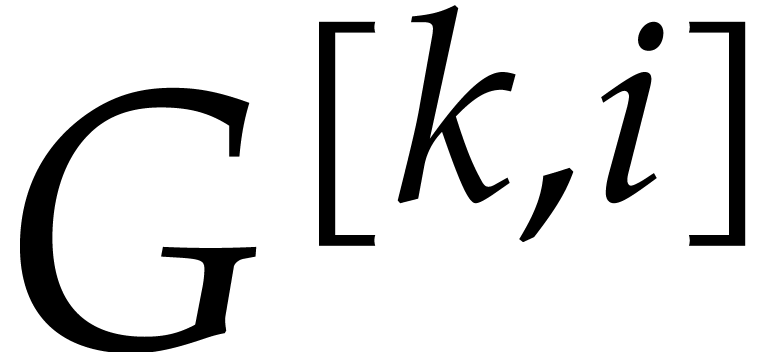

In Section 6, we turn to the factorization of a multivariate polynomial $f$ using induction on the dimension $n$. Starting with a coprime factorization of a bivariate projection $f(x_1, x_2, c_3, \ldots, c_n)$ of $f$ for random $c_3, \ldots, c_n \in \mathbb{K}$, we successively Hensel lift this factorization with respect to $x_3, \ldots, x_n$. Using a suitable evaluation-interpolation strategy, the actual Hensel lifting can be done on bivariate polynomials. This leads to complexity bounds of the form  with  . In fact, we separately consider factorizations into two or more factors. In the case of two factors, it is possible to first determine the smaller factor and then perform an exact division to obtain the other one. In the complexity bound, this means that  should really be understood as the number of evaluation-interpolation points, i.e. the minimum of the sizes of the two factors. It depends on the situation whether it is faster to lift a full coprime factorization or one factor at a time, although we expect the first approach to be fastest in most cases.

Since projections of this kind are only very special types of affine transformations, Theorem 1.1 does not apply. Therefore, the direct inductive approach from Section 6 does not systematically lead to a full irreducible factorization of $f$. In Remarks 6.14 and 6.15, we give an example where our approach fails, together with two different heuristic remedies (which both lead to similar complexity bounds, but with  or higher).

In the last Section 7, we present another approach, which avoids induction on the dimension $n$ and the corresponding overhead in the complexity bound. The idea is to exploit properties of the Newton polytope of $f$ and “face factorizations” (i.e. factorizations of restrictions of $f$ to faces of its Newton polytope). In the most favorable case, there exists a coprime edge factorization, which can be Hensel lifted into a full factorization, and we obtain a complexity bound of the form (1.3). In less favorable cases, different face factorizations need to be combined. Although this yields a similar complexity bound, the details are more technical. We refer to [1, 105] for a few existing ways to exploit Newton polytopes for polynomial factorization.

In very unfavorable cases (see Section 7.6), the factorization of $f$ cannot be recovered from its face factorizations at all. In Section 7.7, we conclude with a fully general algorithm for irreducible factorization. This algorithm is not as practical, but addresses pathologically difficult cases through the introduction of a few extra variables. Its cost is  plus the cost of one univariate factorization of degree  .

In this paper, we will measure the complexity of algorithms using the algebraic complexity model [14]. In addition, we assume that it is possible to sample a random element from $\mathbb{K}$ (or a subset of $\mathbb{K}$) in constant time. We will use $\tilde{O}(T)$ as a shorthand for $T (\log T)^{O(1)}$.

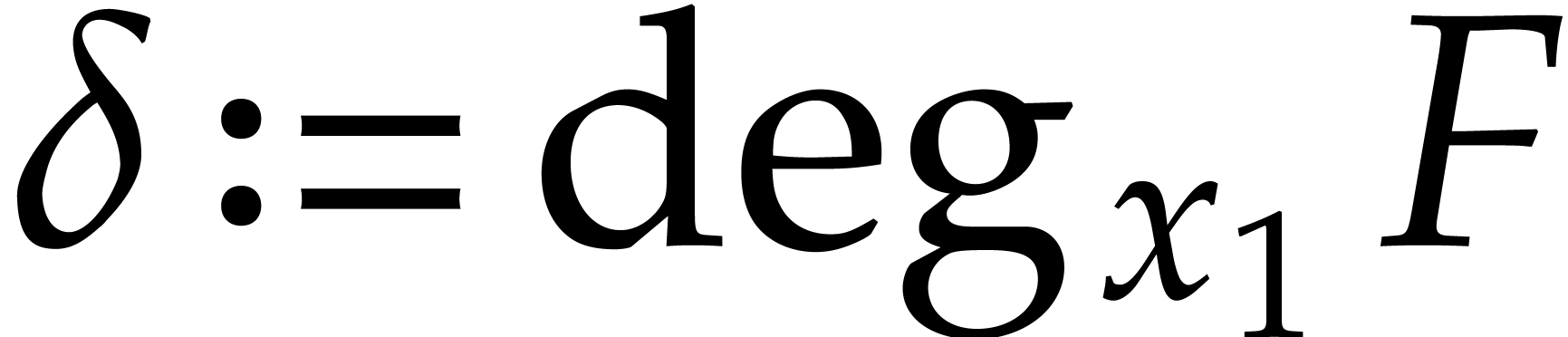

We let  and  . We also define  , for any ring  . Given a multivariate polynomial $f \in \mathbb{K}[x_1, \ldots, x_n]$ and $i \in \{1, \ldots, n\}$, we write $\deg_{x_i} f$ (resp. $\operatorname{val}_{x_i} f$) for the degree (resp. valuation) of $f$ in $x_i$. We also write $\deg f$ (resp. $\operatorname{val} f$) for the total degree (resp. valuation) of $f$, and we set  . Recall that  stands for the number of terms of $f$ in its sparse representation (1.1).
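For readers who prefer code to notation, the quantities just introduced can be read off directly from a sparse representation. The sketch below assumes a hypothetical dictionary representation `{exponent tuple: nonzero coefficient}` of a non-zero polynomial; the function names are illustrative and not taken from the paper.

```python
# Sketch: partial degree, partial valuation, total degree, total valuation and
# number of terms of a non-zero sparse polynomial stored as
# {exponent tuple: nonzero coefficient}. Variables are indexed from 0 here.

def partial_degree(poly, i):
    """Largest exponent of the i-th variable among all terms."""
    return max(exps[i] for exps in poly)


def partial_valuation(poly, i):
    """Smallest exponent of the i-th variable among all terms."""
    return min(exps[i] for exps in poly)


def total_degree(poly):
    """Largest sum of exponents among all terms."""
    return max(sum(exps) for exps in poly)


def total_valuation(poly):
    """Smallest sum of exponents among all terms."""
    return min(sum(exps) for exps in poly)


def size(poly):
    """Number of terms in the sparse representation."""
    return len(poly)


# f = 4*x1^3*x2 + x1*x2^2 - 6*x1*x2^4
f = {(3, 1): 4, (1, 2): 1, (1, 4): -6}
```

For this example, the degree and valuation in the first variable are 3 and 1, the total degree is 5, the total valuation is 3, and the size is 3.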

Acknowledgment. We wish to thank Grégoire Lecerf for useful discussions during the preparation of this paper and the first anonymous referee for helpful comments and suggestions.

Let $\mathsf{M}(d)$ (or $\mathsf{M}_{\mathbb{K}}(d)$) be the cost to multiply two dense univariate polynomials of degree $\leq d$ in $\mathbb{K}[x]$. Throughout the paper, we make the assumption that $\mathsf{M}(d)/d$ is a non-decreasing function. In the algebraic complexity model [14], when counting the number of operations in $\mathbb{K}$, we may take $\mathsf{M}(d) = O(d \log d \log \log d)$ [15]. If $\mathbb{K}$ is a finite field $\mathbb{F}_q$, then one has  , under suitable number theoretic assumptions [41]. In this case, the corresponding bit complexity (when counting the number of operations on a Turing machine [88]) is  .

For polynomials  of degree  it is possible to find the unique  , such that  with  . This is the problem of univariate division with remainder, which can be solved in time  by applying Newton iteration [31, Section 9.1]. A related task is univariate root extraction: given  and  , check whether  is of the form  for some  and monic  , and determine  and  if so. For a fixed  , this can be checked, and the unique  can be found in  arithmetic operations in  by applying Newton iteration [31, polynomial analogue of Theorem 9.28].
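As a hedged illustration of the Newton iteration mentioned above, the following Python sketch inverts a power series modulo a power of the variable over a prime field; fast division with remainder classically reduces to such an inversion applied to the reversed divisor. The prime `P`, the helper names, and the quadratic-time product are assumptions made for this sketch only; a fast implementation would plug in an asymptotically fast multiplication.

```python
P = 101  # illustrative prime; the text works over a general effective field

def mul_trunc(a, b, n):
    """Product of the coefficient lists a and b modulo x^n and modulo P (naive)."""
    out = [0] * n
    for i, ai in enumerate(a[:n]):
        for j, bj in enumerate(b[:n - i]):
            out[i + j] = (out[i + j] + ai * bj) % P
    return out

def inverse_series(f, n):
    """Return g with f * g = 1 modulo x^n, via the iteration g <- g * (2 - f * g)."""
    assert f[0] % P != 0, "the constant term must be invertible"
    g = [pow(f[0], -1, P)]
    prec = 1
    while prec < n:
        prec = min(2 * prec, n)          # each step doubles the precision
        fg = mul_trunc(f, g, prec)
        corr = [(-c) % P for c in fg]    # corr = 2 - f * g modulo x^prec
        corr[0] = (2 - fg[0]) % P
        g = mul_trunc(g, corr, prec)
    return g

f = [3, 1, 4, 1, 5]                      # 3 + x + 4 x^2 + x^3 + 5 x^4
g = inverse_series(f, 8)
```

The number of iterations is logarithmic in the target precision, which is why the total cost is dominated by the last few multiplications.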

Consider the Vandermonde matrix

where  are pairwise distinct. Given a column vector  with entries  , it is well known [11, 69, 12, 9, 45] that the products  ,  ,  , and  can all be computed in time  . These are respectively the problems of multi-point evaluation, (univariate) interpolation, transposed multi-point evaluation, and transposed interpolation. For fixed  , these complexities can often be further reduced to  using techniques from [45].
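To make the meaning of these matrix-vector products concrete, here is a small Python sketch over a prime field. It assumes the convention that row `i` of the Vandermonde matrix contains the powers of the `i`-th point (the displayed matrix is not reproduced here, so this indexing is an assumption), and it uses naive quadratic products rather than the fast algorithms cited above.

```python
P = 97  # illustrative prime modulus

def vandermonde(alphas):
    """Matrix V with V[i][j] = alphas[i]^j modulo P (assumed convention)."""
    n = len(alphas)
    return [[pow(a, j, P) for j in range(n)] for a in alphas]

def mat_vec(M, c):
    """Naive matrix-vector product modulo P."""
    return [sum(m * x for m, x in zip(row, c)) % P for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

alphas = [2, 3, 5, 7]                   # pairwise distinct points
c = [1, 0, 4, 9]                        # coefficients of 1 + 4 x^2 + 9 x^3
V = vandermonde(alphas)

evals = mat_vec(V, c)                   # multi-point evaluation of the polynomial
power_sums = mat_vec(transpose(V), c)   # transposed product: sum_i c[i] * alphas[i]^j
```

Under this convention, the transposed product is exactly the evaluation of a sparse polynomial with monomial values `alphas` and coefficients `c` along a geometric progression, which is how it enters sparse evaluation and interpolation.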

For our factorization algorithms, we will sometimes need to assume the

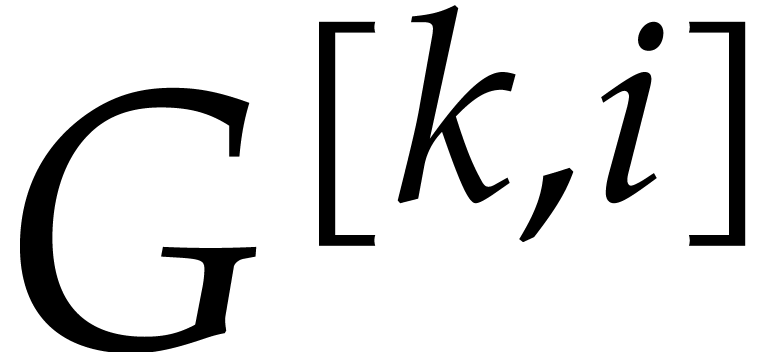

existence of an algorithm to factor univariate polynomials in  into irreducible factors. We will denote by

into irreducible factors. We will denote by  the cost of such a factorization as a function of degree

the cost of such a factorization as a function of degree

. We will always assume that

. We will always assume that

is non-decreasing. In particular

is non-decreasing. In particular  for all

for all  and

and  .

.

If  is the finite field

is the finite field  with

with  elements for some odd

elements for some odd  , then the best univariate factorization

methods are randomized of Las Vegas type. When allowing for such

algorithms, we may take

, then the best univariate factorization

methods are randomized of Las Vegas type. When allowing for such

algorithms, we may take  , by

using Cantor and Zassenhaus' method from [16]. With the use

of fast modular composition [70], we may take

, by

using Cantor and Zassenhaus' method from [16]. With the use

of fast modular composition [70], we may take

but this algorithm is only relevant in theory, for the moment [50].

If the extension degree  is composite, then this

can be exploited to lower the practical complexity of factorization [53].

is composite, then this

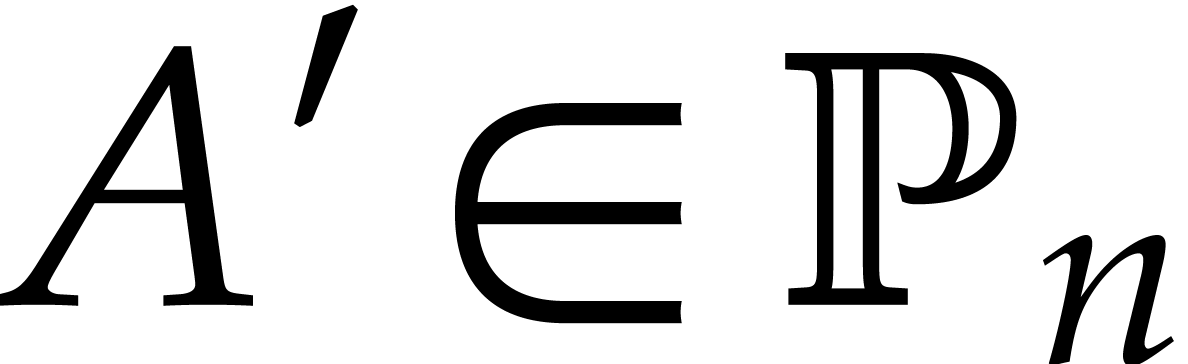

can be exploited to lower the practical complexity of factorization [53].

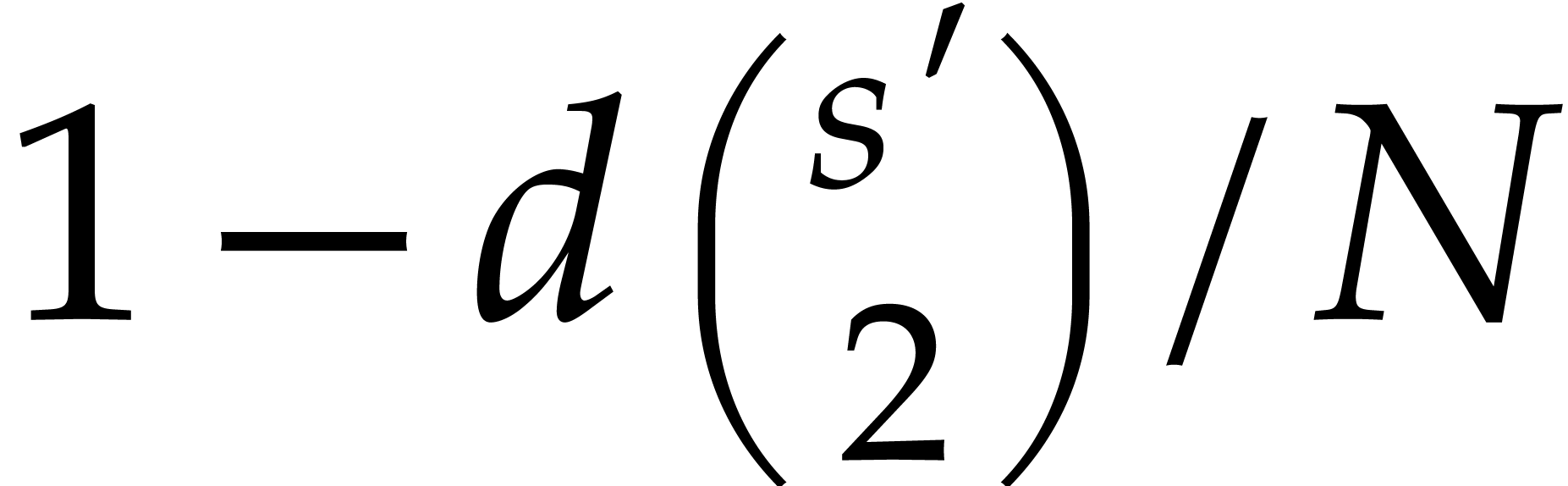

In all probabilistic algorithms in this paper,  will stand for a sufficiently large integer such that “random elements in  ” are chosen in some fixed subset of  of size at least  . In the case when  is larger than the cardinality  of  , this means that  needs to be replaced by an algebraic extension of degree  , which induces a logarithmic overhead for the cost of field operations in  . We will frequently use the following well-known lemma:

Proof. We apply the lemma to  .

.

.

Let

.

Let  and let  be chosen independently at random. Then the probability that  for some  is at most  .

Proof. We apply the lemma to  .
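The flavor of these probability bounds can be checked on a toy case. The Python sketch below assumes the classical Schwartz-Zippel formulation (a non-zero polynomial of total degree d vanishes on at most a fraction d/|S| of the points with coordinates in a finite set S) and counts zeros exhaustively over a small prime field; the prime `P`, the dictionary encoding, and the sample polynomial are illustrative assumptions.

```python
from itertools import product

P = 7  # small illustrative prime, so that S = F_P can be enumerated

def evaluate(poly, point):
    """Evaluate a sparse polynomial {exponent tuple: coefficient} modulo P."""
    total = 0
    for exps, coeff in poly.items():
        term = coeff
        for x, e in zip(point, exps):
            term = term * pow(x, e, P) % P
        total = (total + term) % P
    return total

def zero_fraction(poly, n):
    """Fraction of the points of F_P^n at which poly vanishes."""
    zeros = sum(1 for pt in product(range(P), repeat=n) if evaluate(poly, pt) == 0)
    return zeros / P ** n

poly = {(2, 1): 1, (0, 3): 5, (0, 0): 6}   # x^2 y + 5 y^3 + 6
d = max(sum(e) for e in poly)               # total degree
frac = zero_fraction(poly, 2)
```

For a field as small as this one the bound d/|S| is weak, which is the reason why the text moves to a field extension whenever the ground field is too small.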

Consider a polynomial  that is presented as a blackbox function. The task of sparse interpolation is to recover the sparse representation (1.1) from a sufficient number of blackbox evaluations of  . One may distinguish between the cases when the exponents  of  are already known or not. (Here “known exponents” may be taken liberally to mean a superset of reasonable size of the set of actual exponents.)

One popular approach for sparse interpolation is based on Prony's geometric sequence technique [91, 5]. This approach requires an admissible ratio  , such that for any  , there is an algorithm to recover  from  . If  , then we may take  to be the  -th prime number, and use prime factorization in order to recover  . If  is a finite field, where  is a smooth prime (i.e.  has many small prime divisors), then one may recover exponents using the tangent Graeffe method [38].

Given an admissible ratio  , Prony's method allows for the sparse interpolation of  using  evaluations of  at  for  , as well as  operations in  for determining  , and  subsequent exponent recoveries. If  , then the exponents can be recovered if  , which can be ensured by working over a field extension  of  with  . If the exponents  are already known, then the coefficients can be obtained from  evaluations of  at  using one transposed univariate interpolation of cost  .
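The steps of Prony's method can be sketched in Python over the rationals, taking the i-th prime as the i-th entry of the ratio and recovering exponents by prime factorization, as described above. This is a naive illustration under stated assumptions: the number of terms `t` is assumed to be known exactly, the recurrence is found by exact Gaussian elimination instead of a fast algorithm, and the roots are located by testing divisors of the constant coefficient. All function names are hypothetical.

```python
from fractions import Fraction

PRIMES = [2, 3, 5, 7, 11, 13]  # i-th entry of the ratio = i-th prime

def solve(A, b):
    """Solve the square system A x = b exactly by Gauss-Jordan elimination."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(b[i])] for i, row in enumerate(A)]
    for c in range(n):
        piv = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[piv] = M[piv], M[c]
        M[c] = [x / M[c][c] for x in M[c]]
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [x - factor * y for x, y in zip(M[r], M[c])]
    return [row[n] for row in M]

def exponents_of(z, alphas):
    """Recover the exponent vector of z = prod alphas[i]^e[i] by trial division."""
    e = []
    for a in alphas:
        k = 0
        while z % a == 0:
            z //= a
            k += 1
        e.append(k)
    return tuple(e)

def prony(blackbox, n, t):
    """Interpolate a polynomial with exactly t terms in n variables over Q."""
    alphas = PRIMES[:n]
    # 2t evaluations at the geometric progression (alphas[0]^k, ..., alphas[n-1]^k).
    v = [Fraction(blackbox([a ** k for a in alphas])) for k in range(2 * t)]
    # The values satisfy a linear recurrence whose characteristic polynomial
    # z^t + lam[t-1] z^(t-1) + ... + lam[0] has the monomial values as roots.
    hankel = [[v[i + j] for j in range(t)] for i in range(t)]
    lam = solve(hankel, [-v[i + t] for i in range(t)])
    c0 = abs(int(lam[0]))
    roots = [z for z in range(1, c0 + 1)
             if c0 % z == 0
             and z ** t + sum(l * z ** i for i, l in enumerate(lam)) == 0]
    # Coefficients from the linear system sum_j c_j * roots[j]^k = v[k], k < t.
    coeffs = solve([[z ** k for z in roots] for k in range(t)], v[:t])
    return {exponents_of(z, alphas): c for z, c in zip(roots, coeffs)}

f = lambda p: 3 * p[0] ** 2 * p[1] - 5 * p[1] ** 3 + 7   # blackbox for 3 x^2 y - 5 y^3 + 7
terms = prony(f, n=2, t=3)
```

The final linear solve plays the role of the transposed interpolation mentioned in the text; it is the only step that remains when the exponents are known in advance.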

If  is a finite field, then it can be more efficient to consider an FFT ratio  for which  are roots of unity. When choosing these roots of unity with care, possibly in an extension field of  , sparse interpolation can often be done in time  from  values of  , using discrete Fourier transforms; see [52, 46] for details.

In what follows, we will denote by  the cost of sparse interpolation of size  , given  values of  at a suitable geometric sequence. When using Prony's approach, we may thus take  , whenever the cost to recover the exponents  from  is negligible. If the discrete Fourier approach is applicable, then we may even take  .

Remark  for the cost of sparse interpolation, since this cost may also depend on the dimension  and the total degree  . In particular, manipulations of the exponent vectors typically add  to the bit complexity. Nonetheless, in our algebraic complexity model, this cost can often be discarded: if  , then the sparse interpolation of the exponents only gives rise to a constant overhead using the derivative trick from [57]. In practice, this remains true as long as the exponent vectors can be packed into small integers, such as 64-bit machine integers. In order to enhance the readability of our complexity analyses, we therefore assume that we are in a regime where the cost  of sparse interpolation can be modeled in terms of the sole parameter  . For recent theoretical work on sparse interpolation when  and/or  are allowed to become large, we refer to [36, 54].

Remark  for the number of terms of  , then we assume that our sparse interpolation method is deterministic and that it interpolates  in time  . If we do not know the number of terms of  , then we may run the interpolation method for a guessed number of  terms. We may check the correctness of our guessed interpolation  by verifying that the evaluations of  and  coincide at a randomly chosen point. By the Schwartz-Zippel lemma, this strategy succeeds with probability at least  .

Remark  with  as the evaluation points, by considering the function  instead of  . Taking a random  avoids certain problems due to degeneracies; this is sometimes called “diversification” [35]. If  is itself chosen at random, then it often suffices to simply take  . We will occasionally do so without further mention.

Remark  of geometric progressions to be picked at random, which excludes FFT ratios. Alternatively, we could have relied on Corollary 2.3 and diversification of all input and output polynomials for a fixed random scaling factor  (see the above remark).

Assume for instance that we wish to factor  . Then the factors  of  are in one-to-one correspondence with the factors  of  . If we rely on Corollary 2.3 instead of 2.2 in our complexity bounds for factoring  (like the bounds in Theorems 6.11 or 7.2 below), then the cost of diversification adds an extra term  , where  and  is the total size of  and the computed factors. On the positive side, in the corresponding bounds for the probability of failure, the quadratic dependence  on the number  of evaluation points reduces to a linear one. Similar adjustments apply for other operations such as gcd computations.

In the opposite direction to sparse interpolation, one may also consider the evaluation of  at  points  in a geometric progression. In general, this can be done in time  , using one transposed multi-point evaluation of size  . If  is a suitable FFT ratio, then this complexity drops to  , using a discrete Fourier transform of size  . In what follows, we will assume that this operation can always be done in time  .
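The structure of this evaluation problem can be sketched as follows in Python. The code computes one monomial value per term and then forms the values along the progression as power sums, which is the transposed Vandermonde product in naive form. The prime `P`, the dictionary encoding of sparse polynomials, and the function name are assumptions of this sketch; it runs in time proportional to the number of terms times the number of points, not in the quasi-linear time quoted above.

```python
P = 10007  # illustrative prime modulus

def eval_geometric(poly, alphas, T):
    """Values of poly at (alphas[0]^k, ..., alphas[n-1]^k) modulo P for k = 0..T-1."""
    # One monomial value per term: m_j = prod_i alphas[i]^(e_j[i]).
    ms, cs = [], []
    for exps, coeff in poly.items():
        m = 1
        for a, e in zip(alphas, exps):
            m = m * pow(a, e, P) % P
        ms.append(m)
        cs.append(coeff % P)
    # The k-th value is sum_j c_j * m_j^k; keep running powers of each m_j.
    values, powers = [], [1] * len(ms)
    for _ in range(T):
        values.append(sum(c * p for c, p in zip(cs, powers)) % P)
        powers = [p * m % P for p, m in zip(powers, ms)]
    return values

poly = {(3, 0, 1): 2, (0, 2, 2): 5, (1, 1, 0): 7}   # 2 x^3 z + 5 y^2 z^2 + 7 x y
alphas = [2, 3, 5]
values = eval_geometric(poly, alphas, 6)
```

Once the monomial values are known, the number of variables no longer matters: the remaining work is univariate, which is what makes the fast transposed algorithms applicable.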

Remark  and  , so similar observations as in Remark 2.4 apply.

More generally, we may consider the evaluation of  at  points  in a geometric progression. If  , we may do this in time  , by reducing to the evaluation of  at  progressions of size  . To obtain the evaluations of  at  for  , we can evaluate  at  . If  , then we may cut  into  polynomials of size  , and do the evaluation in time  .

Remark  is an FFT ratio, then the bound for the case when  further reduces to  plus  bit operations on exponents, since the cyclic projections from [46, 52] reduce  in linear time to cyclic polynomials with  terms before applying the FFTs. We did not exploit this optimization for the complexity bounds in this paper, since we preferred to state these bounds for general ratios  , but it should be straightforward to adapt them to the specific FFT case.

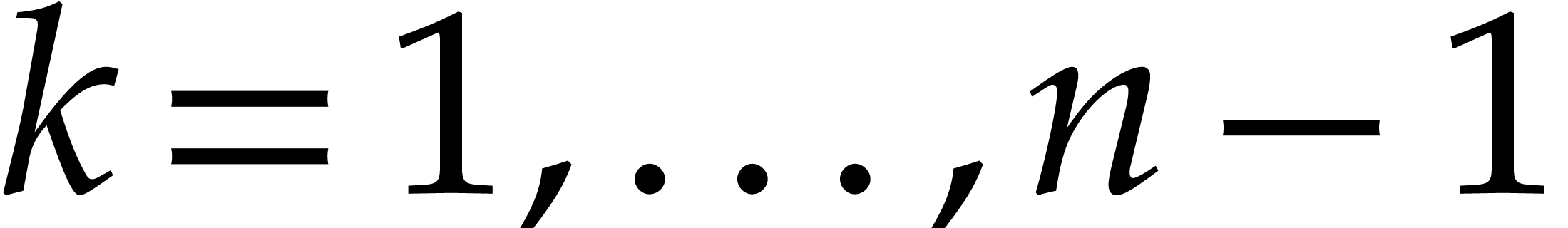

In this paper, we will frequently need to evaluate  at all but one or two variables. Assume for instance that

where  has  terms for  and  . Then using the above method, we can compute  for  using  operations.

One traditional application of the combination of sparse evaluation and interpolation is the design of probabilistic algorithms for multiplication and exact division of sparse multivariate polynomials. Given  , we can compute the product  by evaluating  , and  at a geometric progression  with  and recovering  in the sparse representation (1.1) via sparse interpolation. The total cost of this method is bounded by  operations in  , where  . If  and  are known, then the exact quotient  can be computed in a similar fashion and with the same complexity. If  , then the quotient  can actually be computed in time  . Divisions by zero are avoided through diversification, with overhead  and probability at least  , by Corollary 2.2.

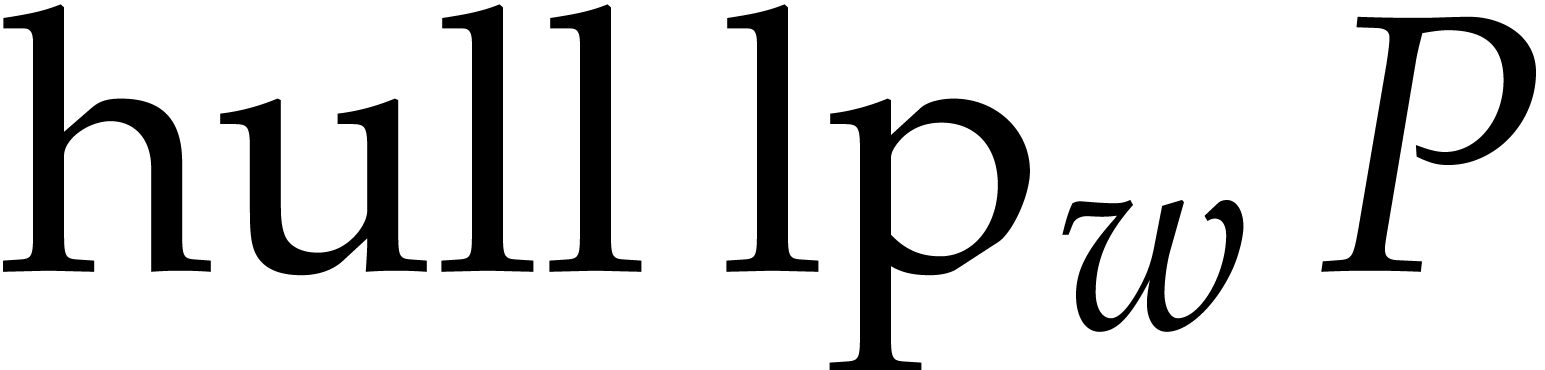

Consider a multivariate polynomial  . We define  to be the convex hull of  and we call it the Newton polytope of  . Given another polynomial  , it is well known that

where the Minkowski sum of two subsets  is  .
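The relation between Newton polytopes and Minkowski sums can be checked experimentally in the bivariate case. The Python sketch below assumes the classical statement that the Newton polytope of a product is the Minkowski sum of the Newton polytopes of the factors, and compares convex hull vertices; the dictionary encoding and all function names are illustrative assumptions.

```python
def multiply(f, g):
    """Product of two bivariate polynomials given as {(i, j): coefficient}."""
    h = {}
    for (a1, a2), c in f.items():
        for (b1, b2), d in g.items():
            e = (a1 + b1, a2 + b2)
            h[e] = h.get(e, 0) + c * d
    return {e: c for e, c in h.items() if c != 0}

def hull(points):
    """Vertices of the convex hull of planar points (Andrew's monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    def half(seq):
        out = []
        for p in seq:
            # Drop points that are not strict turns (this removes collinear points).
            while len(out) >= 2 and cross(out[-2], out[-1], p) <= 0:
                out.pop()
            out.append(p)
        return out[:-1]
    return half(pts) + half(reversed(pts))

def minkowski(A, B):
    """Minkowski sum of two finite sets of planar points."""
    return {(a[0] + b[0], a[1] + b[1]) for a in A for b in B}

f = {(1, 0): 1, (0, 1): -1}    # x - y
g = {(1, 0): 1, (0, 1): 1}     # x + y
fg = multiply(f, g)            # x^2 - y^2: the term in x*y cancels
```

In this example the support of the product is strictly smaller than the Minkowski sum of the supports, but the cancelled exponent is not a vertex, so both sets have the same convex hull.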

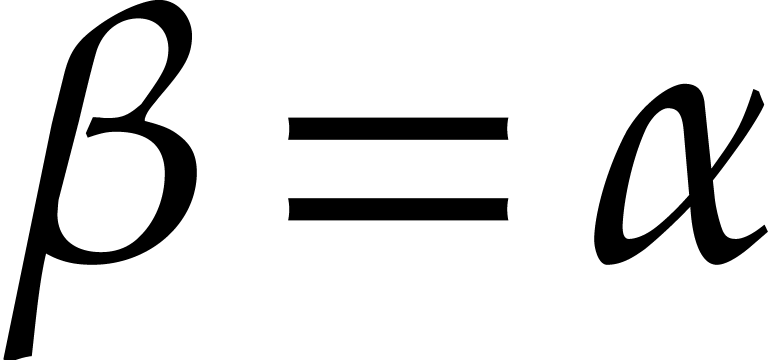

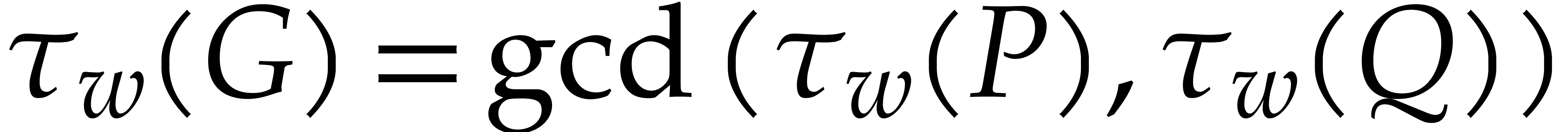

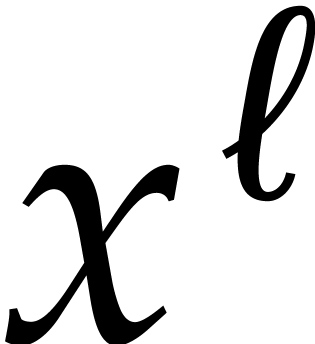

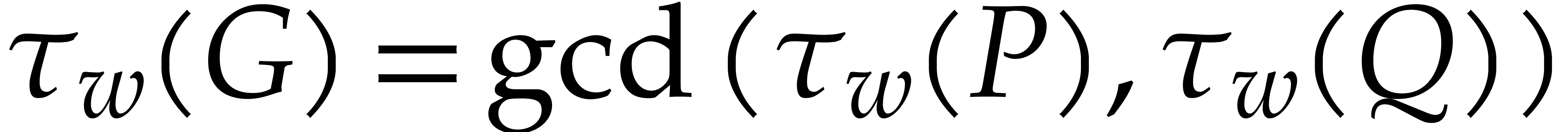

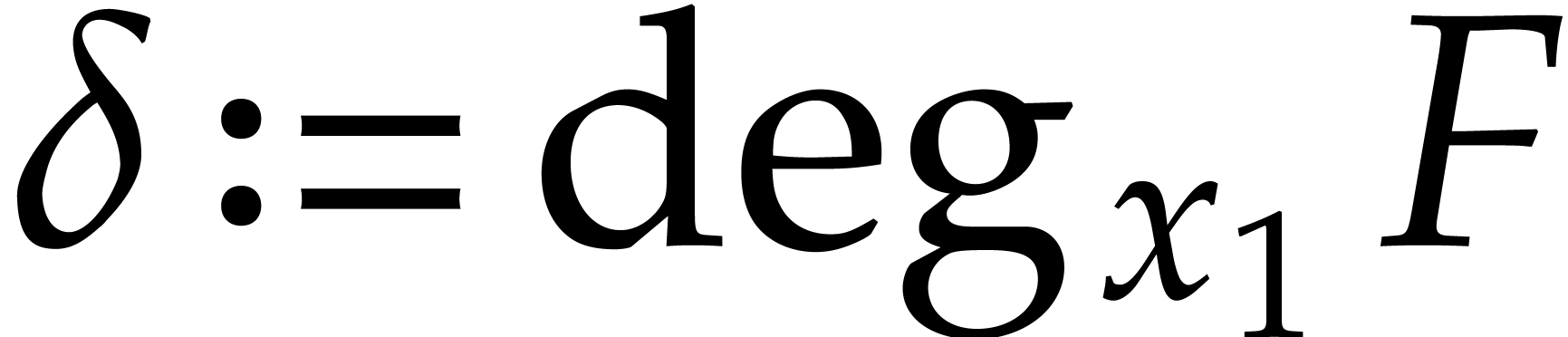

Let  be a non-zero weight vector. We define the  -degree,  -valuation, and  -ecart of a non-zero polynomial  by

Note that  and  , where we exploit the fact that we allow for negative weights. We say that  is  -homogeneous if  . Any  can uniquely be written as a sum

of its  -homogeneous parts

We call  and  the  -leading and  -trailing parts of  . We say that  is  -regular if  consists of a single term  . In that case, we denote  and we say that  is  -monic if  . Given another non-zero polynomial  , we have

Setting  , we also have

The Newton polytopes  and  are facets of  . In particular, they are contained in the boundary  .
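The weighted notions introduced above can be made concrete with a short Python sketch. It assumes the natural definitions: the weighted degree (resp. valuation) is the maximum (resp. minimum) of the scalar products of the weight vector with the exponents in the support, the ecart is their difference, and the leading part collects the terms that attain the weighted degree. The names and the dictionary encoding are assumptions of this sketch, since the displayed formulas are not reproduced here.

```python
def dot(w, e):
    return sum(wi * ei for wi, ei in zip(w, e))

def w_degree(f, w):
    return max(dot(w, e) for e in f)

def w_valuation(f, w):
    return min(dot(w, e) for e in f)

def w_ecart(f, w):
    return w_degree(f, w) - w_valuation(f, w)

def leading_part(f, w):
    """Terms of f whose weighted degree is maximal."""
    d = w_degree(f, w)
    return {e: c for e, c in f.items() if dot(w, e) == d}

def is_regular(f, w):
    """True if the leading part consists of a single term."""
    return len(leading_part(f, w)) == 1

def multiply(f, g):
    h = {}
    for e1, c1 in f.items():
        for e2, c2 in g.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            h[e] = h.get(e, 0) + c1 * c2
    return {e: c for e, c in h.items() if c != 0}

f = {(2, 0): 1, (1, 1): 3, (0, 0): -1}   # x^2 + 3 x y - 1
g = {(1, 0): 1, (0, 1): 1}               # x + y
fg = multiply(f, g)
```

With the weight (1, 1) the polynomial `f` is not regular, since two terms share the maximal weighted degree, whereas the weight (2, 1) singles out the term in x^2. Degrees and valuations add up under multiplication because the coefficients live in a field, so leading and trailing parts cannot cancel.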

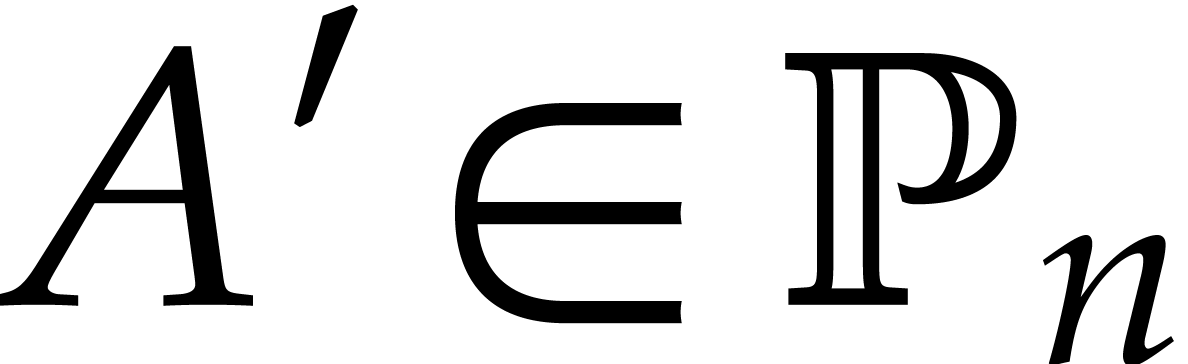

Consider the rings  and  of ordinary polynomials and Laurent polynomials. Both rings are unique factorization domains, but the group of units of  is  , whereas the group of units of  is  . Factoring in  is therefore essentially the same as factoring in  up to multiplications with monomials in  . For instance, the factorization  in  gives rise to the factorization  in  . Conversely, the factorization  in  gives rise to the factorization  in  . Similarly, computing gcds in  is essentially the same problem as computing gcds in  .

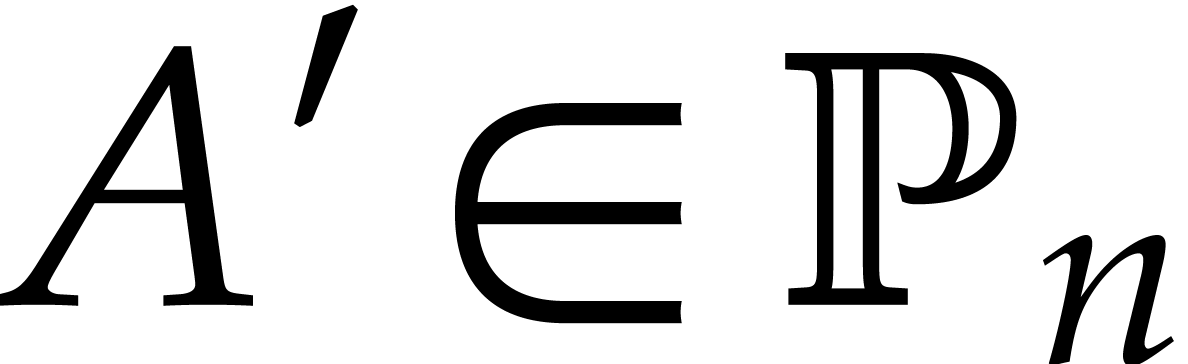

Given any  matrix  , we define the monomial map  by

This is clearly a homomorphism, which is injective (or surjective) if the linear map  is injective (or surjective). Note also that  for any matrices  and  . In particular, if  is unimodular, then  is an automorphism of  with  .
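A monomial map is easy to experiment with on sparse representations. The Python sketch below assumes the convention that a term with exponent vector e is sent to the term with exponent vector M e (the displayed definition is not reproduced here, so the orientation of the matrix is an assumption); with this convention, composing two maps corresponds to multiplying their matrices, and a unimodular matrix yields an invertible map on Laurent polynomials.

```python
def monomial_map(M, f):
    """Send each term c * x^e of f to c * x^(M e); exponents may become negative."""
    out = {}
    for e, c in f.items():
        img = tuple(sum(m * ei for m, ei in zip(row, e)) for row in M)
        out[img] = out.get(img, 0) + c
    return {e: c for e, c in out.items() if c != 0}

def mat_mul(A, B):
    """Product of two integer matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

M = [[1, 1], [0, 1]]        # unimodular: determinant 1
M_inv = [[1, -1], [0, 1]]   # its inverse over the integers
f = {(2, 0): 1, (0, 1): -3, (1, 1): 5}   # x^2 - 3 y + 5 x y

image = monomial_map(M, f)
back = monomial_map(M_inv, image)
```

Applying the inverse matrix recovers the original polynomial, and a non-unimodular but injective matrix would still give an injective map, which is the situation of the tagging map discussed next.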

Laurent polynomials are only slightly more general than ordinary polynomials and we already noted above that factoring in  is essentially the same problem as factoring in  (and similarly for gcd computations). It is also straightforward to adapt the definitions from Section 2.5 and most algorithms for sparse interpolation to this slightly more general setting. The main advantage of Laurent polynomials is that they are closed under monomial maps, which allows us to change the geometry of the support of a polynomial without changing its properties with respect to factorization.

Let  be a non-zero weight vector and let  . We define the  -tagging map by

This map is an injective monomial map. For any  , we have  ,  ,  , and  . Divisibility and gcds are preserved as follows:

with  for  . Then

(a)  divides  in  if and only if  divides  in  .

(b)  in  if and only if  in  .

Proof. We claim that  divides  in  if and only if  divides  in  . One direction is clear. Assume that  divides  in  , so that  with  . We may uniquely write  with  and  such that  for  . Since  for  , it follows that  . Hence  divides  in  . Our claim implies that  divides  in  if and only if  divides  in  . Now we may further extend  to a monomial automorphism of  by sending  to itself. This yields (a). The second property is an easy consequence.

Given a  -regular polynomial  , we note that any divisor  must again be  -regular. Hence, modulo multiplication with a suitable constant in  , we may always normalize such a divisor to become  -monic. In the setting of Laurent polynomials, we may further multiply  by a monomial in  such that  . Similarly, when applying  , we can always normalize  to be monic as a Laurent polynomial in  by considering  .

Both for gcd computations and factorization, this raises the question of how to find weights  for which a given non-zero polynomial  is  -regular. In [51, Section 4.3], a way was described to compute such a regularizing weight  : let  be such that  is maximal. In that case, it suffices to take  , and we note that  , where  is the total degree of  . For our applications in Sections 3.2 and 5 it is often important to find a  for which  is small. By trying a few random weights with small (possibly negative) entries, it is often possible to find a regularizing weight  with  or  .
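The random search for a small regularizing weight can be sketched as follows in Python. The sketch assumes that a weight is regularizing when exactly one exponent of the support attains the maximal scalar product with it; the entry bound, the number of tries, the fixed seed, and the function name are illustrative choices, not prescriptions from the text.

```python
import random

def dot(w, e):
    return sum(wi * ei for wi, ei in zip(w, e))

def find_regularizing_weight(f, bound=1, tries=100, rng=None):
    """Try random non-zero weights with entries in [-bound, bound].

    Returns a weight for which a single term of f has maximal weighted degree,
    or None if no such weight was found within the given number of tries.
    """
    rng = rng or random.Random(0)      # fixed seed: reproducible sketch
    n = len(next(iter(f)))
    for _ in range(tries):
        w = tuple(rng.randint(-bound, bound) for _ in range(n))
        if any(w):
            vals = [dot(w, e) for e in f]
            if vals.count(max(vals)) == 1:
                return w
    return None

f = {(2, 0): 1, (1, 1): 1, (0, 2): 1, (0, 0): 1}   # x^2 + x y + y^2 + 1
w = find_regularizing_weight(f)
```

For this polynomial the natural weight (1, 1) is not regularizing, whereas several weights with entries in {-1, 0, 1} are, so the search terminates after a few tries.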

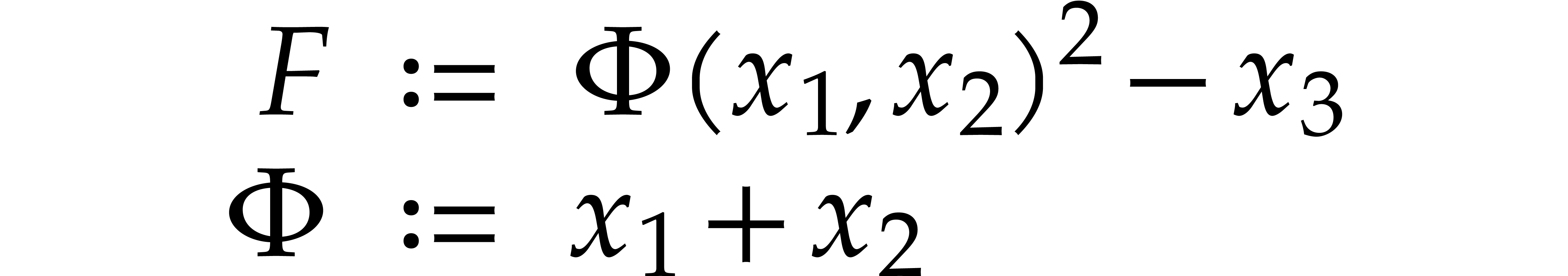

Example  . Then,  is not  -regular for the natural weight  . If we take  instead, then  is  -regular with  , and we arrive at

Furthermore,  can be normalized to be monic as a Laurent polynomial in  by considering  . Note that  is also a regularizing weight for  with  .

Before studying the factorization problem for multivariate polynomials, it is interesting to consider the easier problem of gcd computations. In this section we introduce two approaches for gcd computations that will also be useful later for factoring polynomials.

The first approach is iterative on the number of variables. It will be adapted to the factorization problem in Section 6. The second approach is more direct, but requires a regularizing weight (see Section 2.7). Square-free factorization can be accomplished using a similar technique, as we shall see in Section 5. The algorithms from Section 7 also draw some of their inspiration from this technique, but rely on Newton polygons instead of regularizing weights.

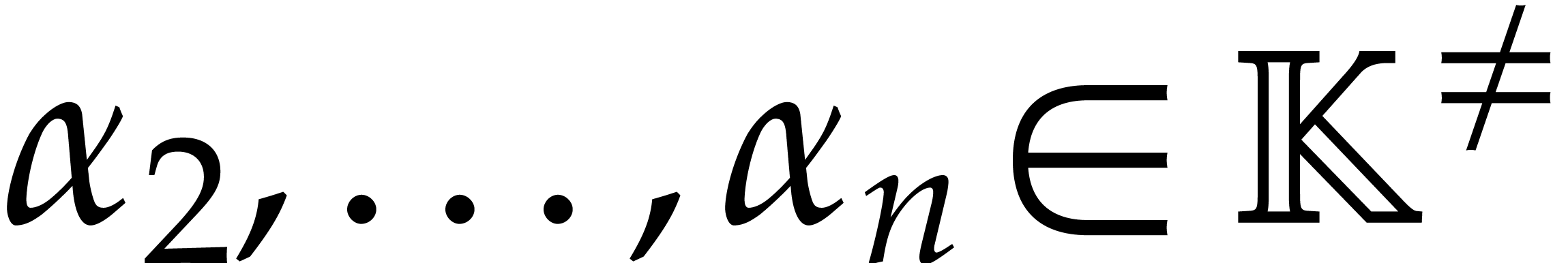

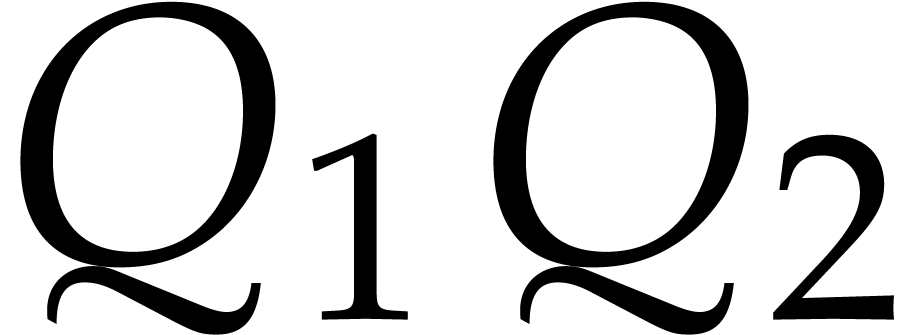

Let  be random elements of  . For any  and  , we define

Let  and  . As we will see below,  for  with high probability. We may easily compute the univariate greatest common divisor  . In this subsection, we shall describe an iterative algorithm to compute  from  for  . Eventually, this yields  .

Let  be an admissible ratio or an FFT ratio. For any  and any  , let

For any  , we have  with high probability. Now these greatest common divisors are only defined up to non-zero scalar multiples in  . Nevertheless, there exists a unique greatest common divisor  of  and  whose evaluation at  coincides with  .

If  is known, then  ,  , and  can be computed for successive  using fast evaluation at geometric progressions. For any  , we may then compute the univariate gcd  of  and  , under the normalization constraint that  . It finally suffices to interpolate  from sufficiently many  . This yields the following algorithm:

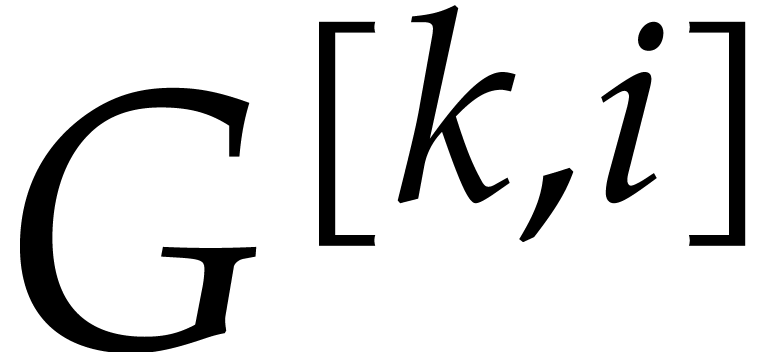

Algorithm 3.1

Output:  , such that  

If  , then compute  using a univariate algorithm and return it

Compute  by recursively applying the algorithm to  and  

Let  

Compute  ,  ,  for  using sparse evaluation

Compute  with  for  

Recover  from  using sparse interpolation

Return
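The probabilistic ingredient behind this algorithm is that specializing a variable at a random value commutes with taking gcds, except for a few unlucky values. The Python sketch below illustrates this on a bivariate toy example over a prime field: it builds two polynomials with a known common factor, substitutes every possible value for the second variable, and records the values for which the monic univariate gcd differs from the specialized common factor. The prime `P`, the encodings, and the helper names are assumptions of this sketch, and the code is not an implementation of the full algorithm.

```python
P = 101  # illustrative prime modulus

def trim(a):
    """Remove trailing zero coefficients."""
    while a and a[-1] == 0:
        a = a[:-1]
    return a

def poly_rem(a, b):
    """Remainder of a modulo b for coefficient lists over F_P (b non-zero)."""
    a = a[:]
    inv = pow(b[-1], -1, P)
    while len(a) >= len(b):
        q = a[-1] * inv % P
        shift = len(a) - len(b)
        for i, bi in enumerate(b):
            a[shift + i] = (a[shift + i] - q * bi) % P
        a = trim(a)
    return a

def monic_gcd(a, b):
    """Monic gcd of two univariate polynomials over F_P (Euclid's algorithm)."""
    while b:
        a, b = b, poly_rem(a, b)
    inv = pow(a[-1], -1, P)
    return [c * inv % P for c in a]

def bimul(f, g):
    """Product of bivariate polynomials {(i, j): c}, meaning c * x^i * y^j."""
    h = {}
    for (i1, j1), c in f.items():
        for (i2, j2), d in g.items():
            e = (i1 + i2, j1 + j2)
            h[e] = (h.get(e, 0) + c * d) % P
    return {e: c for e, c in h.items() if c}

def specialize(f, beta):
    """Coefficient list in x of f(x, beta)."""
    out = [0] * (max(i for i, _ in f) + 1)
    for (i, j), c in f.items():
        out[i] = (out[i] + c * pow(beta, j, P)) % P
    return trim(out)

h = {(2, 0): 1, (1, 1): 1, (0, 0): 1}   # common factor x^2 + x y + 1
a = {(1, 0): 1, (0, 1): 1}              # cofactor x + y
b = {(1, 0): 1, (0, 0): 1}              # cofactor x + 1
f, g = bimul(h, a), bimul(h, b)

# Values of y for which the specialized gcd is not the specialized common factor.
bad = [beta for beta in range(P)
       if monic_gcd(specialize(f, beta), specialize(g, beta)) != specialize(h, beta)]
```

Here the two cofactors are coprime as bivariate polynomials but coincide when the second variable is set to 1, so exactly one value out of 101 is unlucky; this is the kind of event whose probability the lemmas below bound.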

Before we analyze this algorithm, we will need a few probabilistic lemmas. Assume that  and  are independently chosen at random from a subset of  of size at least  (if  is a small finite field, this forces us to move to a field extension).

be such that  and  are coprime. Then the probability that  and  are not coprime is bounded by  .

Proof. Let  with  be a variable that occurs both in  and in  . Then

If  occurs in  , then  , which can happen for at most  values of  .

Proof. Let us write  and  , where  and  are coprime. Then we have  if and only if  and  are not coprime. The result now follows by applying the previous lemma for  .

 ,  ,  , and  . Then Algorithm 3.1 is correct with probability at least  and it runs in time

Remark. The probability bound implicitly assumes that  , since the statement becomes void for smaller  . In particular, we recall that this means that the cardinality of  should be at least  .

Proof. Assuming that  and  for  and  , let us prove that Algorithm 3.1 returns the correct answer. Indeed, these assumptions imply that  can be normalized such that  and that the unique such  must coincide with  . Now  fails for some  with probability at most  , by Lemma 3.2. Since  , the condition  fails with probability at most  for some  and  , by Corollary 2.2. This completes the probabilistic correctness proof.

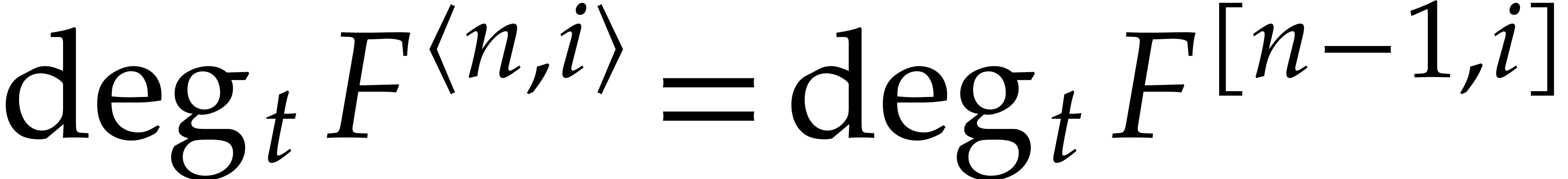

As to the complexity, let us first ignore the cost of the recursive call. Then the sparse evaluations of  ,  , and  can be done in time  : see Section 2.4. The univariate gcd computations take  operations. Finally, the recovery of  using sparse interpolation can be done in time  . Altogether, the complexity without the recursive calls is bounded by  . We conclude by observing that the degrees and numbers of terms of  ,  , and  can only decrease during recursive calls. Since the recursive depth is  , the complexity bound follows.

Remark  from  using sparse interpolation, one may exploit the fact that the exponents of  in  are already known.

Example  be random polynomials of total degree  and consider  ,  . With high probability,  . Let us measure the overhead of recursive calls in Algorithm 3.1 with respect to the number of variables  . With high probability, we have

and

Assuming that  , it follows that

This shows that the sizes of the supports of the input and output polynomials in Algorithm 3.1 become at least twice as small at every recursive call. Consequently, the overall cost is at most twice the cost of the top-level call, roughly speaking.
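The "at most twice" estimate is a geometric series. As a hedged illustration, write $C(s)$ for the cost of one call without its recursive call on supports of size $s$, and $m$ for the recursion depth (both symbols are introduced here and are not notation from the paper), and assume that $C(s)/s$ is non-decreasing, so that $C(s/2^k) \leqslant C(s)/2^k$. Then:

```latex
\[
  \sum_{k=0}^{m-1} C\!\left(\frac{s}{2^k}\right)
  \;\leqslant\; \sum_{k=0}^{m-1} \frac{C(s)}{2^k}
  \;<\; 2\,C(s).
\]
```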

Algorithm 3.1 has the disadvantage that the complexity bound in Theorem 3.3 involves a factor  . Let us now present an alternative algorithm that avoids this pitfall, but which may require a non-trivial monomial change of variables. Our method is a variant of the algorithm from [51, Section 4.3].

Given  , we first compute a regularizing weight  for  or  , for which  is as small as possible. From a practical point of view, as explained in Section 2.7, we first try a few random small weights  . If no regularizing weight is found in this way, then we may always revert to the following choice:

, let  . Let  be such that  is maximal. Let  be such that  is maximal. Let  if  and  otherwise. Then  .

Proof. Assume that  . Then  for any  with  . The case when  is handled similarly.

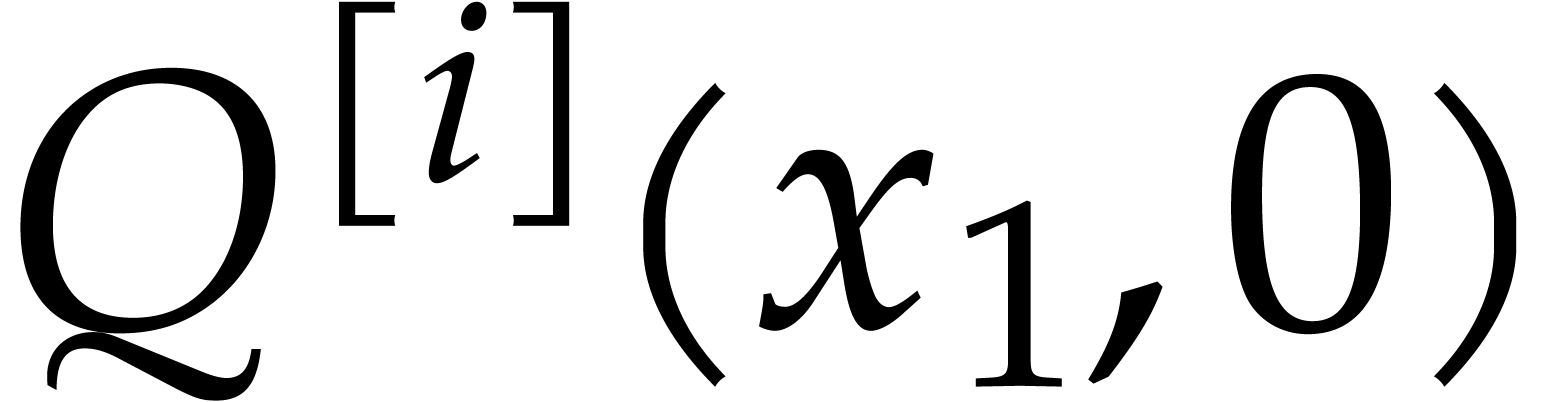
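The practical strategy of first trying a few random small weights can be sketched as follows. This is an illustration under stated assumptions, not the paper's procedure: it assumes that a polynomial is regular for a weight exactly when a single exponent of its support attains the maximal weighted degree, it only tries weights with positive integer entries, and the function names and the parameters `bound`, `tries`, and `seed` are hypothetical.

```python
import random


def weighted_degree(exps, weight):
    """Weighted degree w_1*e_1 + ... + w_n*e_n of a monomial."""
    return sum(w * e for w, e in zip(weight, exps))


def is_regular(support, weight):
    """True if exactly one exponent attains the maximal weighted degree.

    Assumed definition of regularity, see the lead-in.
    """
    degs = [weighted_degree(e, weight) for e in support]
    return degs.count(max(degs)) == 1


def find_regularizing_weight(support, bound=3, tries=20, seed=0):
    """Try a few random weights with entries in 1..bound; None on failure."""
    rng = random.Random(seed)
    n = len(support[0])
    for _ in range(tries):
        weight = tuple(rng.randint(1, bound) for _ in range(n))
        if is_regular(support, weight):
            return weight
    return None


# Hypothetical support of x1^2 + x2^2 + x1*x2.
support = [(2, 0), (0, 2), (1, 1)]
found = find_regularizing_weight(support)
```

For this support, the weight `(1, 1)` is not regularizing, since all three monomials have weighted degree 2, whereas `(2, 1)` is. When the random search returns `None`, one would fall back on a deterministic choice such as the one given by the lemma above.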

Now consider  ,  , and  in  . We normalize  in such a way that  and such that  is monic as a polynomial in  ; this is always possible since  is  -regular. Let  be an admissible ratio or an FFT ratio. For any  , we evaluate at  and  , which leads us to define the univariate polynomials

With high probability,  , and  is the monic gcd of  and  , where  and  . For sufficiently large  (with  ), we may thus recover  from  using sparse interpolation. Finally, for  , we obtain  , where  for  . This leads to the following algorithm:

Algorithm 3.2

Output:  , such that

Find a regularizing weight  for  or

For  do

Compute  ,  for

Compute  with  monic and  for

If  yield  through sparse interpolation, then

Let  for

Return

Remark  is normalized in  to be monic as a polynomial in  , then we may need to interpolate multivariate polynomials with negative exponents in order to recover  from  . In practice, many interpolation algorithms based on geometric sequences, like Prony's method, can be adapted to do this.

As in the previous subsection, assume that  are independently chosen at random from a subset of  of size at least  . We let  ,  , and  .

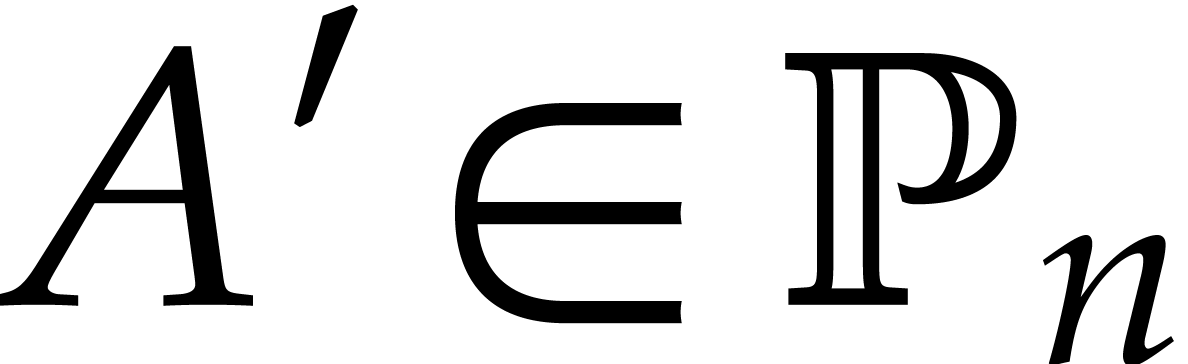

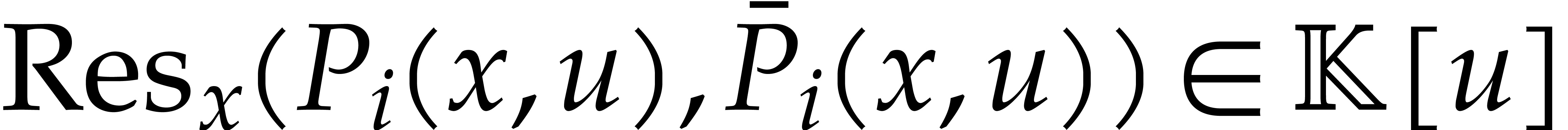

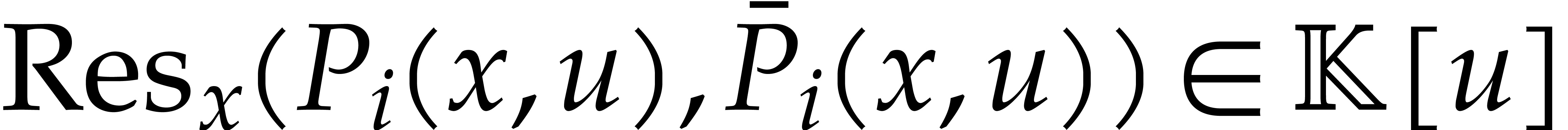

or  is  -regular. Let  with  be monic as a polynomial in  . Take  ,  , and  . The probability that  for all  is at least

Proof. We have  if and only if  does not vanish at  . Now the degree of  is at most  , so the probability that  for a randomly chosen  and all  is at least  , by Corollary 2.2.

,  ,  , and  . Algorithm 3.2 is correct with probability at least  and it runs in time

(3.1)

Proof. The correctness with the announced probability follows from Corollaries 2.11 and 3.9, while also using Remark 2.5. The computation of  and  through sparse evaluation at geometric progressions requires  operations (see Section 2.4). The univariate gcd computations take  further operations. The final interpolation of  from  can be done in time  .

Example  . Consider the particular case when  is monic as a polynomial in  . Then,  is a regularizing weight for  , and therefore also for  . This situation can be readily detected and, in this case, we have  in the complexity bound (3.1).

Remark  in Algorithm 3.2 in case the terms of  are distributed over the powers of  .