Ball arithmetic |

|

| November 3, 2023 |

|

. This work has

been supported by the ANR-09-JCJC-0098-01

. This work has

been supported by the ANR-09-JCJC-0098-01

The

|

Computer algebra systems are widely used today in order to perform mathematically correct computations with objects of algebraic or combinatorial nature. It is tempting to develop a similar system for analysis. On input, the user would specify the problem in a high level language, such as computable analysis [Wei00, BB85, Abe80, Tur36] or interval analysis [Moo66, AH83, Neu90, JKDW01, Kul08]. The system should then solve the problem in a certified manner. In particular, all necessary computations of error bounds must be carried out automatically.

There are several specialized systems and libraries which contain work

in this direction. For instance, Taylor models have been used with

success for the validated long term integration of dynamical systems [Ber98, MB96, MB04]. A fairly

complete

The

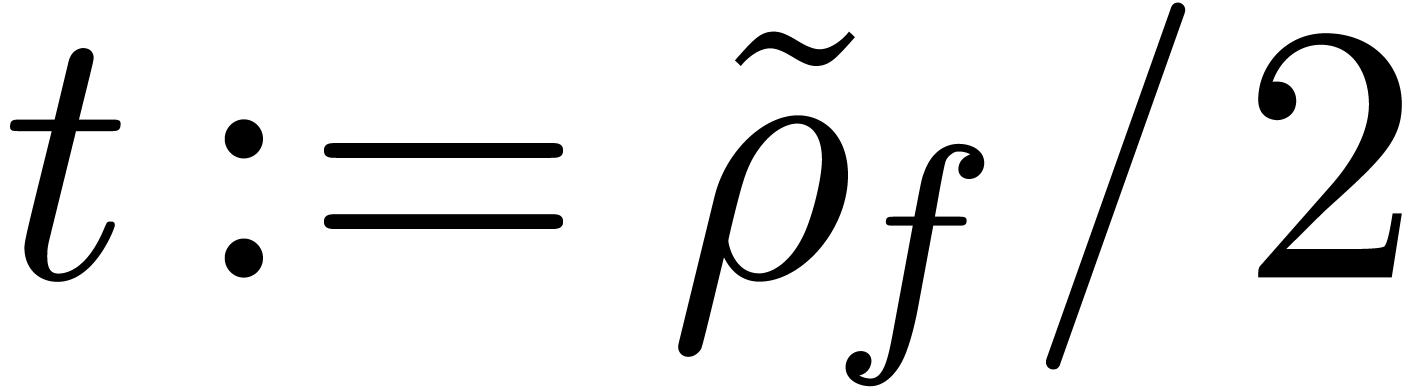

Most of the algorithms in this paper will be based on “ball arithmetic”, which provides a systematic tool for the automatic computation of error bounds. In section 3, we provide a short introduction to this topic and describe its relation with computable analysis. Although ball arithmetic is really a variant of interval arithmetic, we will give several arguments in section 4 why we actually prefer balls over intervals for most applications. Roughly speaking, balls should be used for the reliable approximation of numbers, whereas intervals are mainly useful for certified algorithms which rely on the subdivision of space. Although we will mostly focus on ball arithmetic in this paper, section 5 presents a rough overview of the other kinds of algorithms that are needed in a complete computer analysis system.

After basic ball arithmetic for real and complex numbers, the next challenge is to develop efficient algorithms for reliable linear algebra, polynomials, analytic functions, resolution of differential equations, etc. In sections 6 and 7 we will survey several basic techniques which can be used to implement efficient ball arithmetic for matrices and formal power series. Usually, there is a trade-off between efficiency and the quality of the obtained error bounds. One may often start with a very efficient algorithm which only computes rough bounds, or bounds which are only good in favourable well-conditioned cases. If the obtained bounds are not good enough, then we may switch to a more expensive and higher quality algorithm.

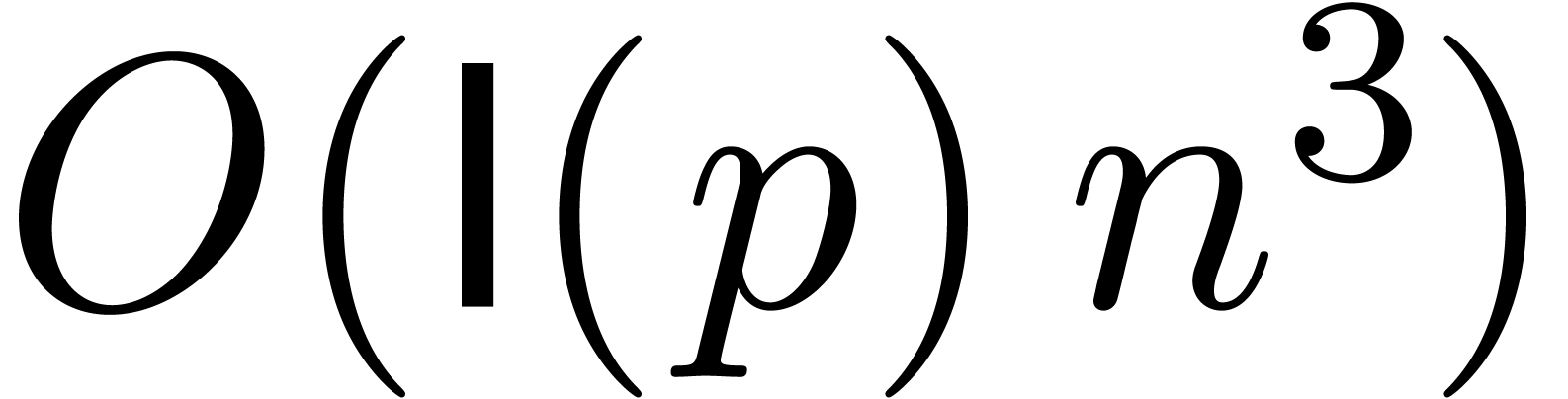

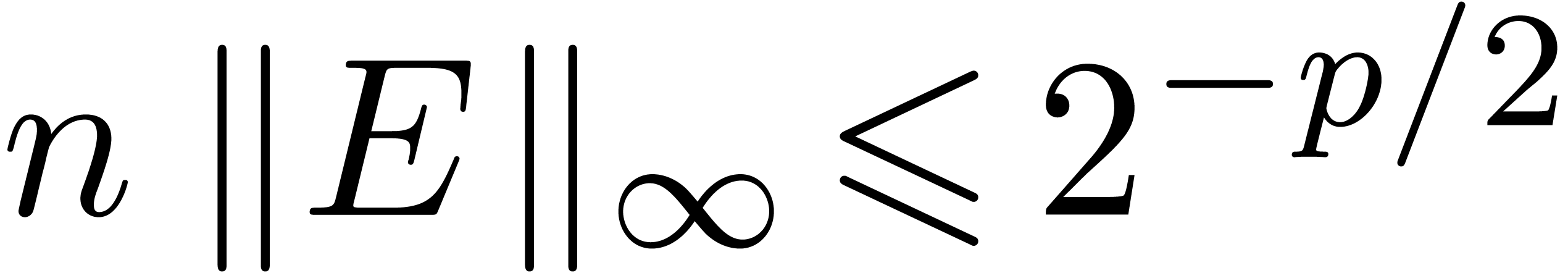

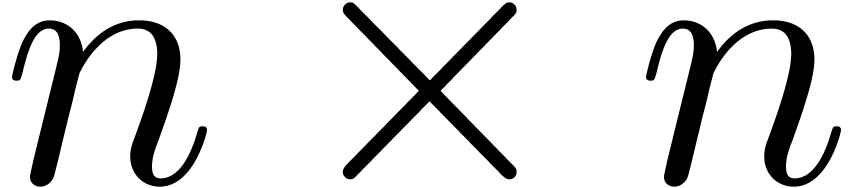

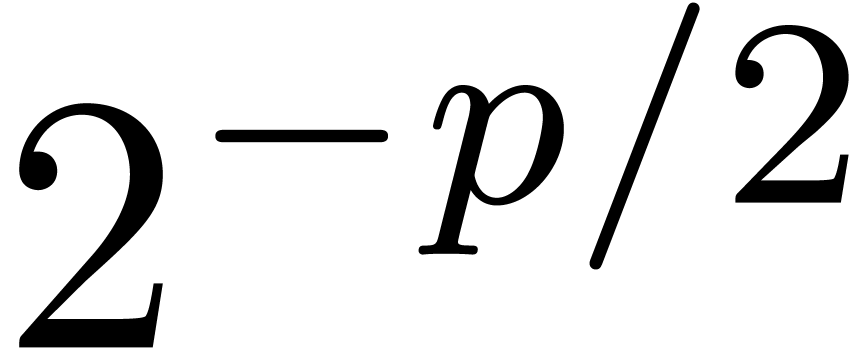

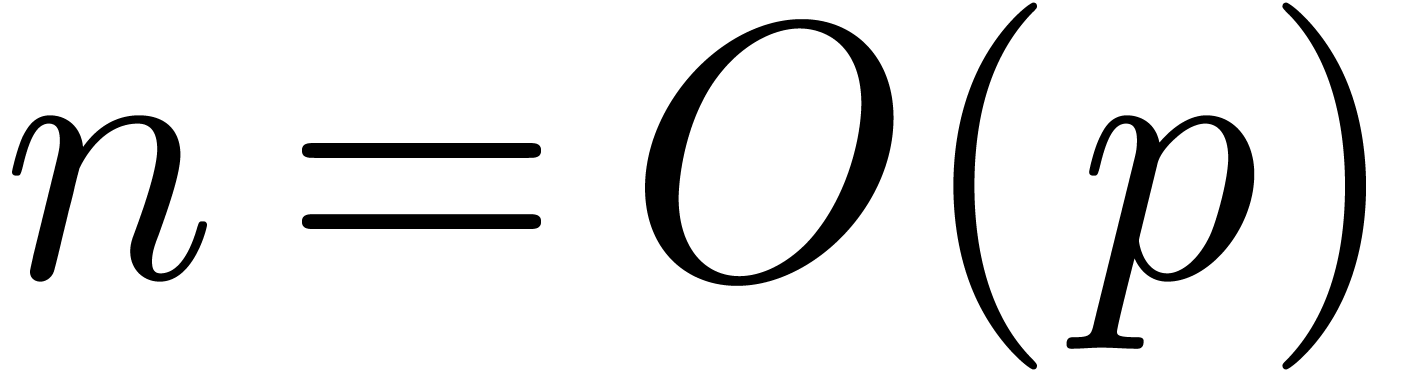

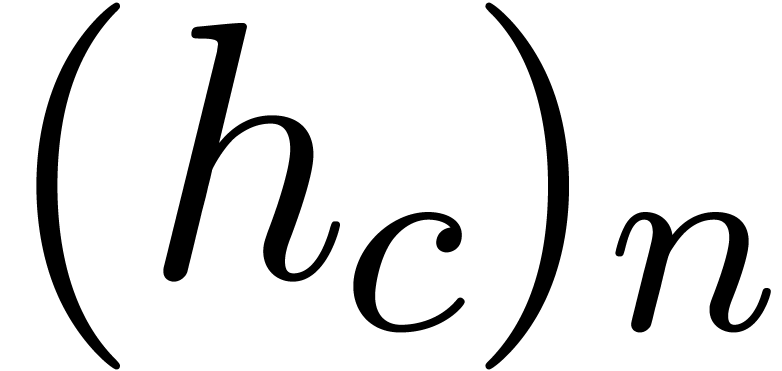

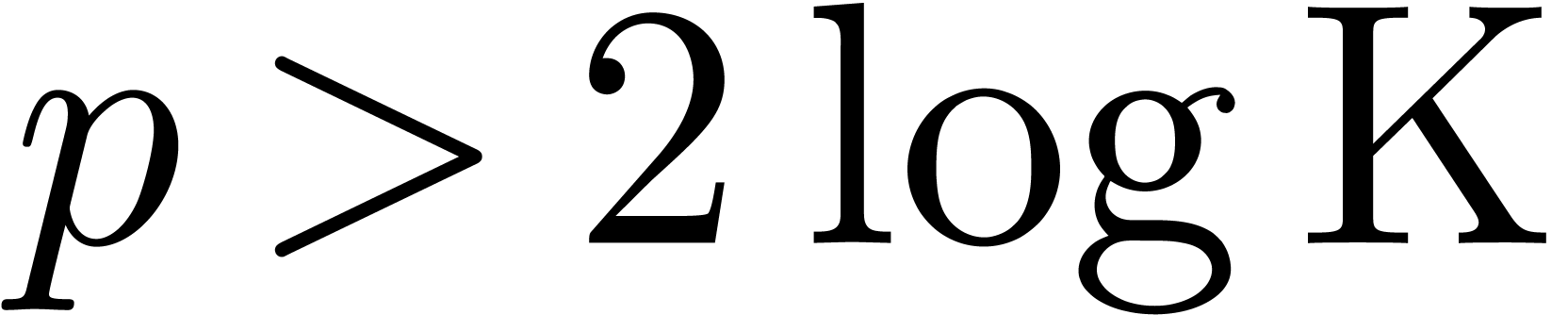

Of course, the main algorithmic challenge in the area of ball arithmetic

is to reduce the overhead of the bound computations as much as possible

with respect to the principal numeric computation. In favourable cases,

this overhead indeed becomes negligible. For instance, for high working

precisions  , the centers of

real and complex balls are stored with the full precision, but we only

need a small precision for the radii. Consequently, the cost of

elementary operations (

, the centers of

real and complex balls are stored with the full precision, but we only

need a small precision for the radii. Consequently, the cost of

elementary operations ( ,

,

,

,  ,

,  ,

etc.) on balls is dominated by the cost of the

corresponding operations on their centers. Similarly, crude error bounds

for large matrix products can be obtained quickly with respect to the

actual product computation, by using norm bounds for the rows and

columns of the multiplicands; see (21).

,

etc.) on balls is dominated by the cost of the

corresponding operations on their centers. Similarly, crude error bounds

for large matrix products can be obtained quickly with respect to the

actual product computation, by using norm bounds for the rows and

columns of the multiplicands; see (21).

Another algorithmic challenge is to use fast algorithms for the actual

numerical computations on the centers of the balls. In particular, it is

important to use existing high performance libraries, such as

The representations of objects should be chosen with care. For instance, should we rather work with ball matrices or matricial balls (see sections 6.4 and 7.1)?

If the result of our computation satisfies an equation, then we may first solve the equation numerically and only perform the error analysis at the end. In the case of matrix inversion, this method is known as Hansen's method; see section 6.2.

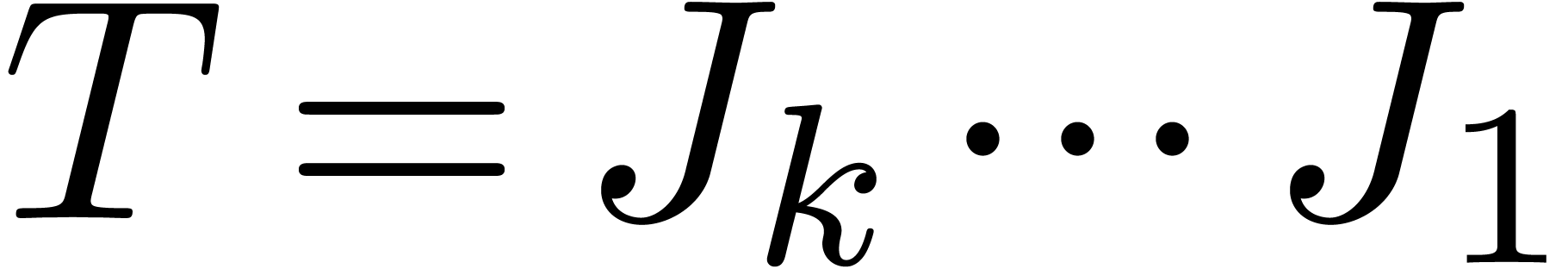

When considering a computation as a tree or dag, then the error tends to increase with the depth of the tree. If possible, algorithms should be designed so as to keep this depth small. Examples will be given in sections 6.4 and 7.5. Notice that this kind of algorithms are usually also more suitable for parallelization.

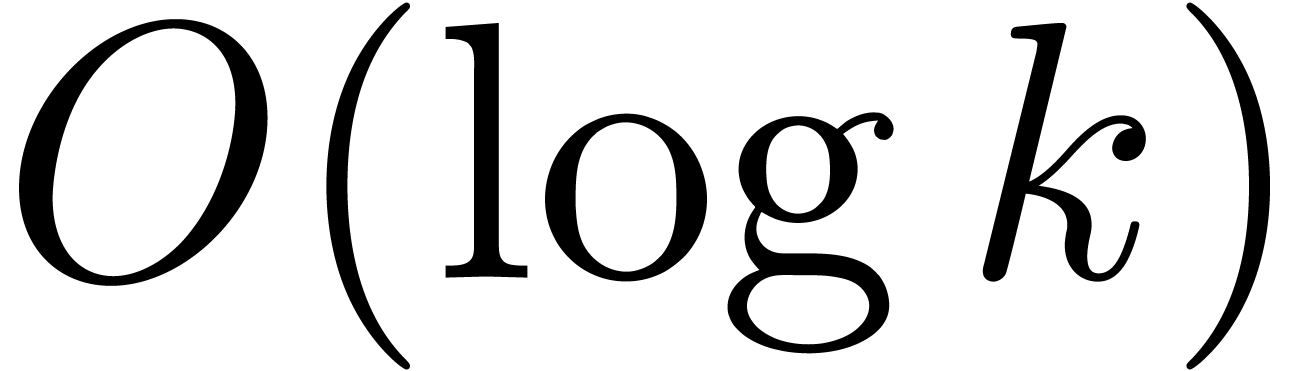

When combining the above approaches to the series of problems considered in this paper, we are usually able to achieve a constant overhead for sharp error bound computations. In favourable cases, the overhead becomes negligible. For particularly ill conditioned problems, we need a logarithmic overhead. It remains an open question whether we have been lucky or whether this is a general pattern.

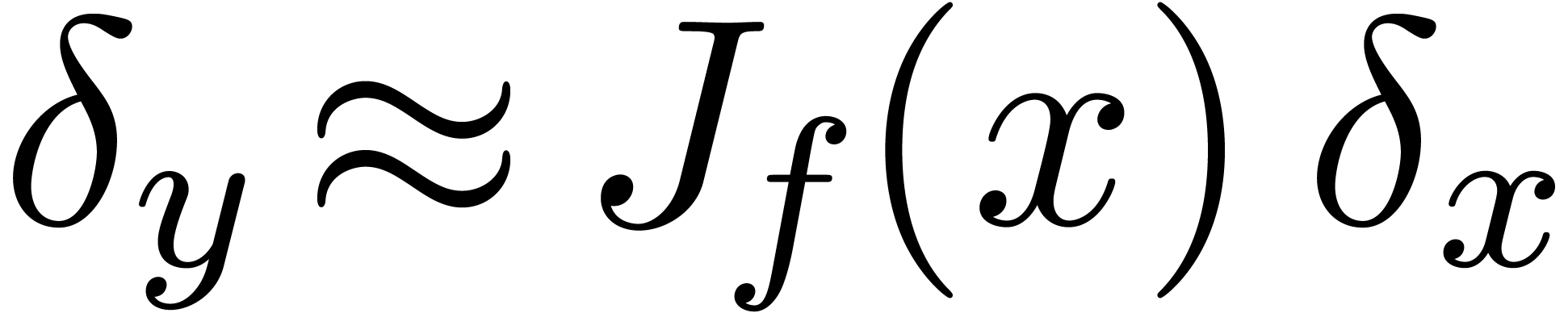

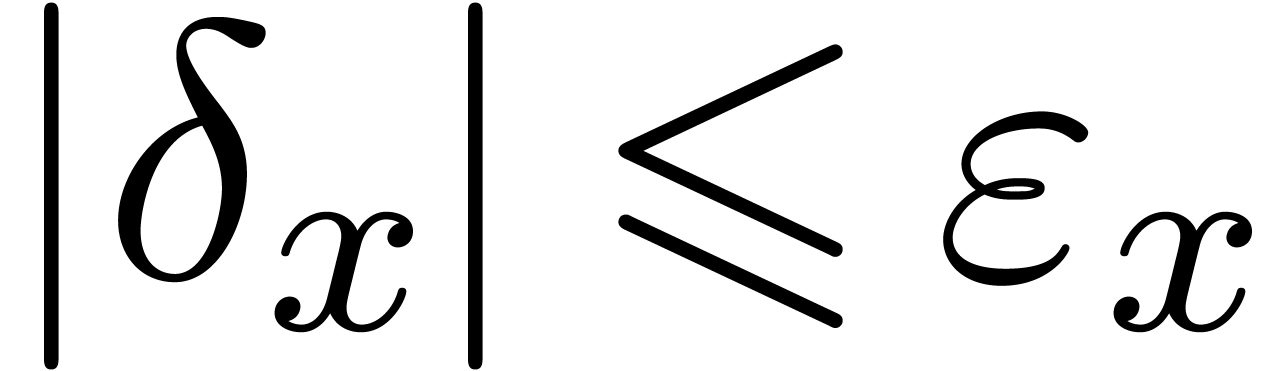

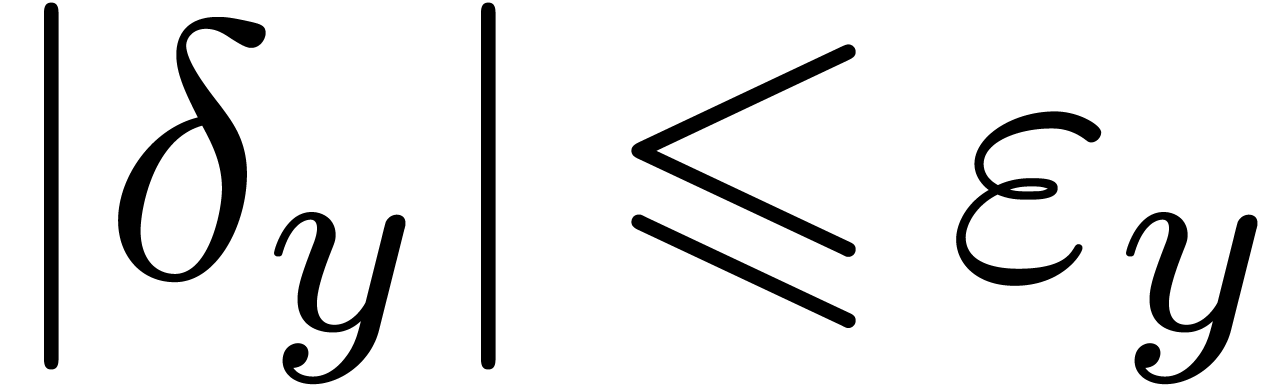

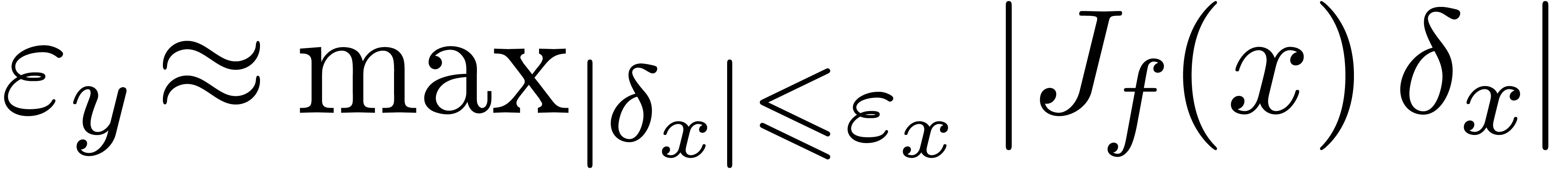

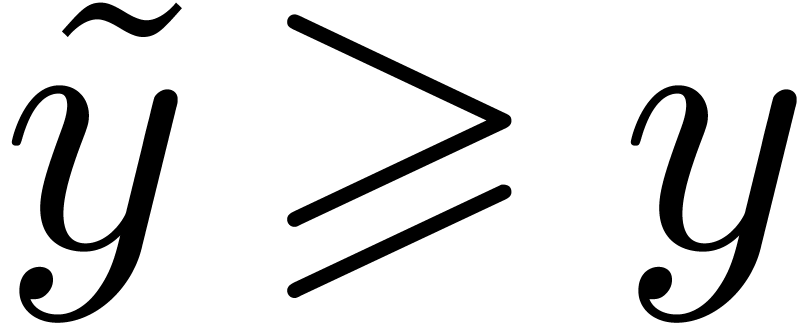

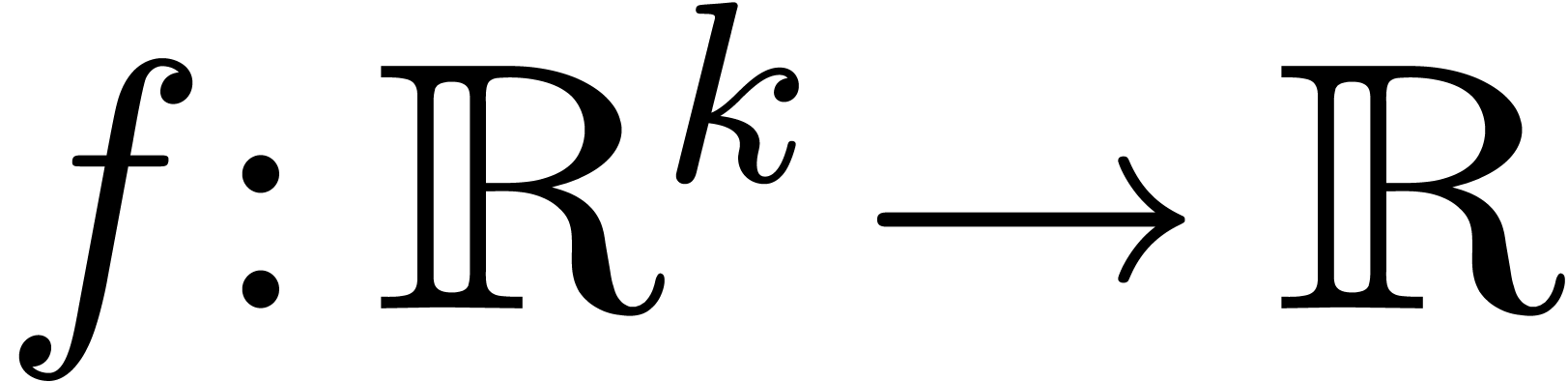

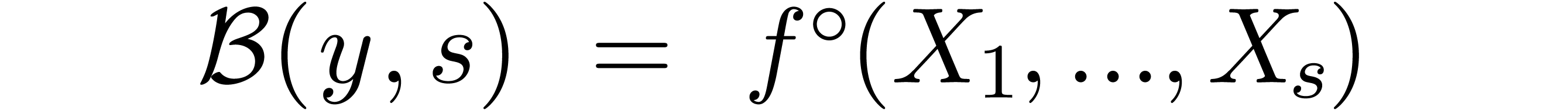

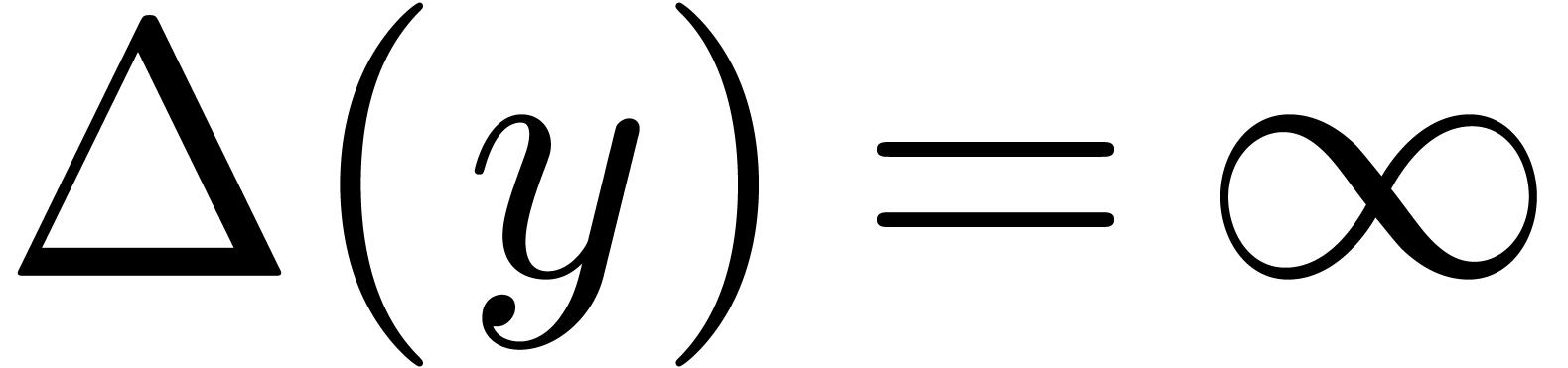

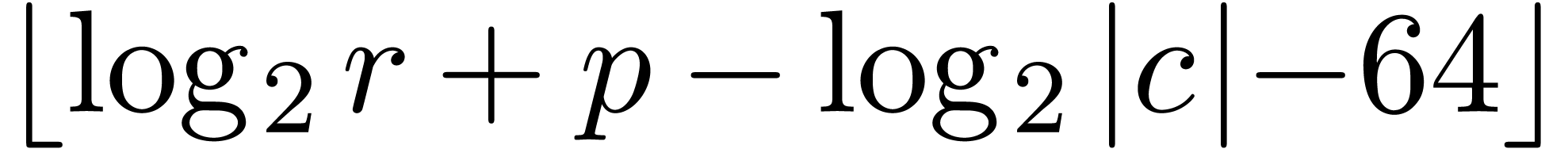

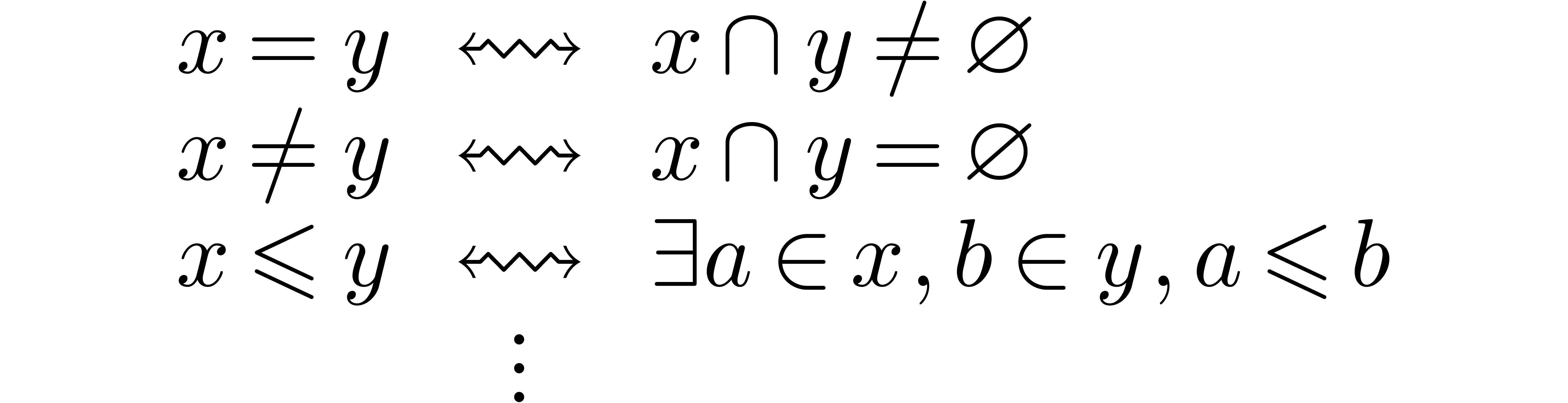

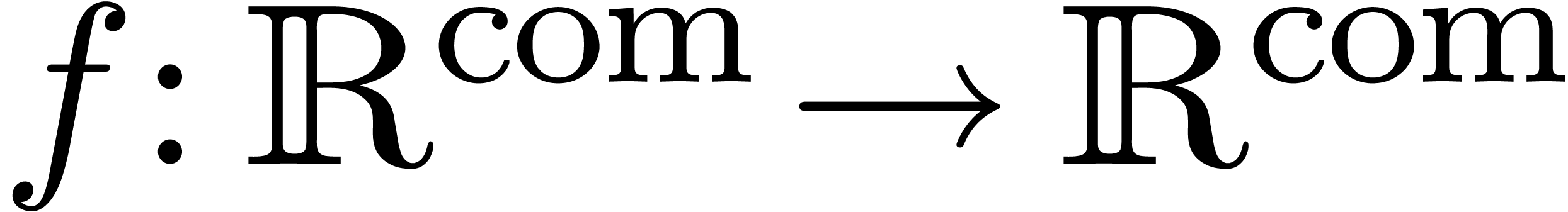

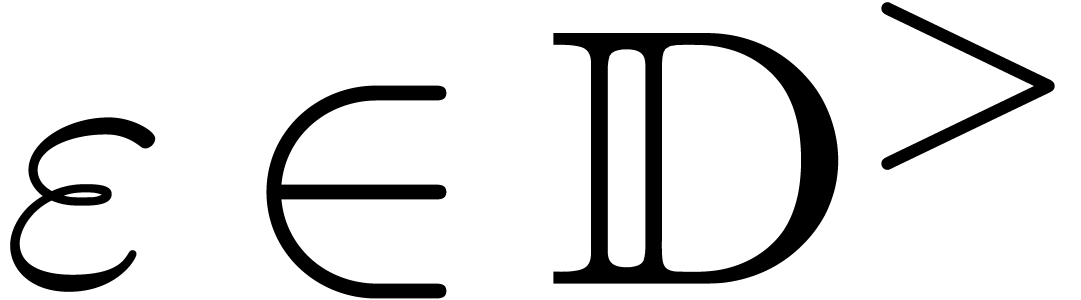

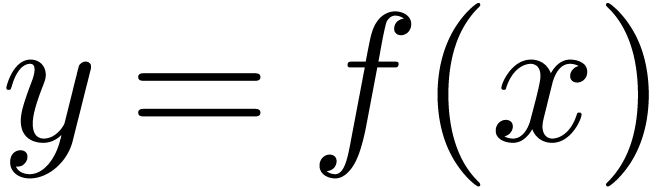

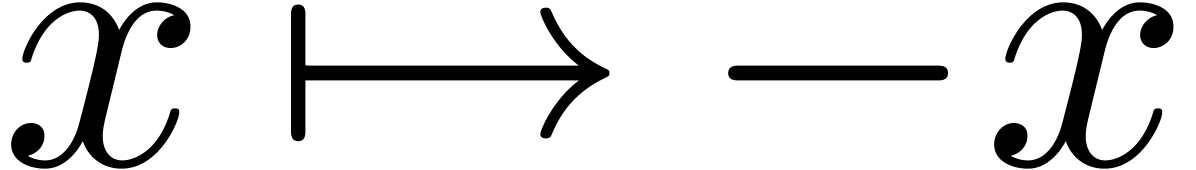

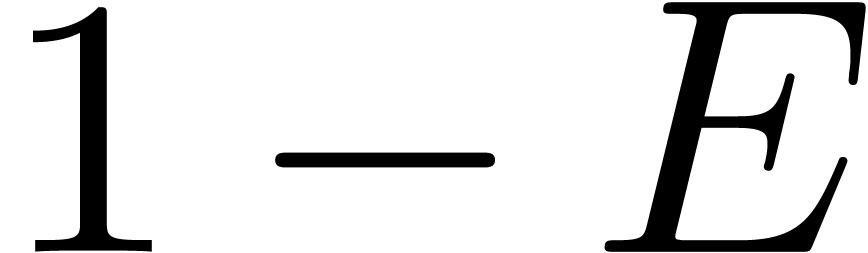

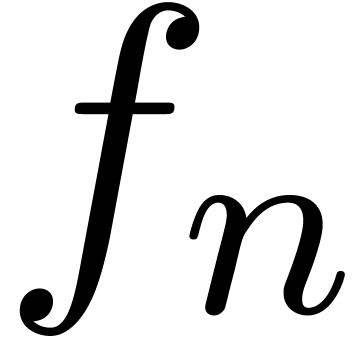

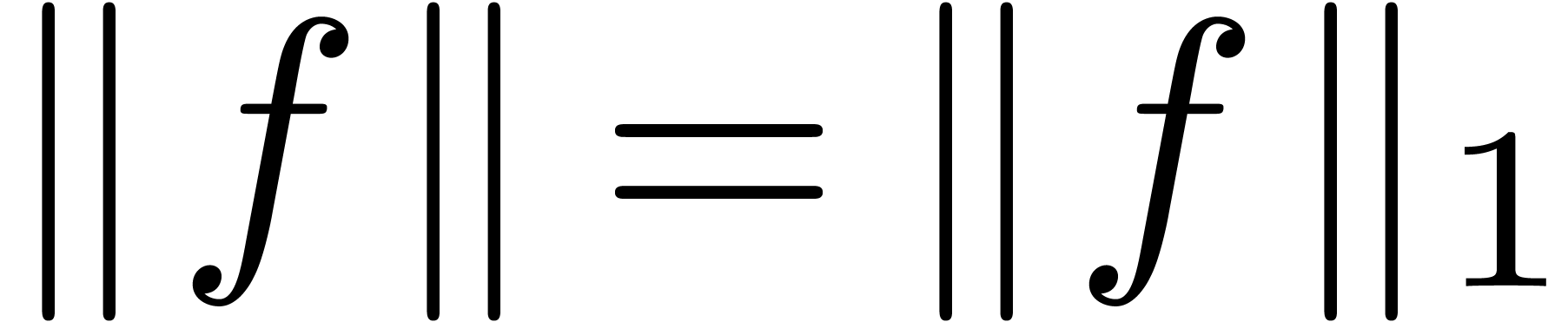

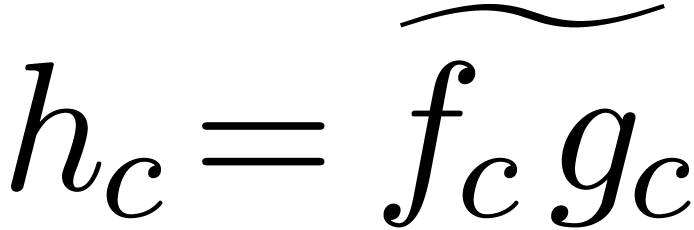

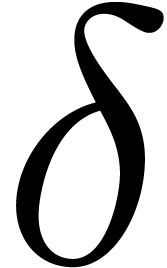

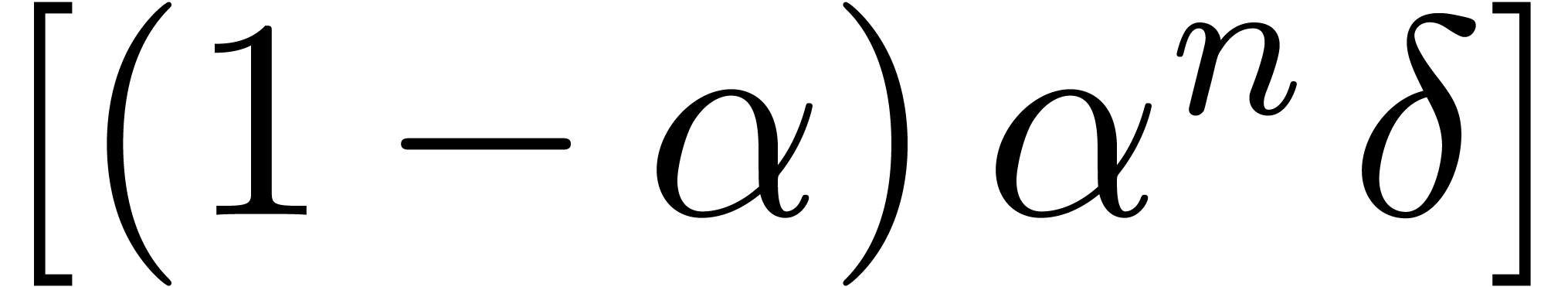

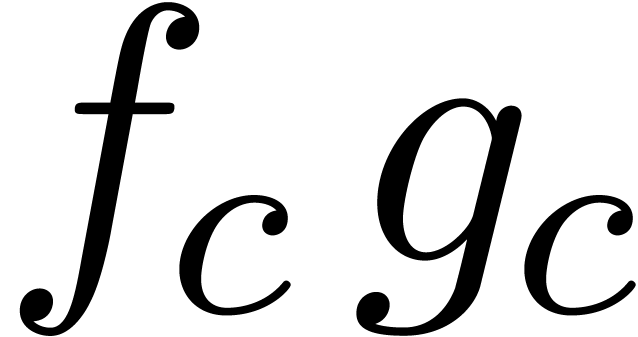

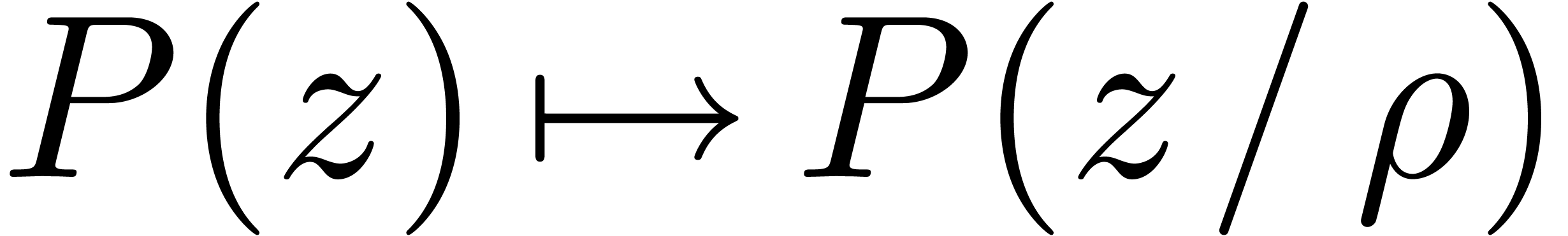

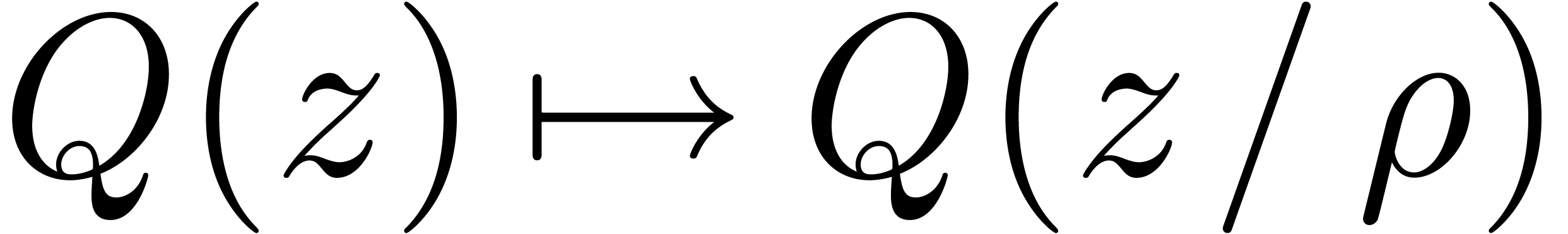

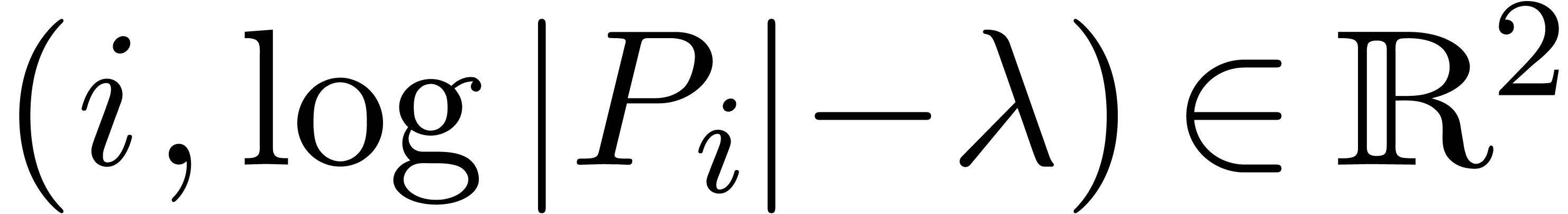

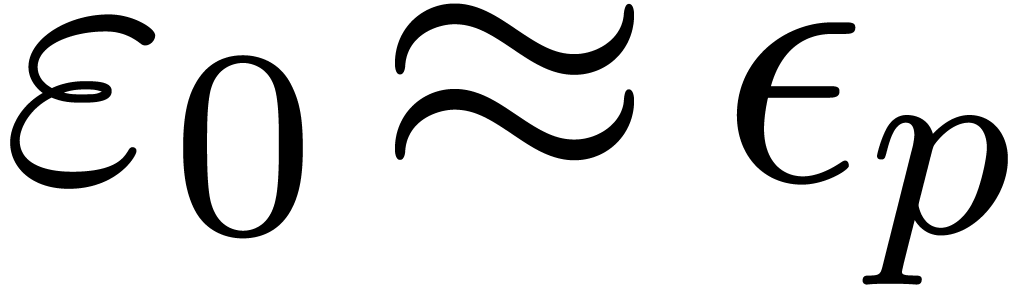

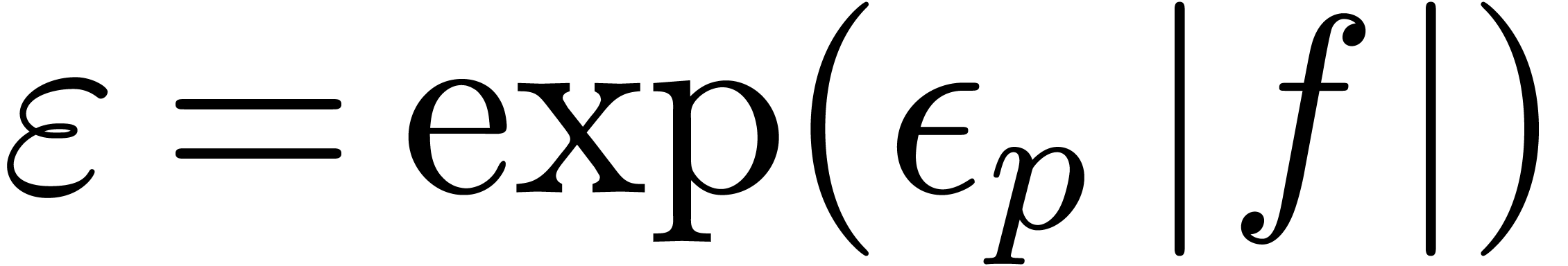

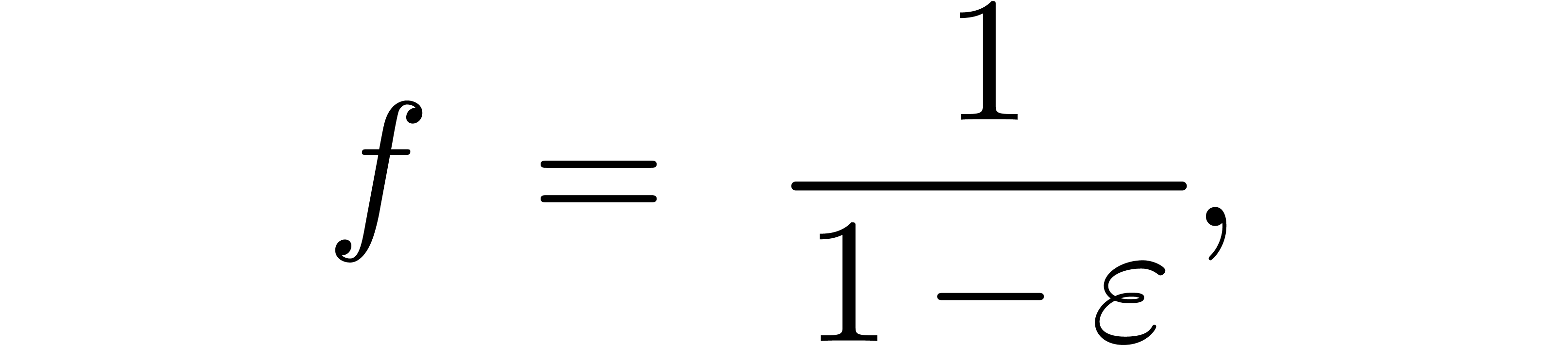

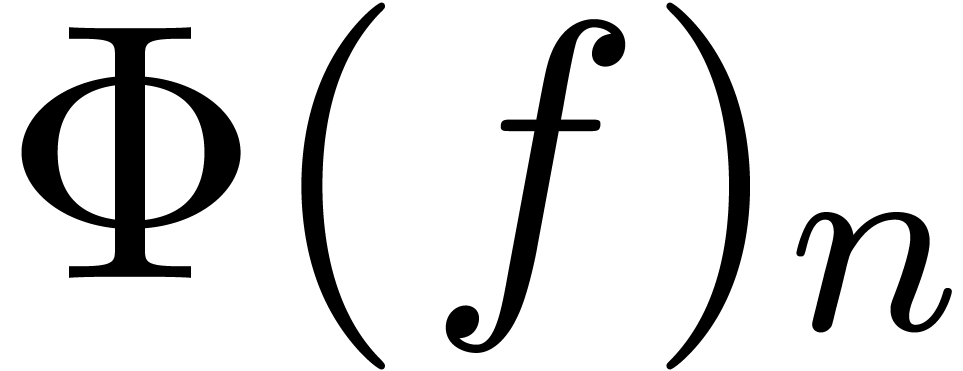

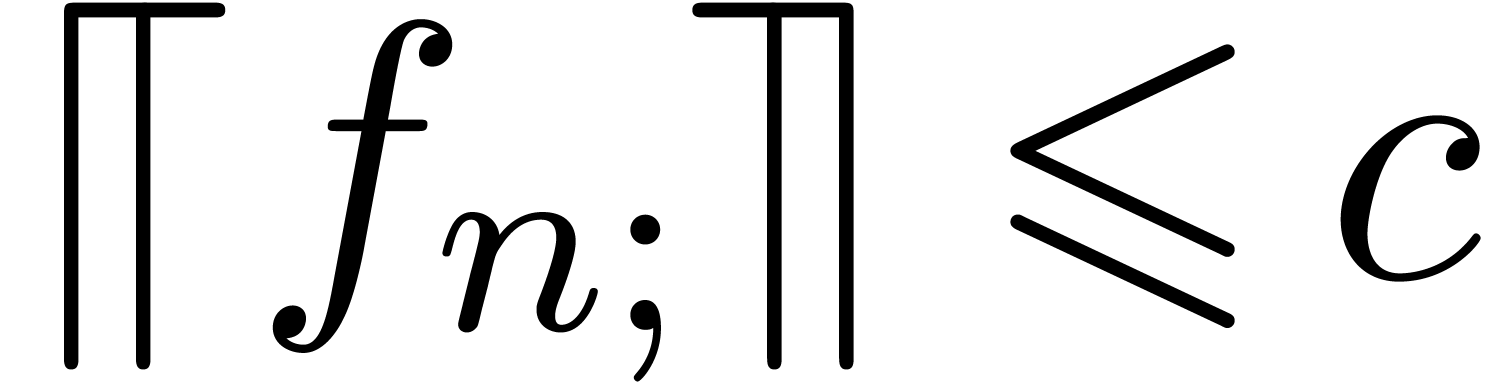

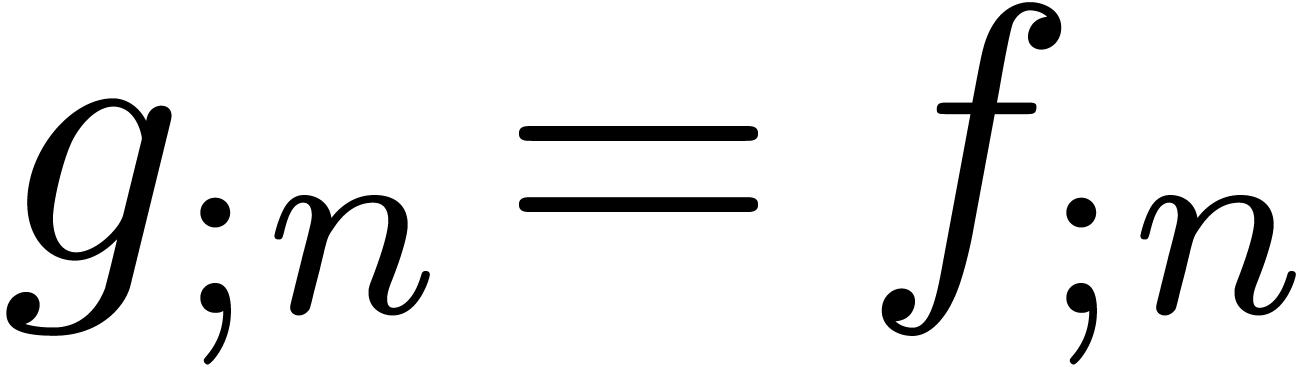

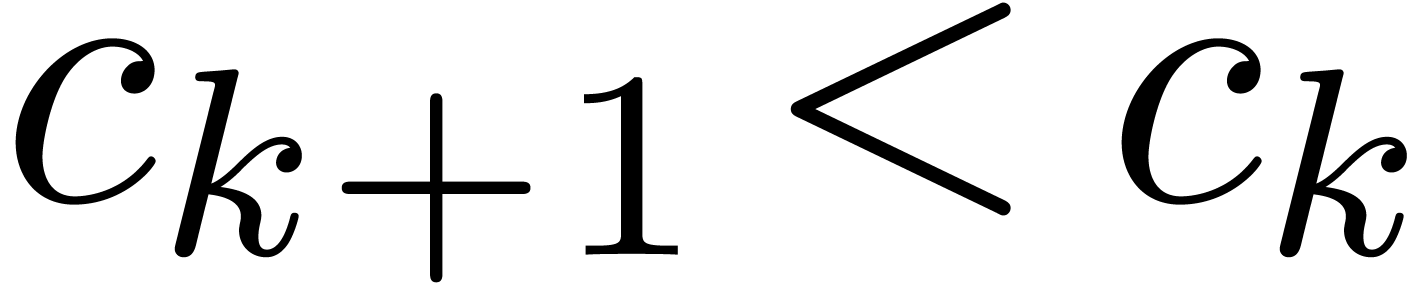

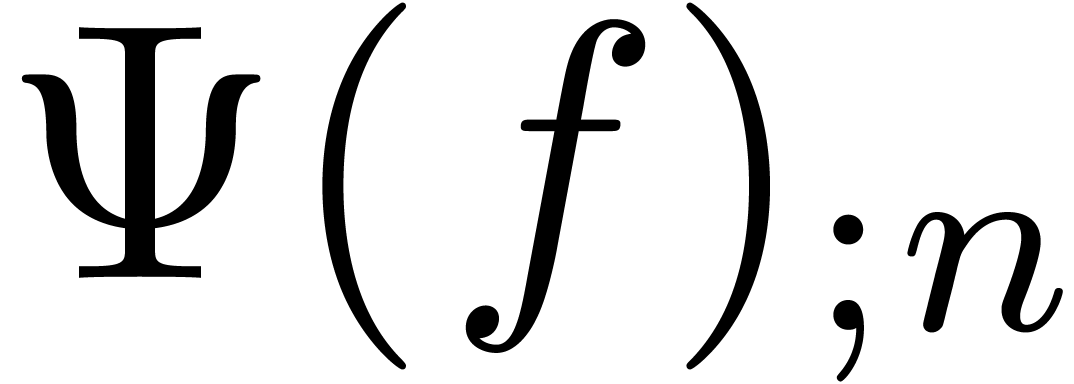

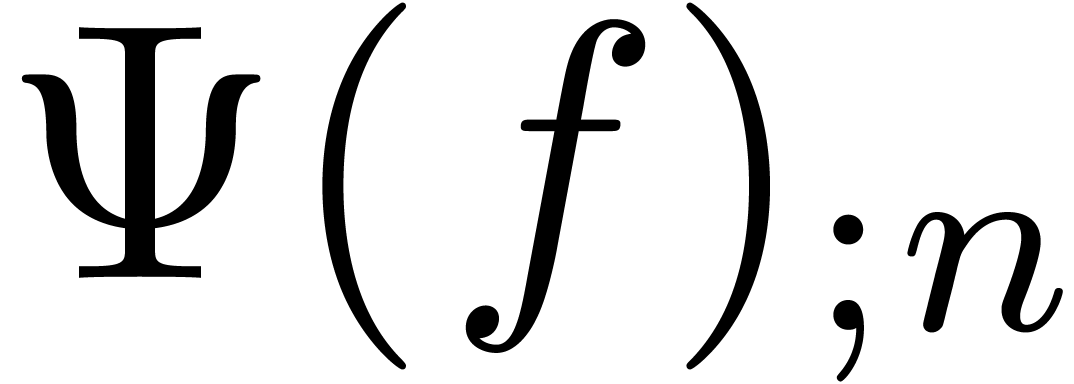

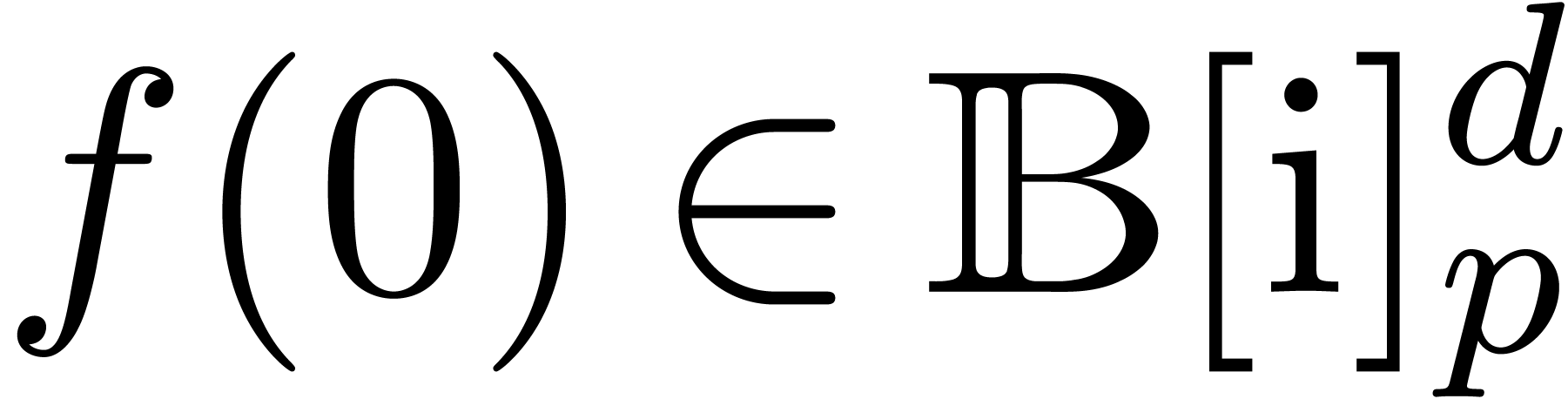

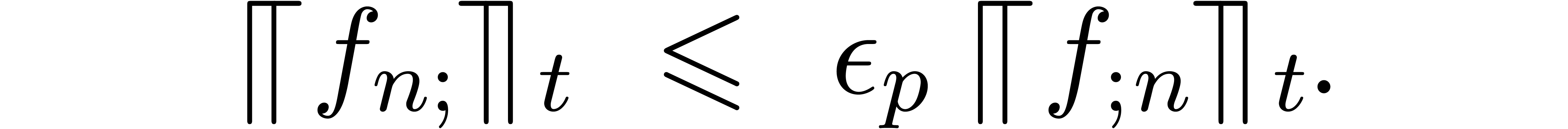

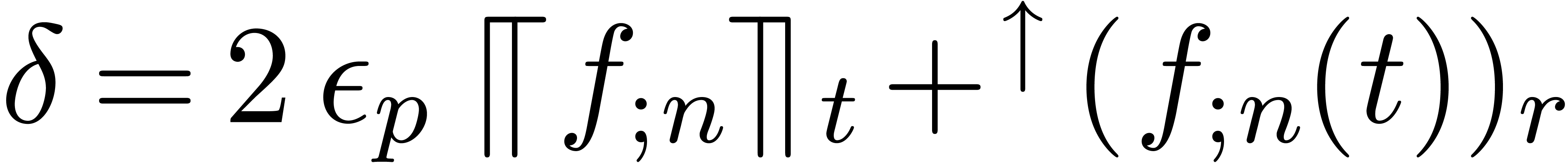

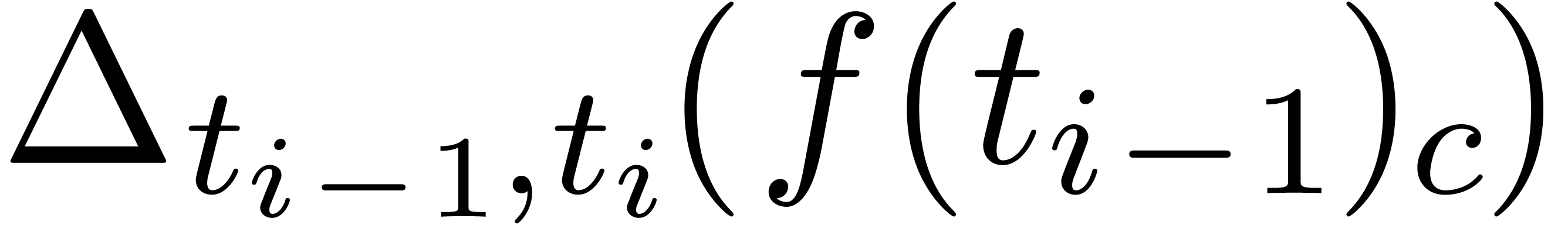

In this paper, we will be easygoing on what is meant by “sharp

error bound”. Regarding algorithms as functions  , an error

, an error  in the input

automatically gives rise to an error

in the input

automatically gives rise to an error  in the

output. When performing our computations with bit precision

in the

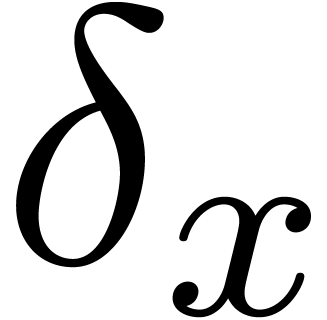

output. When performing our computations with bit precision  , we have to consider that the input error

, we have to consider that the input error  is as least of the order of

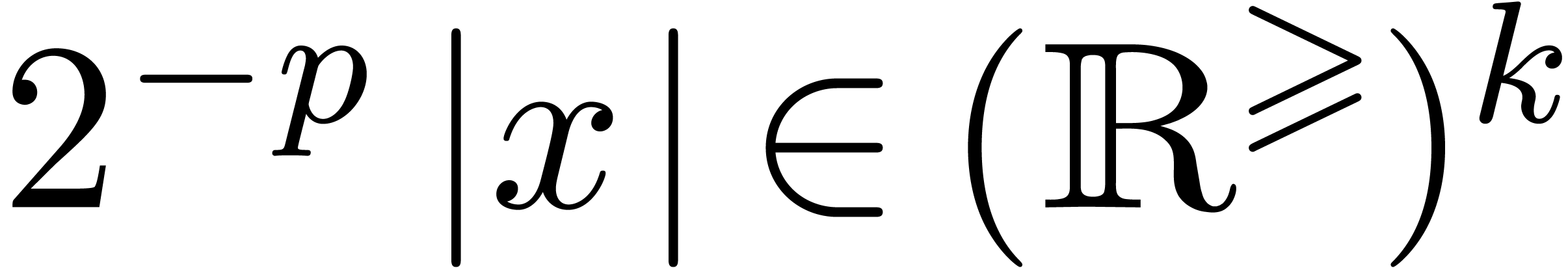

is as least of the order of  (where

(where  for all

for all  ).

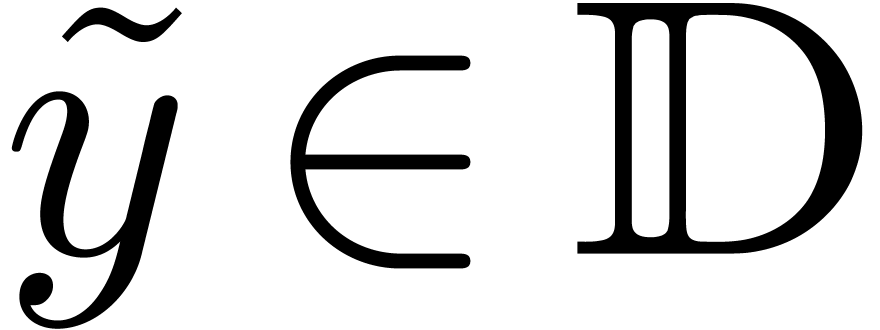

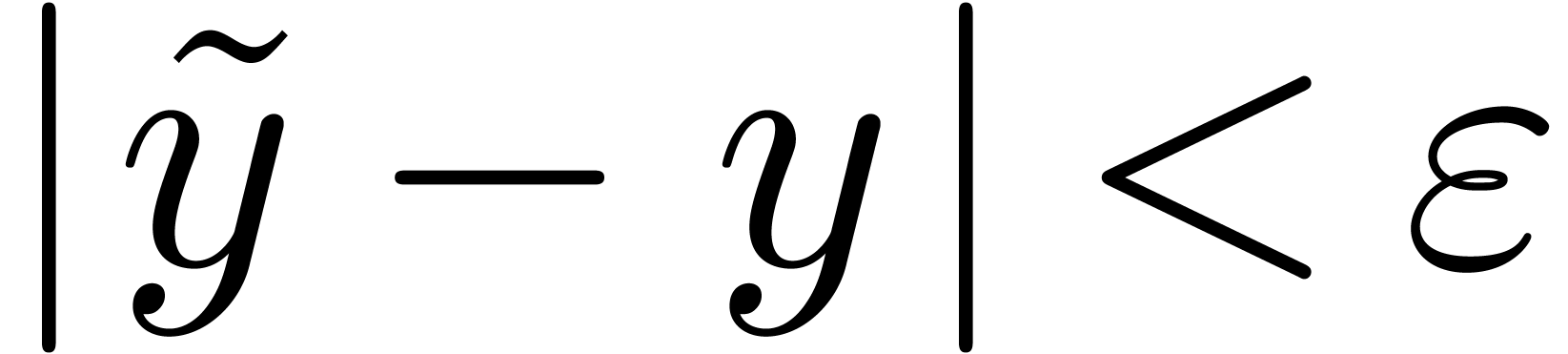

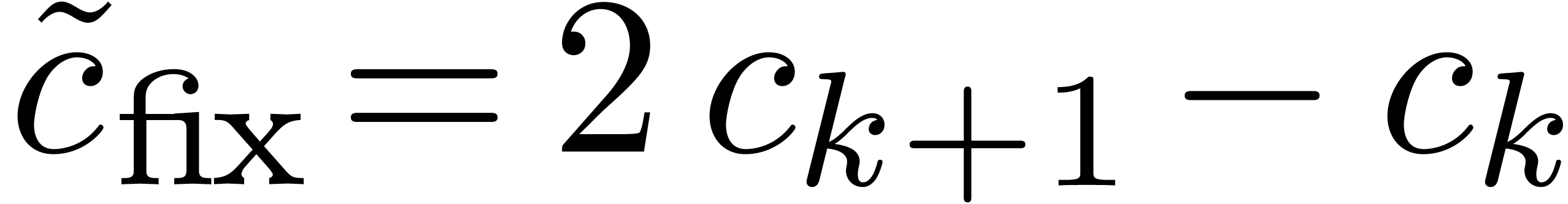

Now given an error bound

).

Now given an error bound  for the input, an error

bound

for the input, an error

bound  is considered to be sharp if

is considered to be sharp if  . More generally, condition numbers provide a

similar device for measuring the quality of error bounds. A detailed

investigation of the quality of the algorithms presented in this paper

remains to be carried out. Notice also that the computation of optimal

error bounds is usually NP-hard [KLRK97].

. More generally, condition numbers provide a

similar device for measuring the quality of error bounds. A detailed

investigation of the quality of the algorithms presented in this paper

remains to be carried out. Notice also that the computation of optimal

error bounds is usually NP-hard [KLRK97].

Of course, the development of asymptotically efficient ball arithmetic

presupposes the existence of the corresponding numerical algorithms.

This topic is another major challenge, which will not be addressed in

this paper. Indeed, asymptotically fast algorithms usually suffer from

lower numerical stability. Besides, switching from machine precision to

multiple precision involves a huge overhead. Special techniques are

required to reduce this overhead when computing with large compound

objects, such as matrices or polynomials. It is also recommended to

systematically implement single, double, quadruple and multiple

precision versions of all algorithms (see [BHL00, BHL01]

for a double-double and quadruple-double precision library). The

The aim of our paper is to provide a survey on how to implement an efficient library for ball arithmetic. Even though many of the ideas are classical in the interval analysis community, we do think that the paper sheds a new light on the topic. Indeed, we systematically investigate several issues which have received limited attention until now:

Using ball arithmetic instead of interval arithmetic, for a wide variety of center and radius types.

The trade-off between algorithmic complexity and quality of the error bounds.

The complexity of multiple precision ball arithmetic.

Throughout the text, we have attempted to provide pointers to existing literature, wherever appropriate. We apologize for possible omissions and would be grateful for any suggestions regarding existing literature.

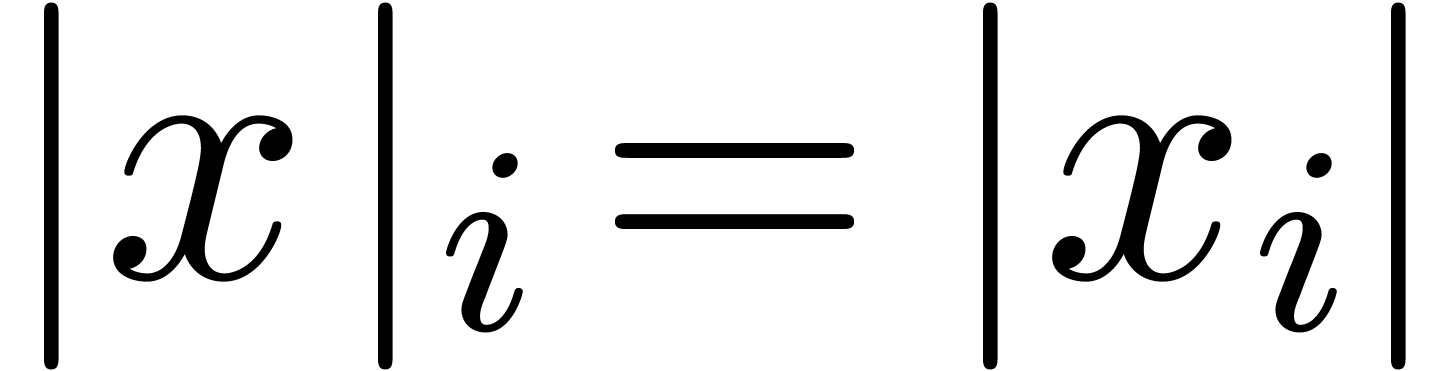

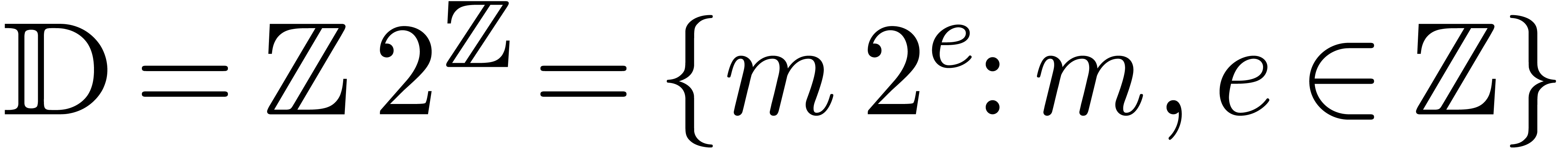

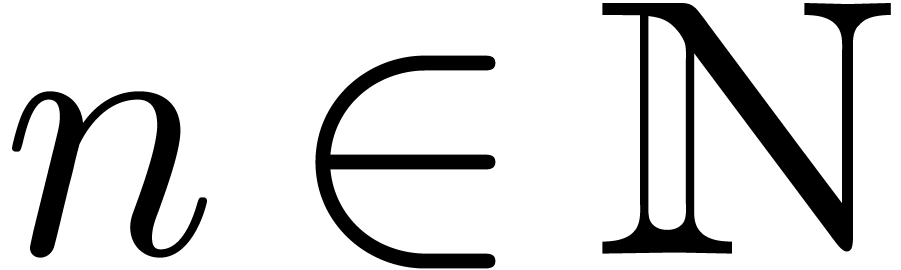

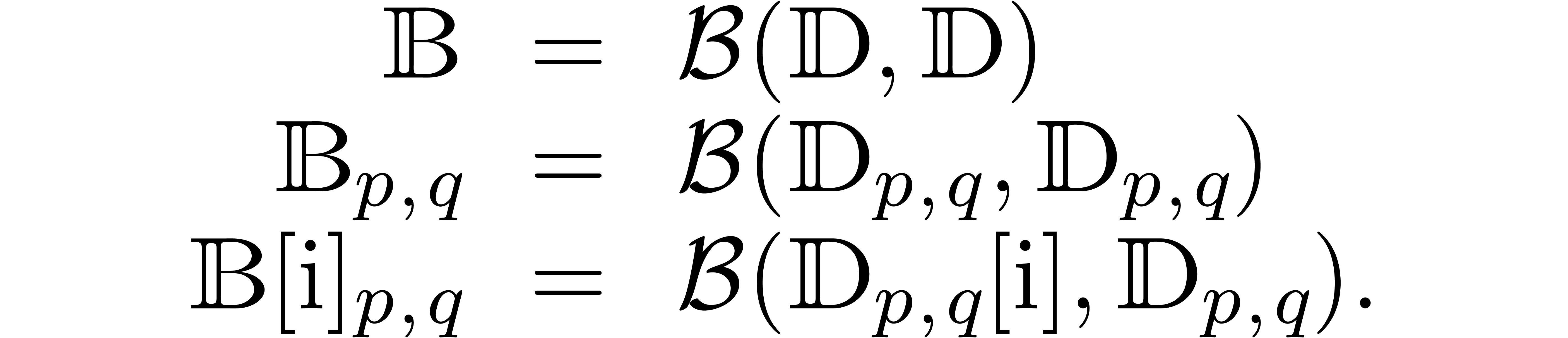

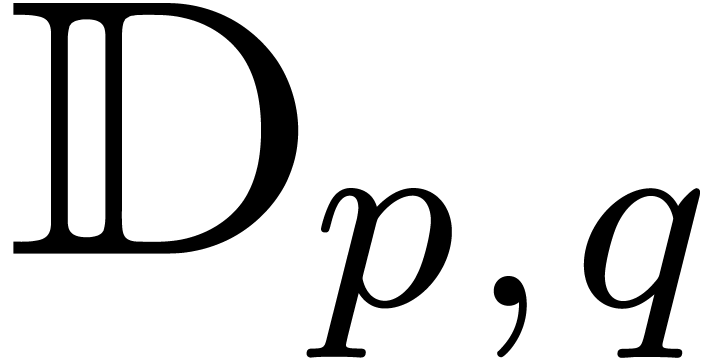

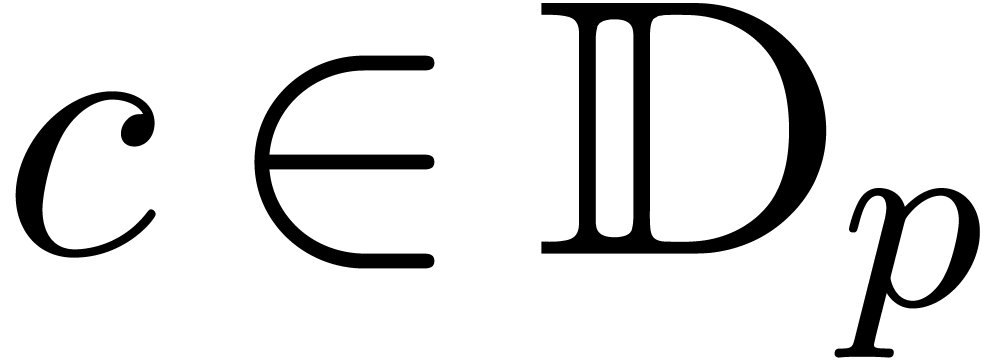

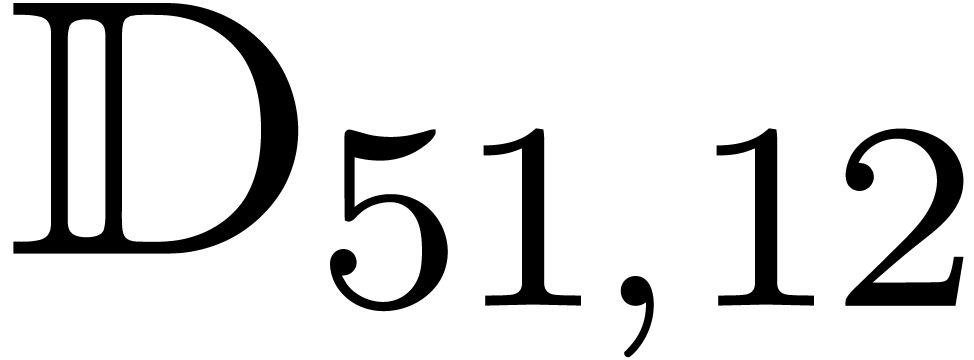

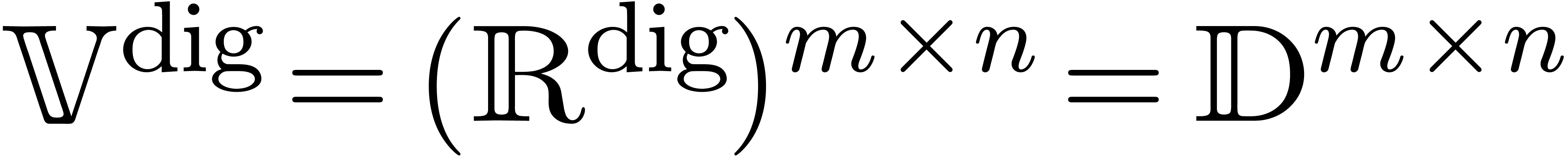

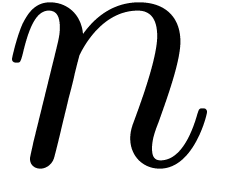

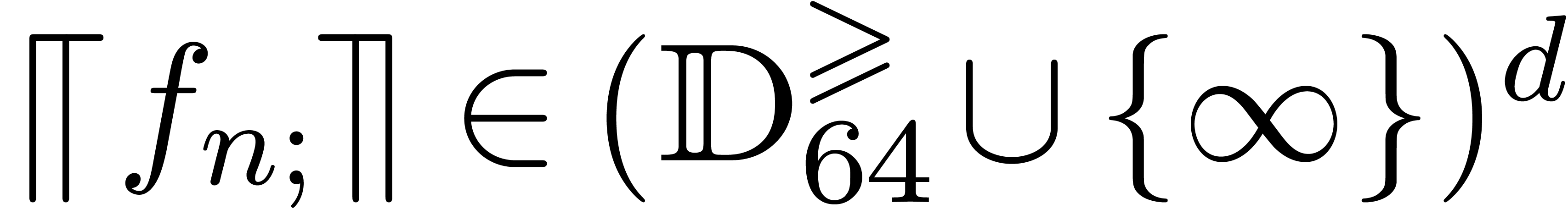

We will denote by

the set of dyadic numbers. Given fixed bit precisions  and

and  for the unsigned mantissa

(without the leading

for the unsigned mantissa

(without the leading  ) and

signed exponent, we also denote by

) and

signed exponent, we also denote by

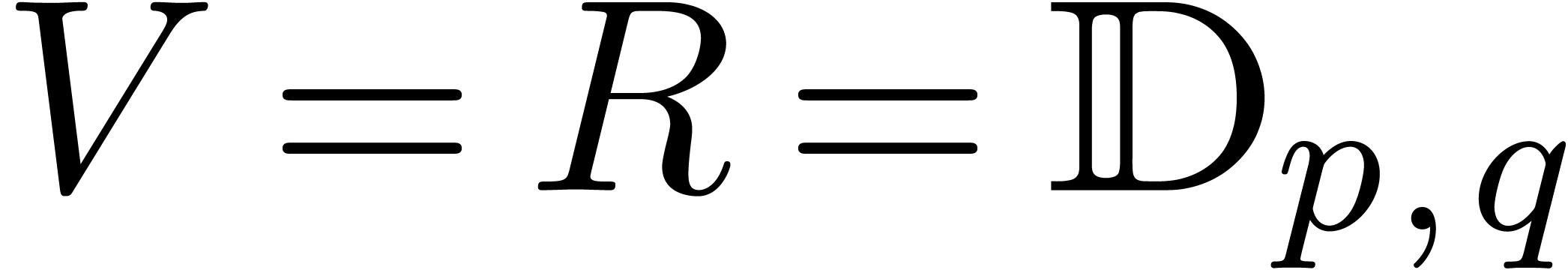

the corresponding set of floating point numbers. Numbers in  can be stored in

can be stored in  bit space and

correspond to “ordinary numbers” in the IEEE 754 norm [ANS08]. For double precision numbers, we have

bit space and

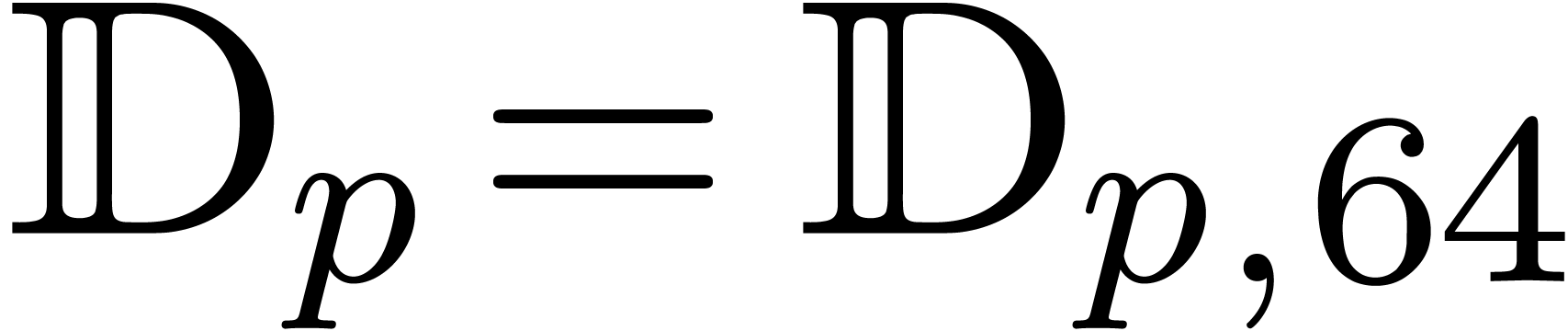

correspond to “ordinary numbers” in the IEEE 754 norm [ANS08]. For double precision numbers, we have  and

and  . For multiple precision

numbers, we usually take

. For multiple precision

numbers, we usually take  to be the size of a

machine word, say

to be the size of a

machine word, say  , and

denote

, and

denote  .

.

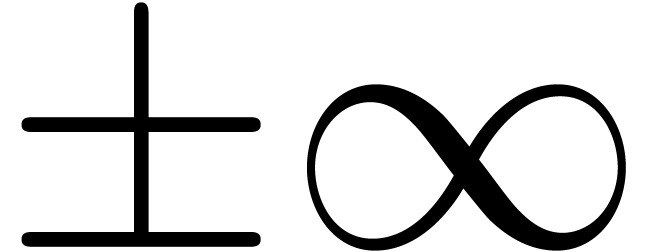

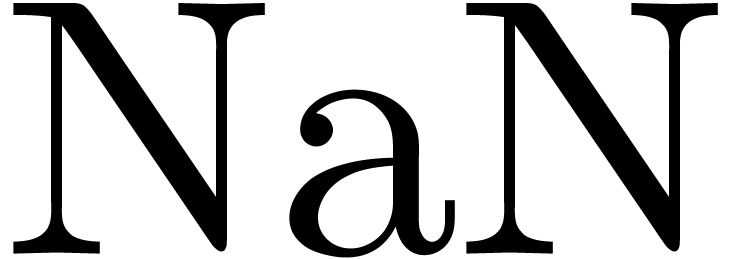

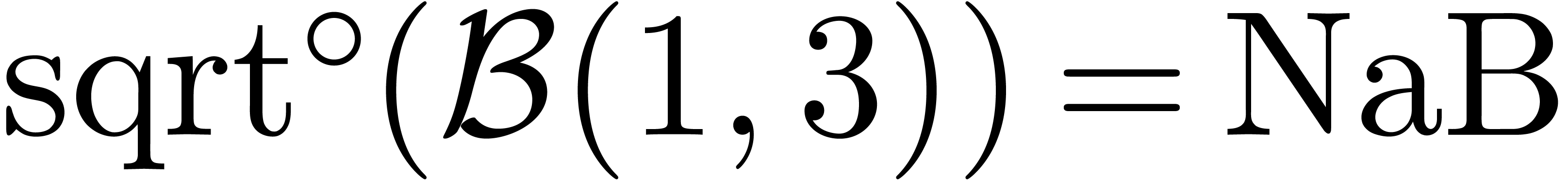

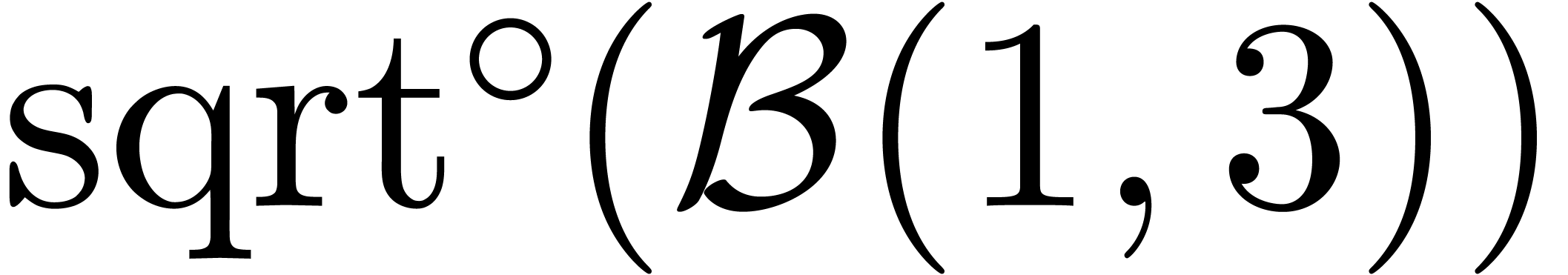

The IEEE 754 norm also defines several special numbers. The most

important ones are the infinities  and “not

a number”

and “not

a number”  , which

corresponds to the result of an invalid operation, such as

, which

corresponds to the result of an invalid operation, such as  . We will denote

. We will denote

Less important details concern the specification of signed zeros  and several possible types of NaNs. For more details,

we refer to [ANS08].

and several possible types of NaNs. For more details,

we refer to [ANS08].

The other main feature of the IEEE 754 is that it specifies how to round

results of operations which cannot be performed exactly. There are four

basic rounding modes  (upwards),

(upwards),  (downwards),

(downwards),  (nearest) and

(nearest) and  (towards zero). Assume that we have an operation

(towards zero). Assume that we have an operation  with

with  . Given

. Given  and

and  , we define

, we define  to be the smallest number

to be the smallest number  in

in  , such that

, such that  . More generally, we will use the notation

. More generally, we will use the notation  to indicate that all operations are performed by rounding

upwards. The other rounding modes are specified in a similar way. One

major advantage of IEEE 754 arithmetic is that it completely specifies

how basic operations are done, making numerical programs behave exactly

in the same way on different architectures. Most current microprocessors

implement the IEEE 754 norm for single and double precision numbers. A

conforming multiple precision implementation also exists [HLRZ00].

to indicate that all operations are performed by rounding

upwards. The other rounding modes are specified in a similar way. One

major advantage of IEEE 754 arithmetic is that it completely specifies

how basic operations are done, making numerical programs behave exactly

in the same way on different architectures. Most current microprocessors

implement the IEEE 754 norm for single and double precision numbers. A

conforming multiple precision implementation also exists [HLRZ00].

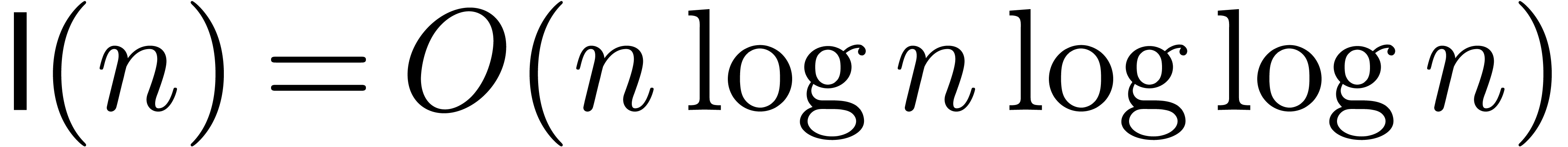

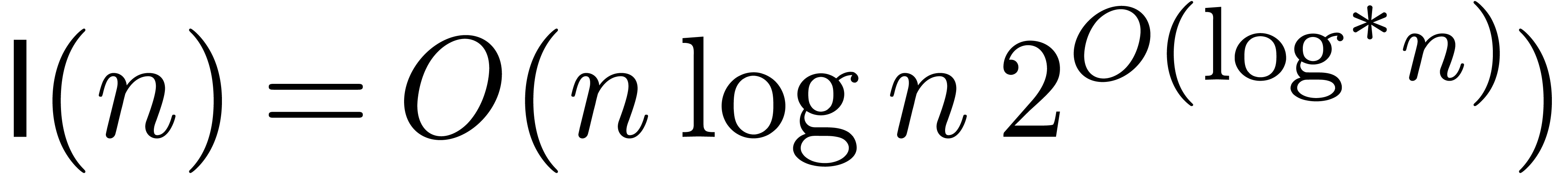

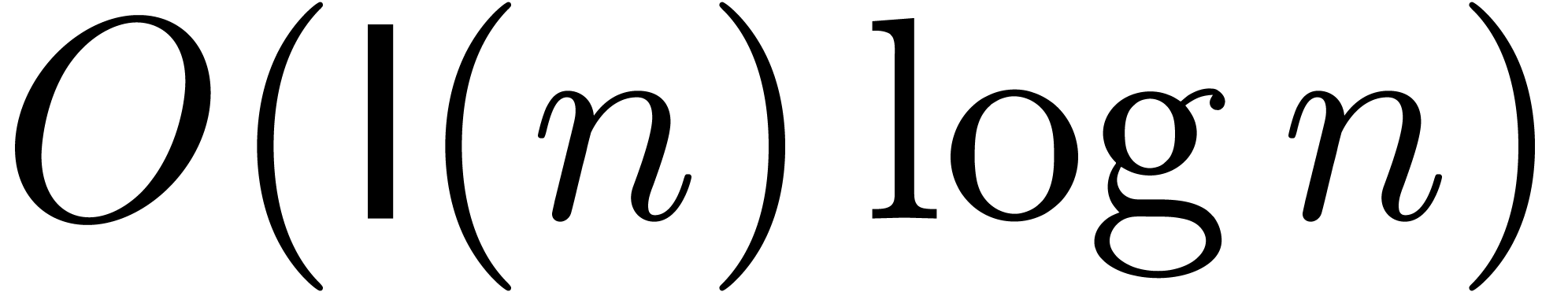

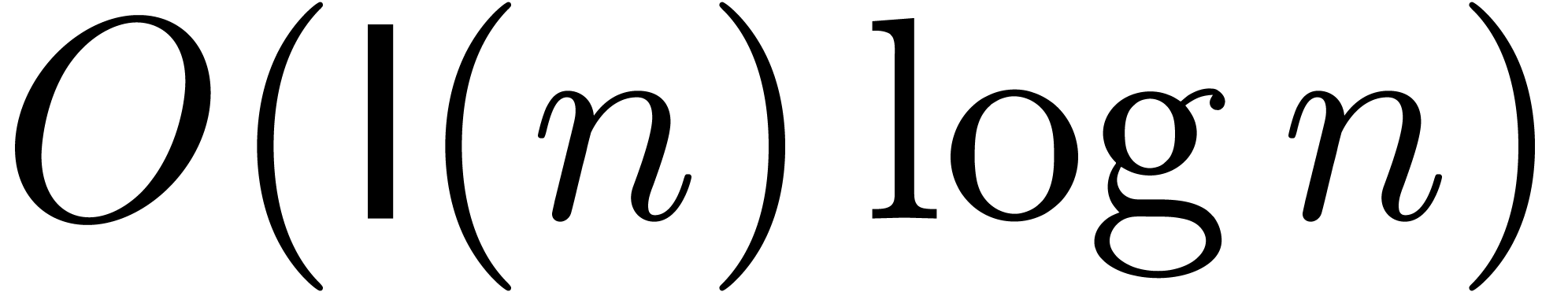

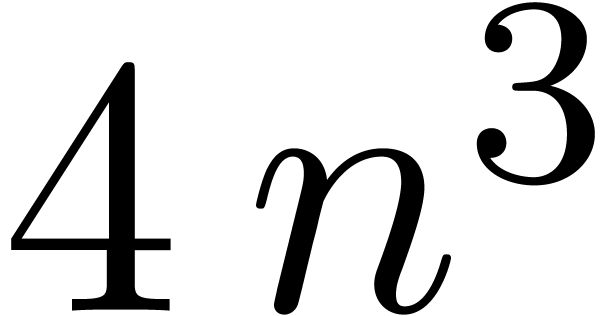

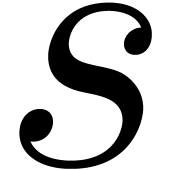

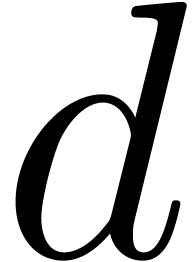

Using Schönhage-Strassen multiplication [SS71], it is

classical that the product of two  -bit

integers can be computed in time

-bit

integers can be computed in time  .

A recent algorithm by Fürer [Für07] further

improves this bound to

.

A recent algorithm by Fürer [Für07] further

improves this bound to  ,

where

,

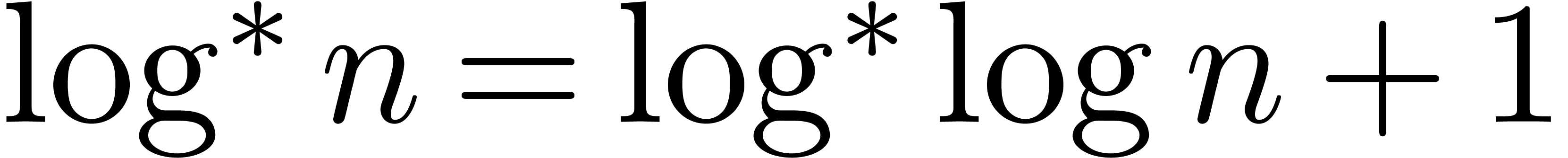

where  denotes the iterator of the logarithm (we

have

denotes the iterator of the logarithm (we

have  ). Other algorithms,

such as division two

). Other algorithms,

such as division two  -bit

integers, have a similar complexity

-bit

integers, have a similar complexity  .

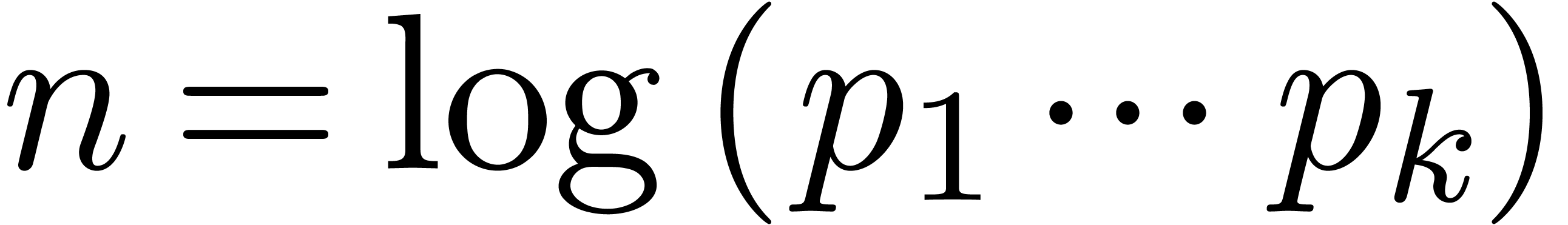

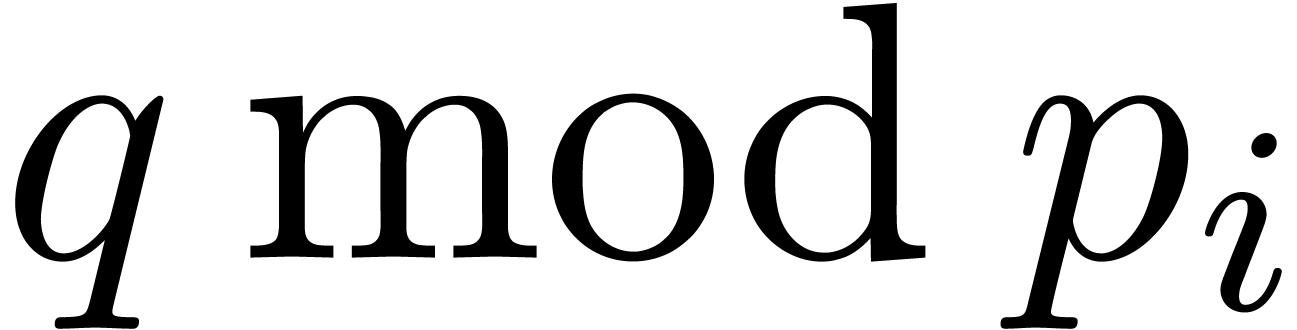

Given

.

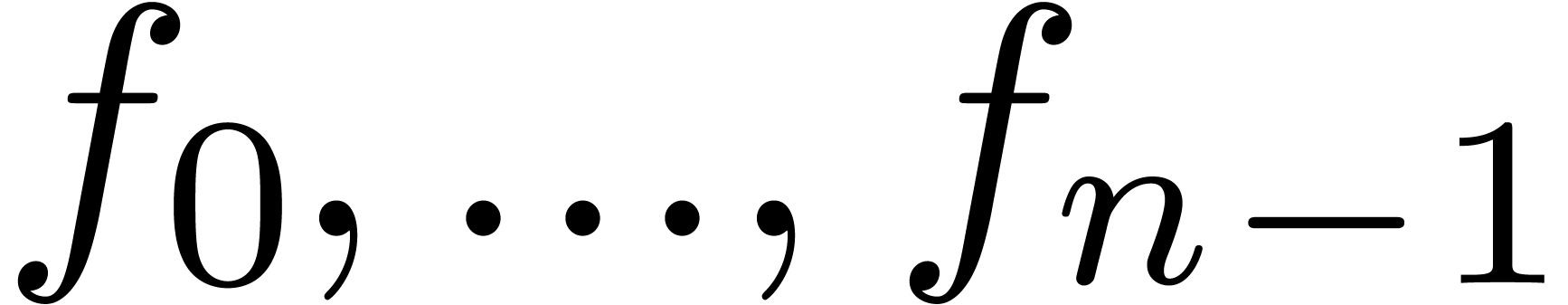

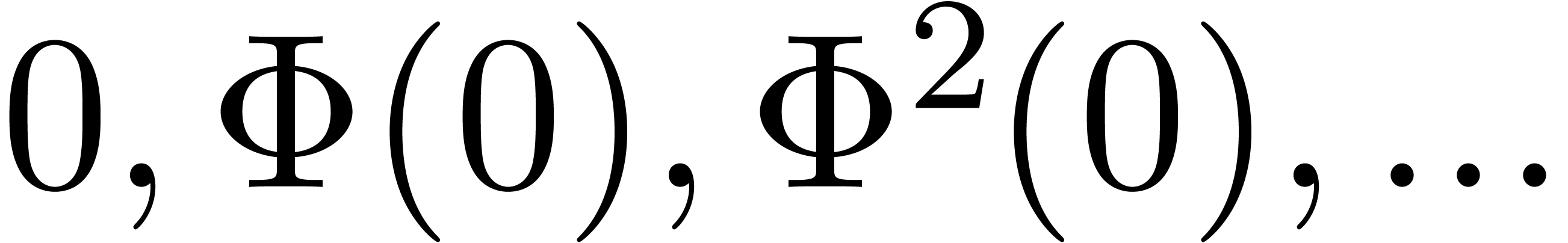

Given  prime numbers

prime numbers  and

a number

and

a number  , the computation of

, the computation of

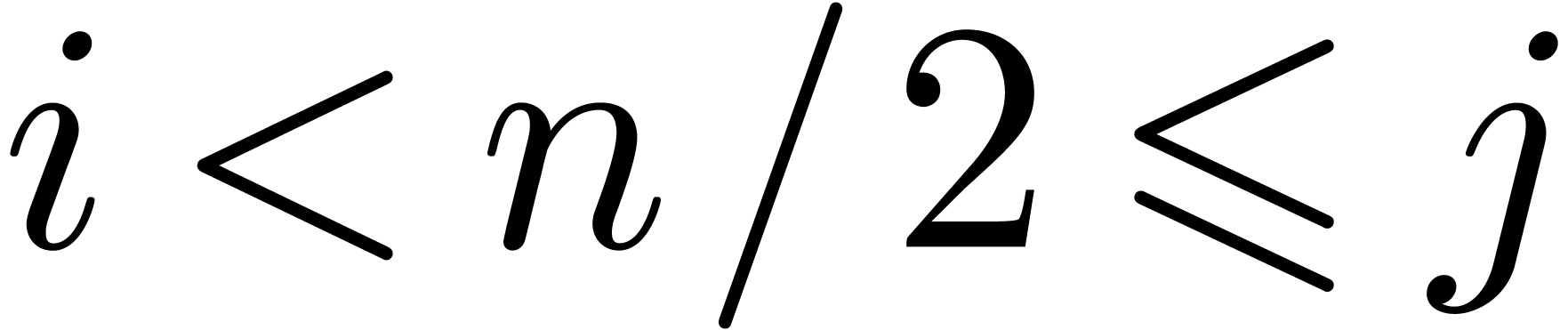

for

for  can be done in time

can be done in time

using binary splitting [GG02,

Theorem 10.25], where

using binary splitting [GG02,

Theorem 10.25], where  . It is

also possible to reconstruct

. It is

also possible to reconstruct  from the remainders

from the remainders

in time

in time  .

.

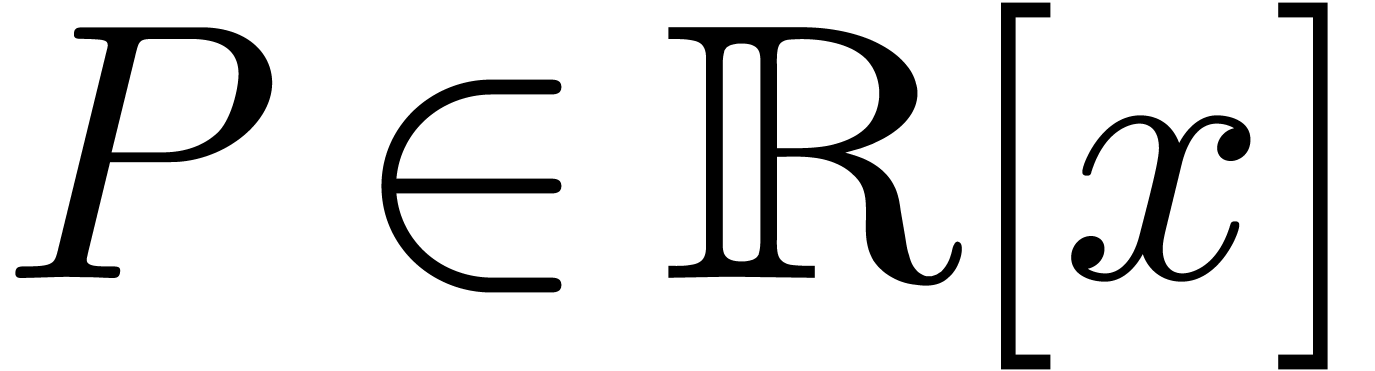

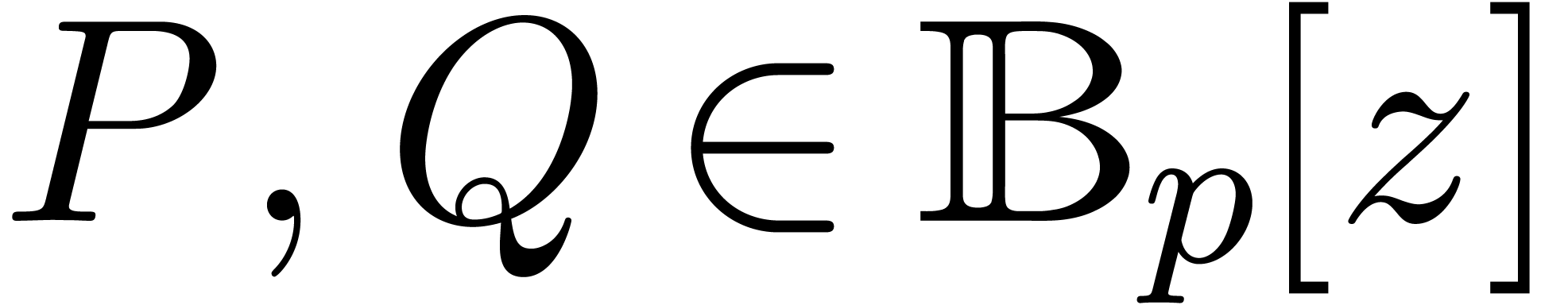

Let  be an effective ring, in the sense that we

have algorithms for performing the ring operations in

be an effective ring, in the sense that we

have algorithms for performing the ring operations in  . If

. If  admits

admits  -th roots of unity for

-th roots of unity for  , then it is classical [CT65] that

the product of two polynomials

, then it is classical [CT65] that

the product of two polynomials  with

with  can be computed using

can be computed using  ring

operations in

ring

operations in  , using the

fast Fourier transform. For general rings, this product can be computed

using

, using the

fast Fourier transform. For general rings, this product can be computed

using  operations [CK91], using a

variant of Schönhage-Strassen multiplication.

operations [CK91], using a

variant of Schönhage-Strassen multiplication.

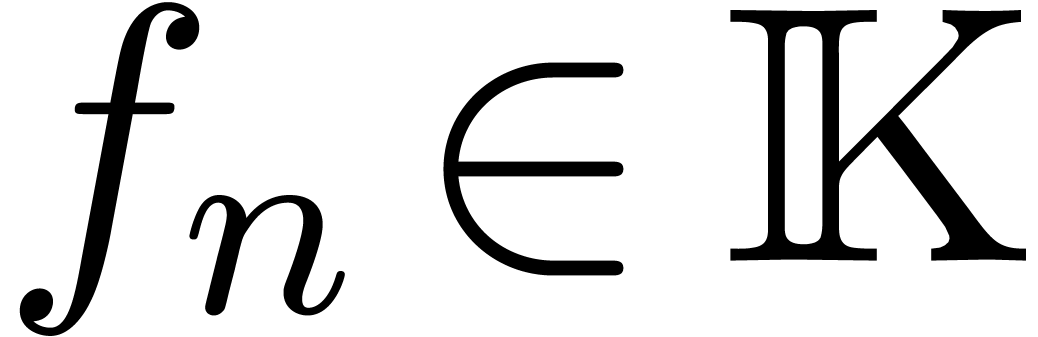

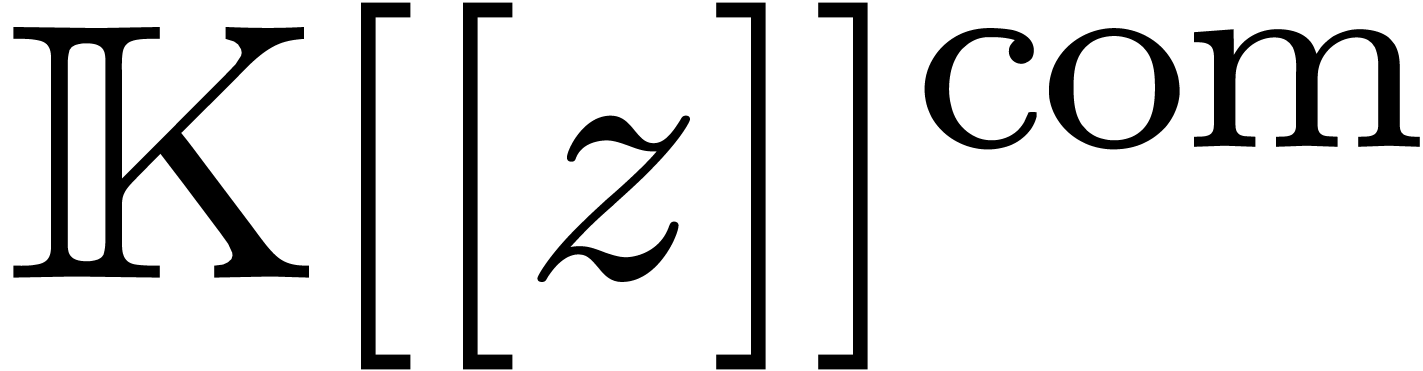

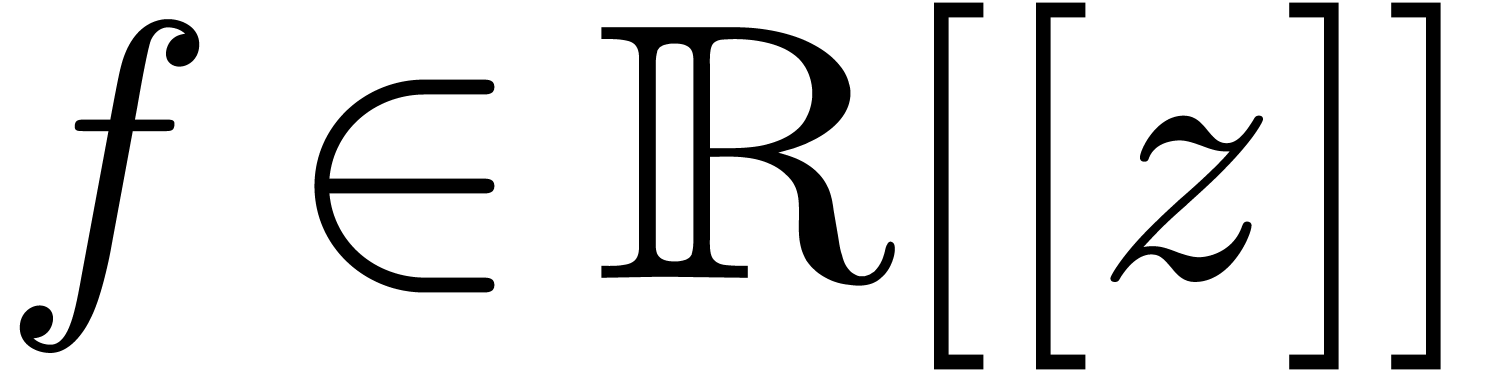

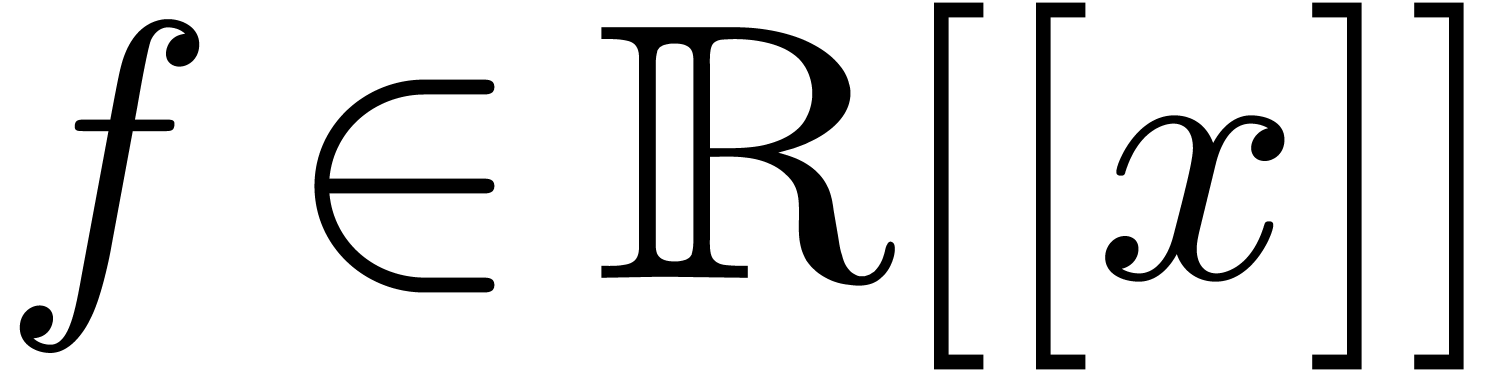

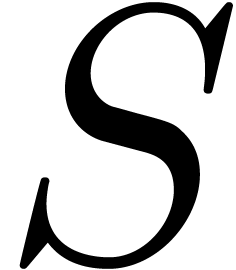

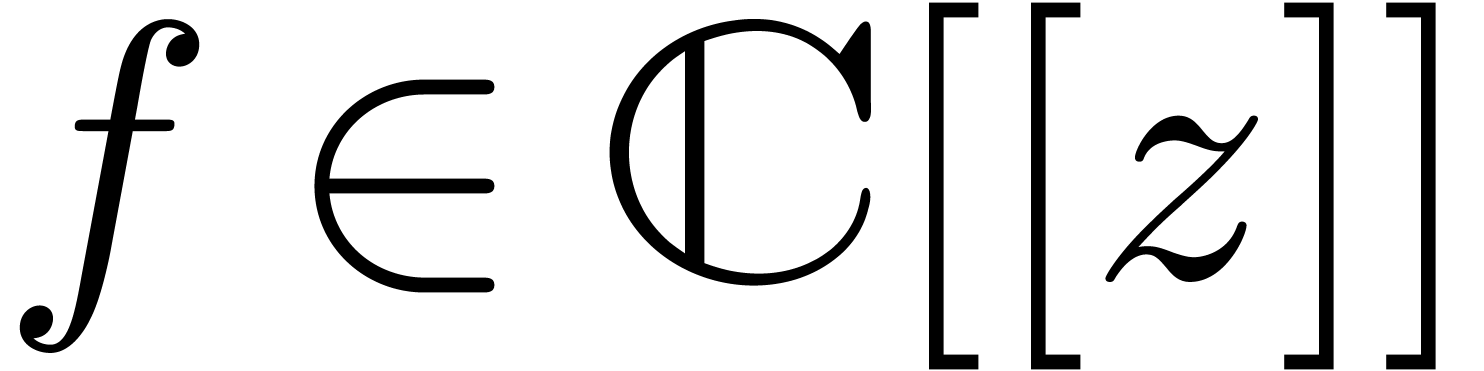

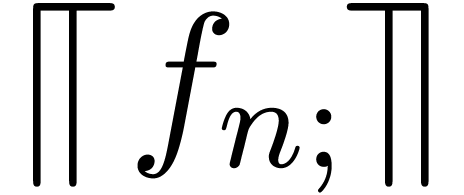

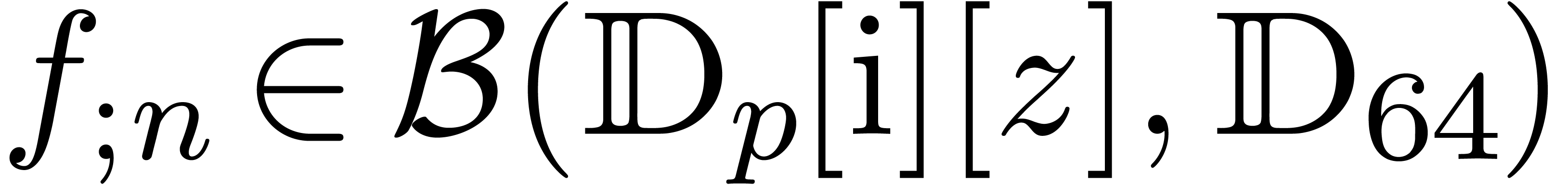

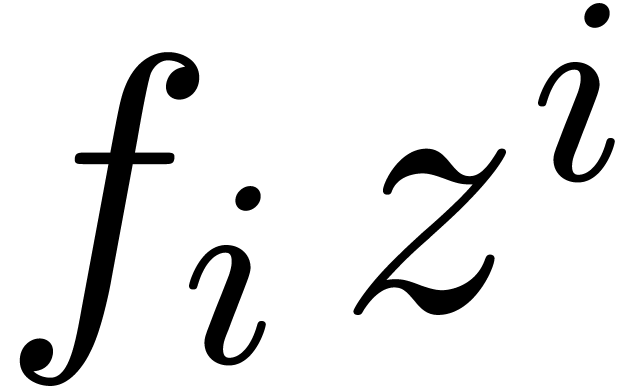

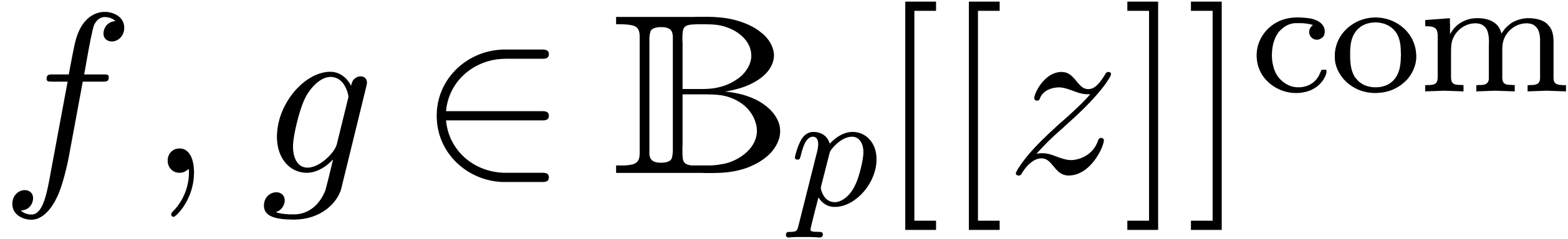

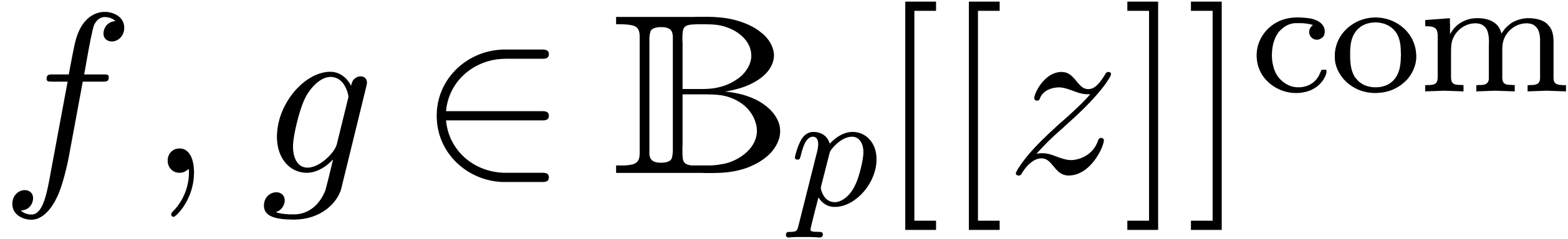

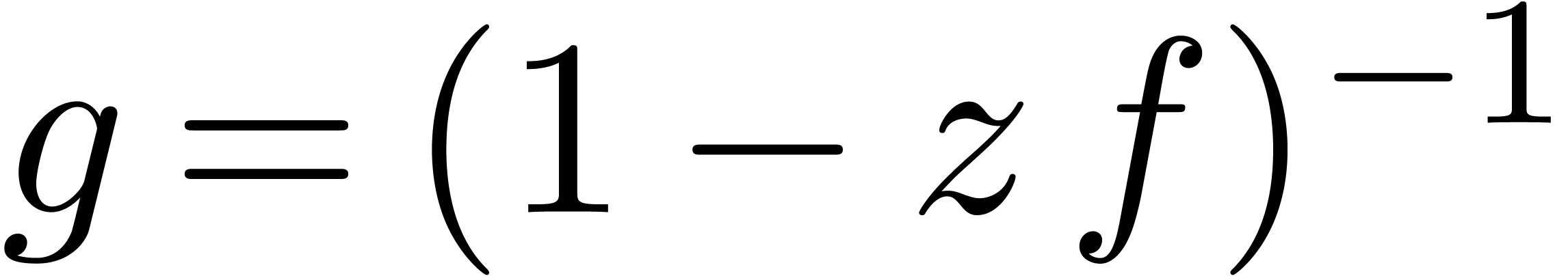

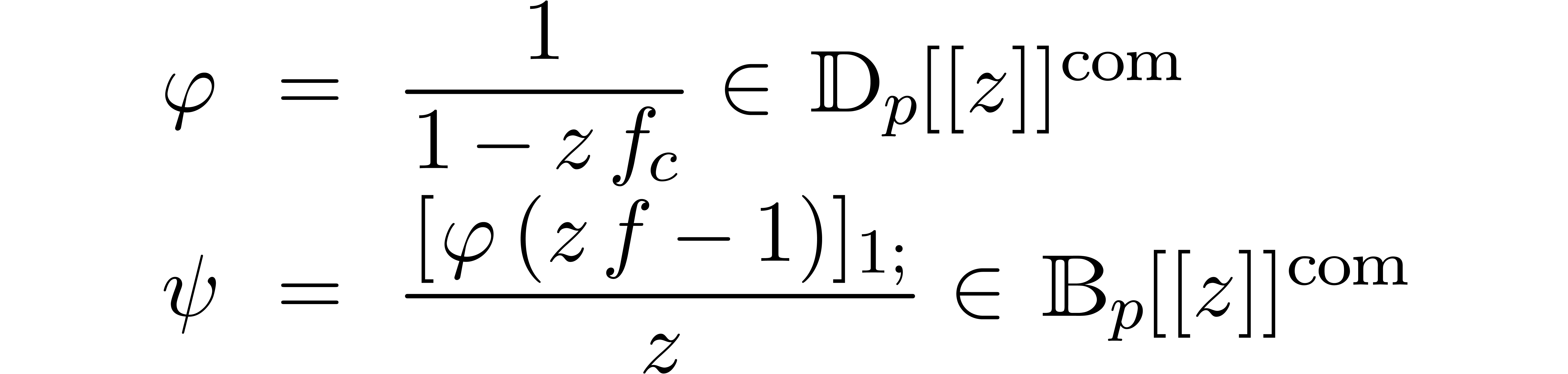

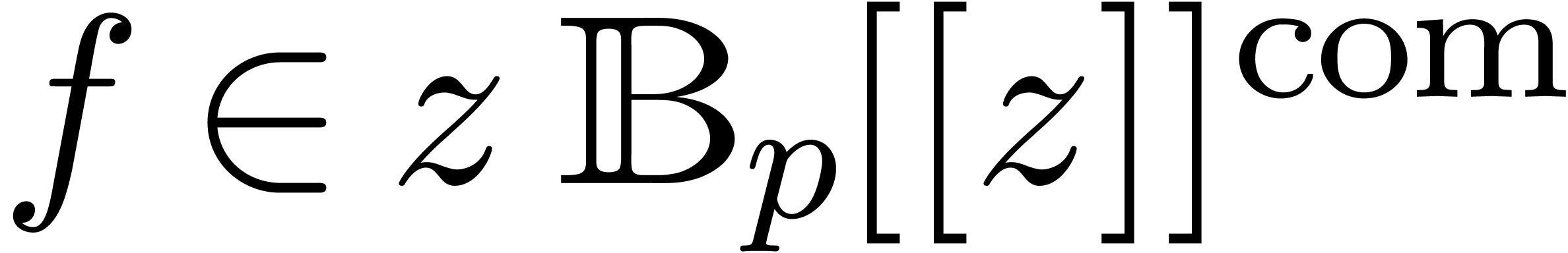

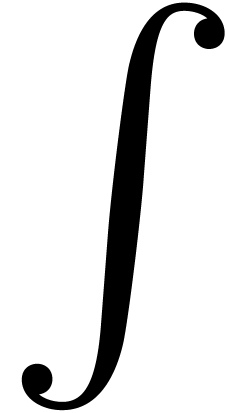

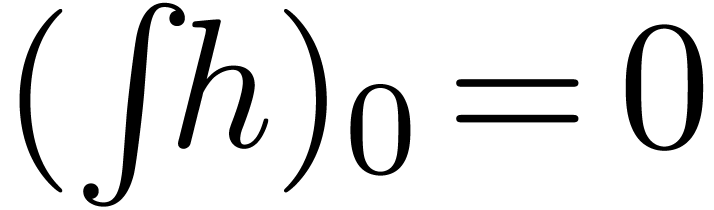

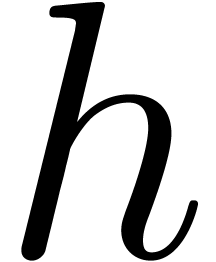

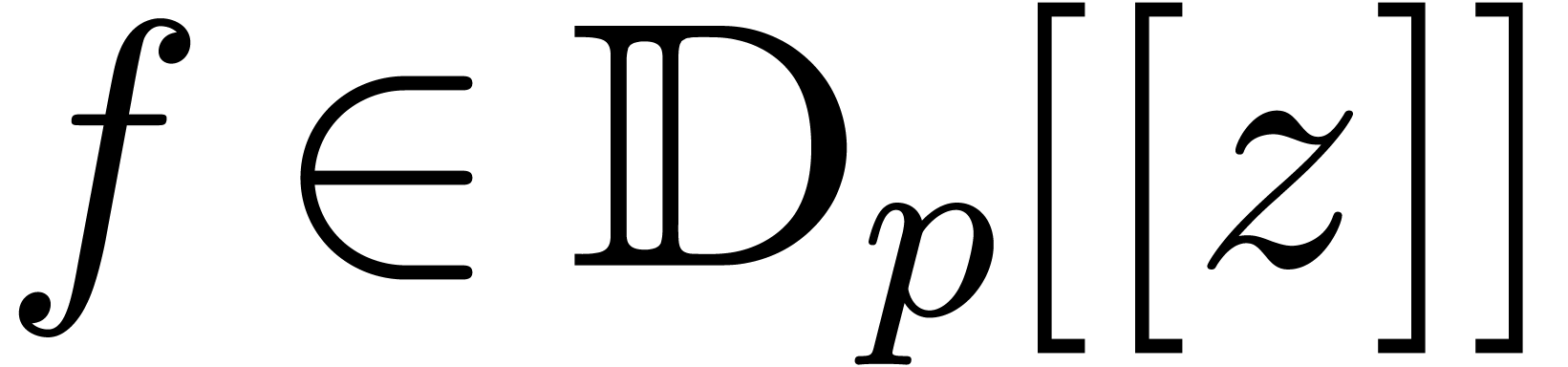

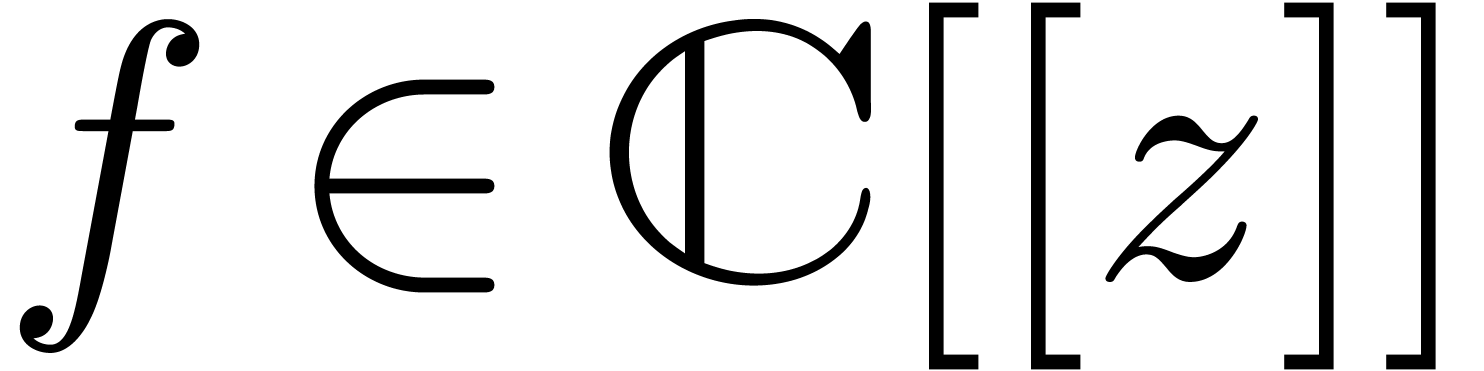

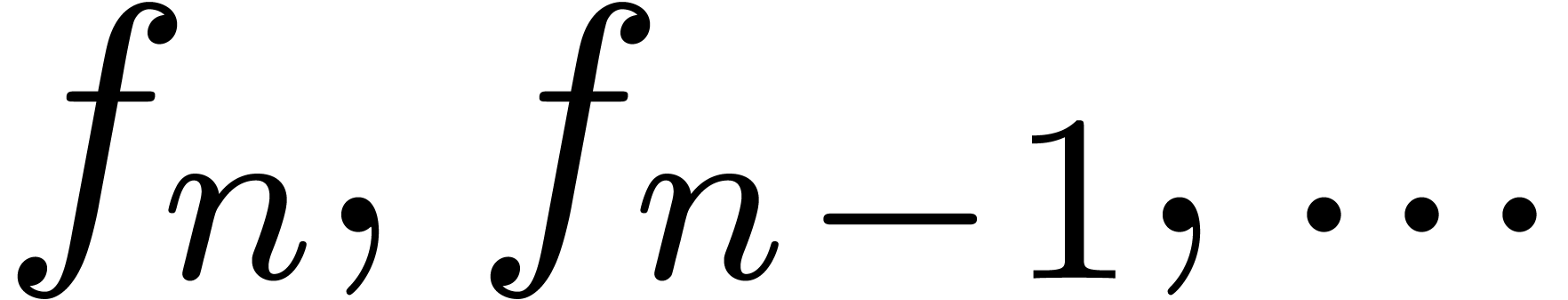

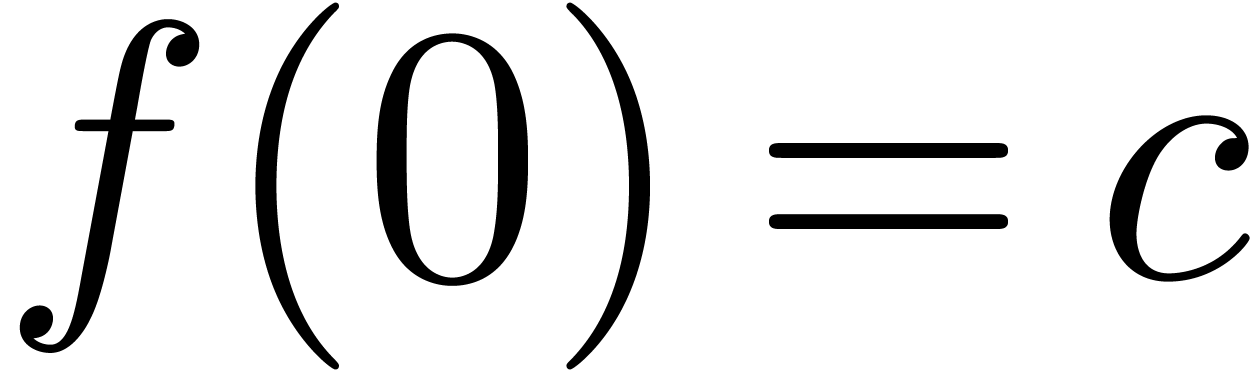

A formal power series  is said to be

computable, if there exists an algorithm which takes

is said to be

computable, if there exists an algorithm which takes  on input and which computes

on input and which computes  .

We denote by

.

We denote by  the set of computable power series.

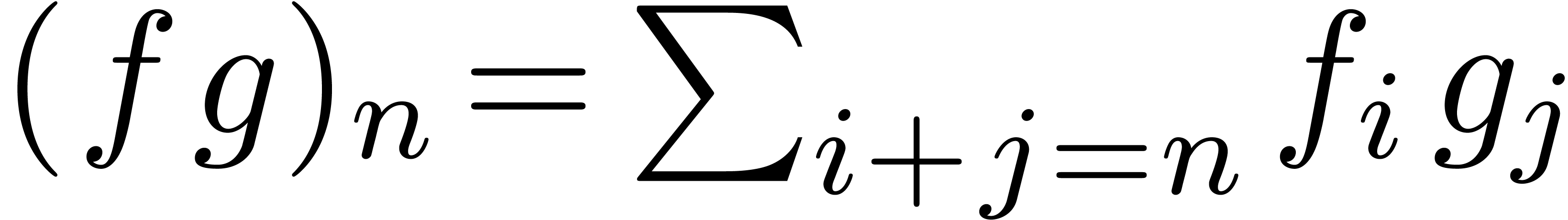

Many simple operations on formal power series, such as multiplication,

division, exponentiation, etc., as well as the resolution

of algebraic differential equations can be done up till order

the set of computable power series.

Many simple operations on formal power series, such as multiplication,

division, exponentiation, etc., as well as the resolution

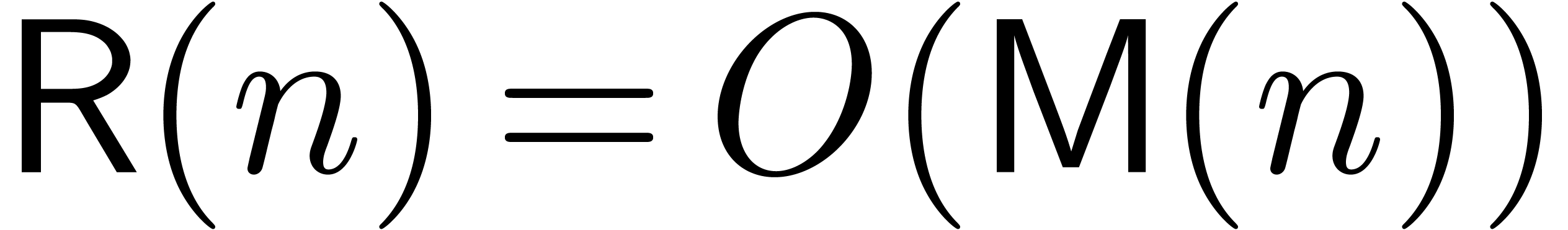

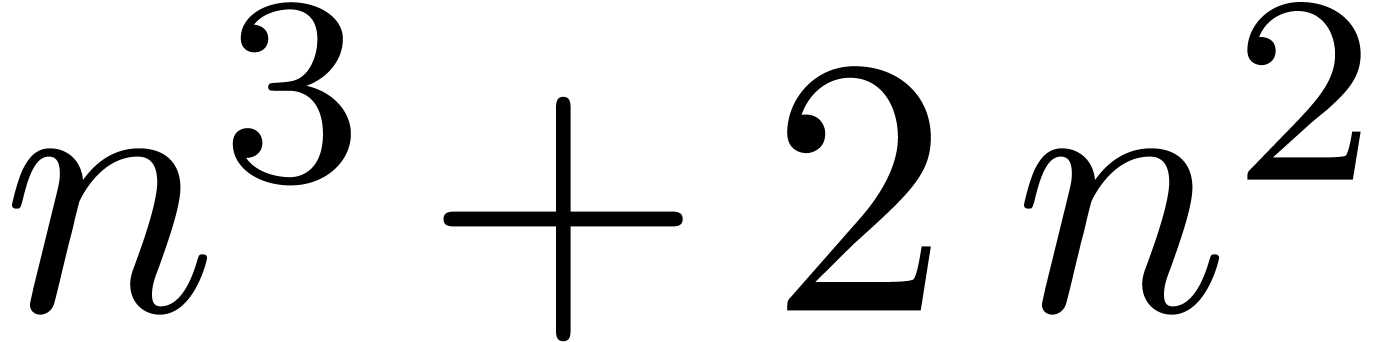

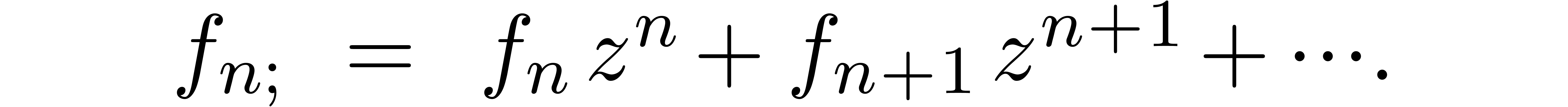

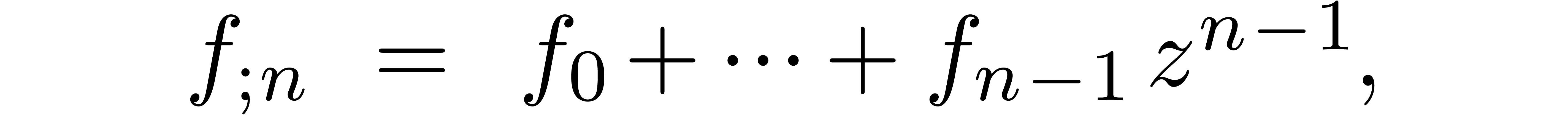

of algebraic differential equations can be done up till order  using a similar time complexity

using a similar time complexity  as

polynomial multiplication [BK78, BCO+06, vdH06b]. Alternative relaxed multiplication

algorithms of time complexity

as

polynomial multiplication [BK78, BCO+06, vdH06b]. Alternative relaxed multiplication

algorithms of time complexity  are given in [vdH02a, vdH07a]. In this case, the coefficients

are given in [vdH02a, vdH07a]. In this case, the coefficients

are computed gradually and

are computed gradually and  is output as soon as

is output as soon as  and

and  are known. This strategy is often most efficient for the resolution of

implicit equations.

are known. This strategy is often most efficient for the resolution of

implicit equations.

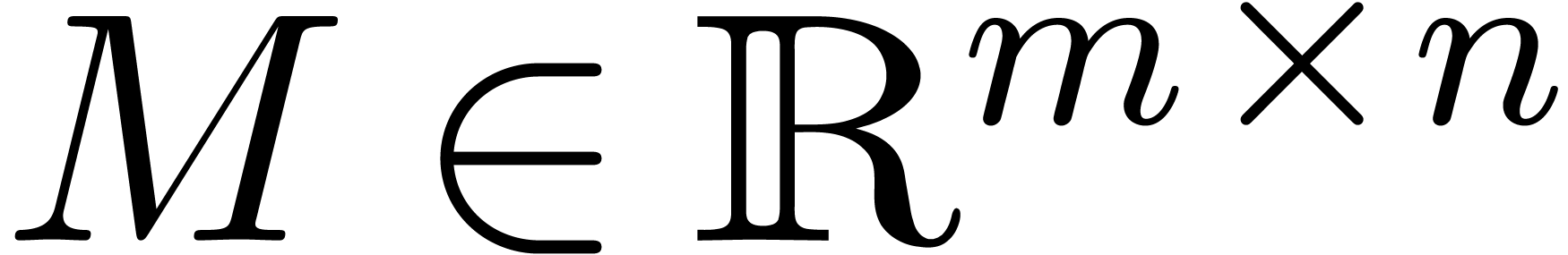

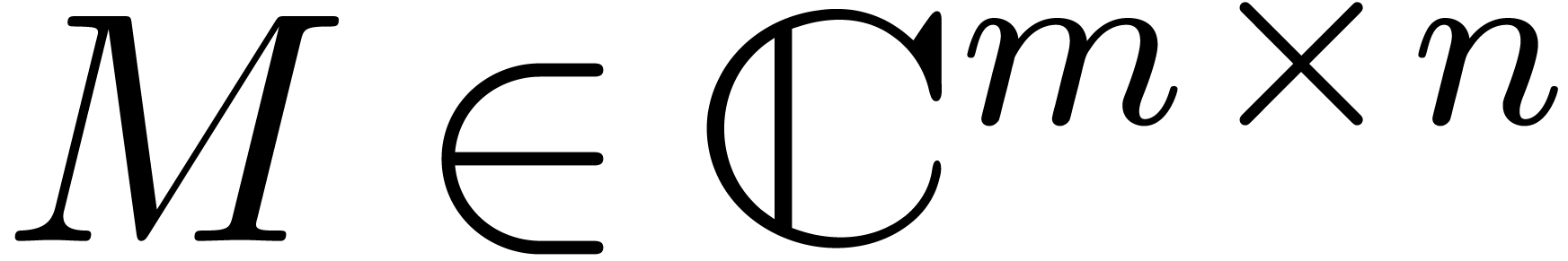

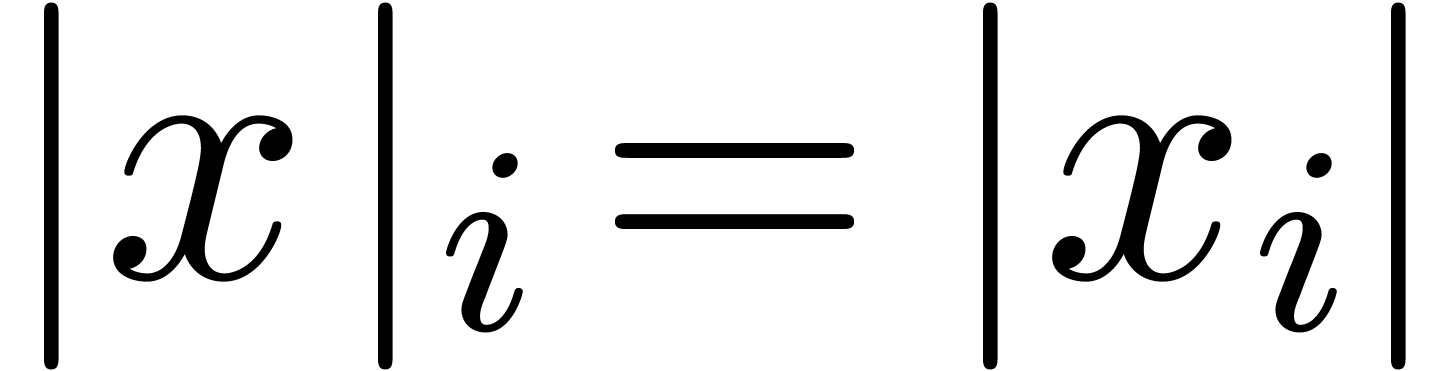

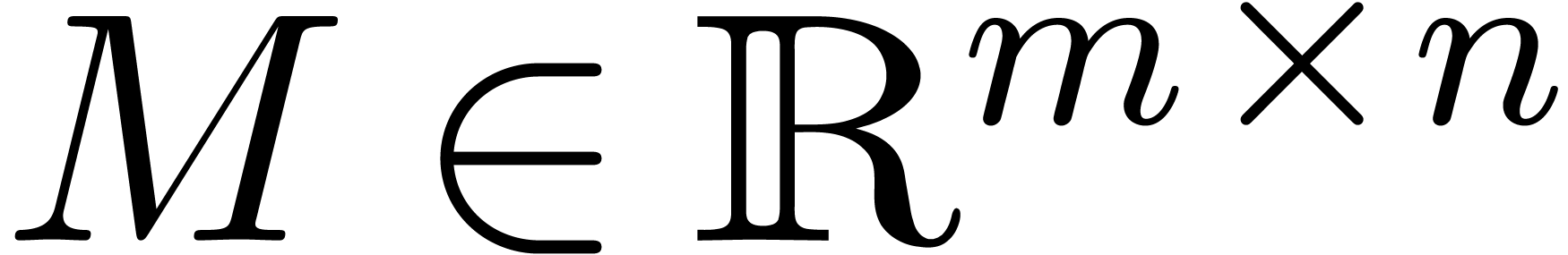

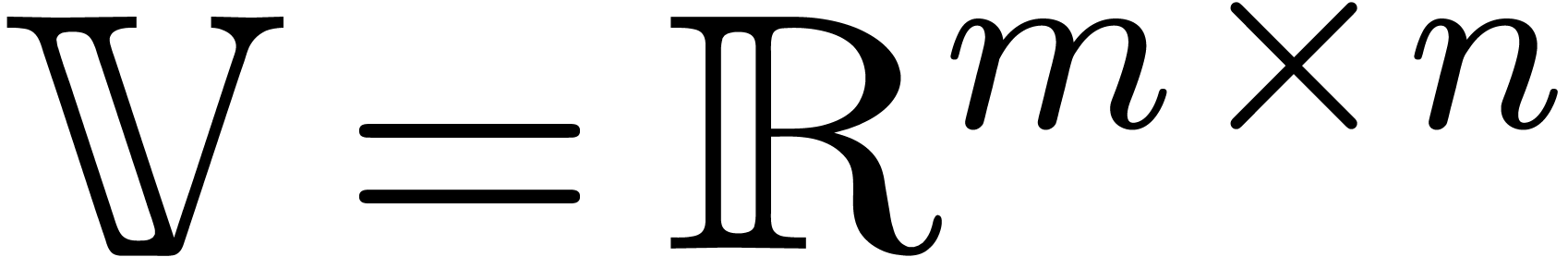

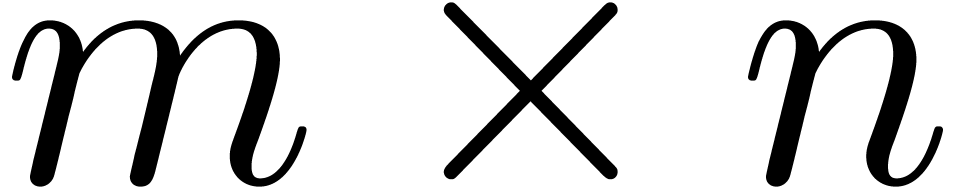

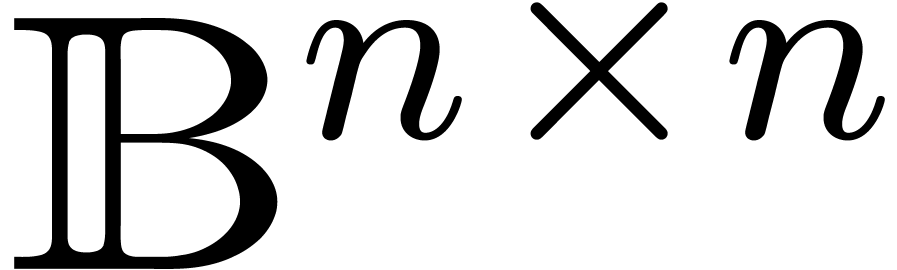

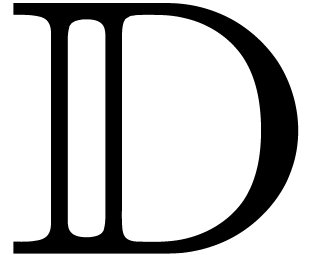

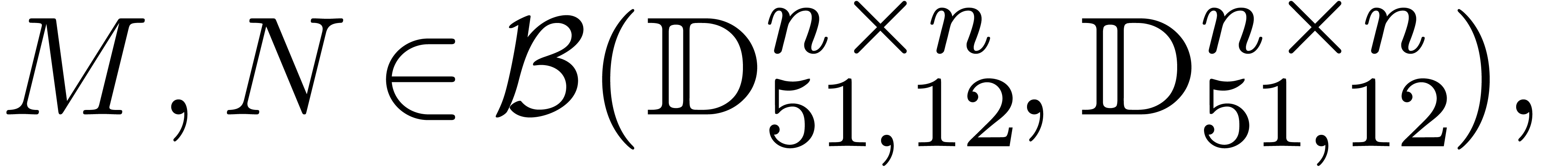

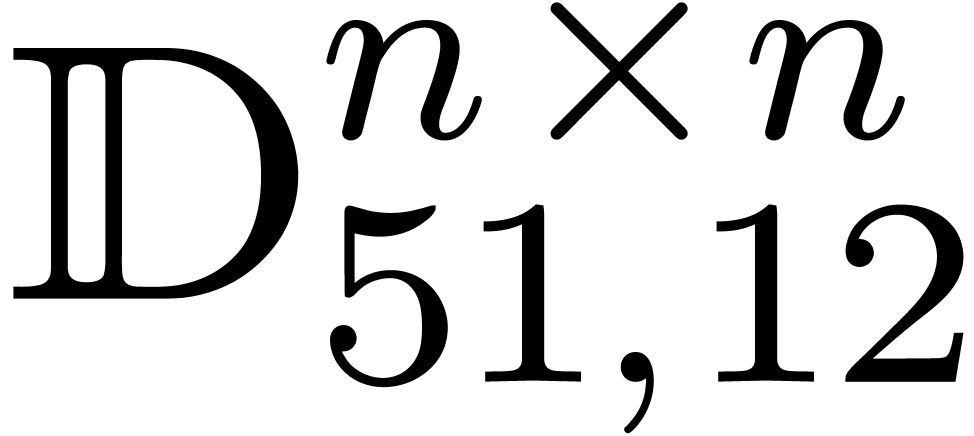

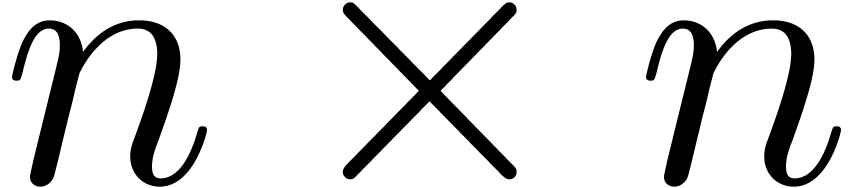

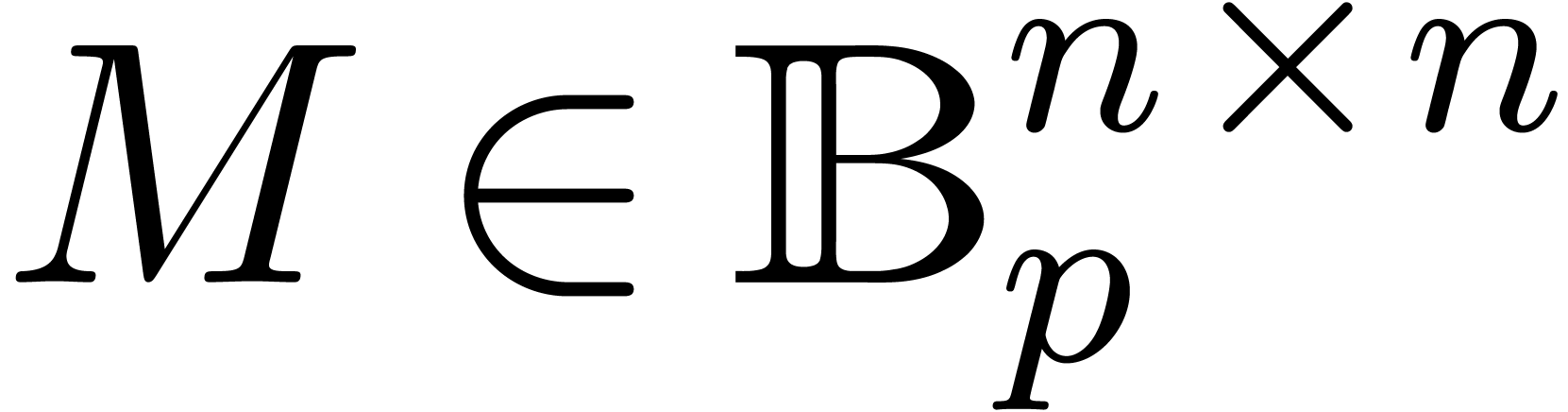

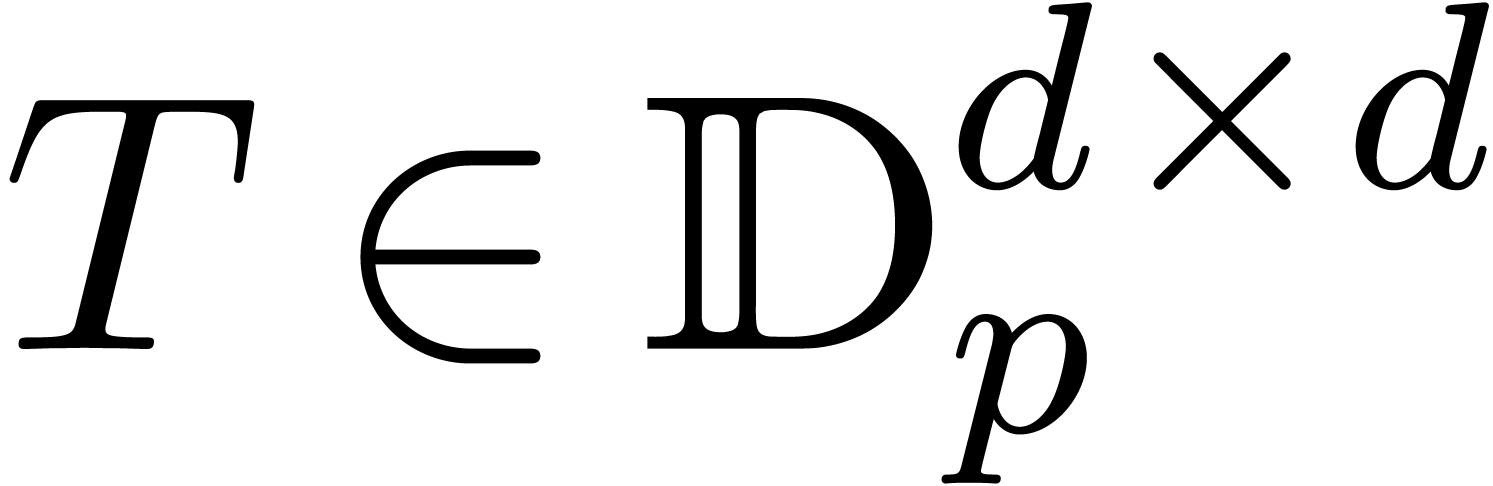

Let  denote the set of

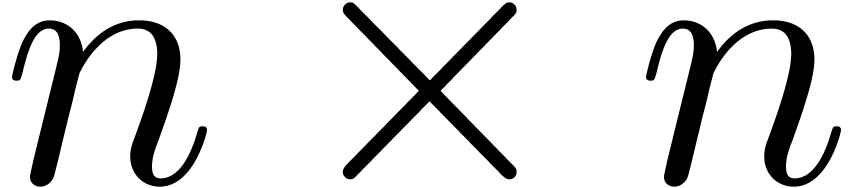

denote the set of  matrices with entries in

matrices with entries in  .

Given two

.

Given two  matrices

matrices  ,

the naive algorithm for computing

,

the naive algorithm for computing  requires

requires  ring operations with

ring operations with  .

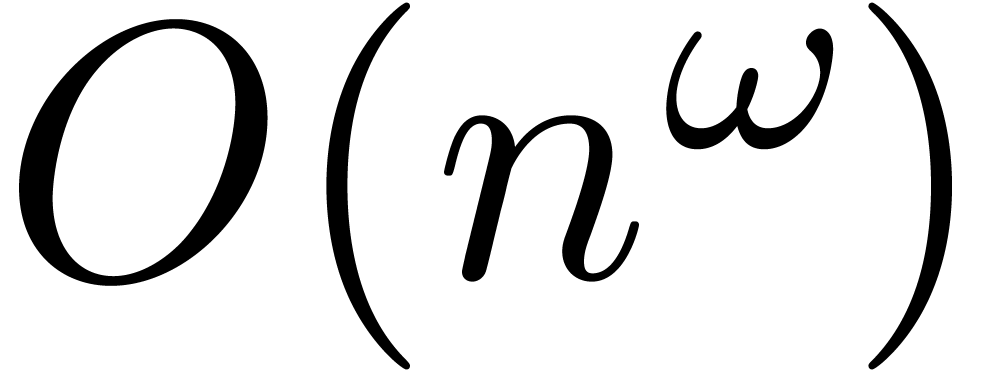

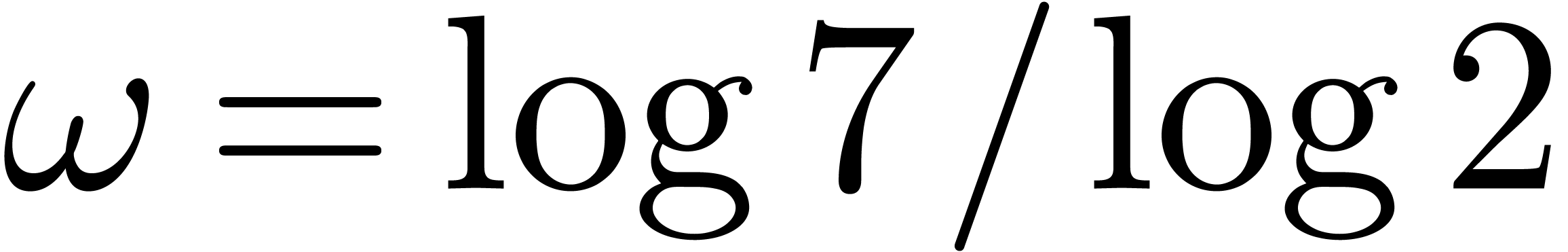

The exponent

.

The exponent  has been reduced by Strassen [Str69] to

has been reduced by Strassen [Str69] to  . Using

more sophisticated techniques,

. Using

more sophisticated techniques,  can be further

reduced to

can be further

reduced to  [Pan84, CW87].

However, this exponent has never been observed in practice.

[Pan84, CW87].

However, this exponent has never been observed in practice.

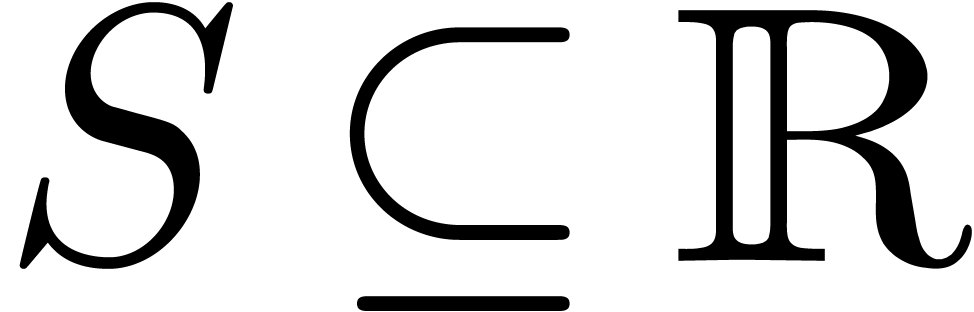

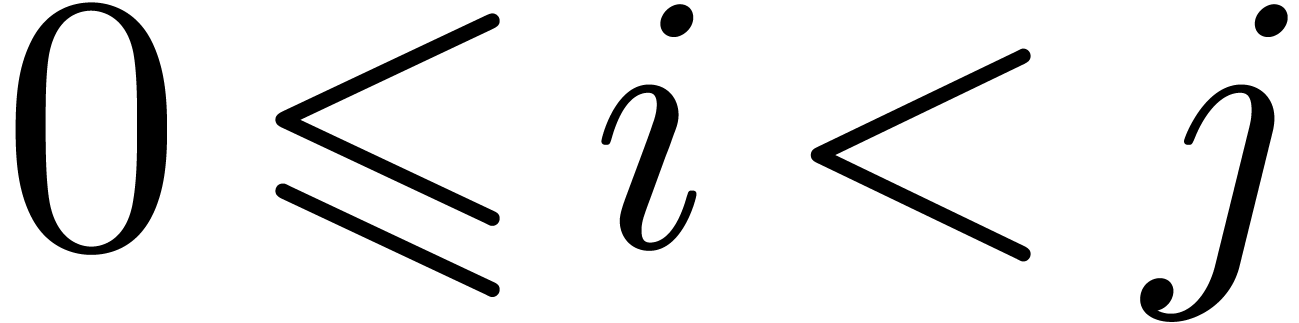

Given  in a totally ordered set

in a totally ordered set  , we will denote by

, we will denote by  the

closed interval

the

closed interval

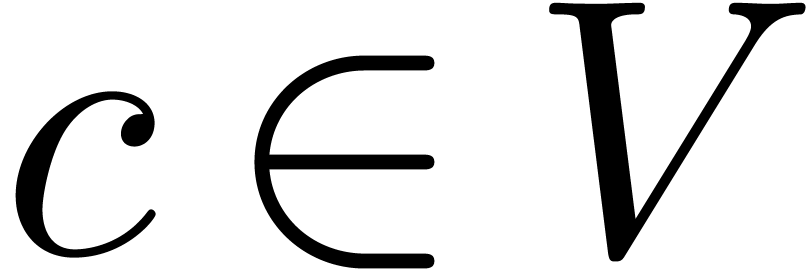

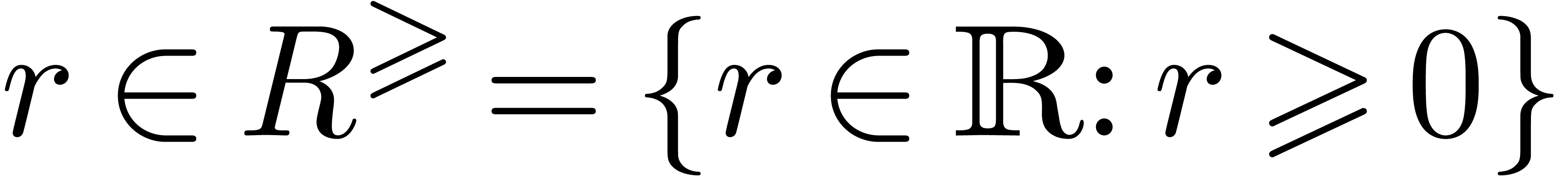

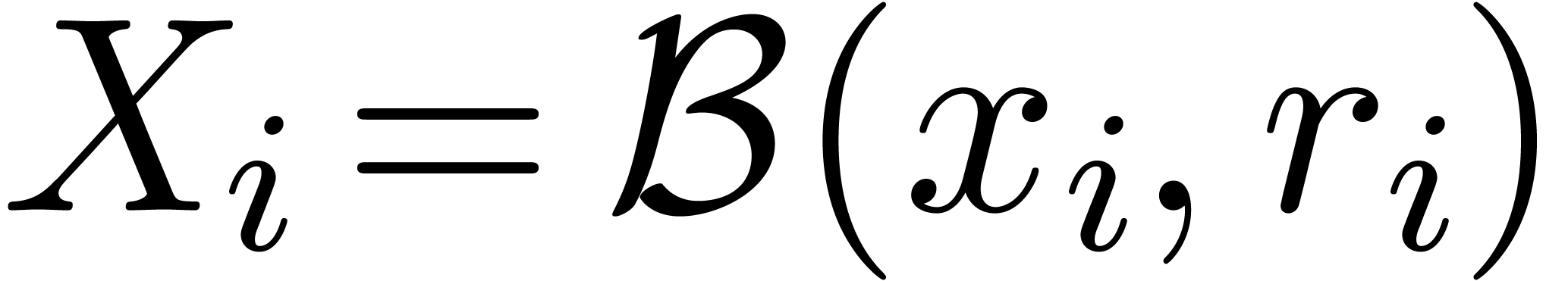

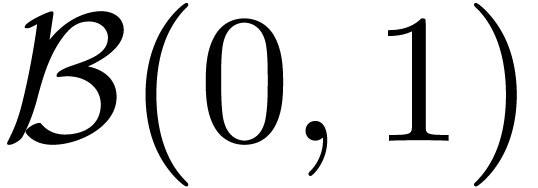

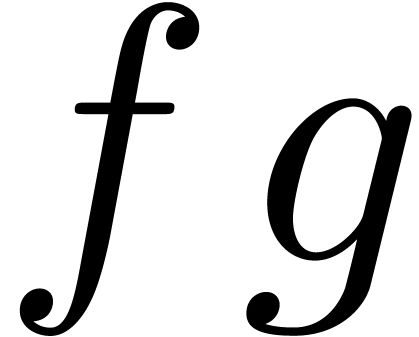

Assume now that  is a totally ordered field and

consider a normed vector space

is a totally ordered field and

consider a normed vector space  over

over  . Given

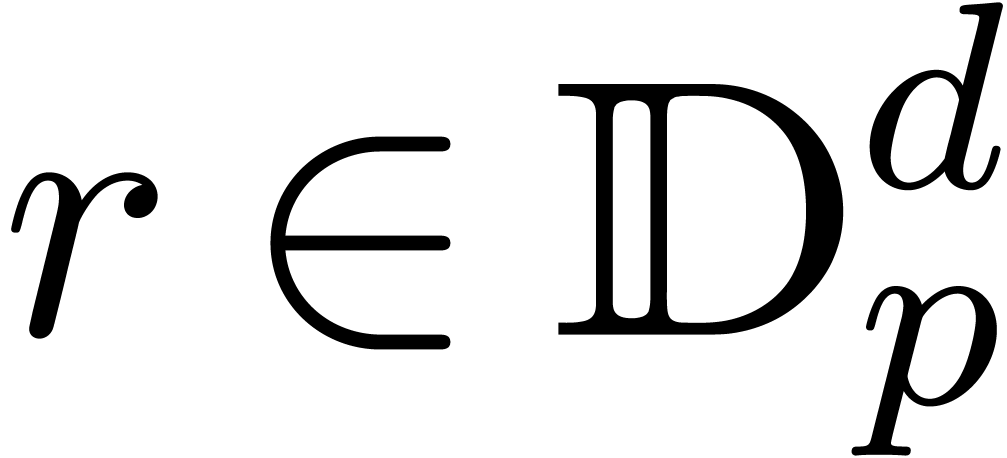

. Given  and

and  , we will denote by

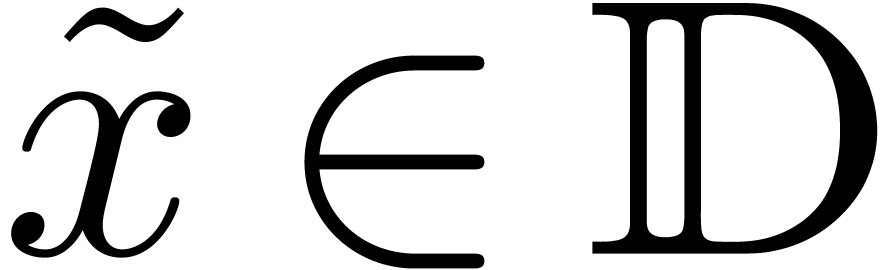

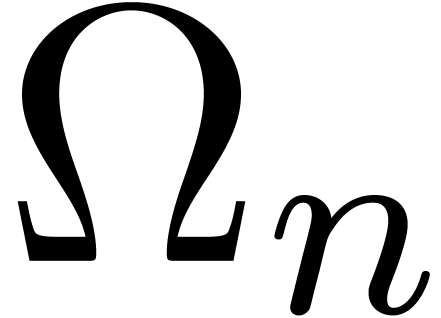

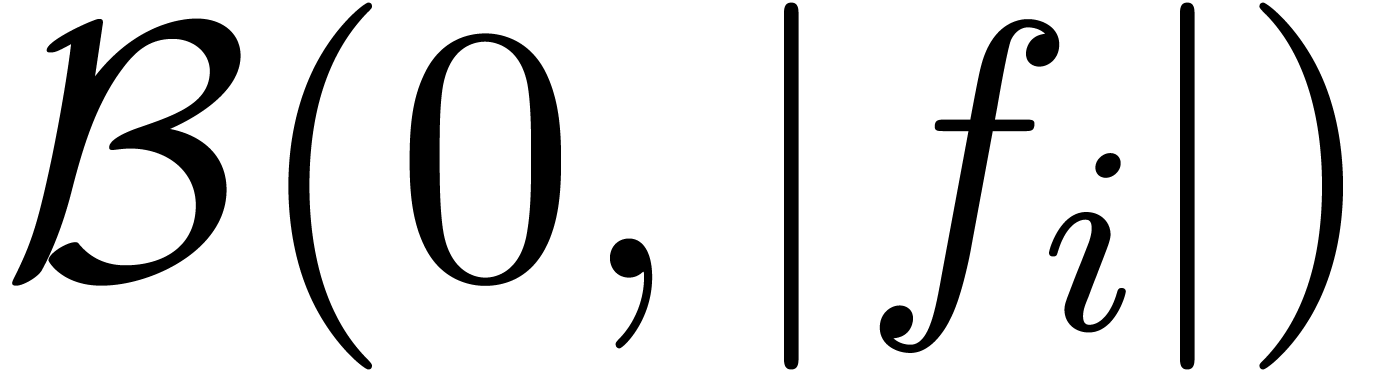

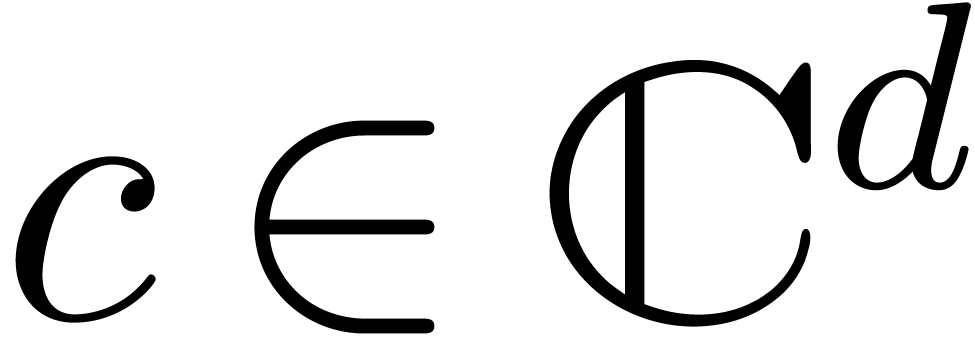

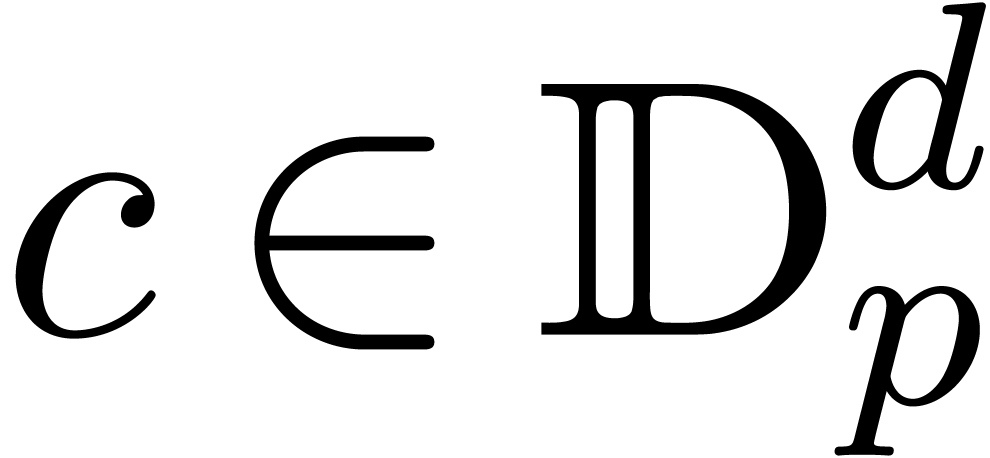

, we will denote by

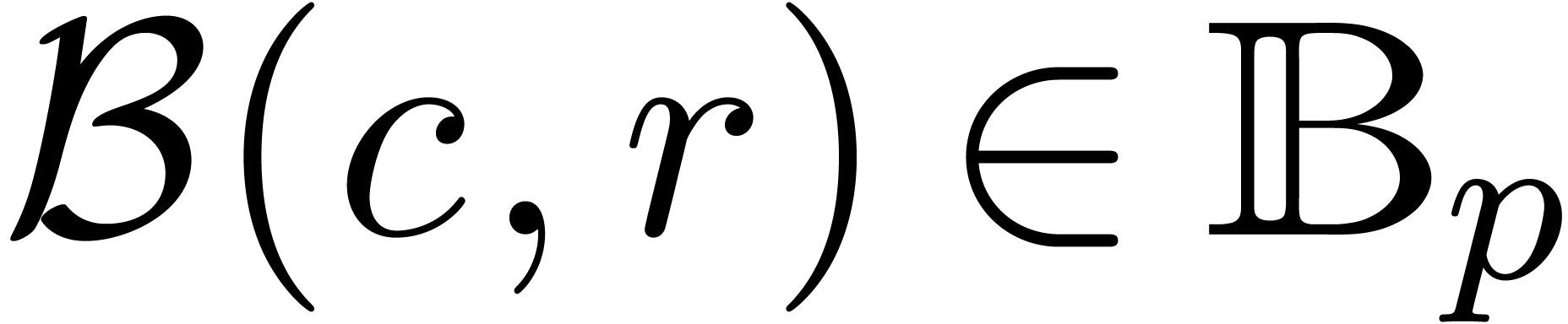

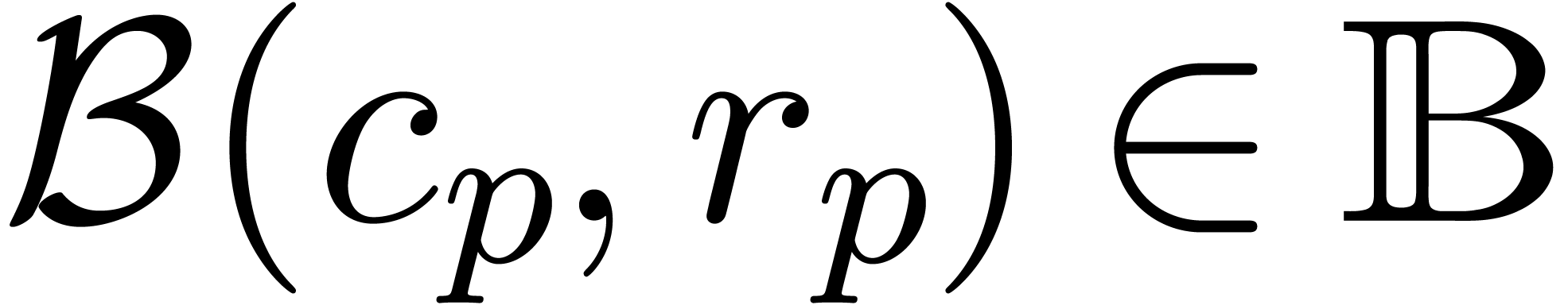

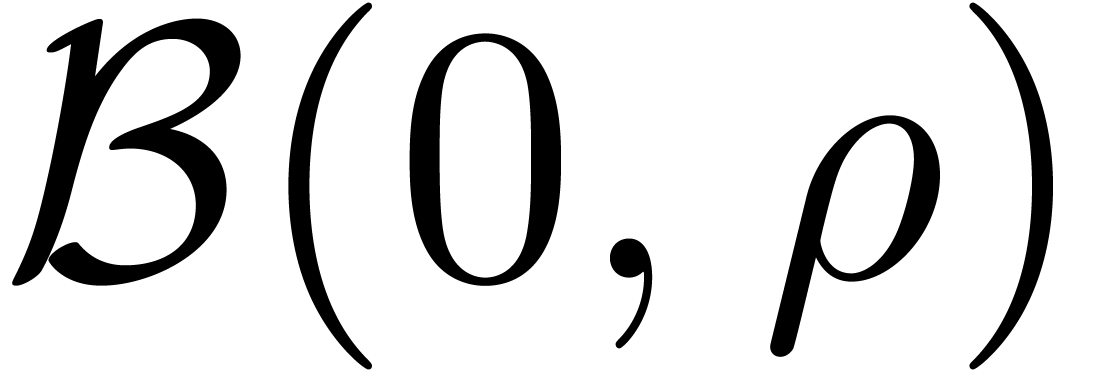

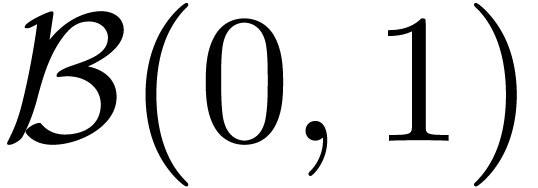

the closed ball with center  and radius

and radius  . Notice that we will never work

with open balls in what follows. We denote the set of all balls of the

form (2) by

. Notice that we will never work

with open balls in what follows. We denote the set of all balls of the

form (2) by  .

Given

.

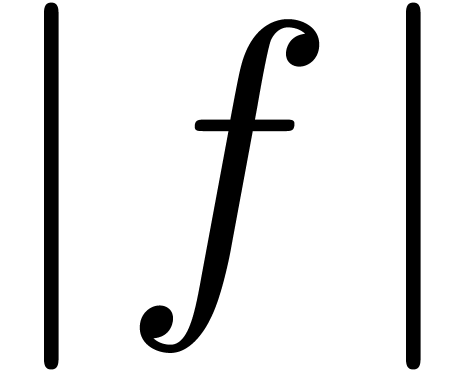

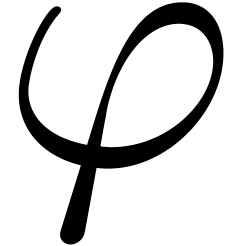

Given  , we will denote by

, we will denote by

and

and  the center

resp. radius of

the center

resp. radius of  .

.

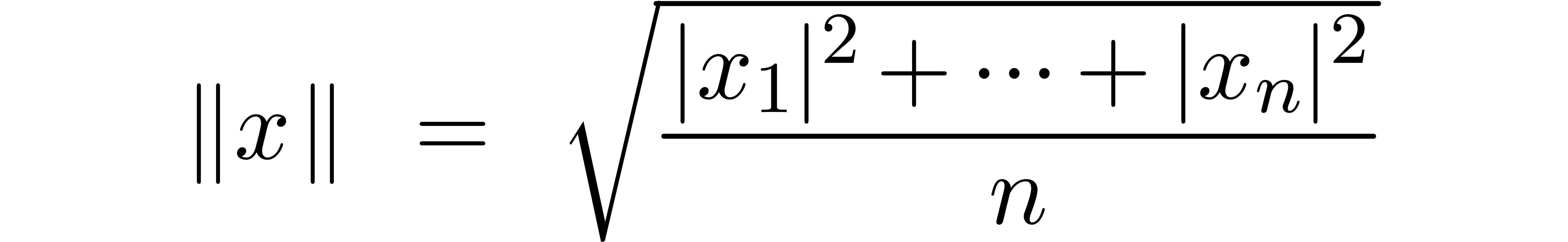

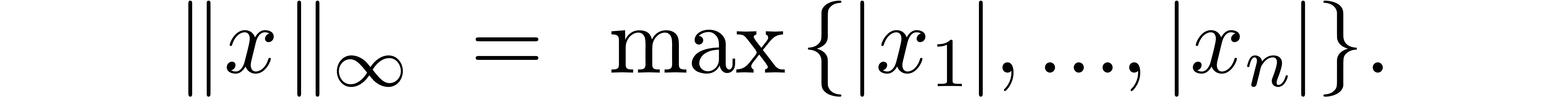

We will use the standard euclidean norm

as the default norm on  and

and  . Occasionally, we will also consider the

max-norm

. Occasionally, we will also consider the

max-norm

For matrices  or

or  ,

the default operator norm is defined by

,

the default operator norm is defined by

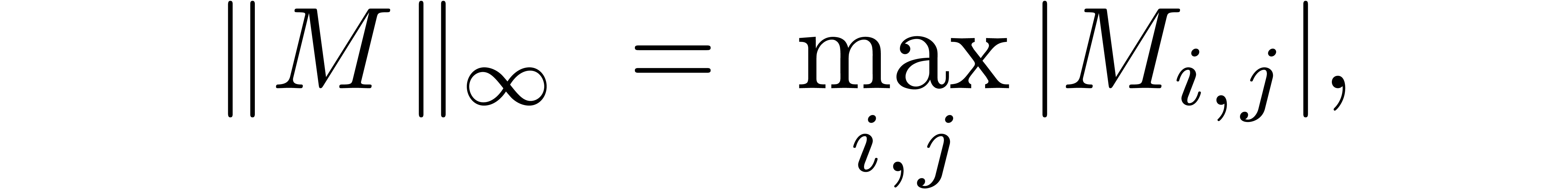

Occasionally, we will also consider the max-norm

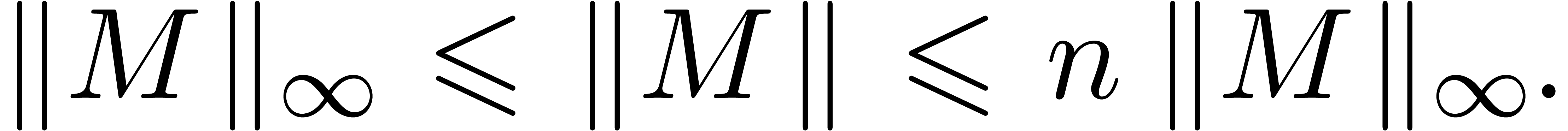

which satisfies

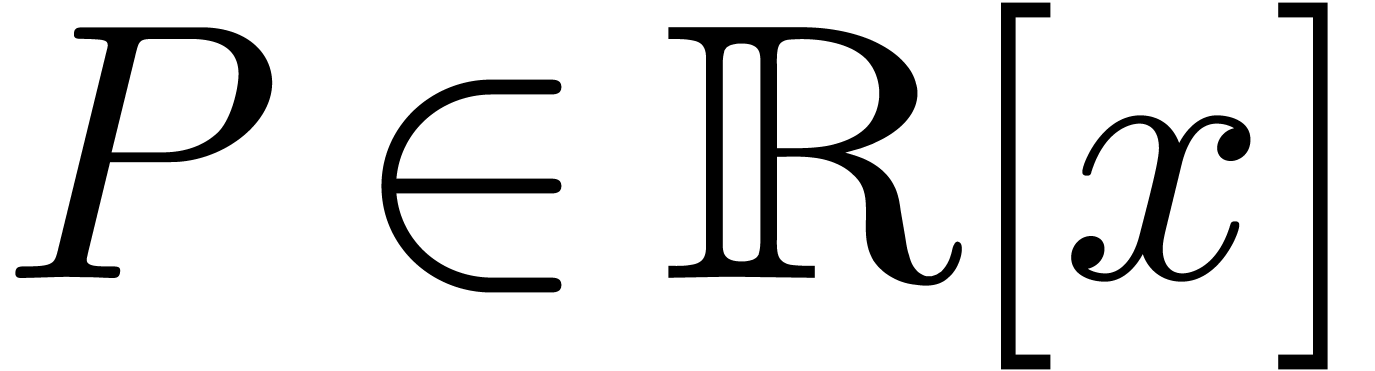

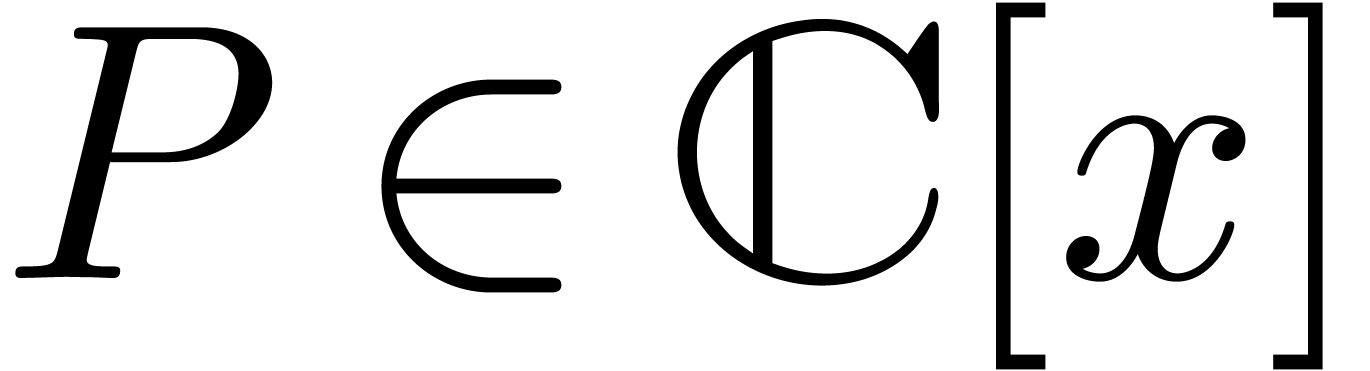

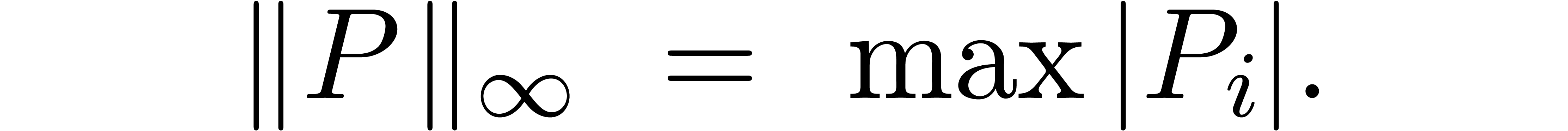

We also define the max-norm for polynomials  or

or

by

by

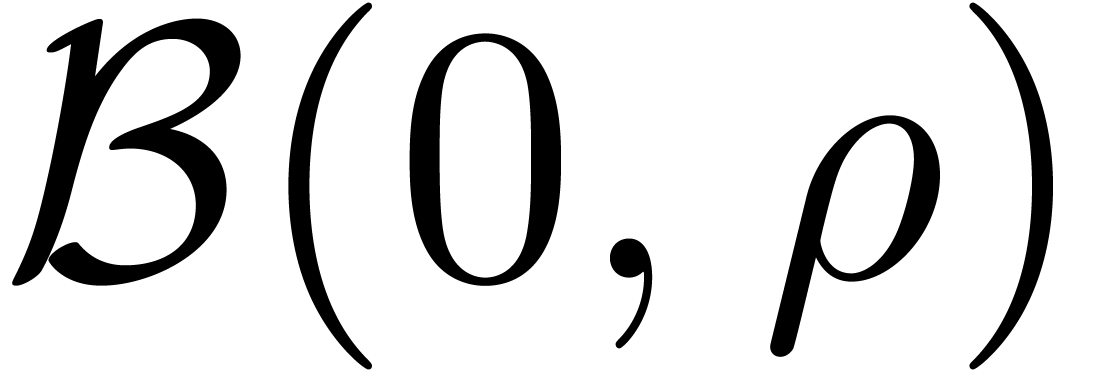

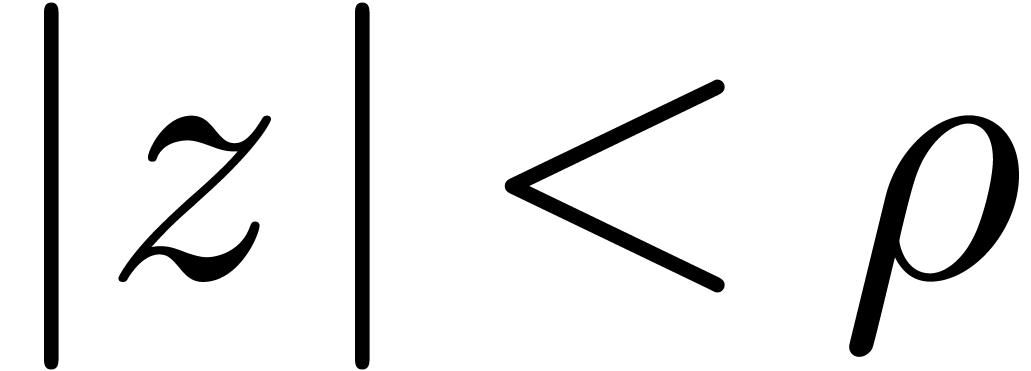

For power series  or

or  which converge on the compact disk

which converge on the compact disk  ,

we define the norm

,

we define the norm

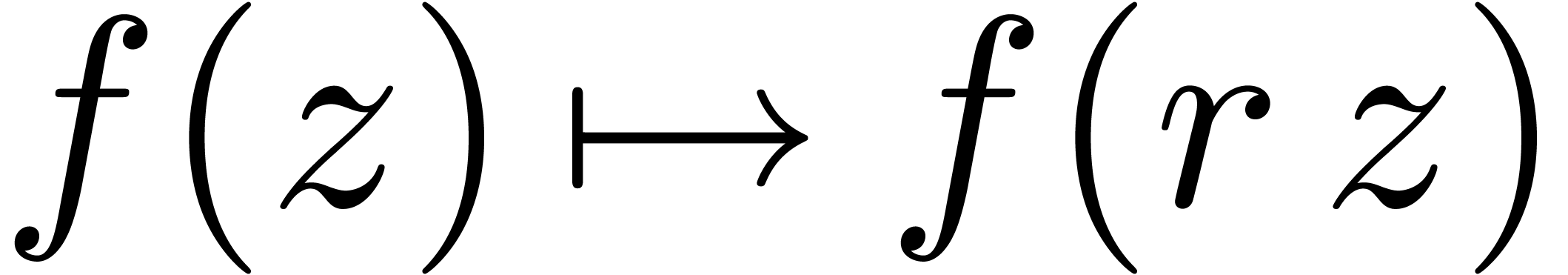

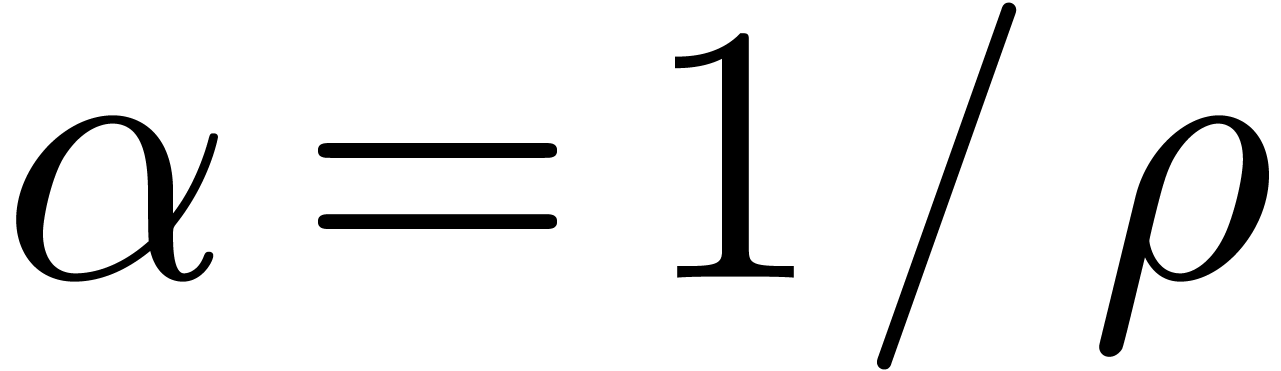

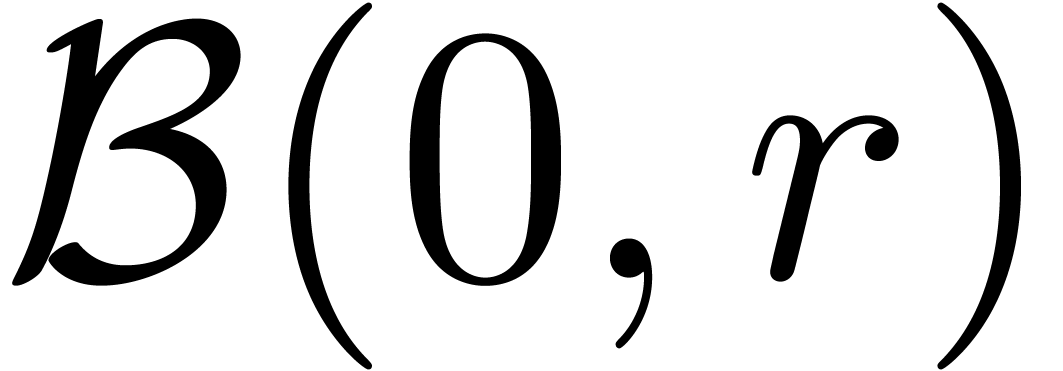

After rescaling  , we will

usually work with the norm

, we will

usually work with the norm  .

.

In some cases, it may be useful to generalize the concept of a ball and

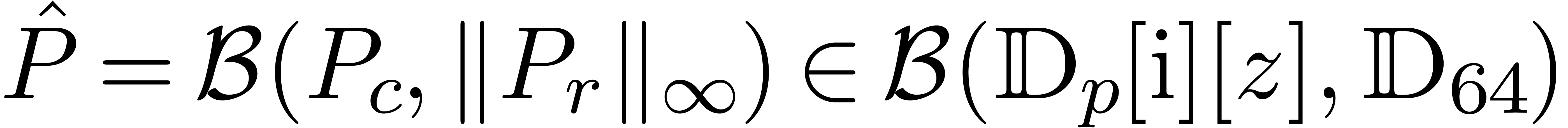

allow for radii in partially ordered rings. For instance, a ball  stands for the set

stands for the set

The sets  ,

,  ,

,  ,

etc. are defined in a similar component wise way. Given

,

etc. are defined in a similar component wise way. Given

, it will also be convenient

to denote by

, it will also be convenient

to denote by  the vector with

the vector with  . For matrices

. For matrices  ,

polynomials

,

polynomials  and power series

and power series  , we define

, we define  ,

,

and

and  similarly.

similarly.

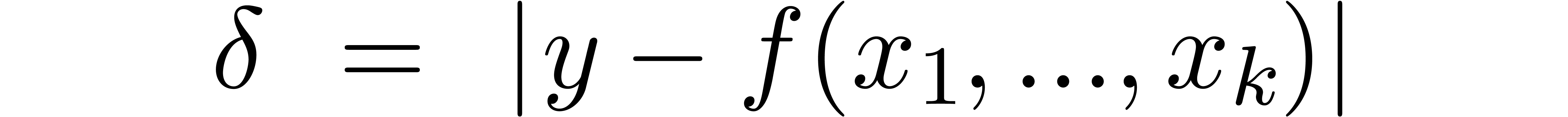

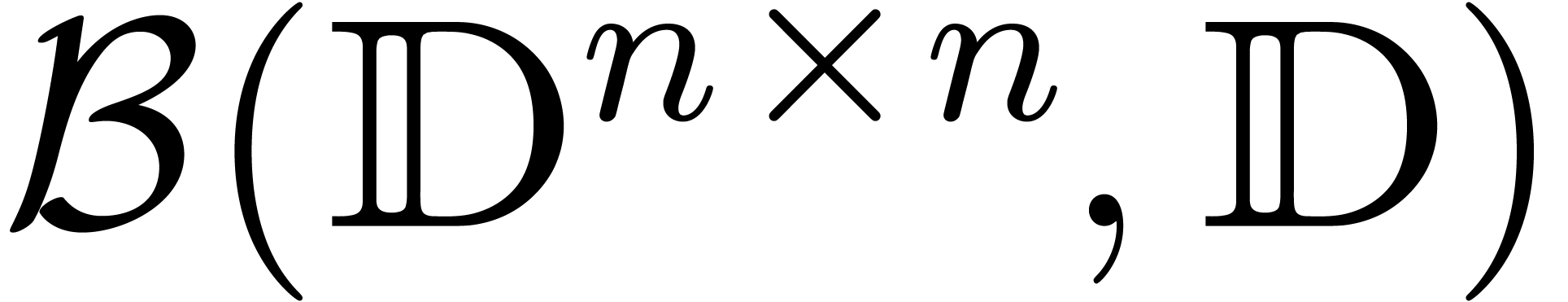

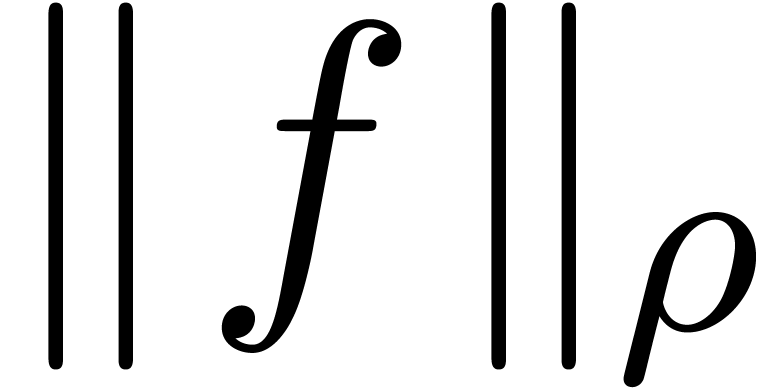

Let  be a normed vector space over

be a normed vector space over  and recall that

and recall that  stands for the set

of all balls with centers in

stands for the set

of all balls with centers in  and radii in

and radii in  . Given an operation

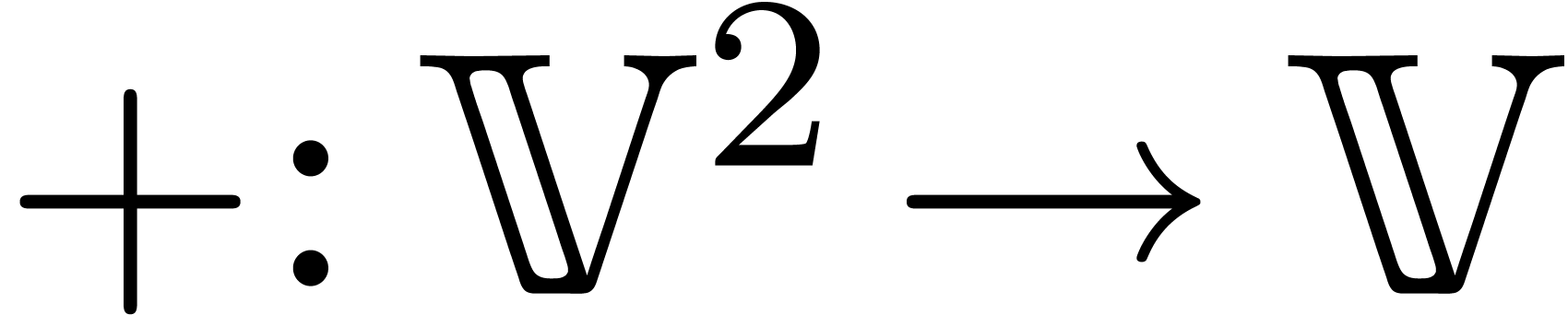

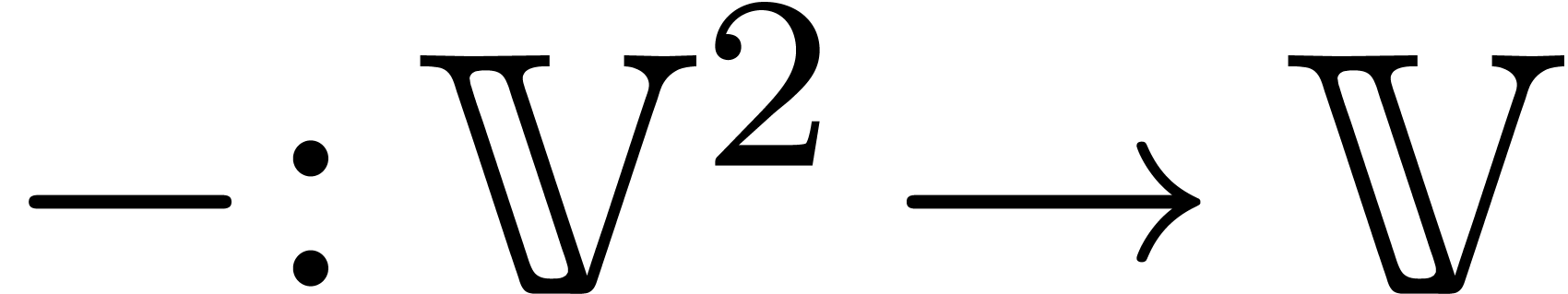

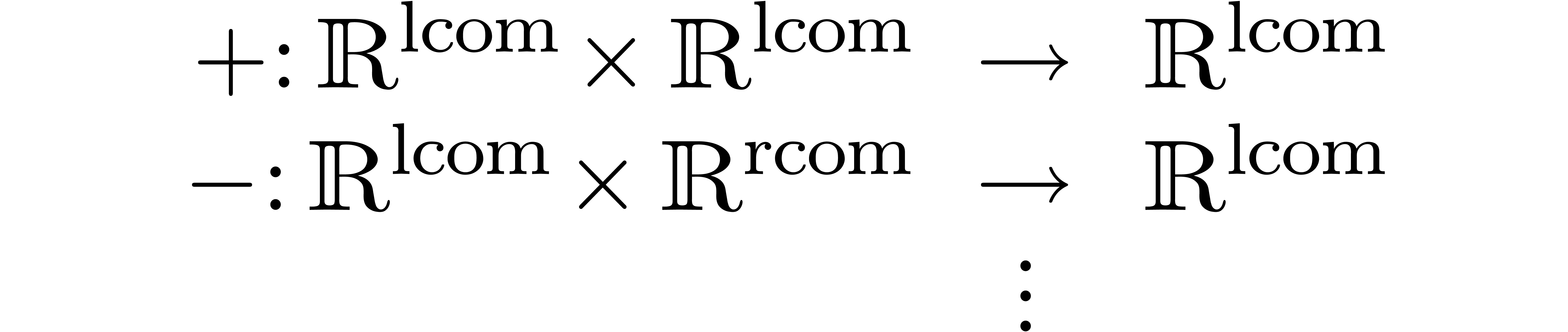

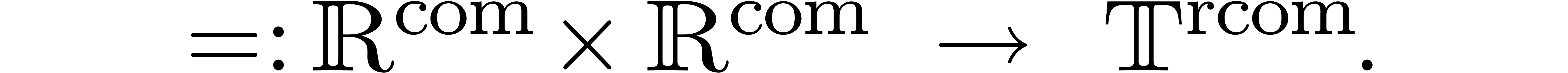

. Given an operation  , the operation is said to lift to an operation

, the operation is said to lift to an operation

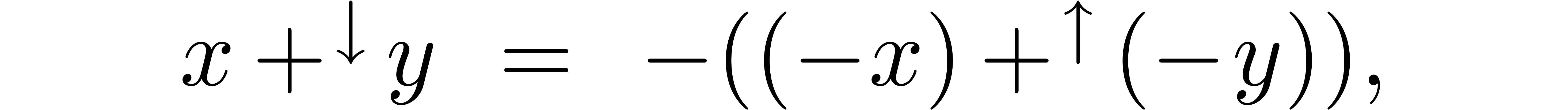

if we have

for all  . For instance, both

the addition

. For instance, both

the addition  and subtraction

and subtraction  admits lifts

admits lifts

Similarly, if  is a normed algebra, then the

multiplication lifts to

is a normed algebra, then the

multiplication lifts to

The lifts  ,

,  ,

,  ,

etc. are also said to be the ball arithmetic counterparts

of addition, subtractions, multiplication, etc.

,

etc. are also said to be the ball arithmetic counterparts

of addition, subtractions, multiplication, etc.

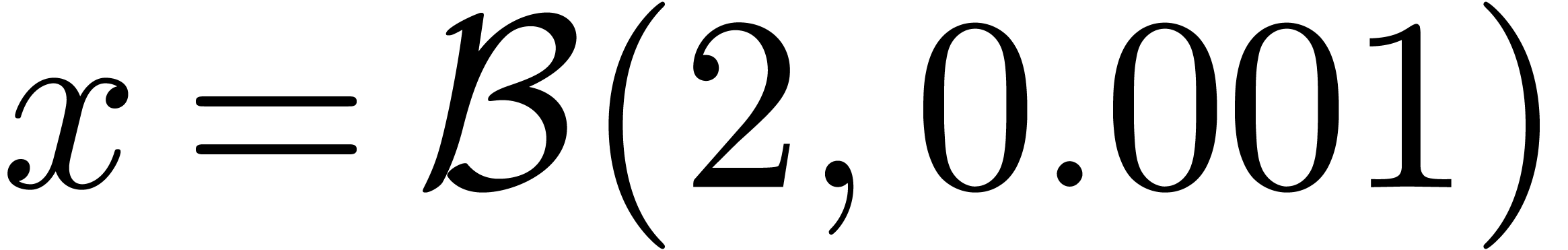

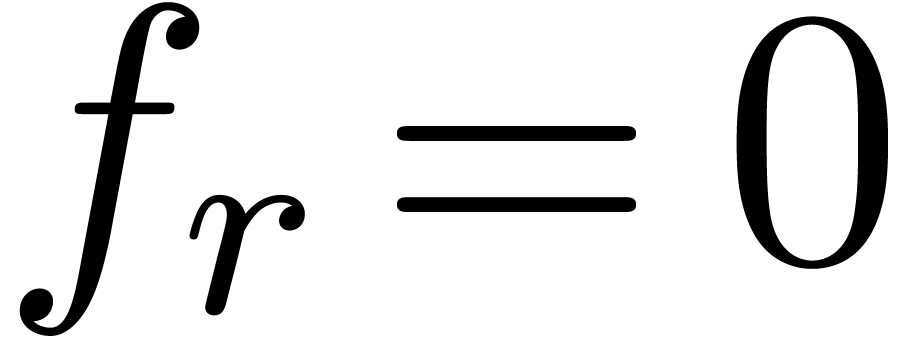

Ball arithmetic is a systematic way for the computation of error bounds

when the input of a numerical operation is known only approximately.

These bounds are usually not sharp. For instance, consider the

mathematical function  which evaluates to zero

everywhere, even if

which evaluates to zero

everywhere, even if  is only approximately known.

However, taking

is only approximately known.

However, taking  , we have

, we have

. This phenomenon is known as

overestimation. In general, ball algorithms have to be designed

carefully so as to limit overestimation.

. This phenomenon is known as

overestimation. In general, ball algorithms have to be designed

carefully so as to limit overestimation.

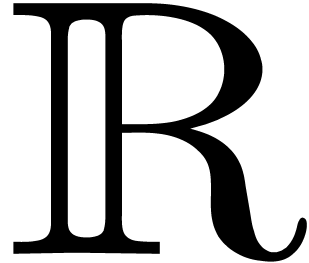

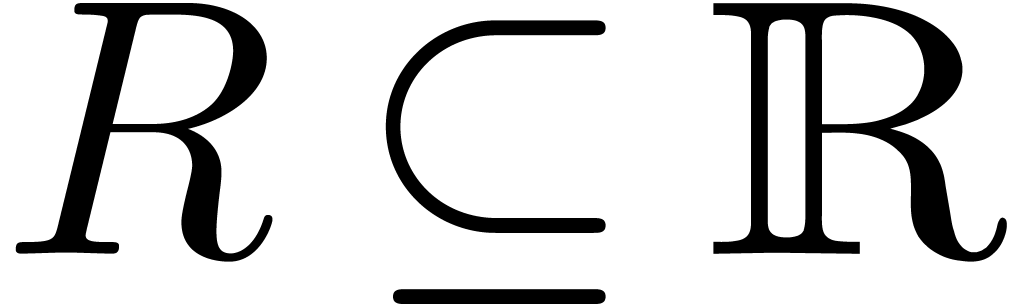

In the above definitions,  can be replaced by a

subfield

can be replaced by a

subfield  ,

,  by an

by an  -vector space

-vector space  , and the domain of

, and the domain of  by an open subset of

by an open subset of  .

If

.

If  and

and  are

effective in the sense that we have algorithms for the

additions, subtractions and multiplications in

are

effective in the sense that we have algorithms for the

additions, subtractions and multiplications in  and

and  , then basic ball

arithmetic in

, then basic ball

arithmetic in  is again effective. If we are

working with finite precision floating point numbers in

is again effective. If we are

working with finite precision floating point numbers in  rather than a genuine effective subfield

rather than a genuine effective subfield  ,

we will now show how to adapt the formulas (5), (6)

and (7) in order to take into account rounding errors; it

may also be necessary to allow for an infinite radius in this case.

,

we will now show how to adapt the formulas (5), (6)

and (7) in order to take into account rounding errors; it

may also be necessary to allow for an infinite radius in this case.

Let us detail what needs to be changed when using IEEE conform finite

precision arithmetic, say  .

We will denote

.

We will denote

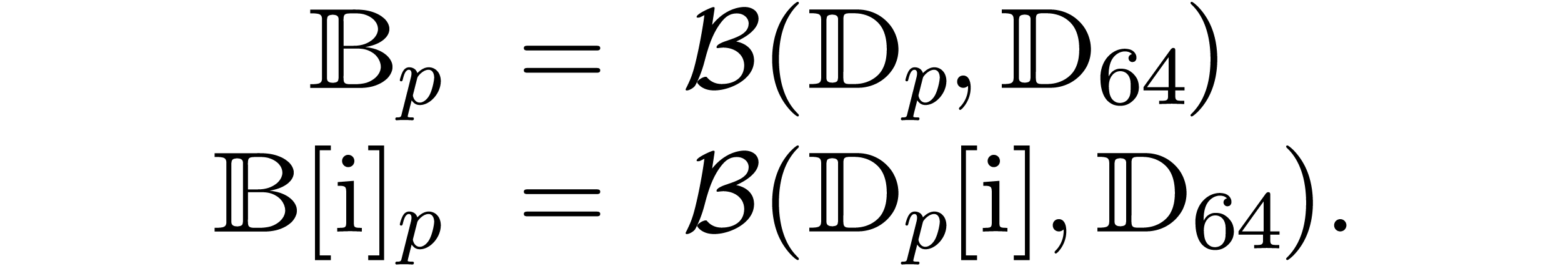

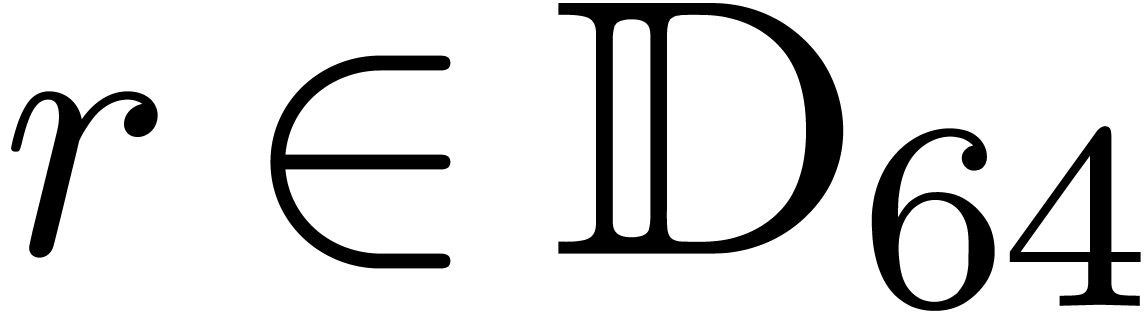

When working with multiple precision numbers, it usually suffices to use

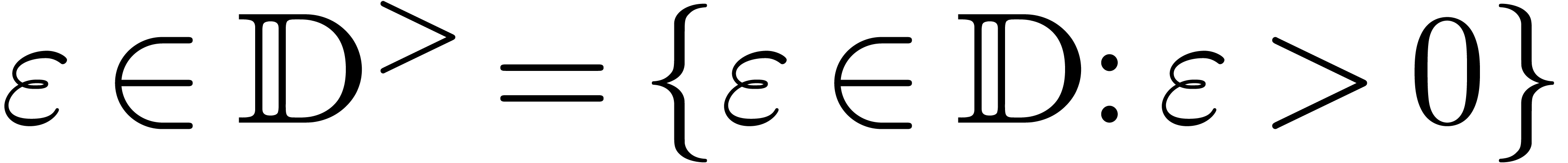

low precision numbers for the radius type. Recalling that  , we will therefore denote

, we will therefore denote

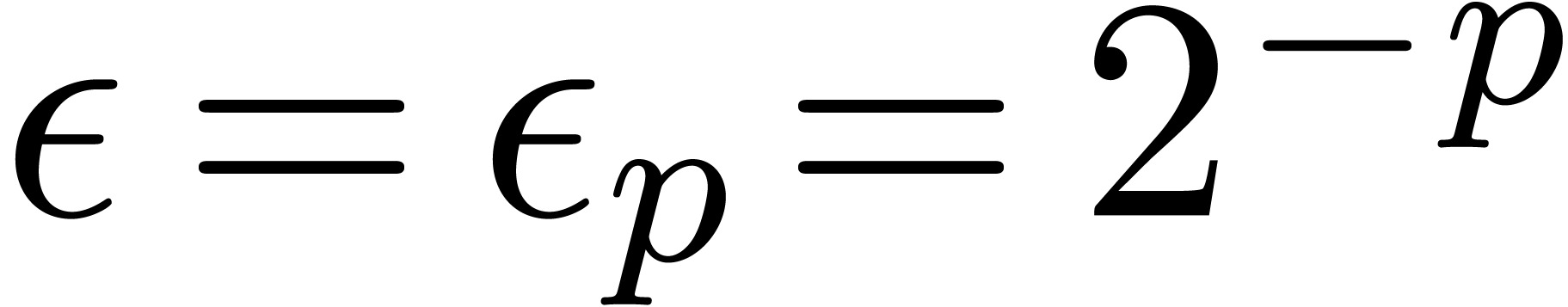

We will write  for the machine accuracy and

for the machine accuracy and  for the smallest representable positive number in

for the smallest representable positive number in

.

.

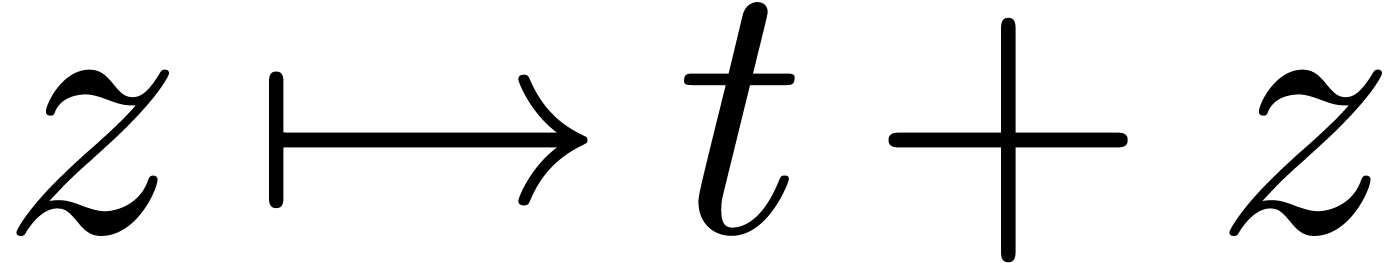

Given an operation  as in section 3.1,

together with balls

as in section 3.1,

together with balls  , it is

natural to compute the center

, it is

natural to compute the center  of

of

by rounding to the nearest:

One interesting point is that the committed error

does not really depend on the operation  itself:

we have the universal upper bound

itself:

we have the universal upper bound

It would be useful if this adjustment function  were present in the hardware.

were present in the hardware.

For the computation of the radius  ,

it now suffices to use the sum of

,

it now suffices to use the sum of  and the

theoretical bound formulas for the infinite precision case. For

instance,

and the

theoretical bound formulas for the infinite precision case. For

instance,

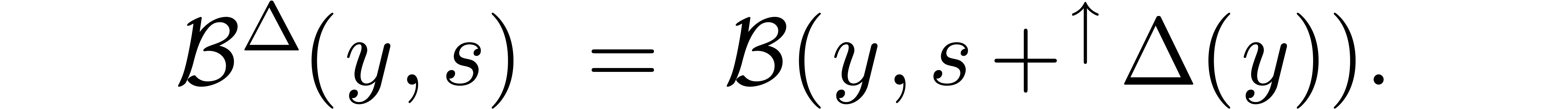

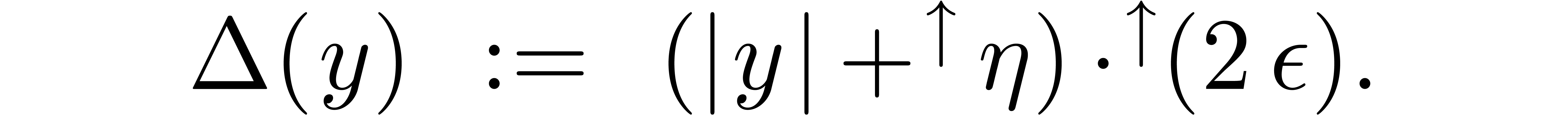

where  stands for the “adjusted

constructor”

stands for the “adjusted

constructor”

The approach readily generalizes to other “normed vector

spaces”  over

over  ,

as soon as one has a suitable rounded arithmetic in

,

as soon as one has a suitable rounded arithmetic in  and a suitable adjustment function

and a suitable adjustment function  attached to

it.

attached to

it.

Notice that  , if

, if  or

or  is the largest representable

real number in

is the largest representable

real number in  .

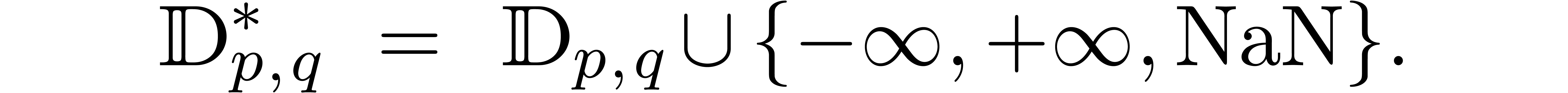

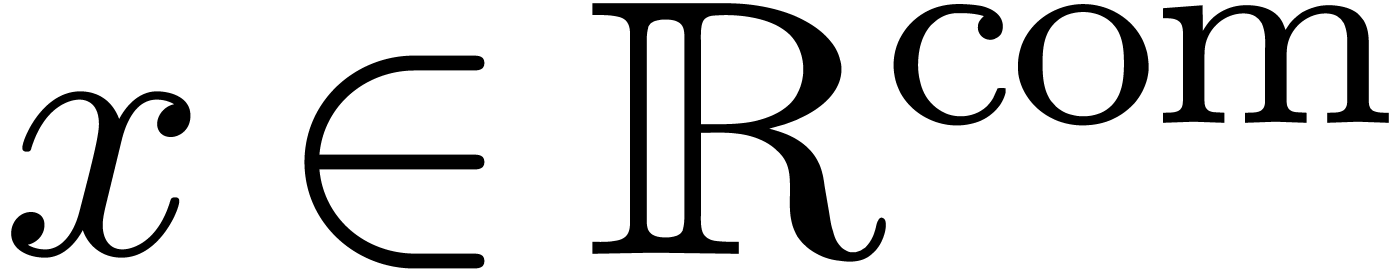

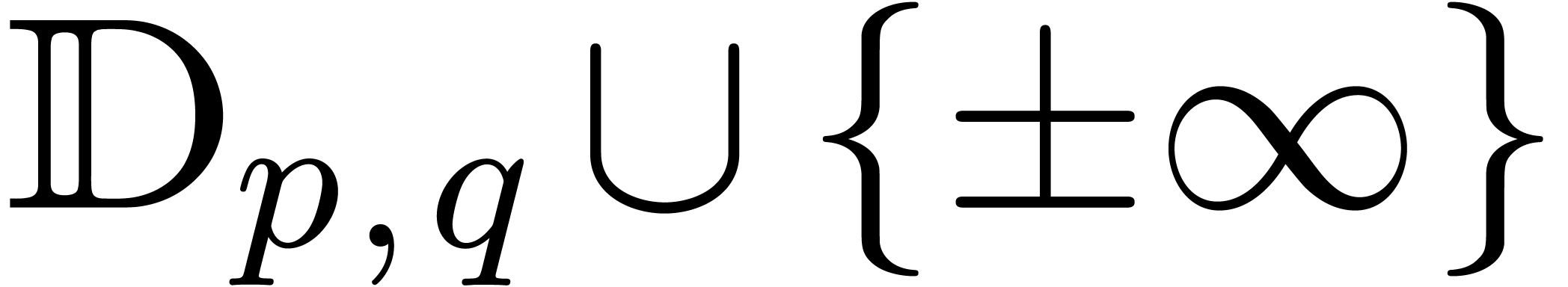

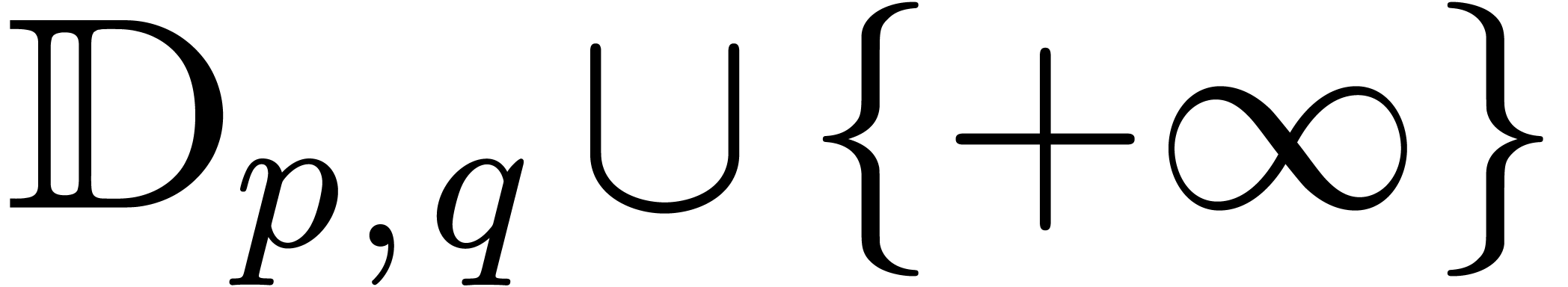

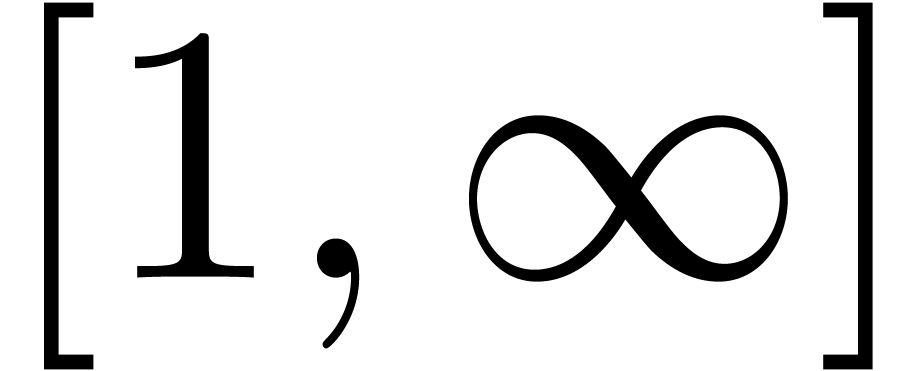

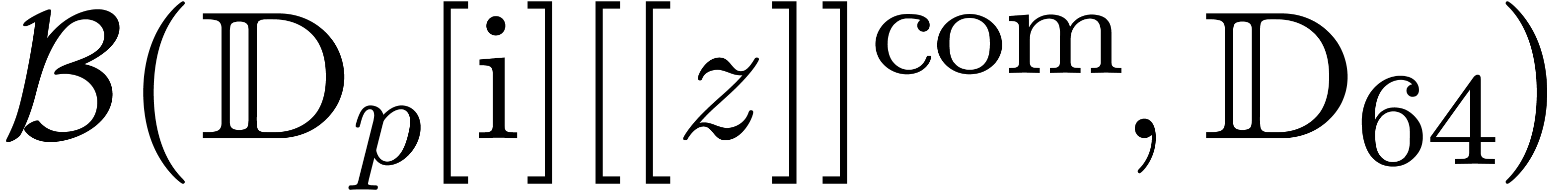

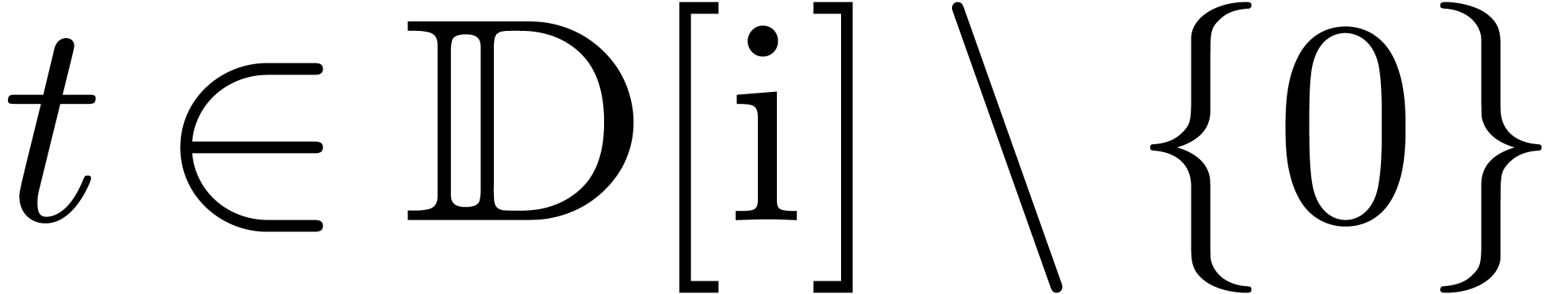

Consequently, the finite precision ball computations naturally take

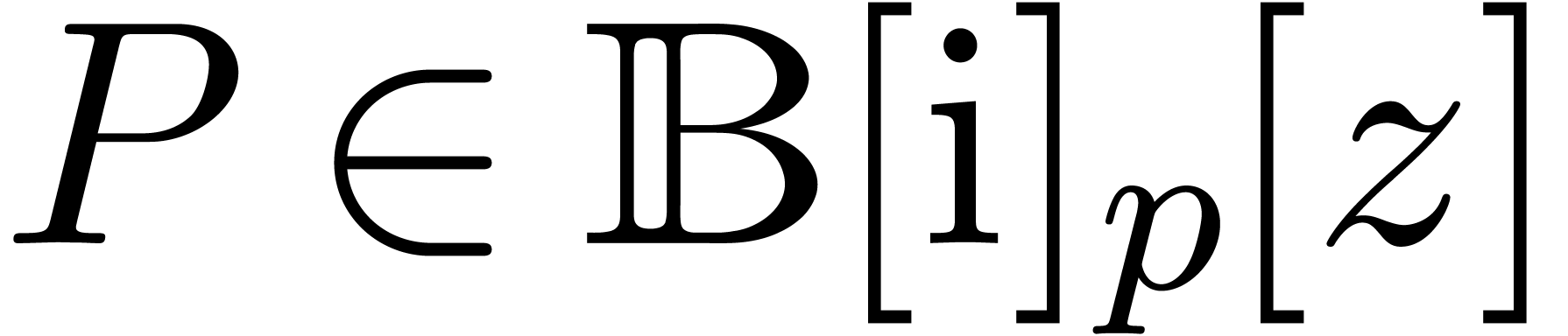

place in domains of the form

.

Consequently, the finite precision ball computations naturally take

place in domains of the form  rather than

rather than  . Of course, balls with infinite

radius carry no useful information about the result. In order to ease

the reading, we will assume the absence of overflows in what follows,

and concentrate on computations with ordinary numbers in

. Of course, balls with infinite

radius carry no useful information about the result. In order to ease

the reading, we will assume the absence of overflows in what follows,

and concentrate on computations with ordinary numbers in  . We will only consider infinities if they are

used in an essential way during the computation.

. We will only consider infinities if they are

used in an essential way during the computation.

Similarly, if we want ball arithmetic to be a natural extension of the

IEEE 754 norm, then we need an equivalent of  . One approach consists of introducing a

. One approach consists of introducing a  (not a ball) object, which could be represented by

(not a ball) object, which could be represented by  or

or  . A

ball function

. A

ball function  returns

returns  if

if

returns

returns  for one

selection of members of the input balls. For instance,

for one

selection of members of the input balls. For instance,  . An alternative approach consists of the

attachment of an additional flag to each ball object, which signals a

possible invalid outcome. Following this convention,

. An alternative approach consists of the

attachment of an additional flag to each ball object, which signals a

possible invalid outcome. Following this convention,  yields

yields  .

.

Using the formulas from the previous section, it is relatively

straightforward to implement ball arithmetic as a C++ template library,

as we have done in the

Multiple precision libraries such as

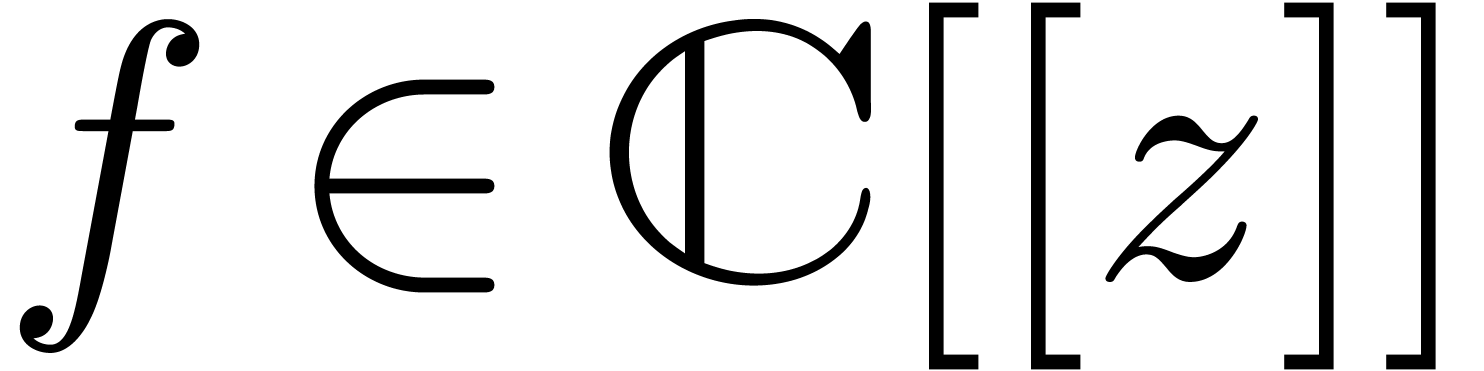

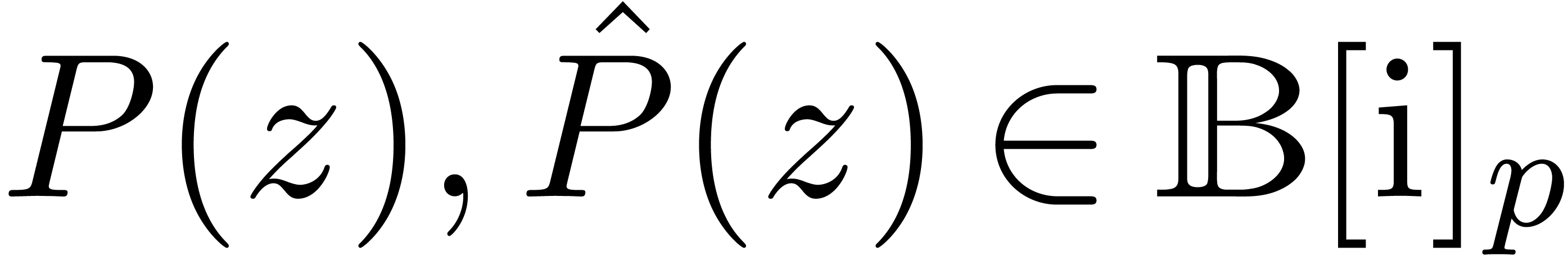

When computing with complex numbers  ,

one may again save several function calls. Moreover, it is possible

to regard

,

one may again save several function calls. Moreover, it is possible

to regard  as an element of

as an element of  rather than

rather than  ,

i.e. use a single exponent for both the real and

imaginary parts of

,

i.e. use a single exponent for both the real and

imaginary parts of  . This

optimization reduces the time spent on exponent computations and

mantissa normalizations.

. This

optimization reduces the time spent on exponent computations and

mantissa normalizations.

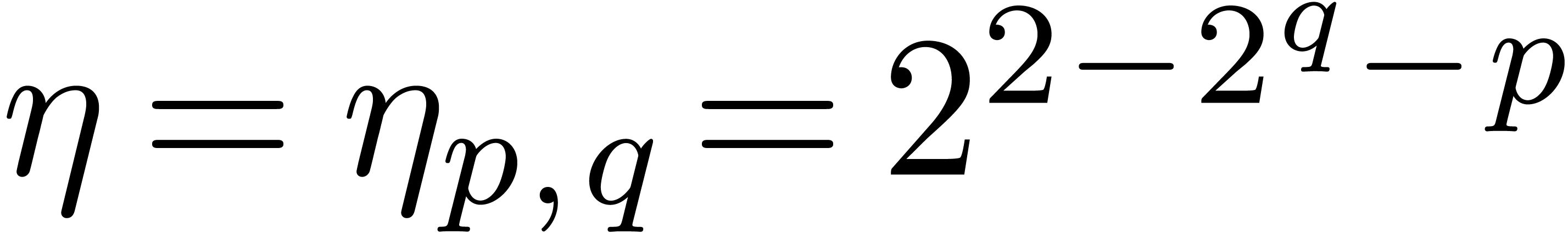

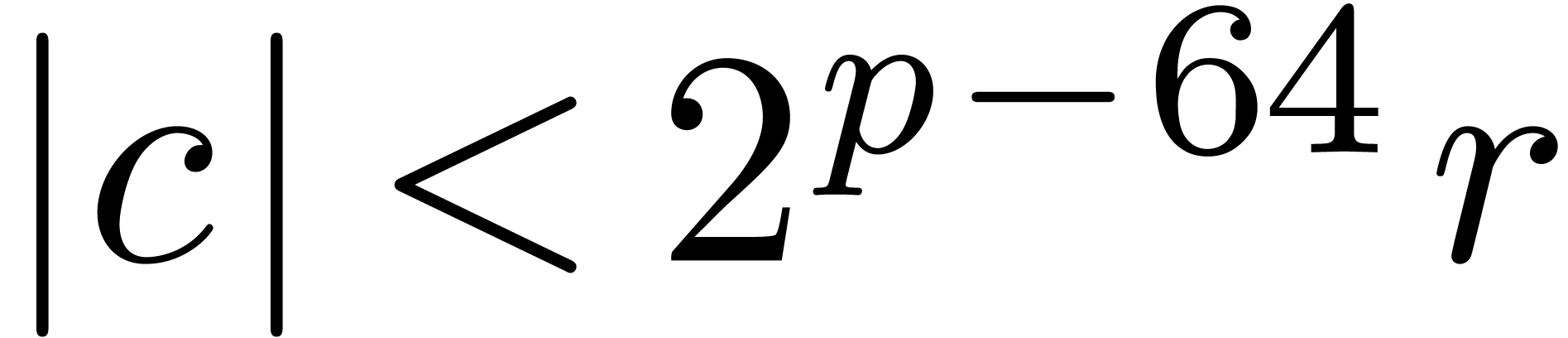

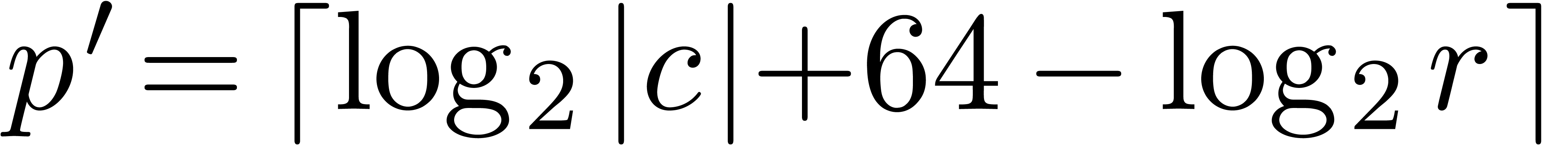

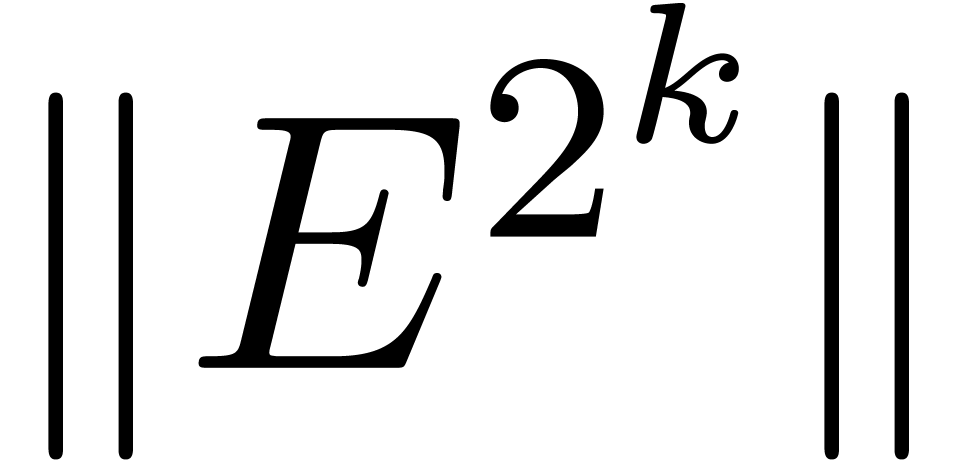

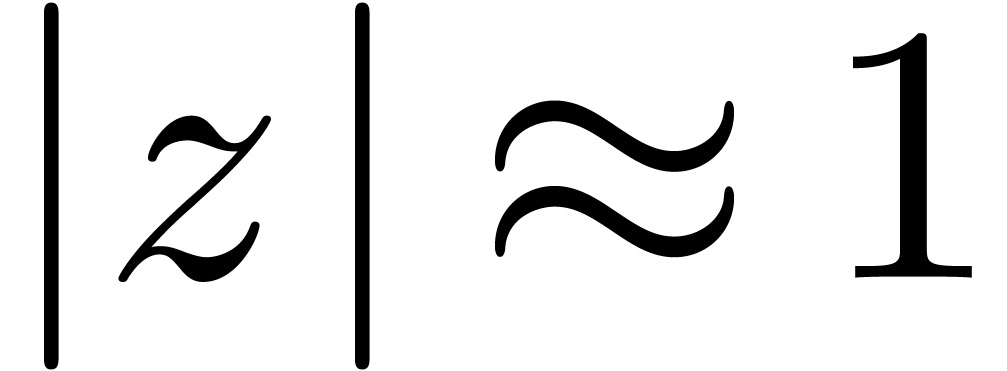

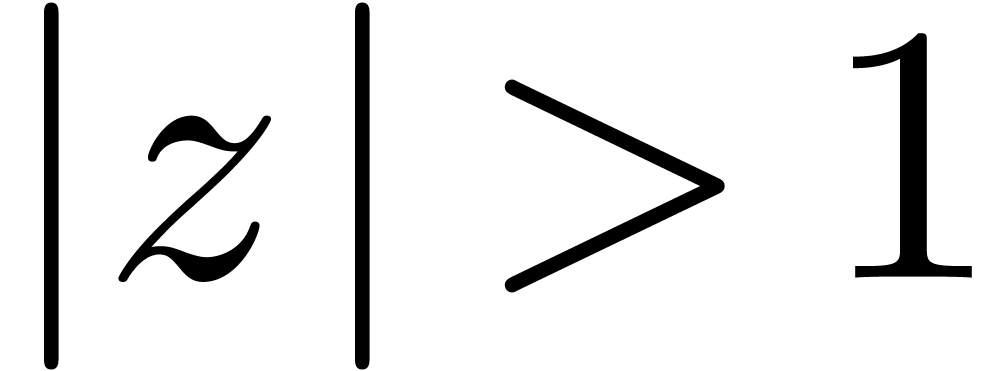

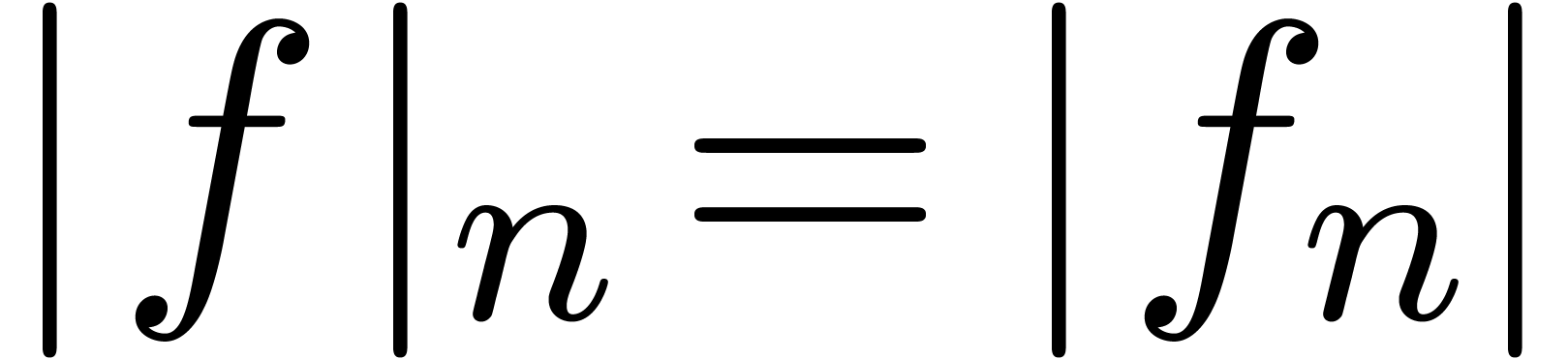

Consider a ball  and recall that

and recall that  ,

,  .

If

.

If  , then the

, then the  least significant binary digits of

least significant binary digits of  are of little interest. Hence, we may replace

are of little interest. Hence, we may replace  by its closest approximation in

by its closest approximation in  ,

with

,

with  , and reduce the

working precision to

, and reduce the

working precision to  .

Modulo slightly more work, it is also possible to share the

exponents of the center and the radius.

.

Modulo slightly more work, it is also possible to share the

exponents of the center and the radius.

If we don't need large exponents for our multiple precision numbers,

then it is possible to use machine doubles  as our radius type and further reduce the overhead of bound

computations.

as our radius type and further reduce the overhead of bound

computations.

When combining the above optimizations, it can be hoped that multiple precision ball arithmetic can be implemented almost as efficiently as standard multiple precision arithmetic. However, this requires a significantly higher implementation effort.

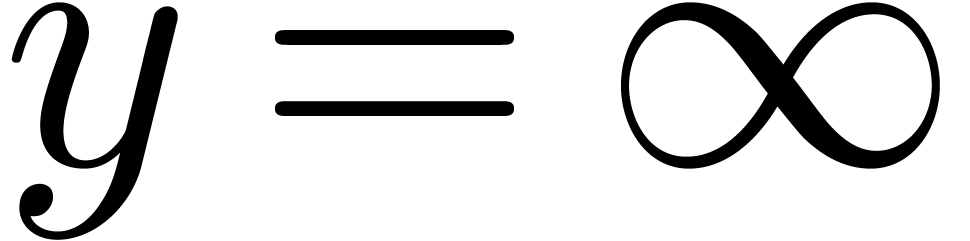

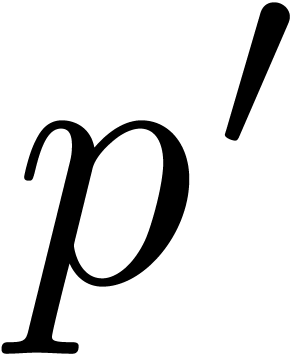

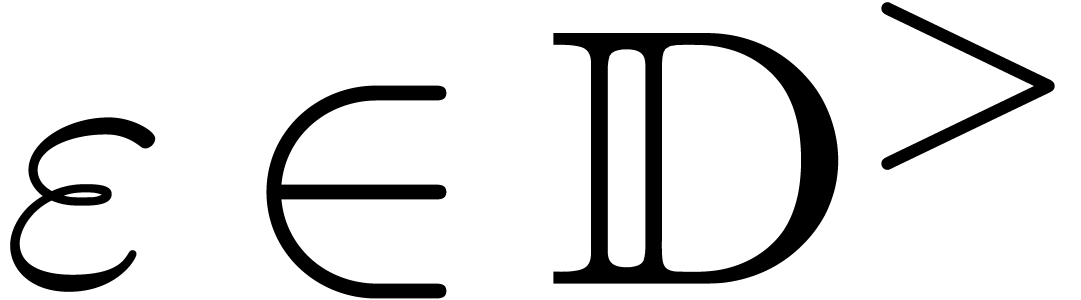

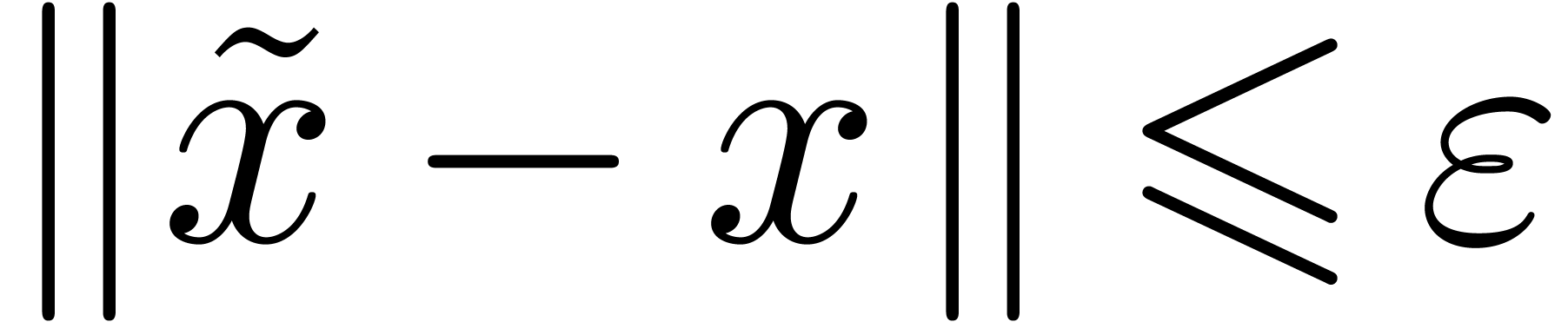

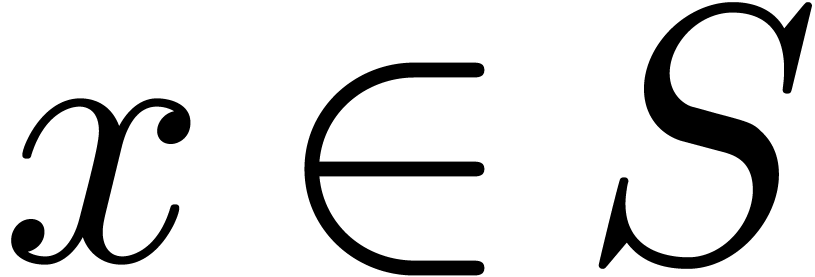

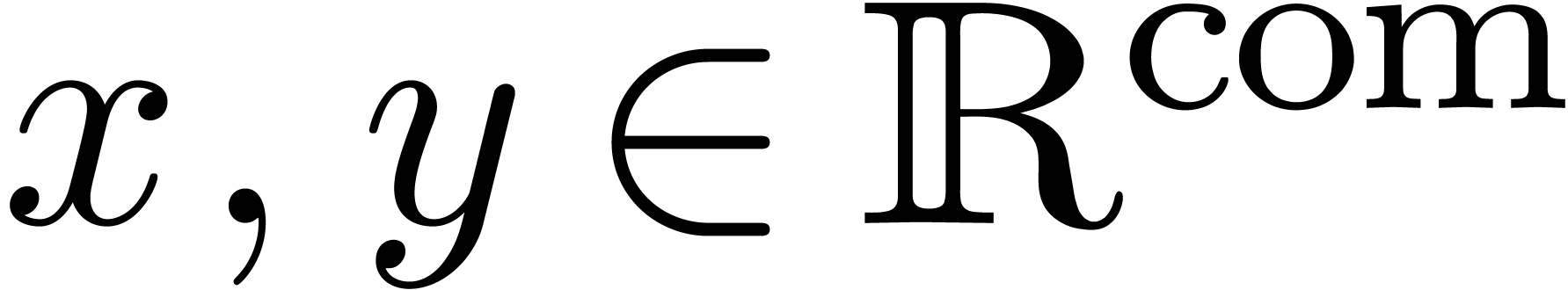

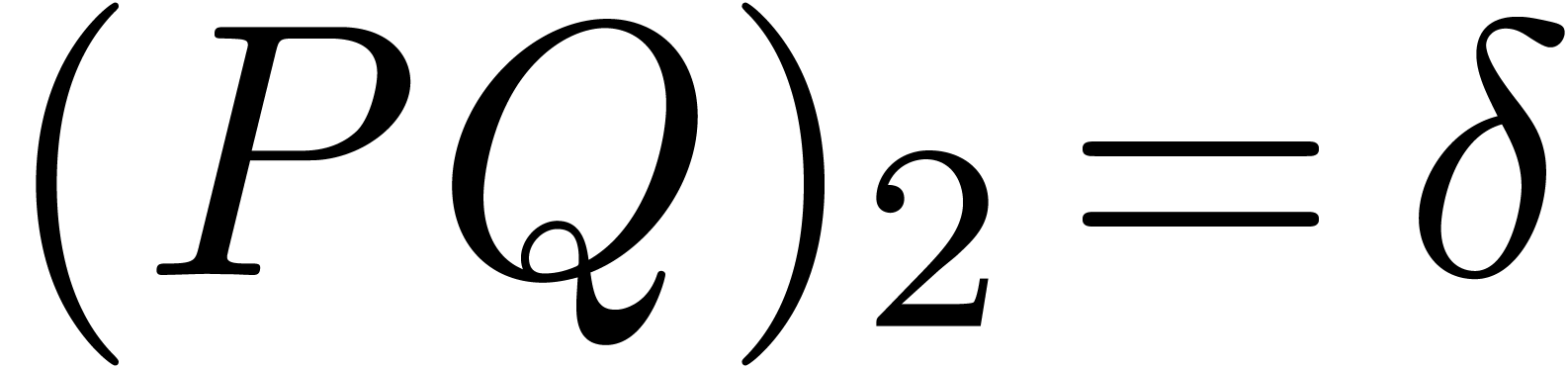

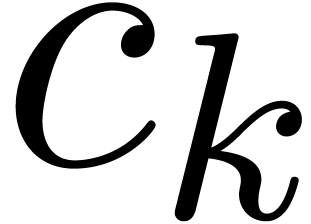

Given  ,

,  and

and  , we say that

, we say that  is an

is an  -approximation

of

-approximation

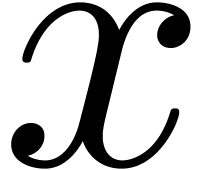

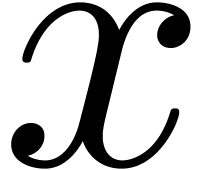

of  if

if  .

A real number

.

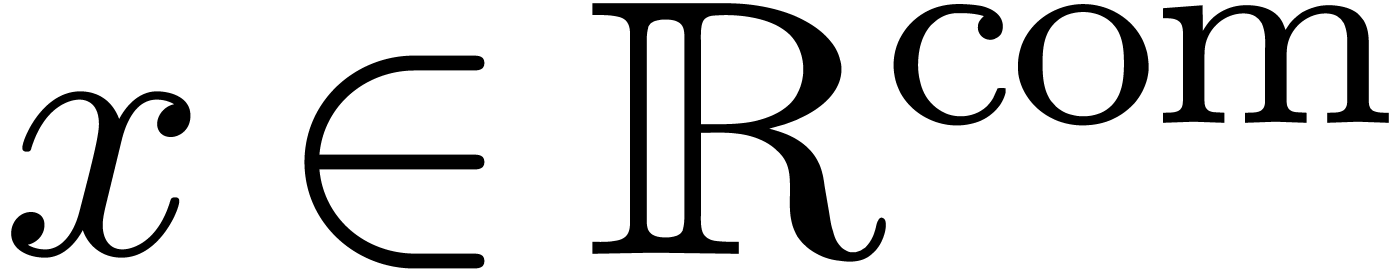

A real number  is said to be computable

[Tur36, Grz57, Wei00] if there

exists an approximation algorithm which takes an absolute

tolerance

is said to be computable

[Tur36, Grz57, Wei00] if there

exists an approximation algorithm which takes an absolute

tolerance  on input and which returns an

on input and which returns an  -approximation of

-approximation of  . We denote by

. We denote by  the set

of computable real numbers. We may regard

the set

of computable real numbers. We may regard  as a

data type, whose instances are represented by approximation algorithms

(this is also known as the Markov representation [Wei00,

Section 9.6]).

as a

data type, whose instances are represented by approximation algorithms

(this is also known as the Markov representation [Wei00,

Section 9.6]).

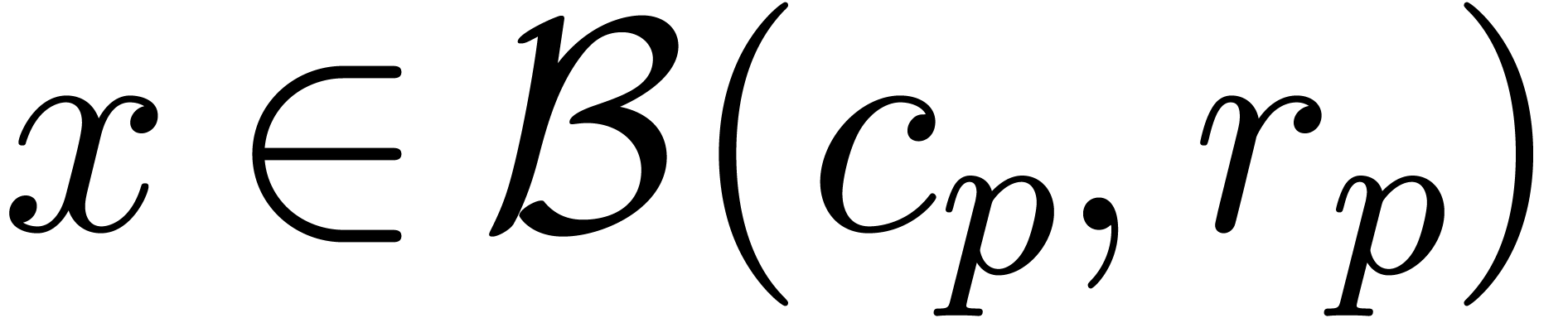

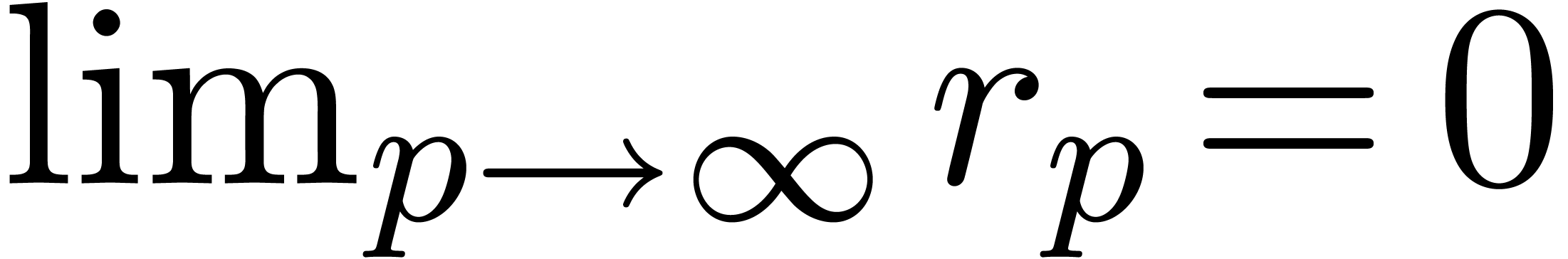

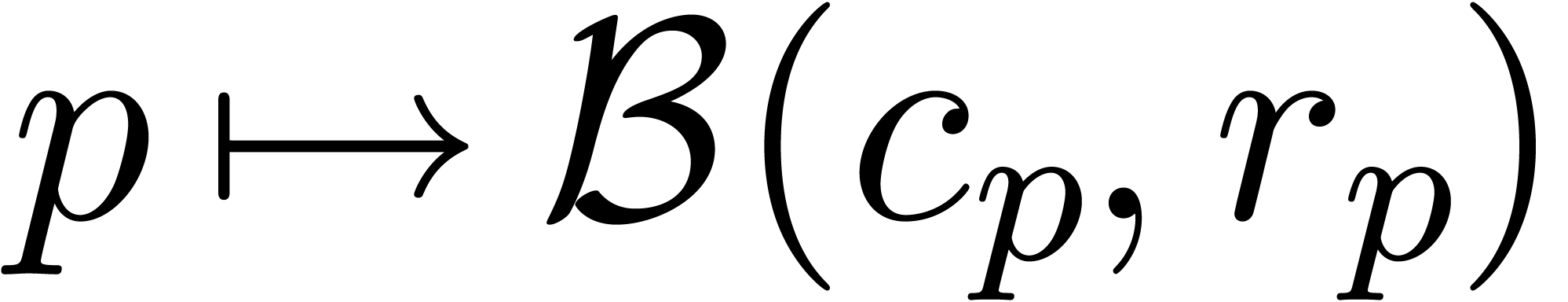

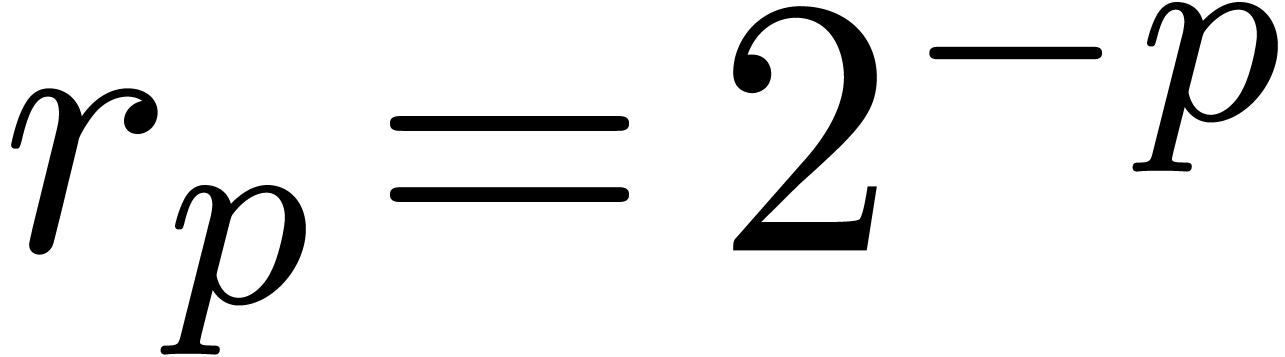

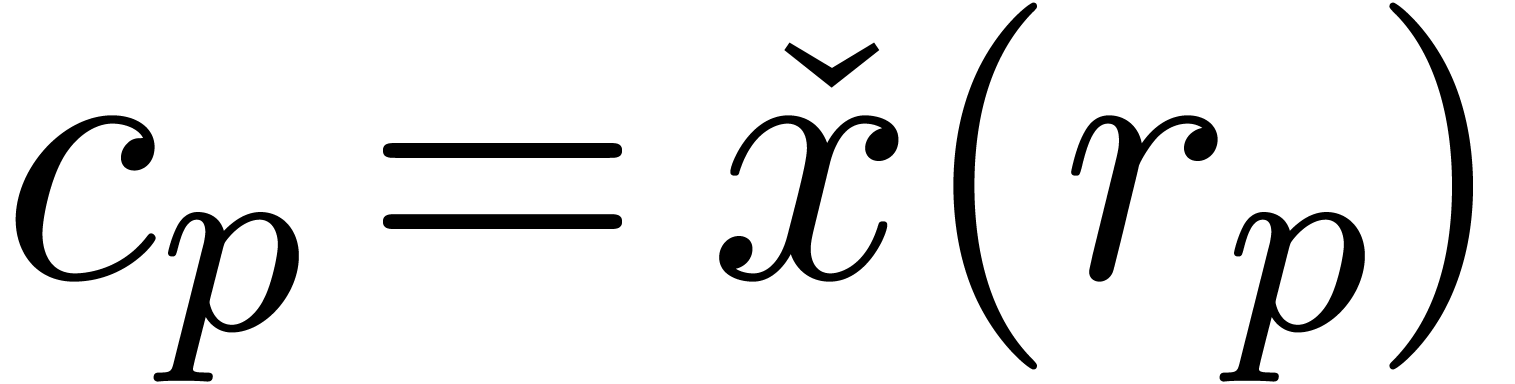

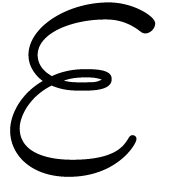

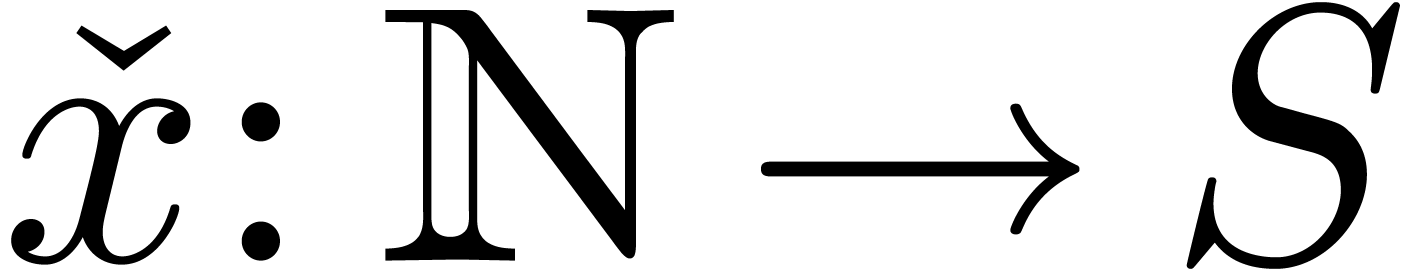

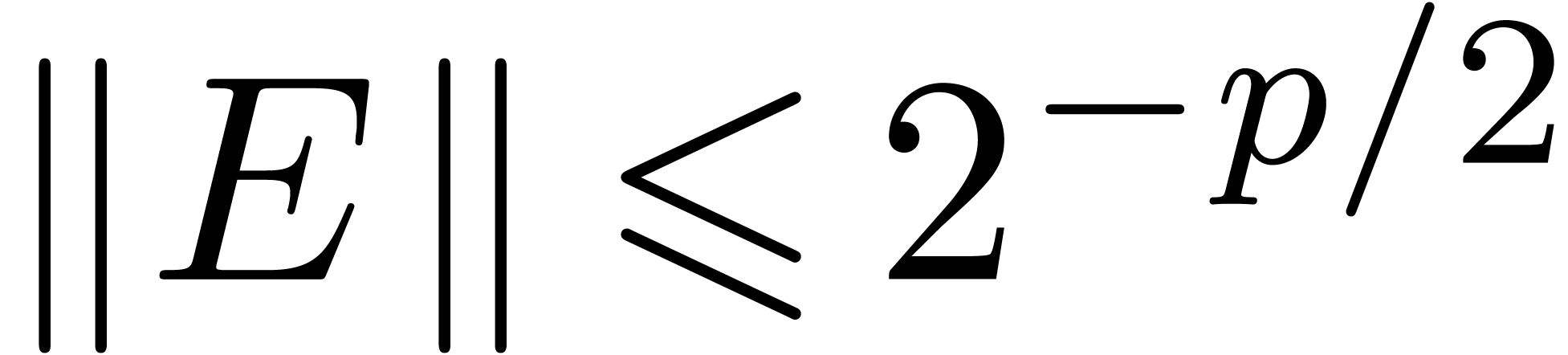

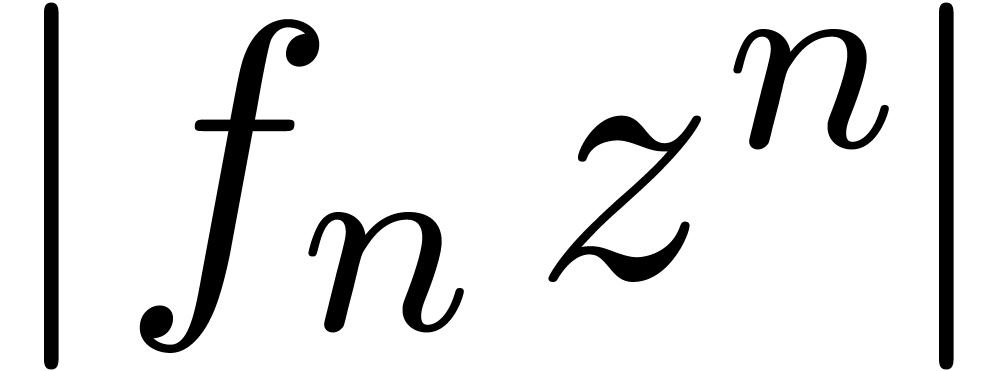

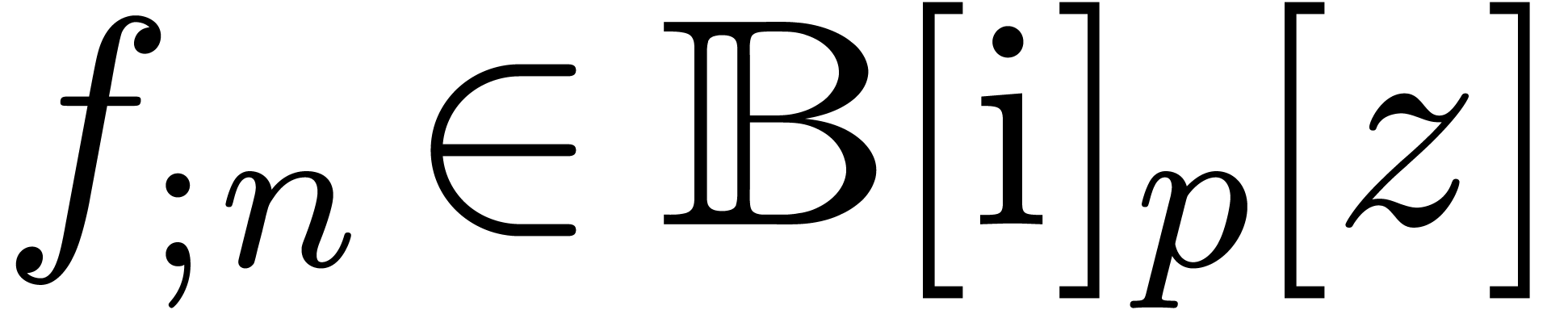

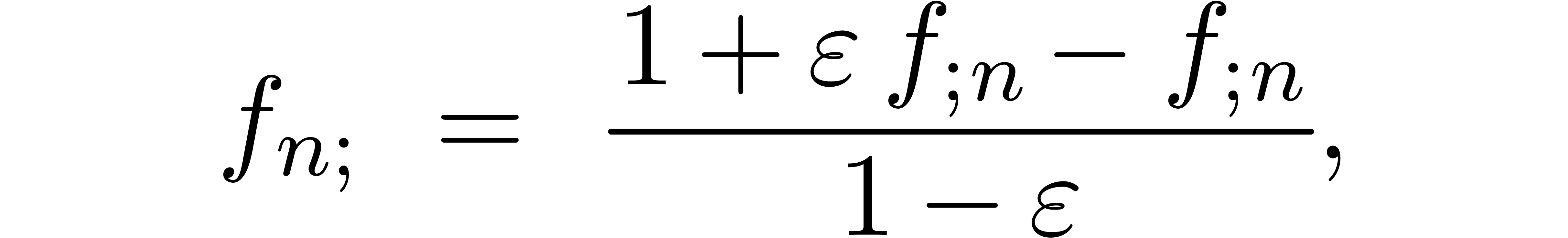

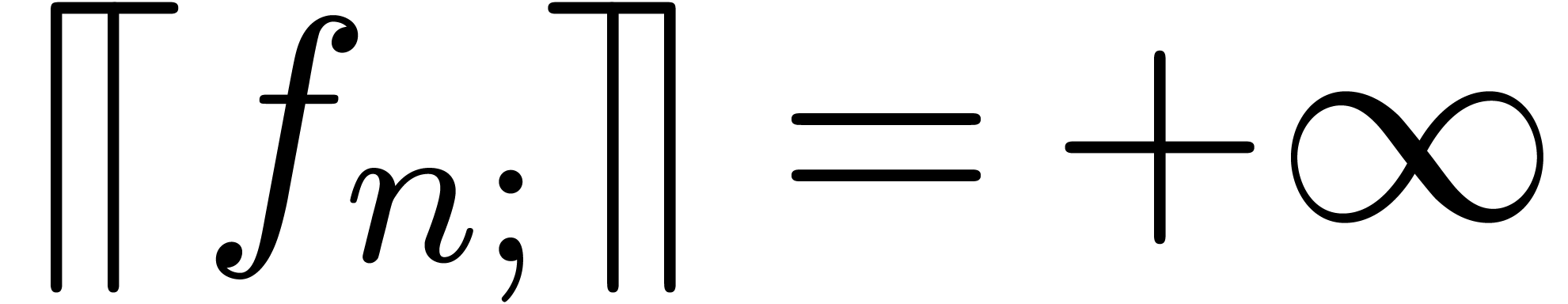

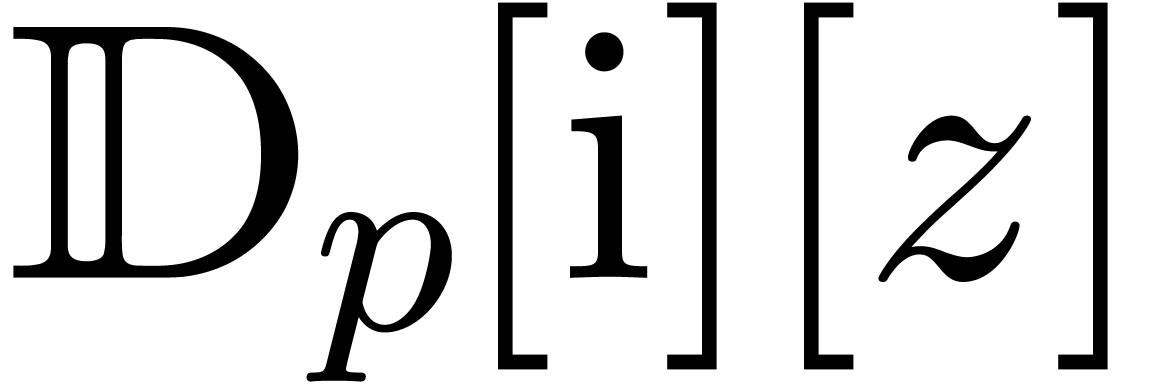

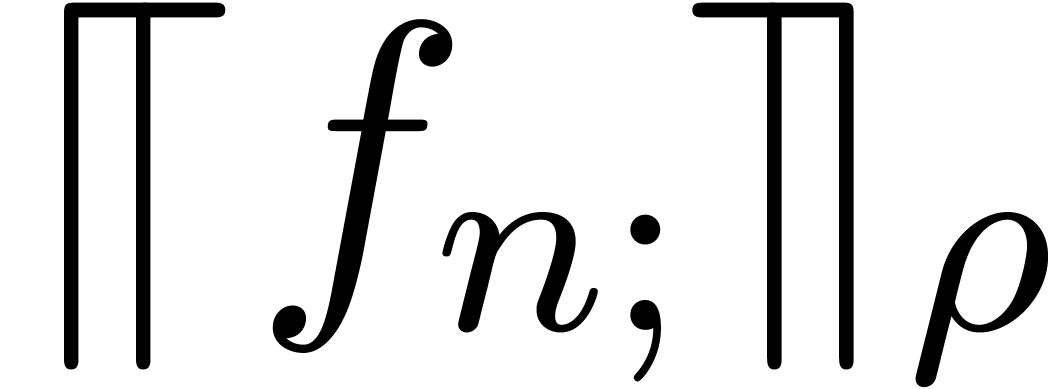

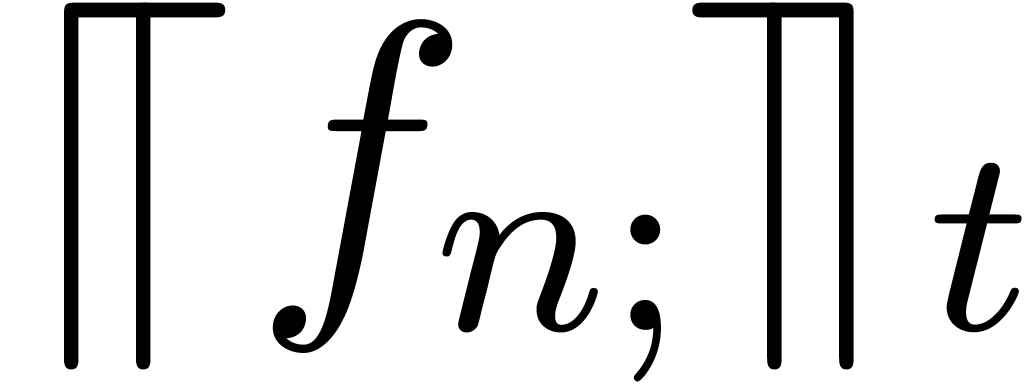

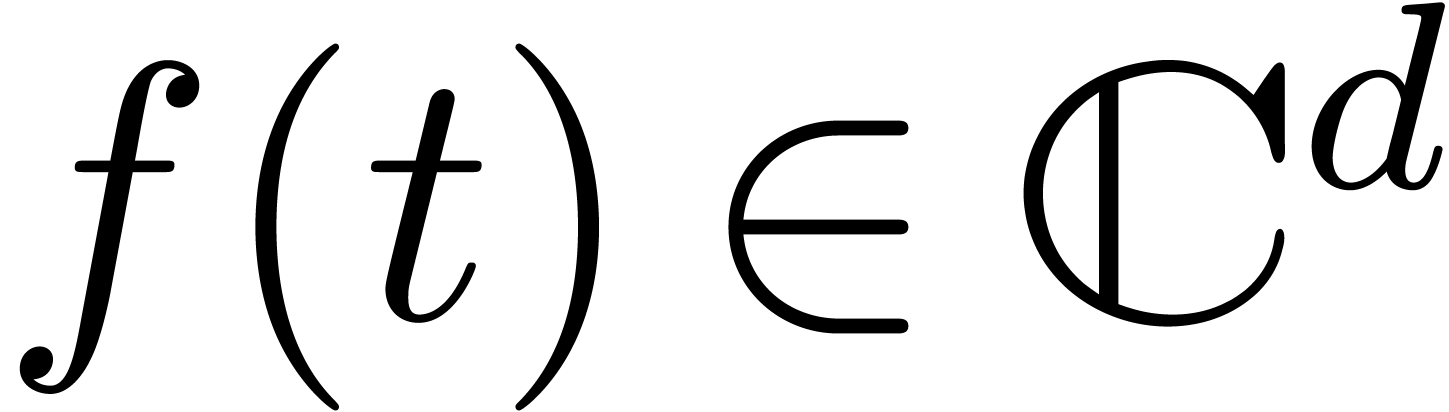

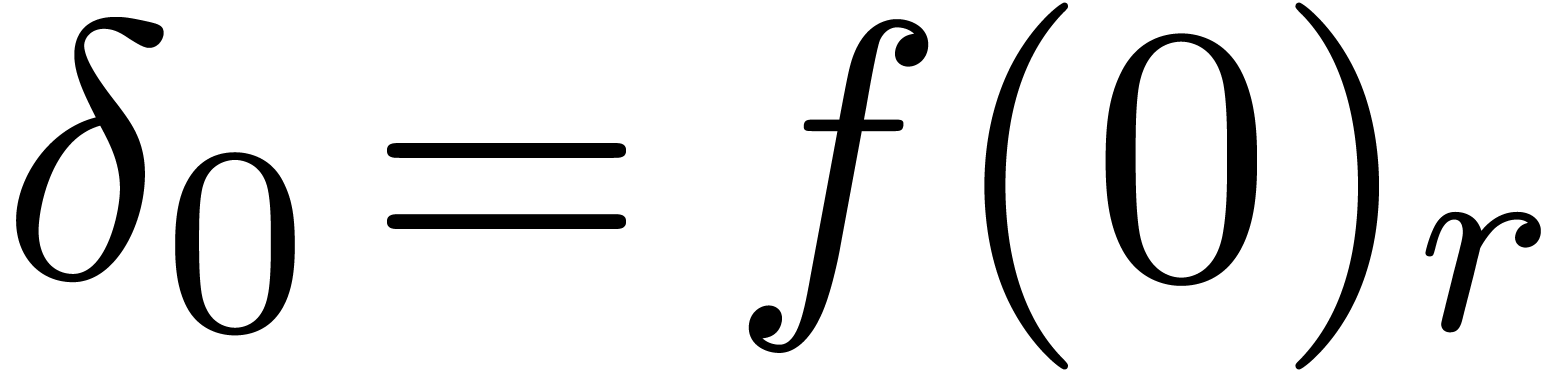

In practice, it is more convenient to work with so called ball

approximation algorithms: a real number is computable if and only if it

admits a ball approximation algorithm, which takes a working

precision  on input and returns a ball

approximation

on input and returns a ball

approximation  with

with  and

and  . Indeed, assume that we

have a ball approximation algorithm. In order to obtain an

. Indeed, assume that we

have a ball approximation algorithm. In order to obtain an  -approximation, it suffices to compute ball

approximations at precisions

-approximation, it suffices to compute ball

approximations at precisions  which double at

every step, until

which double at

every step, until  .

Conversely, given an approximation algorithm

.

Conversely, given an approximation algorithm  of

of

, we obtain a ball

approximation algorithm

, we obtain a ball

approximation algorithm  by taking

by taking  and

and  .

.

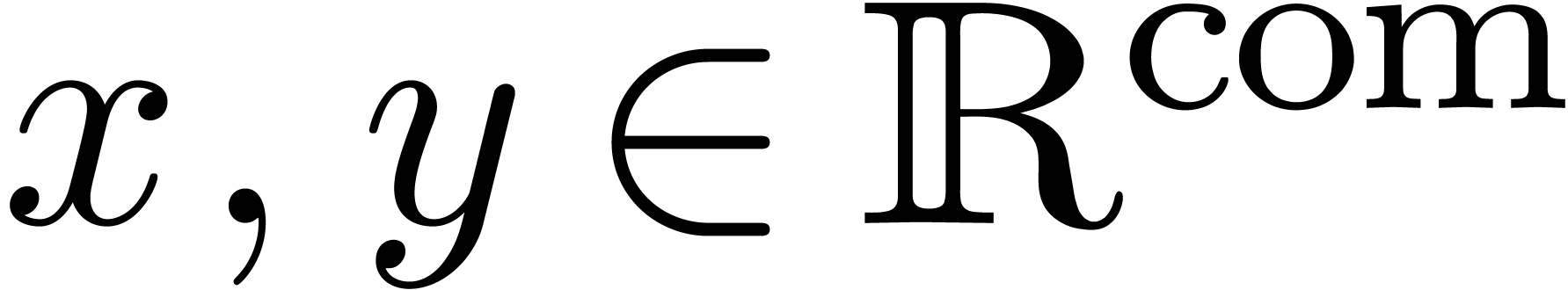

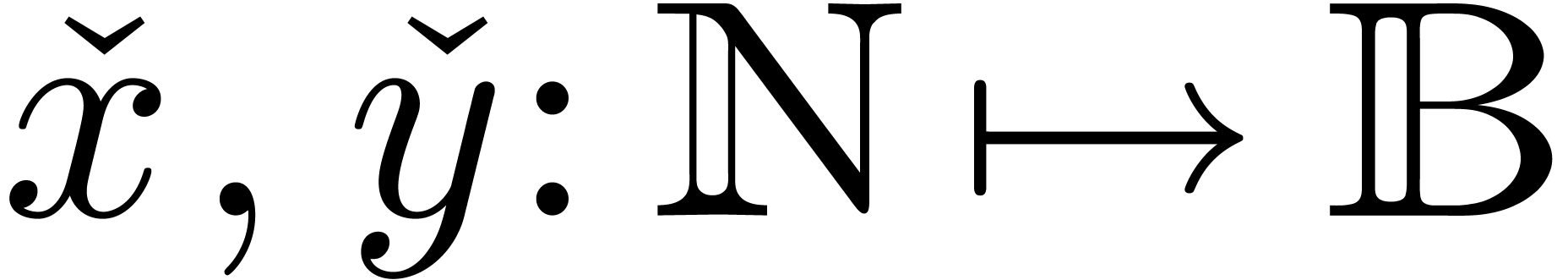

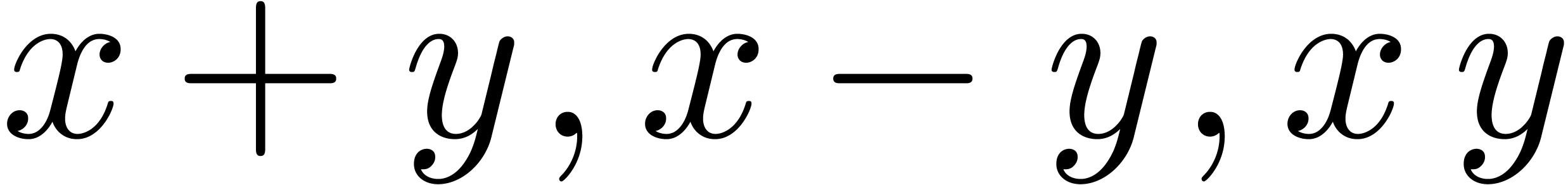

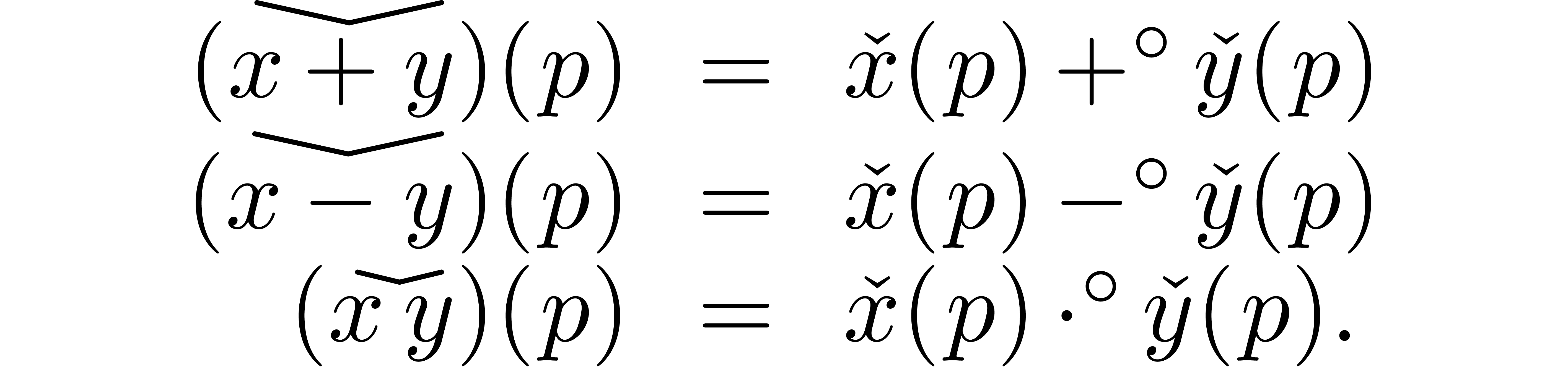

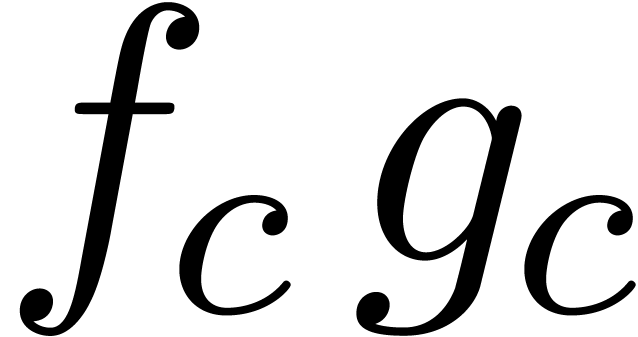

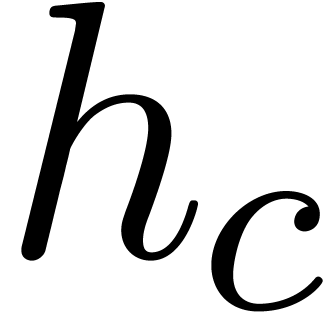

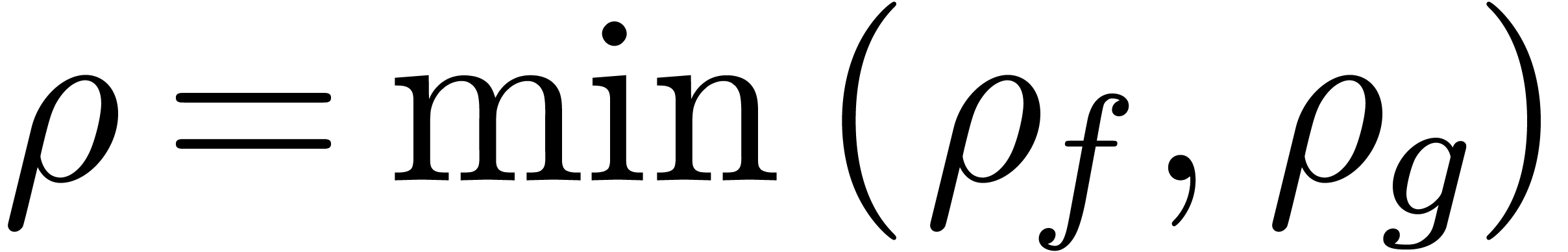

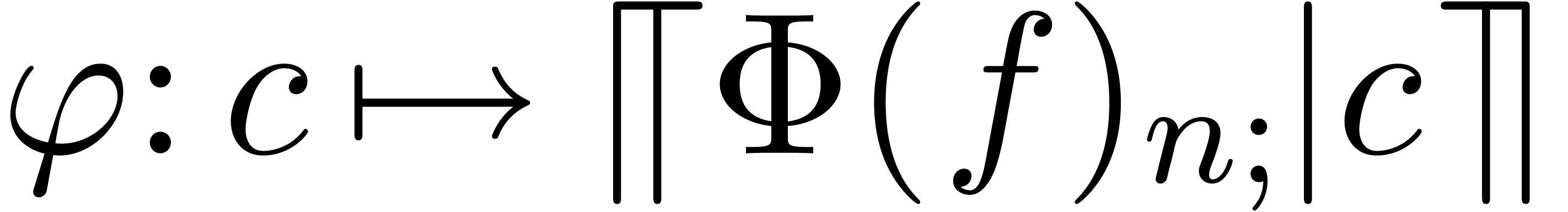

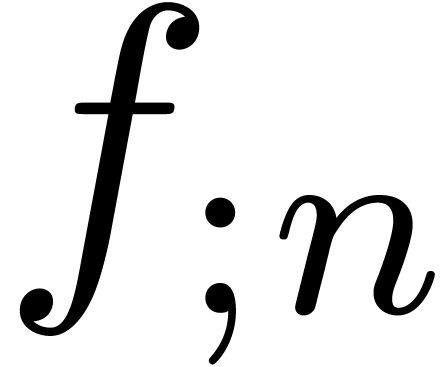

Given  with ball approximation algorithms

with ball approximation algorithms  , we may compute ball approximation

algorithms for

, we may compute ball approximation

algorithms for  simply by taking

simply by taking

More generally, assuming a good library for ball arithmetic, it is usually easy to write a wrapper library with the corresponding operations on computable numbers.

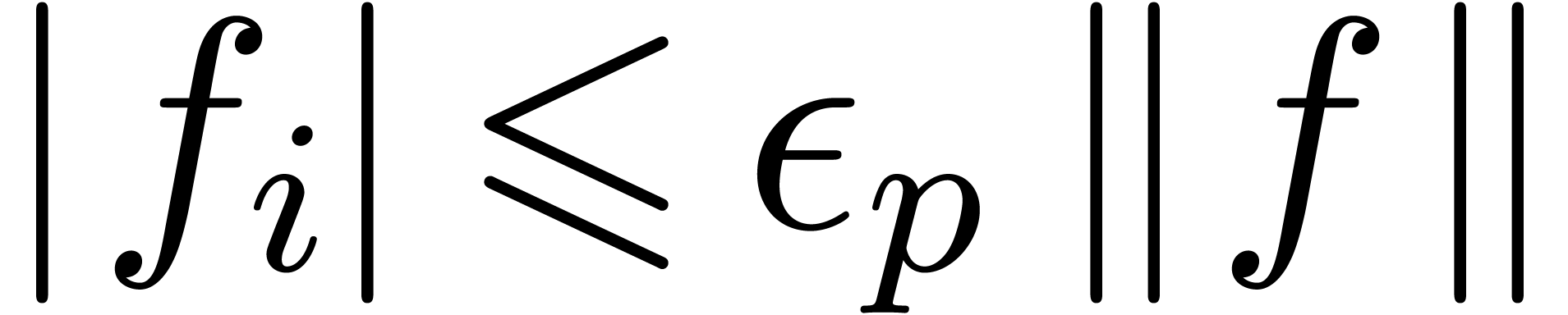

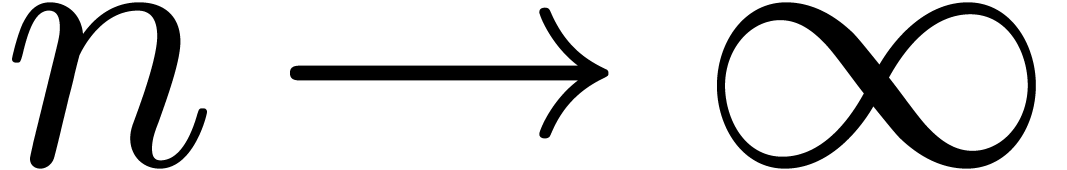

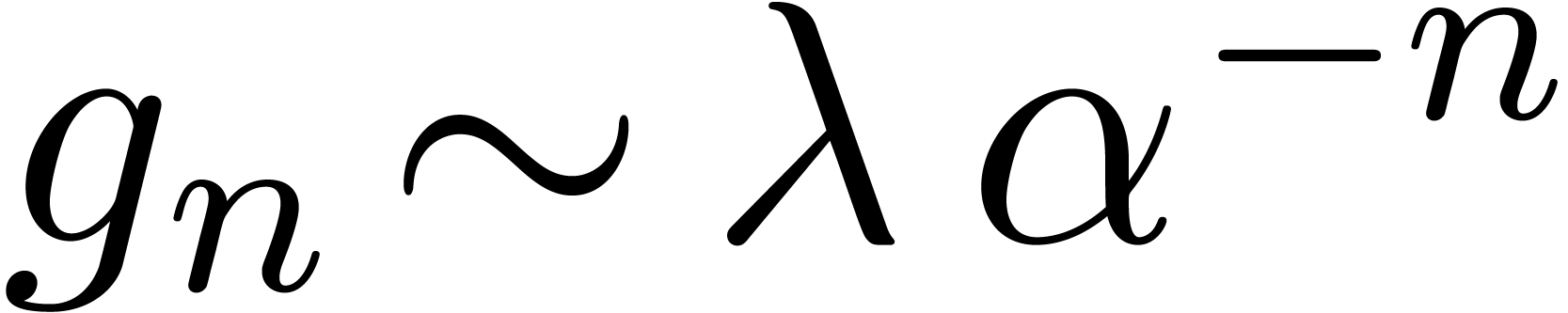

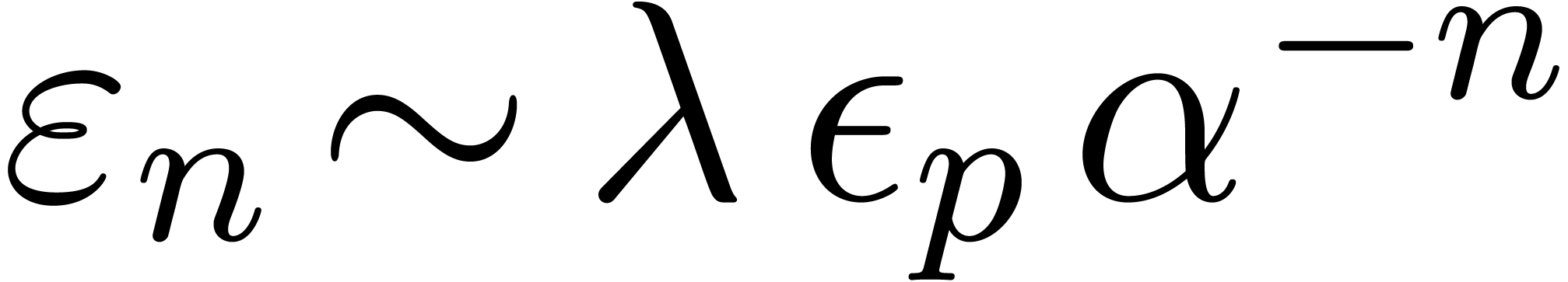

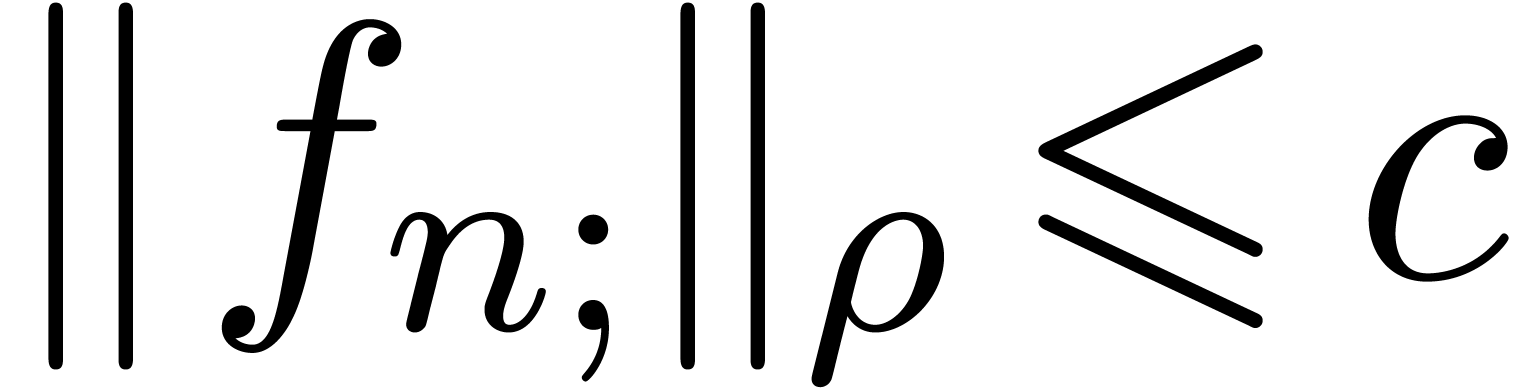

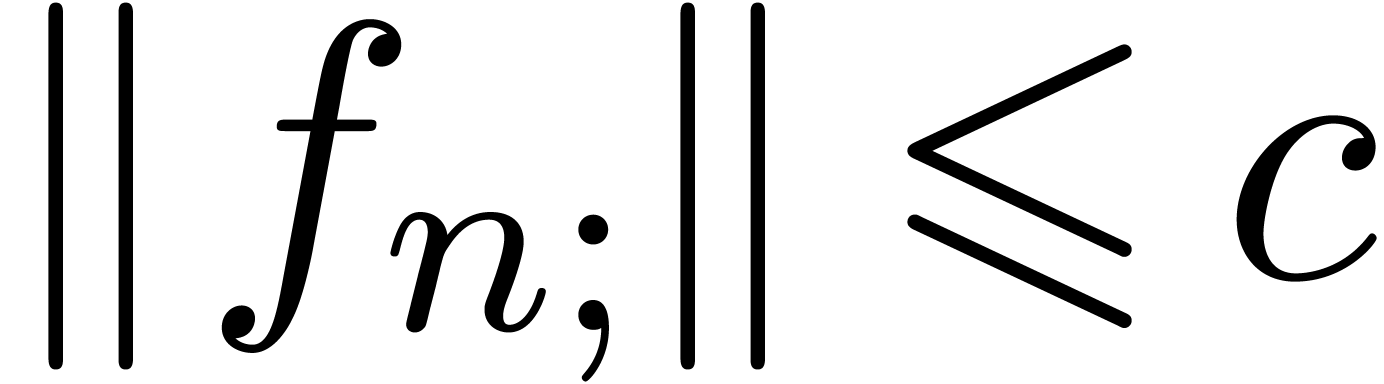

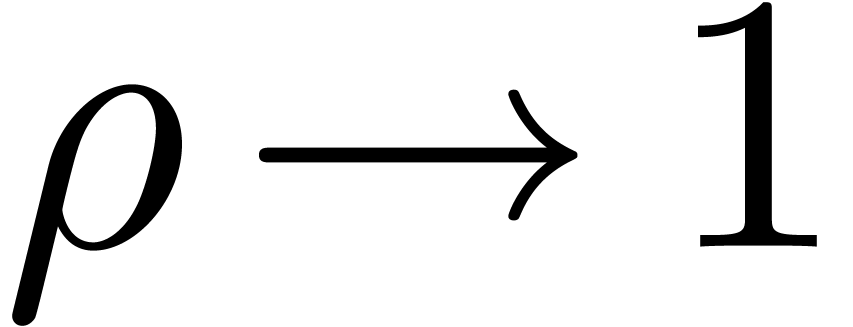

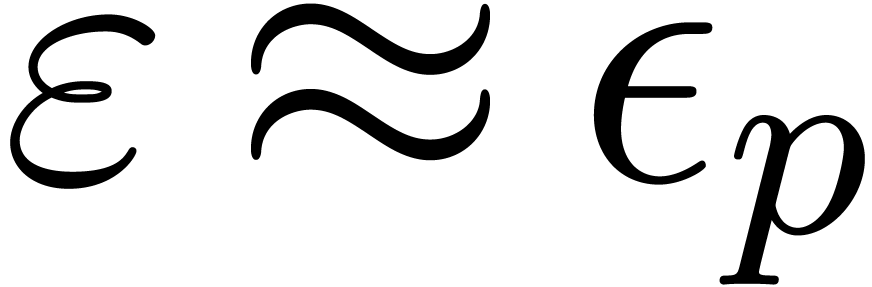

From the efficiency point of view, it is also convenient to work with

ball approximations. Usually, the radius  satisfies

satisfies

or at least

for some  . In that case,

doubling the working precision

. In that case,

doubling the working precision  until a

sufficiently good approximation is found is quite efficient. An even

better strategy is to double the “expected running time” at

every step [vdH06a, KR06]. Yet another

approach will be described in section 3.6 below.

until a

sufficiently good approximation is found is quite efficient. An even

better strategy is to double the “expected running time” at

every step [vdH06a, KR06]. Yet another

approach will be described in section 3.6 below.

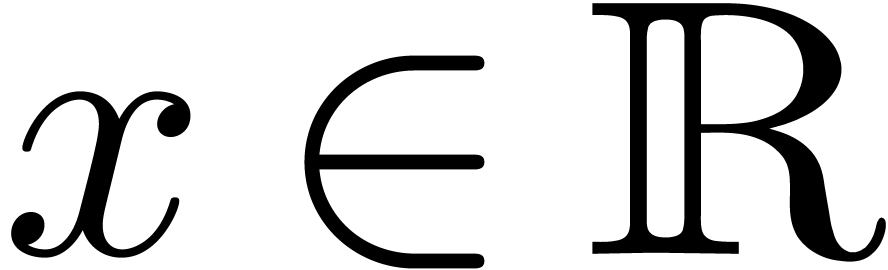

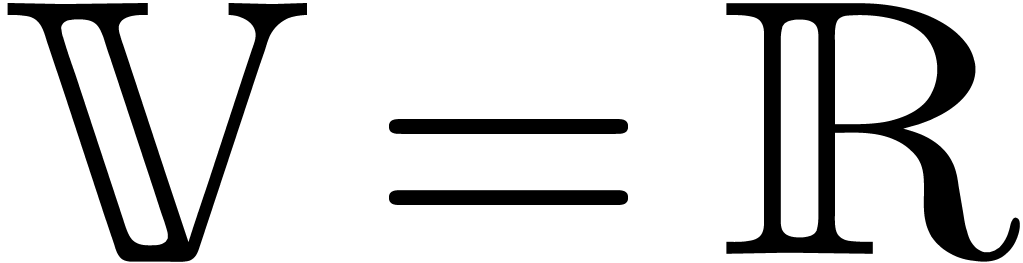

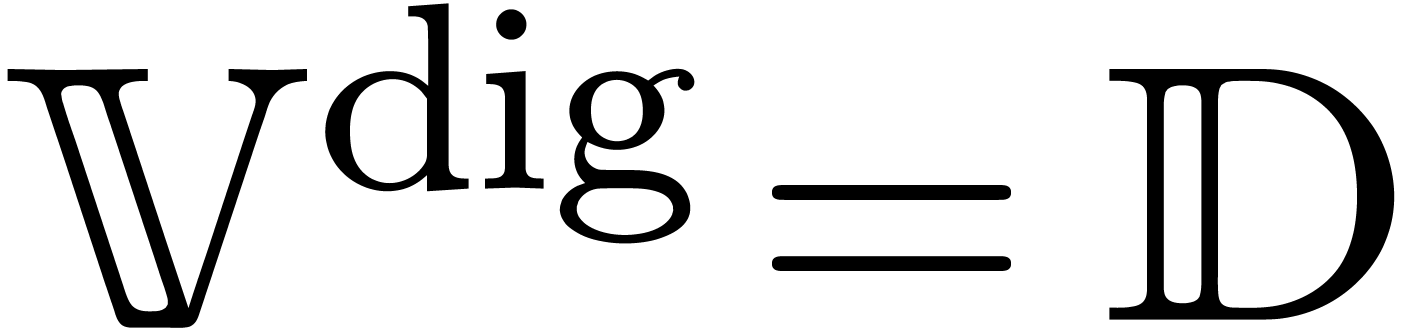

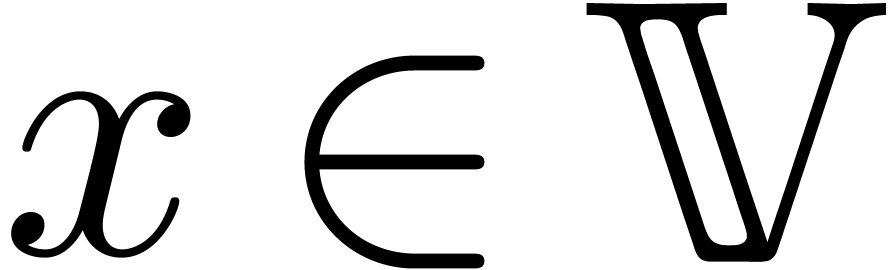

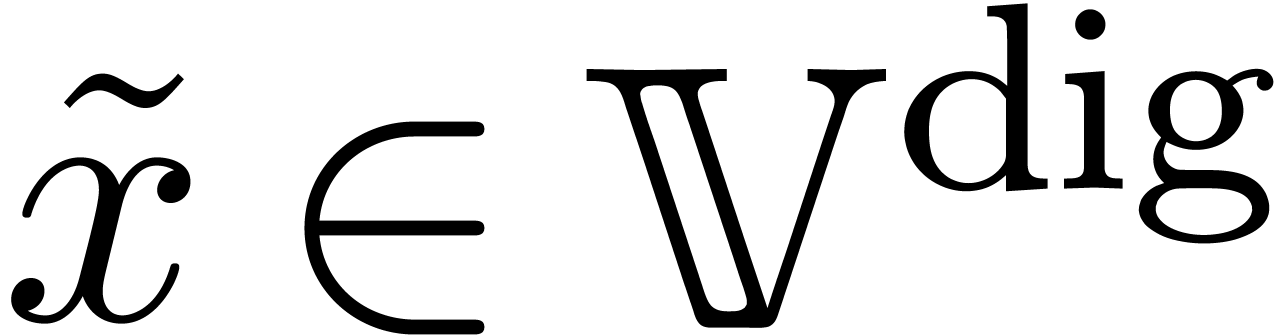

The concept of computable real numbers readily generalizes to more

general normed vector spaces. Let  be a normed

vector space and let

be a normed

vector space and let  be an effective subset of

digital points in

be an effective subset of

digital points in  ,

i.e. the elements of

,

i.e. the elements of  admit a

computer representation. For instance, if

admit a

computer representation. For instance, if  ,

then we take

,

then we take  . Similarly, if

. Similarly, if

is the set of real

is the set of real  matrices, with one of the matrix norms from section 2.3,

then it is natural to take

matrices, with one of the matrix norms from section 2.3,

then it is natural to take  .

A point

.

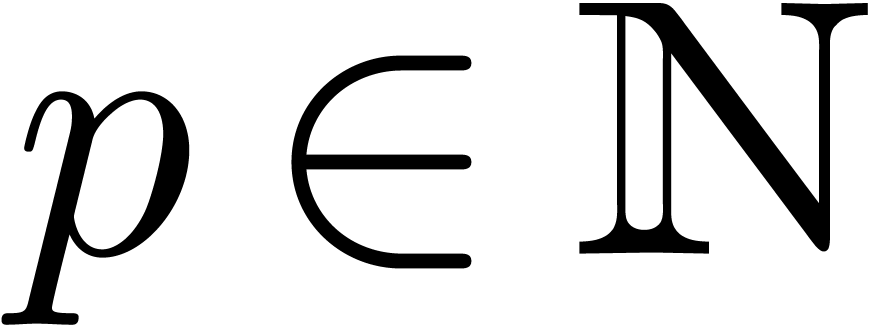

A point  is said to be computable, if it

admits an approximation algorithm which takes

is said to be computable, if it

admits an approximation algorithm which takes  on input, and returns an

on input, and returns an  -approximation

-approximation

of

of  (satisfying

(satisfying  , as above).

, as above).

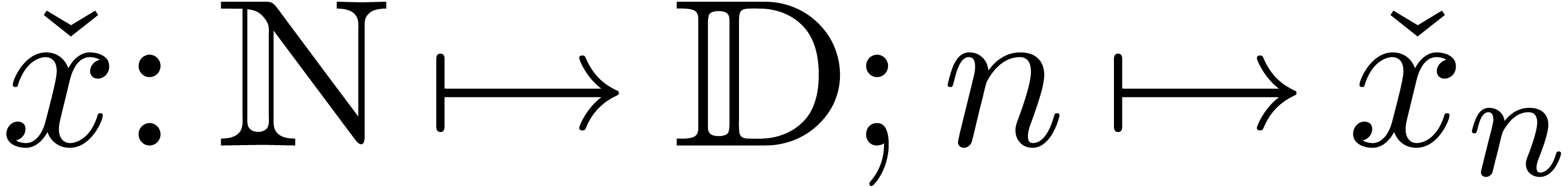

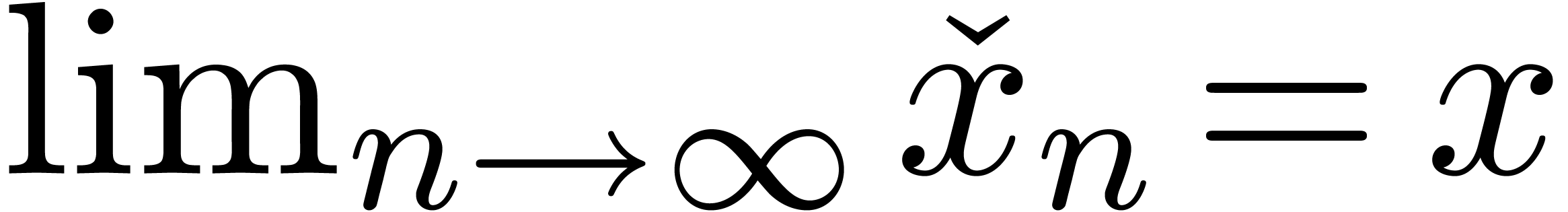

A real number  is said to be left

computable if there exists an algorithm for computing an increasing

sequence

is said to be left

computable if there exists an algorithm for computing an increasing

sequence  with

with  (and

(and  is called a left approximation algorithm). Similarly,

is called a left approximation algorithm). Similarly,

is said to be right computable if

is said to be right computable if  is left computable. A real number is computable if

and only if it is both left and right computable. Left computable but

non computable numbers occur frequently in practice and correspond to

“computable lower bounds” (see also [Wei00, vdH07b]).

is left computable. A real number is computable if

and only if it is both left and right computable. Left computable but

non computable numbers occur frequently in practice and correspond to

“computable lower bounds” (see also [Wei00, vdH07b]).

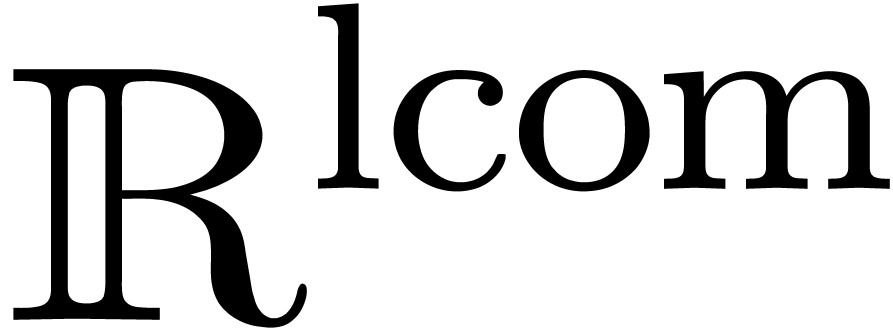

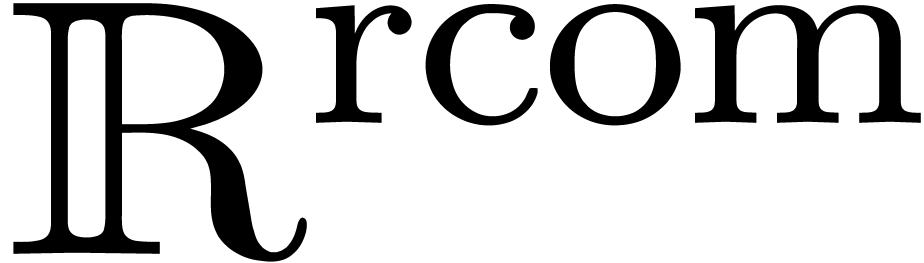

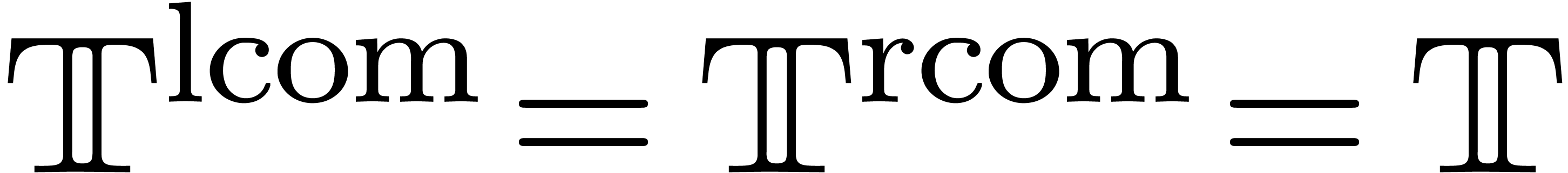

We will denote by  and

and  the data types of left and right computable real numbers. It is

convenient to specify and implement algorithms in computable analysis in

terms of these data types, whenever appropriate [vdH07b].

For instance, we have computable functions

the data types of left and right computable real numbers. It is

convenient to specify and implement algorithms in computable analysis in

terms of these data types, whenever appropriate [vdH07b].

For instance, we have computable functions

More generally, given a subset  ,

we say that

,

we say that  is left computable in

is left computable in  if there exists a left approximation algorithm

if there exists a left approximation algorithm  for

for  . We will

denote by

. We will

denote by  and

and  the data

types of left and computable numbers in

the data

types of left and computable numbers in  ,

and define

,

and define  .

.

Identifying the type of boolean numbers  with

with

, we have

, we have  as sets, but not as data types. For instance, it is well known

that equality is non computable for computable real numbers [Tur36].

Nevertheless, equality is “ultimately computable”

in the sense that there exists a computable function

as sets, but not as data types. For instance, it is well known

that equality is non computable for computable real numbers [Tur36].

Nevertheless, equality is “ultimately computable”

in the sense that there exists a computable function

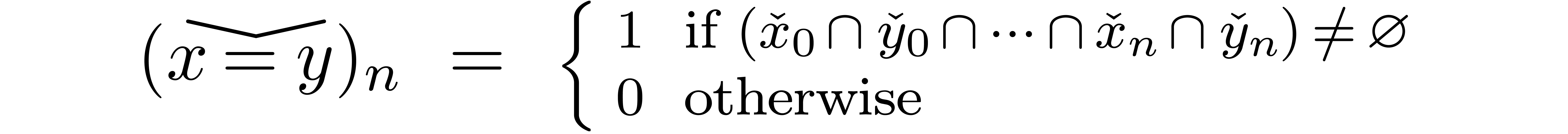

Indeed, given  with ball approximation algorithms

with ball approximation algorithms

and

and  ,

we may take

,

we may take

Similarly, the ordering relation  is ultimately

computable.

is ultimately

computable.

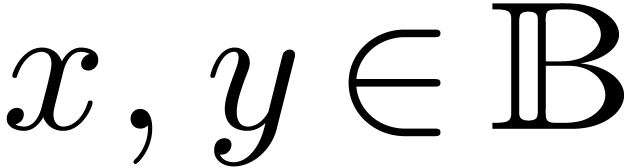

This asymmetric point of view on equality testing also suggest a

semantics for the relations  ,

,

, etc. on balls.

For instance, given balls

, etc. on balls.

For instance, given balls  ,

it is natural to take

,

it is natural to take

These definitions are interesting if balls are really used as successive approximations of a real number. An alternative application of ball arithmetic is for modeling non-deterministic computations: the ball models a set of possible values and we are interested in the set of possible outcomes of an algorithm. In that case, the natural return type of a relation on balls becomes a “boolean ball”. In the area of interval analysis, this second interpretation is more common [ANS09].

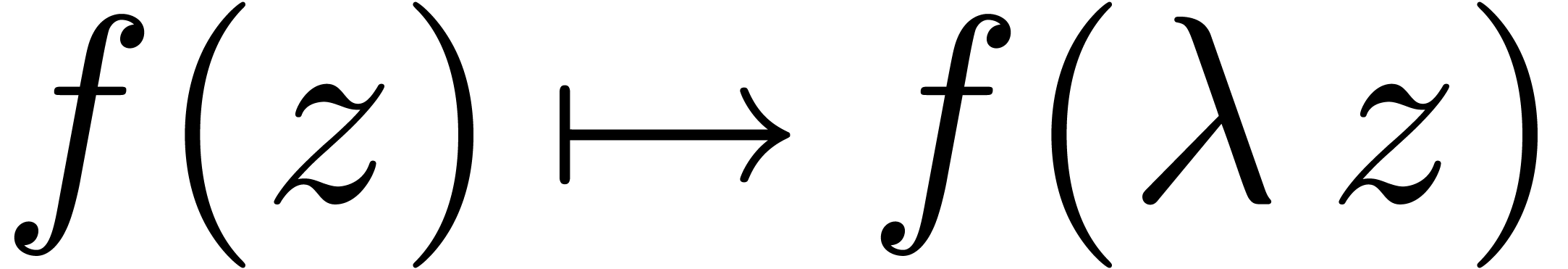

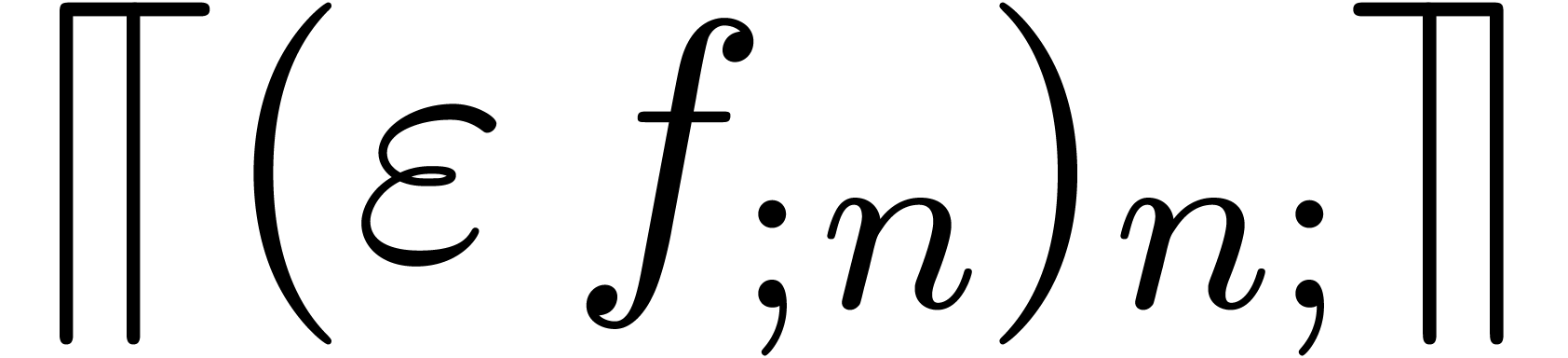

Remark

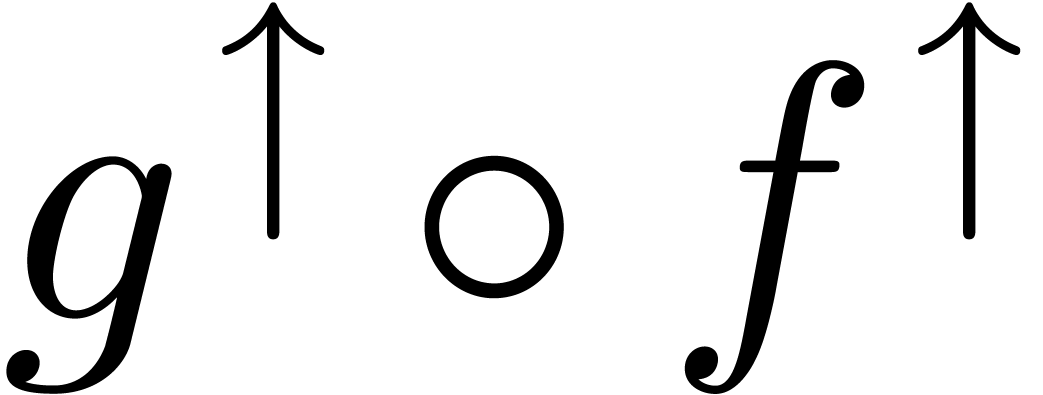

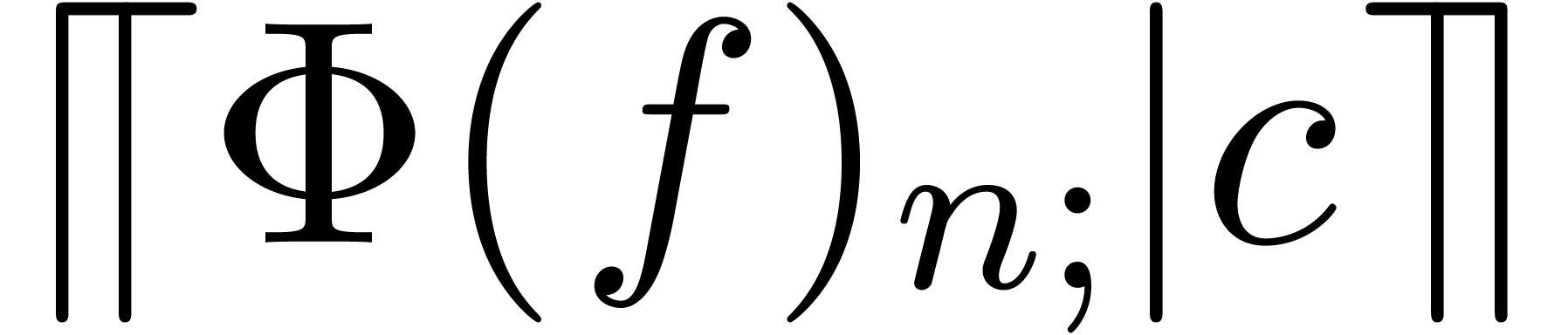

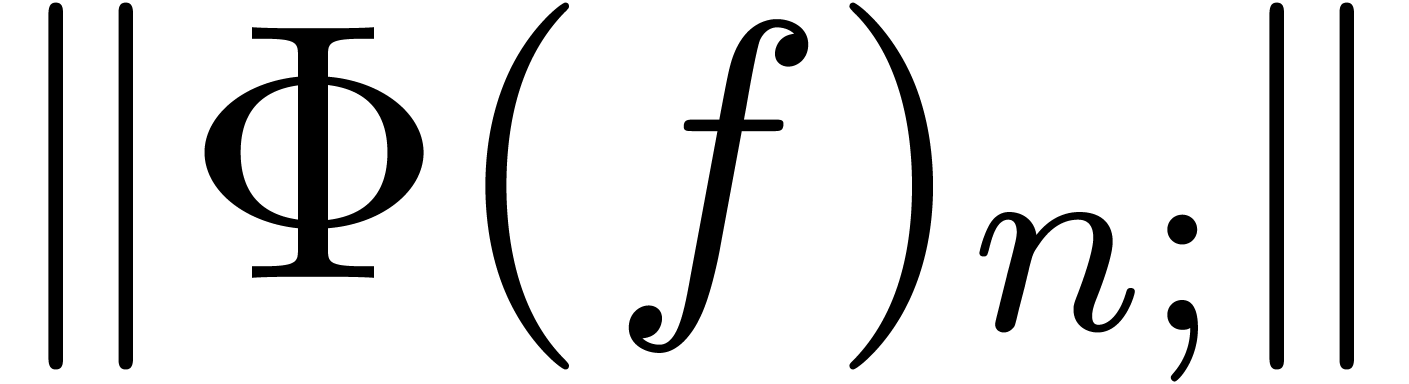

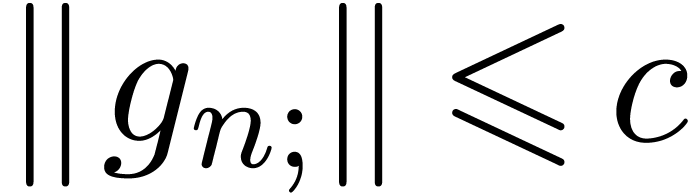

Given a computable function  ,

,

and

and  ,

let us return to the problem of efficiently computing an approximation

,

let us return to the problem of efficiently computing an approximation

of

of  with

with  . In section 3.4, we suggested to

compute ball approximations of

. In section 3.4, we suggested to

compute ball approximations of  at precisions

which double at every step, until a sufficiently precise approximation

is found. This computation involves an implementation

at precisions

which double at every step, until a sufficiently precise approximation

is found. This computation involves an implementation  of

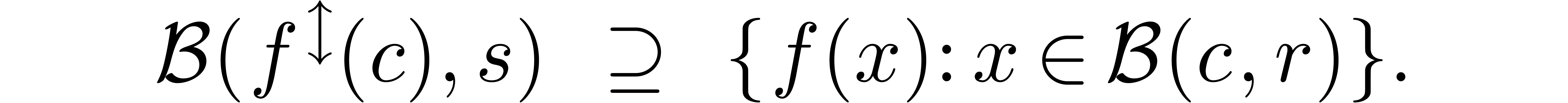

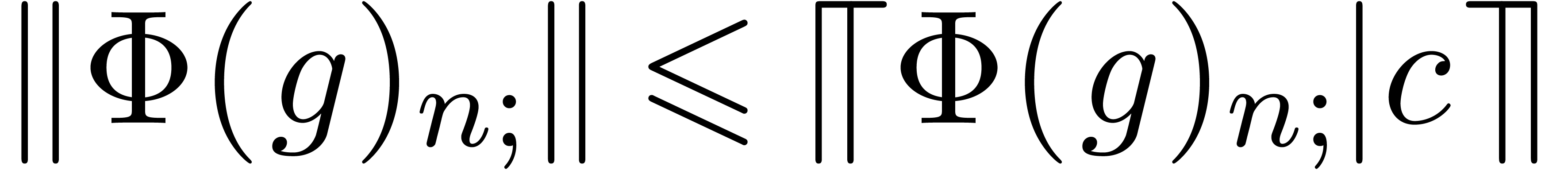

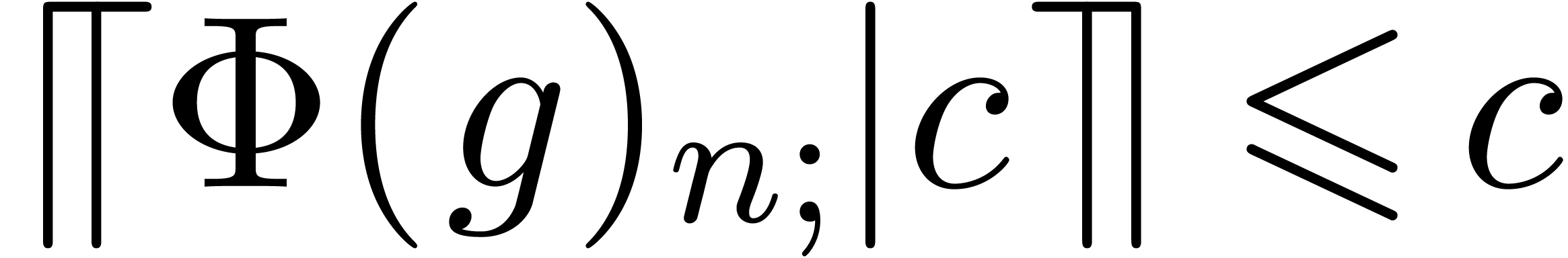

of  on the level of balls, which satisfies

on the level of balls, which satisfies

for every ball  . In practice,

. In practice,

is often differentiable, with

is often differentiable, with  . In that case, given a ball approximation

. In that case, given a ball approximation  of

of  , the

computed ball approximation

, the

computed ball approximation  of

of  typically has a radius

typically has a radius

for  . This should make it

possible to directly predict a sufficient precision at which

. This should make it

possible to directly predict a sufficient precision at which  . The problem is that (14)

needs to be replaced by a more reliable relation. This can be done on

the level of ball arithmetic itself, by replacing the usual condition

(13) by

. The problem is that (14)

needs to be replaced by a more reliable relation. This can be done on

the level of ball arithmetic itself, by replacing the usual condition

(13) by

Similarly, the multiplication of balls is carried out using

instead of (7). A variant of this kind of “Lipschitz

ball arithmetic” has been implemented in [M0].

Although a constant factor is gained for high precision computations at

regular points  , the

efficiency deteriorates near singularities (i.e. the

computation of

, the

efficiency deteriorates near singularities (i.e. the

computation of  ).

).

In the area of reliable computation, interval arithmetic has for long been privileged with respect to ball arithmetic. Indeed, balls are often regarded as a more or less exotic variant of intervals, based on an alternative midpoint-radius representation. Historically, interval arithmetic is also preferred in computer science because it is easy to implement if floating point operations are performed with correct rounding. Since most modern microprocessors implement the IEEE 754 norm, this point of view is well supported by hardware.

Not less historically, the situation in mathematics is inverse: whereas

intervals are the standard in computer science, balls are the standard

in mathematics, since they correspond to the traditional  -

- -calculus.

Even in the area of interval analysis, one usually resorts (at least

implicitly) to balls for more complex computations, such as the

inversion of a matrix [HS67, Moo66]. Indeed,

balls are more convenient when computing error bounds using perturbation

techniques. Also, we have a great deal of flexibility concerning the

choice of a norm. For instance, a vectorial ball is not necessarily a

Cartesian product of one dimensional balls.

-calculus.

Even in the area of interval analysis, one usually resorts (at least

implicitly) to balls for more complex computations, such as the

inversion of a matrix [HS67, Moo66]. Indeed,

balls are more convenient when computing error bounds using perturbation

techniques. Also, we have a great deal of flexibility concerning the

choice of a norm. For instance, a vectorial ball is not necessarily a

Cartesian product of one dimensional balls.

In this section, we will give a more detailed account on the respective advantages and disadvantages of interval and ball arithmetic.

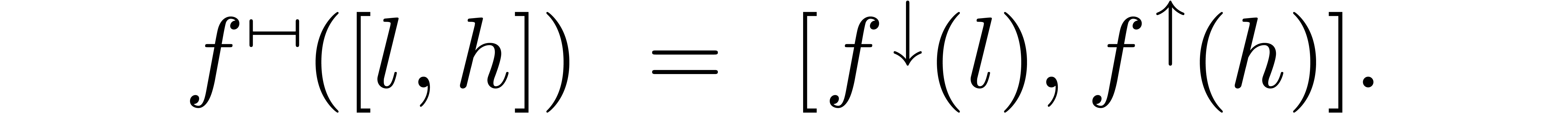

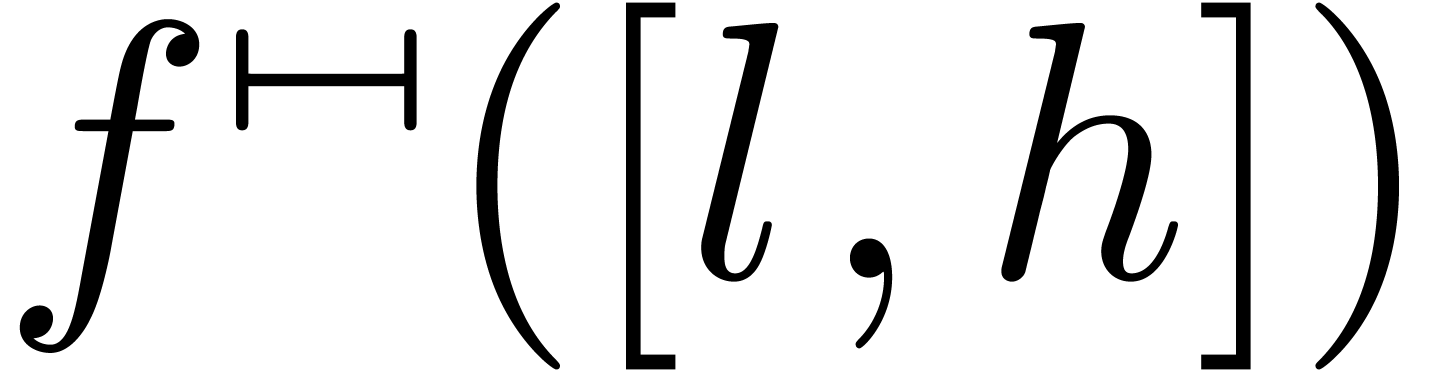

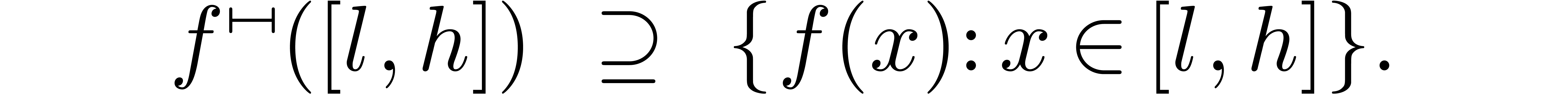

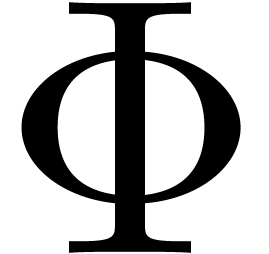

One advantage of interval arithmetic is that the IEEE 754 norm suggests

a natural and standard implementation. Indeed, let  be a real function which is increasing on some interval

be a real function which is increasing on some interval  . Then the natural interval lift

. Then the natural interval lift  of

of  is given by

is given by

This implementation has the property that  is the

smallest interval with end-points in

is the

smallest interval with end-points in  ,

which satisfies

,

which satisfies

For not necessarily increasing functions  this

property can still be used as a requirement for the

“standard” implementation of

this

property can still be used as a requirement for the

“standard” implementation of  .

For instance, this leads to the following implementation of the cosine

function on intervals:

.

For instance, this leads to the following implementation of the cosine

function on intervals:

Such a standard implementation of interval arithmetic has the convenient property that programs will execute in the same way on any platform which conforms to the IEEE 754 standard.

By analogy with the above approach for standardized interval arithmetic,

we may standardize the ball implementation  of

of

by taking

by taking

where the radius  is smallest in

is smallest in  with

with

Unfortunately, the computation of such an optimal  is not always straightforward. In particular, the formulas (10),

(11) and (12) do not necessarily realize this

tightest bound. In practice, it might therefore be better to achieve

standardization by fixing once and for all the formulas by which ball

operations are performed. Of course, more experience with ball

arithmetic is required before this can happen.

is not always straightforward. In particular, the formulas (10),

(11) and (12) do not necessarily realize this

tightest bound. In practice, it might therefore be better to achieve

standardization by fixing once and for all the formulas by which ball

operations are performed. Of course, more experience with ball

arithmetic is required before this can happen.

The respective efficiencies of interval and ball arithmetic depend on the precision at which we are computing. For high precisions and most applications, ball arithmetic has the advantage that we can still perform computations on the radius at single precision. By contrast, interval arithmetic requires full precision for operations on both end-points. This makes ball arithmetic twice as efficient at high precisions.

When working at machine precision, the efficiencies of both approaches

essentially depend on the hardware. A priori, interval

arithmetic is better supported by current computers, since most of them

respect the IEEE 754 norm, whereas the function  from (9) usually has to be implemented by hand. However,

changing the rounding mode is often highly expensive (over hundred

cycles). Therefore, additional gymnastics may be required in order to

always work with respect to a fixed rounding mode. For instance, if

from (9) usually has to be implemented by hand. However,

changing the rounding mode is often highly expensive (over hundred

cycles). Therefore, additional gymnastics may be required in order to

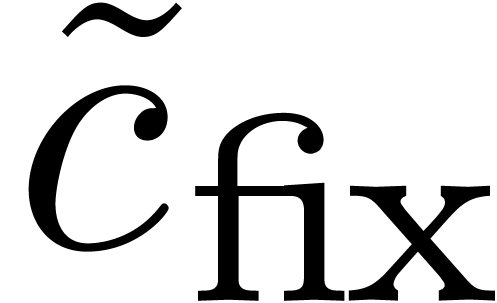

always work with respect to a fixed rounding mode. For instance, if  is our current rounding mode, then we may take

is our current rounding mode, then we may take

since the operation  is always exact

(i.e. does not depend on the rounding mode). As a

consequence, interval arithmetic becomes slightly more expensive. By

contrast, when releasing the condition that centers of balls are

computed using rounding to the nearest, we may replace (8)

by

is always exact

(i.e. does not depend on the rounding mode). As a

consequence, interval arithmetic becomes slightly more expensive. By

contrast, when releasing the condition that centers of balls are

computed using rounding to the nearest, we may replace (8)

by

and (9) by

Hence, ball arithmetic already allows us to work with respect to a fixed rounding mode. Of course, using (17) instead of (8) does require to rethink the way ball arithmetic should be standardized.

An alternative technique for avoiding changes in rounding mode exists when performing operations on compound types, such as vectors or matrices. For instance, when adding two vectors, we may first add all lower bounds while rounding downwards and next add the upper bounds while rounding upwards. Unfortunately, this strategy becomes more problematic in the case of multiplication, because different rounding modes may be needed depending on the signs of the multiplicands. As a consequence, matrix operations tend to require many conditional parts of code when using interval arithmetic, with increased probability of breaking the processor pipeline. On the contrary, ball arithmetic highly benefits from parallel architecture and it is easy to implement ball arithmetic for matrices on top of existing libraries: see [Rum99a] and section 6 below.

Besides the efficiency of ball and interval arithmetic for basic

operations, it is also important to investigate the quality of the

resulting bounds. Indeed, there are usually differences between the sets

which are representable by balls and by intervals. For instance, when

using the extended IEEE 754 arithmetic with infinities, then it is

possible to represent  as an interval, but not as

a ball.

as an interval, but not as

a ball.

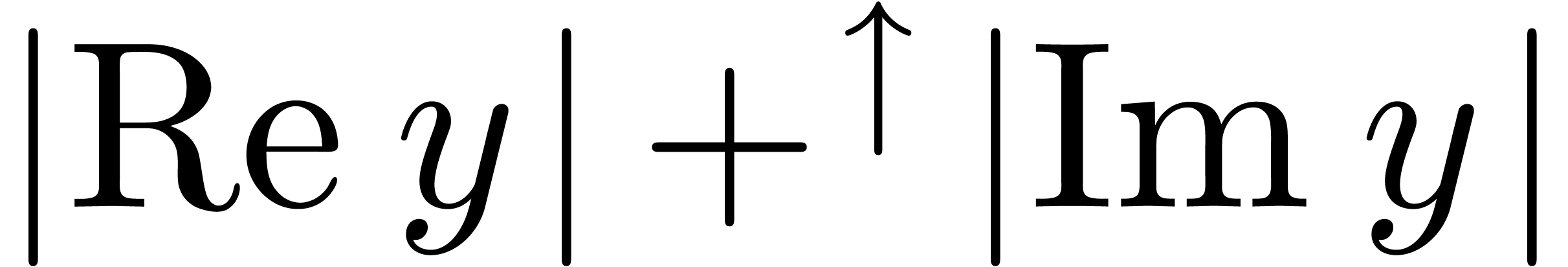

These differences get more important when dealing with complex numbers

or compound types, such as matrices. For instance, when using interval

arithmetic for reliable computations with complex numbers, it is natural

to enclose complex numbers by rectangles  ,

where

,

where  and

and  are intervals.

For instance, the complex number

are intervals.

For instance, the complex number  may be enclosed

by

may be enclosed

by

for some small number  . When

using ball arithmetic, we would rather enclose

. When

using ball arithmetic, we would rather enclose  by

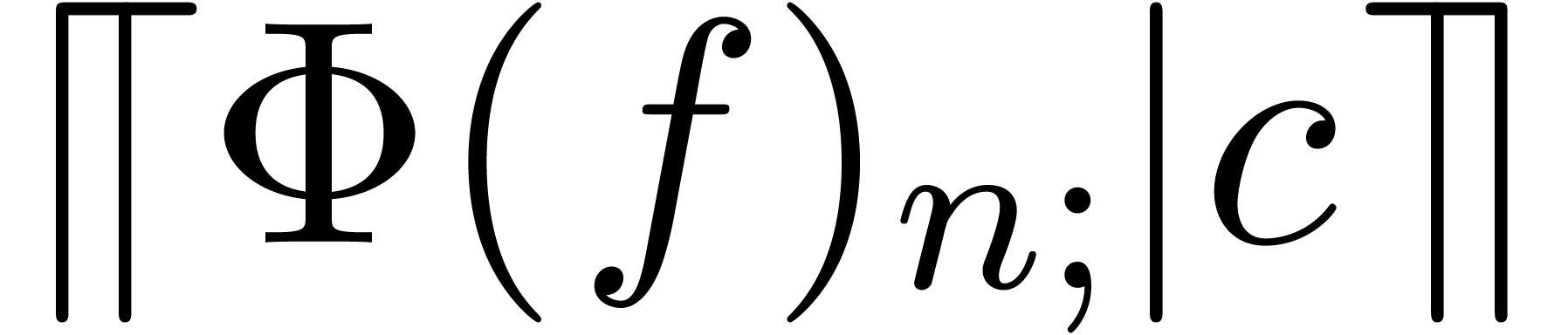

by

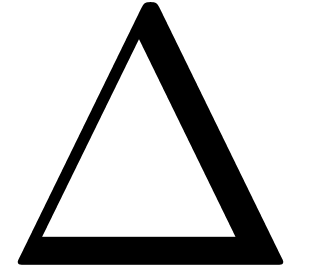

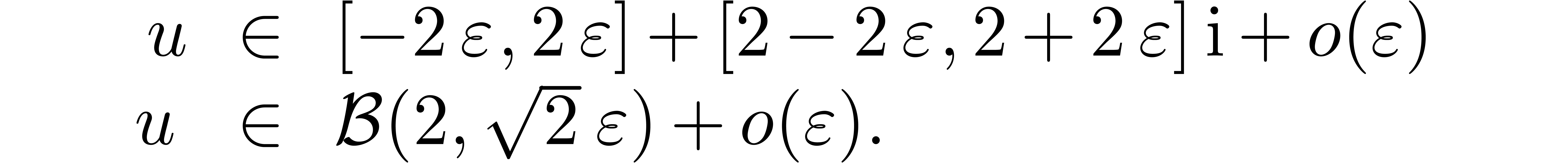

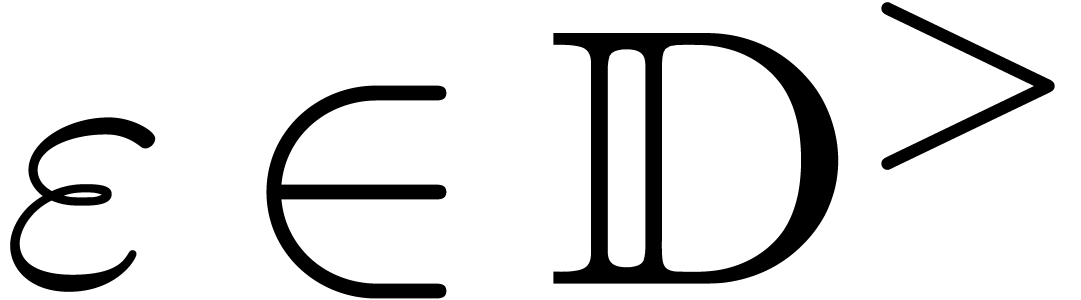

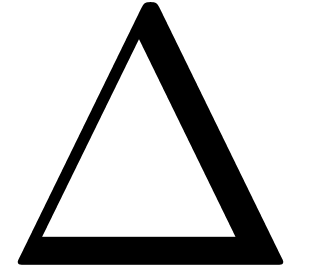

Now consider the computation of  .

The computed rectangular and ball enclosures are given by

.

The computed rectangular and ball enclosures are given by

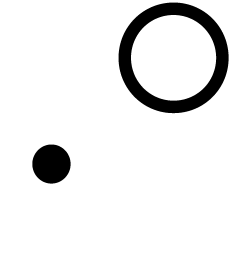

Consequently, ball arithmetic yields a much better bound, which is due

to the fact that multiplication by  turns the

rectangular enclosure by

turns the

rectangular enclosure by  degrees, leading to an

overestimation by a factor

degrees, leading to an

overestimation by a factor  when re-enclosing the

result by a horizontal rectangle (see figure 1).

when re-enclosing the

result by a horizontal rectangle (see figure 1).

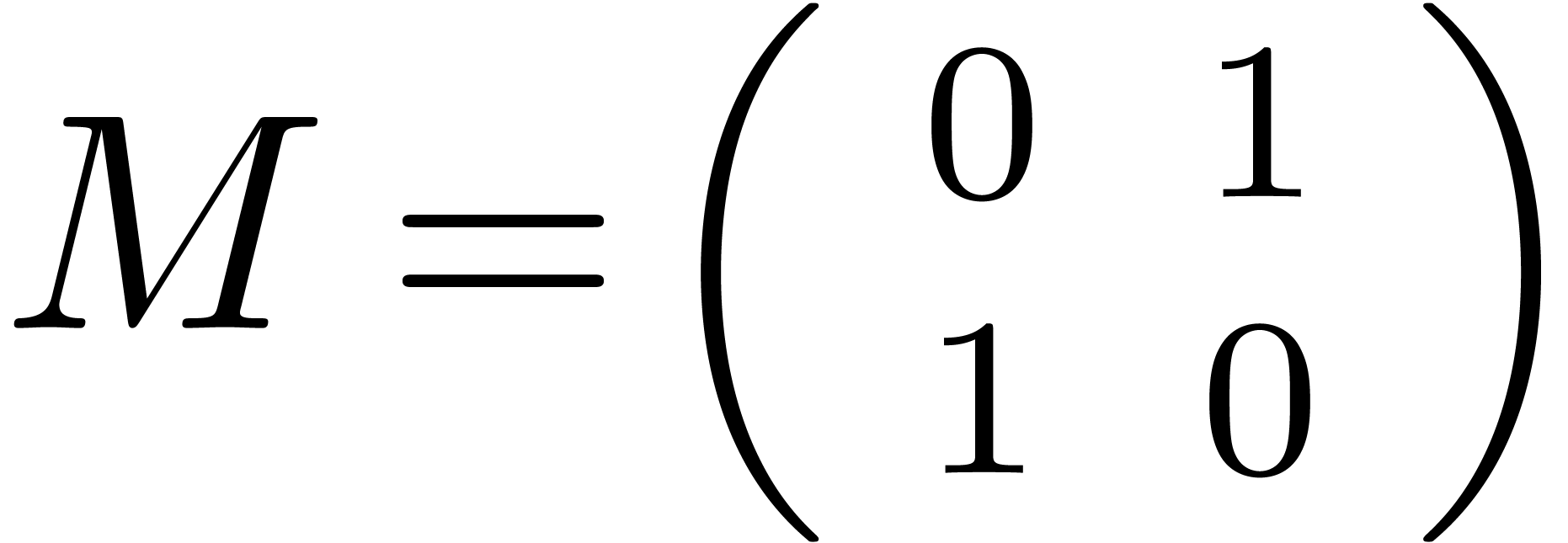

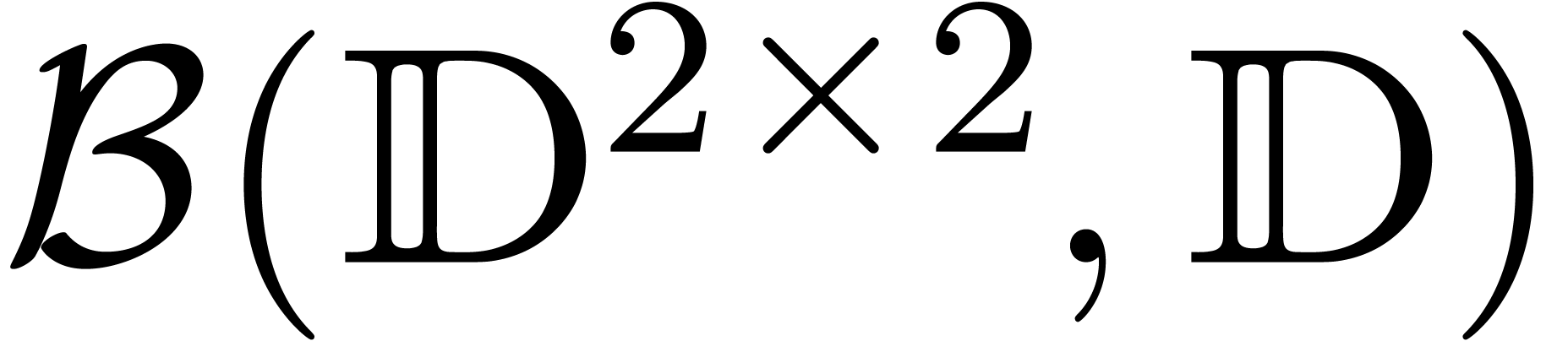

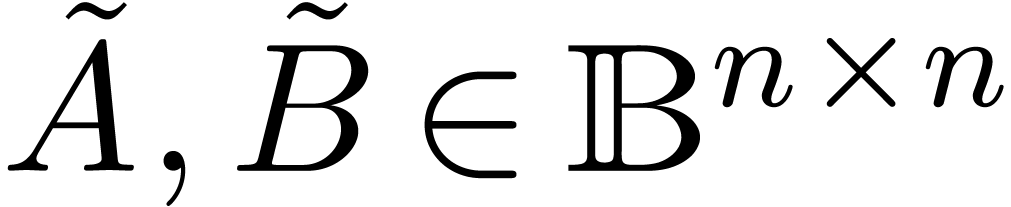

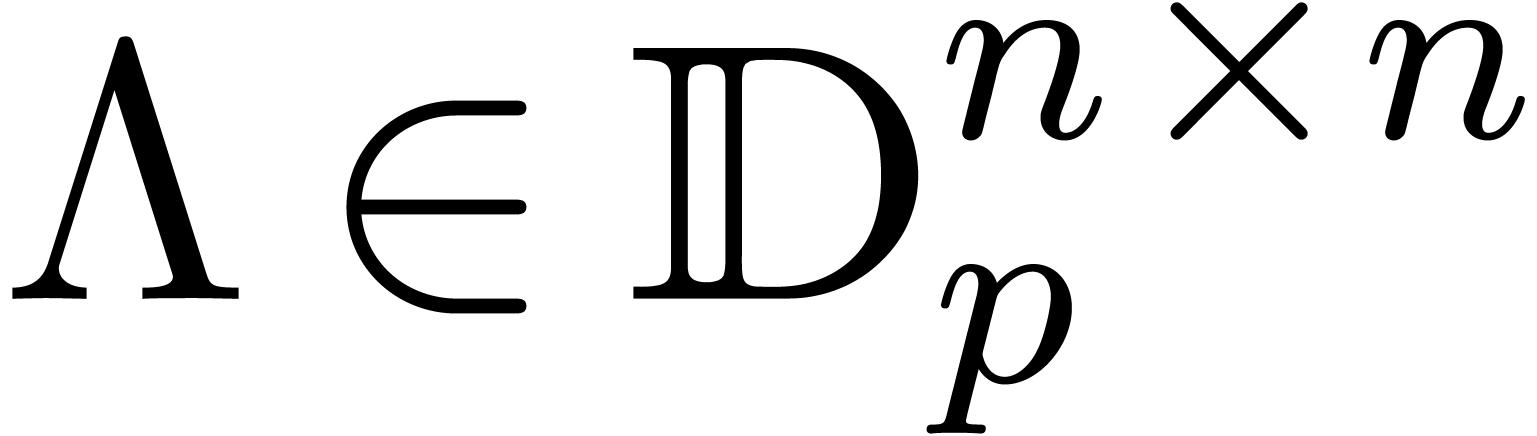

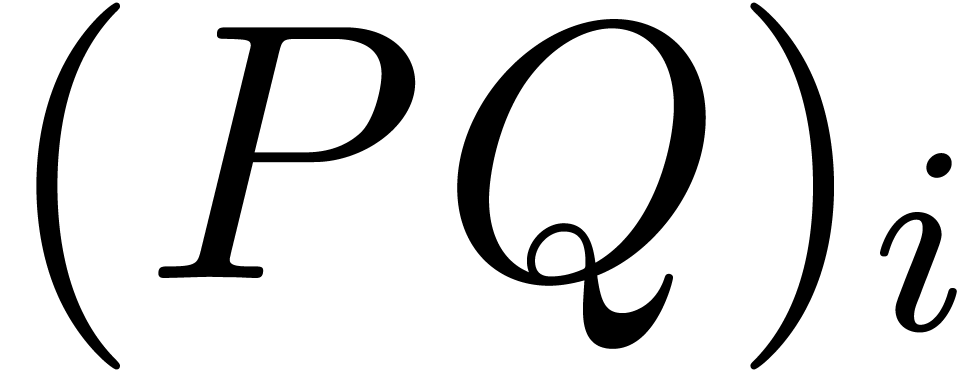

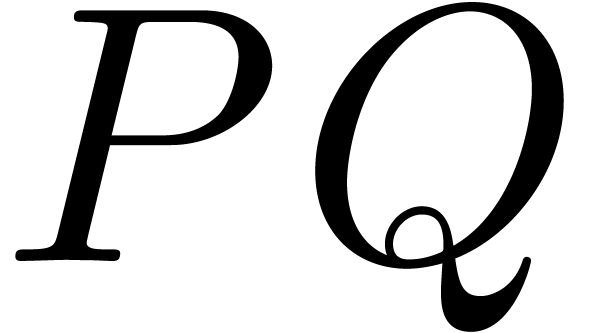

This is one of the simplest instances of the wrapping effect [Moo66]. For similar reasons, one may prefer to compute the square of the matrix

|

(18) |

in  rather than

rather than  ,

while using the operator norm (3) for matrices. This

technique highlights another advantage of ball arithmetic: we have a

certain amount of flexibility regarding the choice of the radius type.

By choosing a simple radius type, we do not only reduce the wrapping

effect, but also improve the efficiency: when computing with complex

balls in

,

while using the operator norm (3) for matrices. This

technique highlights another advantage of ball arithmetic: we have a

certain amount of flexibility regarding the choice of the radius type.

By choosing a simple radius type, we do not only reduce the wrapping

effect, but also improve the efficiency: when computing with complex

balls in  , we only need to

bound one radius instead of two for every basic operation. More

precisely, we replace (8) and (9) by

, we only need to

bound one radius instead of two for every basic operation. More

precisely, we replace (8) and (9) by

On the negative side, generalized norms may be harder to compute, even

though a rough bound often suffices (e.g. replacing  by

by  in the above formula). In

the case of matricial balls, a more serious problem concerns

overestimation when the matrix contains entries of different orders of

magnitude. In such badly conditioned situations, it is better to work in

in the above formula). In

the case of matricial balls, a more serious problem concerns

overestimation when the matrix contains entries of different orders of

magnitude. In such badly conditioned situations, it is better to work in

rather than

rather than  .

Another more algorithmic technique for reducing the wrapping effect will

be discussed in sections 6.4 and 7.5 below.

.

Another more algorithmic technique for reducing the wrapping effect will

be discussed in sections 6.4 and 7.5 below.

Even though personal taste in the choice between balls and intervals cannot be discussed, the elegance of the chosen approach for a particular application can partially be measured in terms of the human time which is needed to establish the necessary error bounds.

We have already seen that interval enclosures are particularly easy to

obtain for monotonic real functions. Another typical algorithm where

interval arithmetic is more convenient is the resolution of a system of

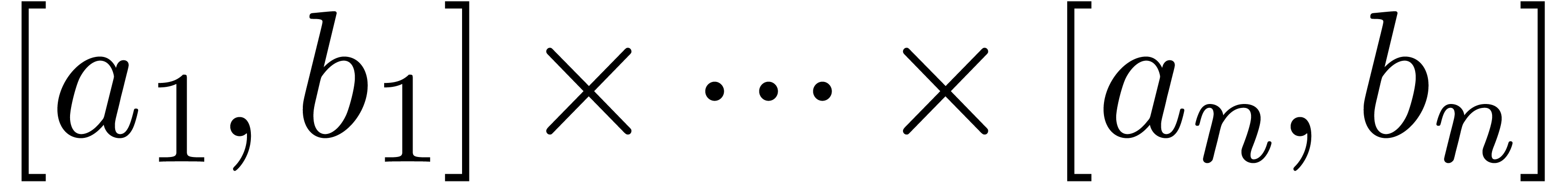

equations using dichotomy. Indeed, it is easier to cut an  -dimensional block

-dimensional block  into

into

smaller blocks than to perform a similar

operation on balls.

smaller blocks than to perform a similar

operation on balls.

For most other applications, ball representations are more convenient. Indeed, error bounds are usually obtained by perturbation methods. For any mathematical proof where error bounds are explicitly computed in this way, it is generally easy to derive a certified algorithm based on ball arithmetic. We will see several illustrations of this principle in the sections below.

Implementations of interval arithmetic often rely on floating point arithmetic with correct rounding. One may question how good correct rounding actually is in order to achieve reliability. One major benefit is that it provides a simple and elegant way to specify what a mathematical function precisely does at limited precision. In particular, it allows numerical programs to execute exactly in the same way on many different hardware architectures.

On the other hand, correct rounding does have a certain cost. Although

the cost is limited for field operations and elementary functions [Mul06], the cost increases for more complex special functions,

especially if one seeks for numerical methods with a constant operation

count. For arbitrary computable functions on  , correct rounding even becomes impossible. Another

disadvantage is that correct rounding is lost as soon we perform more

than one operation: in general,

, correct rounding even becomes impossible. Another

disadvantage is that correct rounding is lost as soon we perform more

than one operation: in general,  and

and  do not coincide.

do not coincide.

In the case of ball arithmetic, we only require an upper bound for the error, not necessarily the best possible representable one. In principle, this is just as reliable and usually more economic. Now in an ideal world, the development of numerical codes goes hand in hand with the systematic development of routines which compute the corresponding error bounds. In such a world, correct rounding becomes superfluous, since correctness is no longer ensured at the micro-level of hardware available functions, but rather at the top-level, via mathematical proof.

Computable analysis provides a good high-level framework for the

automatic and certified resolution of analytic problems. The user states

the problem in a formal language and specifies a required absolute or

relative precision. The program should return a numerical result which

is certified to meet the requirement on the precision. A simple example

is to compute an  -approximation

for

-approximation

for  , for a given

, for a given  .

.

The

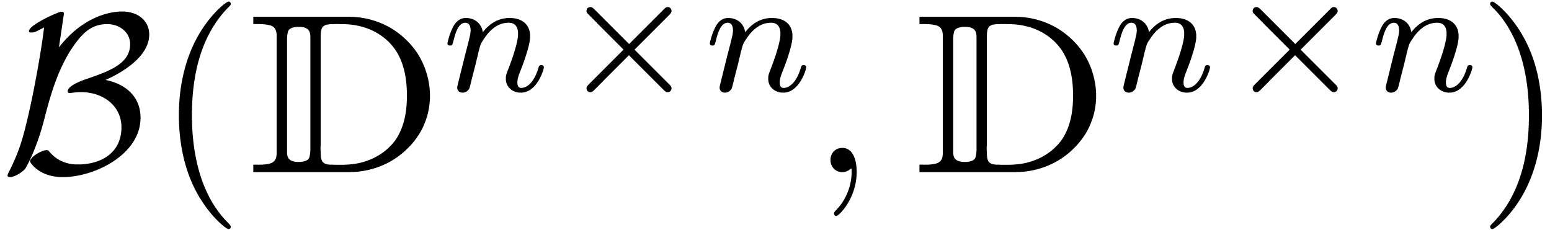

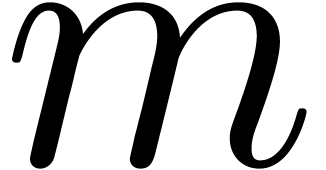

In order to address this efficiency problem, the  computable real matrices. The

numerical hierarchy turns out to be a convenient framework for more

complex problems as well, such as the analytic continuation of the

solution to a dynamical system. As a matter of fact, the framework

incites the developer to restate the original problem at the different

levels, which is generally a good starting point for designing an

efficient solution.

computable real matrices. The

numerical hierarchy turns out to be a convenient framework for more

complex problems as well, such as the analytic continuation of the

solution to a dynamical system. As a matter of fact, the framework

incites the developer to restate the original problem at the different

levels, which is generally a good starting point for designing an

efficient solution.

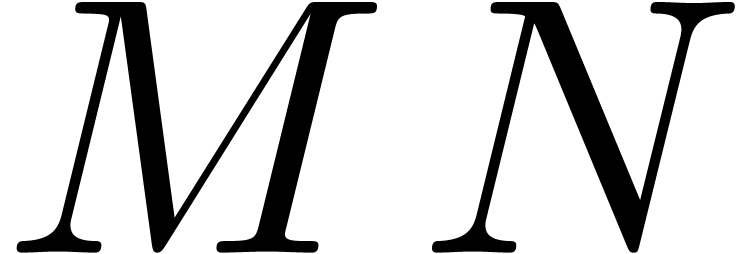

matrices

matrices  and an absolute

error

and an absolute

error  . The aim is to

compute

. The aim is to

compute  -approximations for

all entries of the product

-approximations for

all entries of the product  .

.

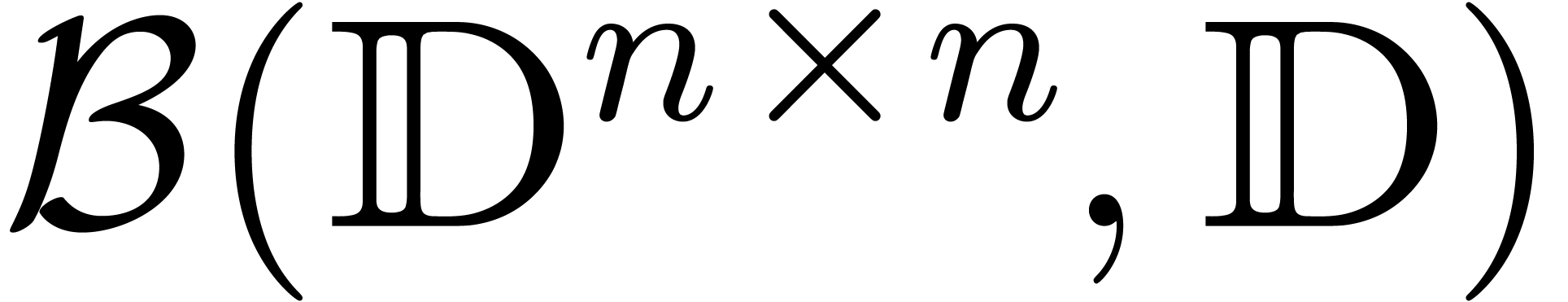

The simplest approach to this problem is to use a generic formal matrix

multiplication algorithm, using the fact that  is

an effective ring. However, as stressed above, instances of

is

an effective ring. However, as stressed above, instances of  are really functions, so that ring operations in

are really functions, so that ring operations in  are quite expensive. Instead, when working at precision

are quite expensive. Instead, when working at precision

, we may first compute ball

approximations for all the entries of

, we may first compute ball

approximations for all the entries of  and

and  , after which we form two

approximation matrices

, after which we form two

approximation matrices  . The

multiplication problem then reduces to the problem of multiplying two

matrices in

. The

multiplication problem then reduces to the problem of multiplying two

matrices in  . This approach

has the advantage that

. This approach

has the advantage that  operations on

“functions” in

operations on

“functions” in  are replaced by a

single multiplication in

are replaced by a

single multiplication in  .

.

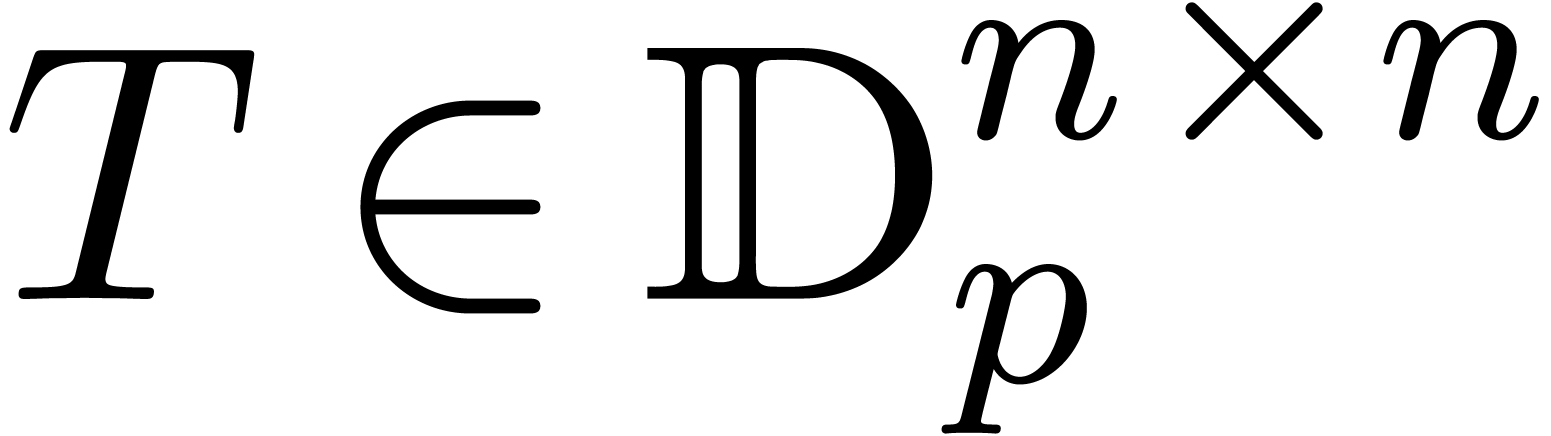

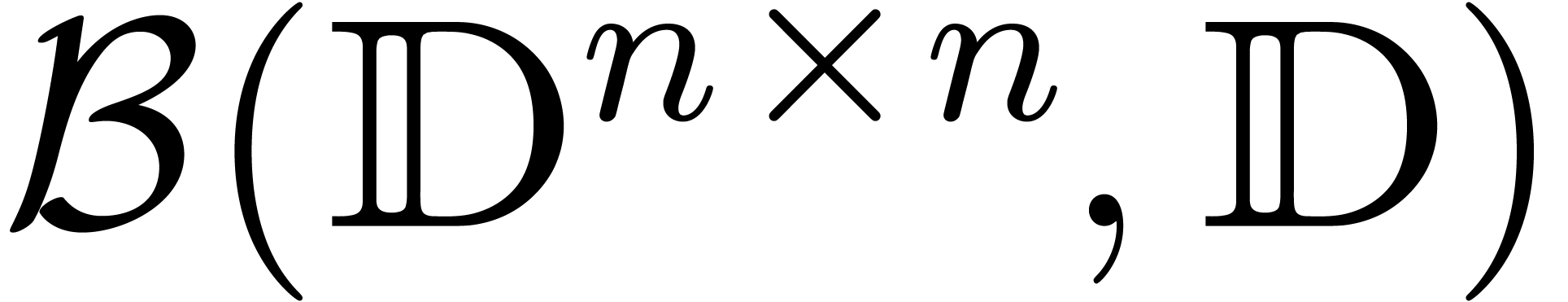

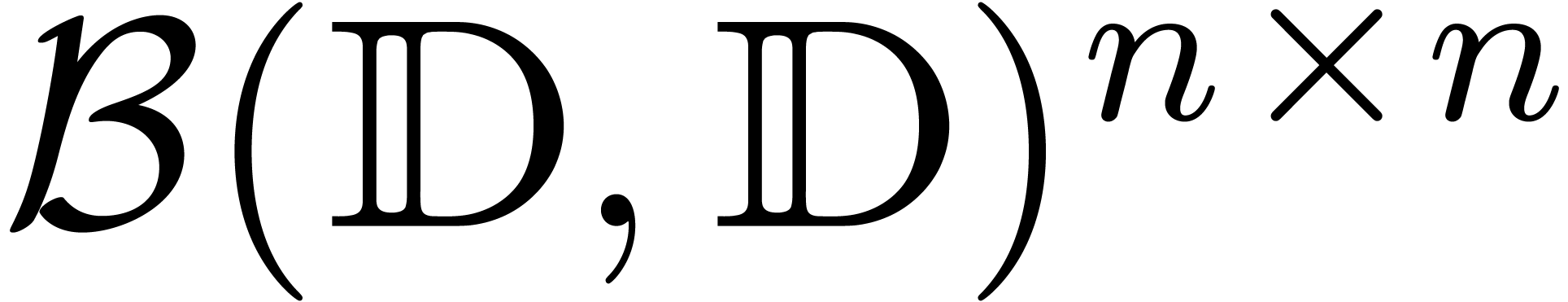

When operating on non-scalar objects, such as matrices of balls, it is

often efficient to rewrite the objects first. For instance, when working

in fixed point arithmetic (i.e. all entries of  and

and  admit similar orders of

magnitude), a matrix in

admit similar orders of

magnitude), a matrix in  may also be considered

as a matricial ball in

may also be considered

as a matricial ball in  for the matrix

norm

for the matrix

norm  . We multiply two such

balls using the formula

. We multiply two such

balls using the formula

which is a corrected version of (7), taking into account

that the matrix norm  only satisfies

only satisfies  . Whereas the naive multiplication in

. Whereas the naive multiplication in  involves

involves  multiplications in

multiplications in  , the new method reduces this

number to

, the new method reduces this

number to  : one

“expensive” multiplication in

: one

“expensive” multiplication in  and

two scalar multiplications of matrices by numbers. This type of tricks

will be discussed in more detail in section 6.4 below.

and

two scalar multiplications of matrices by numbers. This type of tricks

will be discussed in more detail in section 6.4 below.

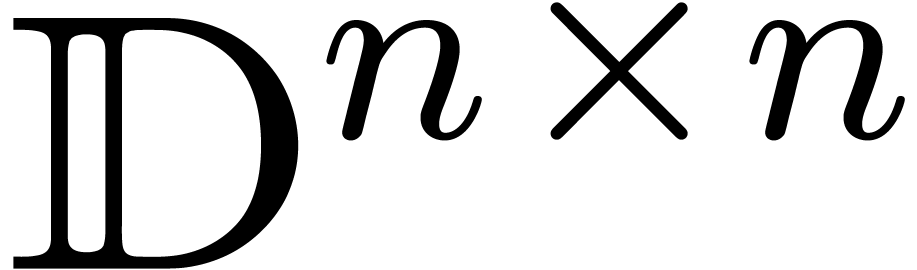

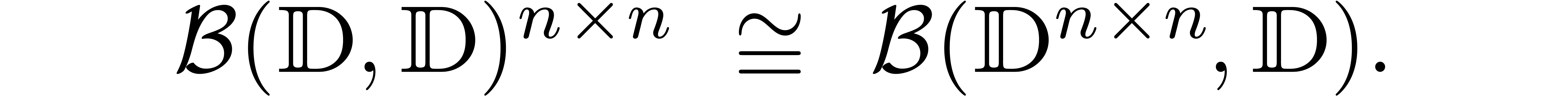

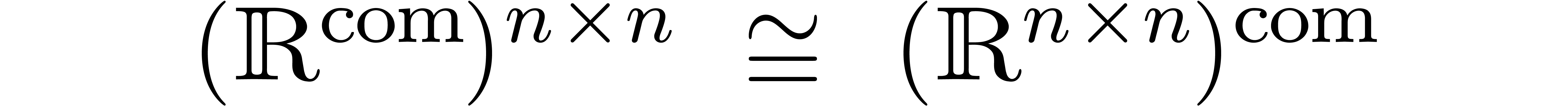

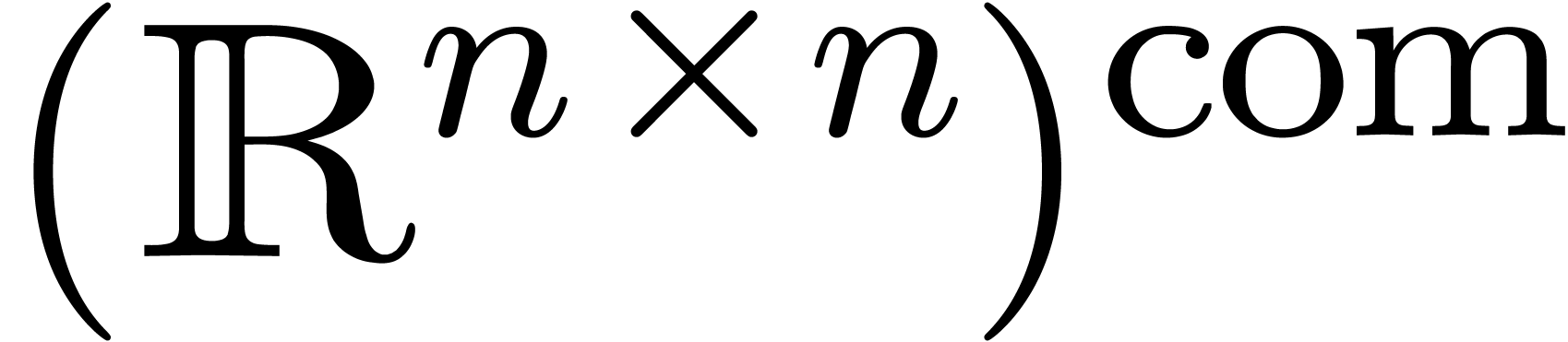

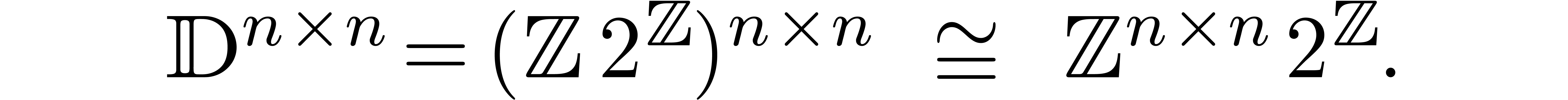

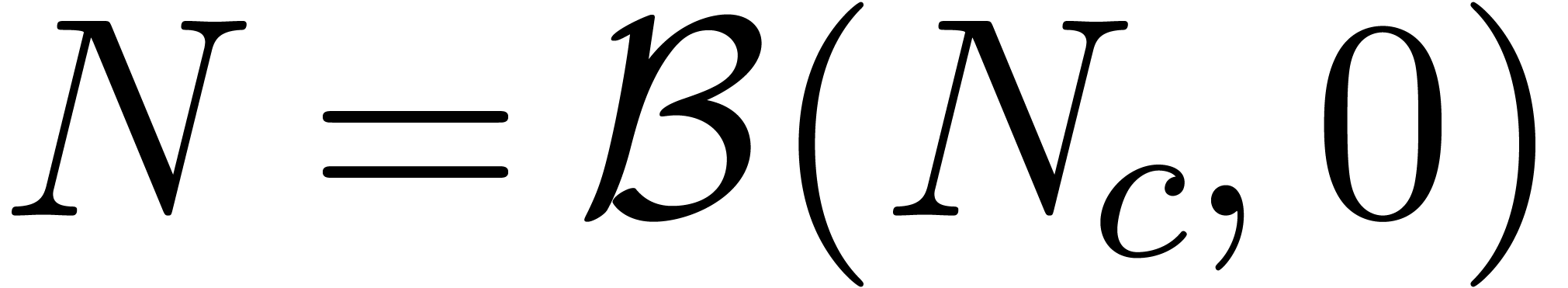

Essentially, the new method is based on the isomorphism

A similar isomorphism exists on the mathematical level:

As a variant, we directly may use the latter isomorphism at the top

level, after which ball approximations of elements of  are already in

are already in  .

.

. In single or

double precision, we may usually rely on highly efficient numerical

libraries (

. In single or

double precision, we may usually rely on highly efficient numerical

libraries ( and

and  .

.

When working with matrices with multiple precision floating point entries, this overhead can be greatly reduced by putting the entries under a common exponent using the isomorphism

This reduces the matrix multiplication problem for floating point numbers to a purely arithmetic problem. Of course, this method becomes numerically unstable when the exponents differ wildly; in that case, row preconditioning of the first multiplicand and column preconditioning of the second multiplicand usually helps.

matrices in

matrices in  . The efficient

resolution of this problem for all possible

. The efficient

resolution of this problem for all possible  and integer bit lengths

and integer bit lengths  is again non-trivial.

is again non-trivial.

Indeed, libraries such as  , which is far from optimal for

large values of

, which is far from optimal for

large values of  .

.

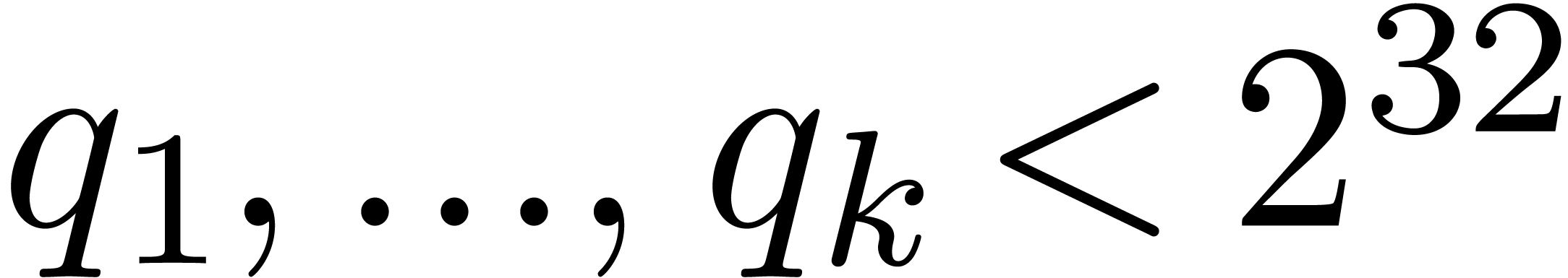

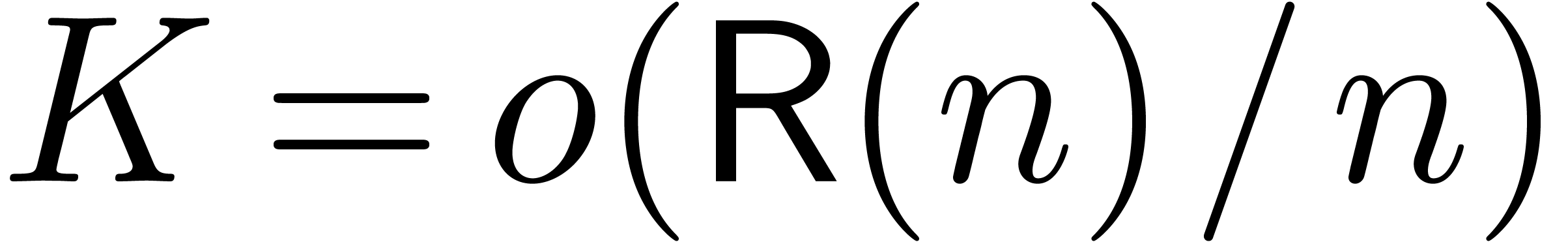

Indeed, for large  , it is

better to use multi-modular methods. For instance, choosing sufficiently

many small primes

, it is

better to use multi-modular methods. For instance, choosing sufficiently

many small primes  (or

(or  ) with

) with  ,

the multiplication of the two integer matrices can be reduced to

,

the multiplication of the two integer matrices can be reduced to  multiplications of matrices in

multiplications of matrices in  . Recall that a

. Recall that a  -bit

number can be reduced modulo all the

-bit

number can be reduced modulo all the  and

reconstructed from these reductions in time

and

reconstructed from these reductions in time  . The improved matrix multiplication algorithm

therefore admits a time complexity

. The improved matrix multiplication algorithm

therefore admits a time complexity  and has been

implemented in

and has been

implemented in  when

when  and

and  are of the same order of magnitude.

are of the same order of magnitude.

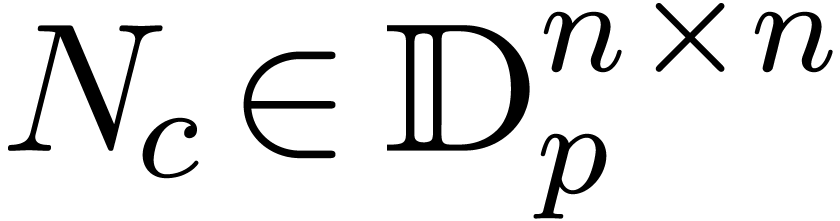

In this section, we will start the study of ball arithmetic for non

numeric types, such as matrices. We will examine the complexity of

common operations, such as matrix multiplication, linear system solving,

and the computation of eigenvectors. Ideally, the certified variants of

these operations are only slightly more expensive than the non certified

versions. As we will see, this objective can sometimes be met indeed. In

general however, there is a trade-off between the efficiency of the

certification and its quality, i.e. the sharpness of the

obtained bound. As we will see, the overhead of bound computations also

tends to diminish for increasing bit precisions  .

.

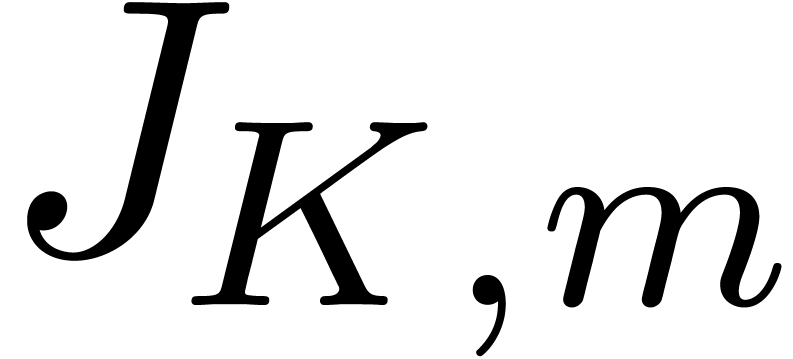

Let us first consider the multiplication of two  double precision matrices

double precision matrices

using the naive symbolic formula

using the naive symbolic formula

Although this strategy is efficient for very small  , it has the disadvantage that we cannot profit

from high performance

, it has the disadvantage that we cannot profit

from high performance

and

and  as

balls with matricial radii

as

balls with matricial radii

we may compute  using

using

where  is given by

is given by  .

A similar approach was first proposed in [Rum99a]. Notice

that the additional term

.

A similar approach was first proposed in [Rum99a]. Notice

that the additional term  replaces

replaces  . This extra product is really required: the

computation of

. This extra product is really required: the

computation of  may involve cancellations, which

prevent a bound for the rounding errors to be read off from the

end-result. The formula (20) does assume that the

underlying

may involve cancellations, which

prevent a bound for the rounding errors to be read off from the

end-result. The formula (20) does assume that the

underlying  using the naive formula (19) and correct rounding, with the

possibility to compute the sums in any suitable order. Less naive

schemes, such as Strassen multiplication [Str69], may give

rise to additional rounding errors.

using the naive formula (19) and correct rounding, with the

possibility to compute the sums in any suitable order. Less naive

schemes, such as Strassen multiplication [Str69], may give

rise to additional rounding errors.

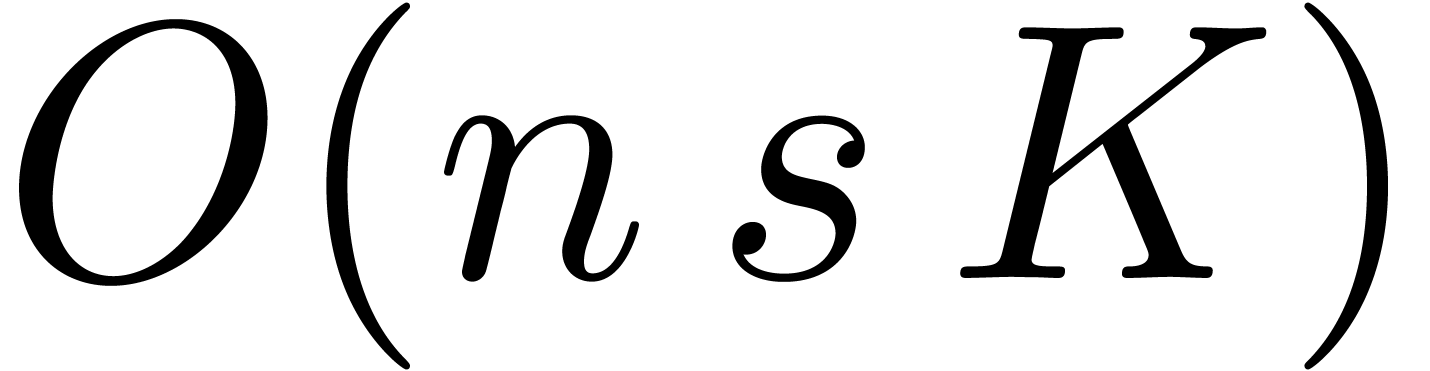

matrix products in

matrix products in  . If

. If  and

and

are well-conditioned, then the following

formula may be used instead:

are well-conditioned, then the following

formula may be used instead:

where  and

and  stand for the

stand for the

-th row of

-th row of  and the

and the  -th column of

-th column of  . Since the

. Since the  norms can be computed using only

norms can be computed using only  operations, the

cost of the bound computation is asymptotically negligible with respect

to the

operations, the

cost of the bound computation is asymptotically negligible with respect

to the  cost of the multiplication

cost of the multiplication  .

.

matrices, chances increase that

matrices, chances increase that  or

or  gets badly conditioned,

in which case the quality of the error bound (22)

decreases. Nevertheless, we may use a compromise between the naive and

the fast strategies: fix a not too small constant

gets badly conditioned,

in which case the quality of the error bound (22)

decreases. Nevertheless, we may use a compromise between the naive and

the fast strategies: fix a not too small constant  , such as

, such as  ,

and rewrite

,

and rewrite  and

and  as

as

matrices whose entries are

matrices whose entries are  matrices. Now multiply

matrices. Now multiply  and

and  using the revisited naive strategy, but use the fast strategy on each

of the

using the revisited naive strategy, but use the fast strategy on each

of the  block coefficients. Being able to

choose

block coefficients. Being able to

choose  , the user has an

explicit control over the trade-off between the efficiency of matrix

multiplication and the quality of the computed bounds.

, the user has an

explicit control over the trade-off between the efficiency of matrix

multiplication and the quality of the computed bounds.

As an additional, but important observation, we notice that the user often has the means to perform an “a posteri quality check”. Starting with a fast but low quality bound computation, we may then check whether the computed bound is suitable. If not, then we recompute a better bound using a more expensive algorithm.

. Computing

. Computing  using (20) requires one expensive multiplication in

using (20) requires one expensive multiplication in  and three cheap multiplications in

and three cheap multiplications in  . For large

. For large  ,

the bound computation therefore induces no noticeable overhead.

,

the bound computation therefore induces no noticeable overhead.

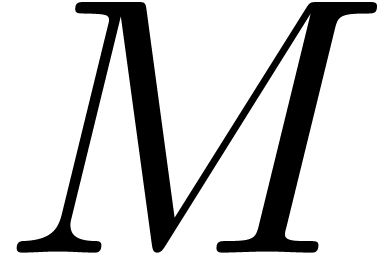

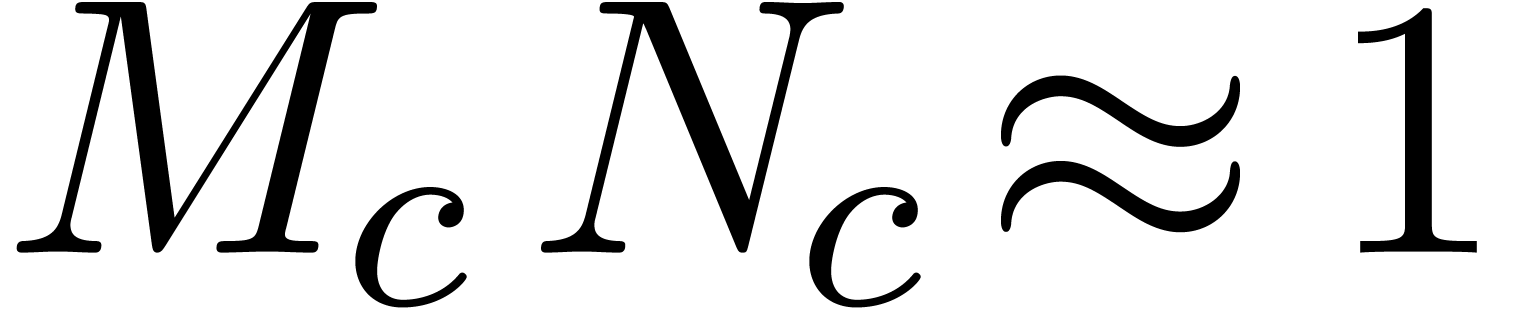

Assume that we want to invert an  ball matrix

ball matrix

. This a typical situation

where the naive application of a symbolic algorithm (such as gaussian

elimination or LR-decomposition) may lead to overestimation. An

efficient and high quality method for the inversion of

. This a typical situation

where the naive application of a symbolic algorithm (such as gaussian

elimination or LR-decomposition) may lead to overestimation. An

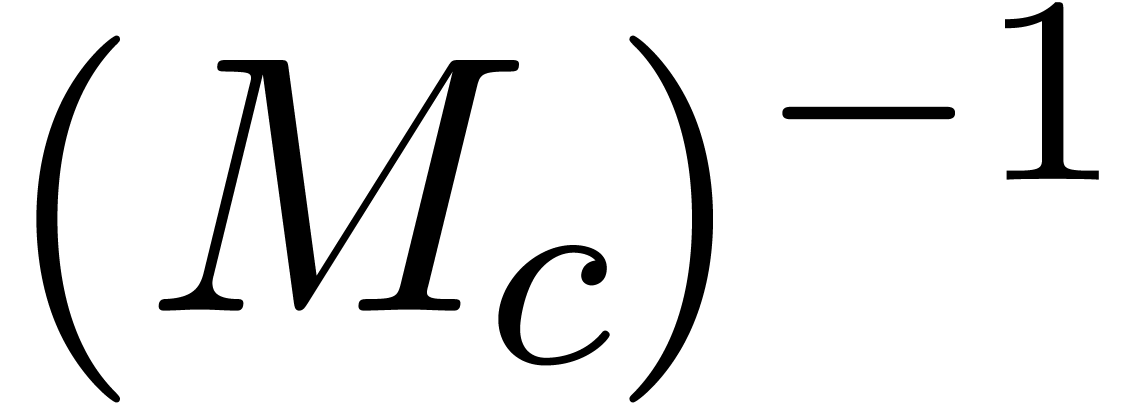

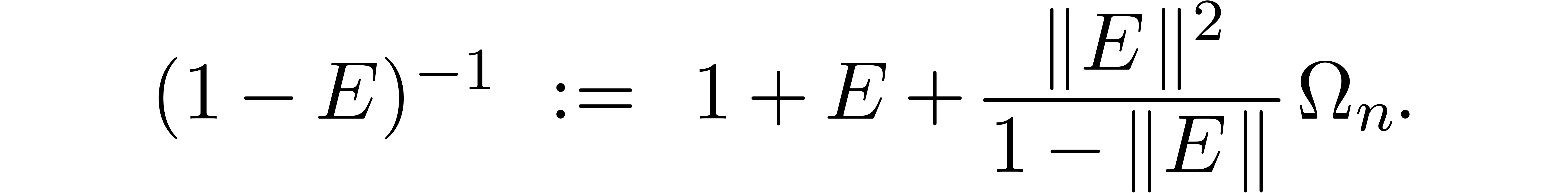

efficient and high quality method for the inversion of  is called Hansen's method [HS67, Moo66]. The

main idea is to first compute the inverse of

is called Hansen's method [HS67, Moo66]. The

main idea is to first compute the inverse of  using a standard numerical algorithm. Only at the end, we estimate the

error using a perturbative analysis. The same technique can be used for

many other problems.

using a standard numerical algorithm. Only at the end, we estimate the

error using a perturbative analysis. The same technique can be used for

many other problems.

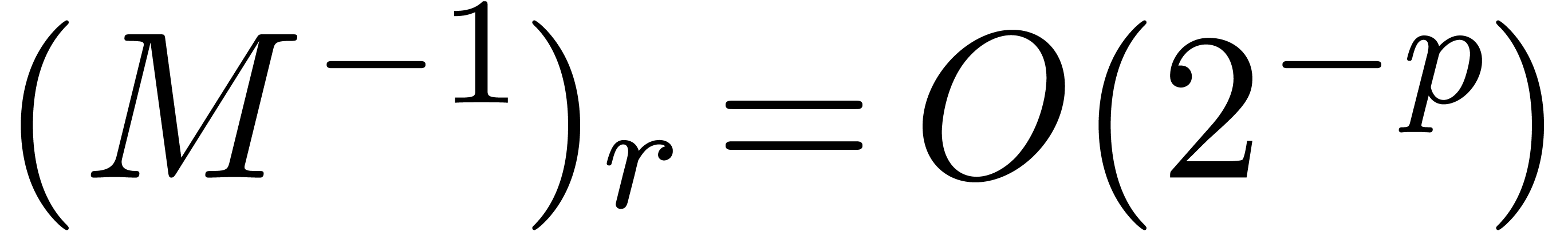

More precisely, we start by computing an approximation  of

of  . Putting

. Putting  , we next compute the product

, we next compute the product  using ball arithmetic. This should yield a matrix of the form

using ball arithmetic. This should yield a matrix of the form  , where

, where  is

small. If

is

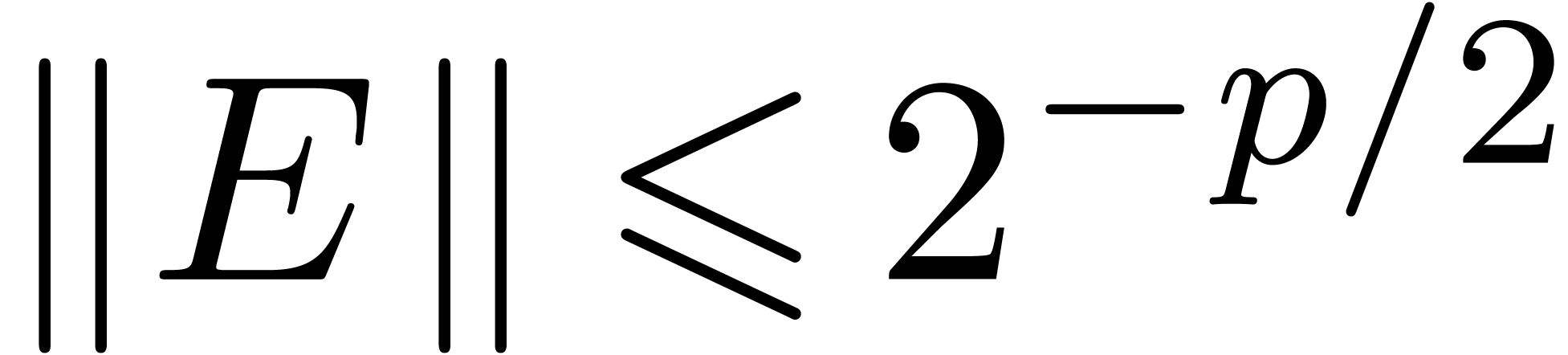

small. If  is indeed small, say

is indeed small, say  , then

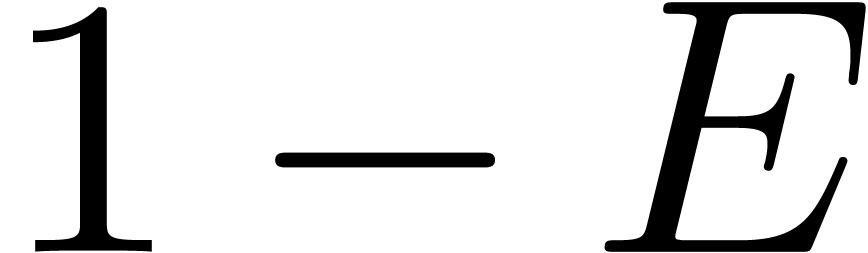

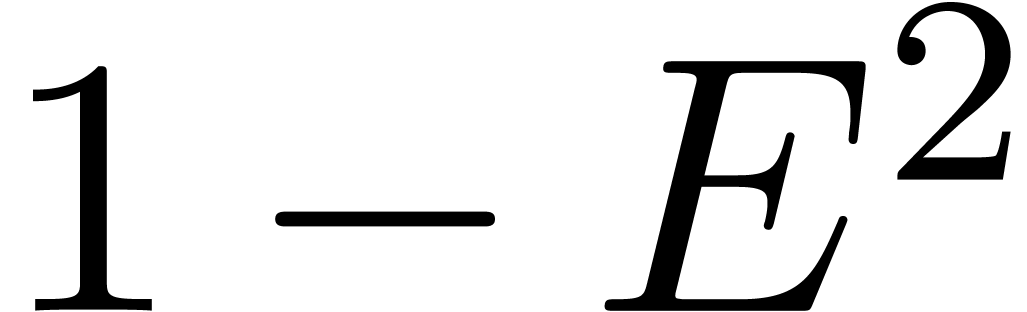

, then

Denoting by  the

the  matrix

whose entries are all

matrix

whose entries are all  , we

may thus take

, we

may thus take

Having inverted  , we may

finally take

, we may

finally take  . Notice that

the computation of

. Notice that

the computation of  can be quite expensive. It is

therefore recommended to replace the check

can be quite expensive. It is

therefore recommended to replace the check  by a

cheaper check, such as

by a

cheaper check, such as  .

.

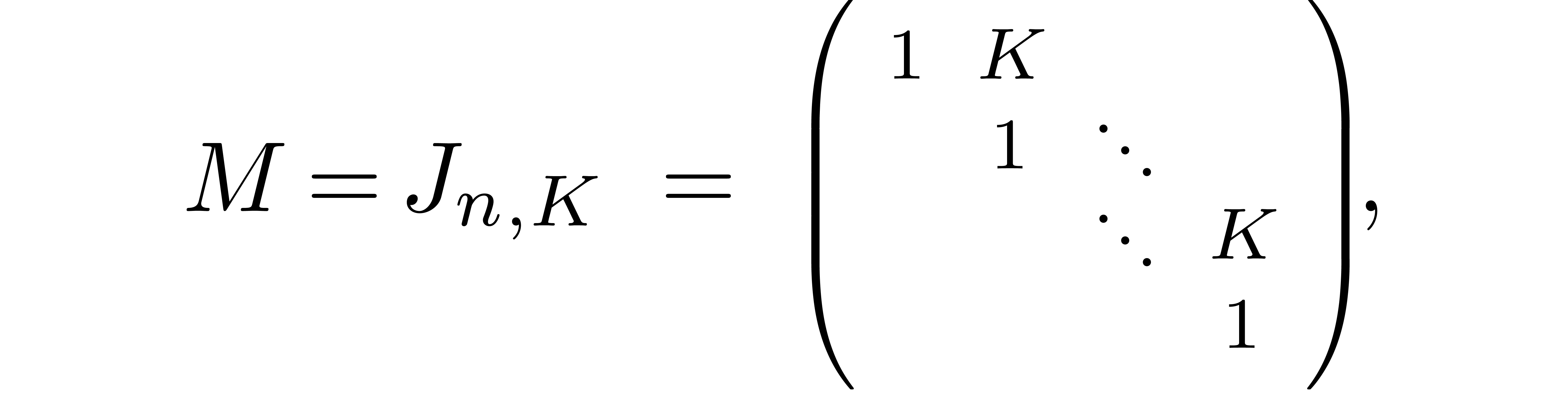

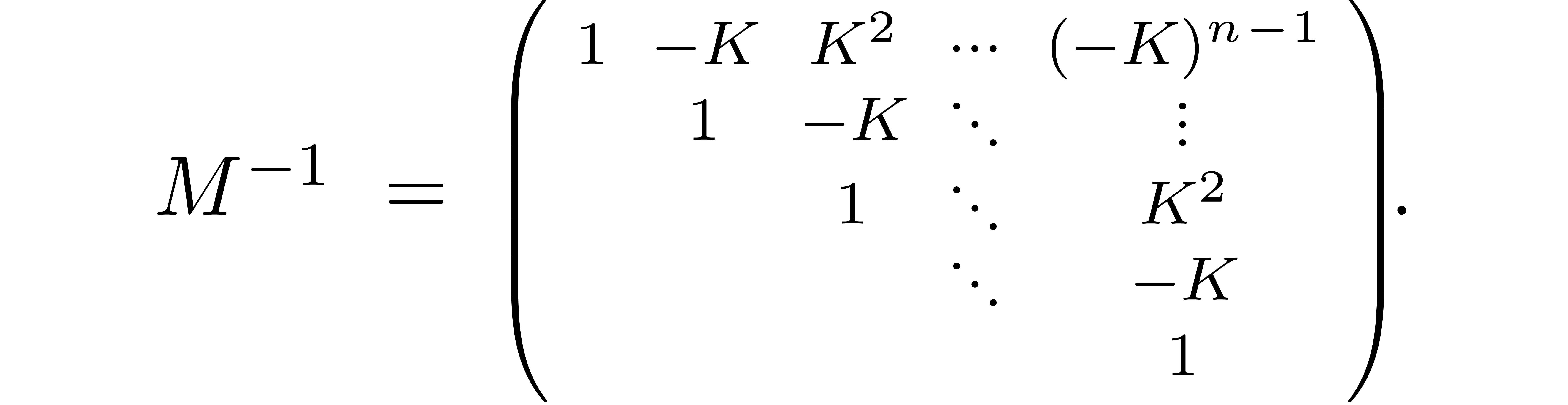

Unfortunately, the matrix  is not always small,

even if

is not always small,

even if  is nicely invertible. For instance,

starting with a matrix

is nicely invertible. For instance,

starting with a matrix  of the form

of the form

with  large, we have

large, we have

Computing  using bit precision

using bit precision  , this typically leads to

, this typically leads to

In such cases, we rather reduce the problem of inverting  to the problem of inverting

to the problem of inverting  ,

using the formula

,

using the formula

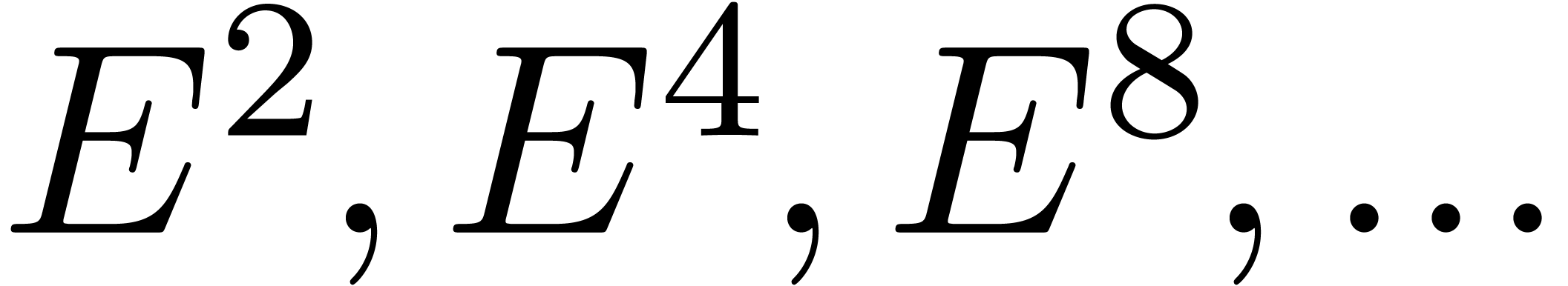

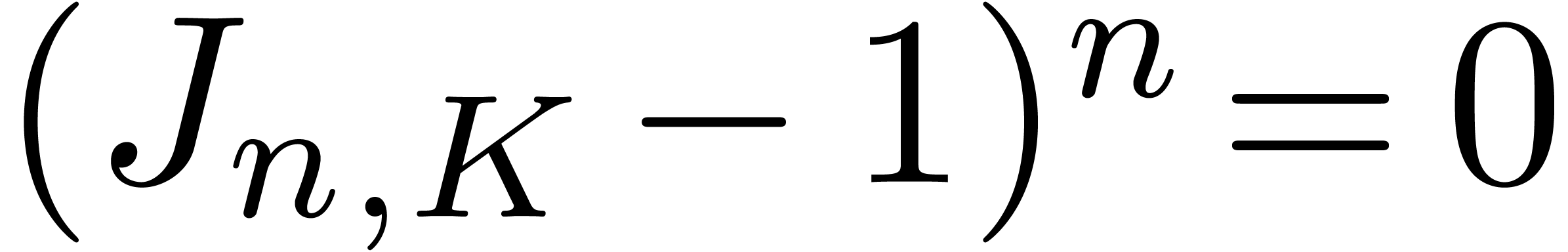

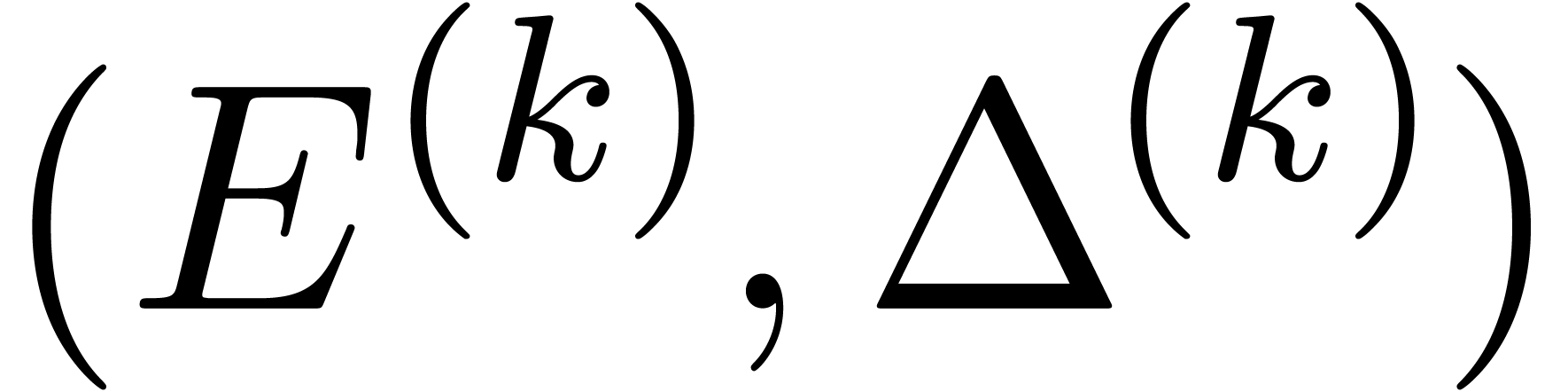

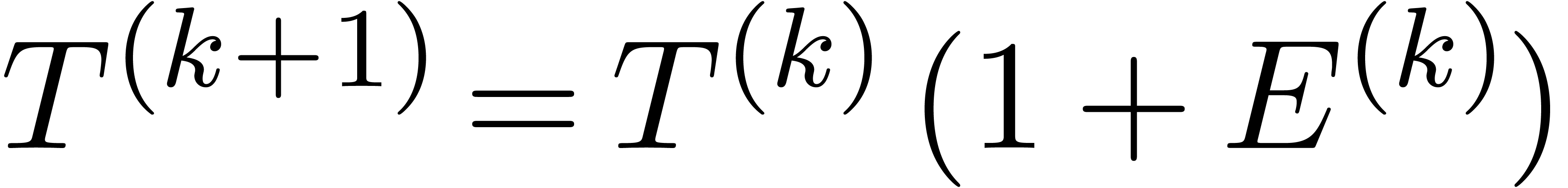

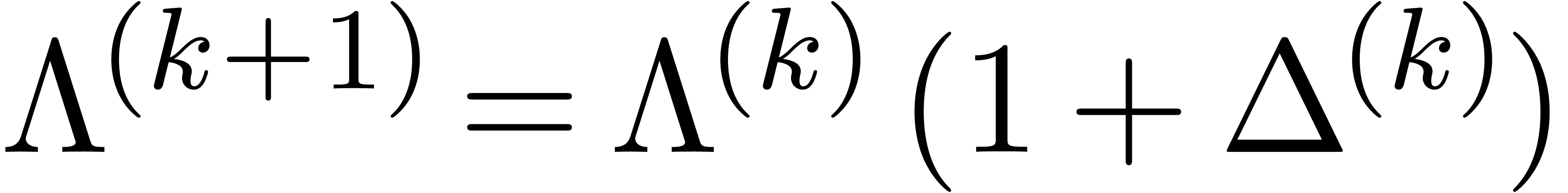

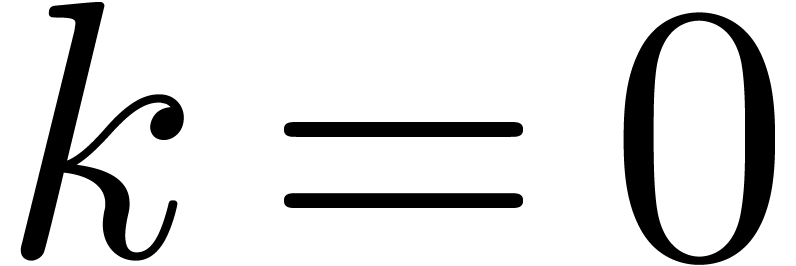

More precisely, applying this trick recursively, we compute  until

until  becomes small (say

becomes small (say  ) and use the formula

) and use the formula

We may always stop the algorithm for  ,

since

,

since  . We may also stop the

algorithm whenever

. We may also stop the

algorithm whenever  and

and  , since

, since  usually fails to be

invertible in that case.

usually fails to be

invertible in that case.

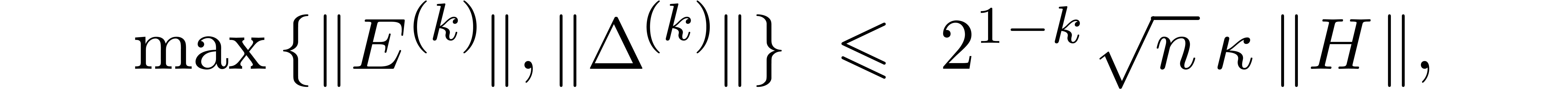

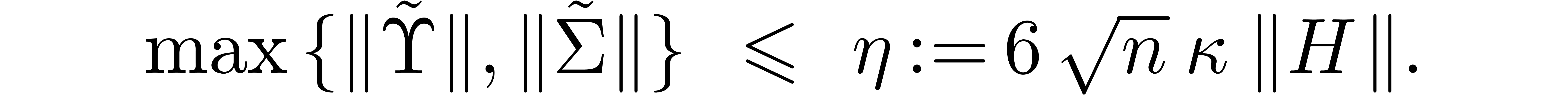

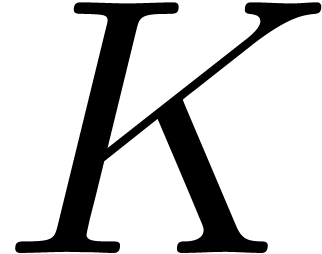

In general, the above algorithm requires  ball

ball

matrix multiplications, where

matrix multiplications, where  is the size of the largest block of the kind

is the size of the largest block of the kind  in

the Jordan decomposition of

in

the Jordan decomposition of  .

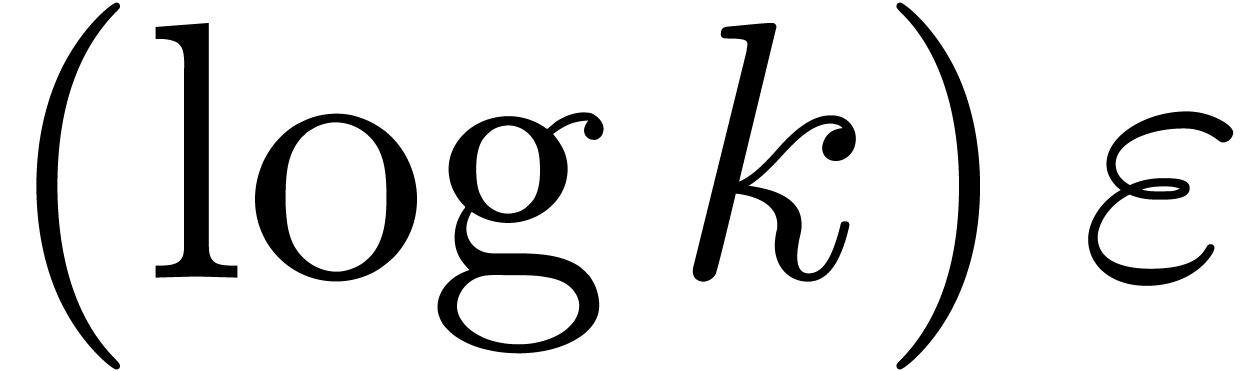

The improved quality therefore requires an additional

.

The improved quality therefore requires an additional  overhead in the worst case. Nevertheless, for a fixed matrix

overhead in the worst case. Nevertheless, for a fixed matrix  and

and  , the norm

, the norm

will eventually become sufficiently small

(i.e.

will eventually become sufficiently small

(i.e.  ) for (23) to apply. Again, the complexity thus tends to improve for

high precisions. An interesting question is whether we can avoid the

ball matrix multiplication

) for (23) to apply. Again, the complexity thus tends to improve for

high precisions. An interesting question is whether we can avoid the

ball matrix multiplication  altogether, if

altogether, if  gets really large. Theoretically, this can be

achieved by using a symbolic algorithm such as Gaussian elimination or

LR-decomposition using ball arithmetic. Indeed, even though the

overestimation is important, it does not depend on the precision

gets really large. Theoretically, this can be

achieved by using a symbolic algorithm such as Gaussian elimination or

LR-decomposition using ball arithmetic. Indeed, even though the

overestimation is important, it does not depend on the precision  . Therefore, we have

. Therefore, we have  and the cost of the bound computations becomes negligible

for large

and the cost of the bound computations becomes negligible

for large  .

.

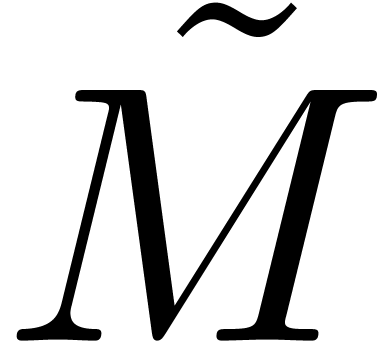

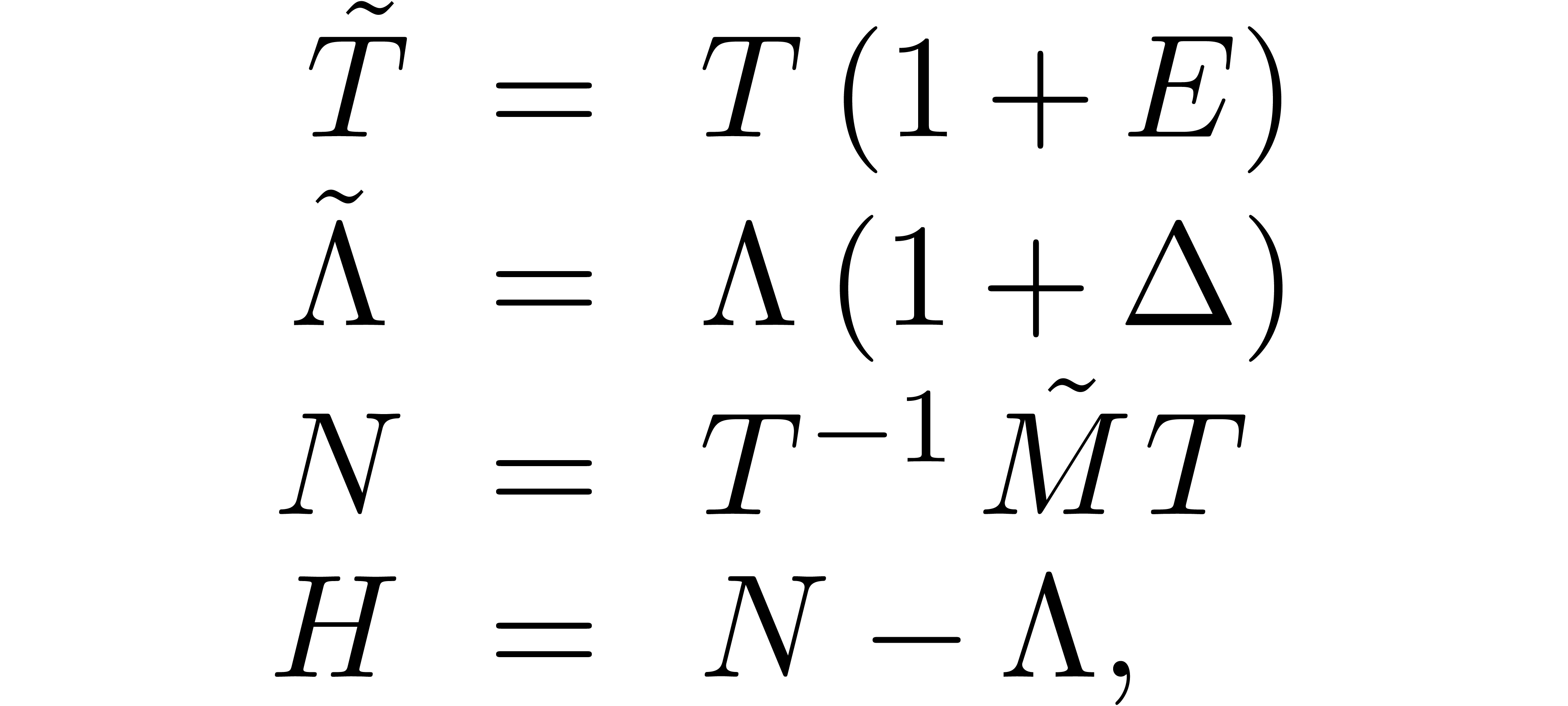

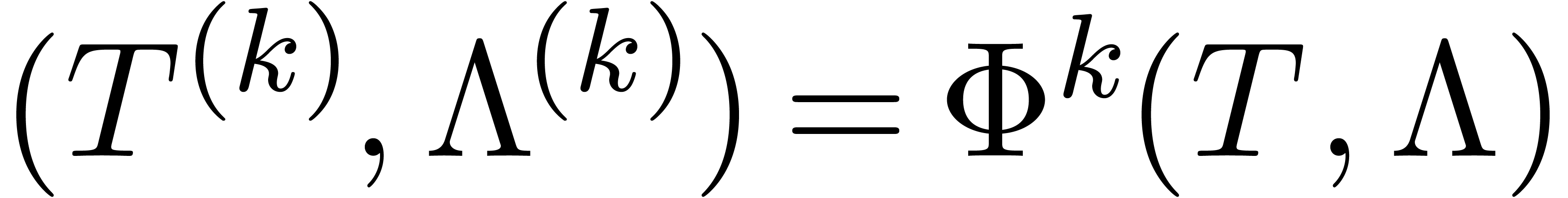

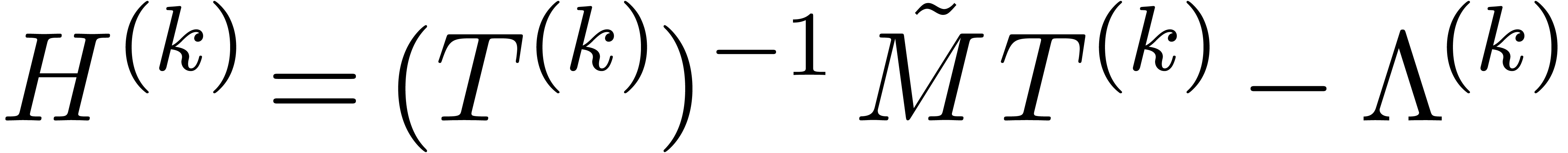

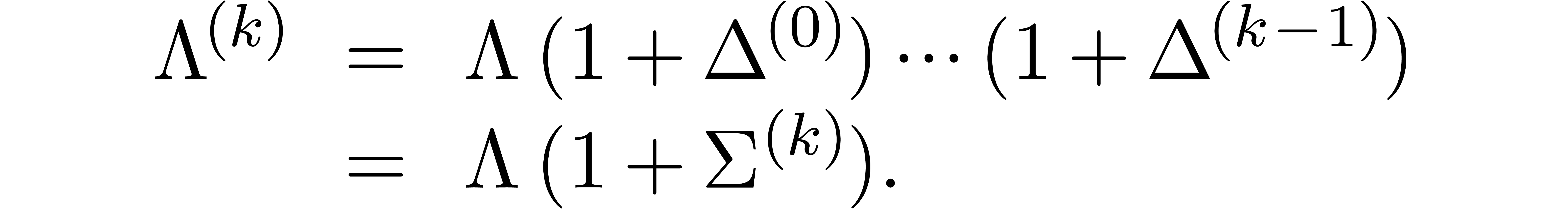

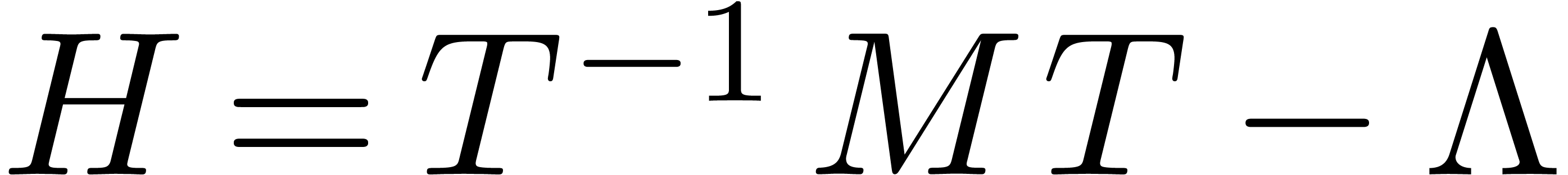

Let us now consider the problem of computing the eigenvectors of a ball

matrix  , assuming for

simplicity that the corresponding eigenvalues are non zero and pairwise

distinct. We adopt a similar strategy as in the case of matrix

inversion. Using a standard numerical method, we first compute a

diagonal matrix

, assuming for

simplicity that the corresponding eigenvalues are non zero and pairwise

distinct. We adopt a similar strategy as in the case of matrix

inversion. Using a standard numerical method, we first compute a

diagonal matrix  and an invertible transformation

matrix

and an invertible transformation

matrix  , such that

, such that

The main challenge is to find reliable error bounds for this

computation. Again, we will use the technique of small perturbations.

The equation (26) being a bit more subtle than  , this requires more work than in the case of

matrix inversion. In fact, we start by giving a numerical method for the

iterative improvement of an approximate solution. A variant of the same

method will then provide the required bounds. Again, this idea can often

be used for other problems. The results of this section are work in

common with

, this requires more work than in the case of

matrix inversion. In fact, we start by giving a numerical method for the

iterative improvement of an approximate solution. A variant of the same

method will then provide the required bounds. Again, this idea can often

be used for other problems. The results of this section are work in

common with

Given  close to

close to  ,

we have to find

,

we have to find  close to

close to  and

and  close to

close to  ,

such that

,

such that

Putting

this yields the equation

Expansion with respect to  yields

yields

Forgetting about the non-linear terms, the equation

admits a unique solution

Setting

it follows that

Setting  ,

,  and

and  , the relation (28)

also implies

, the relation (28)

also implies

Under the additional condition  ,

it follows that

,

it follows that

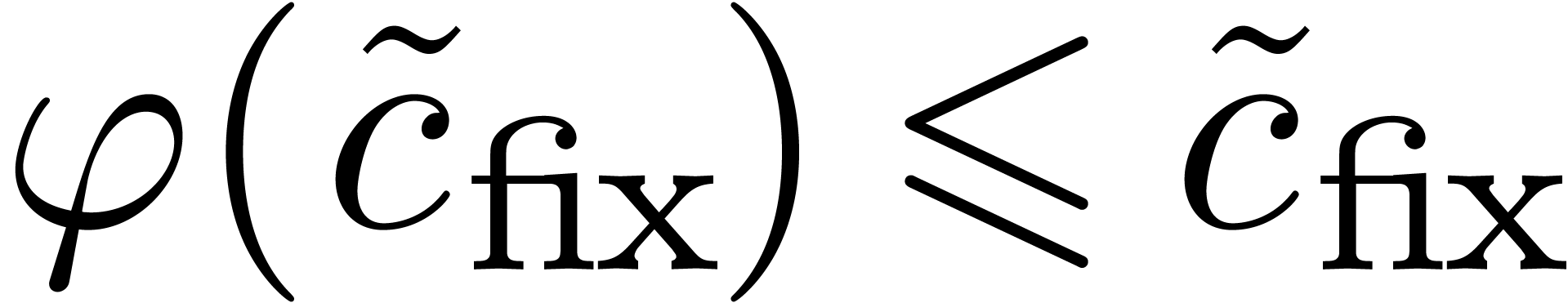

For sufficiently small  , we

claim that iteration of the mapping

, we

claim that iteration of the mapping  converges to

a solution of (27).

converges to

a solution of (27).

Let us denote  ,

,  and let

and let  be such that

be such that  and

and  . Assume

that

. Assume

that

and let us prove by induction over  that

that

This is clear for  , so assume

that

, so assume

that  . In a similar way as

(30), we have

. In a similar way as

(30), we have

Using the induction hypotheses and  ,

it follows that

,

it follows that

which proves (32). Now let  be such

that

be such

that

From (34), it follows that

On the one hand, this implies (33). On the other hand, it follows that

whence (29) generalizes to (34). This

completes the induction and the linear convergence of  to zero. In fact, the combination of (34) and (35)

show that we even have quadratic convergence.

to zero. In fact, the combination of (34) and (35)

show that we even have quadratic convergence.

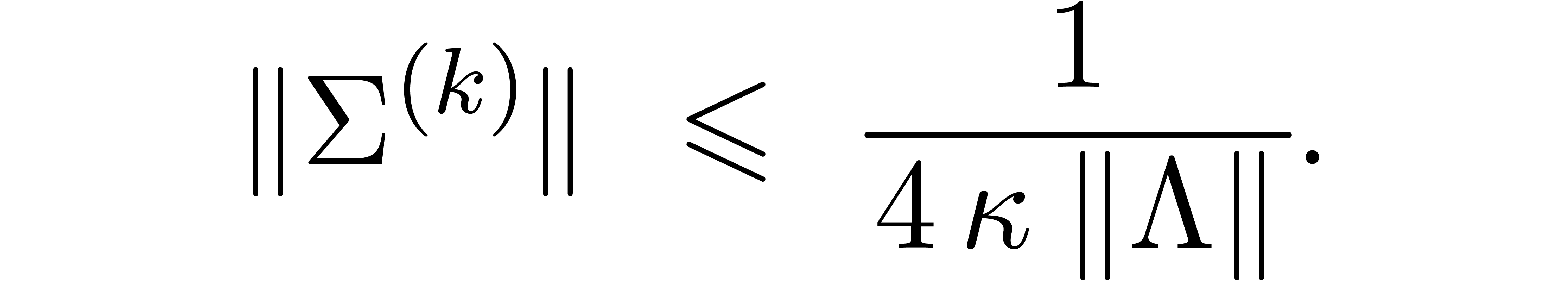

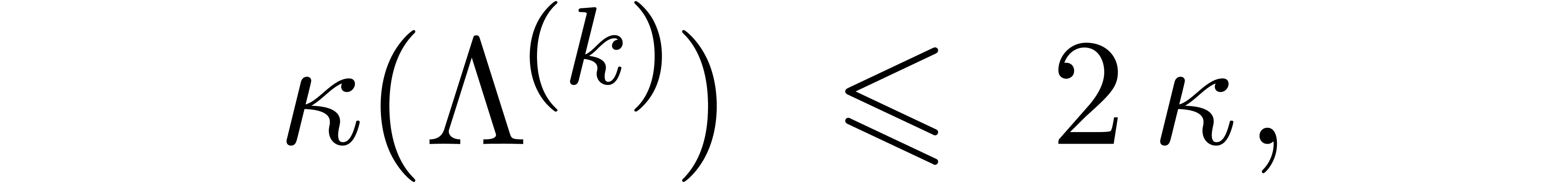

Let us now return to the original bound computation problem. We start

with the computation of  using ball arithmetic.

If the condition (31) is met (using the most pessimistic

rounding), the preceding discussion shows that for every

using ball arithmetic.

If the condition (31) is met (using the most pessimistic

rounding), the preceding discussion shows that for every  (in the sense that

(in the sense that  for all

for all  ), the equation (27)

admits a solution of the form

), the equation (27)

admits a solution of the form

with

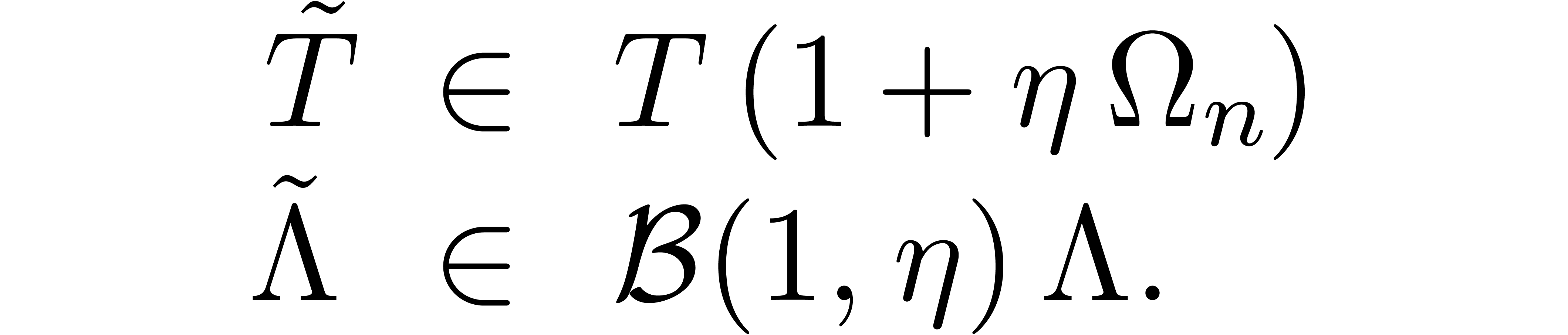

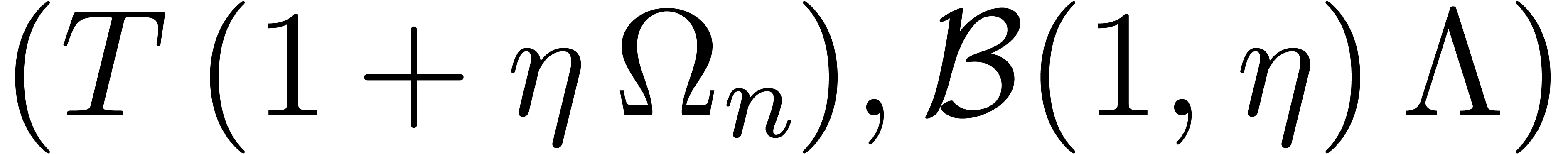

for all  . It follows that

. It follows that

We conclude that

We may thus return  as the solution to the

original eigenproblem associated to the ball matrix

as the solution to the

original eigenproblem associated to the ball matrix  .

.

The reliable bound computation essentially reduces to the computation of

three matrix products and one matrix inversion. At low precisions, the

numerical computation of the eigenvectors is far more expensive in

practice, so the overhead of the bound computation is essentially

negligible. At higher precisions  ,

the iteration

,

the iteration  actually provides an efficient way

to double the precision of a numerical solution to the eigenproblem at

precision

actually provides an efficient way

to double the precision of a numerical solution to the eigenproblem at

precision  . In particular,

even if the condition (31) is not met initially, then it

usually can be enforced after a few iterations and modulo a slight

increase of the precision. For fixed

. In particular,

even if the condition (31) is not met initially, then it

usually can be enforced after a few iterations and modulo a slight

increase of the precision. For fixed  and

and  , it also follows that the

numerical eigenproblem essentially reduces to a few matrix products. The

certification of the end-result requires a few more products, which

induces a constant overhead. By performing a more refined error

analysis, it is probably possible to make the cost certification

negligible, although we did not investigate this issue in detail.

, it also follows that the

numerical eigenproblem essentially reduces to a few matrix products. The

certification of the end-result requires a few more products, which

induces a constant overhead. By performing a more refined error

analysis, it is probably possible to make the cost certification

negligible, although we did not investigate this issue in detail.

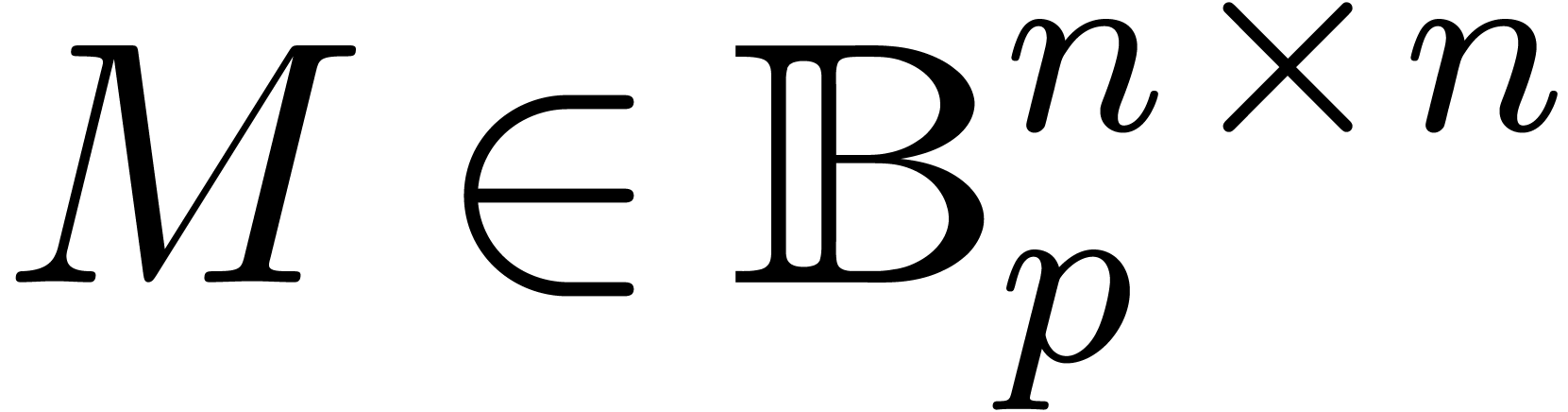

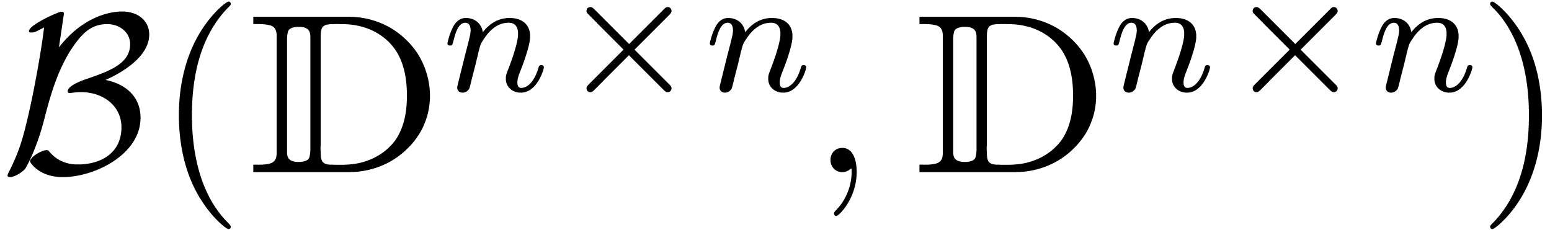

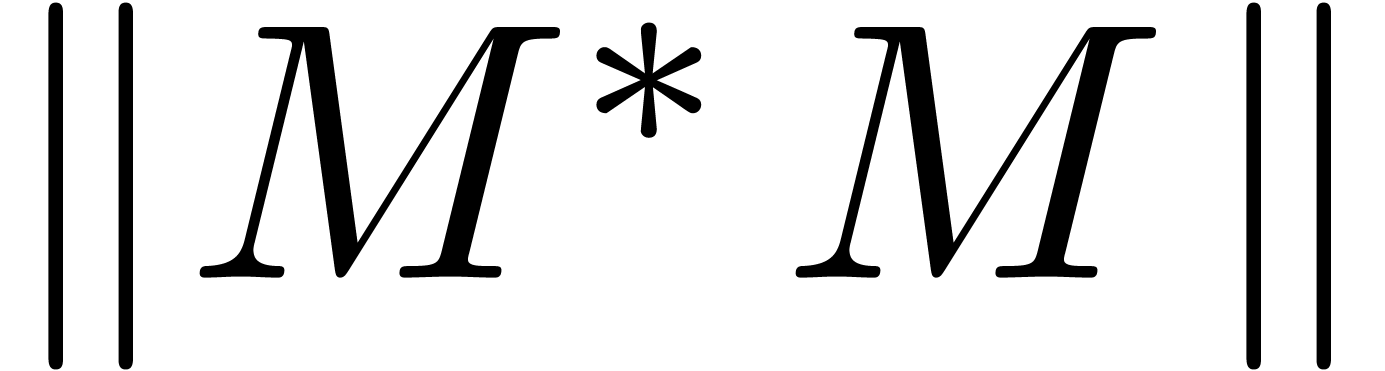

In section 4.3, we have already seen that matricial balls

in  often provide higher quality error bounds

than ball matrices in

often provide higher quality error bounds

than ball matrices in  or essentially equivalent

variants in

or essentially equivalent

variants in  . However, ball

arithmetic in

. However, ball

arithmetic in  relies on the possibility to

quickly compute a sharp upper bound for the operator norm

relies on the possibility to

quickly compute a sharp upper bound for the operator norm  of a matrix

of a matrix  .

Unfortunately, we do not know of any really efficient algorithm for

doing this.

.

Unfortunately, we do not know of any really efficient algorithm for

doing this.

One expensive approach is to compute a reliable singular value

decomposition of  , since

, since  coincides with the largest singular value.

Unfortunately, this usually boils down to the resolution of the

eigenproblem associated to

coincides with the largest singular value.

Unfortunately, this usually boils down to the resolution of the

eigenproblem associated to  ,

with a few possible improvements (for instance, the dependency of the

singular values on the coefficients of

,

with a few possible improvements (for instance, the dependency of the

singular values on the coefficients of  is less

violent than in the case of a general eigenproblem).

is less

violent than in the case of a general eigenproblem).

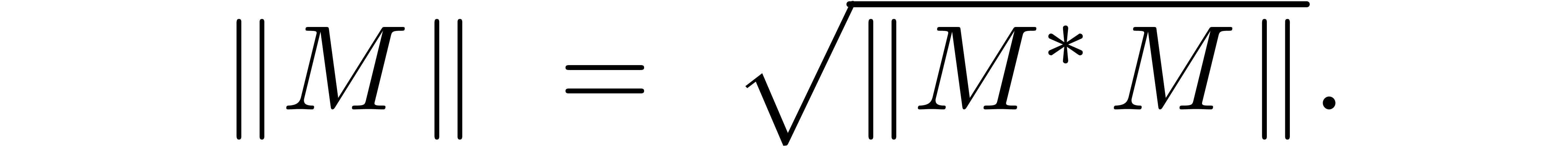

Since we only need the largest singular value, a faster approach is to

reduce the computation of  to the computation of

to the computation of

, using the formula

, using the formula

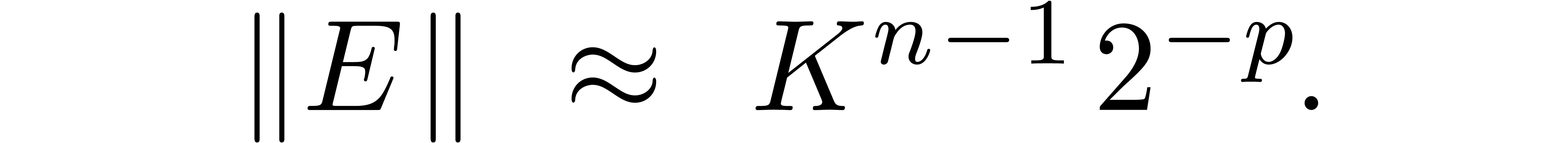

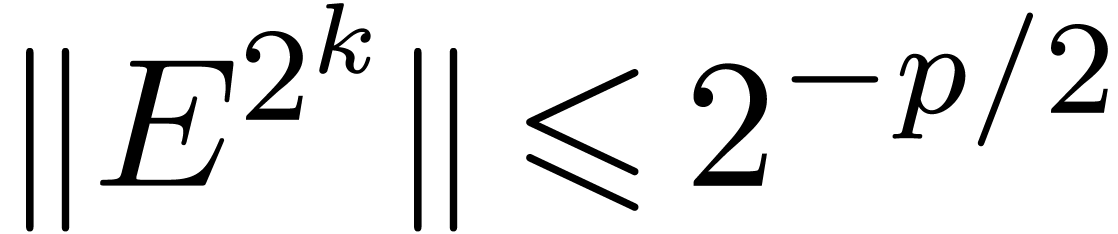

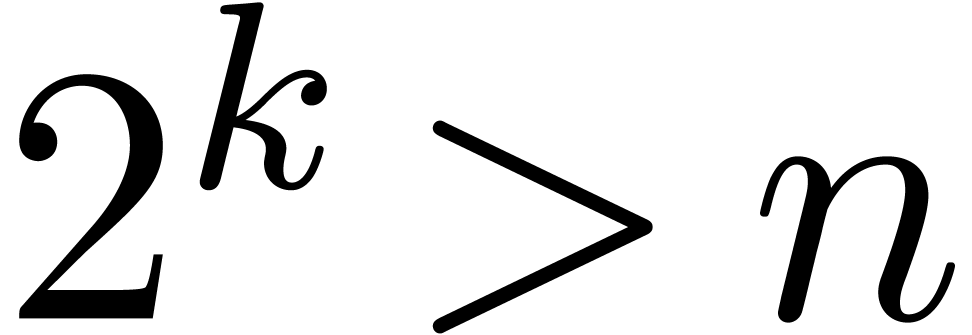

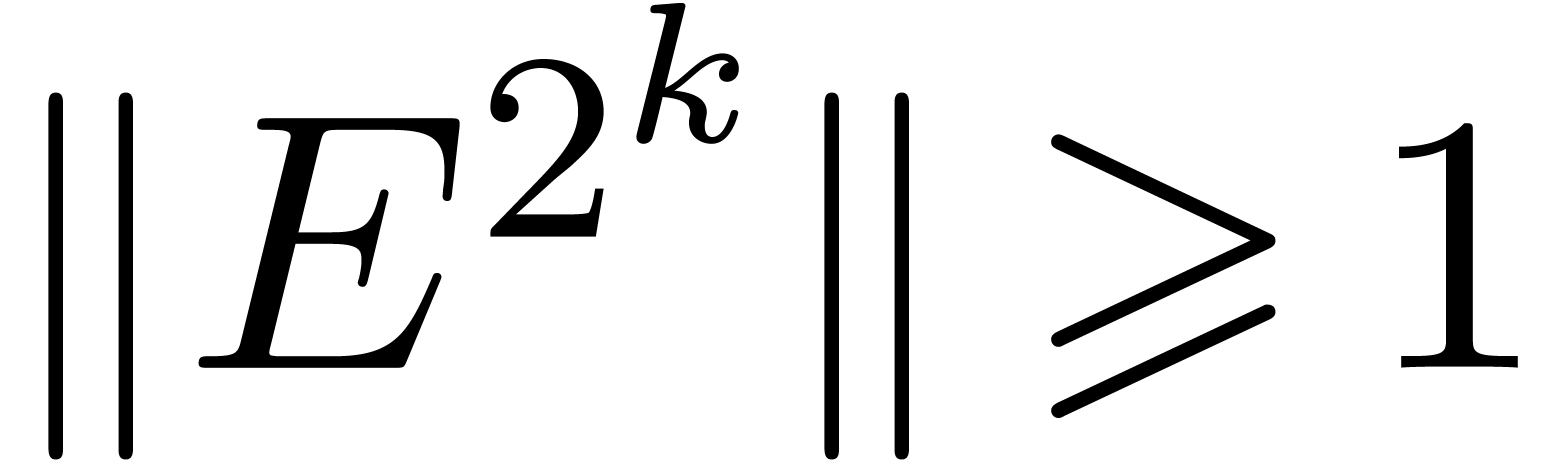

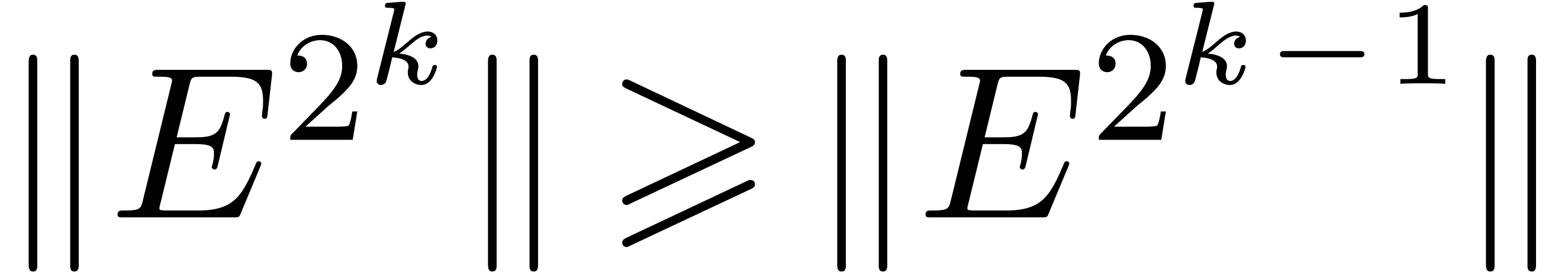

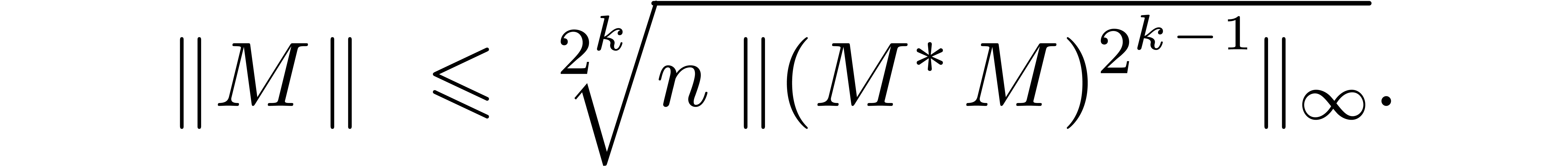

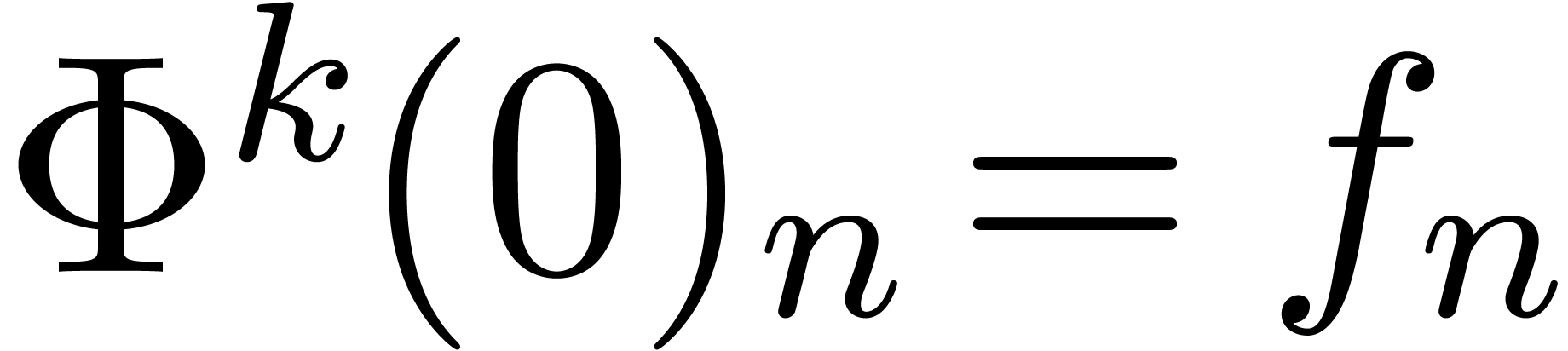

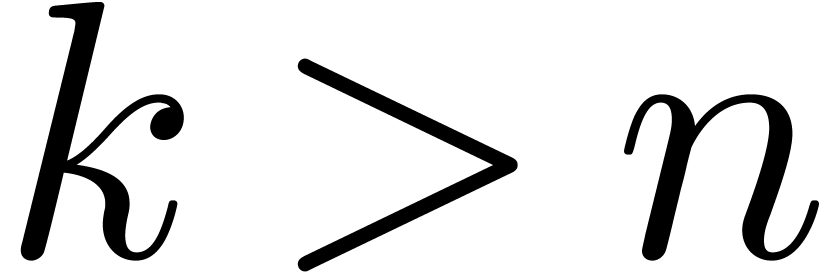

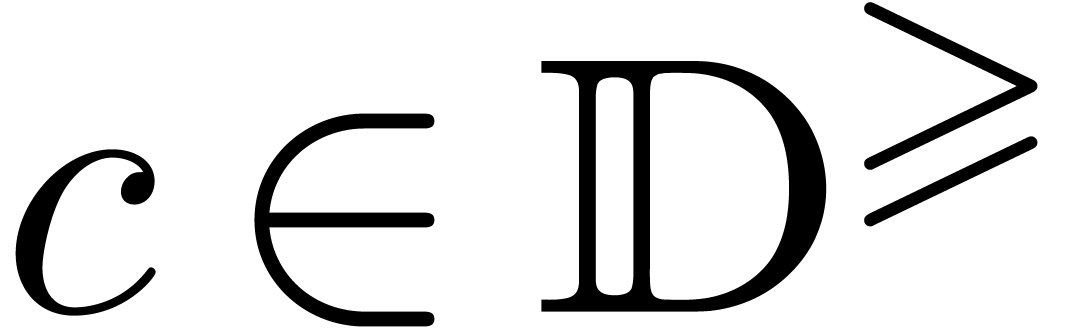

Applying this formula  times and using a naive

bound at the end, we obtain

times and using a naive

bound at the end, we obtain

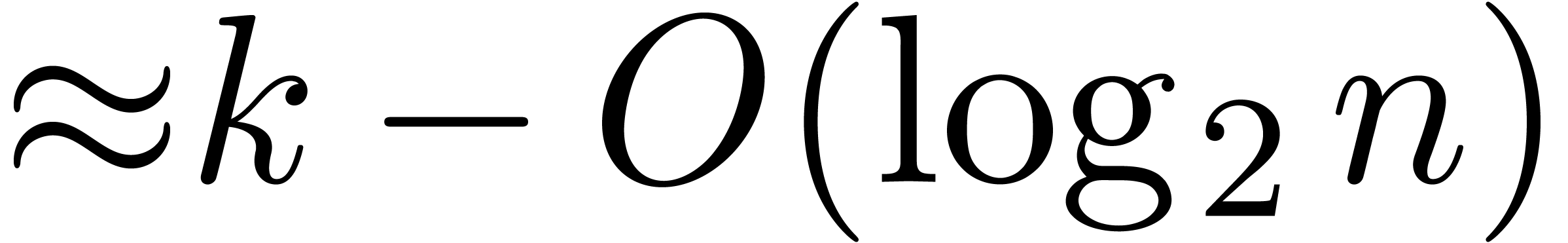

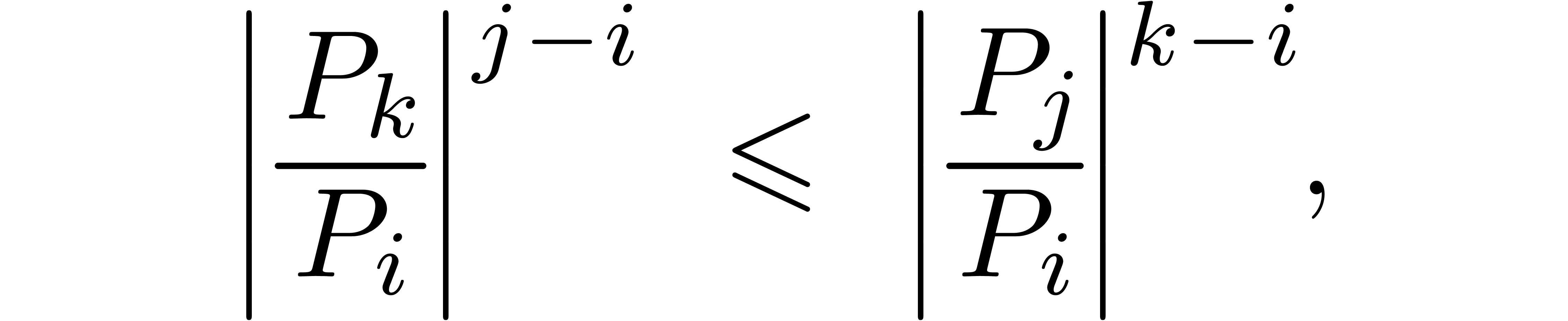

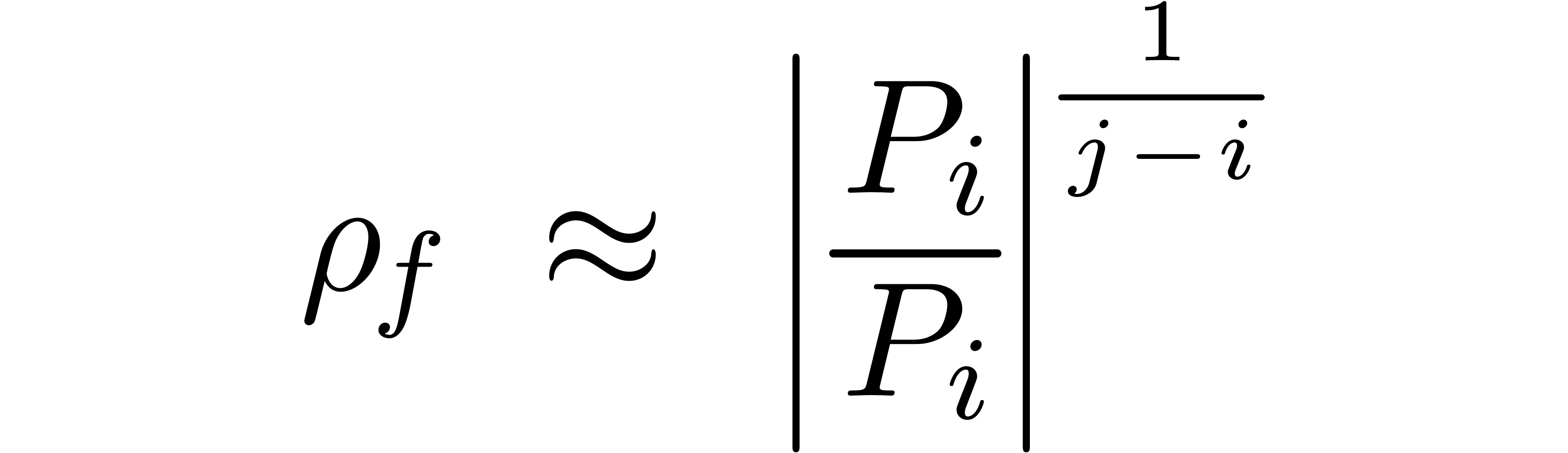

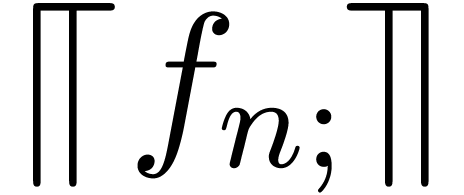

This bound has an accuracy of  bits. Since

bits. Since  is symmetric, the

is symmetric, the  repeated

squarings of

repeated

squarings of  only correspond to about

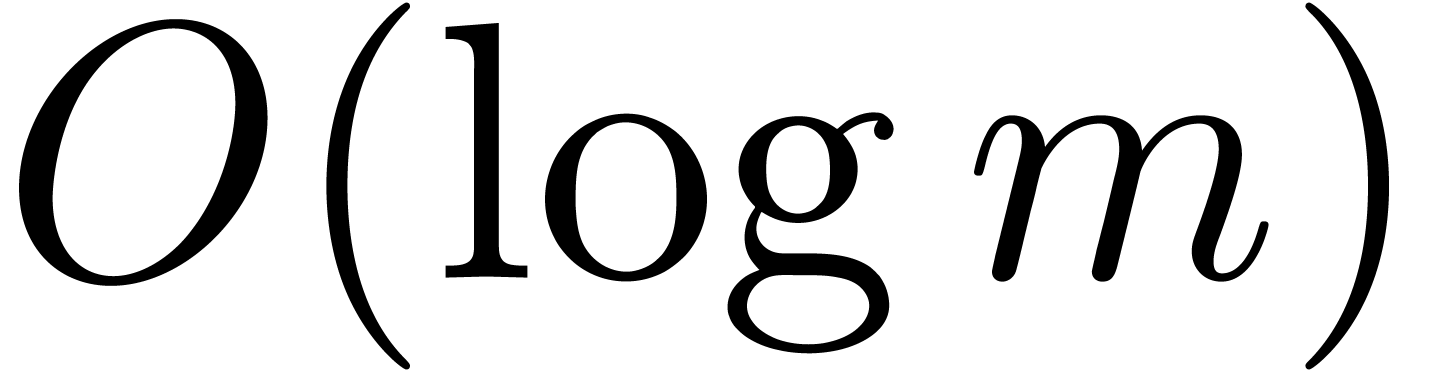

only correspond to about  matrix multiplications. Notice also that it is wise to

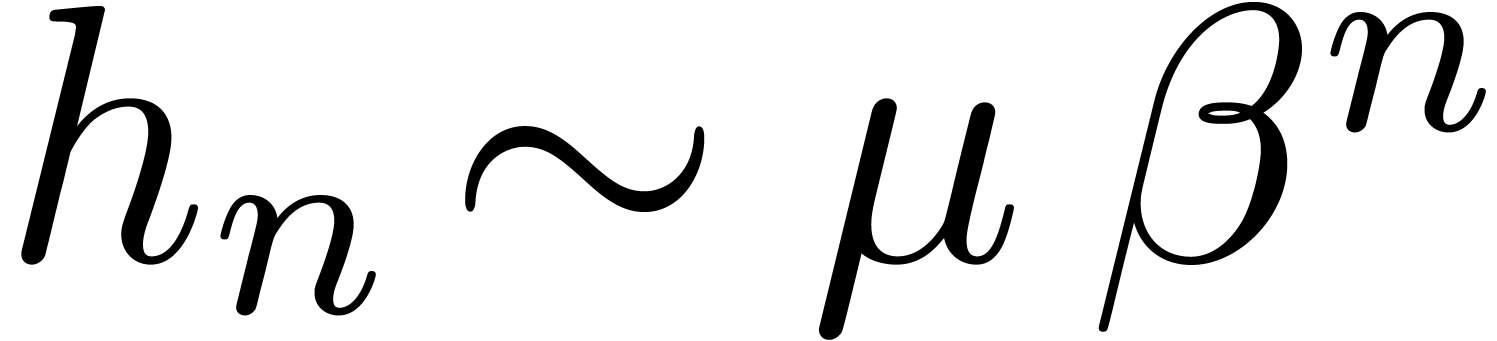

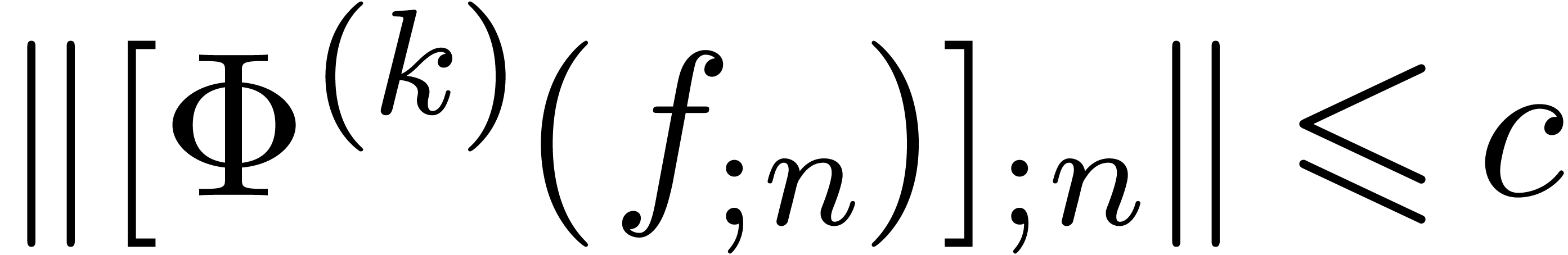

renormalize matrices before squaring them, so as to avoid overflows and