Relaxed resolution of implicit

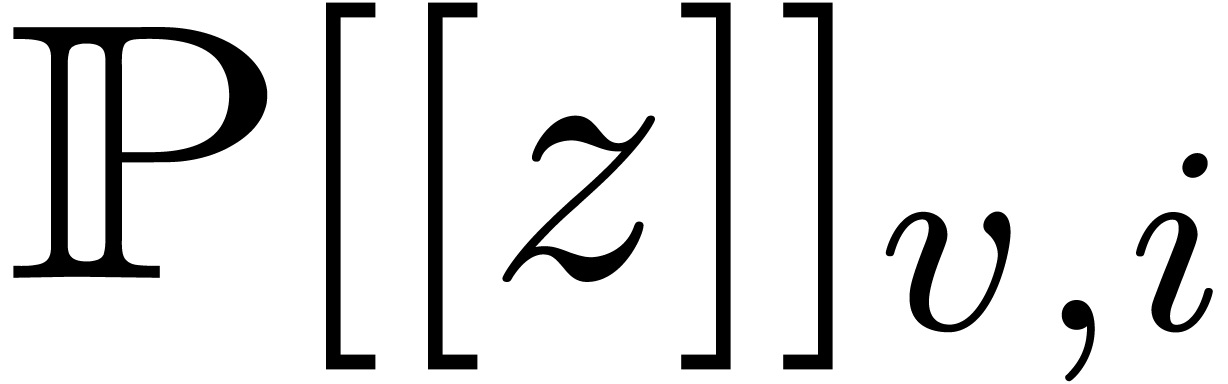

equations |

|

| December 17, 2009 |

|

This work has been supported by the ANR-09-JCJC-0098-01


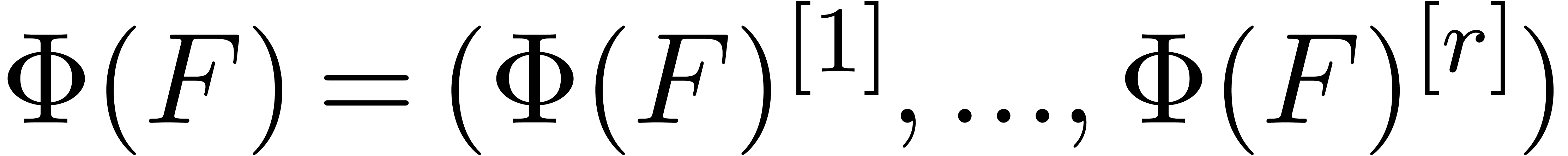

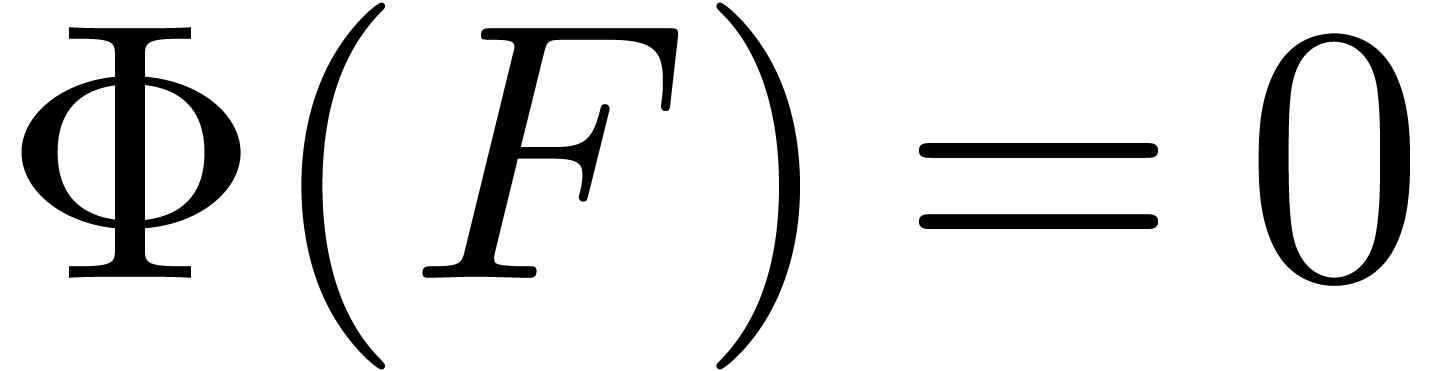

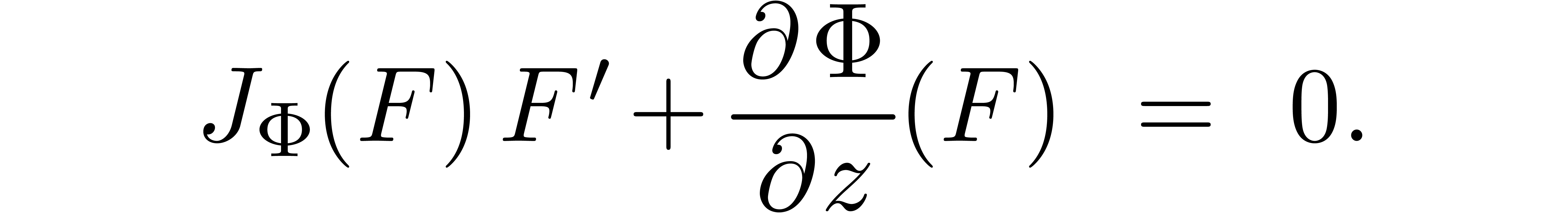

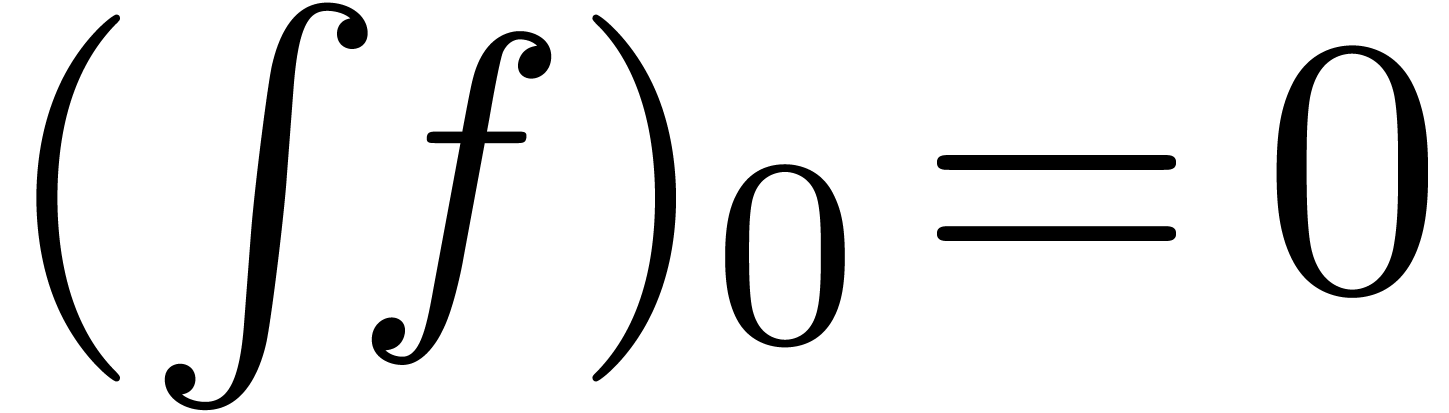

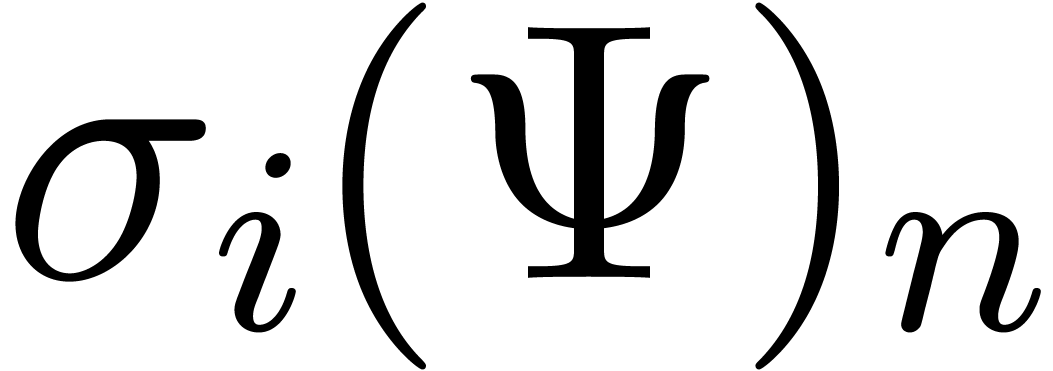

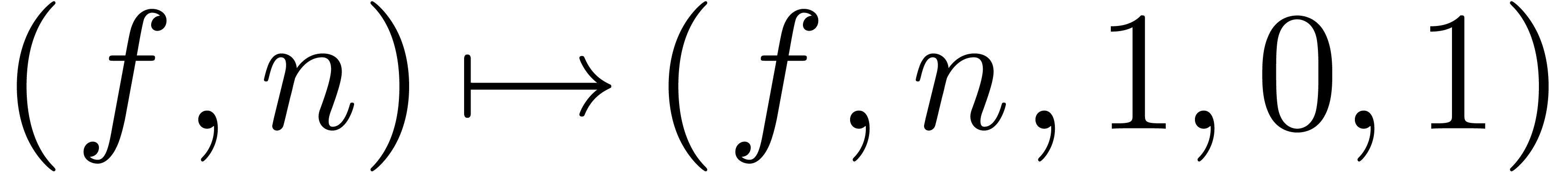

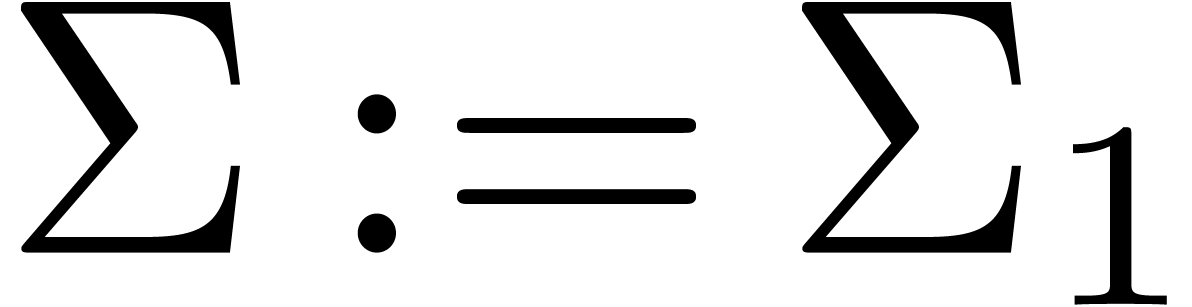

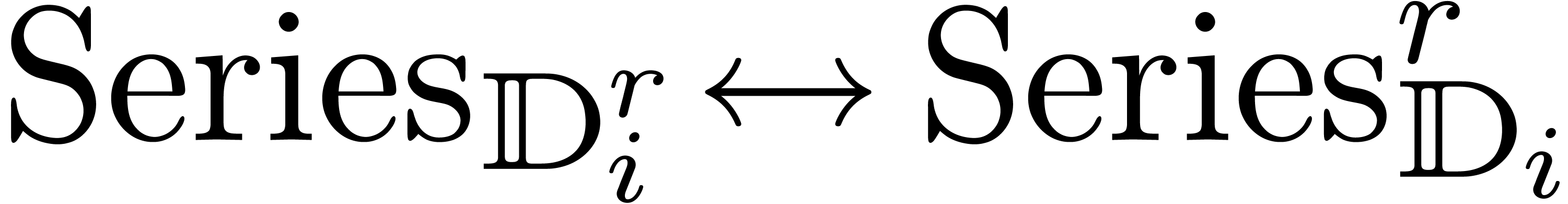

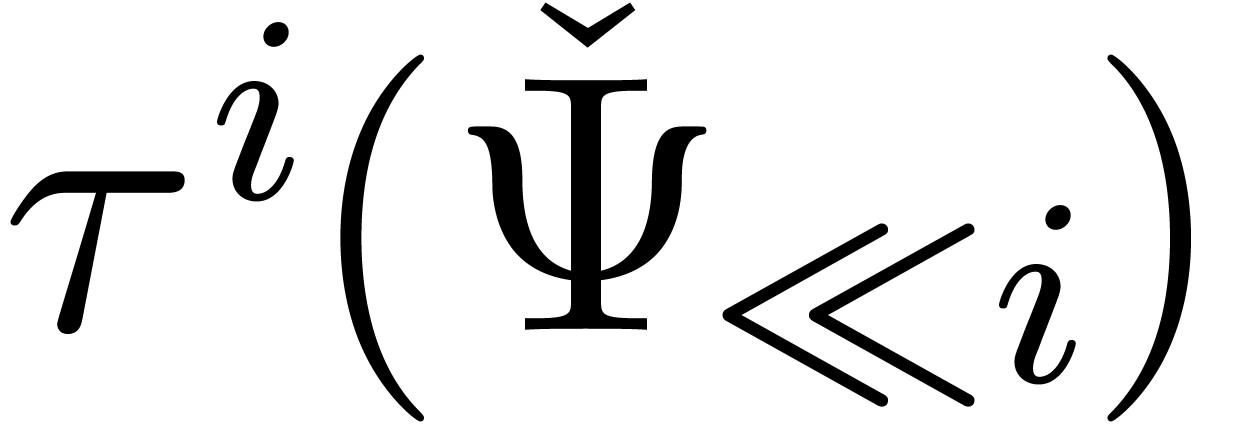

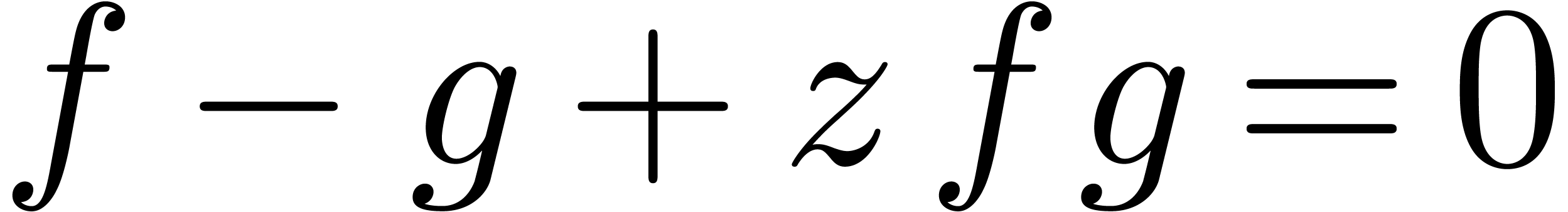

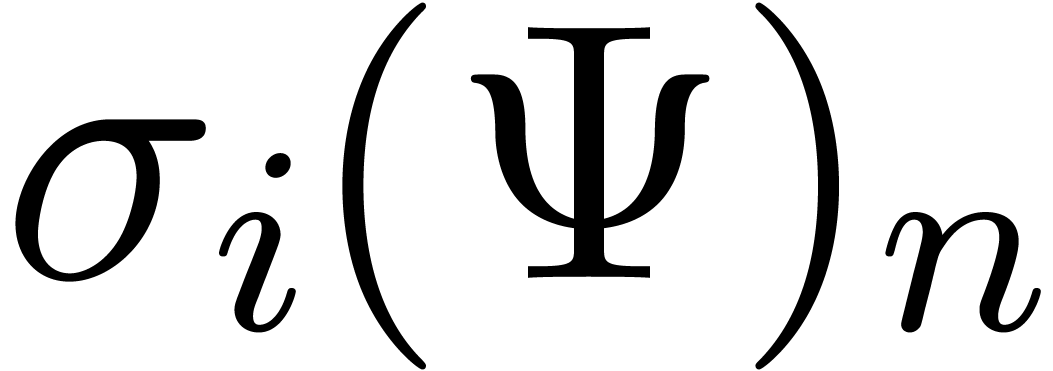

The technique of relaxed power series expansion provides an efficient way to solve equations of the form

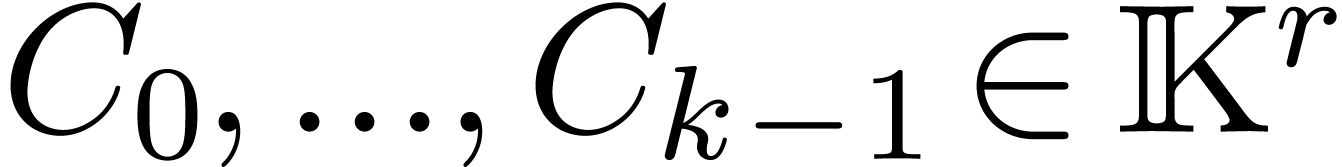

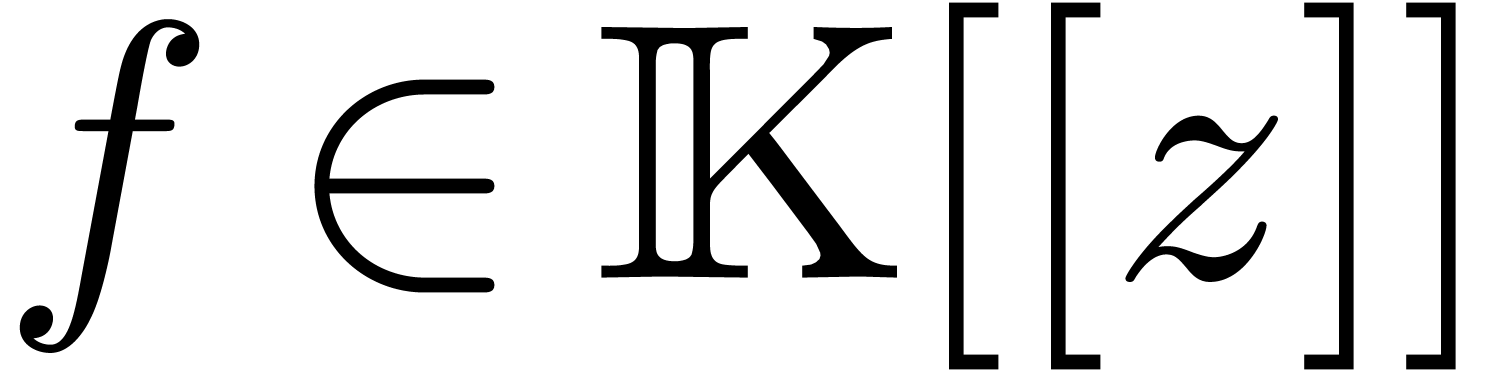

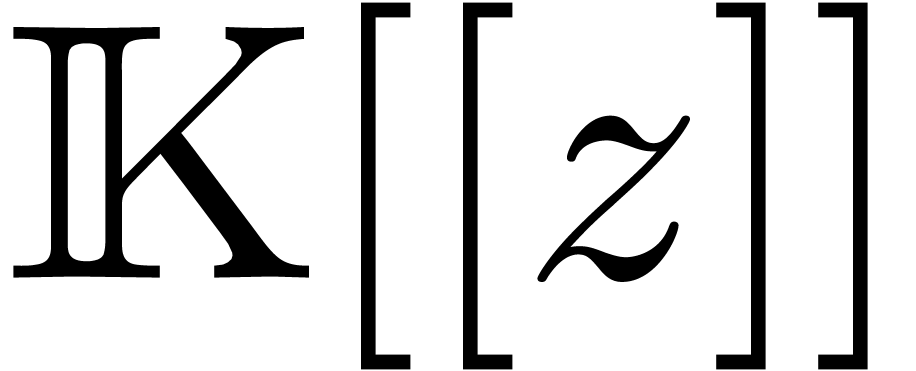

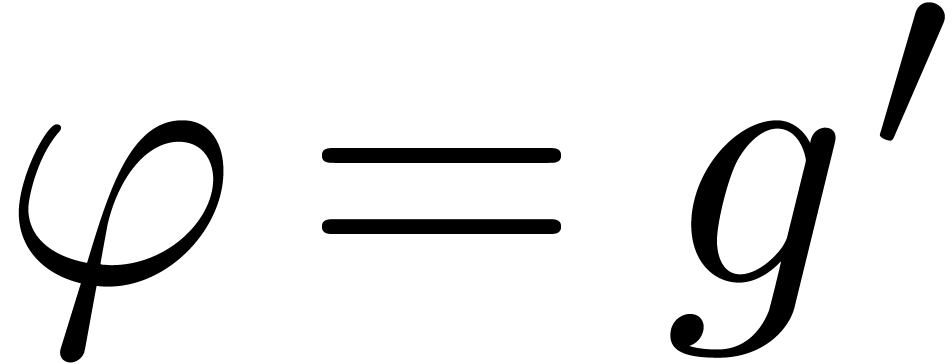

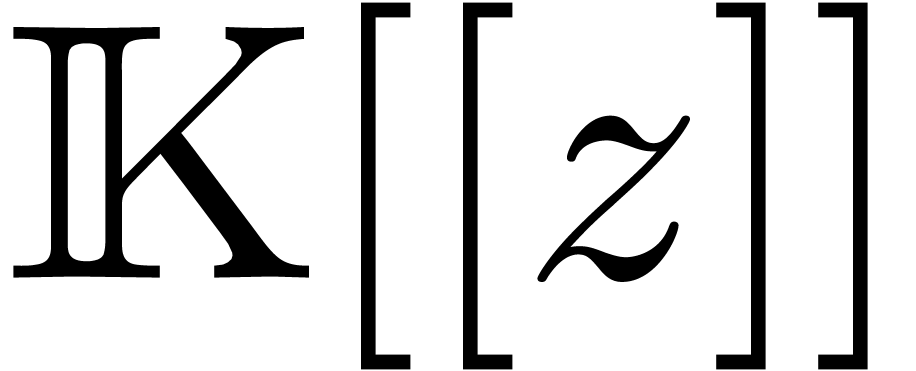

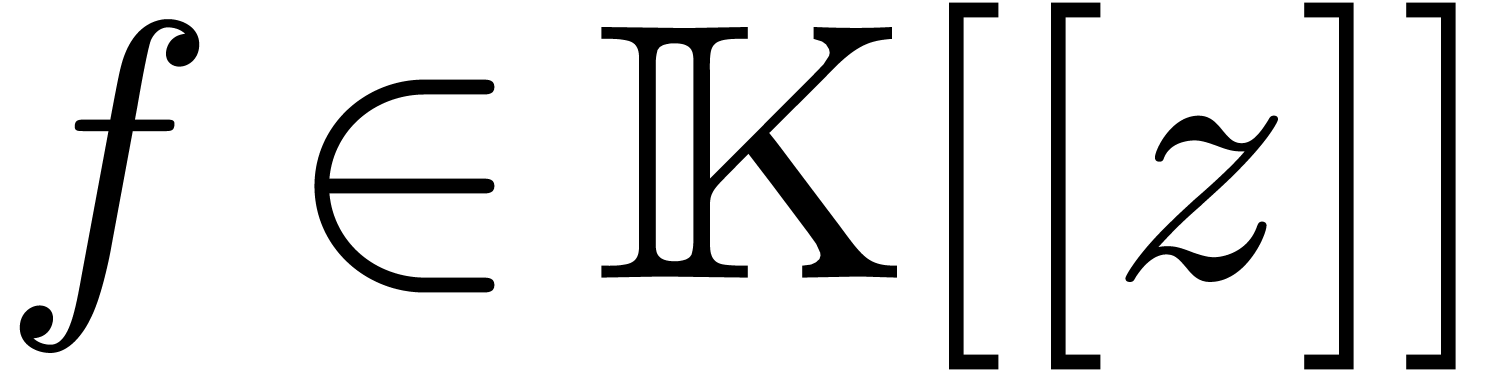

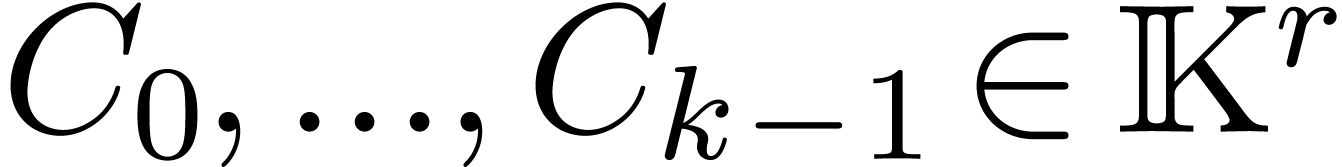

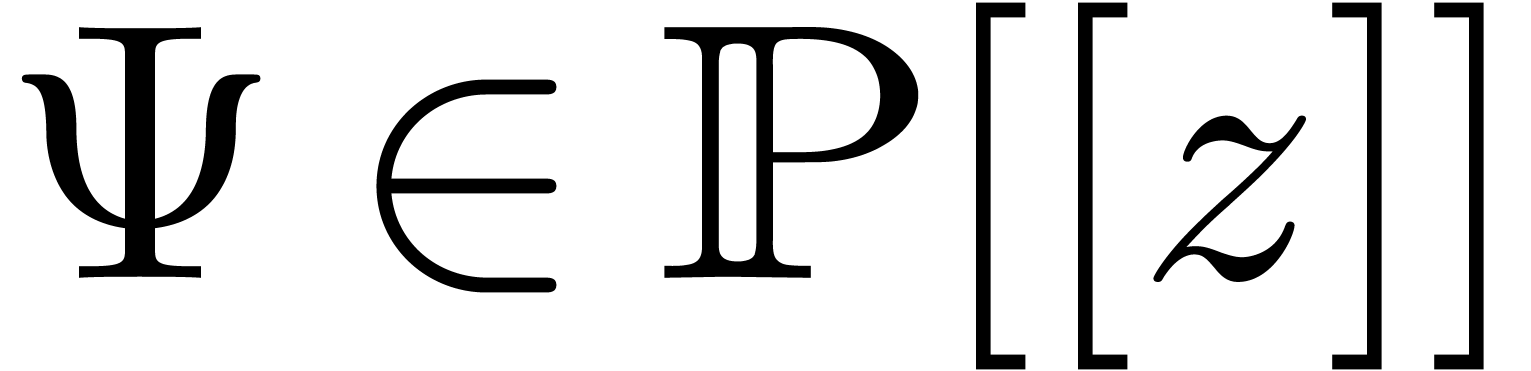

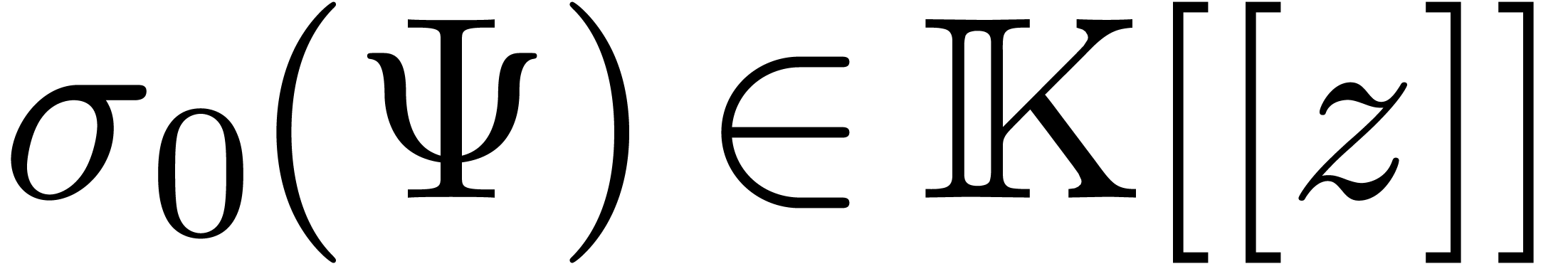

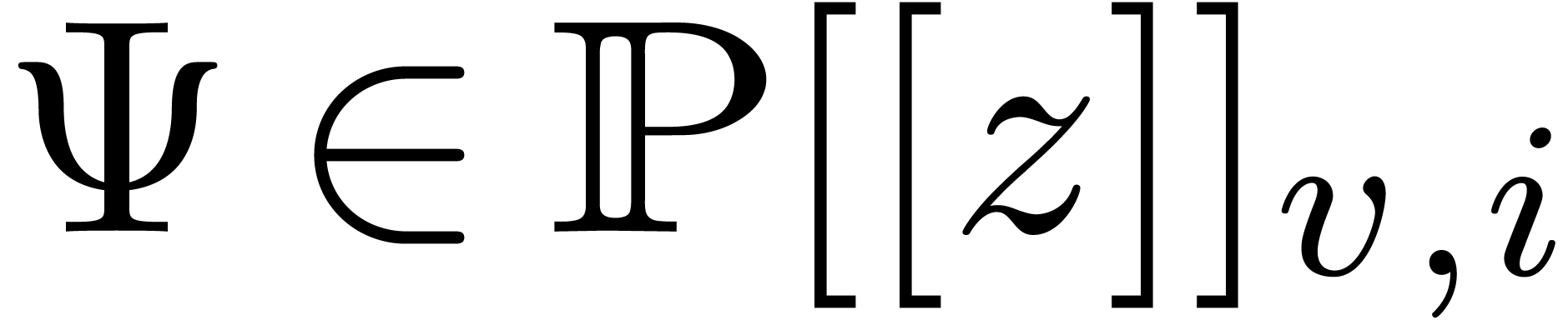

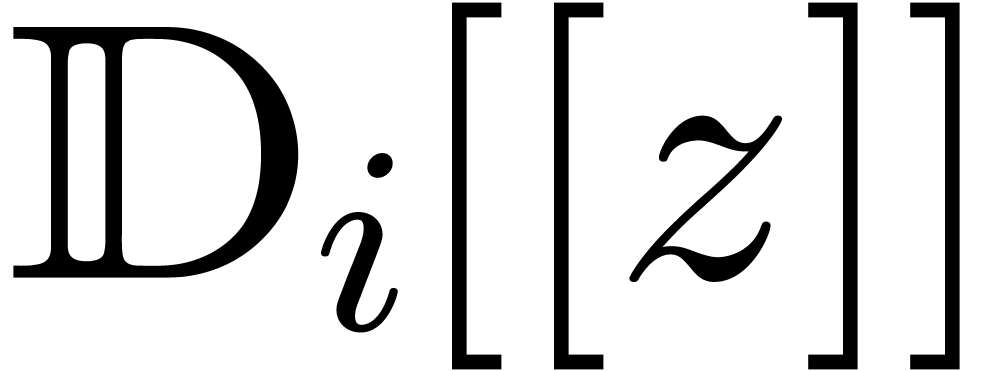

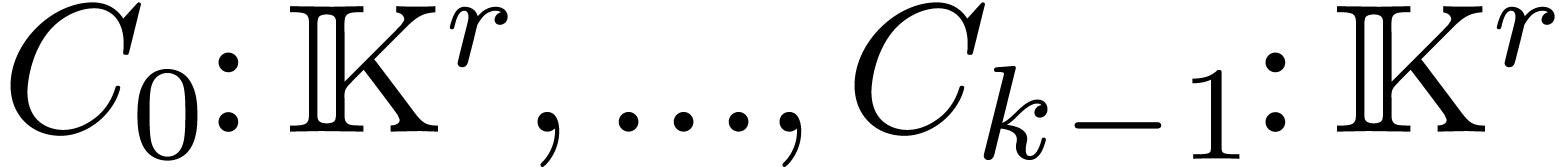

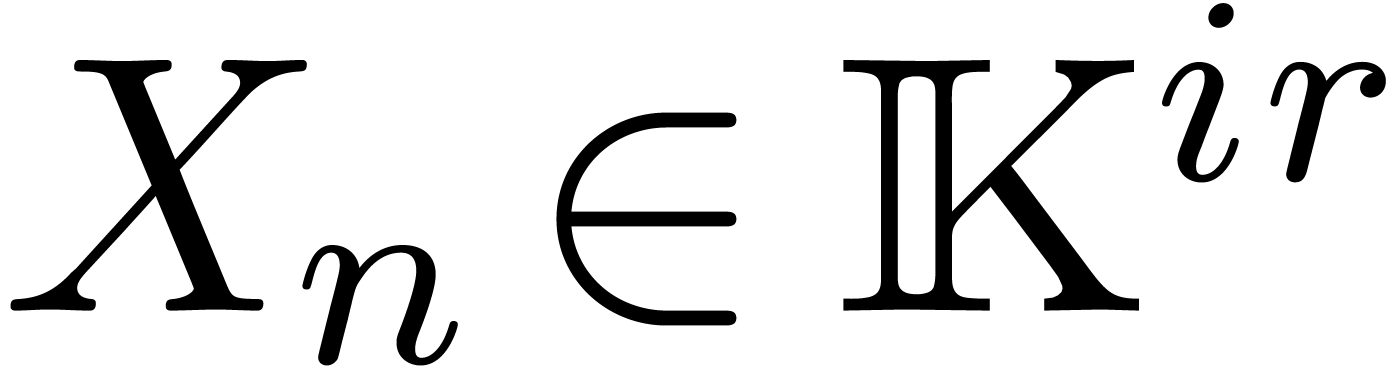

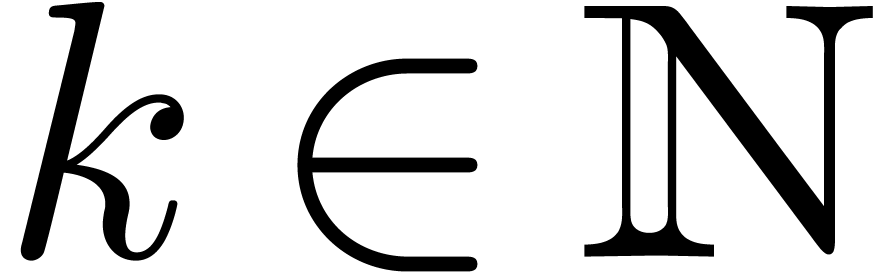

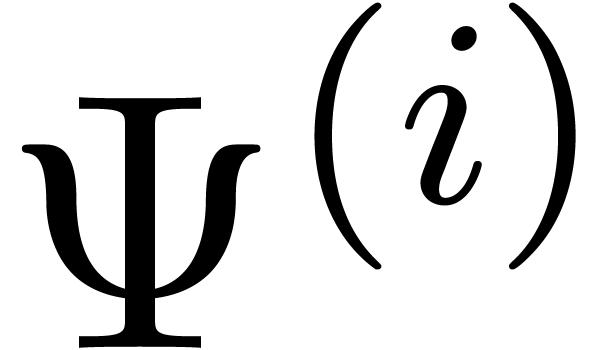

Let  be an effective field of constants of

characteristic zero. Let

be an effective field of constants of

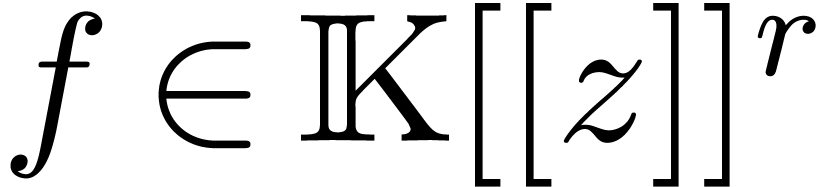

characteristic zero. Let  be a column vector of

be a column vector of

indeterminate series in

indeterminate series in  . We may also consider

. We may also consider  as a

power series

as a

power series  . Let

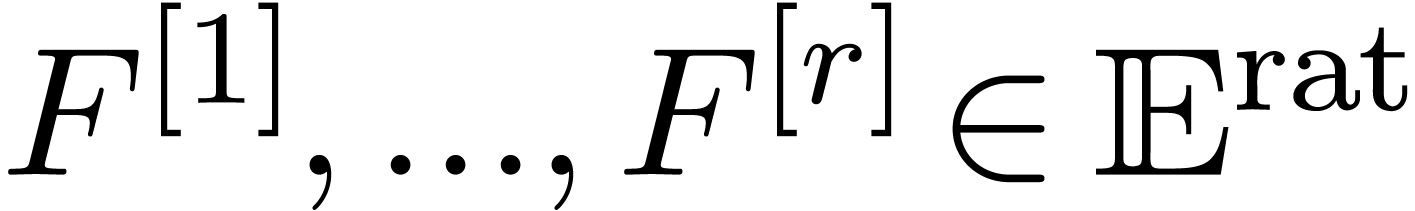

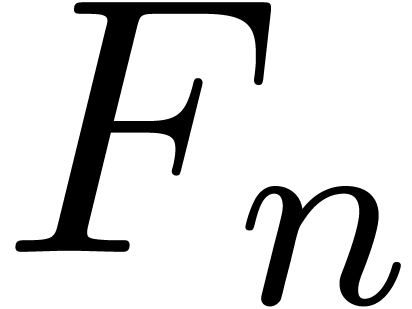

. Let  be a column vector of expressions built up from

be a column vector of expressions built up from  ,

,  and

constants in

and

constants in  using ring operations,

differentiation and integration (with constant term zero). Finally, let

using ring operations,

differentiation and integration (with constant term zero). Finally, let

be a finite number of initial conditions. Assume

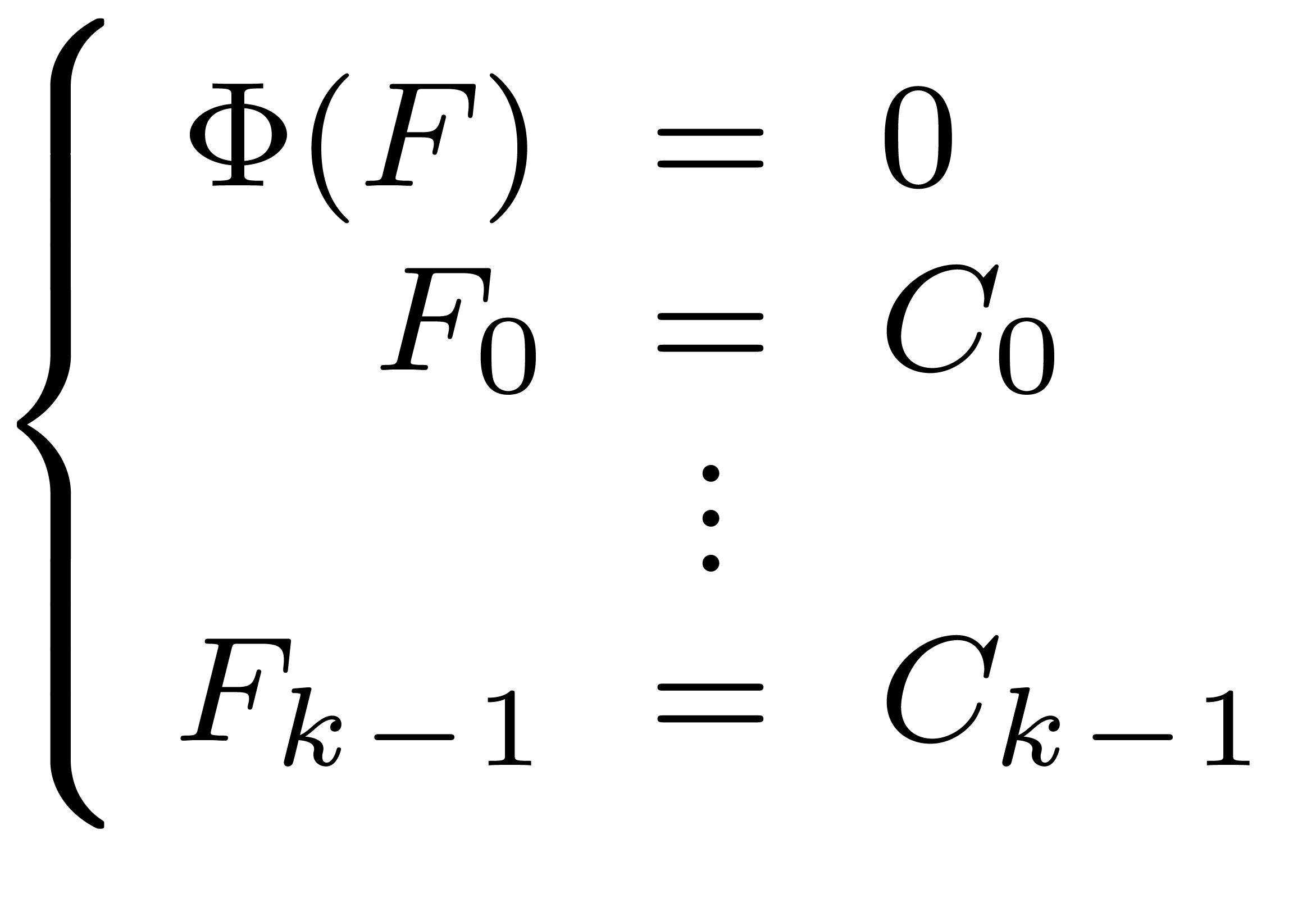

that the system

be a finite number of initial conditions. Assume

that the system

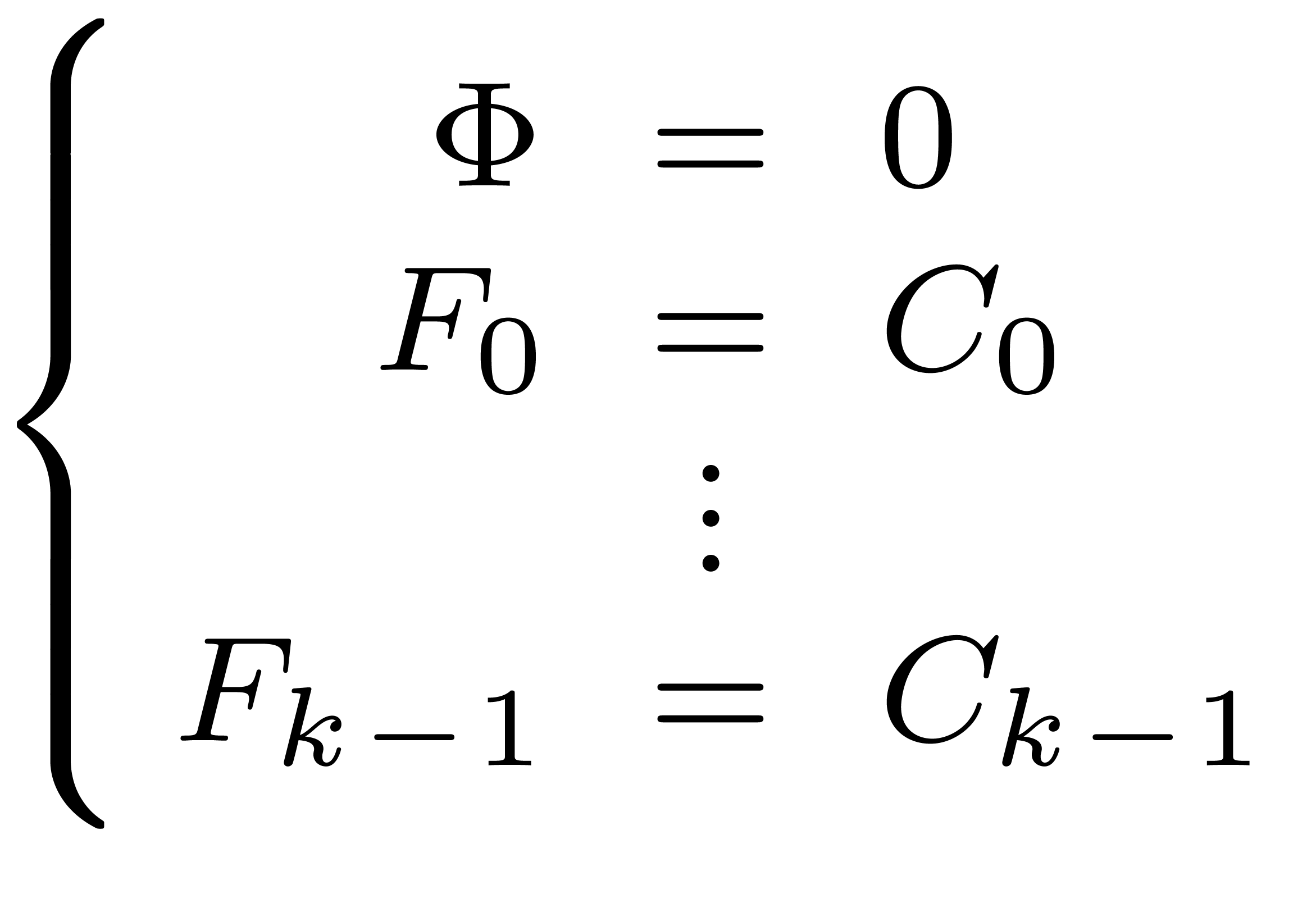

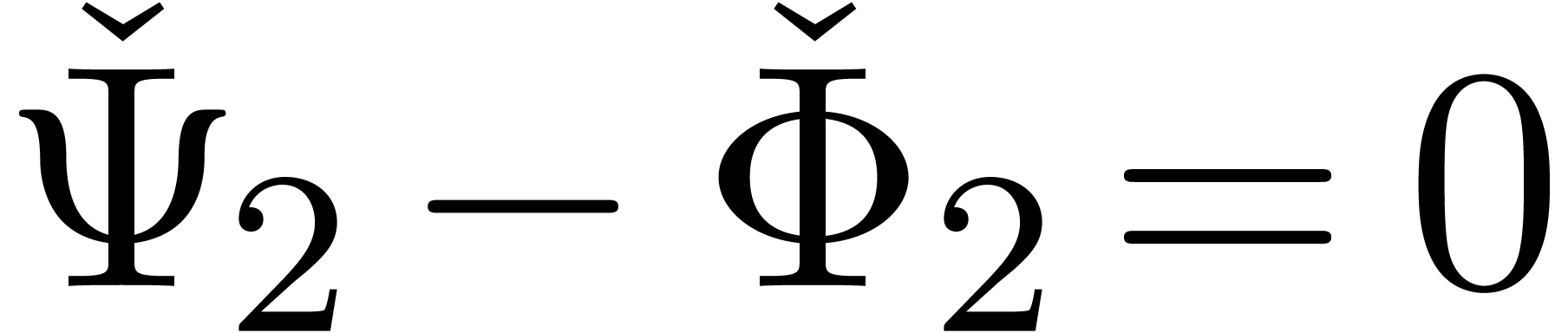

(1)

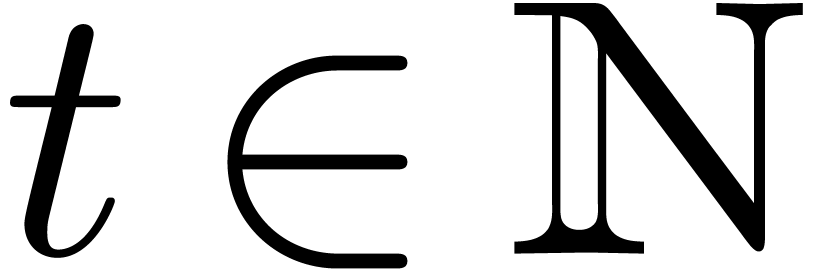

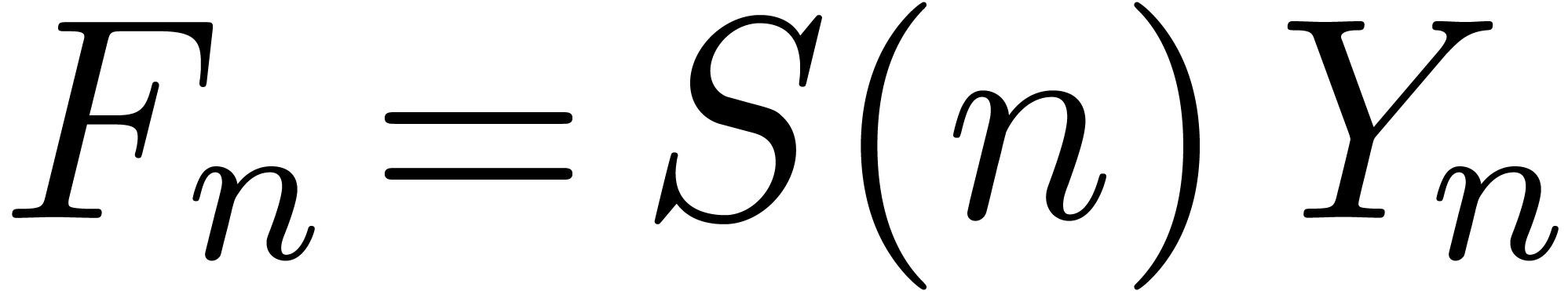

admits a unique solution $f \in \mathbb{K}[[z]]^r$. In this paper, we are interested in the efficient computation of this solution up to a given order $n$.

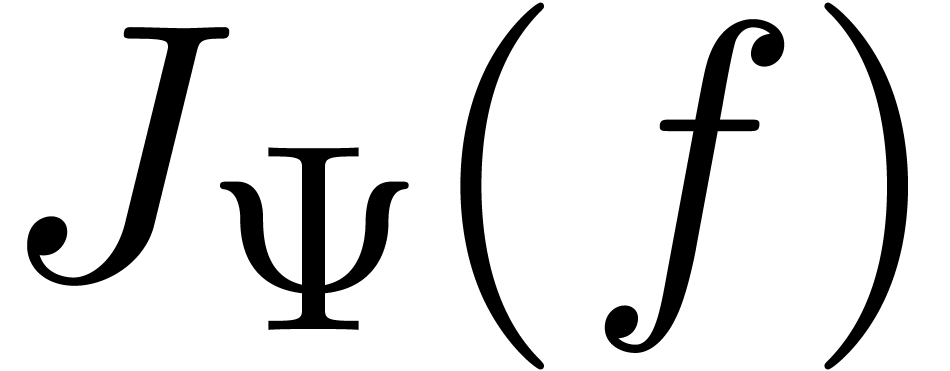

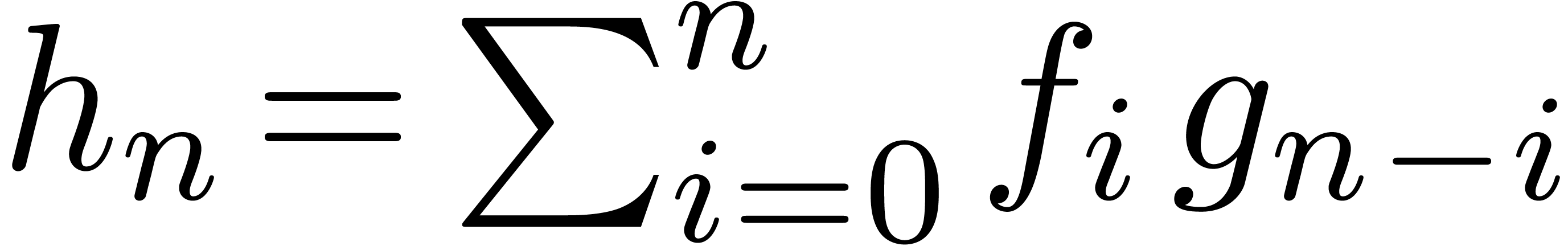

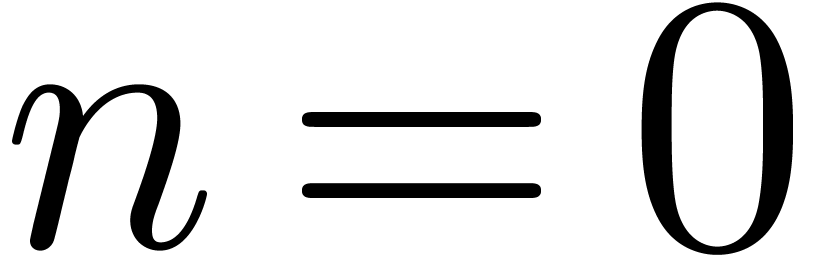

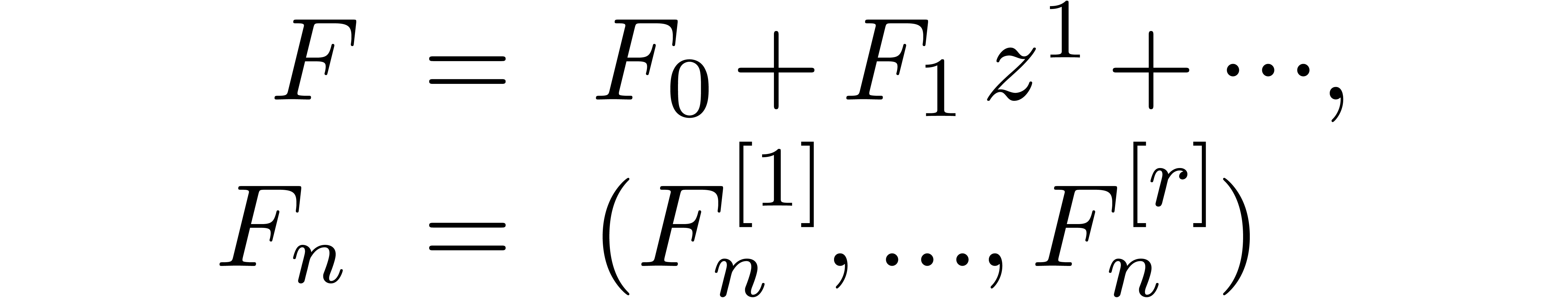

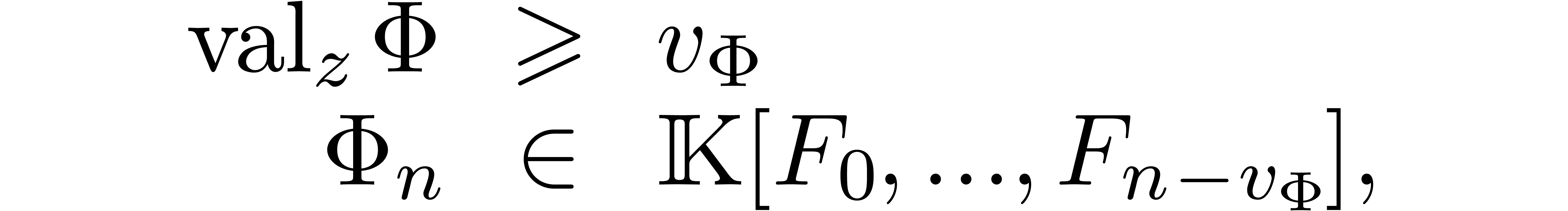

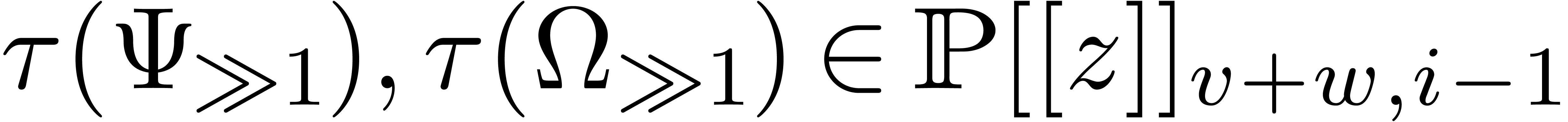

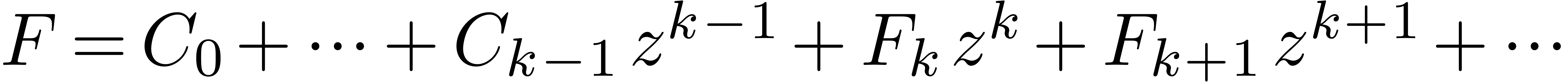

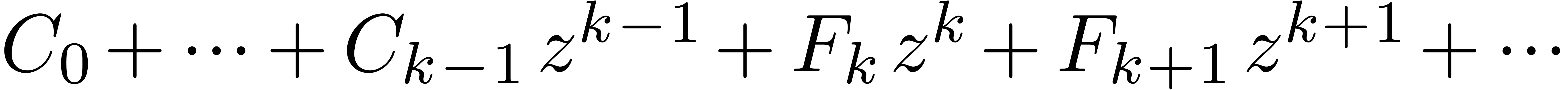

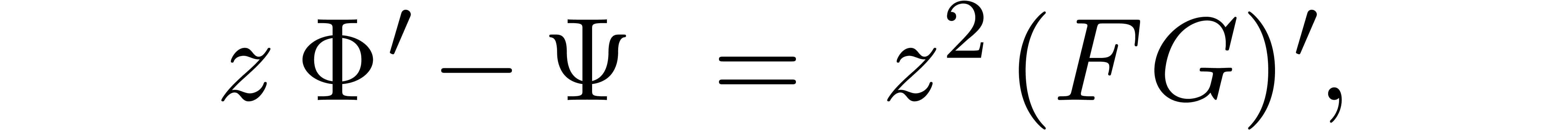

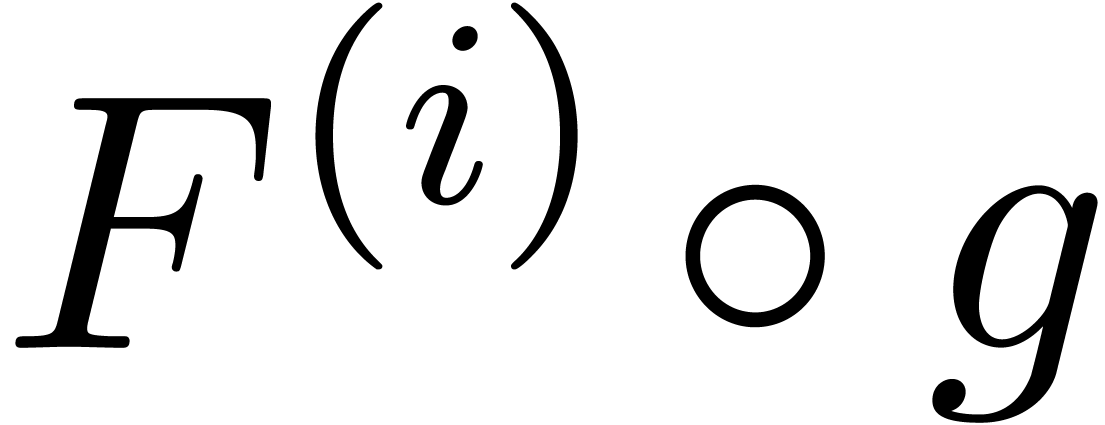

In the most favourable case, the equation  is of

the form

is of

the form

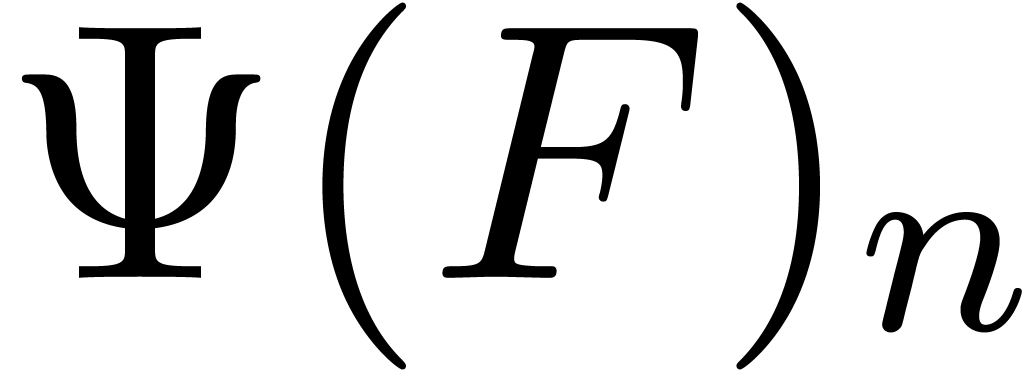

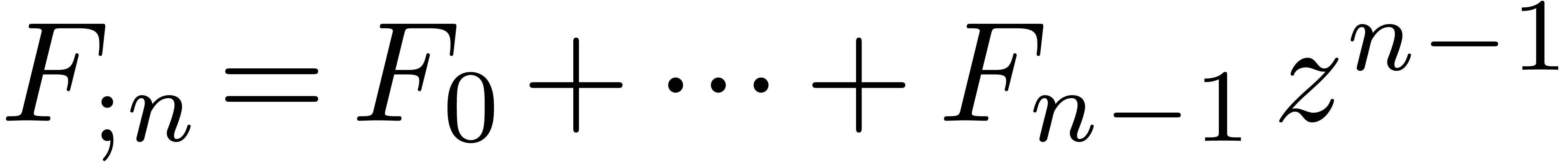

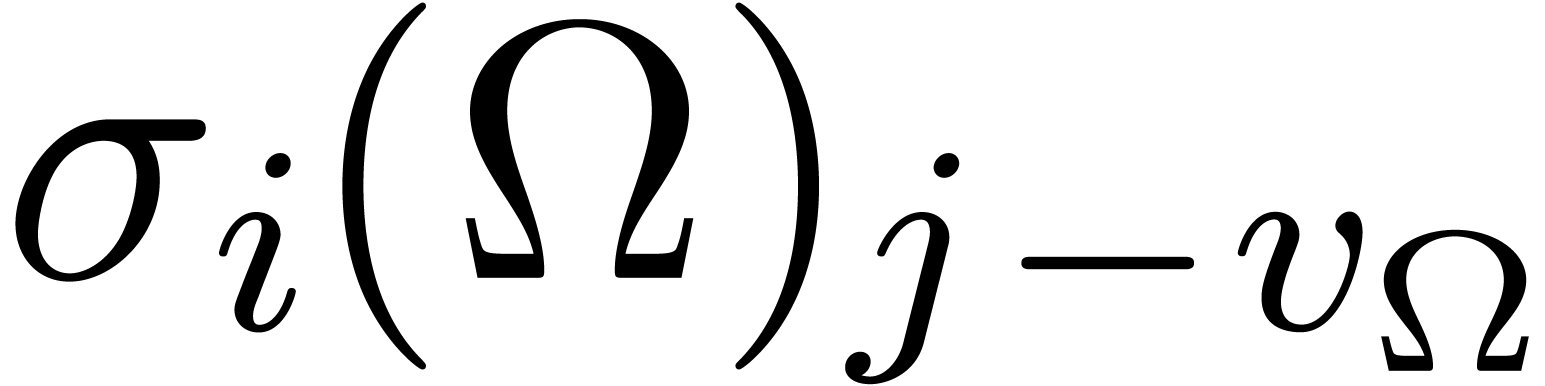

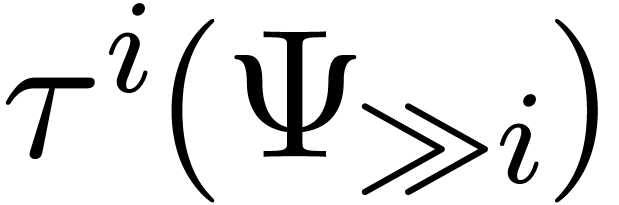

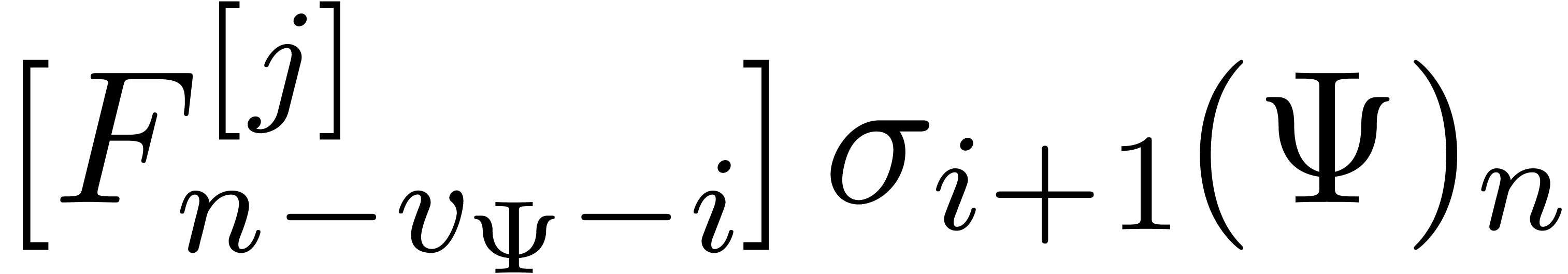

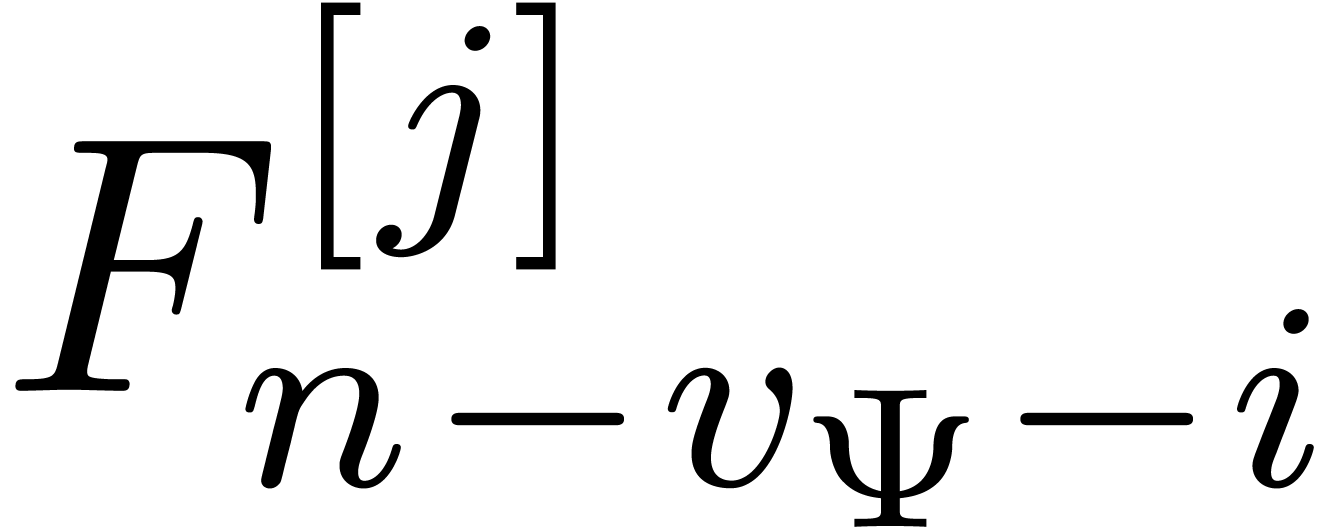

where the coefficient  of

of  in

in  only depends on earlier coefficients

only depends on earlier coefficients  of

of  , for

each

, for

each  . In that case,

. In that case,

actually provides us with a recurrence relation for the computation of

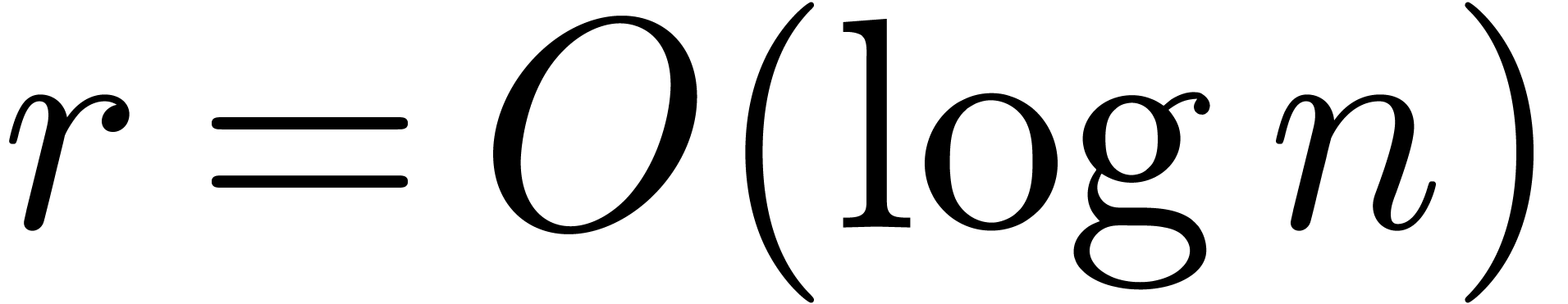

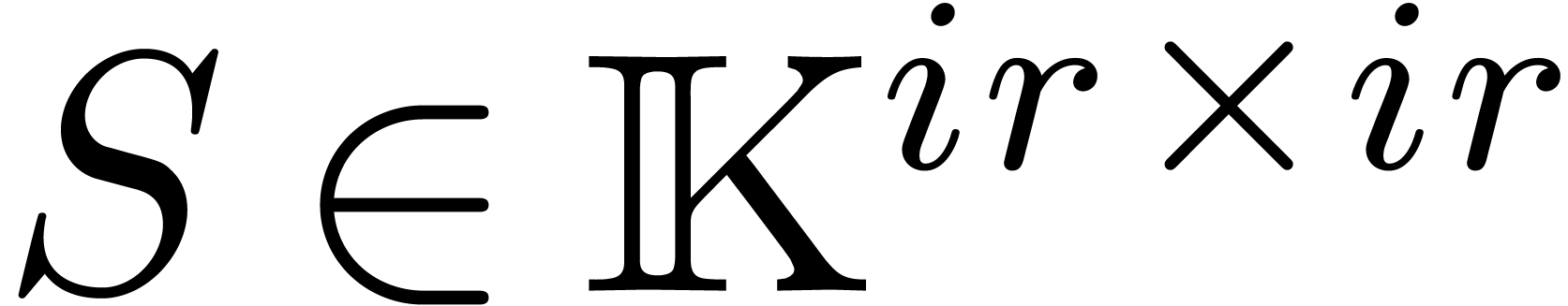

the solution. Using the technique of relaxed power series expansions [vdH02a, vdH07], which will briefly be recalled in

section 2, it is then possible to compute the expansion

up till order

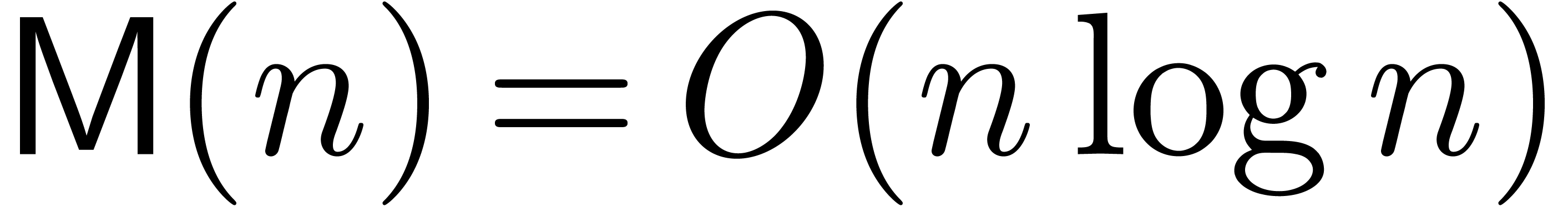

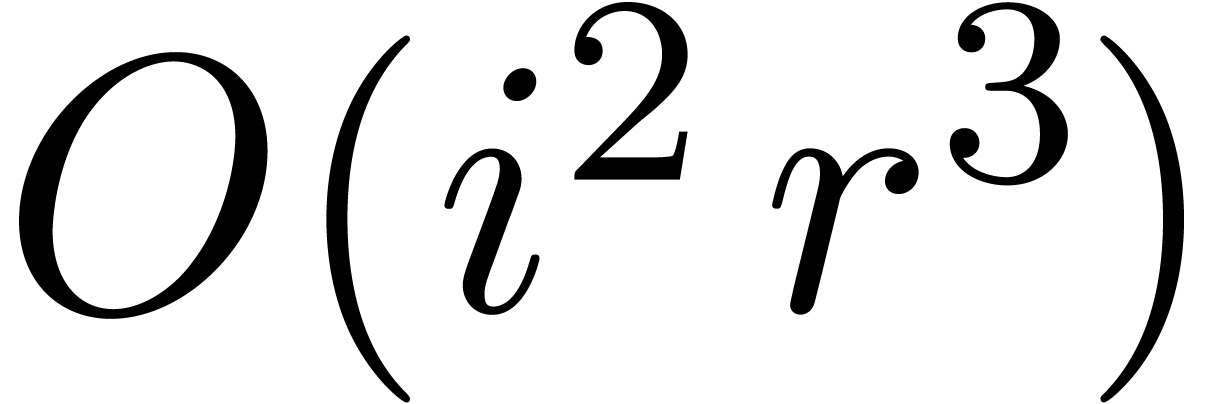

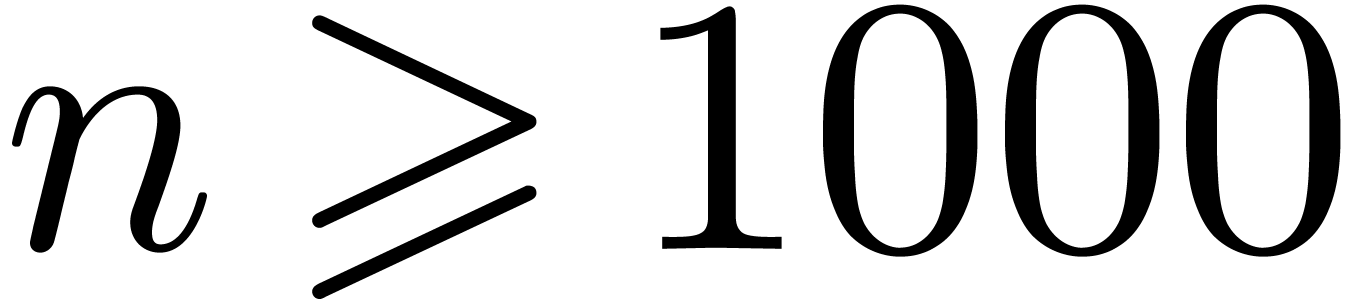

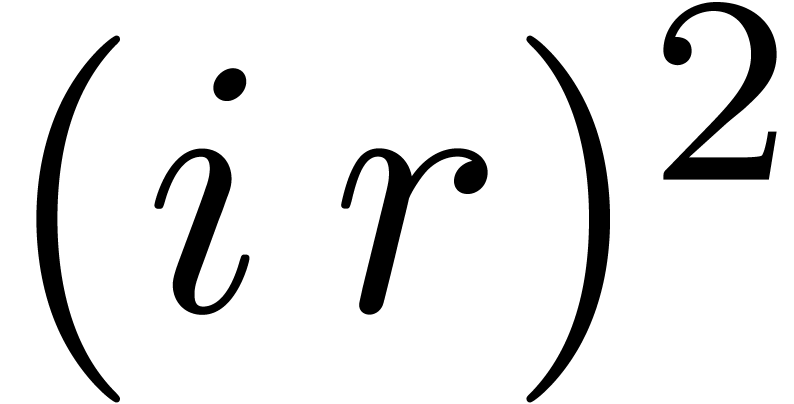

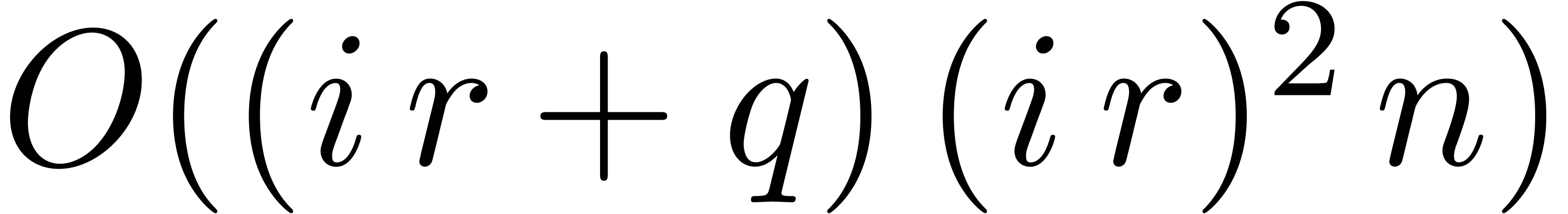

up till order  in time

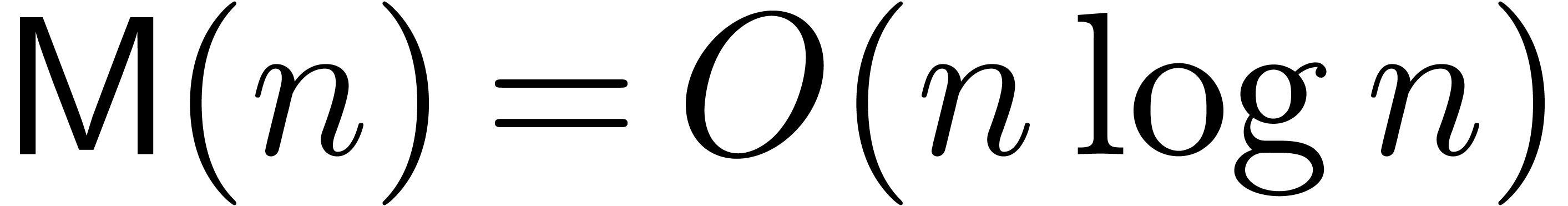

in time

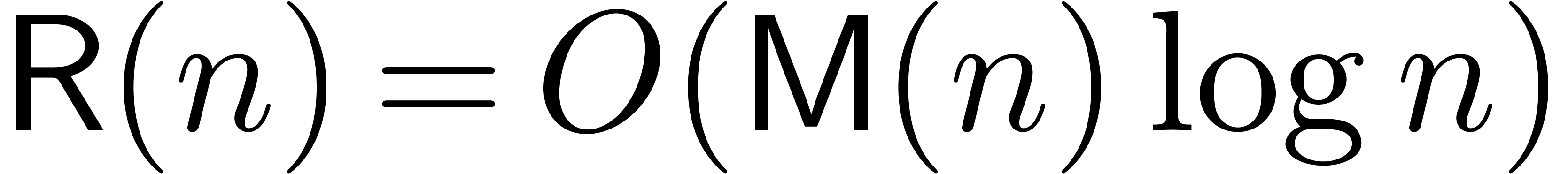

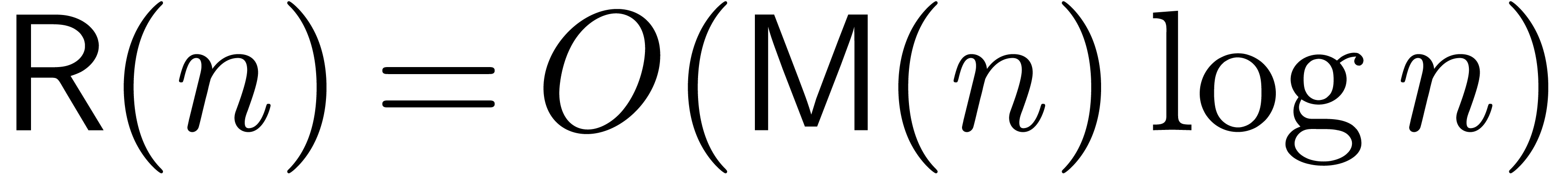

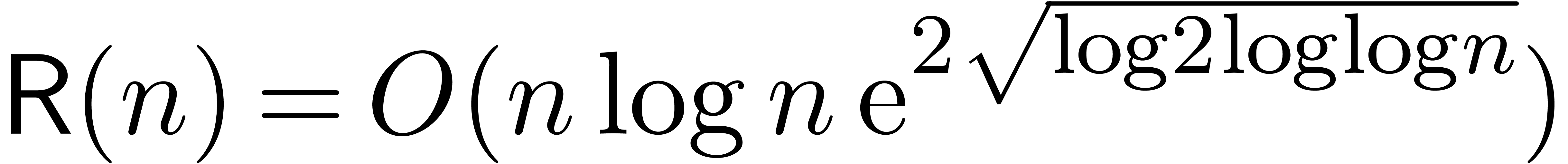

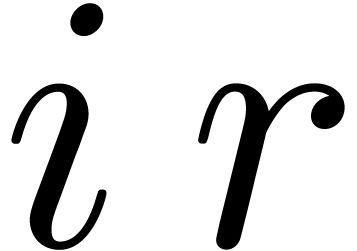

where  is the number of multiplications occurring

in

is the number of multiplications occurring

in  , where is

, where is  the total size of

the total size of  as an

expression, and

as an

expression, and  denotes the complexity of

relaxed multiplication of two power series up till order

denotes the complexity of

relaxed multiplication of two power series up till order  . Here we assume that

. Here we assume that  is represented by a directed acyclic graph, with possible common

subexpressions. For large

is represented by a directed acyclic graph, with possible common

subexpressions. For large  ,

we have

,

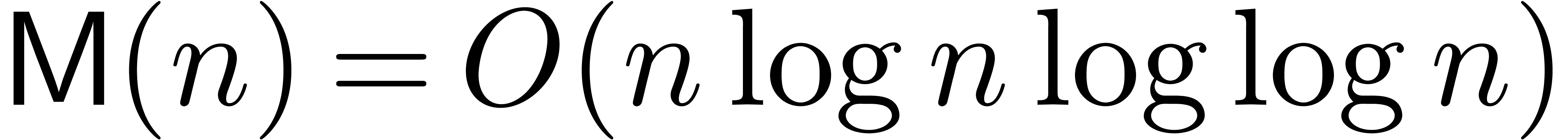

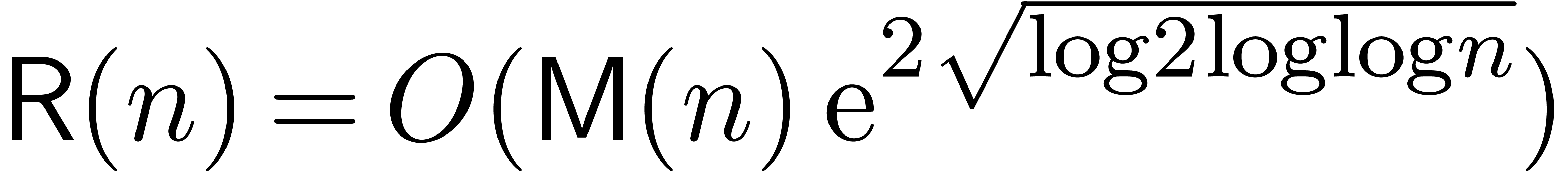

we have  , where

, where  denotes the complexity [CT65, SS71,

CK91] of multiplying two polynomials of degrees

denotes the complexity [CT65, SS71,

CK91] of multiplying two polynomials of degrees  . If

. If  admits

sufficiently many

admits

sufficiently many  -th roots

of unity, then we even have

-th roots

of unity, then we even have  and

and  . For moderate

. For moderate  ,

when polynomial multiplication is done naively or using Karatsuba's

method, relaxed multiplication is as efficient as the truncated

multiplication of polynomials at order

,

when polynomial multiplication is done naively or using Karatsuba's

method, relaxed multiplication is as efficient as the truncated

multiplication of polynomials at order  .

.

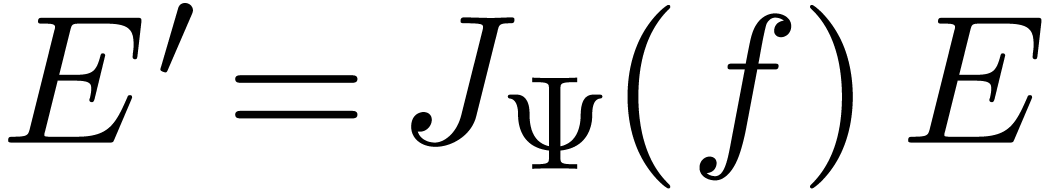

One particularly important example of an equation of the above type is the integration of a dynamical system

where  is algebraic (i.e. does not

involve differentiation or integration). In that case, given the

solution

is algebraic (i.e. does not

involve differentiation or integration). In that case, given the

solution  up till order

up till order  , we may consider the linearized system

, we may consider the linearized system

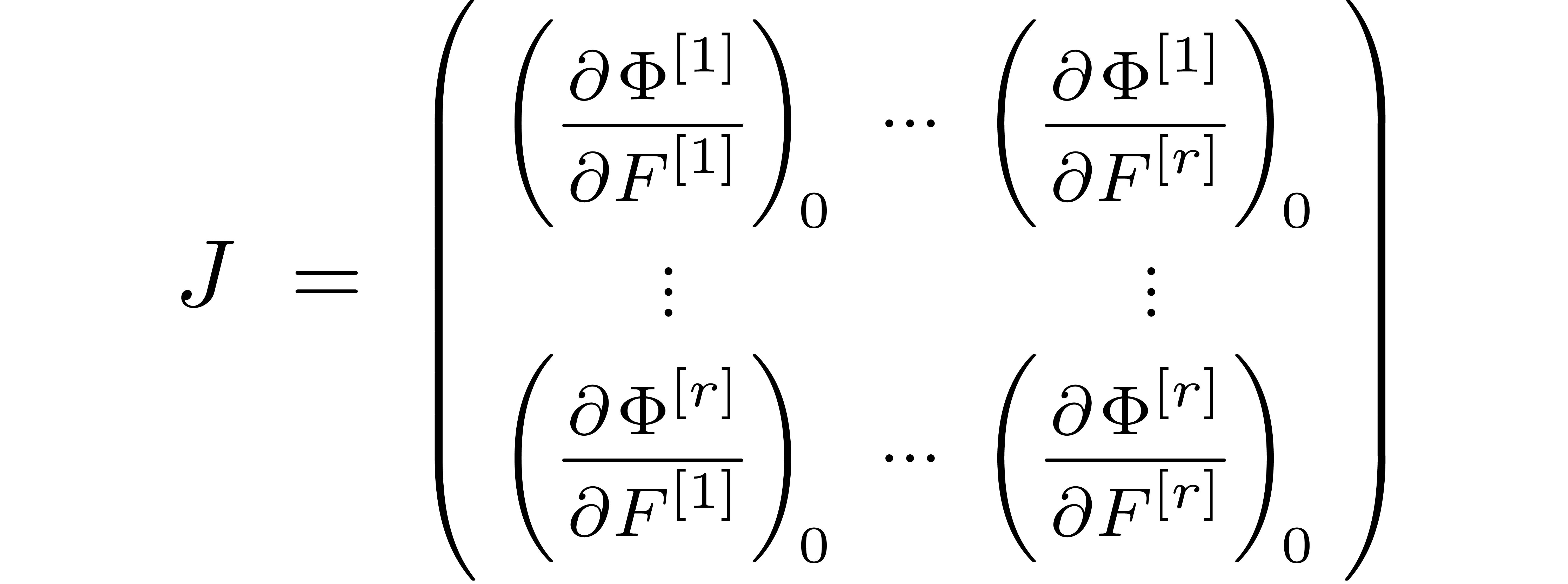

up till order  , where

, where  stands for the Jacobian matrix associated to

stands for the Jacobian matrix associated to  at

at  . If we

also have a fundamental system of solutions of

. If we

also have a fundamental system of solutions of  up till order

up till order  , then one step

of Newton's method allows us to find the solution of (4)

and a new fundamental system of solutions of the linearized equation up

till order

, then one step

of Newton's method allows us to find the solution of (4)

and a new fundamental system of solutions of the linearized equation up

till order  [BK78, BCO+06].

A careful analysis shows that this leads to an algorithm of time

complexity

[BK78, BCO+06].

A careful analysis shows that this leads to an algorithm of time

complexity

In [vdH06], this bound has been further improved to

under the assumptions that  admits sufficiently

many

admits sufficiently

many  -th roots of unity and

that

-th roots of unity and

that  .

.

Although the complexity (5) is asymptotically better than (3) for very large $n$, the relaxed approach often turns out to be more efficient in practice. Indeed, Newton's method suffers both from a larger constant factor and from the fact that it profits less from the potential sparsity of the system. Moreover, as long as multiplications are done in the naive or Karatsuba model, the relaxed approach is optimal in the sense that the computation of the solution takes roughly the same time as its verification. Another advantage of the relaxed approach is that it generalizes to more general functional equations and partial differential equations.


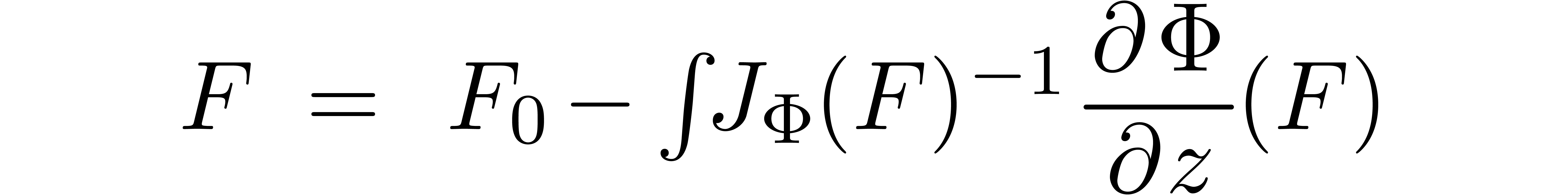

Let us now return to our original implicit system (1). A

first approach for its resolution is to keep differentiating the system

with respect to  until it becomes equivalent to a

system of the form (2). For instance, if

until it becomes equivalent to a

system of the form (2). For instance, if  is algebraic, then differentiation of (1) yields

is algebraic, then differentiation of (1) yields

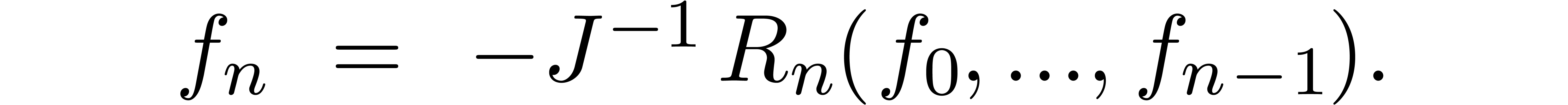

Consequently, if  is invertible, then

is invertible, then

provides us with an equivalent system which can be solved by one of the previous methods. Unfortunately, this method requires the computation of the Jacobian, so we do not longer exploit the potential sparsity of the original system.
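As a small illustration of this first approach (a toy example of our own, not taken from the text), consider the scalar algebraic equation $F^2 - (1 + z) = 0$ with initial condition $f(0) = 1$. Differentiating gives $2 f f' - 1 = 0$, hence $f = 1 + \int \frac{1}{2f}$, which is of the form (2). The Python sketch below evaluates this explicit system in a relaxed way with naive products and exact rationals; the function name `sqrt_one_plus_z` is hypothetical.

```python
from fractions import Fraction


def sqrt_one_plus_z(order):
    """First `order` coefficients (order >= 1) of the solution f of
    f^2 - (1 + z) = 0 with f(0) = 1, computed from f = 1 + int(1 / (2 f))."""
    f = [Fraction(1)]  # initial condition f(0) = 1
    u = []             # coefficients of u = 1/f, produced one by one
    for n in range(1, order):
        m = n - 1
        # Relaxed inversion: f u = 1 gives u_0 = 1/f_0 and, for m >= 1,
        # u_m = -(f_1 u_{m-1} + ... + f_m u_0) / f_0, which only reads f_0..f_m.
        if m == 0:
            u.append(1 / f[0])
        else:
            u.append(-sum(f[i] * u[m - i] for i in range(1, m + 1)) / f[0])
        # f' = u / 2, so n f_n = u_{n-1} / 2: f_n only needs f_0..f_{n-1}.
        f.append(u[m] / (2 * n))
    return f
```

For instance, `sqrt_one_plus_z(5)` yields the coefficients 1, 1/2, -1/8, 1/16, -5/128 of $\sqrt{1 + z}$. Note that even in this tiny case the Jacobian $2f$ had to be inverted as a power series.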

If $\Phi$ is a system of differentially algebraic equations, then we may also seek to apply Newton's method. For non-degenerate systems, and assuming that we have computed the solution $f$ and a fundamental system of solutions for the linearized equation up till order $n$, one step of Newton's method yields an extension of the solutions up till order $2n - k$, for a fixed constant $k \in \mathbb{N}$. From an asymptotic point of view, this means that the complexities (5) and (6) remain valid.

It is natural to ask for a relaxed algorithm for the resolution of (1), with a complexity similar to (3). We will restrict our attention to so-called “quasi-linear equations”, for which the linearized system is “non-degenerate”. This concept will be introduced formally in section 3 and studied in more detail in section 6. In section 4, we present the main algorithm of this paper for the relaxed resolution of (1).

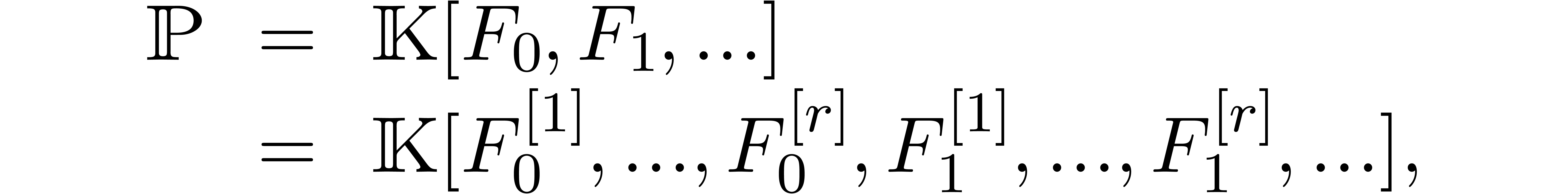

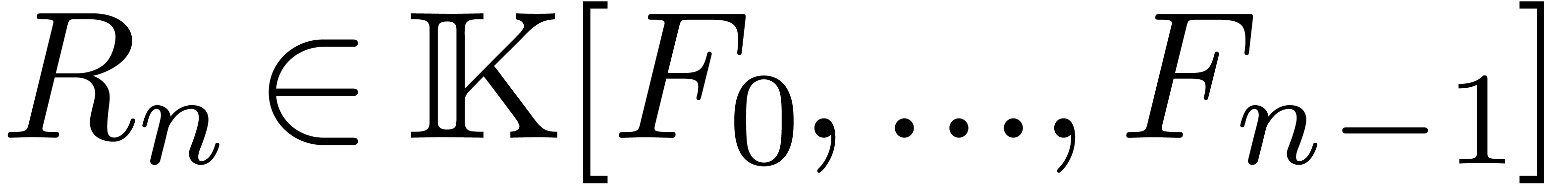

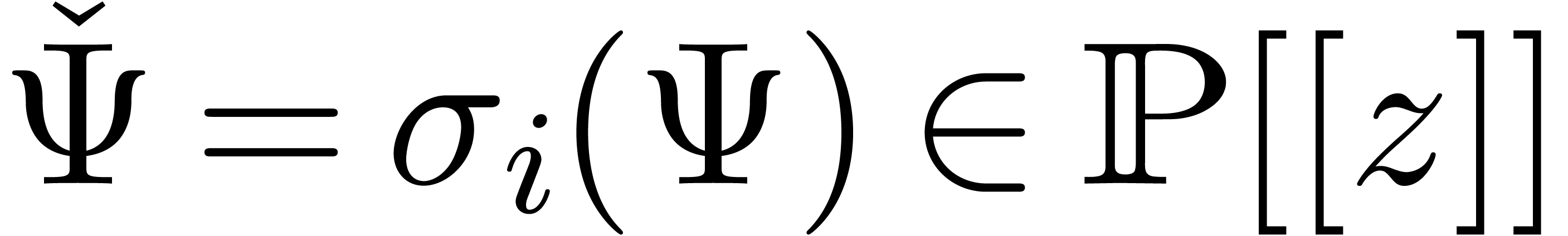

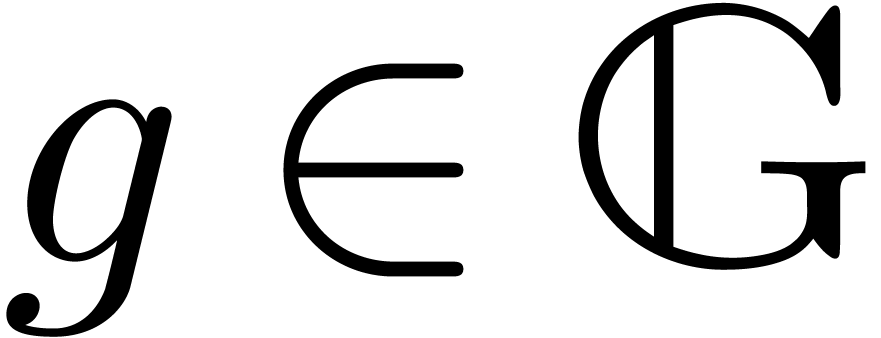

The idea behind the algorithm is simple: considering not yet computed coefficients of $f$ as formal unknowns, we solve the system of equations $\Phi(f)_0 = \cdots = \Phi(f)_n = 0$ for increasing values of $n$. In particular, the coefficients of the power series involved in the resolution process are no longer in $\mathbb{K}$, but rather polynomials in the unknown coefficients $F_{i,k}$. For each subexpression $\Psi$ of $\Phi$ and modulo adequate substitution of known coefficients $F_{i,k}$ by their values $f_{i,k}$, it turns out that, for large $n$, the coefficient of $z^n$ in $\Psi$ is a constant plus a linear combination of unknowns $F_{i,k}$ whose indices $k$ stay within a fixed distance of $n$, the bounds being constants that only depend on $\Phi$. Moreover, each relaxed multiplication with symbolic coefficients can be reduced to a relaxed multiplication with constant coefficients and a finite number of scalar multiplications with symbolic coefficients. The main result is stated in theorem 5 and generalizes the previous complexity bound (3).


In section 6, we provide a more detailed study of the linearized system associated to (1). This will allow us to make the dependency of  on  more explicit. On the one hand, given a quasi-linear system as input, this will enable us to provide a certificate that the system is indeed quasi-linear. On the other hand, the asymptotic complexity bounds can be further sharpened in lucky situations (see theorem 11).

Finally, in section 7, we outline how to generalize our approach to more general functional equations and partial differential equations.


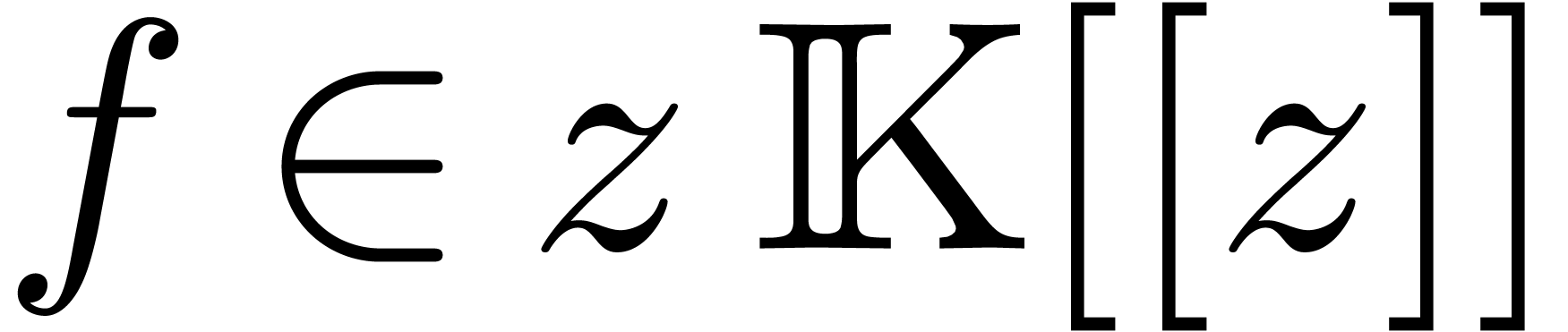

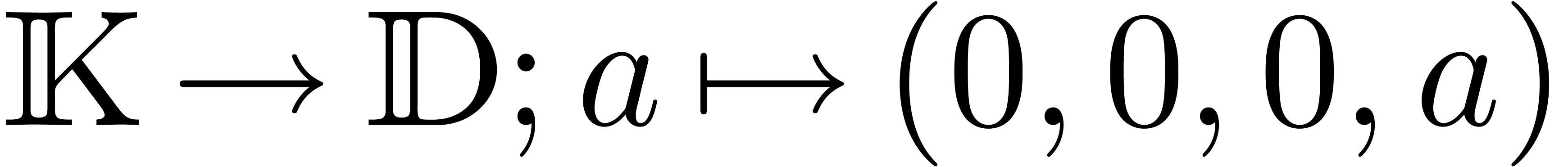

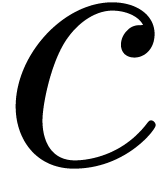

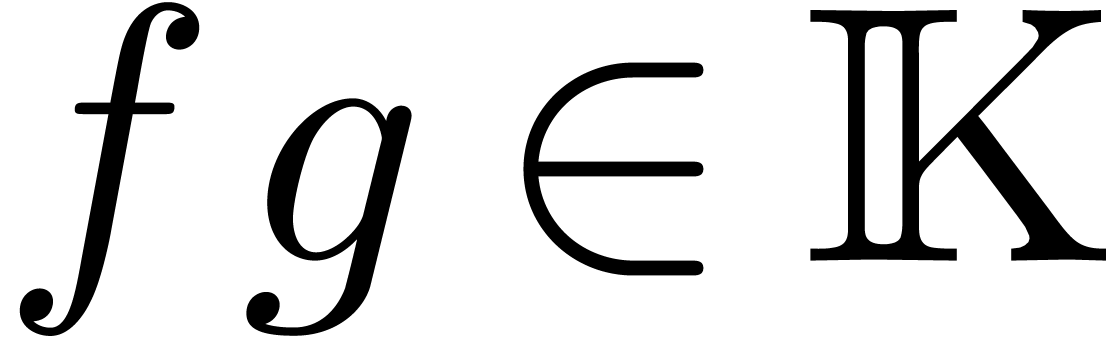

Throughout this article, $\mathbb{K}$ will denote an effective field of characteristic zero. This means that elements of $\mathbb{K}$ can be encoded by data structures on a computer and that we have algorithms for performing the field operations in $\mathbb{K}$.

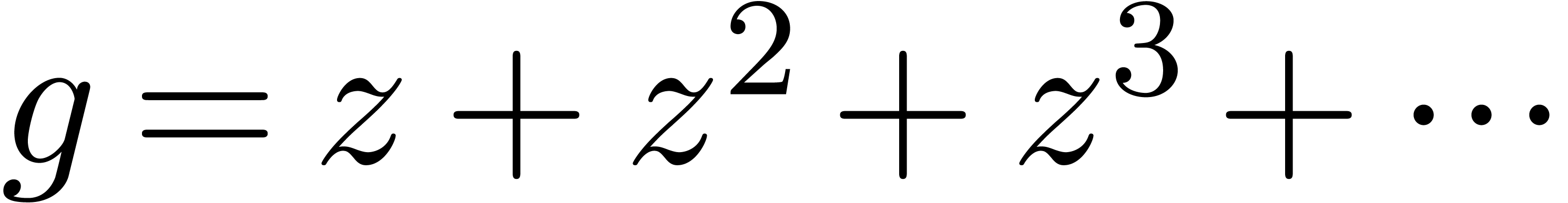

Let us briefly recall the technique of relaxed power series

computations, which is explained in more detail in [vdH02a].

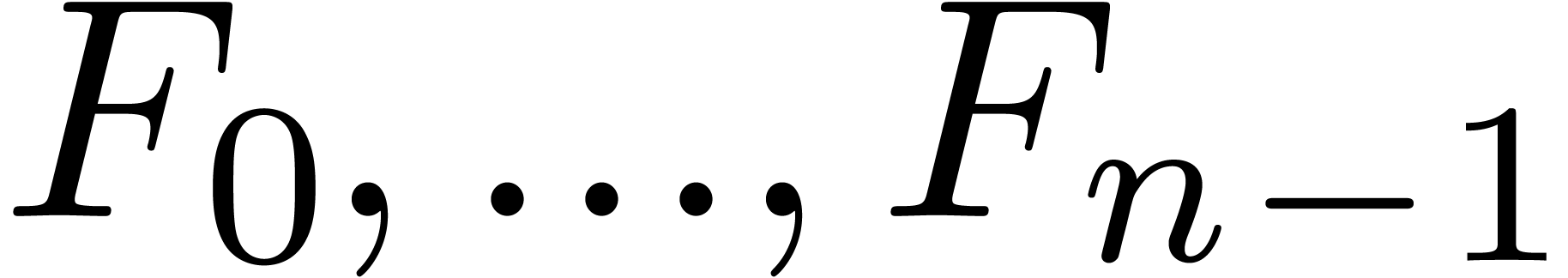

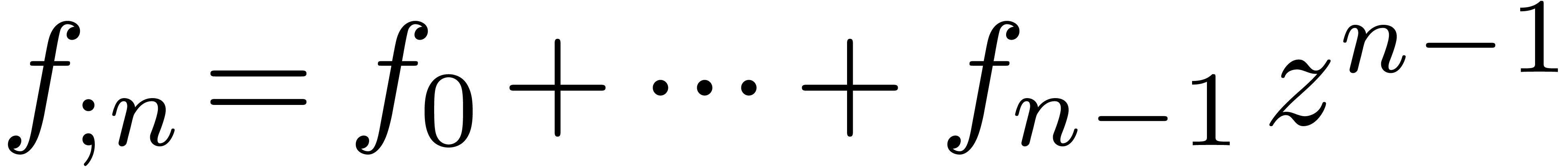

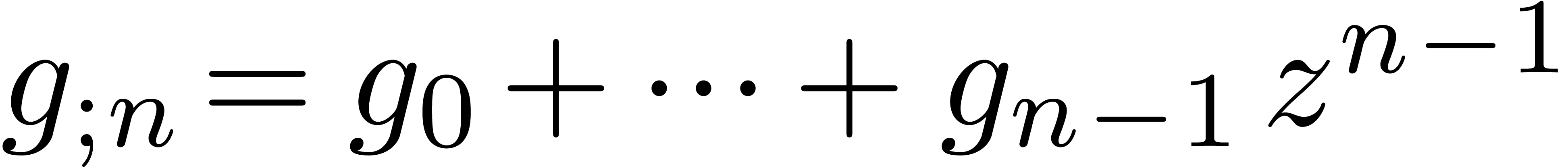

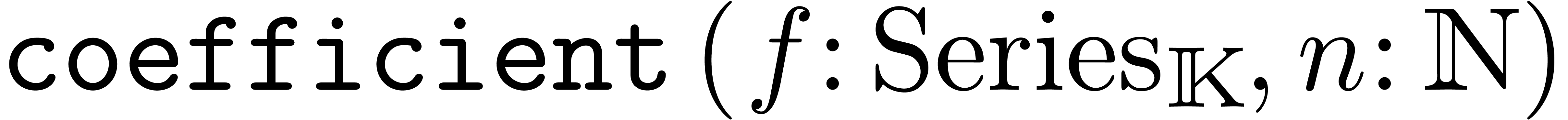

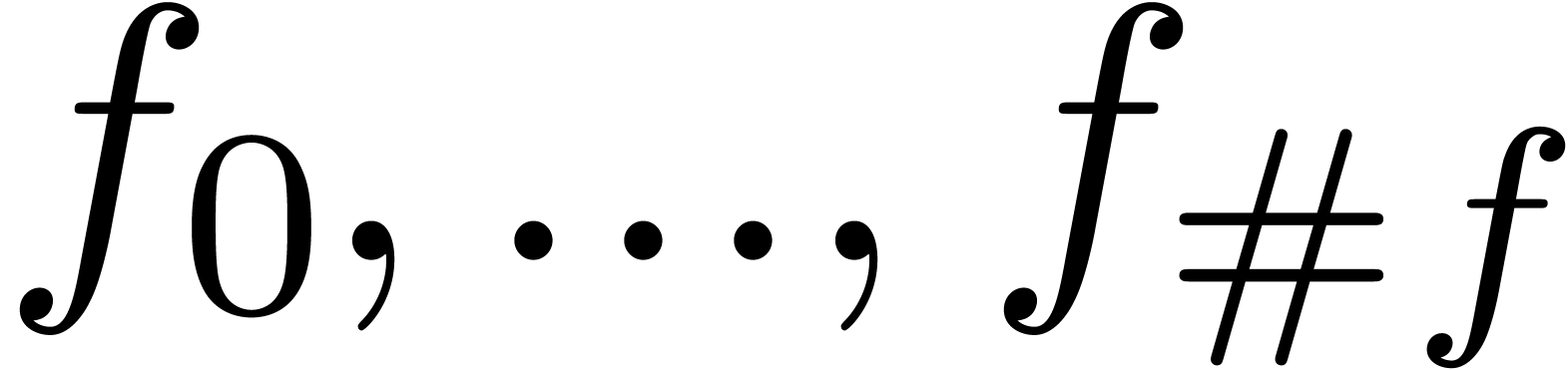

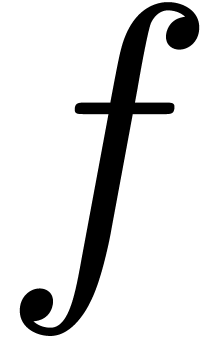

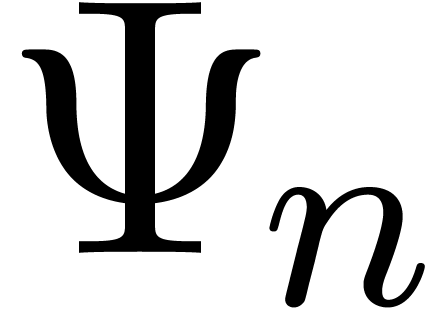

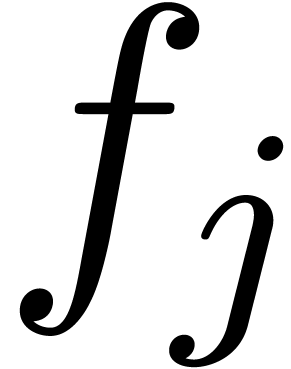

In this computational model, a power series  is

regarded as a stream of coefficients

is

regarded as a stream of coefficients  .

When performing an operation

.

When performing an operation  on power series it

is required that the coefficient

on power series it

is required that the coefficient  of the result

is output as soon as sufficiently many coefficients of the inputs are

known, so that the computation of

of the result

is output as soon as sufficiently many coefficients of the inputs are

known, so that the computation of  does not

depend on the further coefficients. For instance, in the case of a

multiplication

does not

depend on the further coefficients. For instance, in the case of a

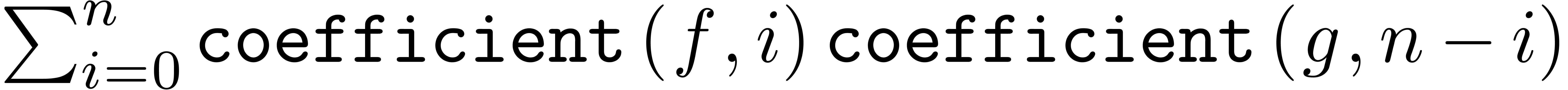

multiplication  , we require

that

, we require

that  is output as soon as

is output as soon as  and

and  are known. In particular, we may use the

naive formula

are known. In particular, we may use the

naive formula  for the computation of

for the computation of  .

.

The additional constraint on the time when coefficients should be output

admits the important advantage that the inputs may depend on the output,

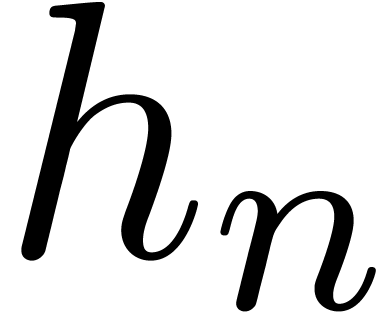

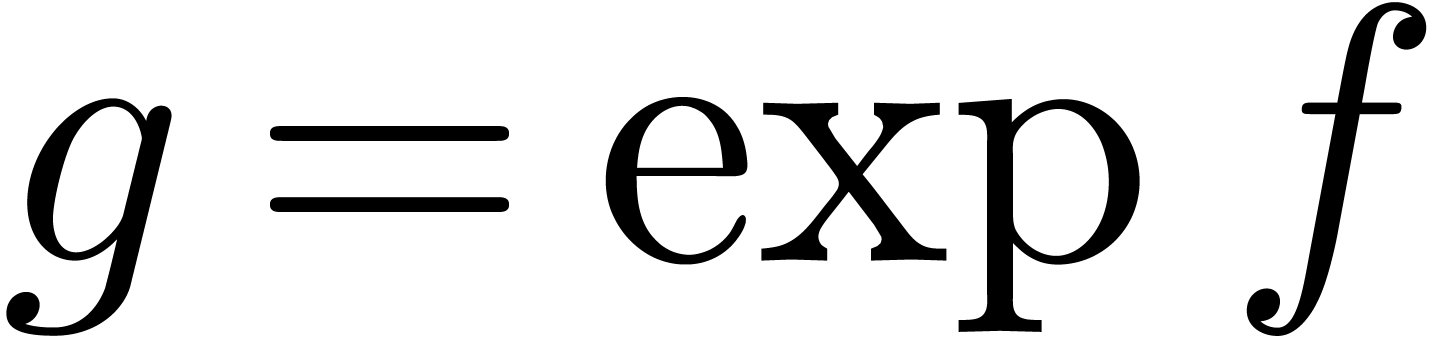

provided that we add a small delay. For instance, the exponential  of a power series

of a power series  may be

computed in a relaxed way using the formula

may be

computed in a relaxed way using the formula

Indeed, when using the naive formula for products, the coefficient  is given by

is given by

and the right-hand side only depends on the previously computed

coefficients  .

.
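The recurrence above is easy to check numerically. The following Python sketch (the function name `relaxed_exp` is ours) computes $g = \exp f$ coefficient by coefficient with exact rational arithmetic; when producing $g_n$ it only reads $g_0, \dots, g_{n-1}$, which is precisely what allows $g$ to appear on both sides of its defining equation.

```python
from fractions import Fraction


def relaxed_exp(f, order):
    """First `order` coefficients (order >= 1) of g = exp(f), f given by its
    coefficient list with f[0] == 0; missing coefficients count as zero."""
    if f and f[0] != 0:
        raise ValueError("f must have zero constant term")

    def f_coeff(i):
        return Fraction(f[i]) if i < len(f) else Fraction(0)

    g = [Fraction(1)]  # g_0 = exp(0) = 1
    for n in range(1, order):
        # g = 1 + int(f' g): (f')_{i-1} = i f_i, and integration divides by n.
        # Only g_0, ..., g_{n-1} are read, so g may feed back into itself.
        g.append(sum(i * f_coeff(i) * g[n - i] for i in range(1, n + 1)) / n)
    return g
```

For example, `relaxed_exp([0, 1], 5)` returns the coefficients 1, 1, 1/2, 1/6, 1/24 of $\exp z$.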

The main drawback of the relaxed approach is that we cannot directly use fast algorithms on polynomials for computations with power series. For instance, assuming that $\mathbb{K}$ has sufficiently many $2^p$-th roots of unity and that field operations in $\mathbb{K}$ can be done in time $O(1)$, two polynomials of degrees $< n$ can be multiplied in time $\mathsf{M}(n) = O(n \log n)$, using FFT multiplication [CT65]. Given the truncations $f_{;n} = f_0 + \cdots + f_{n-1} z^{n-1}$ and $g_{;n}$ at order $n$ of power series $f, g \in \mathbb{K}[[z]]$, we may thus compute the truncated product $(f g)_{;n}$ in time $\mathsf{M}(n)$ as well. This is much faster than the naive $O(n^2)$ relaxed multiplication algorithm for the computation of $(f g)_{;n}$. However, the formula for $(f g)_0$ when using FFT multiplication depends on all input coefficients $f_0, \dots, f_{n-1}$ and $g_0, \dots, g_{n-1}$, so the fast algorithm is not relaxed. Fortunately, efficient relaxed multiplication algorithms do exist:

Let $\mathsf{M}(n)$ be the time complexity for the multiplication of polynomials of degrees $< n$ in $\mathbb{K}[z]$. Then there exists a relaxed multiplication algorithm for series in $\mathbb{K}[[z]]$ of time complexity $\mathsf{R}(n) = O(\mathsf{M}(n) \log n)$.

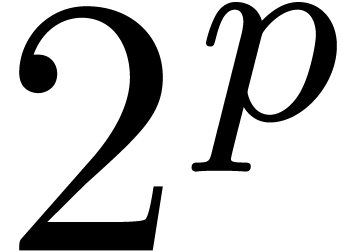

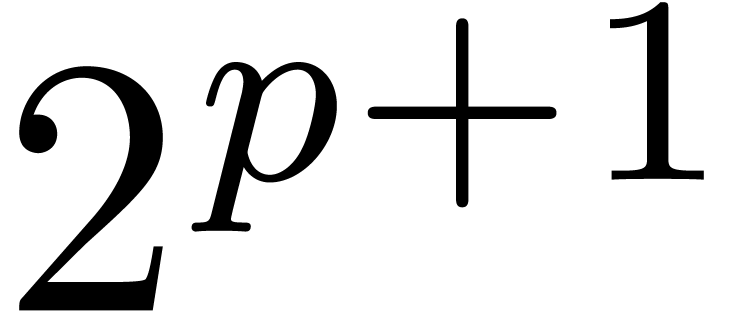

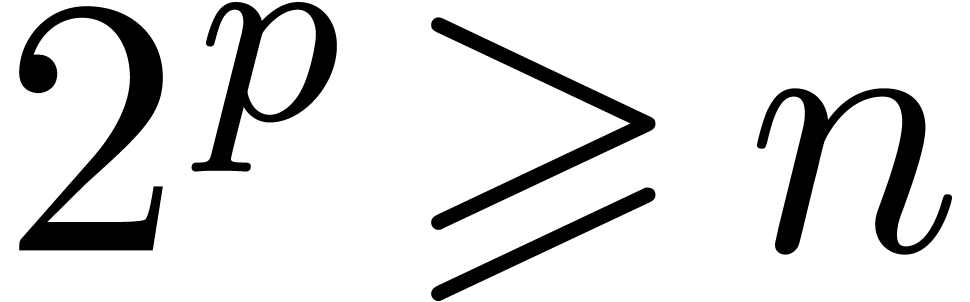

If $\mathbb{K}$ admits a primitive $2^p$-th root of unity for all $p$, then there exists a relaxed multiplication algorithm of time complexity $\mathsf{R}(n) = O(n \log n\, e^{2 \sqrt{\log 2 \log \log n}})$.

In practice, the existence of a $2^p$-th root of unity with $2^p \geq n$ suffices for multiplication up to order $n$.

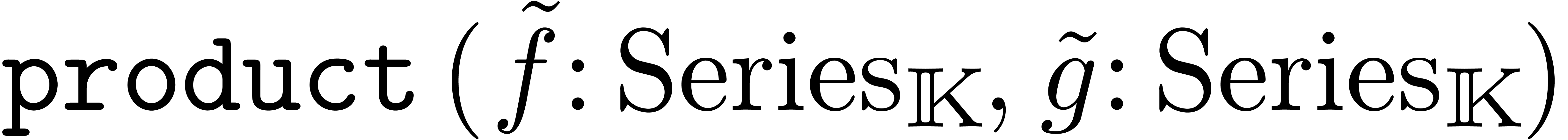

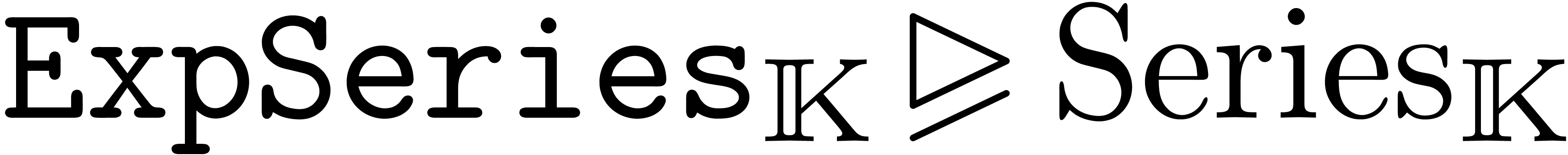

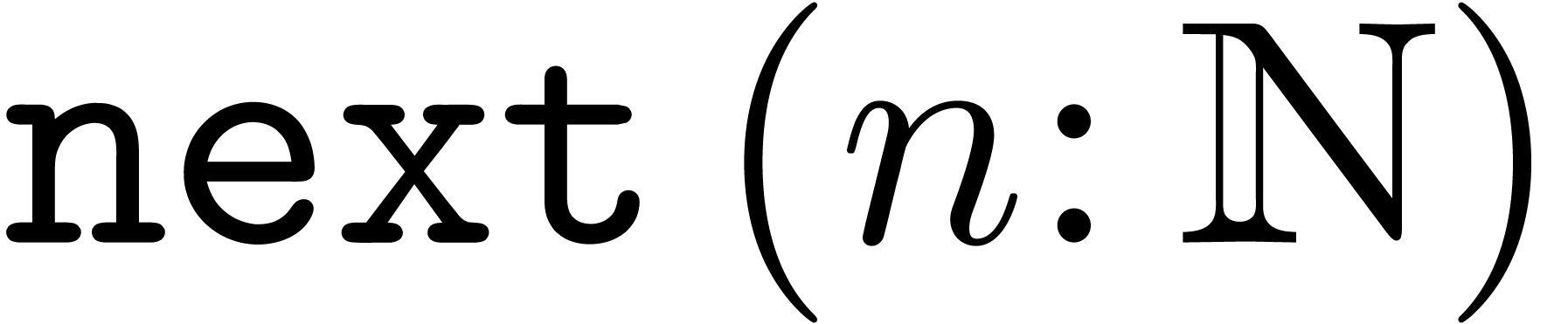

An efficient C++ implementation of relaxed power series is available in the  . Relaxed power series are implemented as an abstract base class which contains the already computed coefficients and a protected virtual method for computing the next coefficient. For instance, the naive product of two series can be implemented using the following concrete derived class:

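Class (naive product of two series; derived from the abstract base class)

Fields $f$, $g$ (the two multiplicands)

Constructor $(f, g) \mapsto f g$

Method (computes the coefficient of index $n$)

Return $\sum_{i=0}^{n} f_i\, g_{n-i}$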

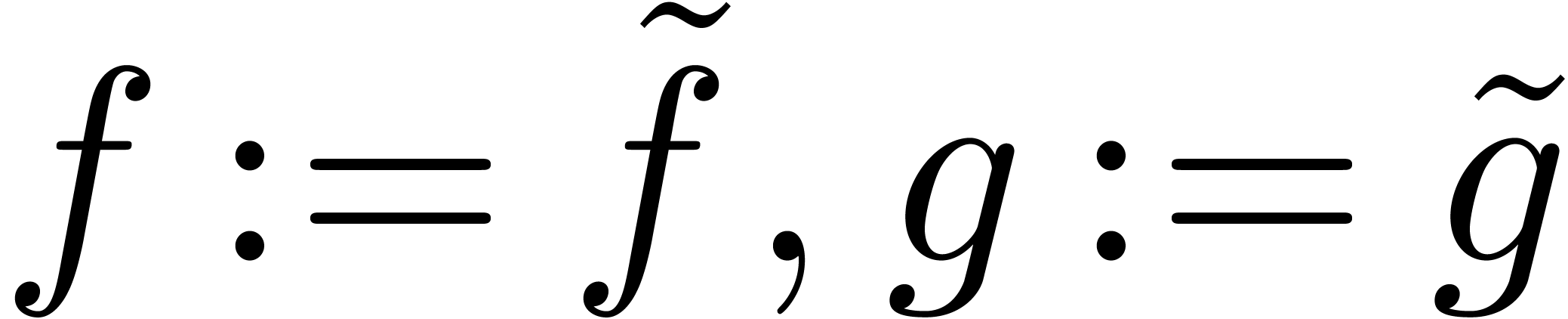

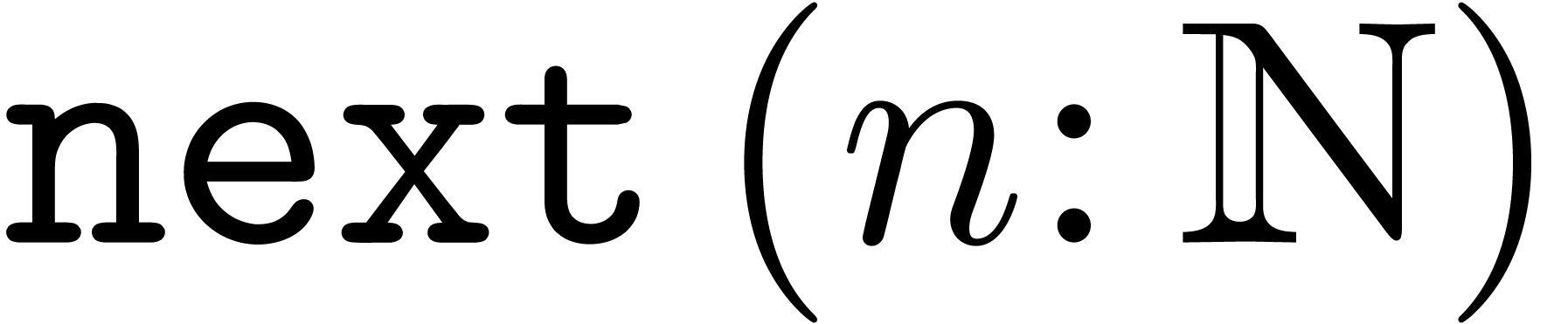

Let us briefly explain this code. In addition to the vector with the already computed coefficients (which is inherited from the abstract base class), the class contains two data fields for the multiplicands $f$ and $g$. The constructor builds the product of two series $f$ and $g$, and the method computes $(f g)_n$ using the naive relaxed method. The method does not take care of remembering previously computed coefficients and does not make sure that coefficients are computed in order. Therefore, a different public function is used for the computation of the coefficients of $f$ and $g$:

Function

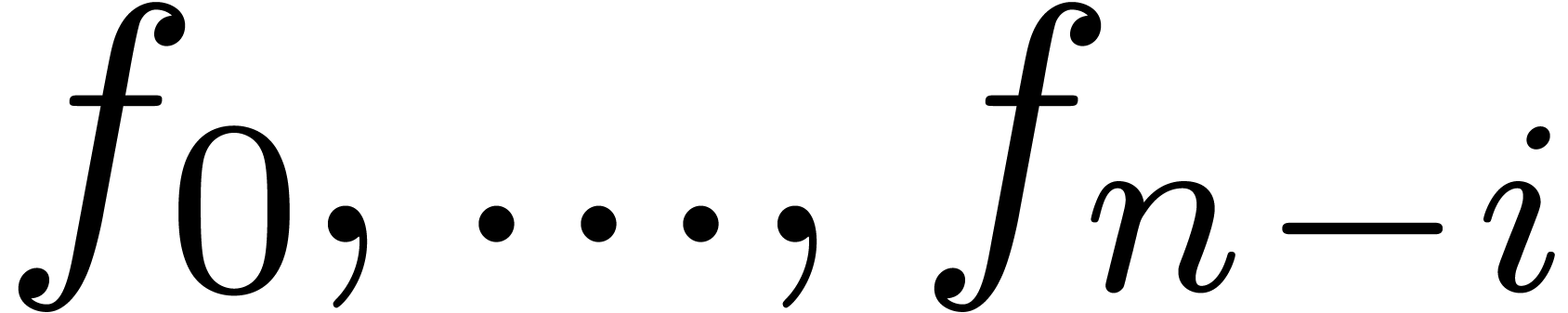

Let  denote the already computed coefficients

of

denote the already computed coefficients

of

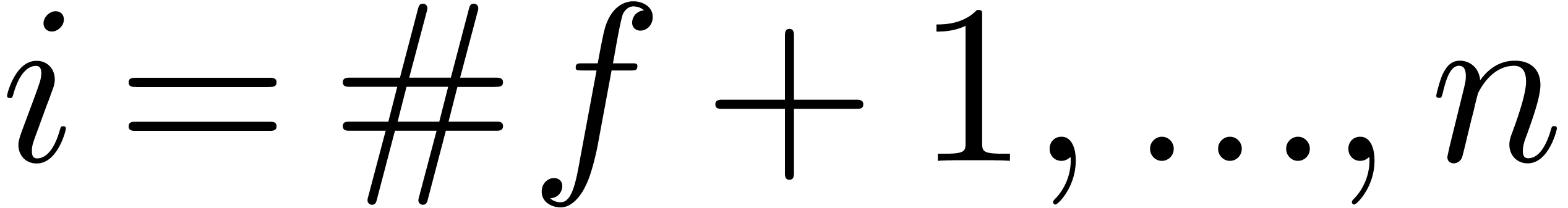

If  then

for

then

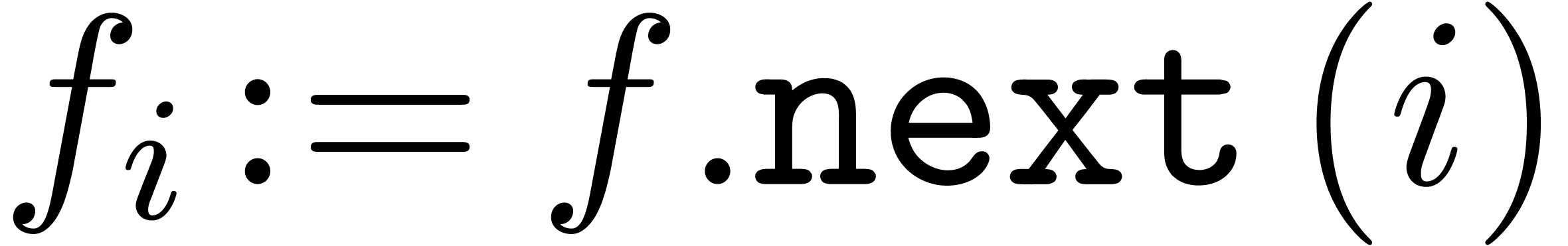

for  do

do

Return

In the case of an implicitly defined power series $g$, the method for computing $g_n$ involves the series $g$ itself. For instance, exponentiation $g = \exp f$ can be implemented as follows:

Class (exponential of a series; derived from the abstract base class)

Fields $f$

Constructor $f \mapsto \exp f$

Method (computes the coefficient of index $n$)

If $n = 0$ then return $1$

Else return $\left(\int f' g\right)_n$
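The class-based design described above can be mimicked in a few lines of Python. The sketch below is our own and not the C++ implementation mentioned in the text: `Series`, `next_coeff`, `Polynomial`, `Product`, `Derivative`, `Integral` and `Exp` are hypothetical names, and all products are naive. The public accessor `__getitem__` plays the role of the function that computes missing coefficients in order and caches them, while `next_coeff` plays the role of the protected method.

```python
from fractions import Fraction


class Series:
    """Abstract relaxed series: cached coefficients plus a next_coeff hook."""

    def __init__(self):
        self._coeffs = []  # already computed coefficients c_0, ..., c_{k-1}

    def next_coeff(self, n):
        """Compute coefficient n, assuming 0..n-1 are already cached."""
        raise NotImplementedError

    def __getitem__(self, n):
        # Public accessor: compute missing coefficients strictly in order.
        while len(self._coeffs) <= n:
            self._coeffs.append(self.next_coeff(len(self._coeffs)))
        return self._coeffs[n]


class Polynomial(Series):
    def __init__(self, coeffs):
        super().__init__()
        self._p = [Fraction(c) for c in coeffs]

    def next_coeff(self, n):
        return self._p[n] if n < len(self._p) else Fraction(0)


class Product(Series):
    def __init__(self, f, g):
        super().__init__()
        self.f, self.g = f, g

    def next_coeff(self, n):
        # Naive relaxed product: only reads f_0..f_n and g_0..g_n.
        return sum((self.f[i] * self.g[n - i] for i in range(n + 1)), Fraction(0))


class Derivative(Series):
    def __init__(self, f):
        super().__init__()
        self.f = f

    def next_coeff(self, n):
        # Reads f_{n+1}, so f must be computable one step ahead of this series.
        return (n + 1) * self.f[n + 1]


class Integral(Series):
    def __init__(self, f):
        super().__init__()
        self.f = f

    def next_coeff(self, n):
        # Constant term zero; coefficient n only reads f_{n-1} (a delay of one).
        return Fraction(0) if n == 0 else self.f[n - 1] / n


class Exp(Series):
    """g = exp(f) for f with f_0 = 0, via the implicit equation g = 1 + int(f' g)."""

    def __init__(self, f):
        super().__init__()
        self.rhs = Integral(Product(Derivative(f), self))  # refers to itself

    def next_coeff(self, n):
        return Fraction(1) if n == 0 else self.rhs[n]
```

For instance, `g = Exp(Polynomial([0, 1]))` represents $\exp z$, and `[g[n] for n in range(5)]` yields 1, 1, 1/2, 1/6, 1/24. The self-reference in `Exp` is safe only because `Integral` introduces a delay of one coefficient.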

Example  using the above algorithms. Besides  and  , three auxiliary series  ,  and  are created. Now the computation of  gives rise to the following sequence of assignments:

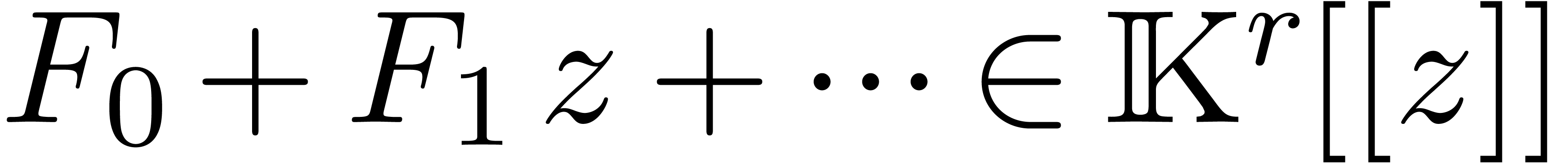

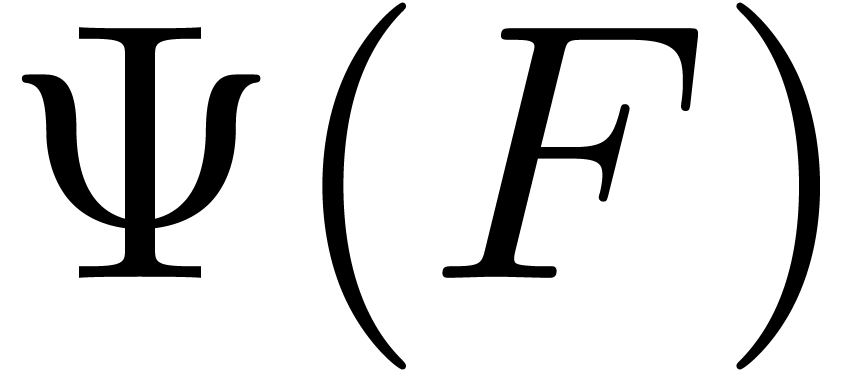

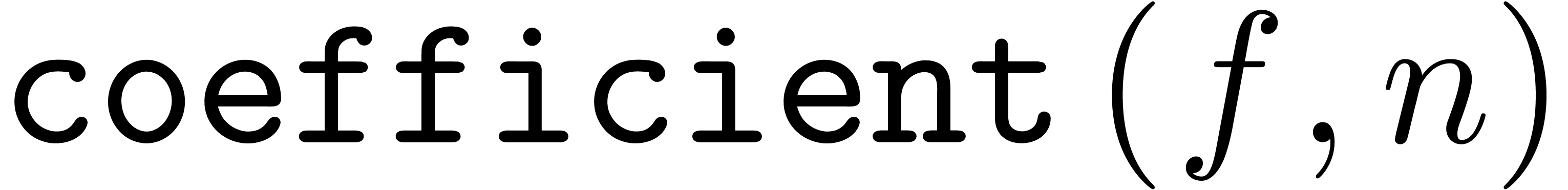

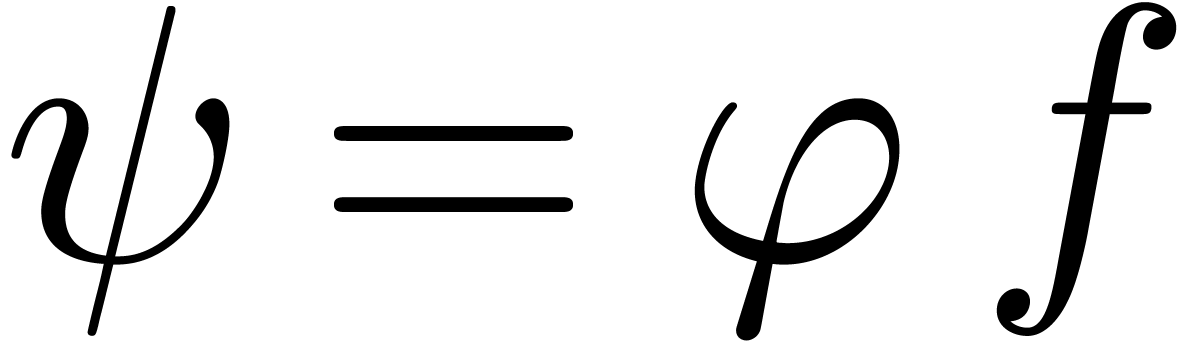

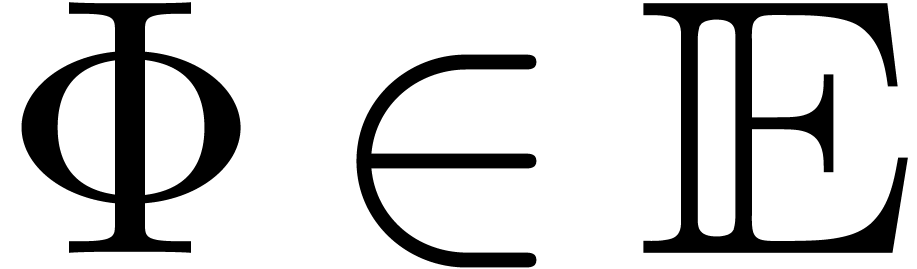

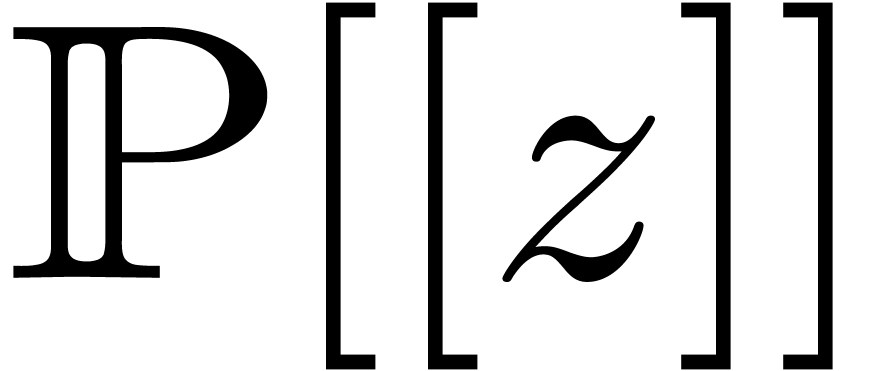

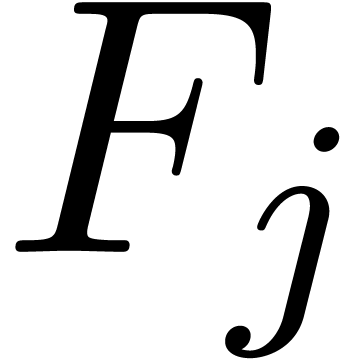

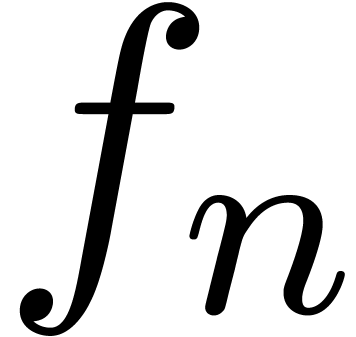

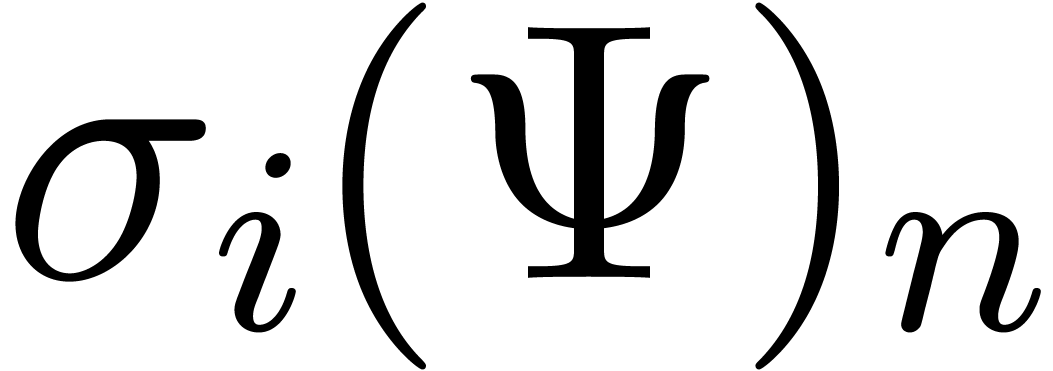

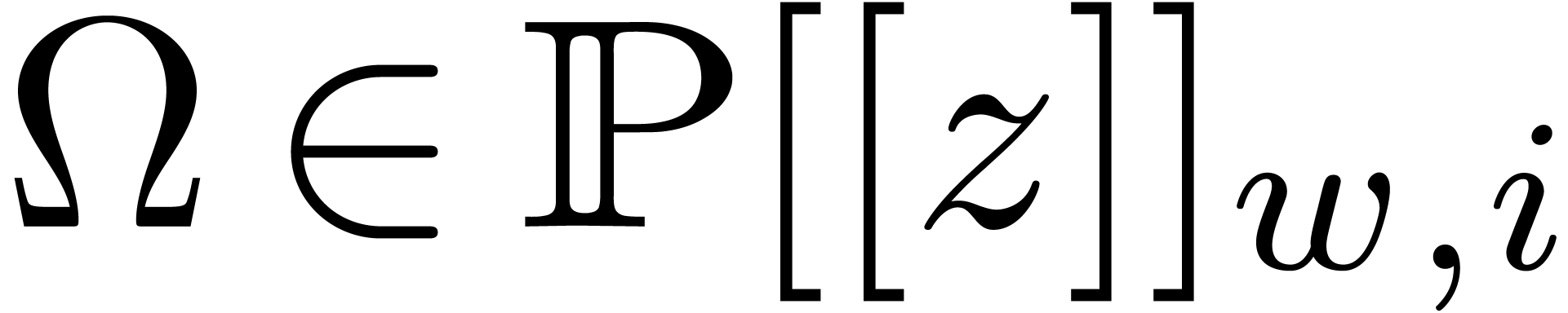

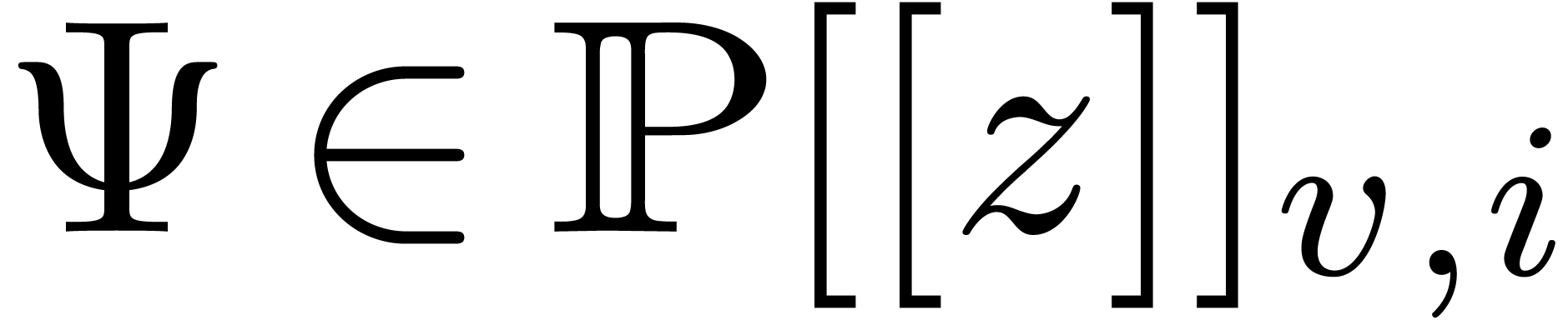

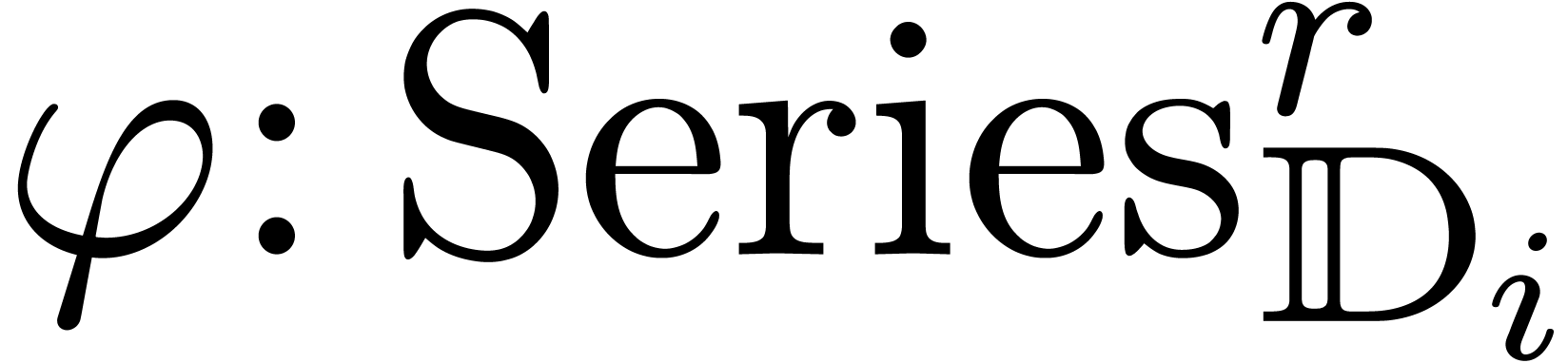

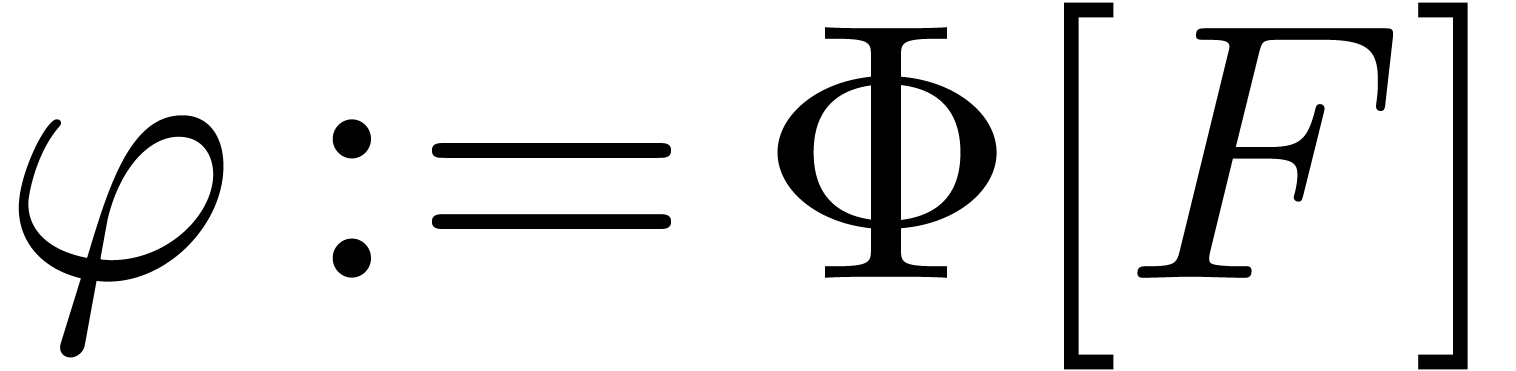

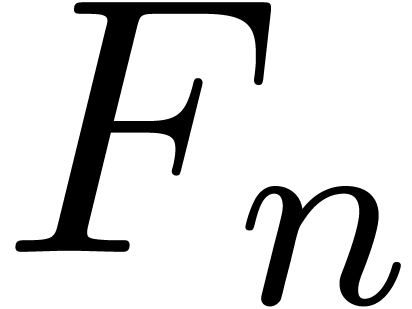

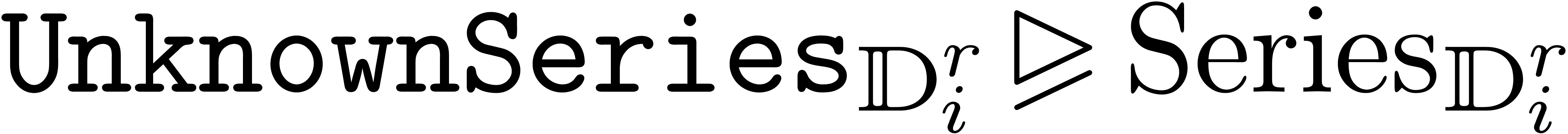

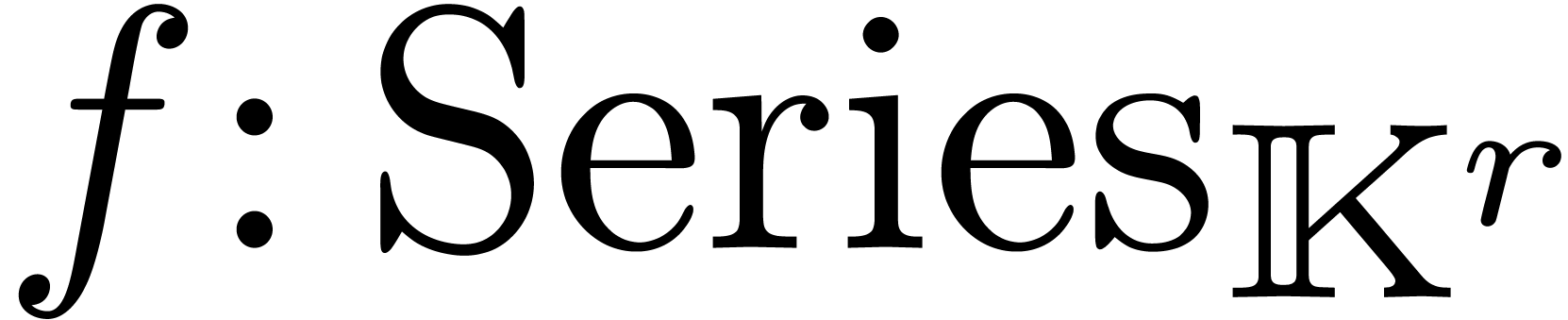

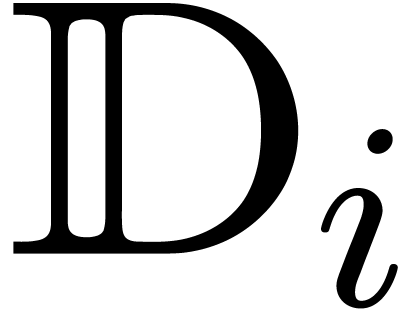

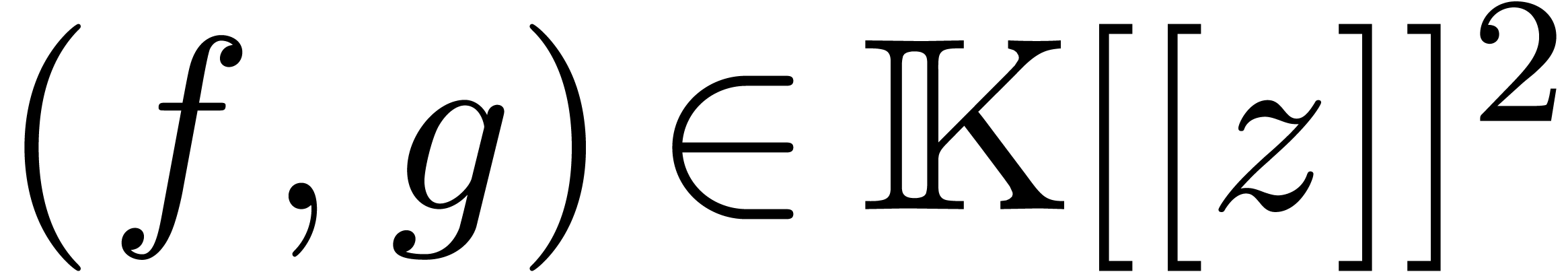

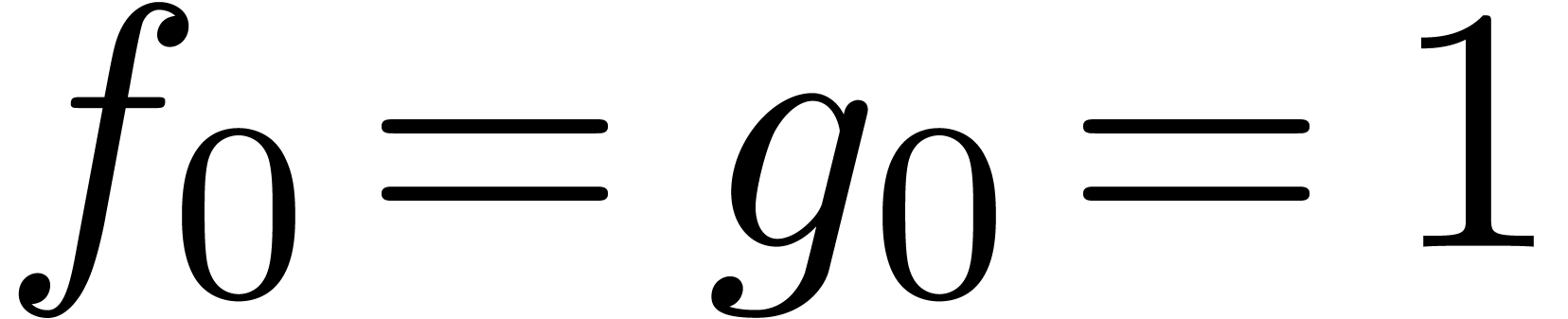

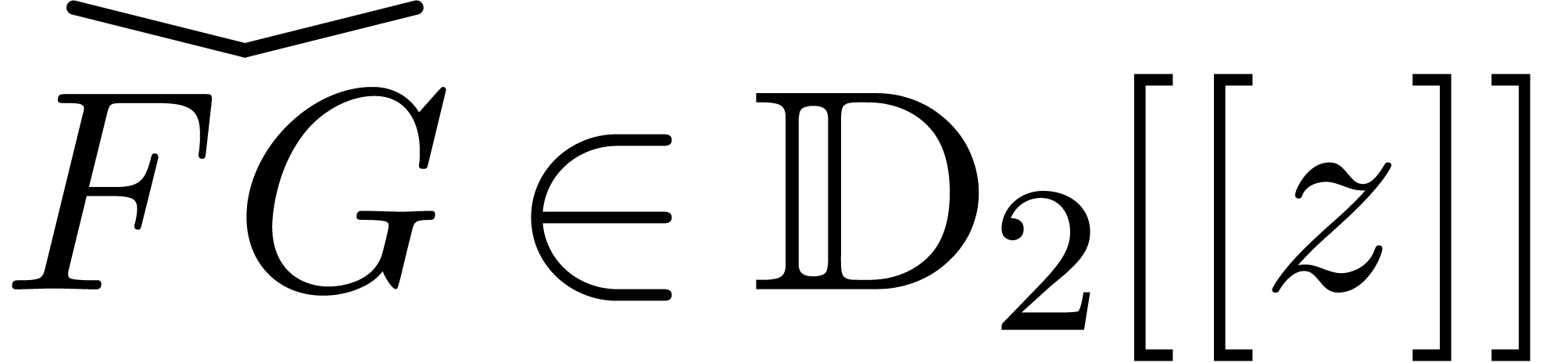

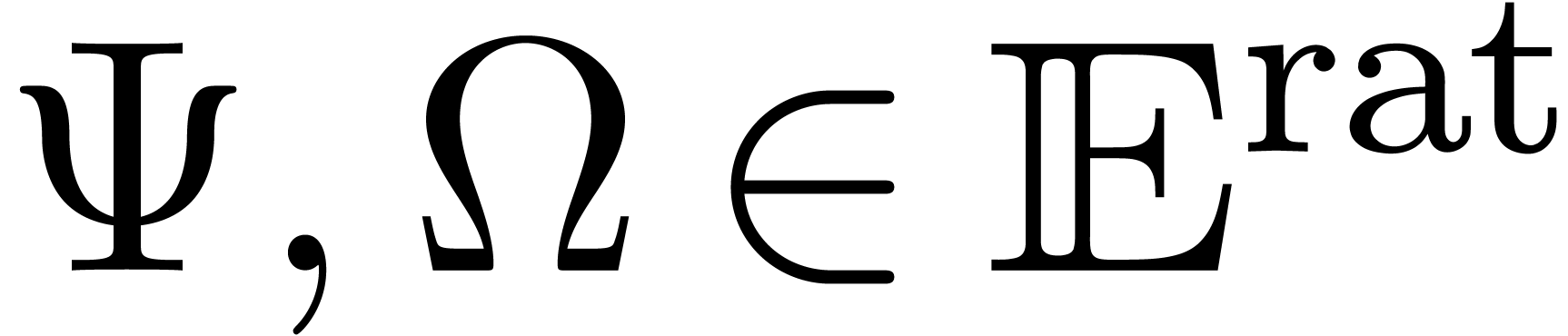

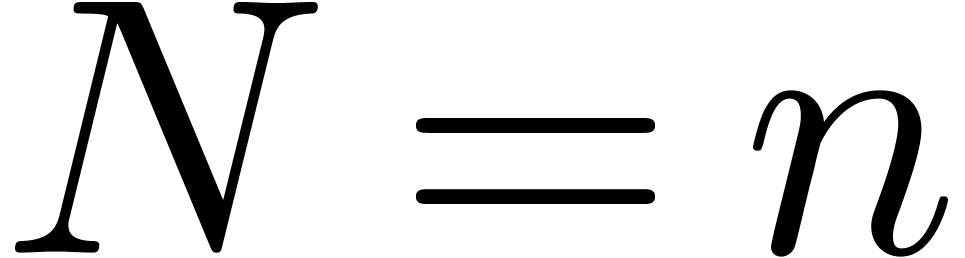

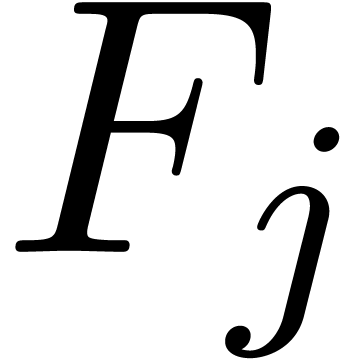

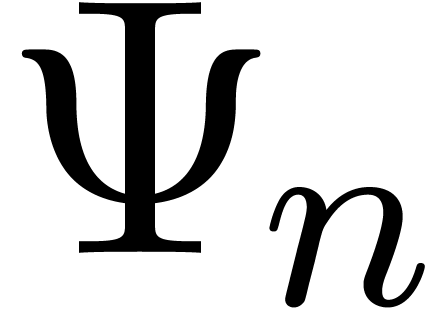

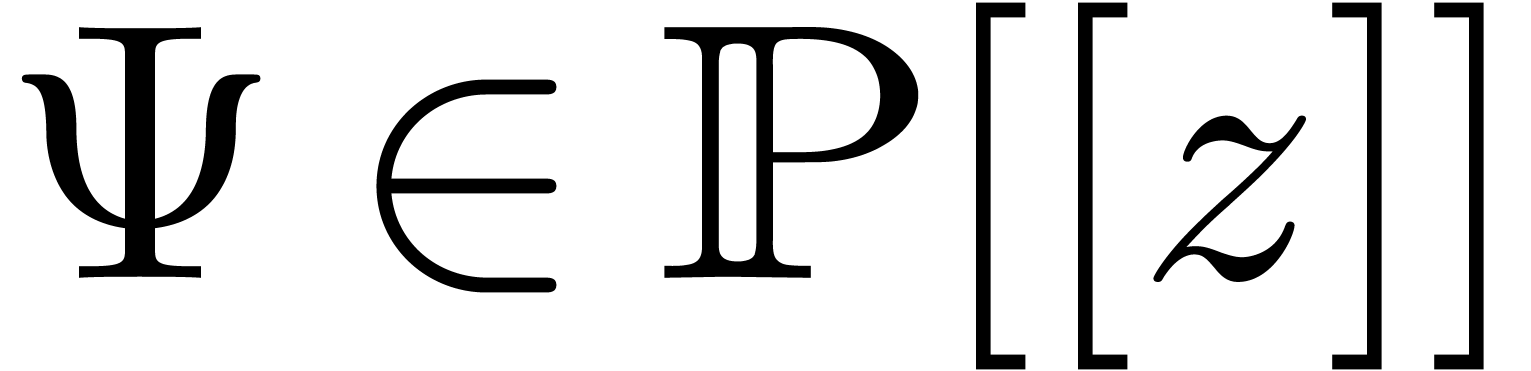

Let $F = (F_1, \dots, F_r)^{\mathsf{T}}$ be a column vector of $r$ indeterminate series in $\mathbb{K}[[z]]$. Alternatively, $F$ may be considered as a series with formal coefficients

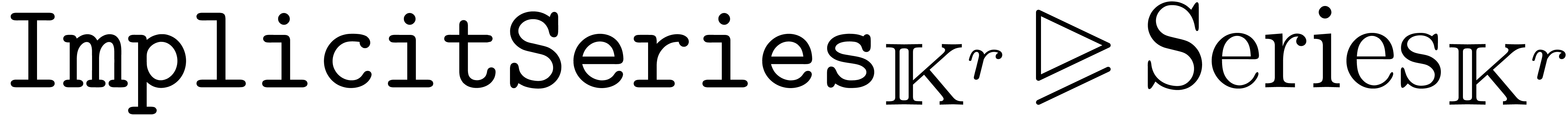

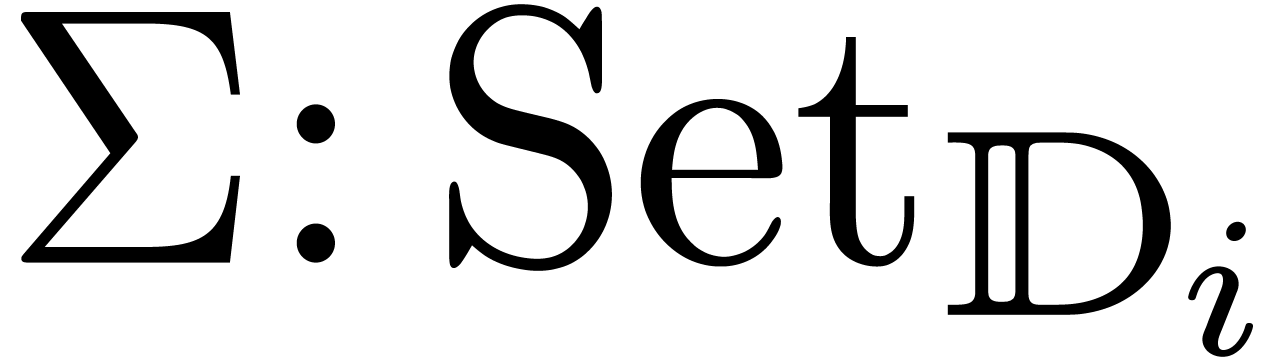

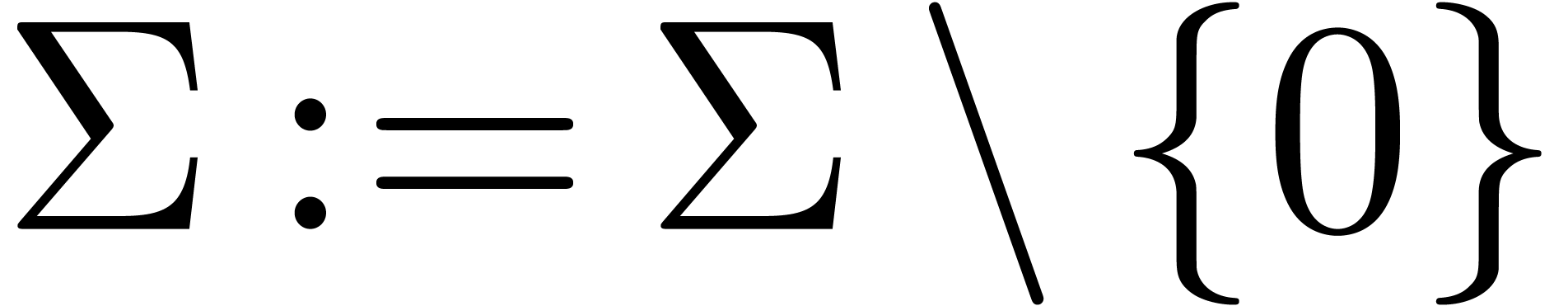

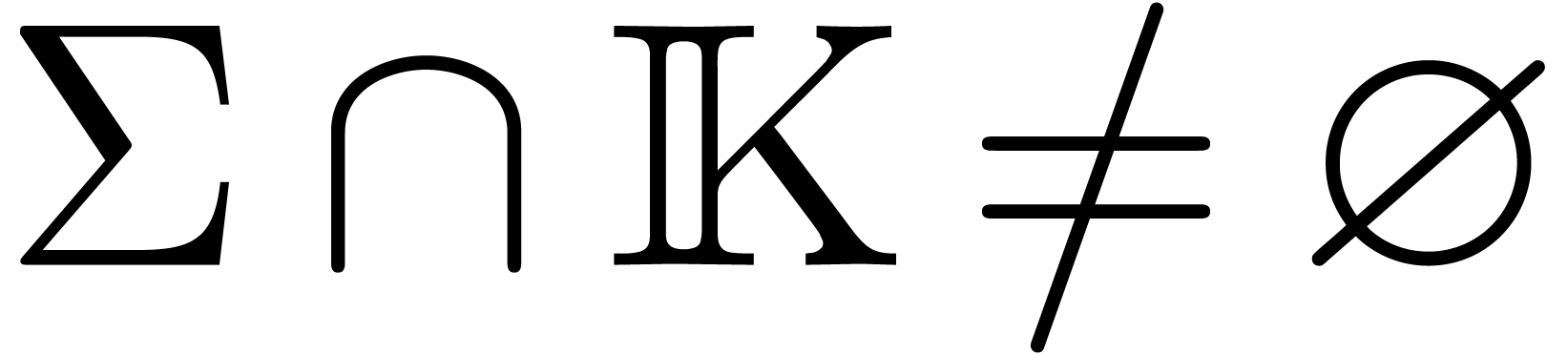

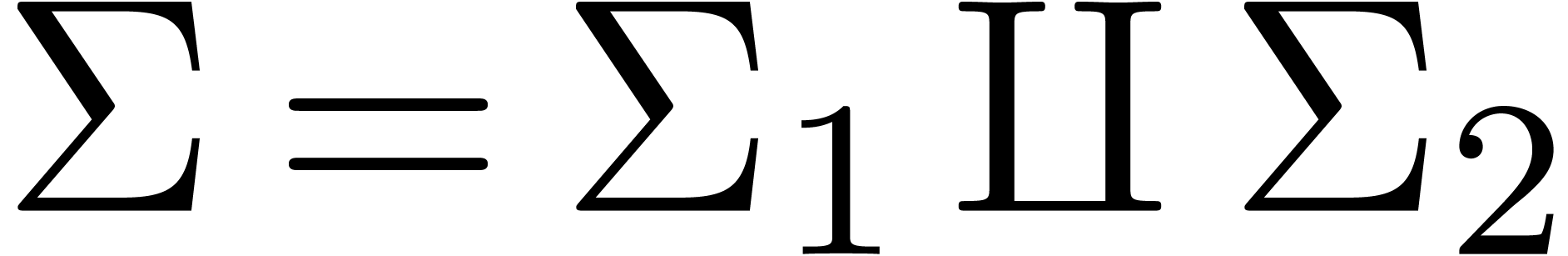

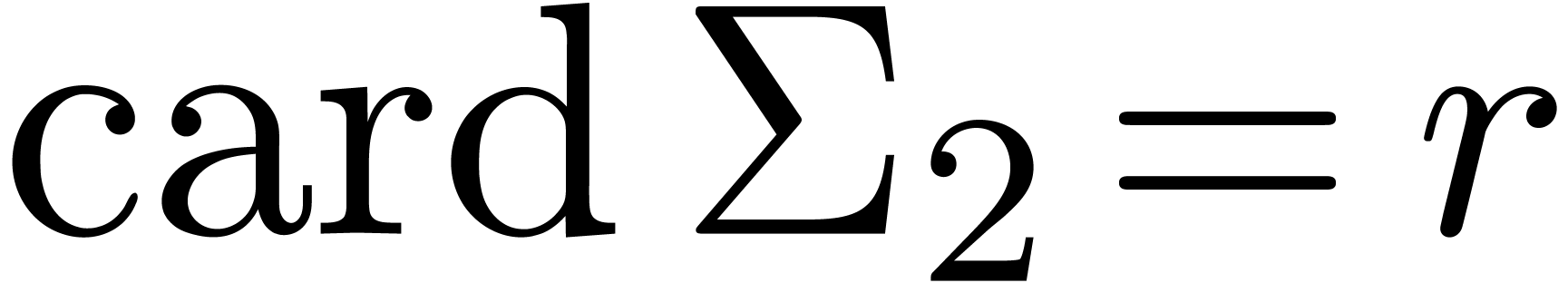

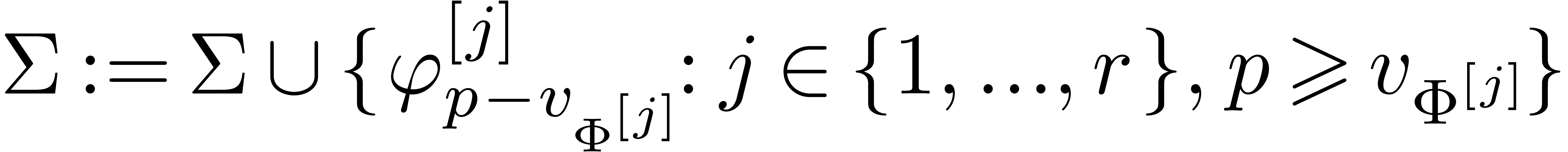

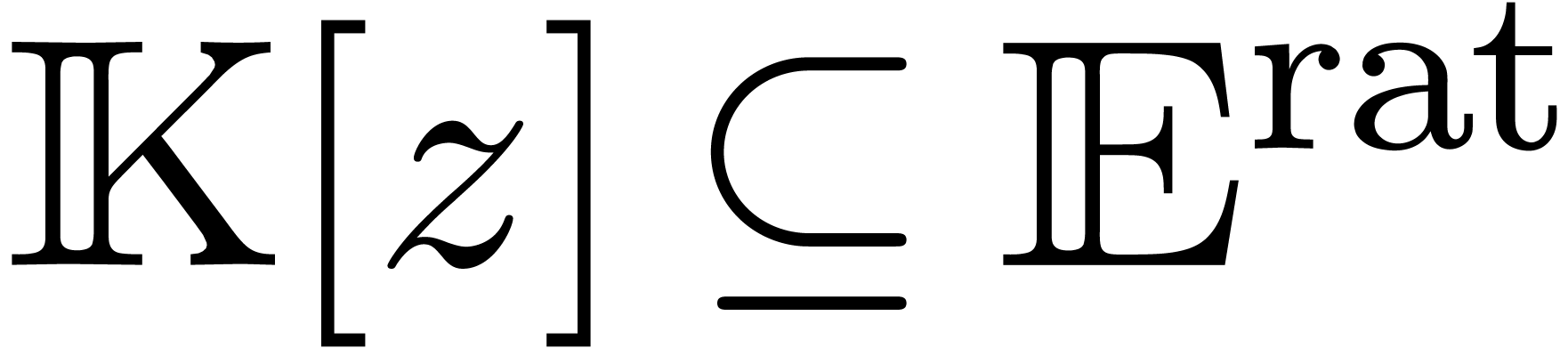

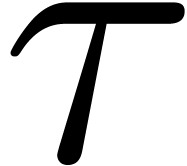

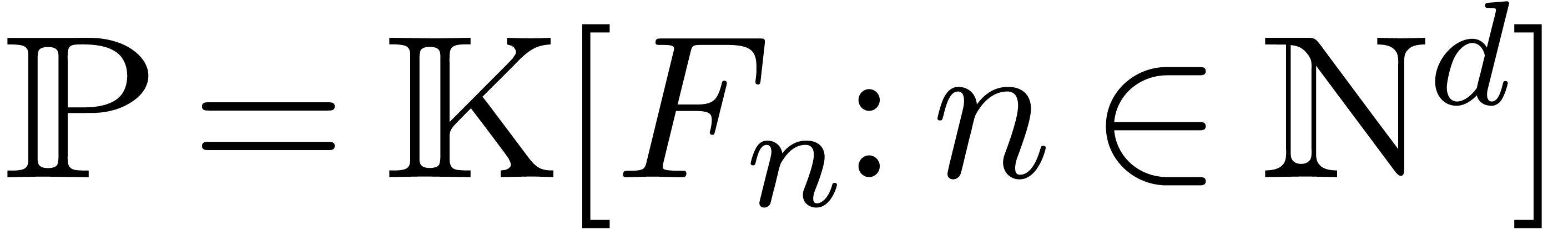

We will denote by $\mathcal{E}$ the set of expressions built up from $F_1, \dots, F_r$, $z$ and constants in $\mathbb{K}$ using ring operations, differentiation and integration (with $(\int \Psi)_0 = 0$ for all $\Psi \in \mathcal{E}$). Setting

$$F_i = \sum_{n \geq 0} F_{i,n} z^n \qquad (1 \leq i \leq r),$$

any expression in $\mathcal{E}$ may then be regarded as an element of $\mathbb{K}[F_{i,n}]_{1 \leq i \leq r,\, n \in \mathbb{N}}[[z]]$.

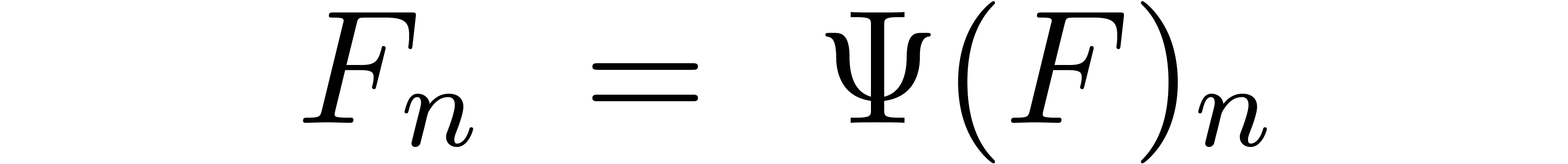

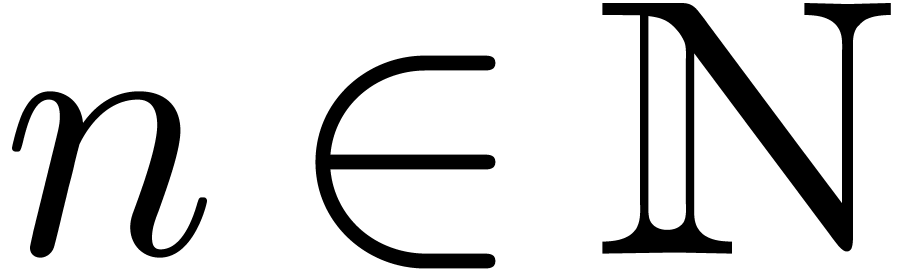

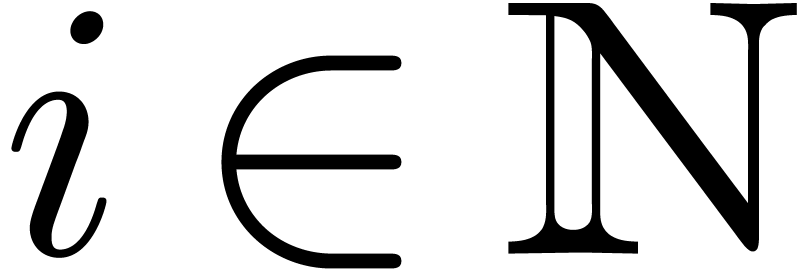

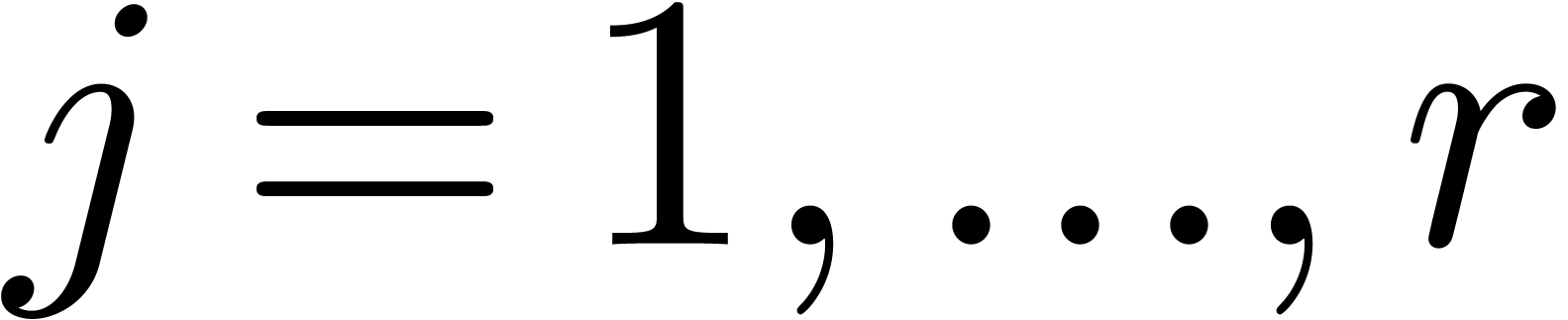

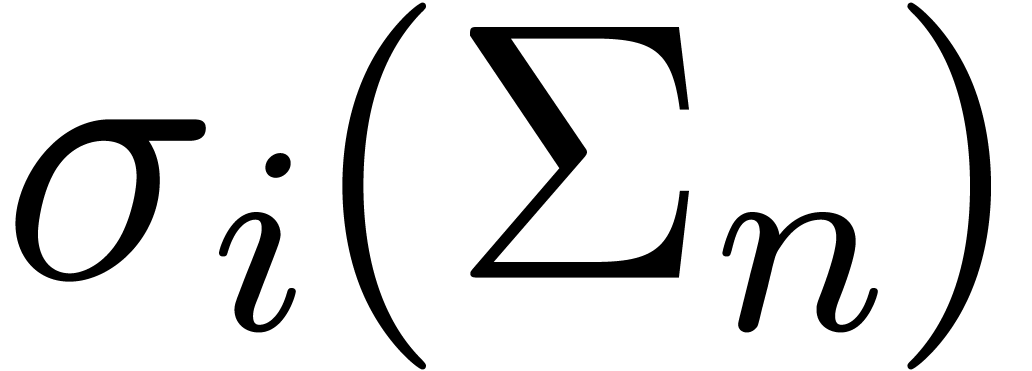

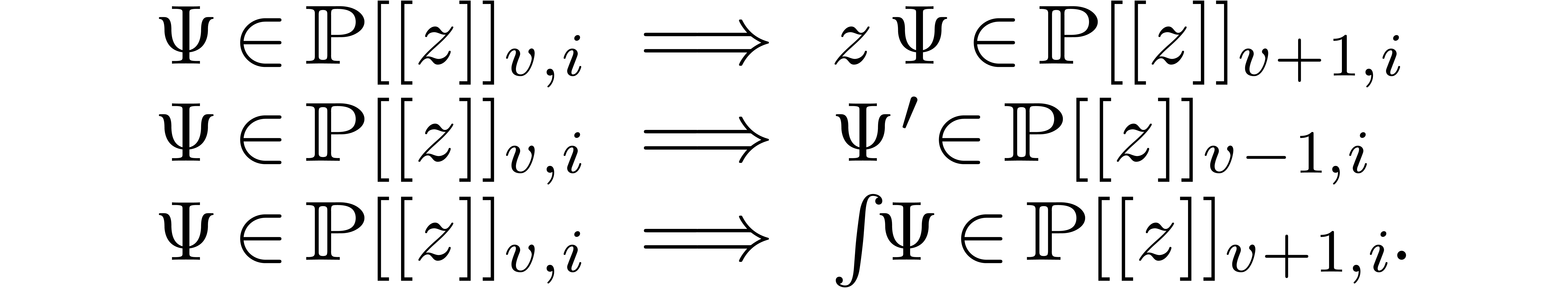

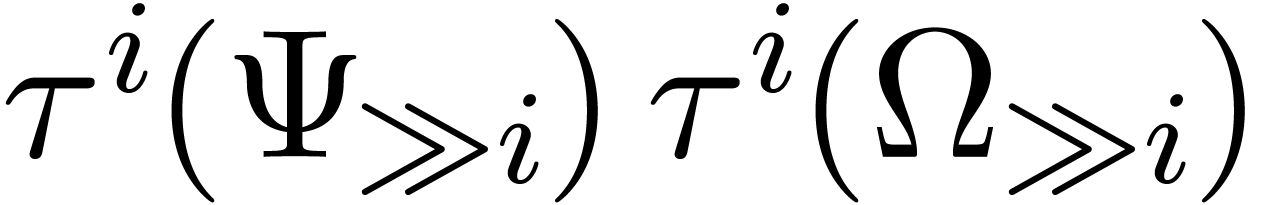

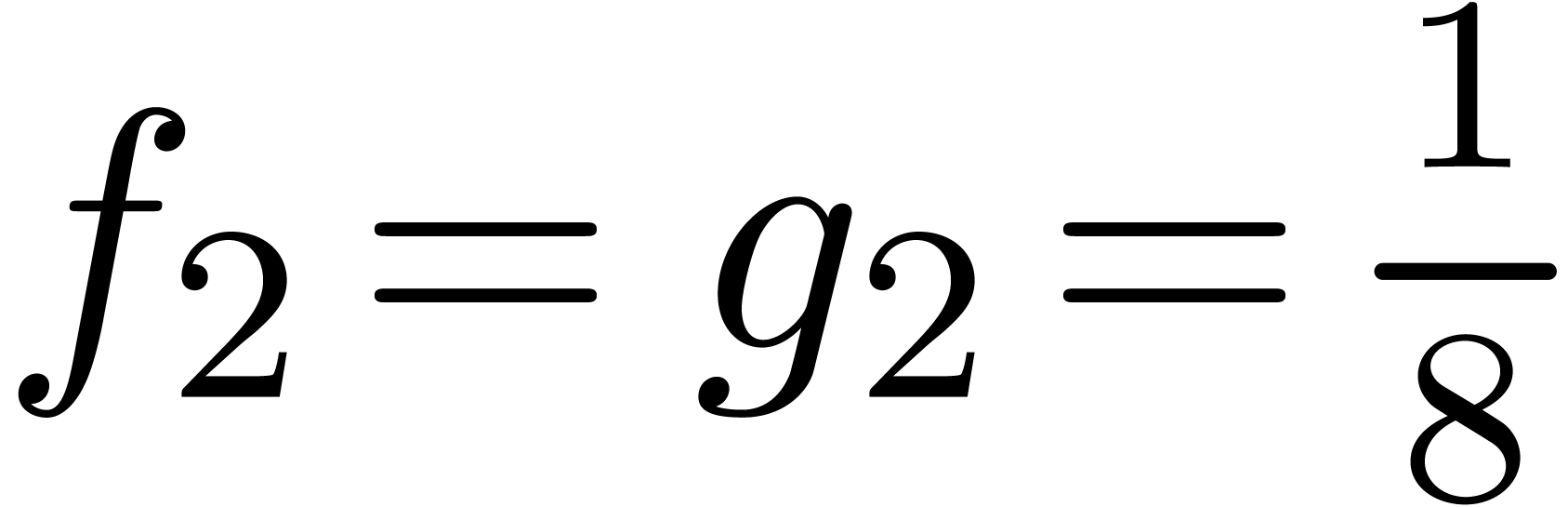

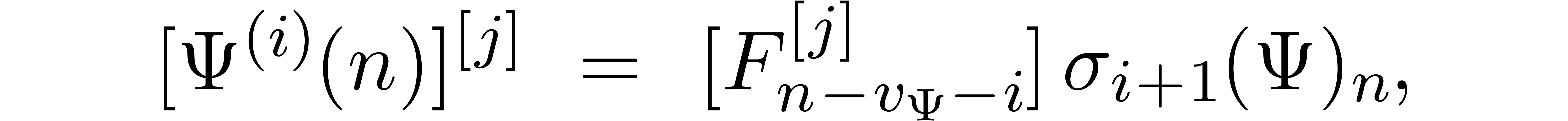

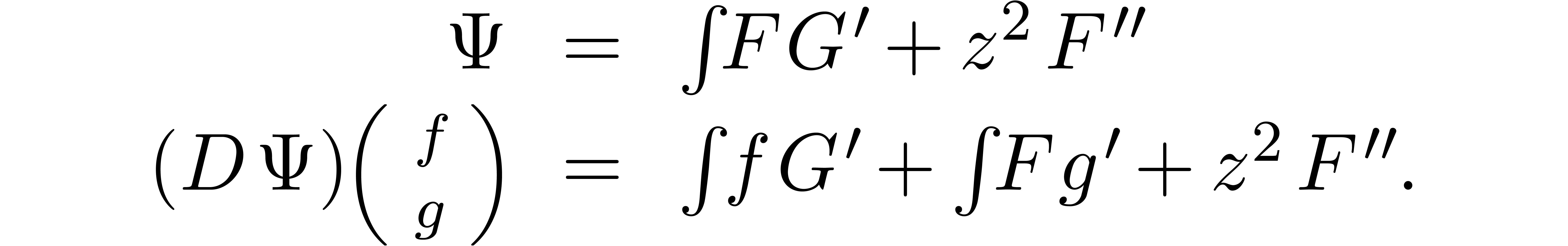

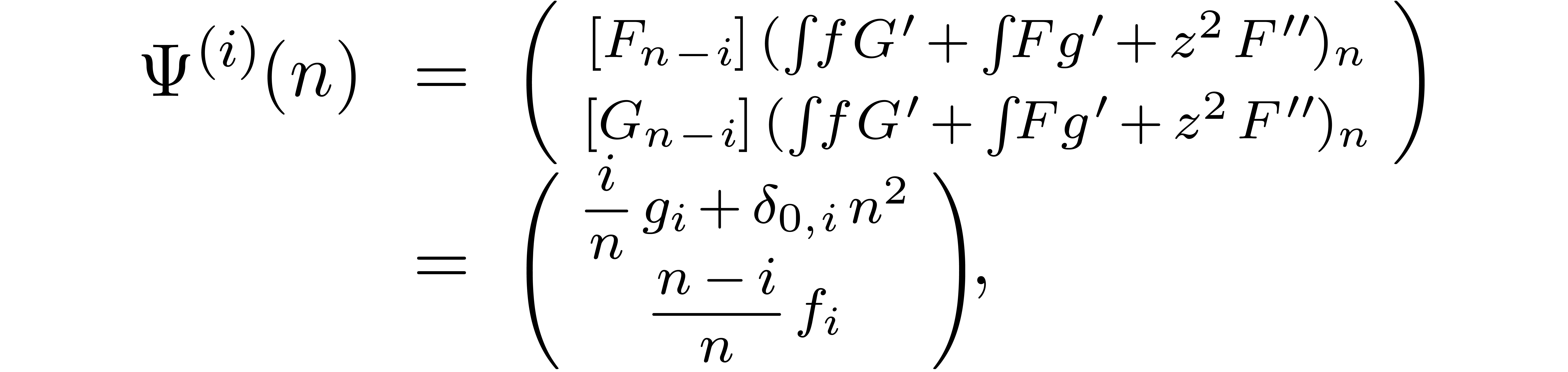

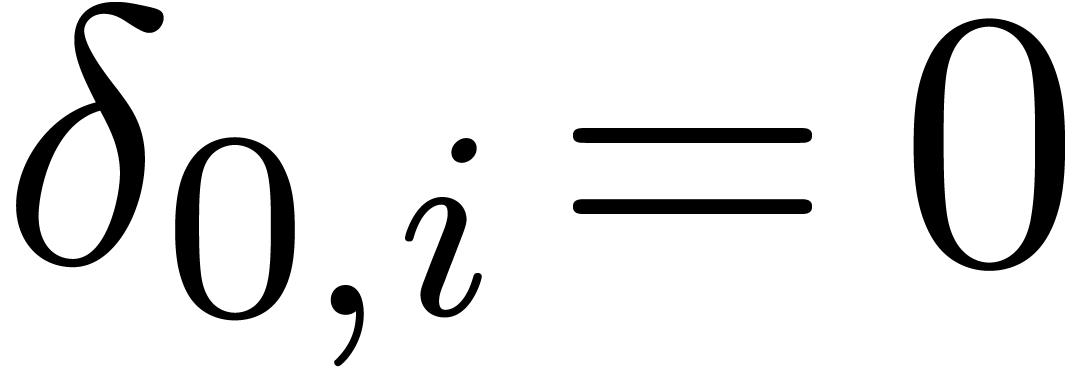

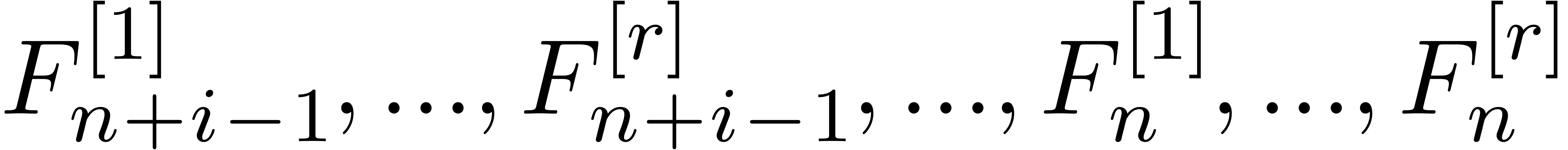

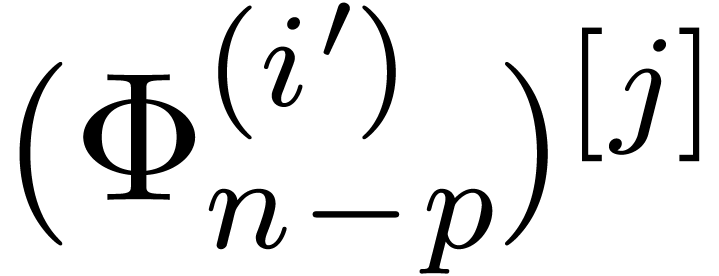

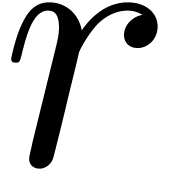

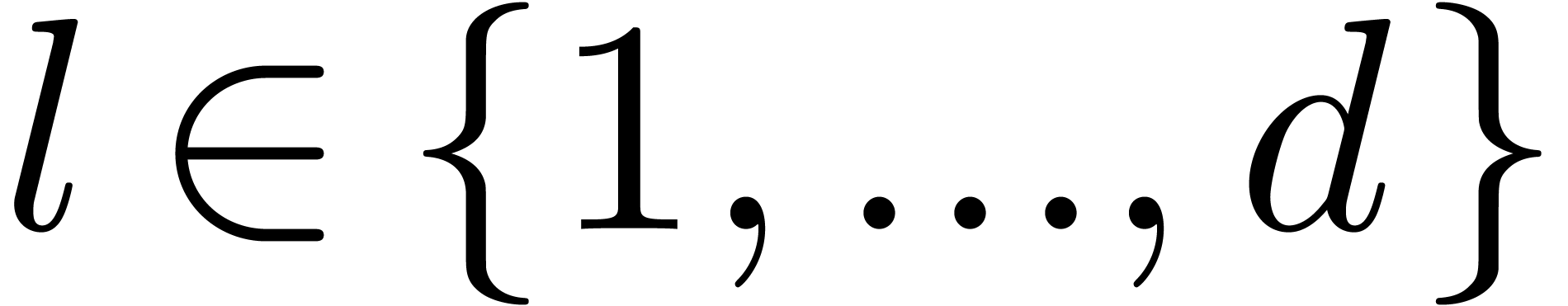

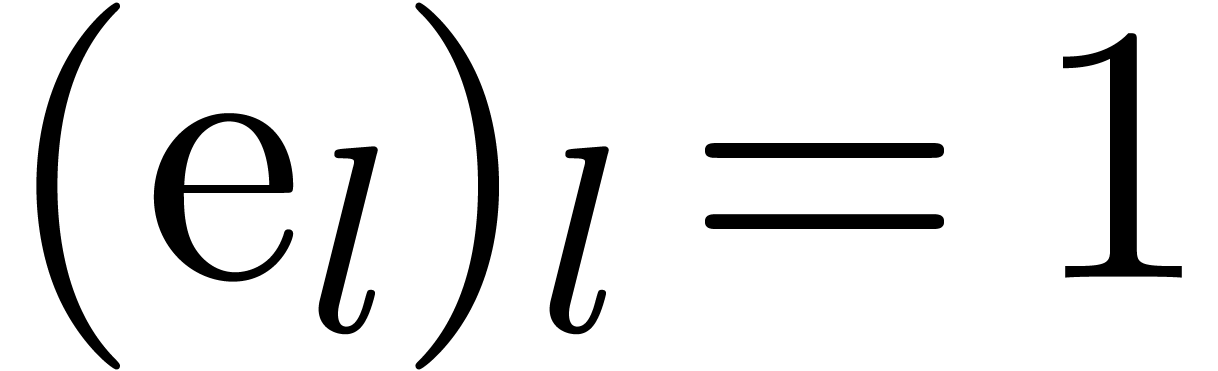

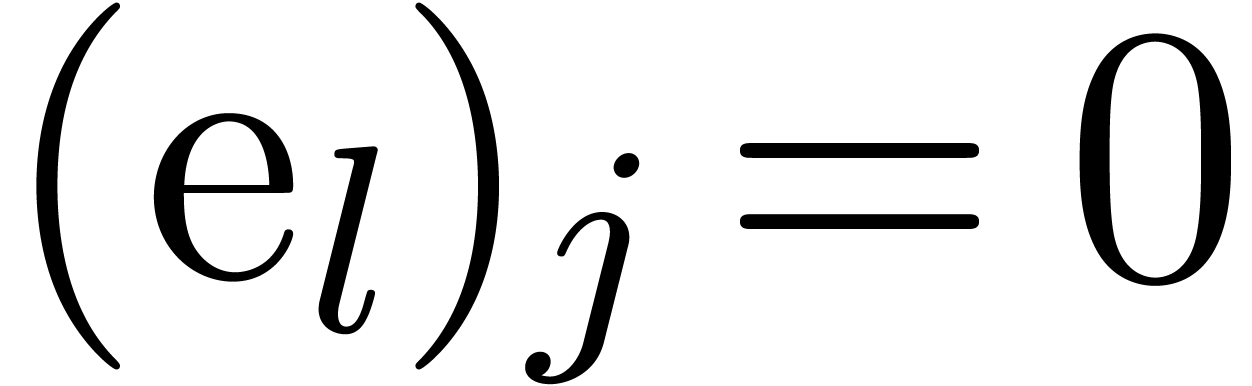

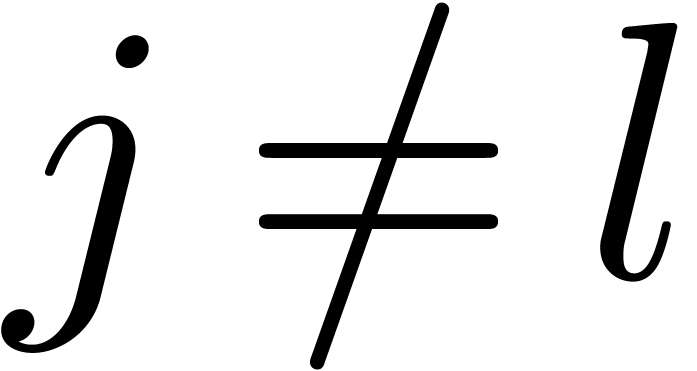

For each  , we define

, we define  using the following rules:

using the following rules:

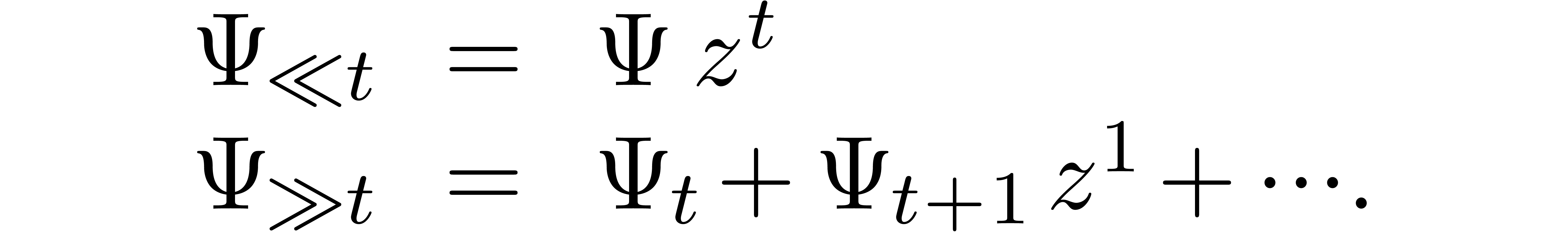

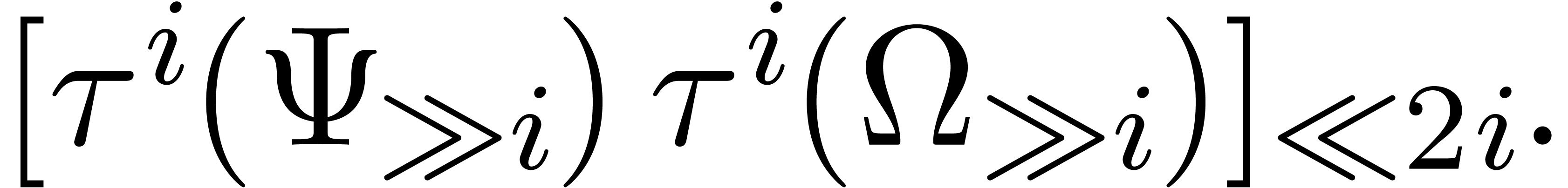

By induction, we have

for all  and

and  .

.
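To make such coefficient rules concrete, the Python sketch below computes the coefficient of $z^n$ of an expression as a polynomial in the unknowns $F_{i,k}$. It assumes the usual rules, which we take to be the ones intended above: coefficients of sums add, products use the Cauchy formula, $(\Psi')_n = (n+1)\Psi_{n+1}$, and $(\int \Psi)_n = \Psi_{n-1}/n$ with zero constant term. The tuple encoding of expressions and the names `coeff`, `padd` and `pmul` are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction

# Polynomials in the unknowns F_{i,k} are dicts {monomial: coefficient};
# a monomial is a sorted tuple of (i, k) pairs, repeated pairs being powers.


def padd(p, q, scale=1):
    """Return p + scale * q."""
    r = defaultdict(Fraction, p)
    for m, c in q.items():
        r[m] += scale * c
    return {m: c for m, c in r.items() if c != 0}


def pmul(p, q):
    """Return the product p * q."""
    r = defaultdict(Fraction)
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            r[tuple(sorted(m1 + m2))] += c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def coeff(e, n):
    """Coefficient of z^n in the expression e, as a polynomial in the F_{i,k}.

    Expressions are tuples: ("const", c), ("z",), ("F", i), ("add", a, b),
    ("sub", a, b), ("mul", a, b), ("diff", a), ("int", a).
    """
    kind = e[0]
    if kind == "const":
        return {(): Fraction(e[1])} if n == 0 and e[1] != 0 else {}
    if kind == "z":
        return {(): Fraction(1)} if n == 1 else {}
    if kind == "F":
        return {((e[1], n),): Fraction(1)}  # the unknown F_{i,n}
    if kind == "add":
        return padd(coeff(e[1], n), coeff(e[2], n))
    if kind == "sub":
        return padd(coeff(e[1], n), coeff(e[2], n), scale=-1)
    if kind == "mul":  # Cauchy product
        result = {}
        for k in range(n + 1):
            result = padd(result, pmul(coeff(e[1], k), coeff(e[2], n - k)))
        return result
    if kind == "diff":  # (Psi')_n = (n + 1) Psi_{n+1}
        return {m: (n + 1) * c for m, c in coeff(e[1], n + 1).items()}
    if kind == "int":  # (int Psi)_0 = 0 and (int Psi)_n = Psi_{n-1} / n
        if n == 0:
            return {}
        return {m: c / n for m, c in coeff(e[1], n - 1).items()}
    raise ValueError(f"unknown expression kind {kind!r}")
```

For $F_1' - F_1$ at $n = 3$ this returns $4 F_{1,4} - F_{1,3}$, and for $F_1^2 - 1 - z$ at $n = 1$ it returns $2 F_{1,0} F_{1,1} - 1$, which becomes linear in $F_{1,1}$ once $F_{1,0}$ is replaced by its value.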

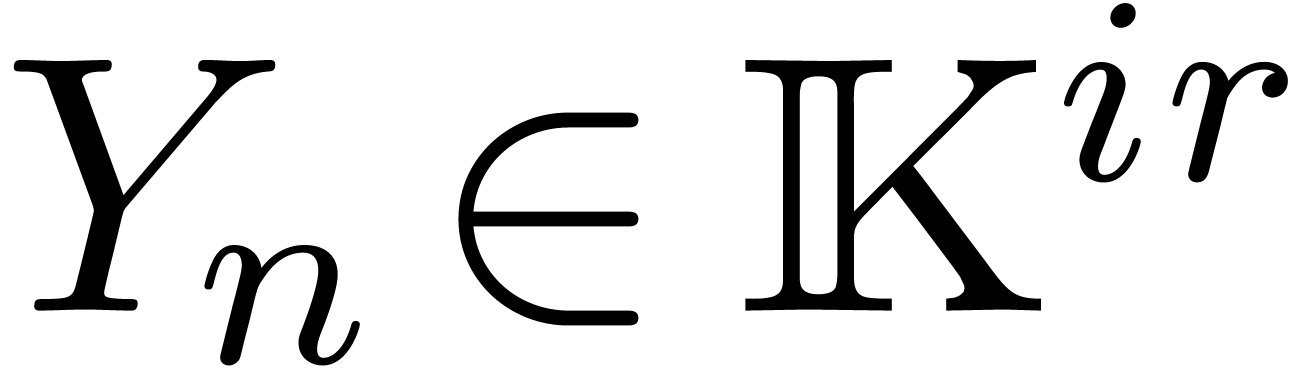

Let  be a column vector of

be a column vector of  expressions in

expressions in  . We will

assume that

. We will

assume that  depends on each of the

indeterminates

depends on each of the

indeterminates  . Given

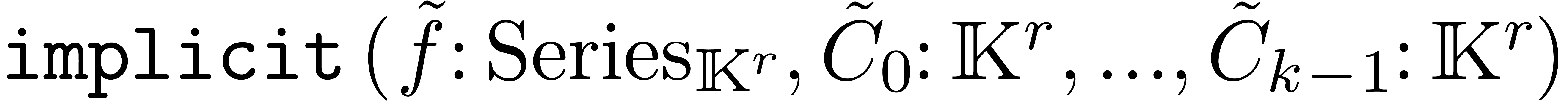

. Given  , consider the implicit system with

initial conditions

, consider the implicit system with

initial conditions

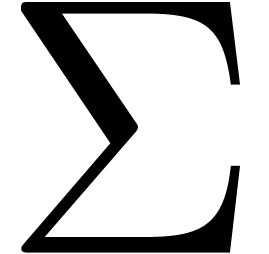

(7)

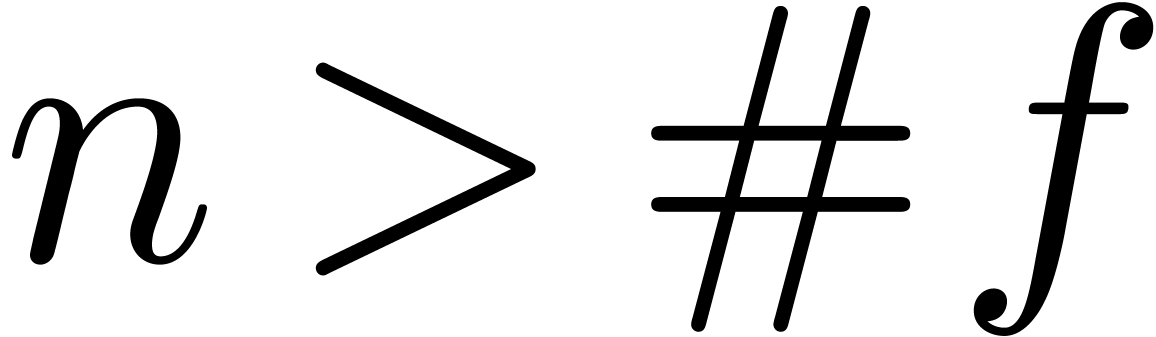

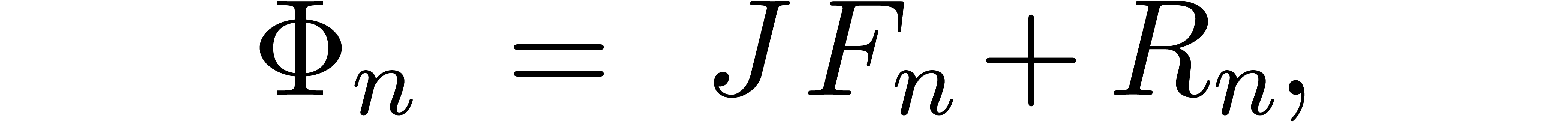

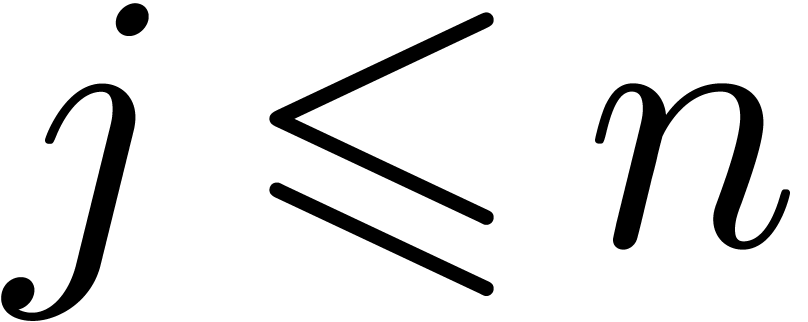

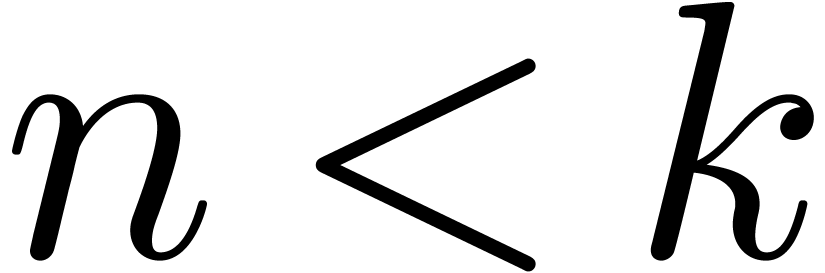

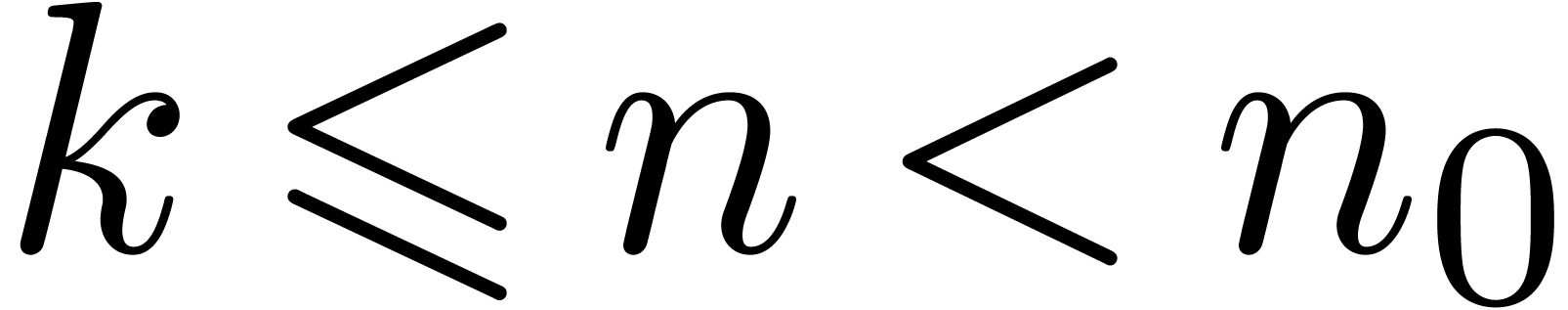

For any  , this system implies

the following system

, this system implies

the following system  of equations in

of equations in  :

:

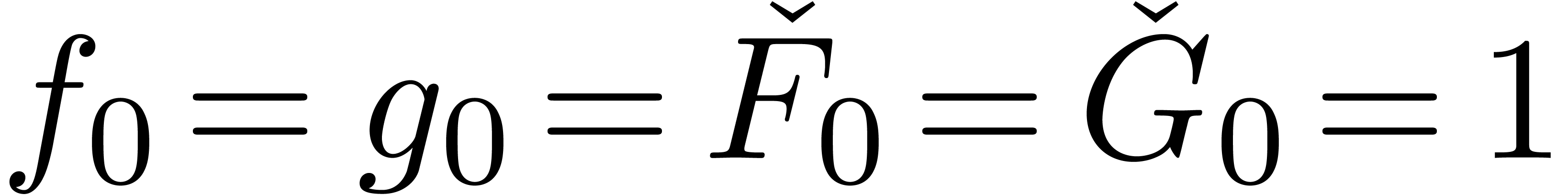

The system (7) is equivalent to the systems  together with the initial conditions

together with the initial conditions  .

.

In what follows, we will assume that (7) admits a unique

solution  . Given

. Given  and

and  , we will

denote by

, we will

denote by  the series in

the series in  such that

such that  is the result of the substitution of

is the result of the substitution of

by

by  in

in  , for all

, for all  and

and  . If, for all

. If, for all  , there exists an

, there exists an  such

that

such

that  is linear in

is linear in  for

all

for

all  and

and  ,

then we say that (7) is ultimately linear. In that

case,

,

then we say that (7) is ultimately linear. In that

case,  becomes a linear system of equations in

becomes a linear system of equations in

. More generally, the

combined system

. More generally, the

combined system

is a linear system of equations in  for all

sufficiently large

for all

sufficiently large  . If

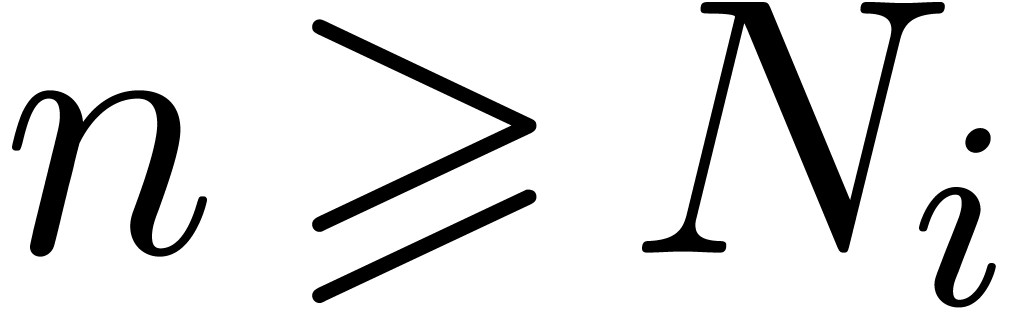

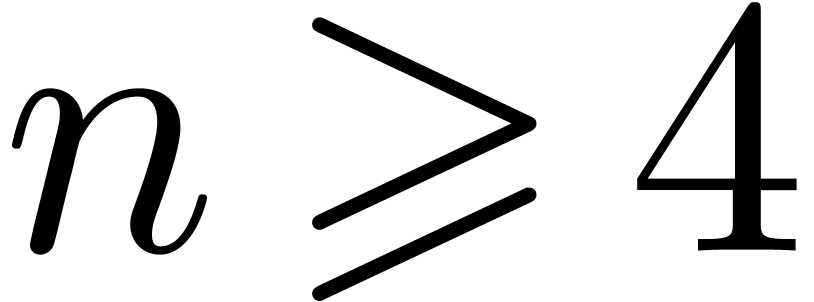

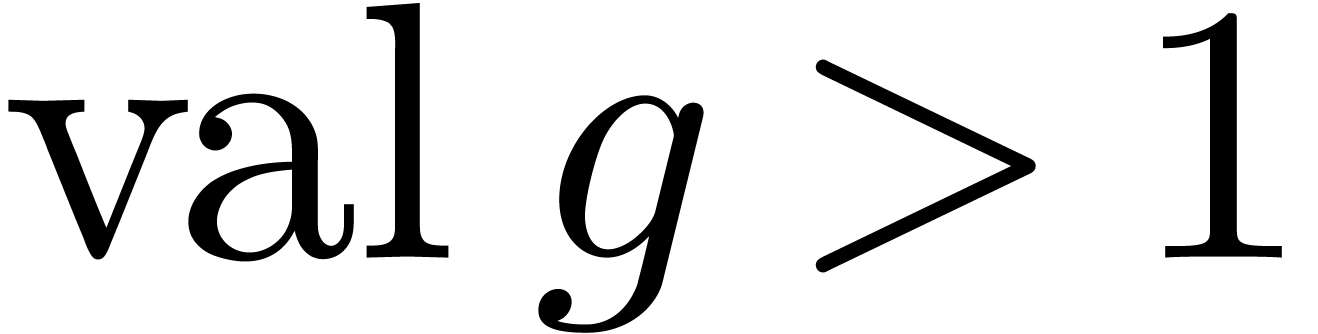

. If  is linear and

is linear and  can be

eliminated from

can be

eliminated from  for all sufficiently large, then

we say that (7) is quasi-linear. The minimal

for all sufficiently large, then

we say that (7) is quasi-linear. The minimal  for which

for which  can be eliminated

from

can be eliminated

from  for all sufficiently large

for all sufficiently large  will then be called the index of (7). The minimal

will then be called the index of (7). The minimal

such that

such that  can be

eliminated from

can be

eliminated from  for all

for all  will be called the offset.

will be called the offset.

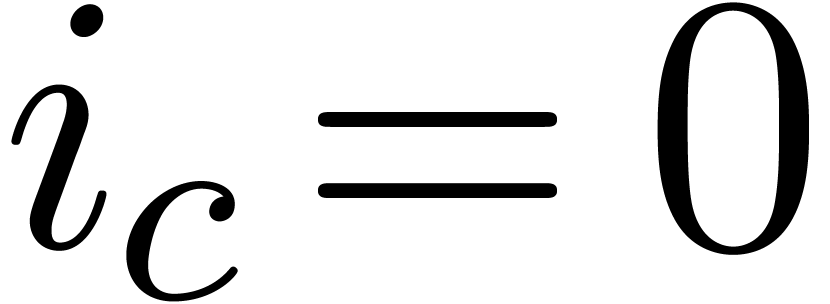

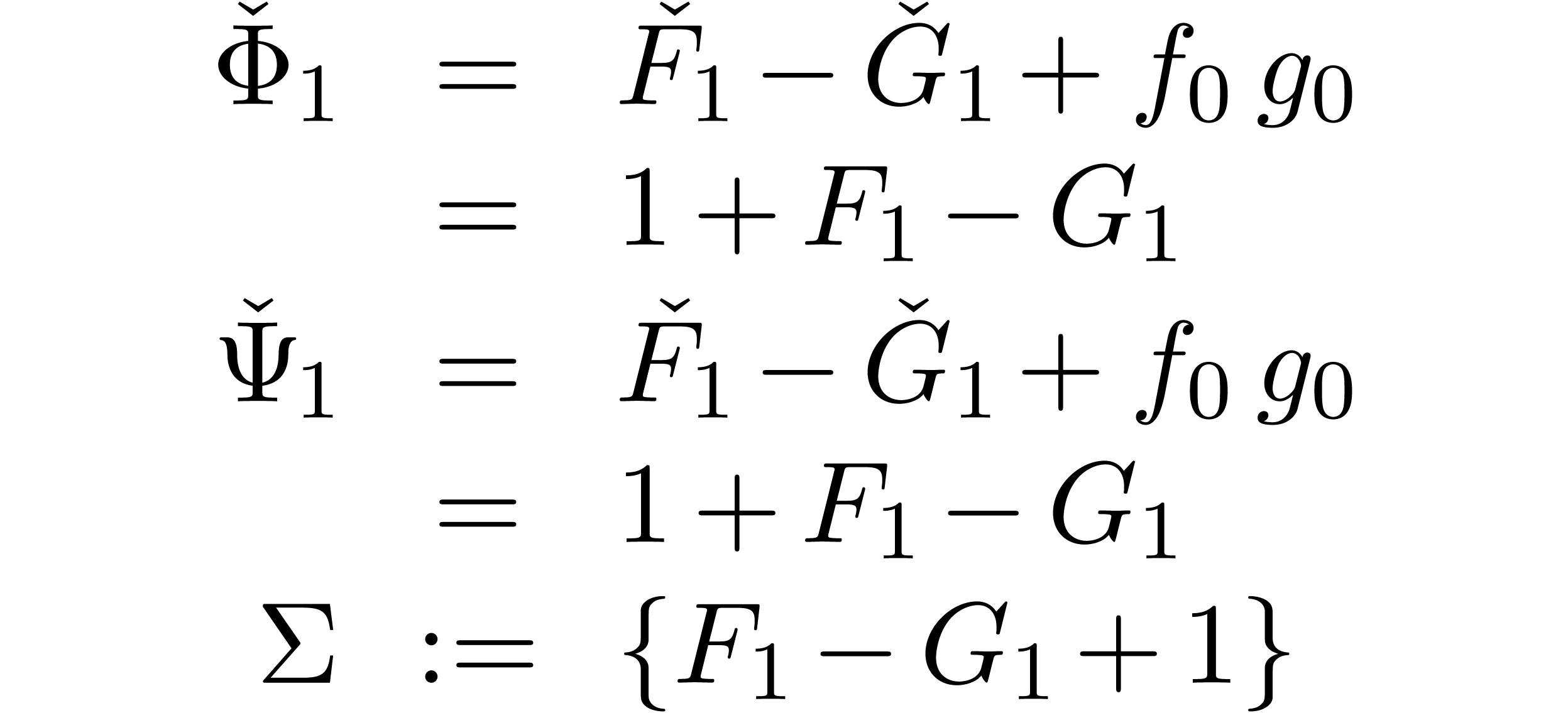

Example  ,  and assume that

is invertible. Then for all  , we have

with  . Hence,  can be computed from the previous coefficients using

The system  consists of the equation

from which  can be eliminated. We conclude that (7) is quasi-linear, of index  .

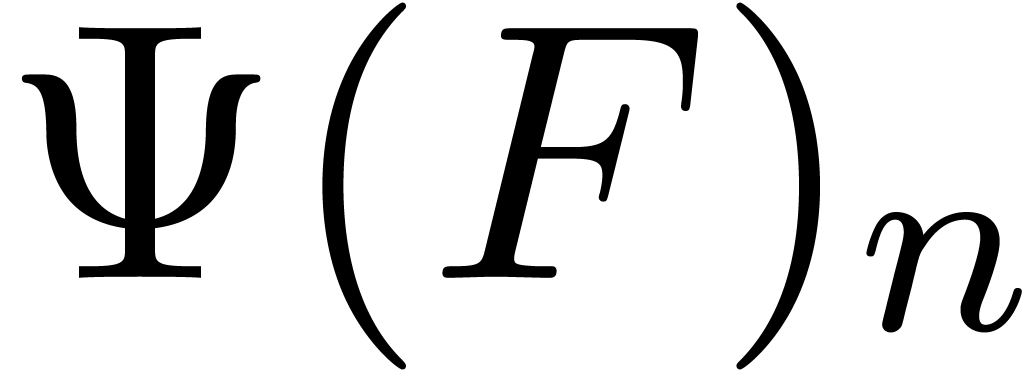

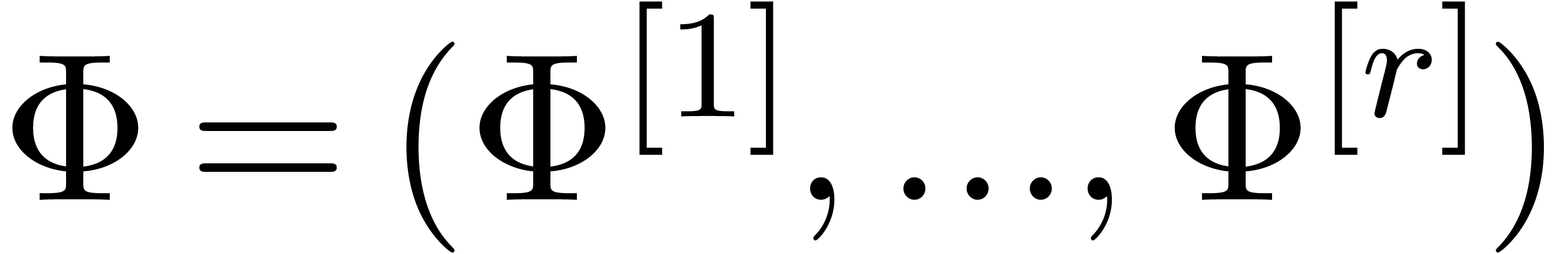

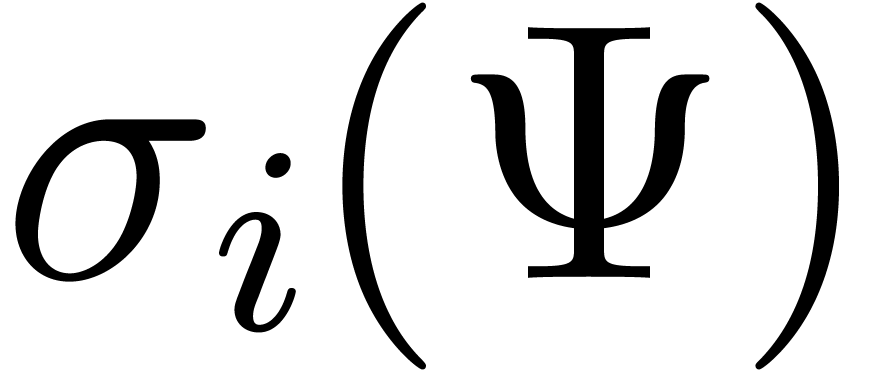

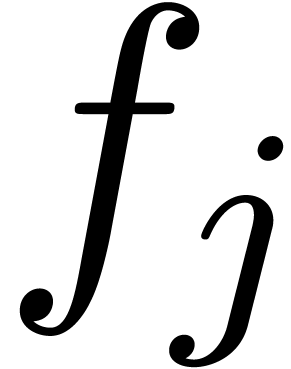

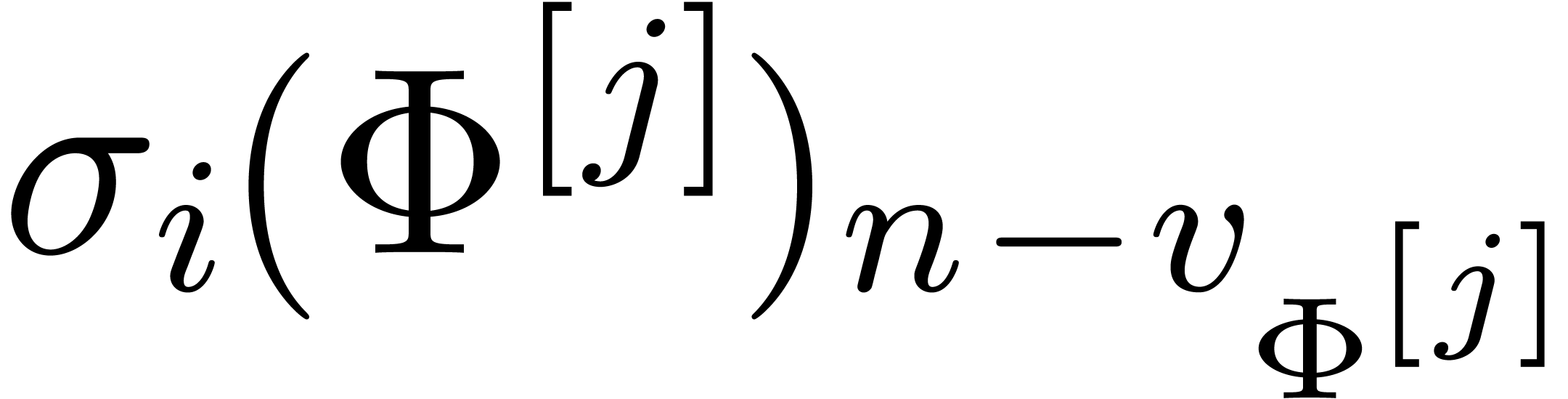

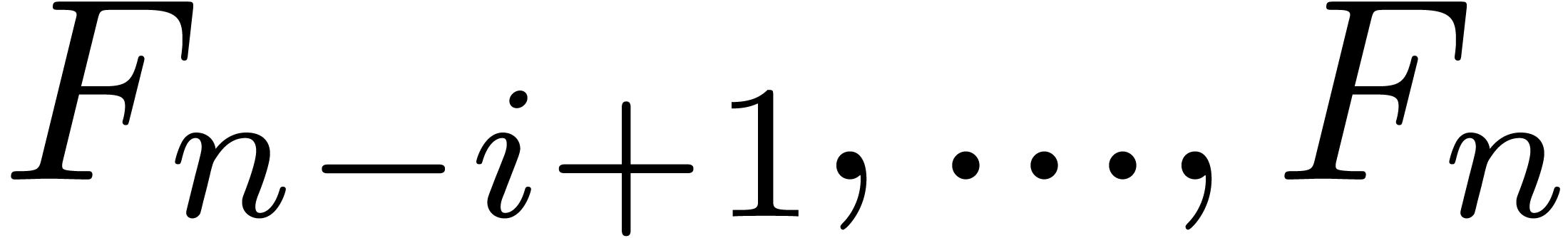

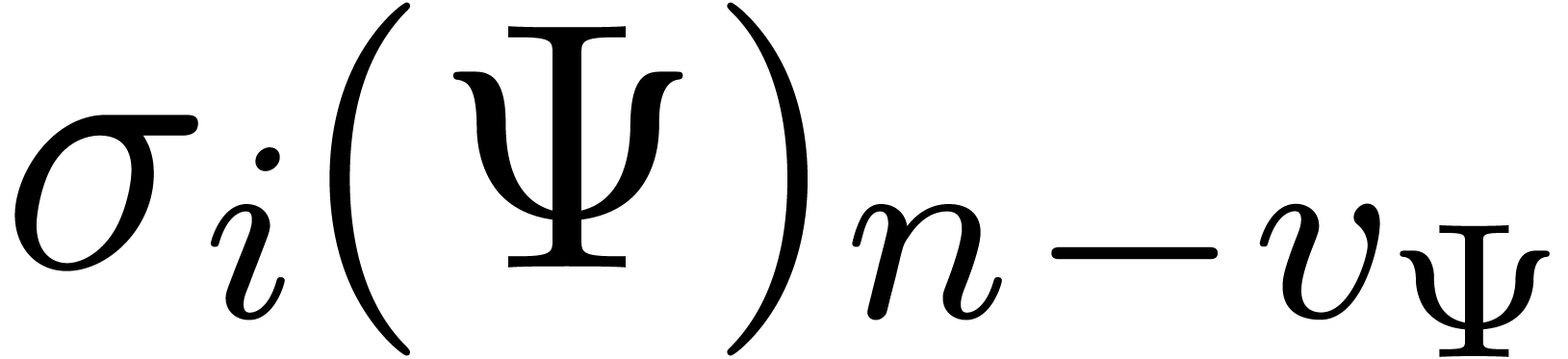

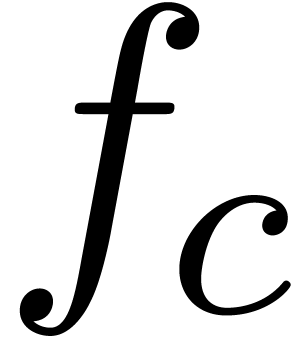

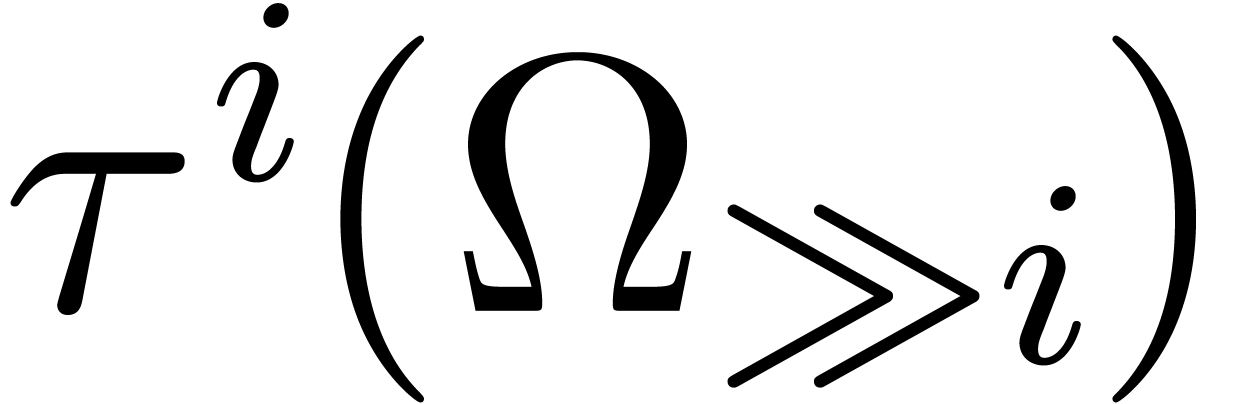

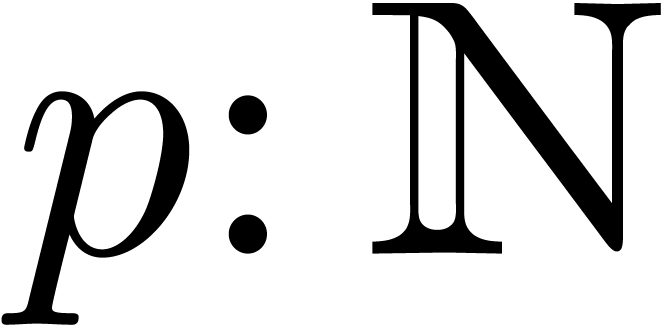

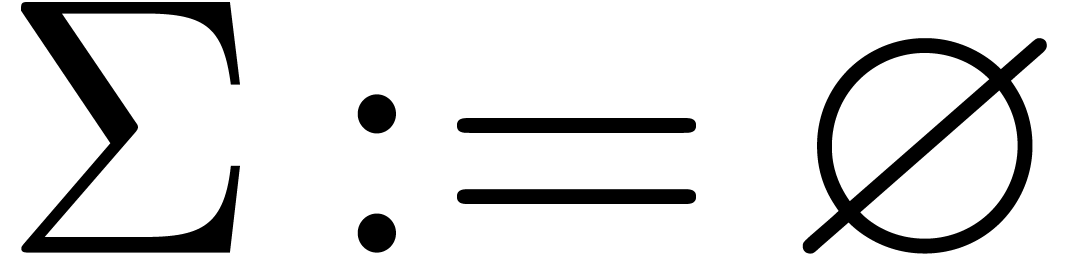

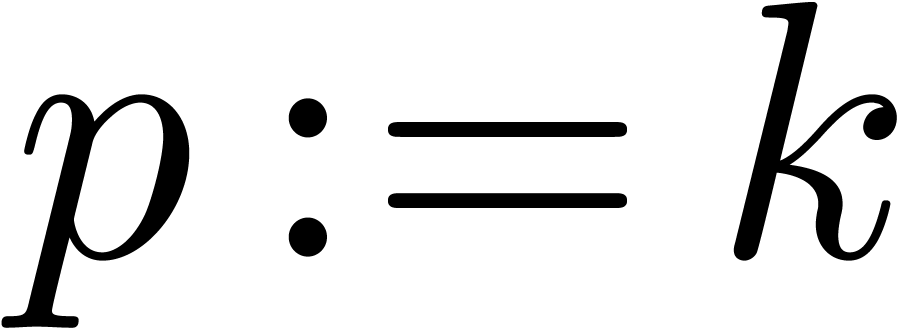

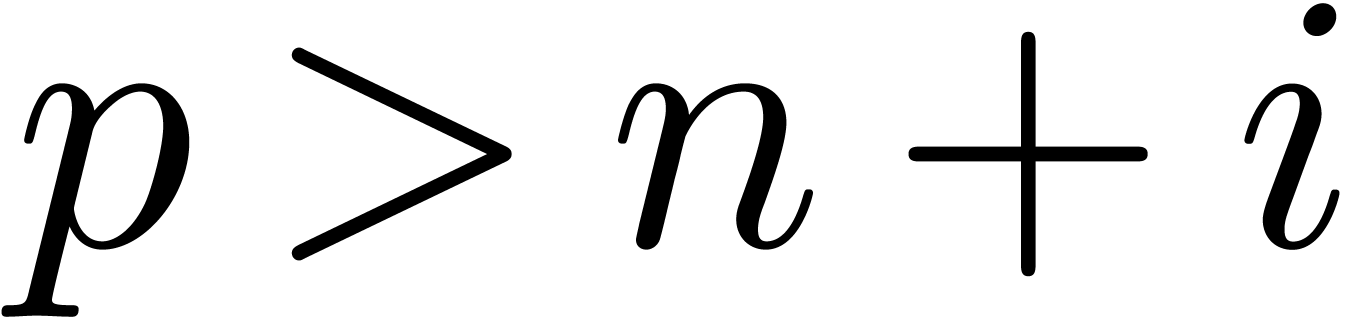

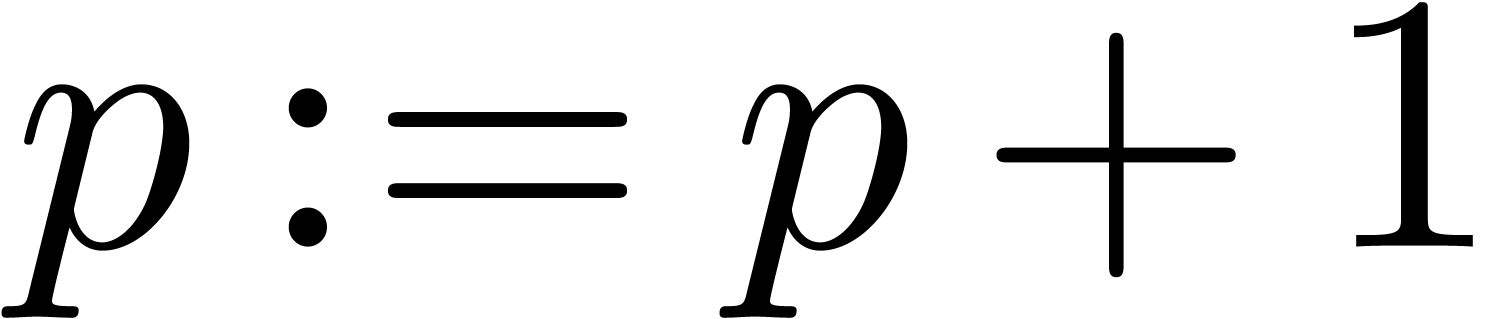

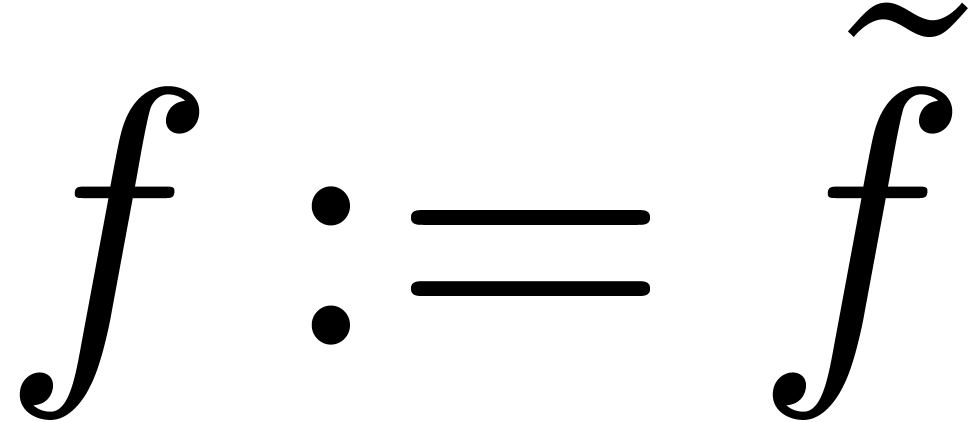

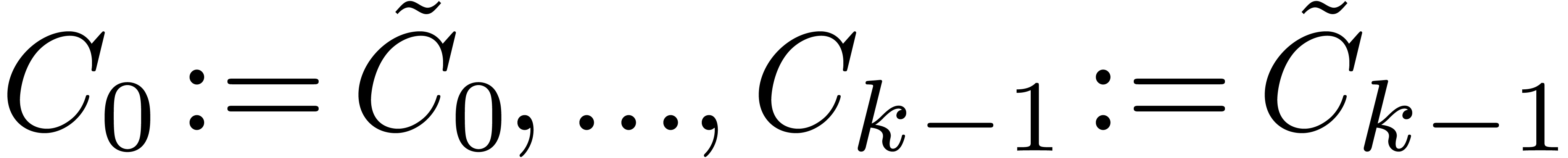

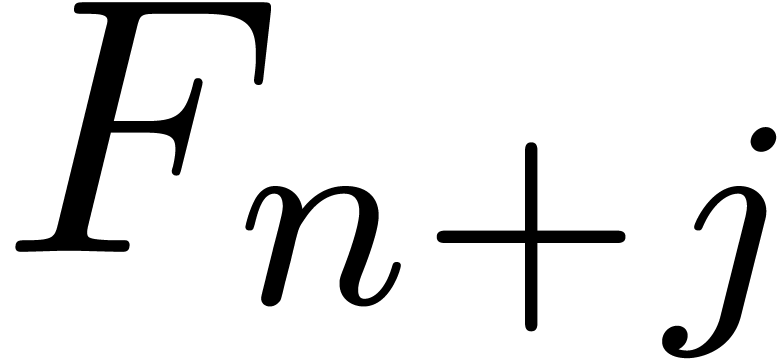

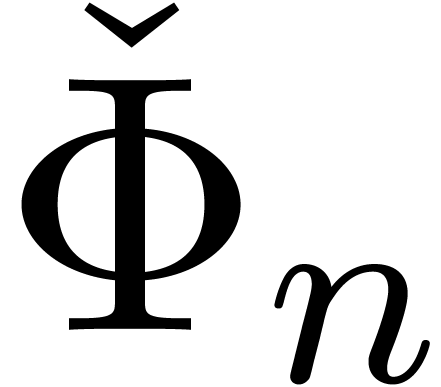

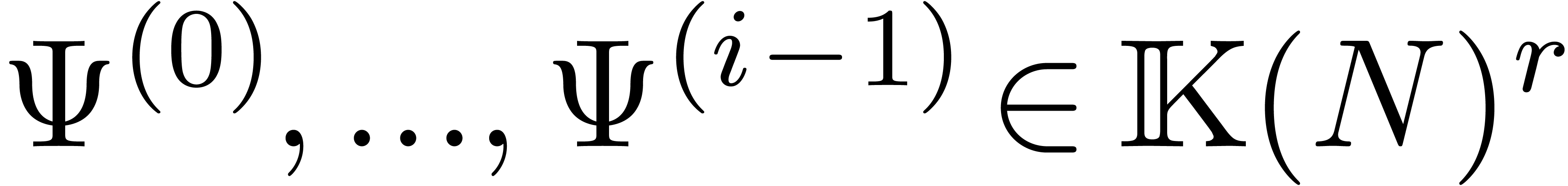

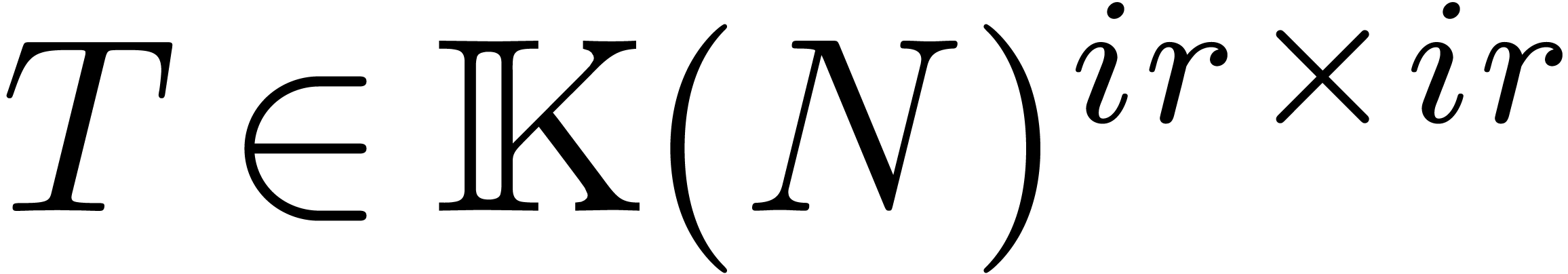

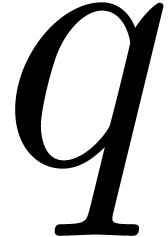

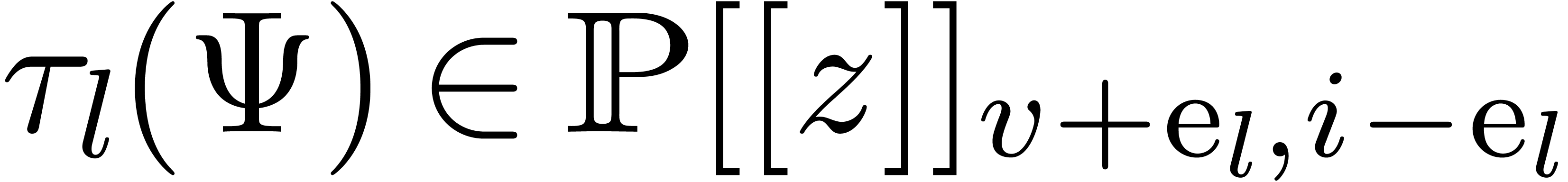

Consider a quasi-linear system (7) of index  with unique solution $f$. We want to solve the system in a relaxed way, by computing the systems  for increasing values  and eliminating  from  using linear algebra. For each subexpression  of $\Phi$, we need to evaluate  in a relaxed way. The main challenge is to take advantage of the fact that  is really a constant plus a linear combination of  , and to integrate the necessary substitutions of newly computed coefficients by their values into the relaxed resolution process.

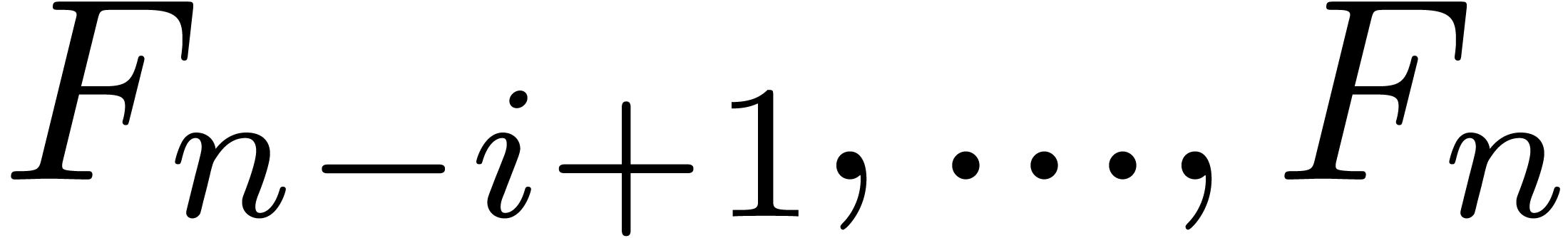

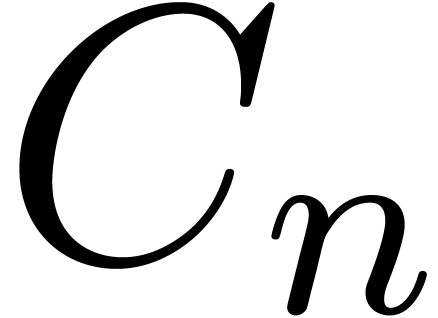

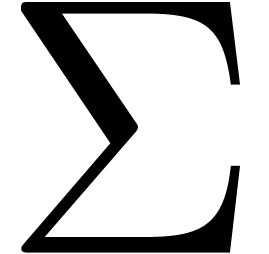

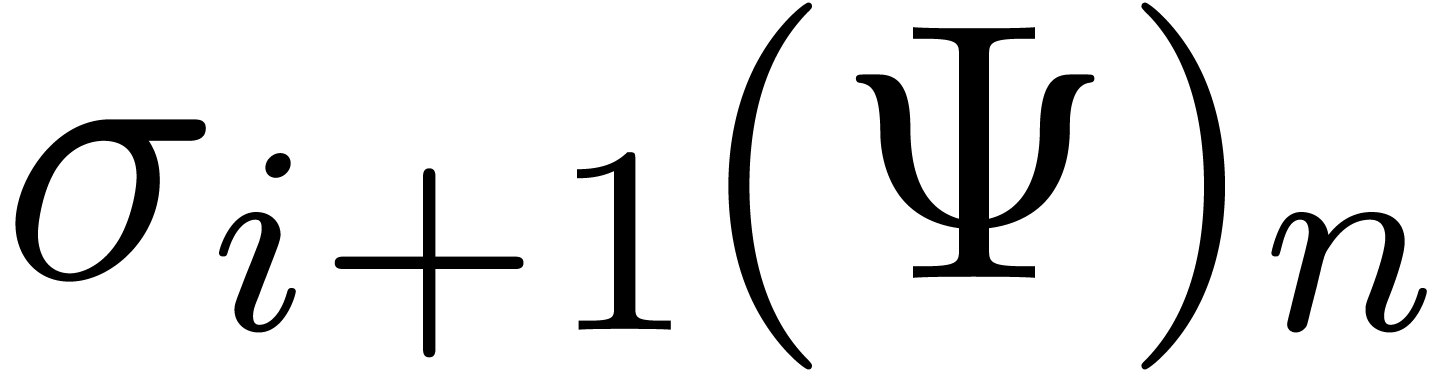

Denote the inner product of vectors by  . For each  and  , let  be the subset of  such that

for all  . Then  is a $\mathbb{K}$-vector space and

Given  with  , we define the one-step substitution  by

In particular,  and the iterate  coincides with the full substitution  of  by  in  .
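The following Python sketch illustrates the idea of one-step substitution under an assumed representation that is ours, not the paper's: an element is a constant plus a finite linear combination of unknowns $F_{i,k}$, and the one-step substitution at order $n$ replaces every $F_{i,n}$ by the known value $f_{i,n}$. Iterating it for orders $0, \dots, n-1$ then coincides with the full substitution of all known coefficients. The names `one_step_substitution` and `full_substitution` are hypothetical.

```python
from fractions import Fraction


def one_step_substitution(form, n, f_n):
    """Substitute the known values f_{i,n} for the unknowns F_{i,n}.

    form = (c, lam) stands for the affine expression
    c + sum over (i, k) of lam[(i, k)] * F_{i,k};
    f_n maps each component i to the newly computed value f_{i,n}.
    """
    c, lam = form
    c = Fraction(c)
    rest = {}
    for (i, k), coeff in lam.items():
        if k == n:
            c += coeff * Fraction(f_n[i])  # F_{i,n} is now known
        else:
            rest[(i, k)] = coeff  # still a formal unknown
    return c, rest


def full_substitution(form, n, known):
    """Iterate one-step substitutions for orders 0, ..., n-1 (known[k] = f_k)."""
    for k in range(n):
        form = one_step_substitution(form, k, known[k])
    return form
```

For instance, with $3 + 2F_{1,0} - F_{2,1} + 5F_{1,2}$, $f_0 = (1, 4)$ and $f_1 = (0, 7)$, two one-step substitutions leave $-2 + 5F_{1,2}$.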

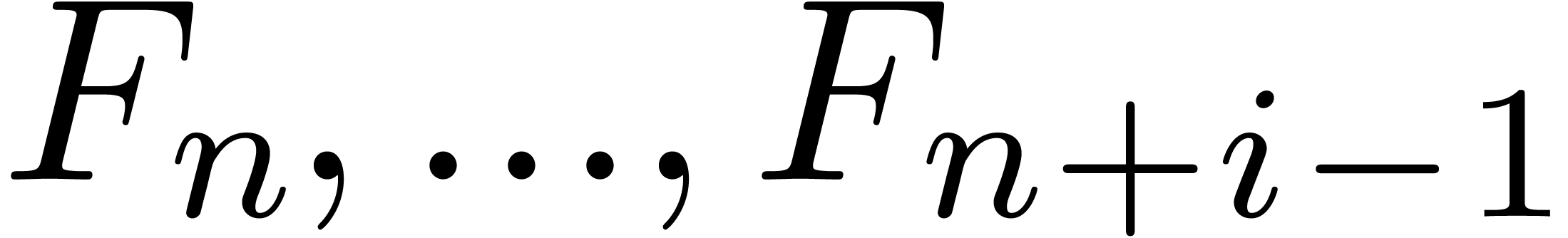

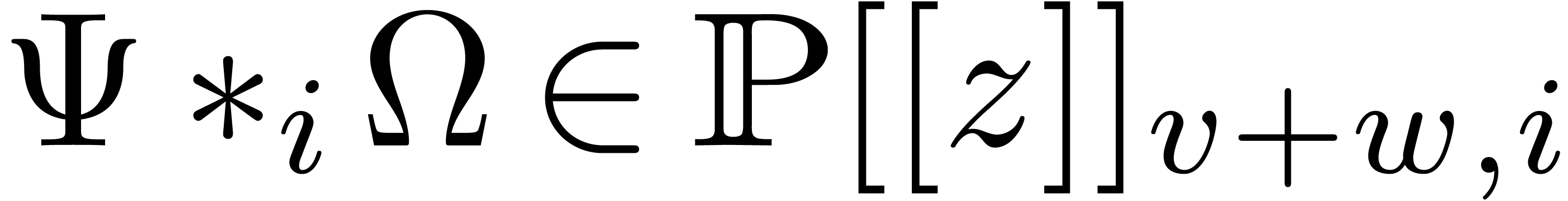

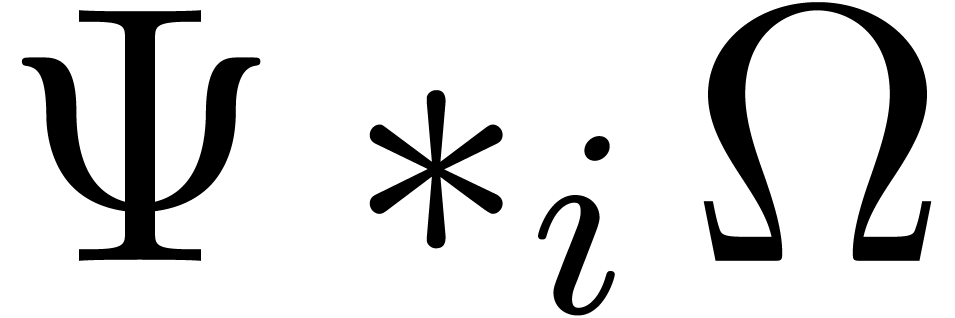

It remains to be shown how to multiply two series  and

and  in a suitable way, without introducing

quadratic terms in

in a suitable way, without introducing

quadratic terms in  . Given

. Given

, it will be convenient to

introduce shift operators

, it will be convenient to

introduce shift operators

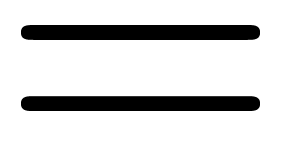

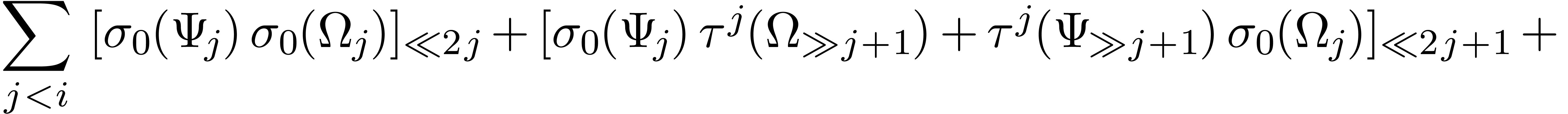

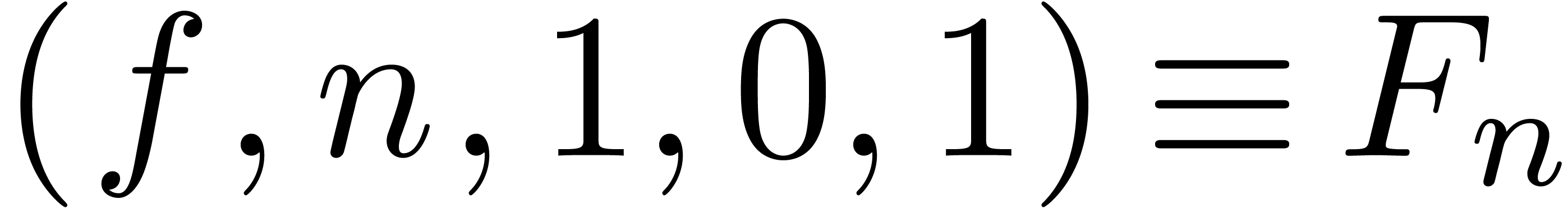

We recursively define the substitution product  of

of  and

and  by

by

using the fact that  .

Unrolling (11), we have

.

Unrolling (11), we have

|

|

|

|

|

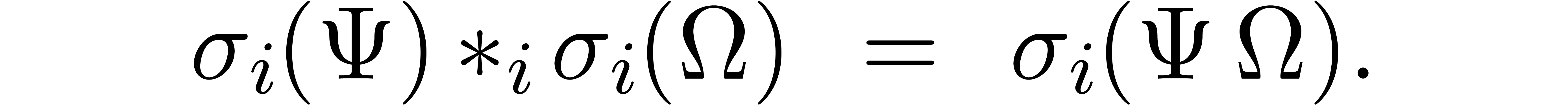

The substitution product satisfies the important property

Moreover, it respects the constraint that  can be

computed as soon as

can be

computed as soon as  and

and  are known for

are known for  . Recall that

the computation of

. Recall that

the computation of  requires the previous

computation of

requires the previous

computation of  .

.

From the implementation point of view, we proceed as follows. We introduce a new data type  , whose instances are of the form

where $f$ stands for the relaxed power series solution of (7). Such an instance  represents

Denoting by  the subtype of instances  in  with  , we may thus view series  as elements of  . We have a natural inclusion  , where we notice that  does not matter if  , and a constructor  for the unknown  . The $\mathbb{K}$-vector space operations on  are implemented in a straightforward way. The one-step substitution operator  is implemented by

if  and  otherwise. On a fixed  , this allows us to implement the substitution product  using (11). Moreover, by casting  and  to relaxed series in  , we may compute the product  using a fast relaxed product in  . We are now in a position to state our relaxed algorithm for solving (7).

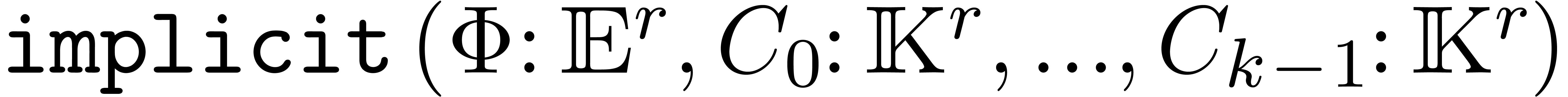

Class

Fields  ,

,

,

,

Constructor

and

Method

While true

If  then raise an error

and

Triangularize  by eliminating  with large  first

If  then raise an error

If  where  and  only involves  then

Let  be the unique solution to  as a system in

Let  and substitute  for  in

Return

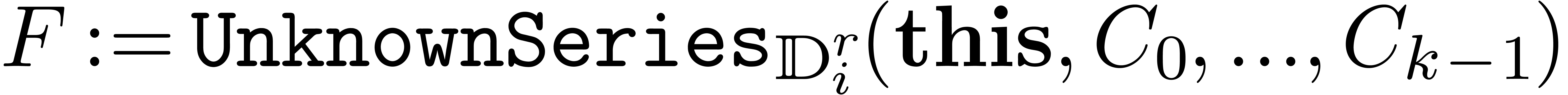

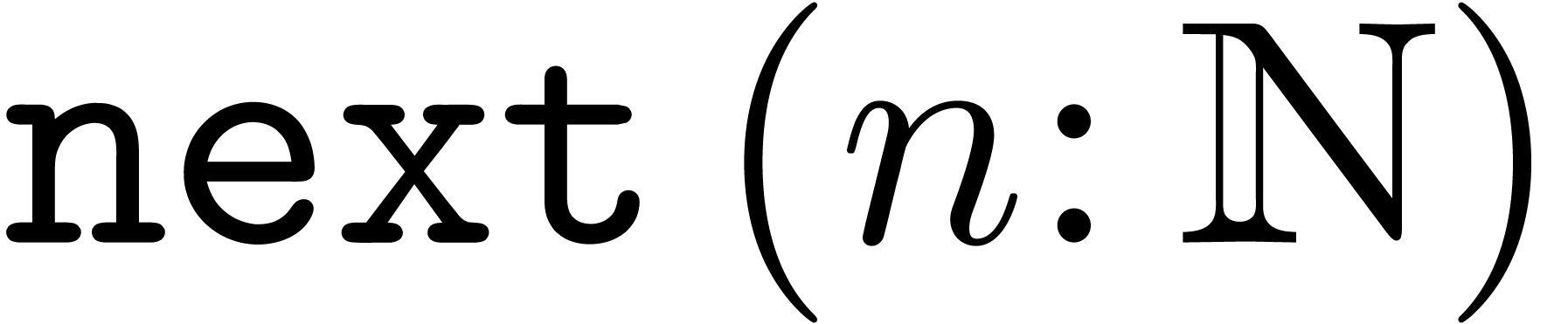

The following subalgorithm is used for the symbolic construction of the unknown series  :

Class

Fields  ,

,

Constructor

,

,

Method

If  then return

Else return

These algorithms require a few comments. First of all, we denoted by

the replacement of

the replacement of  by

by

in the expression

in the expression  ,

modulo suitable implicit conversions

,

modulo suitable implicit conversions  .

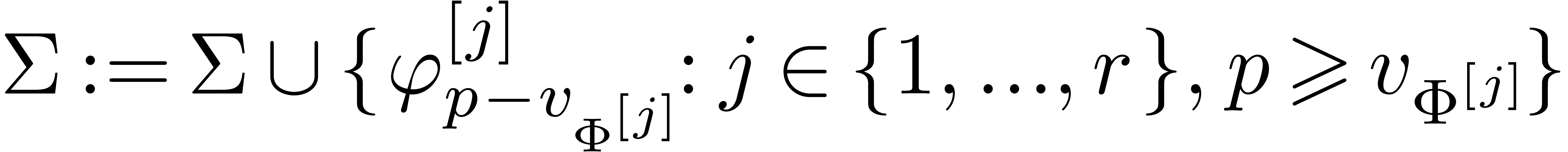

Throughout the algorithm, the set

.

Throughout the algorithm, the set  stands for the

current system of “not yet solved equations”. Each equation

is represented by an instance in

stands for the

current system of “not yet solved equations”. Each equation

is represented by an instance in  which only

involves

which only

involves  with

with  .

New equations are progressively added while keeping

.

New equations are progressively added while keeping  in a triangular form. As soon as

in a triangular form. As soon as  can be

eliminated from

can be

eliminated from  , then the

next coefficient

, then the

next coefficient  can be computed.

can be computed.
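The bookkeeping just described (keeping the not yet solved equations in triangular form, eliminating unknowns with large index first, and reading off the next coefficient) can be sketched on a toy scalar case ($r = 1$) with equations that are already linear. This is an illustration under our own simplifying assumptions: `TriangularSystem`, `add`, `solve_lowest` and `substitute` are hypothetical names, and in the actual algorithm the rows are instances of the symbolic data type introduced above, with vector unknowns.

```python
from fractions import Fraction


class TriangularSystem:
    """Toy scalar version (r = 1) of the set of not yet solved equations.

    A row (coeffs, const) stands for sum_k coeffs[k] * F_k + const = 0.
    Rows are keyed by their largest unknown index (the pivot), so adding
    an equation eliminates the unknowns with large index first.
    """

    def __init__(self):
        self.rows = {}  # pivot index -> (coeffs, const)

    def add(self, coeffs, const):
        coeffs = {k: Fraction(v) for k, v in coeffs.items() if v != 0}
        const = Fraction(const)
        while coeffs:
            p = max(coeffs)
            if p not in self.rows:
                self.rows[p] = (coeffs, const)  # new pivot: keep the row
                return
            row, row_const = self.rows[p]
            factor = coeffs[p] / row[p]
            for k, v in row.items():  # subtract factor * stored row
                coeffs[k] = coeffs.get(k, Fraction(0)) - factor * v
                if coeffs[k] == 0:
                    del coeffs[k]
            const -= factor * row_const
        if const != 0:
            raise ValueError("inconsistent system")
        # otherwise the new equation was redundant and is dropped

    def solve_lowest(self, n):
        """Value of F_n if some row involves F_n only, else None.

        Assumes F_0, ..., F_{n-1} have already been substituted.
        """
        row = self.rows.get(n)
        if row is None or set(row[0]) != {n}:
            return None
        coeffs, const = row
        return -const / coeffs[n]

    def substitute(self, n, value):
        """Replace the lowest unknown F_n by its computed value."""
        self.rows.pop(n, None)
        for p, (coeffs, const) in list(self.rows.items()):
            if n in coeffs:
                const += coeffs.pop(n) * value
                self.rows[p] = (coeffs, const)
```

With the equations $F_1 + F_0 - 3 = 0$ and $2F_1 - F_0 - 3 = 0$, adding both rows eliminates $F_1$ from the second one and yields $F_0 = 1$; after substituting this value, the remaining row gives $F_1 = 2$.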

and offset

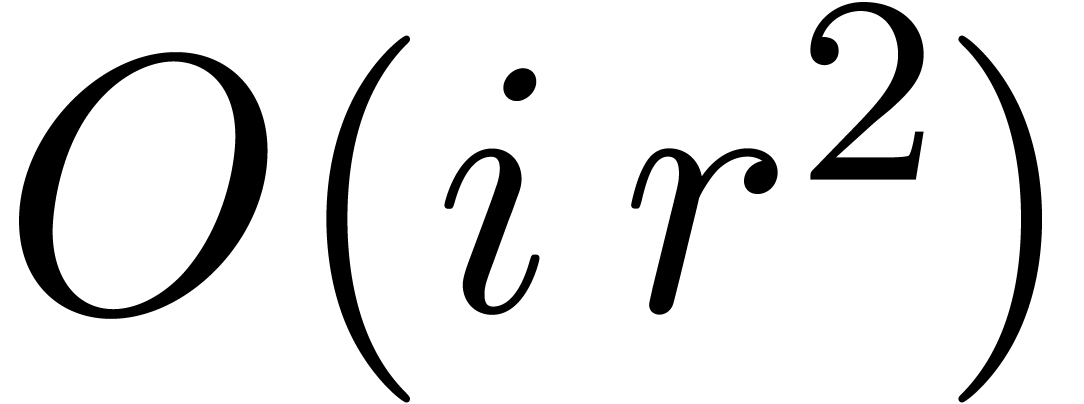

and offset  .

Then the above algorithm for the resolution of

.

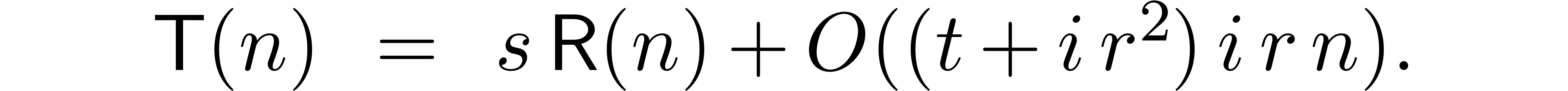

Then the above algorithm for the resolution of  involves

involves  and is of size

and is of size  as an expression, then

as an expression, then  are computed in time

are computed in time

Proof. By construction, just after setting  , the system  is equivalent to
from (9). If  and  , we may thus eliminate  from the system  and compute
.

This proves the correctness. As to the complexity bound, we observe that
the substitution product in  amounts to  scalar products and one relaxed
product in  . Similarly, each linear operation (addition, subtraction,
differentiation and integration) in  amounts to  similar operations in  .
If  is a triangular system of size  and we add  new rows, then the
triangularization of the new system can be done in time  .
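The complexity argument reduces each substitution product to a relaxed product. The sketch below only illustrates the defining property of a relaxed (on-line) product: the coefficient of z^n of f·g is output as soon as f_0, ..., f_n and g_0, ..., g_n are known. It uses the naive quadratic formula, not the fast relaxed multiplication algorithms whose cost enters the bound above; the class name `RelaxedProduct` and its callable interface are hypothetical.

```python
from fractions import Fraction

class RelaxedProduct:
    """Naive on-line product h = f * g of two power series.

    f and g are callables k -> k-th coefficient. Computing h_n only queries
    coefficients of index <= n, so f and g may be defined in terms of
    earlier coefficients of h itself.
    """
    def __init__(self, f, g):
        self.f, self.g = f, g
        self.h = []  # coefficients of h computed so far

    def __call__(self, n):
        while len(self.h) <= n:
            k = len(self.h)
            self.h.append(sum((self.f(i) * self.g(k - i) for i in range(k + 1)),
                              Fraction(0)))
        return self.h[n]

# Fixed point c = 1 + z * c^2: c_n only needs the product up to order n - 1.
def c(k):
    return Fraction(1) if k == 0 else p(k - 1)

p = RelaxedProduct(c, c)
print([int(c(k)) for k in range(6)])  # [1, 1, 2, 5, 14, 42]
```

The self-referential definition of `c` works only because the product never asks for a coefficient it has not yet had the chance to compute; this is the property that relaxed algorithms preserve while lowering the cost.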

Remark  for the substitution products, it is actually possible to
automatically increase  whenever  contains a non-constant coefficient for
some subexpression  of  .

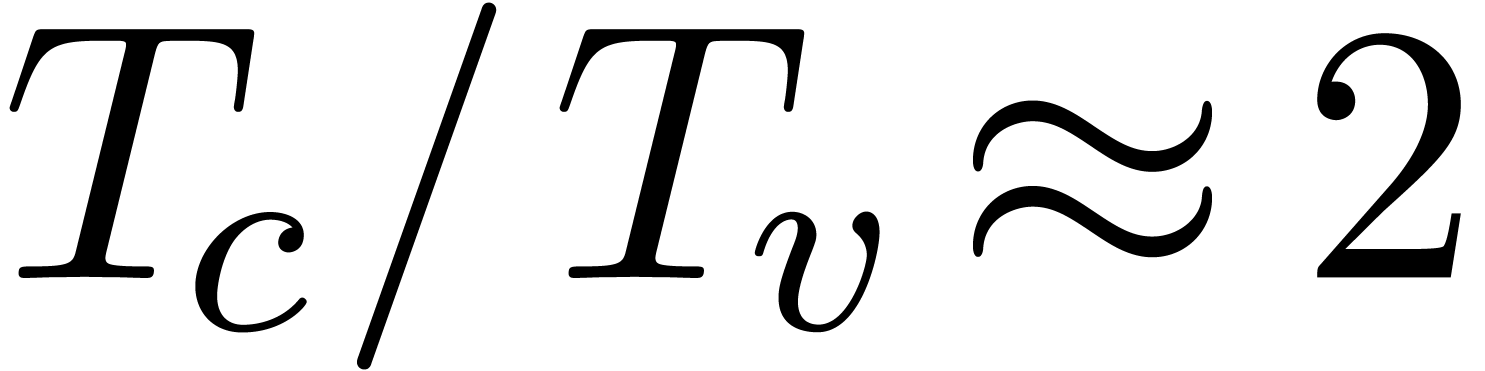

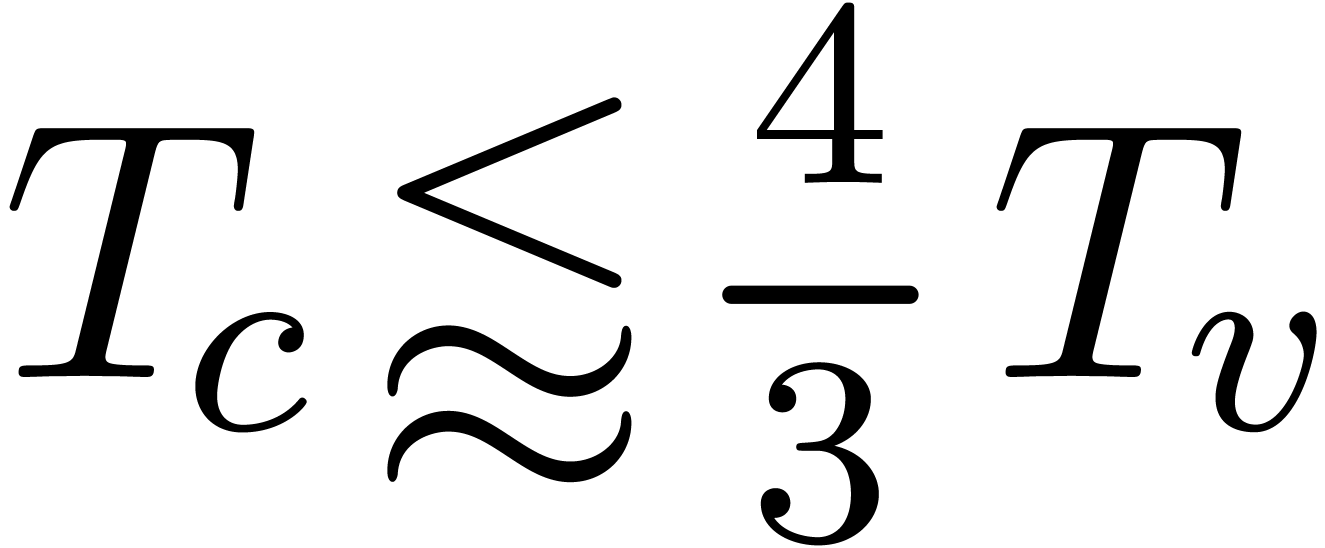

Remark  to compute the solution up to order  with the time  to verify its
correctness. For small orders  , we observed ratios  . For large orders  ,
we achieved  , consistent with the asymptotic complexity bound.

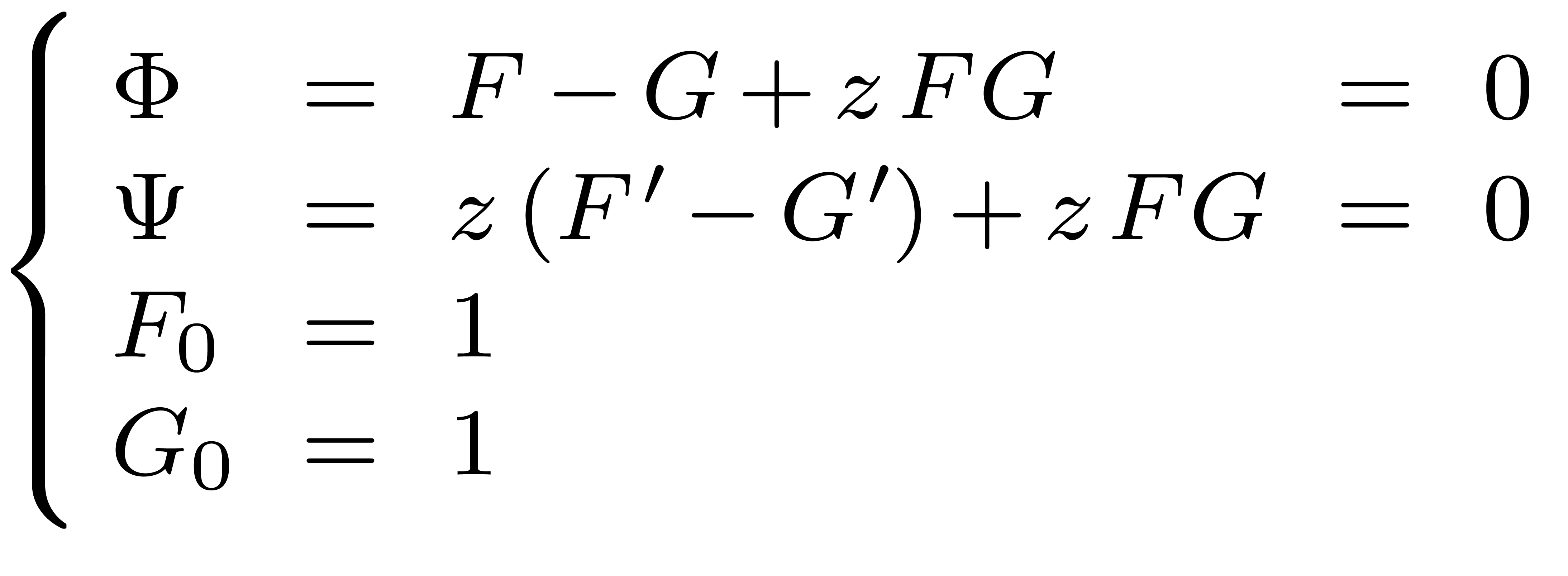

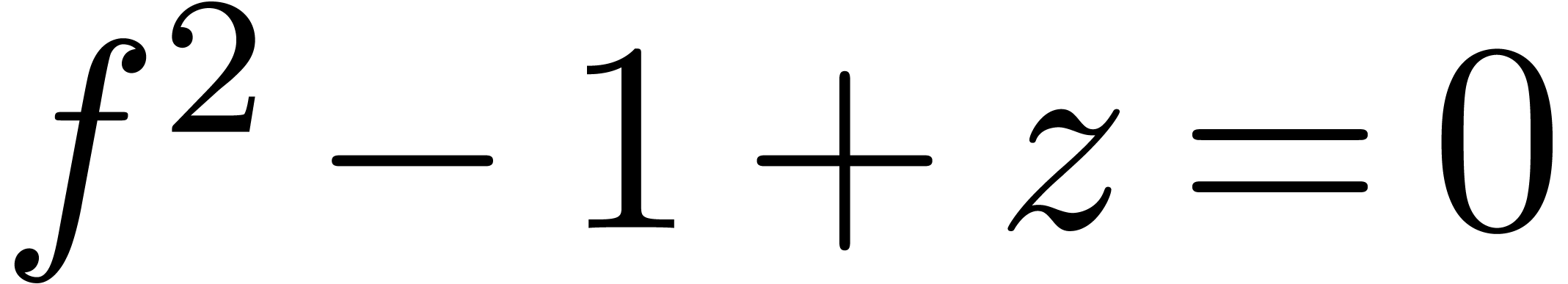

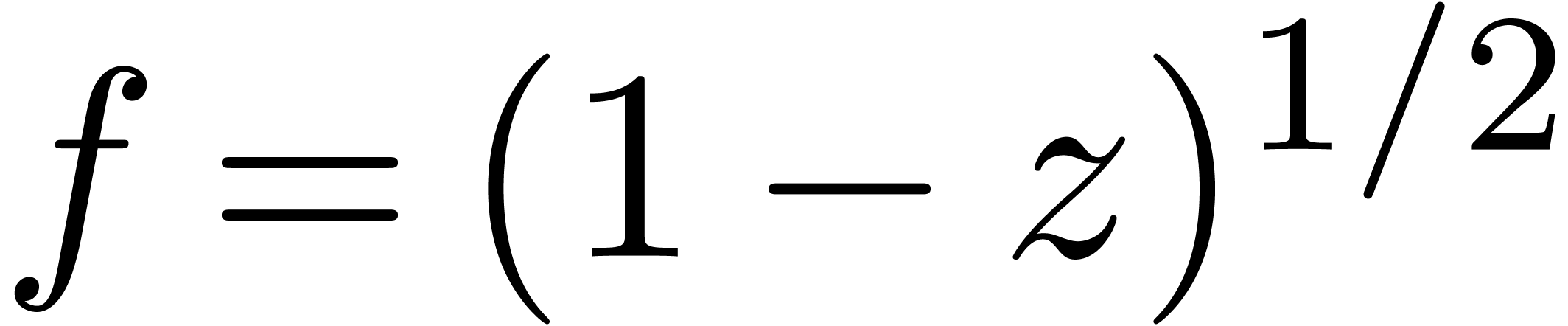

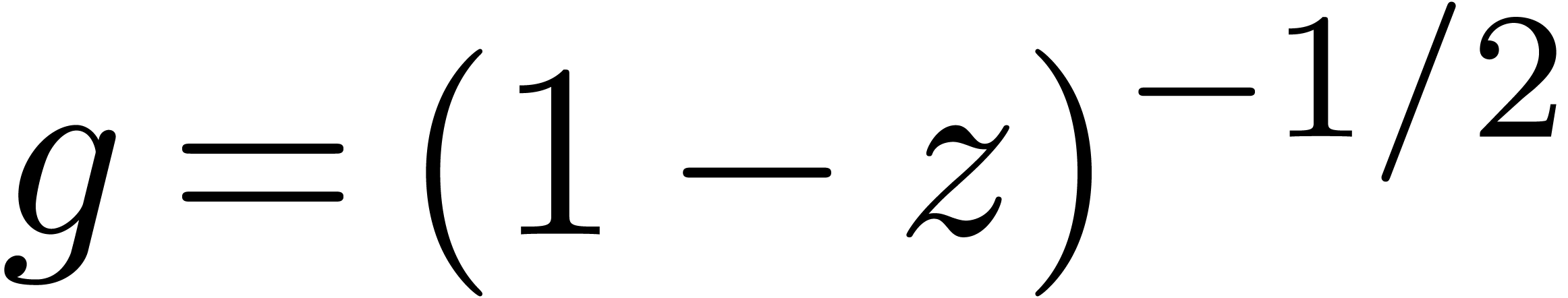

Consider the system

It is not hard to find the unique explicit solution  of this system.
Indeed,

whence  . Since  , it follows that  . Plugging this into the first
equation  , we get  , whence  and  .

During the computations below, we will see that the system is
quasi-linear of index  . Denoting the relaxed solution by  , we will have
to compute  , and the series  ,  ,  ,  .

and  yield

These relations do not yet enable us to determine  and  .

and  yield

After triangularization, we get

The last two equations imply  and  .

and  and triangularization of  yield

From the equations  and  , we get  .

, the evaluations of  and  yield

After triangularization, we thus get

Consequently,  .
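In the worked example, several unknown coefficients must be handled at once. In the simplest scalar situation, by contrast, each new coefficient f_n enters one coefficient of Φ(f) affinely and no triangularization is needed. The Python sketch below is a hypothetical and deliberately non-relaxed illustration (it recomputes Φ from scratch at every step): it determines f_n by evaluating that coefficient with f_n = 0 and f_n = 1 and solving the resulting affine equation. The caller supplies `shift`, the assumed distance between n and the order of the equation that determines f_n; it is only loosely analogous to the index and is an assumption of the sketch. The two test equations, f' = f and f² = 1 + z, are illustrative choices.

```python
from fractions import Fraction

def mul(a, b, N):
    """First N coefficients of the product of truncated series a and b."""
    return [sum((a[i] * b[k - i] for i in range(k + 1)
                 if i < len(a) and k - i < len(b)), Fraction(0))
            for k in range(N)]

def deriv(a):
    return [k * a[k] for k in range(1, len(a))]

def solve_implicit(phi, init, shift, N):
    """Coefficients f_0..f_(N-1) of the solution of phi(f) = 0.

    phi(coeffs, M) returns the first M coefficients of phi(f) for the
    truncated series coeffs. Assumes coefficient n - shift of phi(f) is
    affine in f_n and does not involve f_(n+1), f_(n+2), ...
    """
    f = [Fraction(c) for c in init]
    for n in range(len(init), N):
        m = n - shift  # order of the equation that determines f_n
        if m < 0:
            raise ValueError("not enough initial conditions")
        a = phi(f + [Fraction(0)], m + 1)[m]      # value at f_n = 0
        b = phi(f + [Fraction(1)], m + 1)[m] - a  # slope in f_n
        if b == 0:
            raise ValueError(f"f_{n} cannot be eliminated at order {m}")
        f.append(-a / b)
    return f

def phi_exp(f, N):  # f' - f, with f_0 = 1
    d = deriv(f)
    return [(d[k] if k < len(d) else 0) - (f[k] if k < len(f) else 0)
            for k in range(N)]

def phi_sqrt(f, N):  # f^2 - 1 - z, with f_0 = 1
    sq, rhs = mul(f, f, N), [1, 1] + [0] * N
    return [sq[k] - rhs[k] for k in range(N)]

print([str(c) for c in solve_implicit(phi_exp, [1], 1, 5)])
# ['1', '1', '1/2', '1/6', '1/24']
print([str(c) for c in solve_implicit(phi_sqrt, [1], 0, 5)])
# ['1', '1/2', '-1/8', '1/16', '-5/128']
```

The first call recovers the Taylor coefficients of exp(z) and the second those of the square root of 1 + z.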

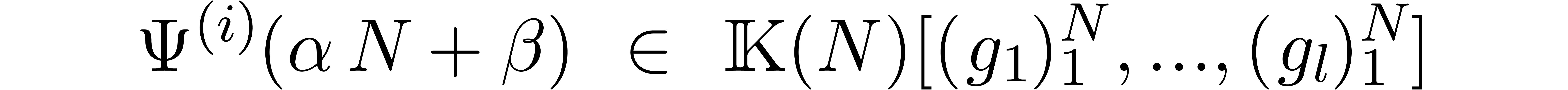

Assume that the system (7) is quasi-linear. Given a

subexpression  of

of  an

integer

an

integer  and

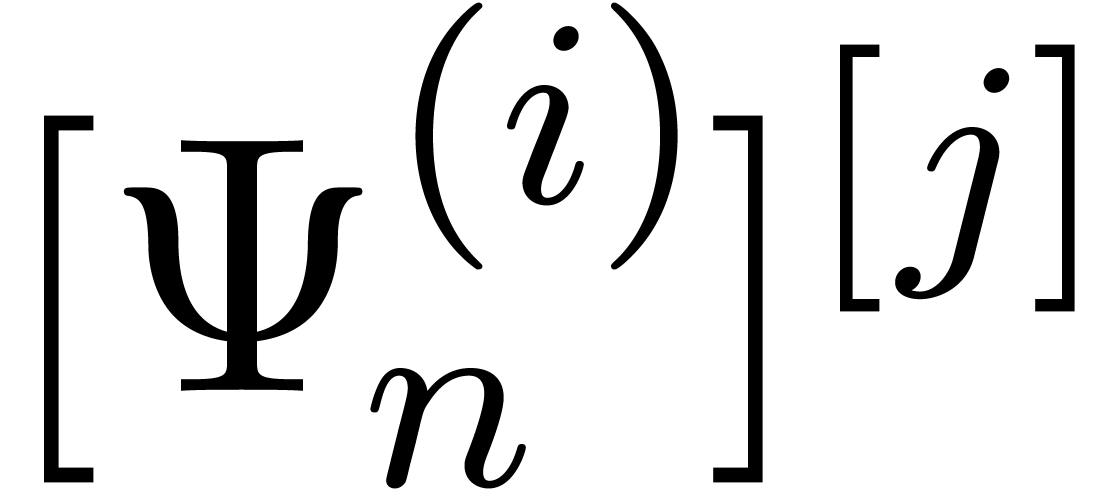

and  ,

we claim that the coefficient

,

we claim that the coefficient  of

of  in

in  (and which corresponds to

(and which corresponds to  in (10)) is a rational function in

in (10)) is a rational function in  , for sufficiently large

, for sufficiently large  . There are two ways to see this.

. There are two ways to see this.

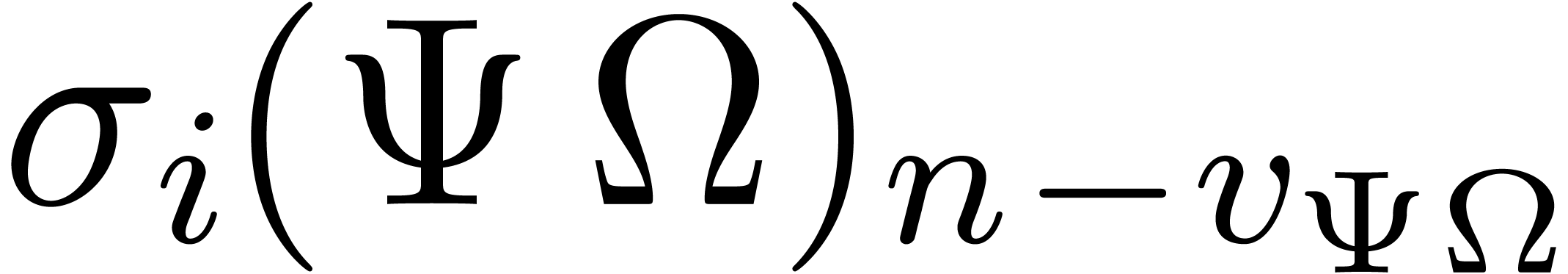

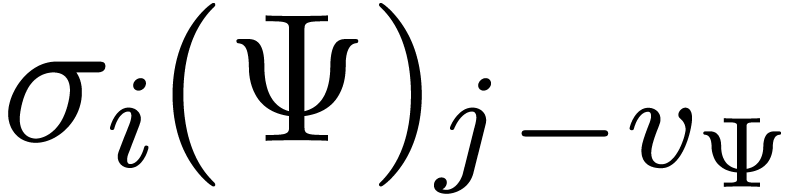

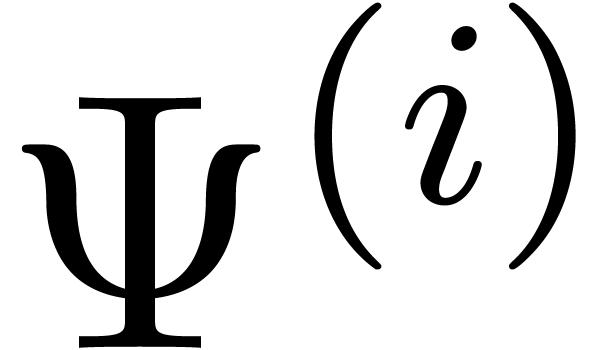

Let  denote the set of expressions  such that for all  there exist vectors
of rational functions  and a sequence  with

for all sufficiently large  .

In other words,

for  and sufficiently large  . We define  if  . We clearly have  and  .
Assume that  . Then  and we may explicitly compute the corresponding
rational functions using

If  is a polynomial, then we notice that  for all  and  . If  is a
differential polynomial of order  , then  is a polynomial in  of degree  .
In general, the degrees of the numerator and denominator of  are bounded by
the maximal number of nested differentiations and integrations,
respectively, occurring in  .
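For the special case of a linear expression obtained by applying a sequence of differentiations and integrations to a single unknown series, the rational function can be written down directly. The sketch below uses hypothetical helper names and encodes the operators as the strings "D" and "I" (integration with zero constant term). It returns the shift s and the factor c(n) such that the coefficient of z^(n+s) of the expression equals c(n) f_n, and cross-checks the result by applying the operators to the monomial z^n. Each D contributes a linear factor in n to the numerator and each I one to the denominator, which is the degree bound above in its simplest form.

```python
from fractions import Fraction

def linear_coefficient(ops, n):
    """Return (s, c) such that [z^(n+s)] ops(f) = c * f_n.

    ops lists "D" (derivative) and "I" (integral with zero constant term),
    applied to f from left to right.
    """
    pos, c = n, Fraction(1)   # f_n currently sits at index pos, scaled by c
    for op in ops:
        if op == "D":         # (g')_(p-1) = p * g_p
            c *= pos
            pos -= 1
        elif op == "I":       # (integral of g)_(p+1) = g_p / (p + 1)
            c /= pos + 1
            pos += 1
        else:
            raise ValueError(f"unknown operator {op!r}")
    return pos - n, c

def apply_ops(ops, coeffs):
    """Apply the operators to a truncated series given by its coefficients."""
    coeffs = [Fraction(x) for x in coeffs]
    for op in ops:
        if op == "D":
            coeffs = [k * coeffs[k] for k in range(1, len(coeffs))]
        else:
            coeffs = [Fraction(0)] + [coeffs[k] / (k + 1) for k in range(len(coeffs))]
    return coeffs

# Cross-check on the monomial z^n, whose only nonzero coefficient is f_n = 1.
for ops in (["D", "D", "D"], ["I", "D"], ["D", "I", "I"]):
    for n in range(3, 8):
        s, c = linear_coefficient(ops, n)
        assert apply_ops(ops, [0] * n + [1])[n + s] == c

# A third-order derivative gives the degree-3 polynomial n(n-1)(n-2).
print(linear_coefficient(["D", "D", "D"], 5))  # (-3, Fraction(60, 1))
```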

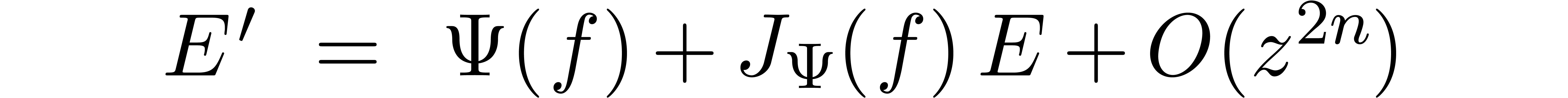

An alternative way to determine the  is to consider  as a perturbation of
the solution and perform a Taylor series expansion

The coefficients  can then be read off from the linear term using

For instance, consider the expression

Then we have

with  if  and  otherwise.
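The perturbation approach is easy to mechanize with dual numbers a + bε, where ε² = 0, which keep exactly the linear term of the Taylor expansion. The sketch below uses hypothetical names, and the expression f·f' as well as the truncated series are illustrative choices rather than the example from the text. It perturbs f by ε z^n and reads off the coefficient of ε. For f·f' it recovers the factor n f_0 in front of f_n at order n − 1, a polynomial of degree 1 in n, as expected for a differential polynomial of order 1.

```python
from fractions import Fraction

class Dual:
    """Number a + b*eps with eps**2 == 0; b holds the linear term."""
    def __init__(self, a, b=0):
        self.a, self.b = Fraction(a), Fraction(b)

    def __add__(self, other):
        o = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.a + o.a, self.b + o.b)

    def __mul__(self, other):
        o = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.a * o.a, self.a * o.b + self.b * o.a)

    __radd__, __rmul__ = __add__, __mul__

def mul(a, b, N):
    """First N coefficients of a product of series with at least N terms."""
    return [sum((a[i] * b[k - i] for i in range(k + 1)), Dual(0)) for k in range(N)]

def phi(g, N):
    """Illustrative expression f * f' (first N coefficients)."""
    dg = [k * g[k] for k in range(1, len(g))]
    return mul(g, dg, N)

def linear_term(phi, f, n, N):
    """Coefficients of eps in phi(f + eps * z^n), up to order N - 1."""
    g = [Dual(c) for c in f] + [Dual(0)] * max(0, N + 1 - len(f))
    g[n] = g[n] + Dual(0, 1)
    return [c.b for c in phi(g, N)]

f = [2, 3, 5]  # f = 2 + 3z + 5z^2
print([str(x) for x in linear_term(phi, f, 4, 6)])  # ['0', '0', '0', '8', '15', '30']
```

Here the linear term is z^4 f' + 4 z^3 f, whose lowest coefficient 8 = 4 · f_0 sits at order n − 1 = 3.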

A first theoretical consequence of our ability to compute symbolic
expressions for  is the following:

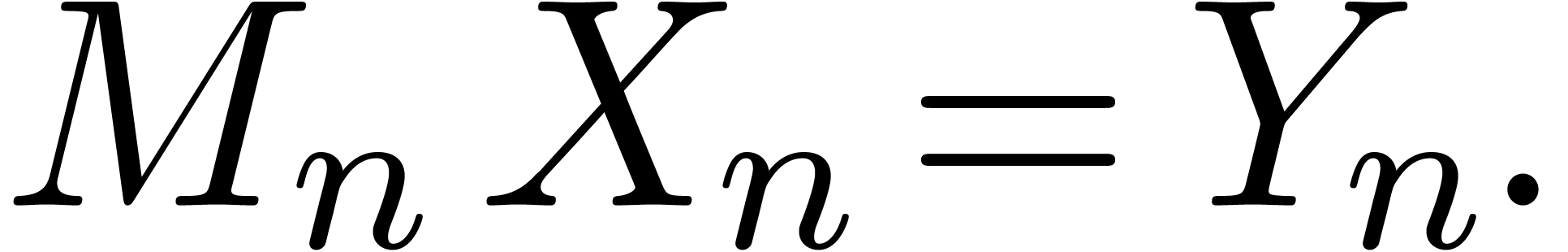

Proof. The system (9) can be rewritten as a matrix-vector equation

(15)

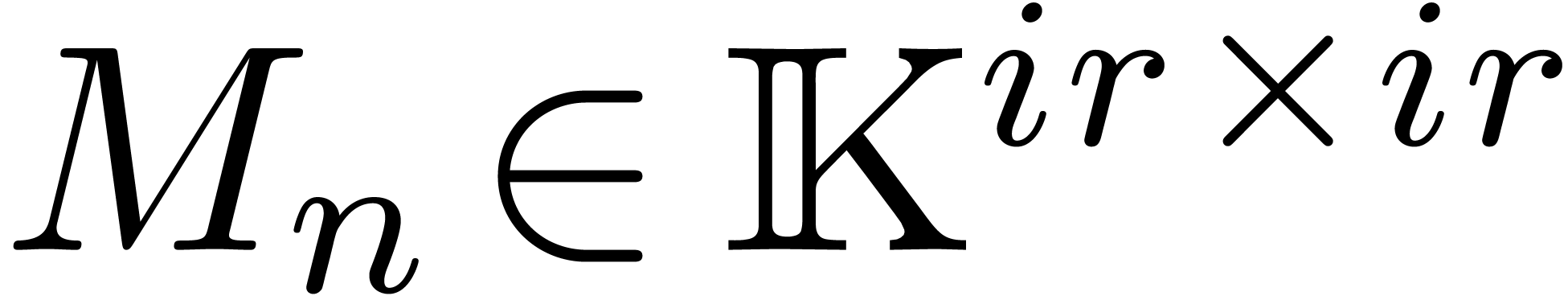

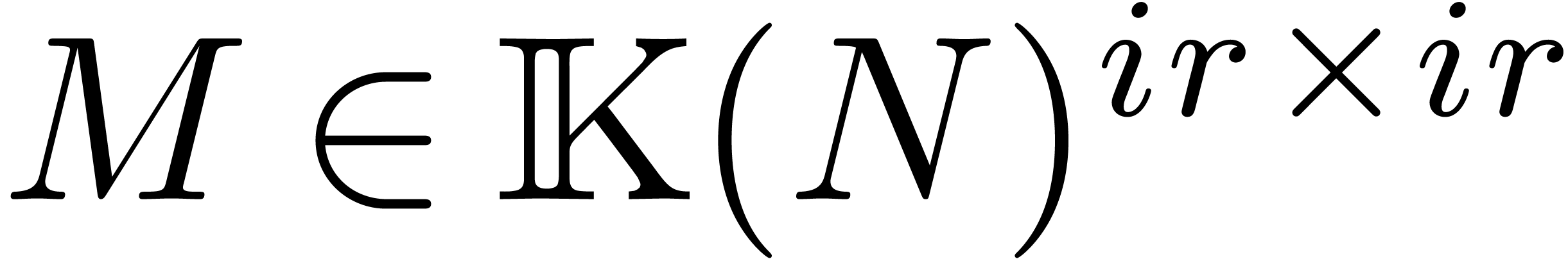

Here  is a column vector with entries  and  . The entries of the matrix
are coefficients of the form  . In particular, we can compute a matrix  such
that the matrix  is given by the specialization  of  at  for sufficiently
large  .

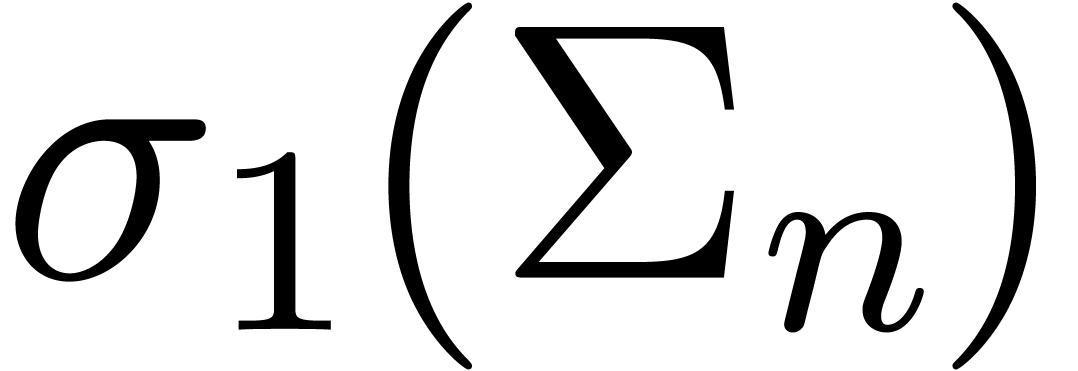

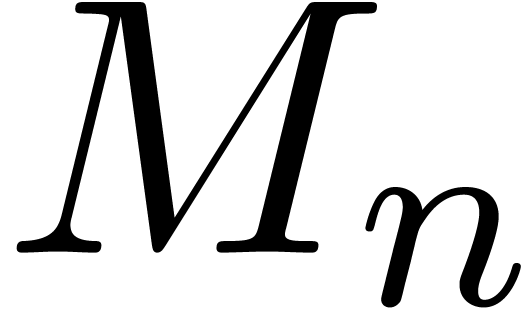

Let  be the symbolic triangularization of  . For sufficiently large  , the
triangularization  of  coincides with  for  . Now  may be eliminated from
equation (15) if and only if the last  nonzero rows and the last  columns
of  form an invertible triangular matrix. This is the case for all
sufficiently large  if and only if the last  nonzero rows and the last
columns of  form an invertible triangular matrix in  . We compute the index
of (7) as the smallest  for which this is the case.

As to the offset, we first observe that we may explicitly compute an  such
that  and  for all  , since the values  for which these equations do not
hold are roots of a polynomial with coefficients in  . Using the algorithm
from section 4, we may compute the solution  up to any given order  . We
thus compute the offset as the smallest  such that  can be eliminated from
(15) for all  .
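The explicit bound in the first step can be obtained from any root bound. The sketch below assumes that the relevant polynomial in n has rational coefficients (so K = Q here, an assumption) and uses Cauchy's bound, which states that every complex root r of a_d n^d + ... + a_0 satisfies |r| ≤ 1 + max_{i<d} |a_i / a_d|. Scanning the integers up to that bound yields the smallest n_0 beyond which the polynomial has no integer root. The function names are hypothetical.

```python
import math
from fractions import Fraction

def eval_poly(coeffs, x):
    """Horner evaluation; coeffs[i] is the coefficient of n^i."""
    r = Fraction(0)
    for c in reversed(coeffs):
        r = r * x + c
    return r

def threshold(coeffs):
    """Smallest n0 >= 0 such that the polynomial has no integer root n >= n0."""
    coeffs = [Fraction(c) for c in coeffs]
    while coeffs and coeffs[-1] == 0:
        coeffs.pop()
    if not coeffs:
        raise ValueError("the zero polynomial vanishes everywhere")
    lead = coeffs[-1]
    cauchy = 1 + max((abs(c / lead) for c in coeffs[:-1]), default=0)
    n0 = 0
    for n in range(math.floor(cauchy) + 1):  # every root lies in [0, cauchy]
        if eval_poly(coeffs, n) == 0:
            n0 = n + 1
    return n0

print(threshold([21, -10, 1]))  # 8, since (n - 3)(n - 7) vanishes at 3 and 7
```

In the univariate setting this makes the offset computable; no analogous procedure exists for integer roots of multivariate polynomial systems.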

Example

Remark

A second consequence of our ability to compute symbolic expressions for  is
that we can avoid the systematic computation of the coefficients  using
arithmetic in  : instead, we compute  on demand, by evaluating  at  . The
coefficients  are essentially needed in two places: for the computation of
substitution products and for the computation of the system  . Let  be the
cost of an evaluation of  : if  is a polynomial, then  ; if  is a
differential polynomial, then  is its order plus one; and so on.

When computing  by evaluating  at  , the computation of one coefficient of
a one-step substitution  amounts to  evaluations of rational functions of
the form  . Consequently, every substitution product contributes a cost  to
the final complexity.

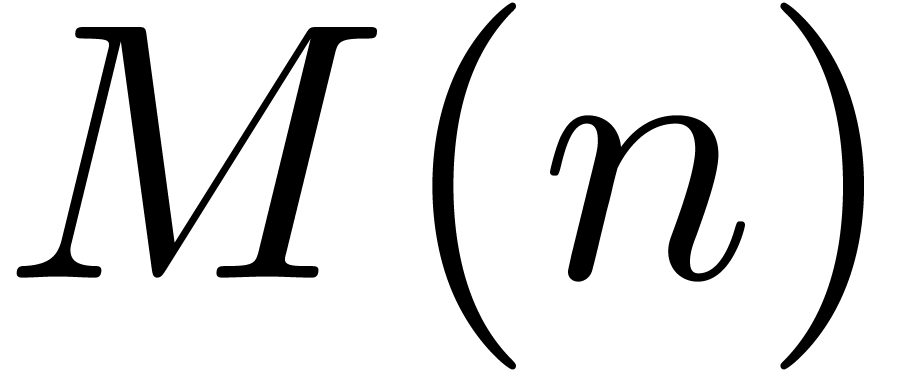

As to the computation of the coefficients  , we may compute  as in (15)
using  evaluations of cost  , and then solve a linear system of size  .
This gives rise to a cost  in the final complexity. Alternatively, as a
side effect of the triangularization of  with the notations from (15), we
may compute a symbolic matrix  such that  for all sufficiently large  . If
the system (7) is algebraic, then  actually has constant coefficients, so
the complexity further reduces to  . In general, the evaluation of  will be
more expensive, so it is not clear whether this strategy pays off.

Altogether, we have proved:

of total size  and containing at most  multiplications. Assume that the
equations involve strictly fewer than  nested differentiations or
integrations. Then  can be computed in time

If

Remark  gives rise to a small but non-negligible amount of overhead for
small and moderate  (see remark 7), we indeed expect further gains in this
case.

For simplicity, the presentation of this paper has been focused on ordinary differential equations. Nevertheless, the techniques admit generalizations in several directions. We will outline two such generalizations.

be a set of relaxed power series  with  and replace  by the set of
expressions built up from  ,  and constants in  using ring operations,
differentiation, integration and right composition with series in  .
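Right composition keeps the computation relaxed as long as the inner series has no constant term: the coefficient of z^n of f∘g then depends only on f_0, ..., f_n and g_1, ..., g_n. The Horner-scheme sketch below assumes g_0 = 0, the standard condition for composing formal power series, and works on truncated lists of rationals. It is a hypothetical illustration of the operation only, not an efficient relaxed composition algorithm.

```python
from fractions import Fraction

def mul(a, b, N):
    """First N coefficients of the product of truncated series a and b."""
    return [sum((a[i] * b[k - i] for i in range(k + 1)
                 if i < len(a) and k - i < len(b)), Fraction(0))
            for k in range(N)]

def compose(f, g, N):
    """First N coefficients of f(g(z)), assuming g has zero constant term."""
    if g and g[0] != 0:
        raise ValueError("right composition needs g_0 = 0")
    result = [Fraction(0)] * N
    # Horner scheme f_0 + g*(f_1 + g*(f_2 + ...)); since g = O(z),
    # only f_0, ..., f_(N-1) can contribute below order N.
    for c in reversed(f[:N]):
        result = mul(result, g, N)
        result[0] += c
    return result

# 1/(1 - z) composed with 2z is 1/(1 - 2z).
print([str(x) for x in compose([1, 1, 1, 1], [0, 2], 4)])  # ['1', '2', '4', '8']
```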

Assume first that  for all  . In a similar way as in section 6, there
exists a symbolic expression of the form (13) for each  , except that we
now have

where  are the functions which occur as postcomposers in  . In
particular, if  , then the  are contained in a Hardy field, and theorem 8
generalizes.

The above observation further generalizes to the case when  for certain  .
In non-degenerate cases, expressions  with  only occur as perturbations,
and (16) still holds. In general, we also have to consider degenerate
situations, such as the case when  for a certain  and all  .

One may even consider functional equations in which we also allow
postcompositions with general expressions  with  . Although the theory
from section 6 becomes increasingly intricate, the algorithm from section 4
generalizes in a straightforward way.

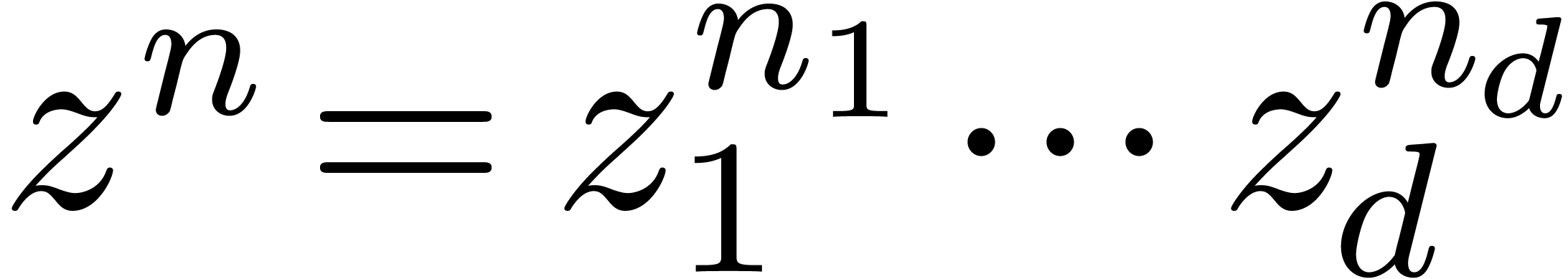

. Given a multivariate power series

. Given a multivariate power series  and

and  , we

denote by

, we

denote by  the coefficient of

the coefficient of  in

in  , and let

, and let  . The expressions in

. The expressions in  are built up from

are built up from  ,

,  and constants in

and constants in  using ring

operations and partial differentiation or integration with respect to

the

using ring

operations and partial differentiation or integration with respect to

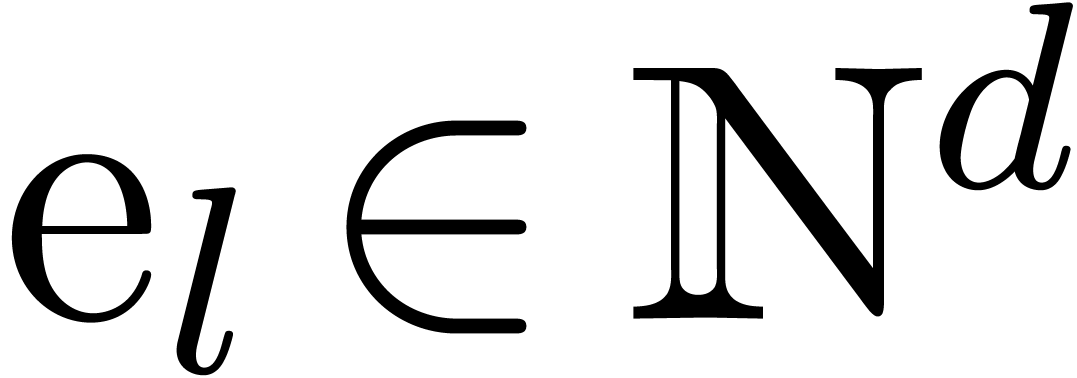

the  . The number

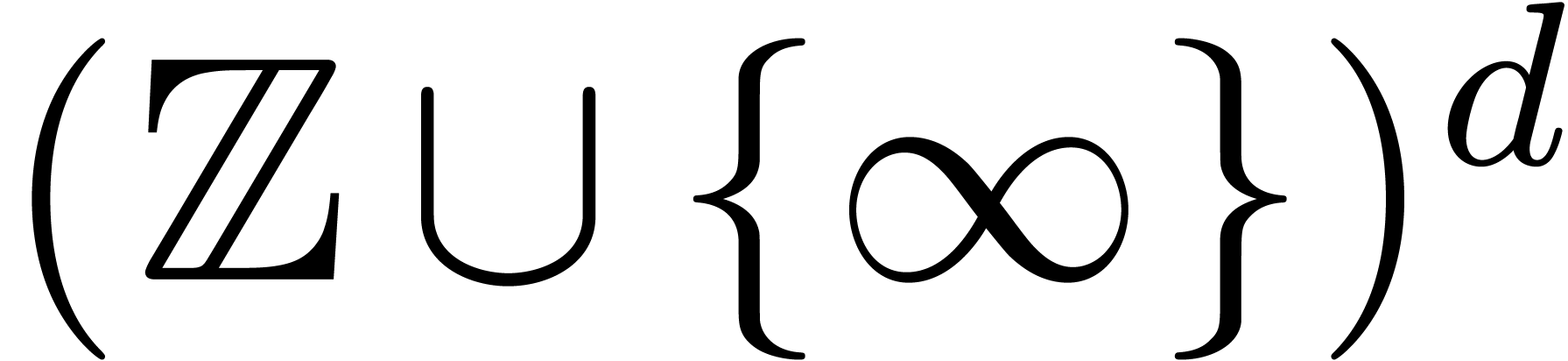

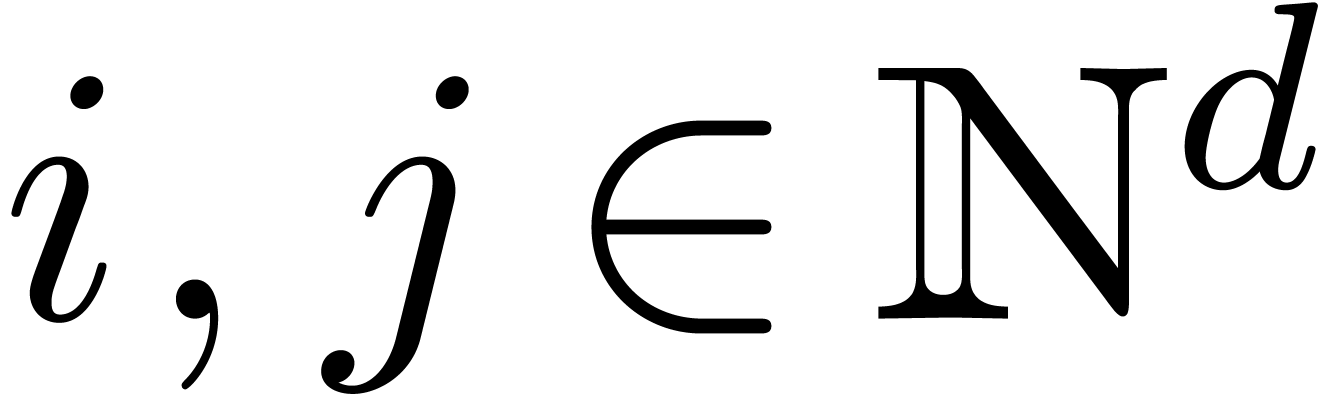

. The number  now becomes a vector in

now becomes a vector in  .

.

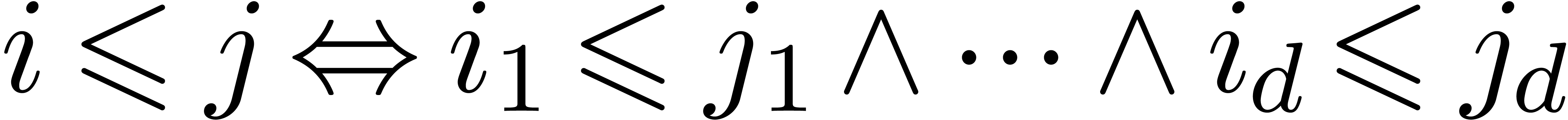

The simplest, blockwise generalization proceeds as follows. Indices  are
compared using the product ordering  . Given  and  , we let  be the series
in  such that  is the result of the substitution of  by  in  , for all
and  .

Given  and  , we let  be the subset of  such that

For each  , let  be such that  and  for  . We define the one-step partial
substitution  by

The partial shifts  and  are defined similarly, and we denote by  the
substitution of  for  in  . The substitution product is defined
recursively. If  , then we set  . Otherwise, we let  be the smallest index
such that  is maximal and take

Using this substitution product, the algorithm from section 4 generalizes. The theory from section 6 can also be adapted. However, theorem 8 admits no simple analogue, because there is no algorithm for determining the integer roots of a system of multivariate polynomials.

Several variants are possible depending on the application. For instance,
it is sometimes possible to consider only the  up to a certain total
degree  in (17), instead of a block of coefficients. For some applications,
it may also be interesting to store the  in a sparse vector.
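To see why restricting to a total degree can pay off, the sketch below (hypothetical helper names) enumerates the block of multi-indices below n in the product ordering and the set of multi-indices of total degree at most d. In three variables the block {0, ..., d}^3 has (d+1)^3 elements, whereas total degree at most d gives only C(d+3, 3) of them.

```python
from itertools import product

def leq(i, j):
    """Product (componentwise) ordering on multi-indices."""
    return all(a <= b for a, b in zip(i, j))

def block(n):
    """Multi-indices i with i <= n in the product ordering."""
    return list(product(*(range(k + 1) for k in n)))

def total_degree(d, dim):
    """Multi-indices in dim variables of total degree at most d."""
    return [i for i in product(range(d + 1), repeat=dim) if sum(i) <= d]

# The product ordering is only partial: (2, 1) and (1, 2) are incomparable.
print(leq((1, 2), (2, 2)), leq((2, 1), (1, 2)), leq((1, 2), (2, 1)))  # True False False
for d in (2, 4, 8):
    print(d, len(block((d, d, d))), len(total_degree(d, 3)))
# 2 27 10
# 4 125 35
# 8 729 165
```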

A. Bostan, F. Chyzak, F. Ollivier, B. Salvy, É. Schost, and A. Sedoglavic. Fast computation of power series solutions of systems of differential equations. Preprint, April 2006. Submitted, 13 pages.

R.P. Brent and H.T. Kung. Fast algorithms for manipulating formal power series. Journal of the ACM, 25:581–595, 1978.

D.G. Cantor and E. Kaltofen. On fast multiplication of polynomials over arbitrary algebras. Acta Informatica, 28:693–701, 1991.

J.W. Cooley and J.W. Tukey. An algorithm for the machine calculation of complex Fourier series. Math. Comp., 19:297–301, 1965.

A. Schönhage and V. Strassen. Schnelle Multiplikation grosser Zahlen. Computing, 7:281–292, 1971.

J. van der Hoeven. Lazy multiplication of formal power series. In W. W. Küchlin, editor, Proc. ISSAC '97, pages 17–20, Maui, Hawaii, July 1997.

J. van der Hoeven. Relax, but don't be too lazy. JSC, 34:479–542, 2002.

J. van der Hoeven et al. Mathemagix, 2002. http://www.mathemagix.org.

J. van der Hoeven. Newton's method and FFT trading. Technical Report 2006-17, Univ. Paris-Sud, 2006. Submitted to JSC.

J. van der Hoeven. New algorithms for relaxed multiplication. JSC, 42(8):792–802, 2007.