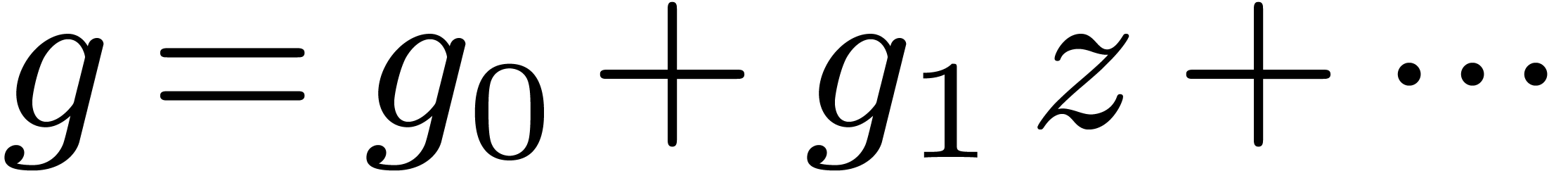

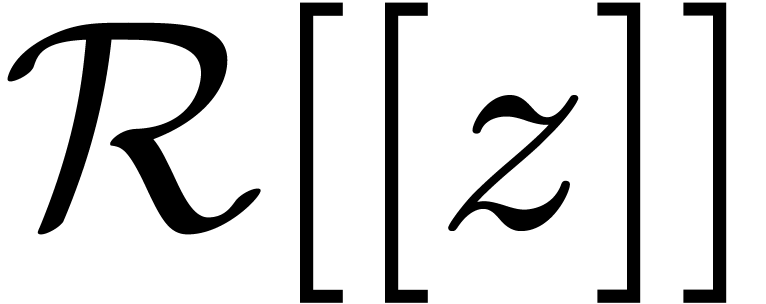

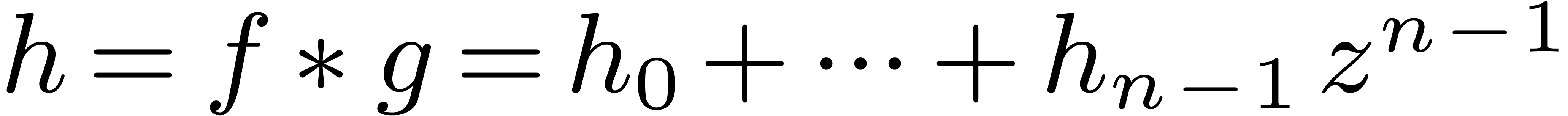

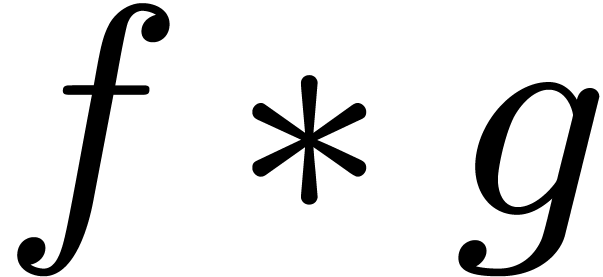

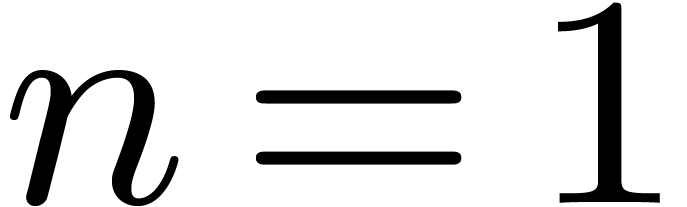

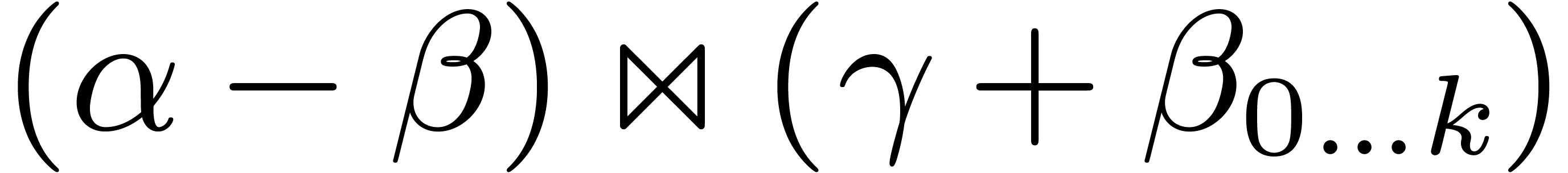

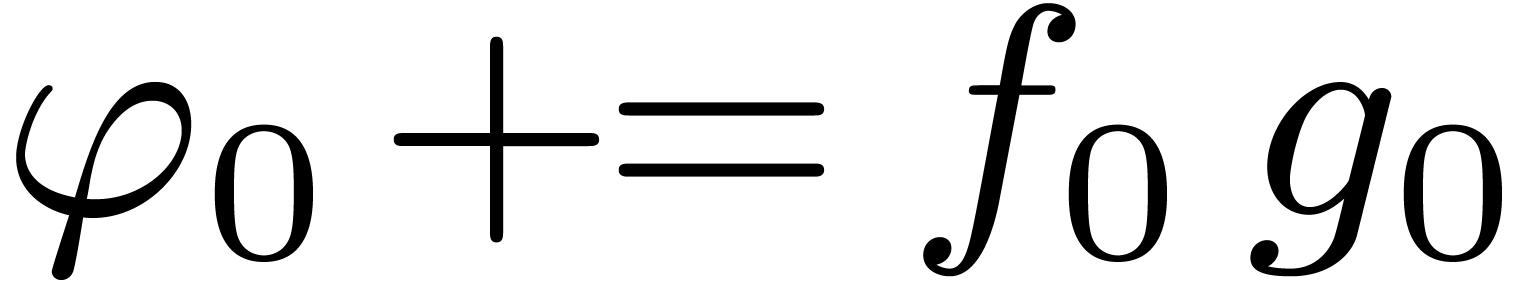

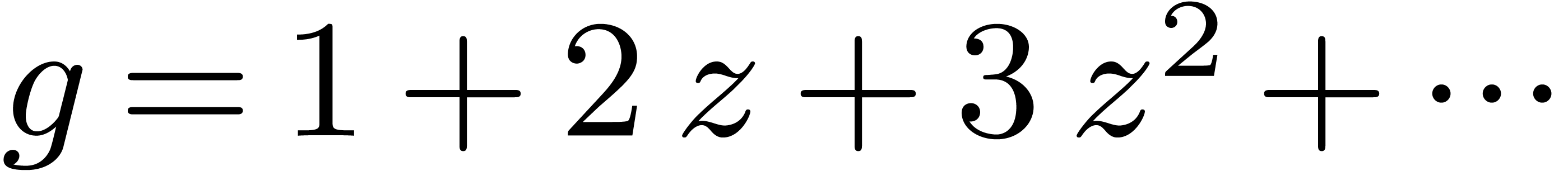

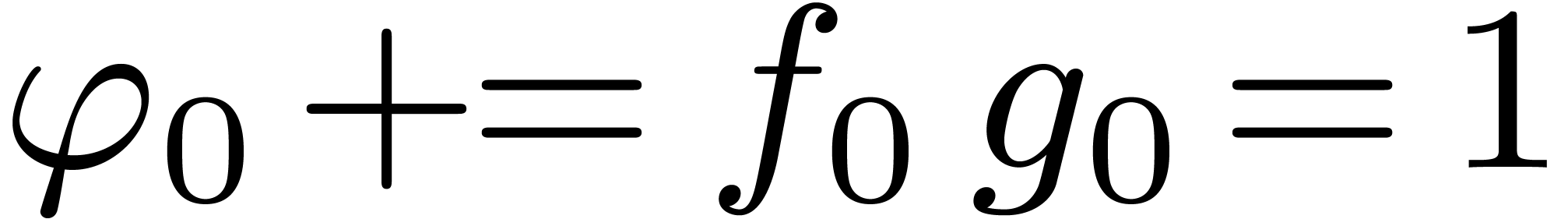

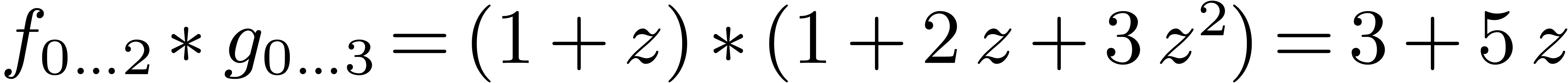

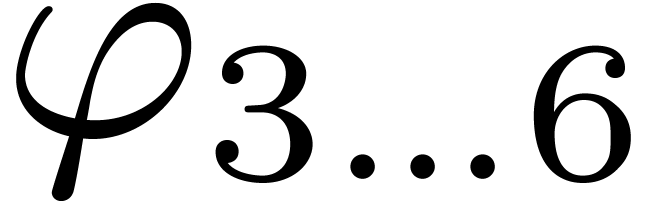

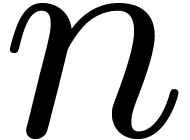

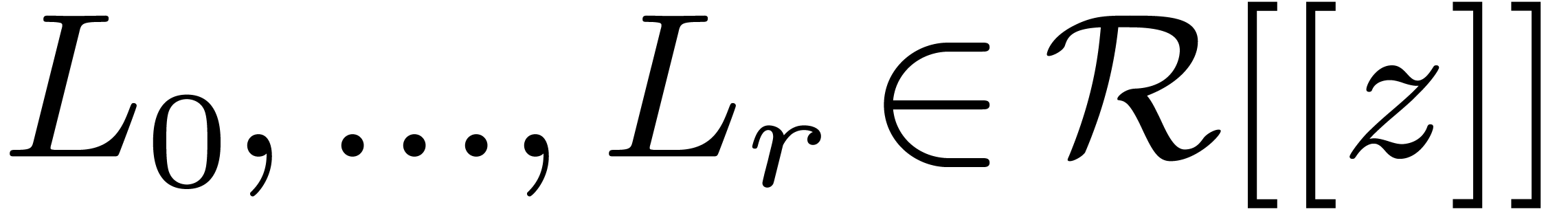

be an effective ring and consider two power

series

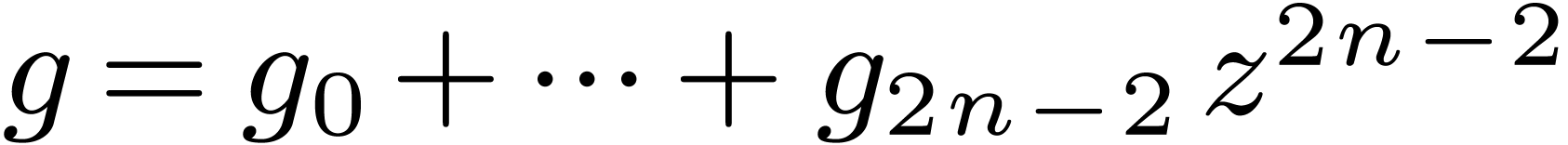

be an effective ring and consider two power

series  and

and  in

in  . In this paper we will be

concerned with the efficient computation of the first

. In this paper we will be

concerned with the efficient computation of the first  coefficients of the product

coefficients of the product  .

.

Dépt. de Mathématiques

(Bât. 425)

Université Paris-Sud

91405 Orsay Cedex

France

Email: joris@texmacs.org

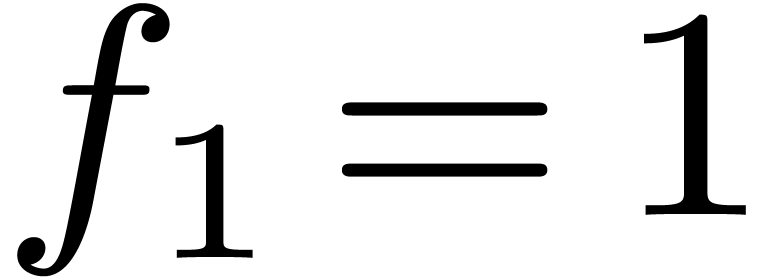

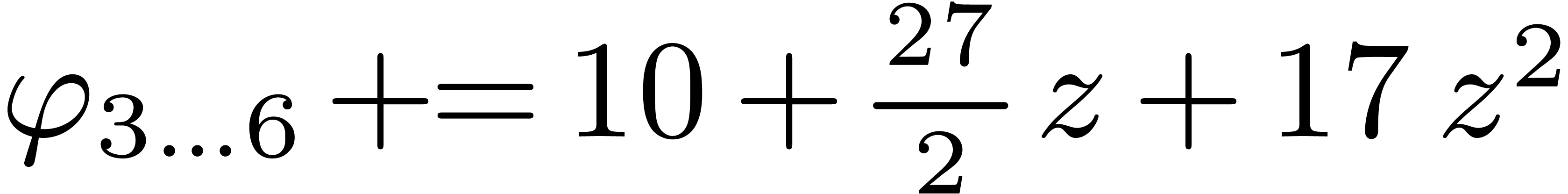

Relaxed multiplication using the

middle product

August 3, 2003

Abstract

In previous work, we have introduced the technique of relaxed power series computations. With this technique, it is possible to solve implicit equations almost as quickly as doing the operations which occur in the implicit equation. In this paper, we present a new relaxed multiplication algorithm for the resolution of linear equations. The algorithm has the same asymptotic time complexity as our previous algorithms, but we improve the space overhead in the divide and conquer model and the constant factor in the F.F.T. model.

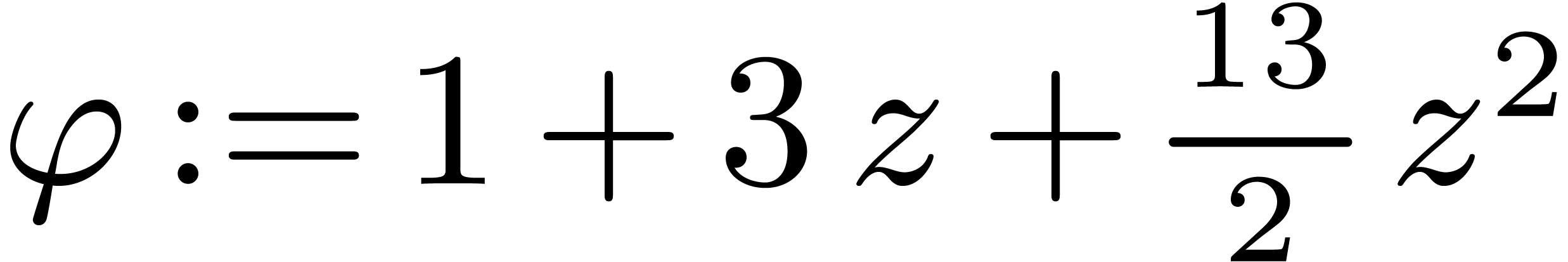

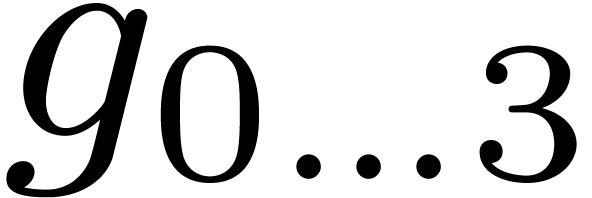

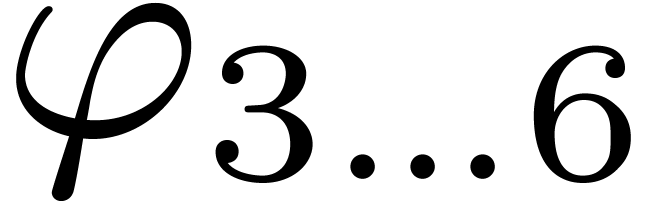

Let  be an effective ring and consider two power

series

be an effective ring and consider two power

series  and

and  in

in  . In this paper we will be

concerned with the efficient computation of the first

. In this paper we will be

concerned with the efficient computation of the first  coefficients of the product

coefficients of the product  .

.

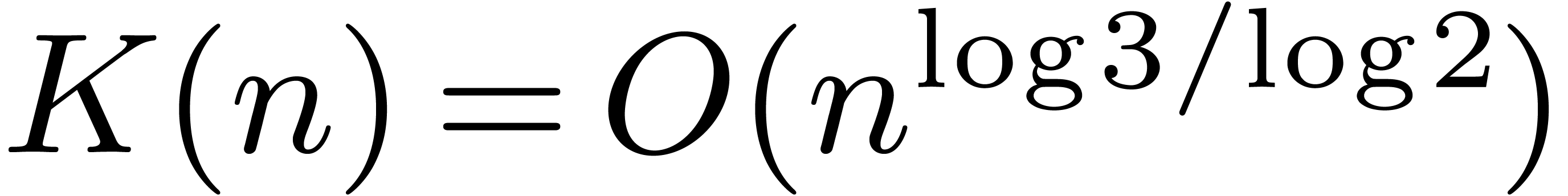

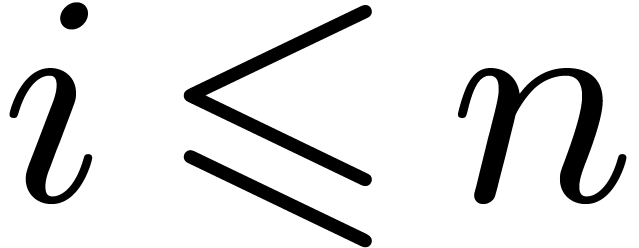

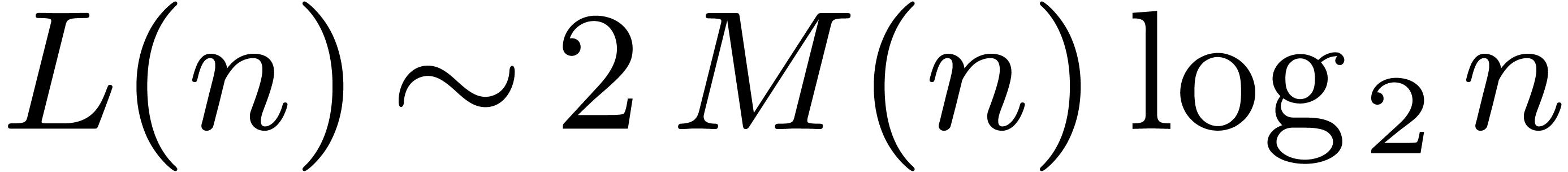

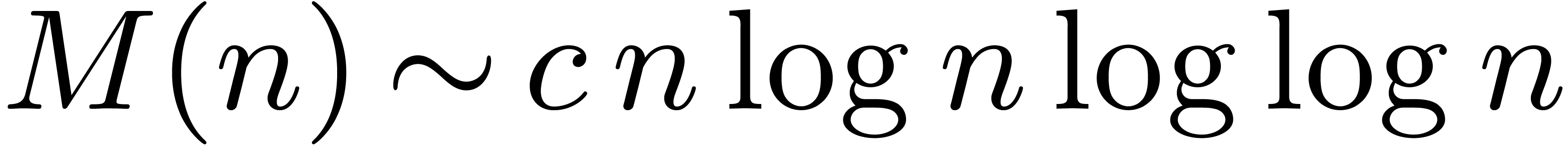

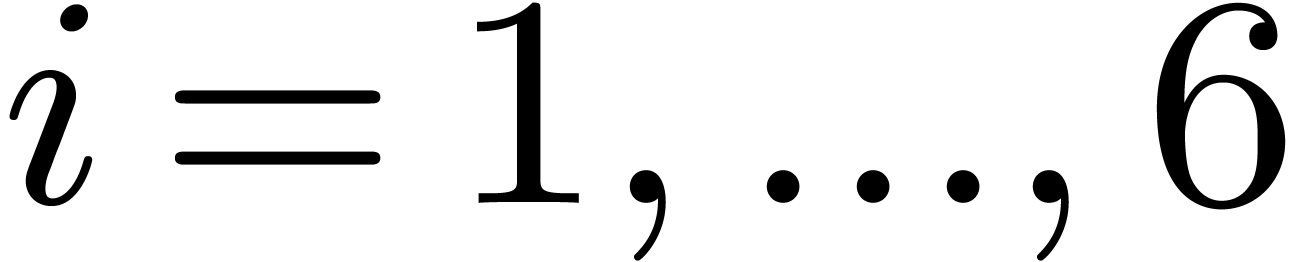

If the first  coefficients of

coefficients of  and

and  are known beforehand, then we may use any

fast multiplication for polynomials in order to achieve this goal, such

as divide and conquer multiplication [KO63, Knu97],

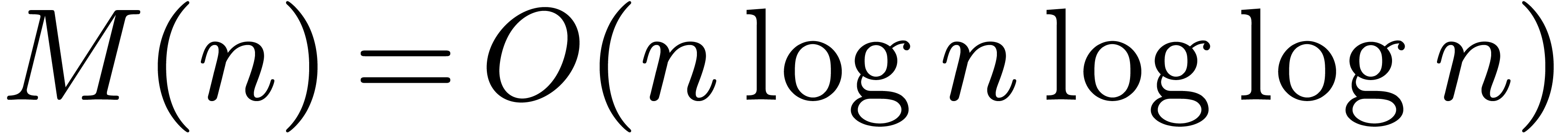

which has a time complexity

are known beforehand, then we may use any

fast multiplication for polynomials in order to achieve this goal, such

as divide and conquer multiplication [KO63, Knu97],

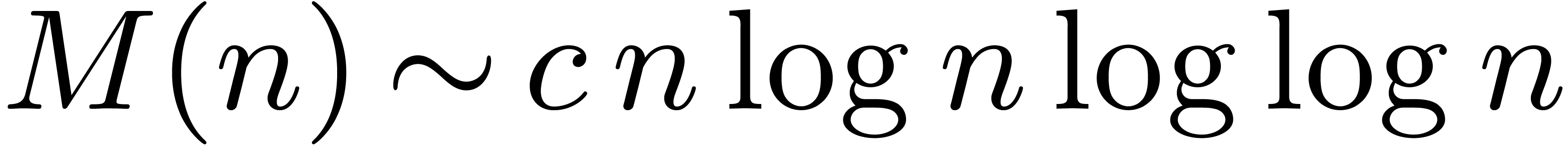

which has a time complexity  ,

or F.F.T. multiplication [CT65, SS71,

CK91, vdH02], which has a time complexity

,

or F.F.T. multiplication [CT65, SS71,

CK91, vdH02], which has a time complexity

.

.

For simplicity, “time complexity” stands for the required

number of operations in  .

Similarly, “space complexity” will stand for the number of

elements of

.

Similarly, “space complexity” will stand for the number of

elements of  which need to be stored. The

required number of multiplications

which need to be stored. The

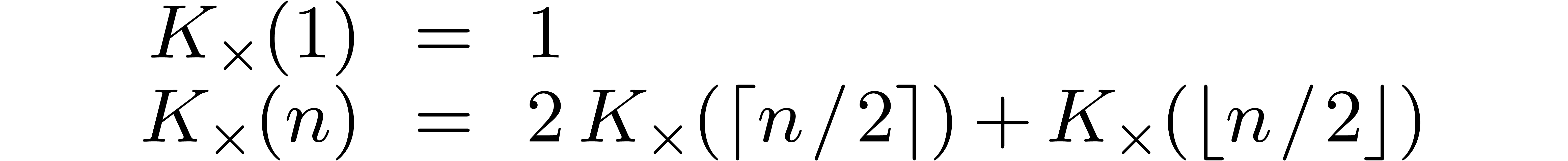

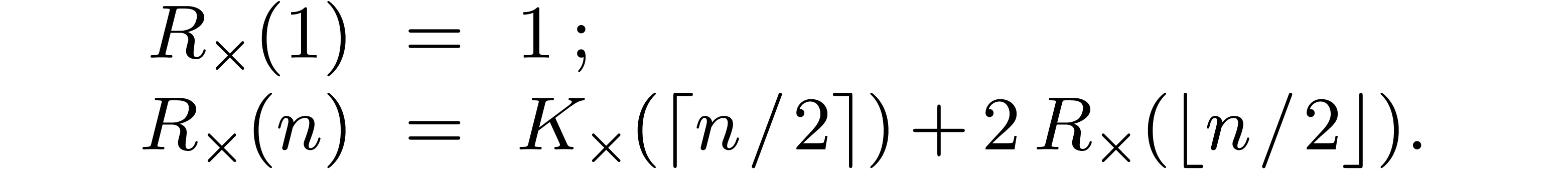

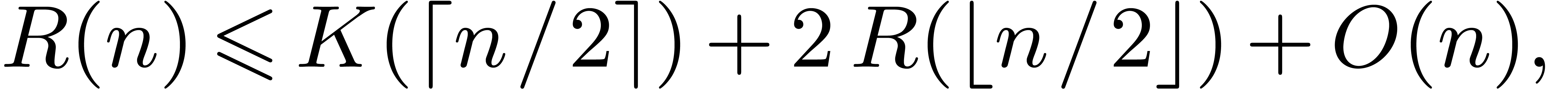

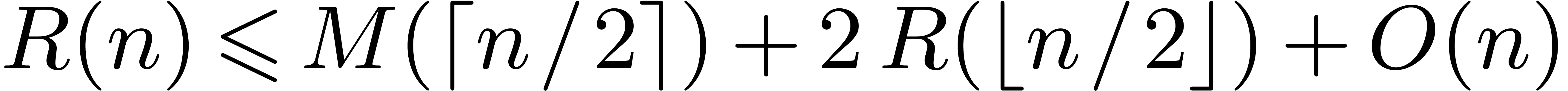

required number of multiplications  in the divide

and conquer algorithm satisfies the following recurrence relations:

in the divide

and conquer algorithm satisfies the following recurrence relations:

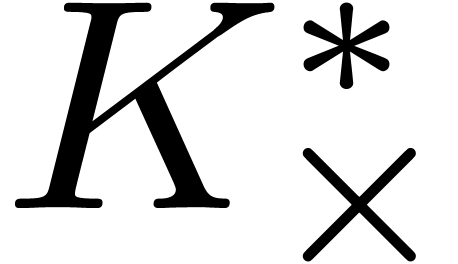

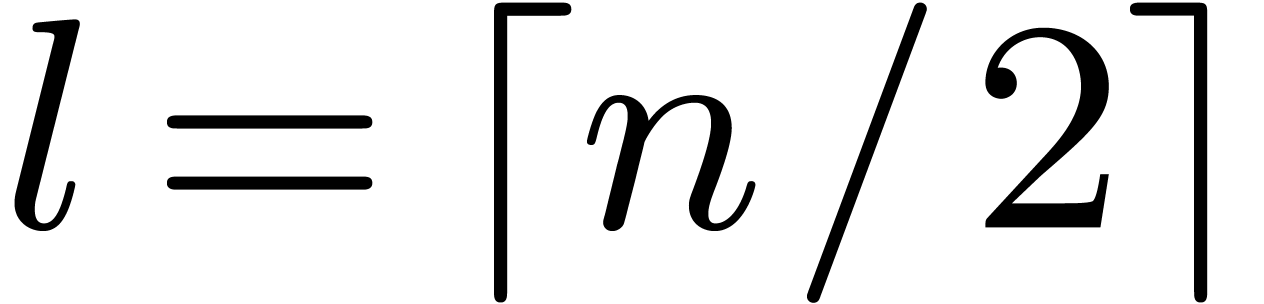

When performing computing only the product truncated at order  , then the number of

multiplications

, then the number of

multiplications  needed by the divide and conquer

algorithm becomes

needed by the divide and conquer

algorithm becomes

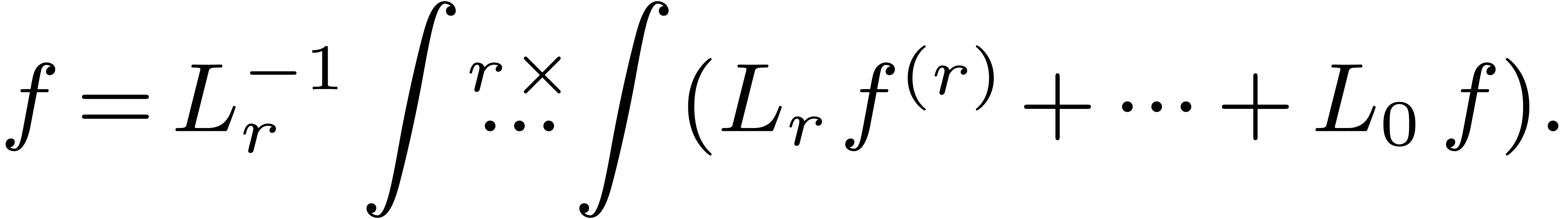

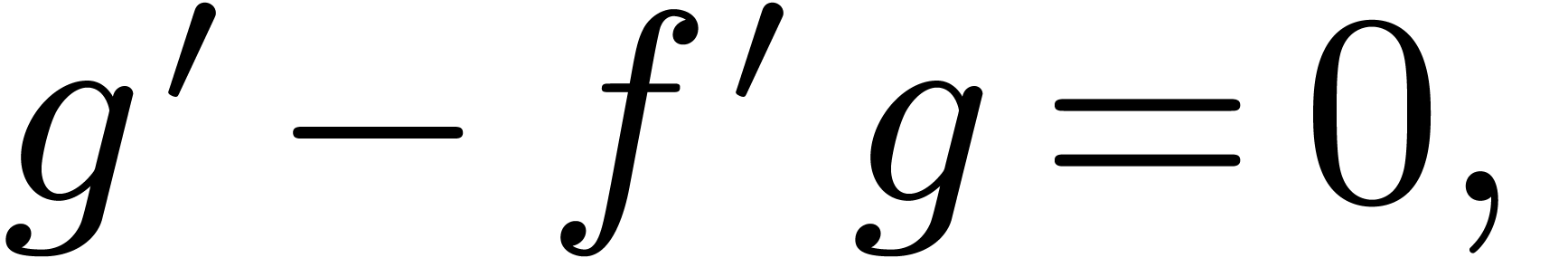

For certain computations, and most importantly the resolution of

implicit equations, it is interesting to have so called “relaxed

algorithms” which output the first  coefficients of

coefficients of  as soon as the first

as soon as the first  coefficients of

coefficients of  and

and  are known for each

are known for each  .

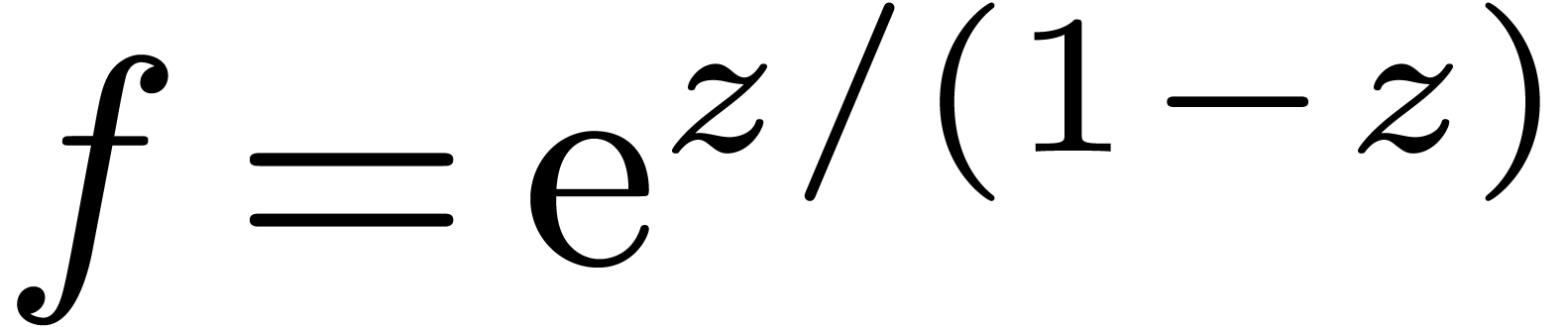

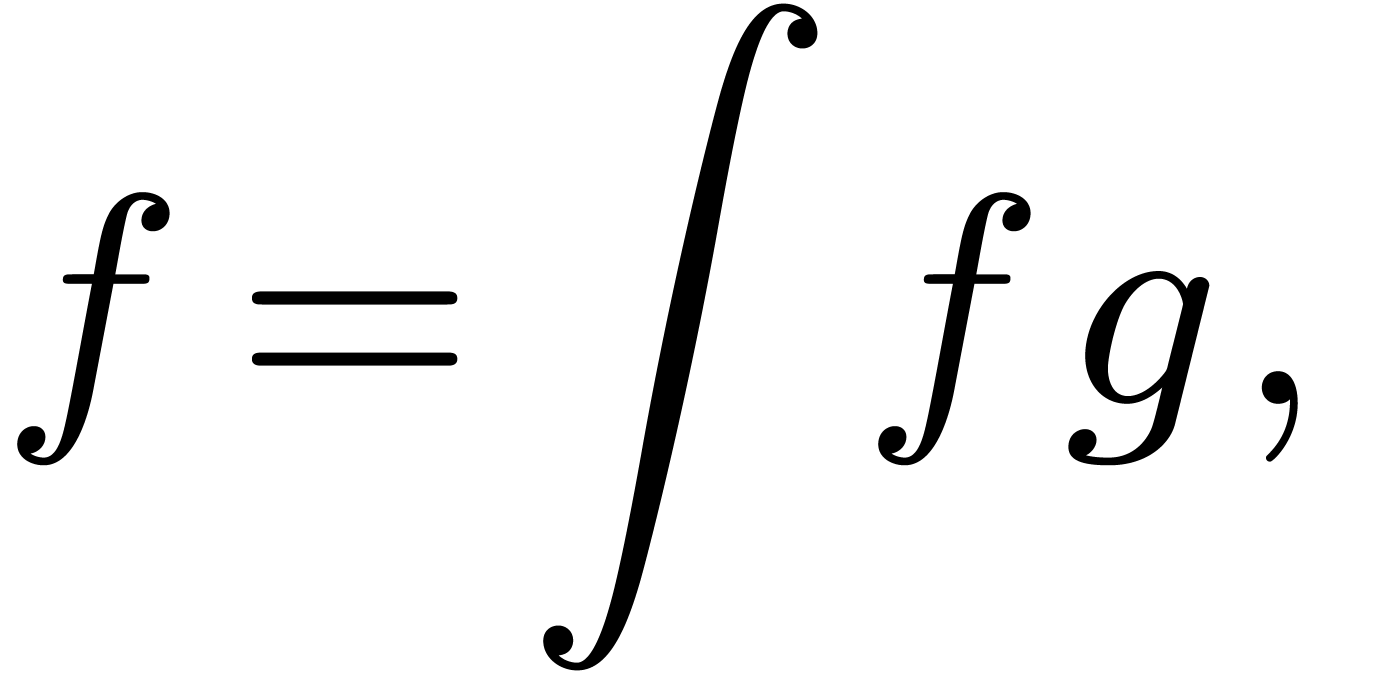

This allows for instance the computation of the exponential

.

This allows for instance the computation of the exponential  of a series

of a series  with

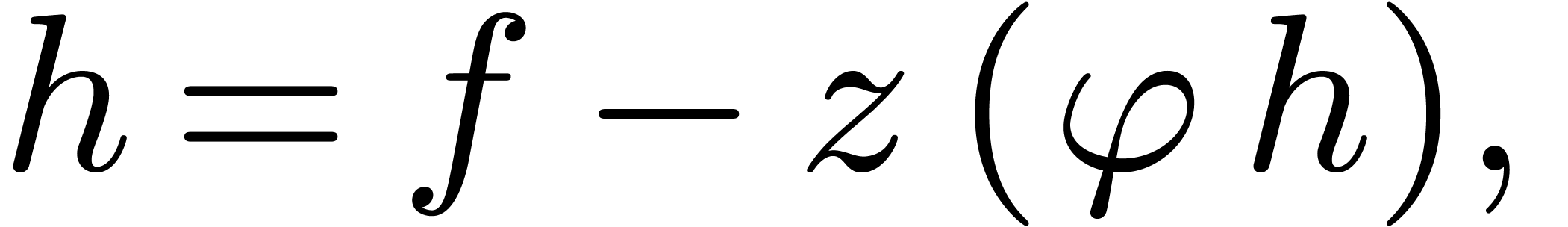

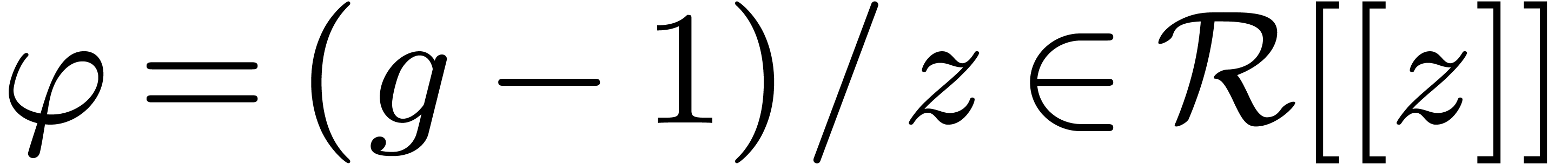

with  using the formula

using the formula

|

(1) |

In [vdH97, vdH02], we proved the following two theorems:

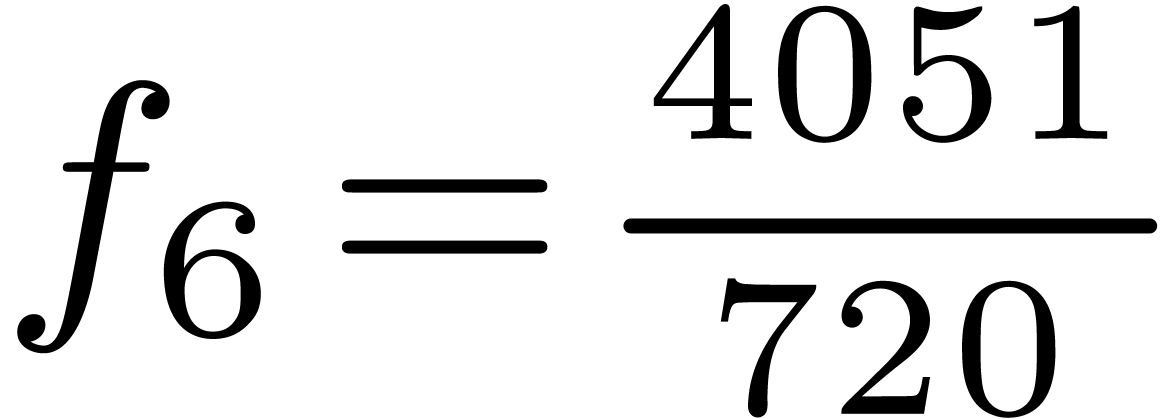

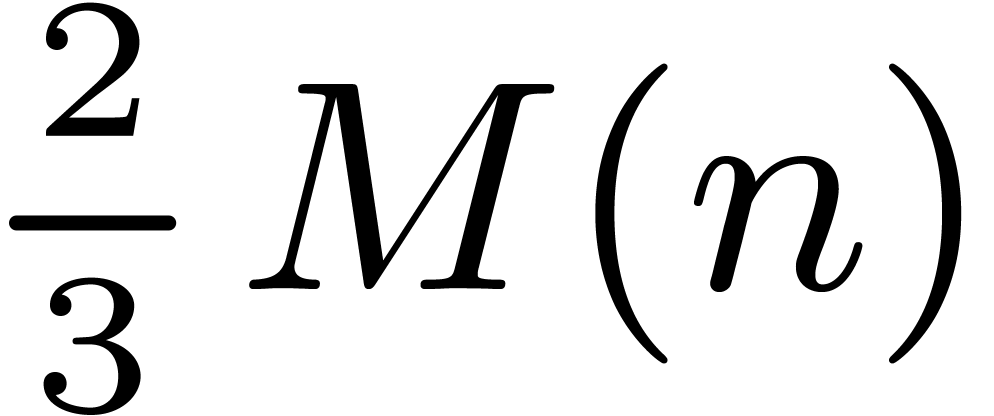

Theorem  and space complexity

and space complexity  ,

and which uses

,

and which uses  multiplications.

multiplications.

Theorem  and space complexity

and space complexity  .

.

Although these theorems are satisfactory from a theoretical point of view, they can be improved in two directions: by removing the logarithmic space overhead in the divide and conquer model and by improving the constant factor in the F.F.T. model.

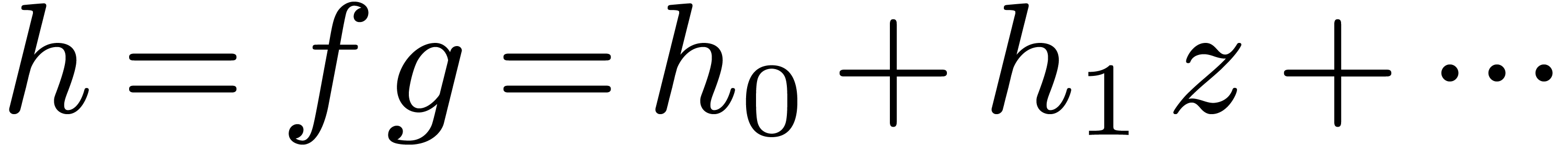

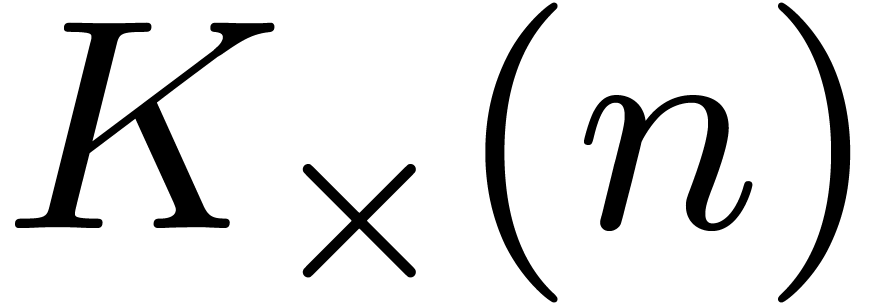

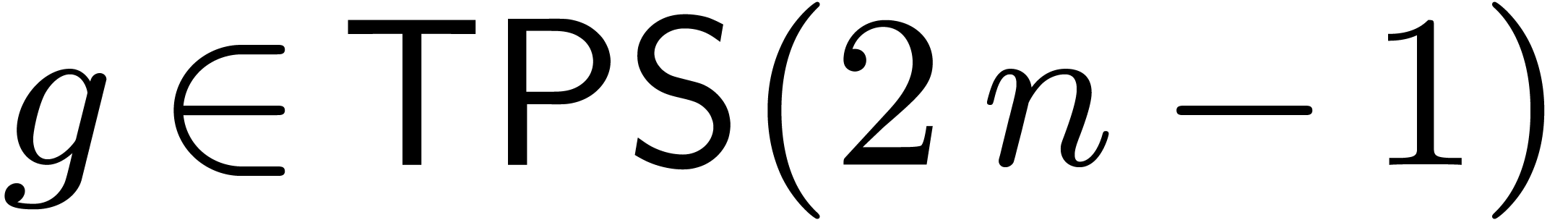

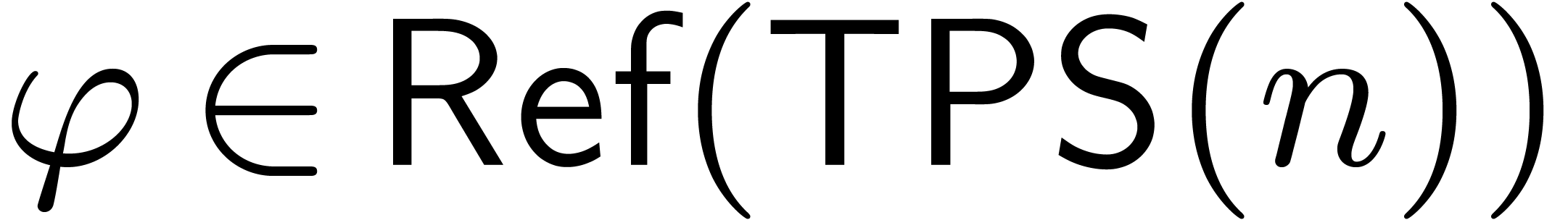

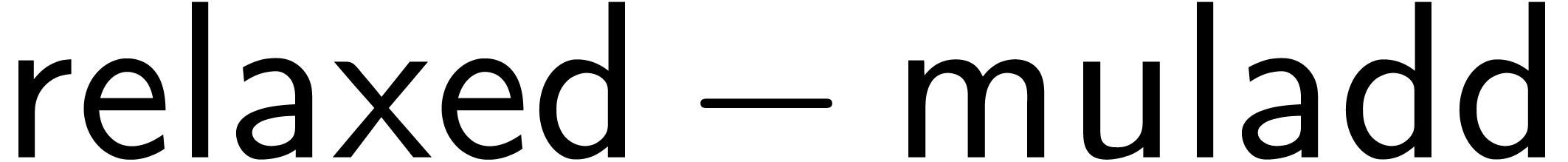

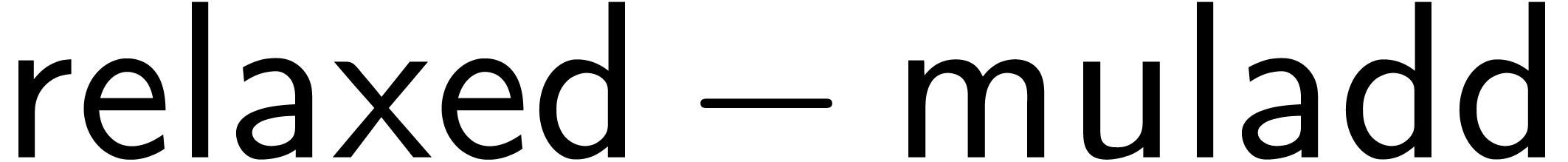

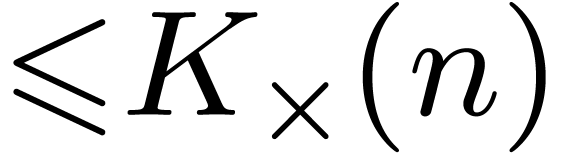

In this paper, we will present such an improved algorithm in the case of

relaxed multiplication with a fixed series. More precisely, let  and

and  be power series, such

that

be power series, such

that  is known up to order

is known up to order  . Then our algorithm will compute the product

. Then our algorithm will compute the product  up to order

up to order  and output

and output  as soon as

as soon as  are known, for all

are known, for all

. We will prove the

following:

. We will prove the

following:

Theorem  , of space

complexity

, of space

complexity  , and which uses

, and which uses

multiplications.

multiplications.

We also obtain a better constant factor in the asymptotic complexity in the F.F.T. model, but this result is harder to state in a precise theorem.

The algorithm is useful for the relaxed resolution of linear

differential or difference equations. For instance, the exponential of a

series can be computed using  multiplications in

multiplications in

. Moreover, the new algorithm

is very simple to implement, so it is likely to require less overhead

than the algorithm from theorem 1.

. Moreover, the new algorithm

is very simple to implement, so it is likely to require less overhead

than the algorithm from theorem 1.

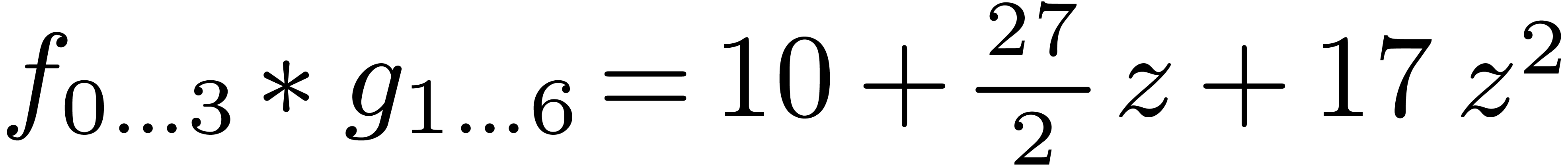

Our algorithm is based on the recent middle product algorithm [HQZ00, HQZ02], which is recalled in section 2. In section 3 we present our new algorithm and in section 5 we give some applications.

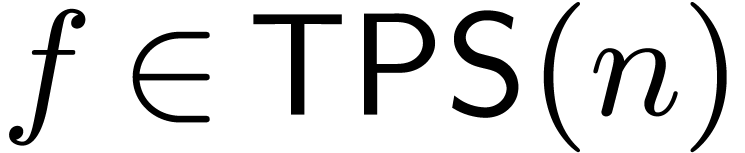

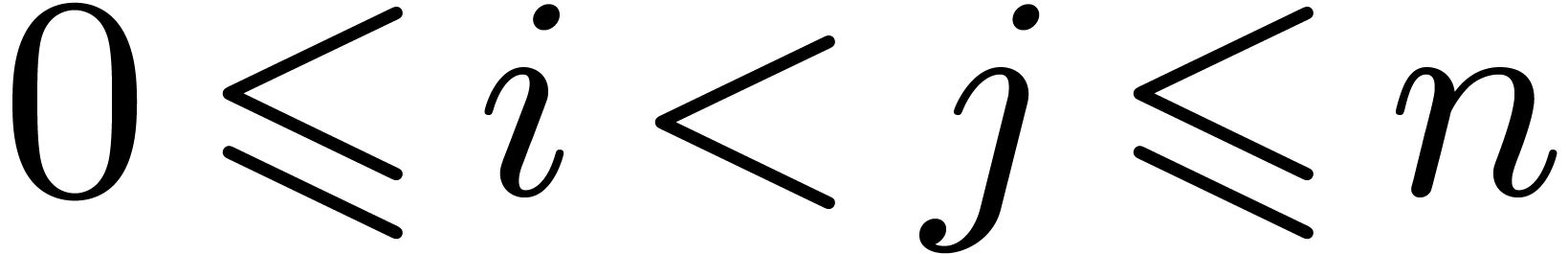

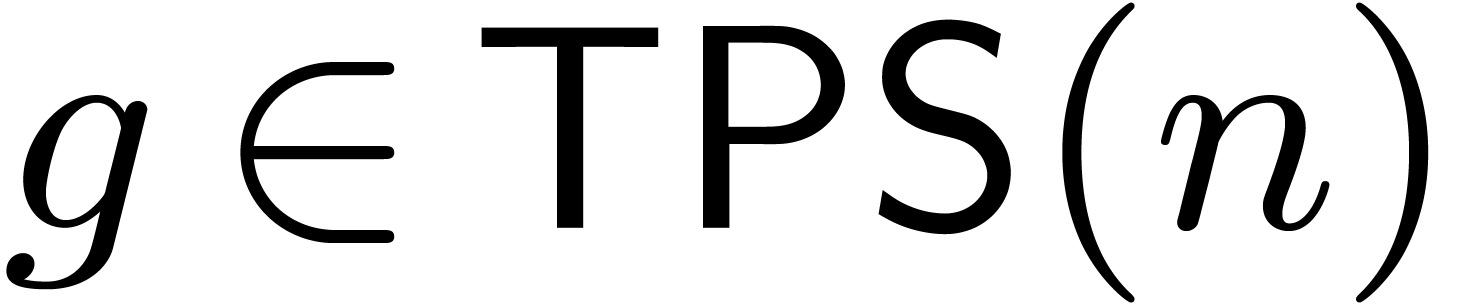

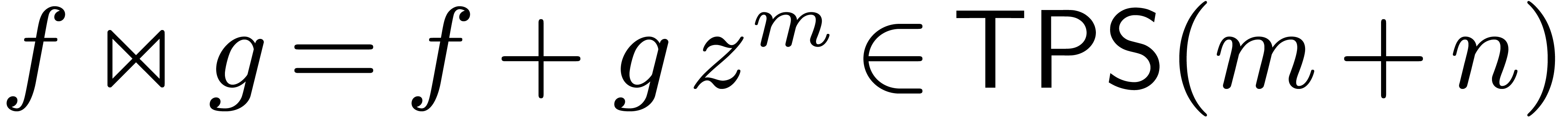

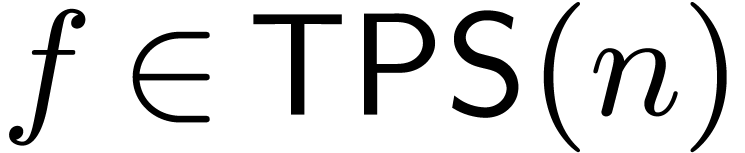

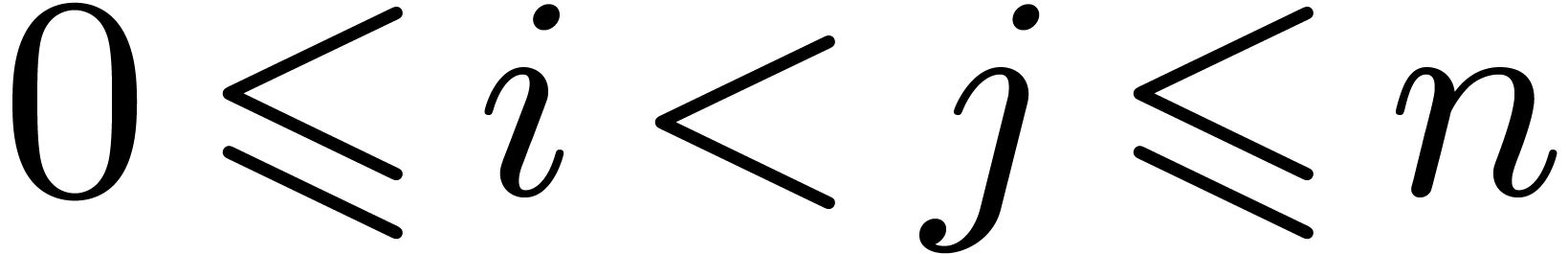

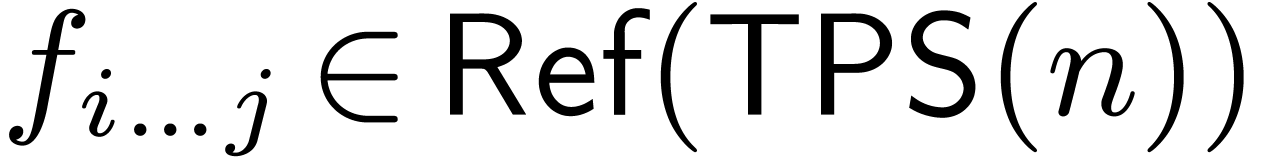

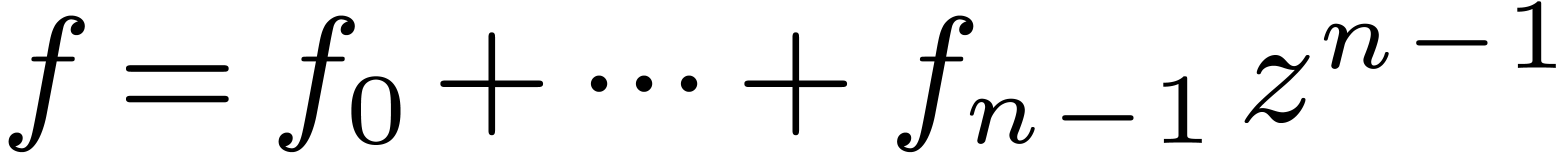

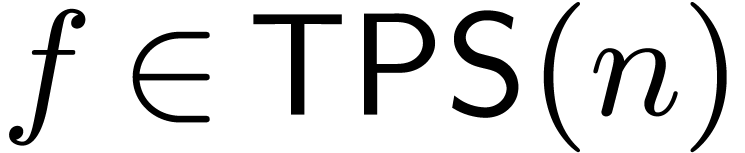

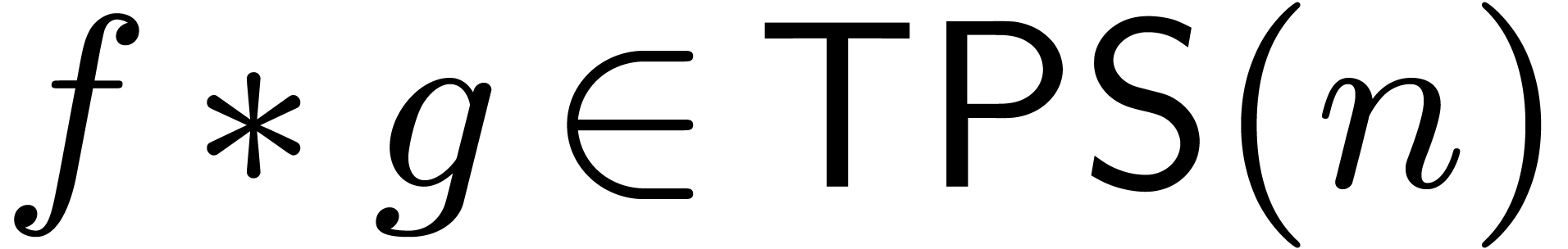

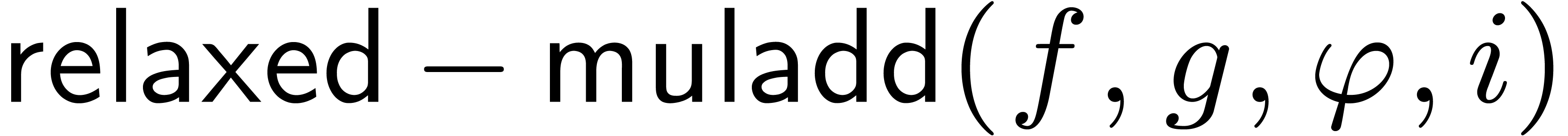

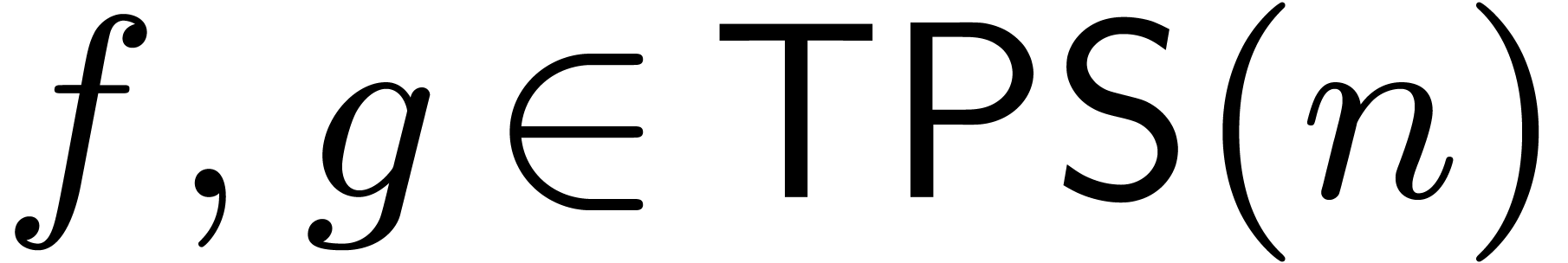

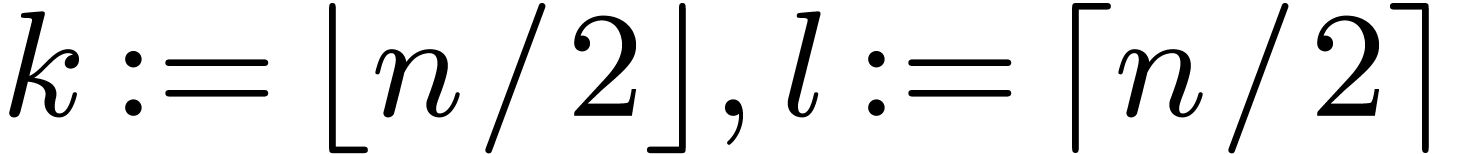

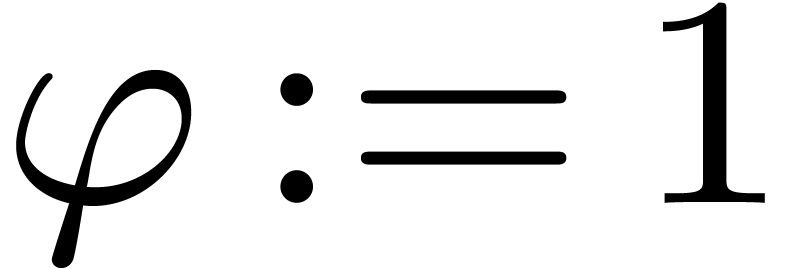

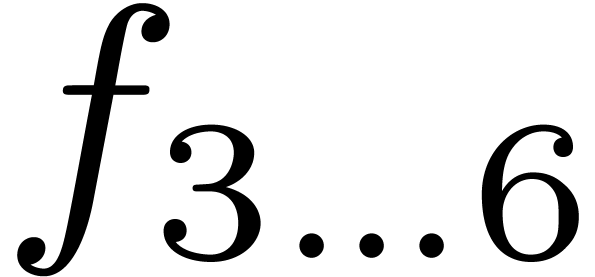

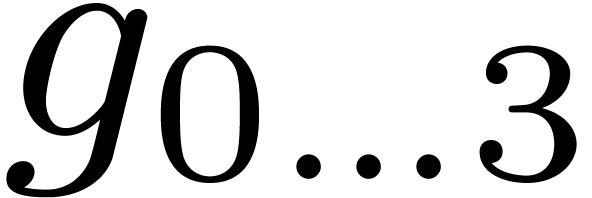

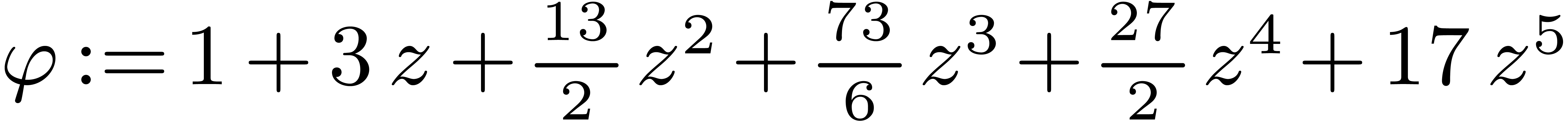

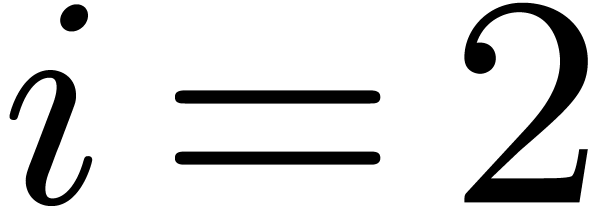

In our algorithms we will use the following notations: the data type

stands for truncated power series of order

stands for truncated power series of order  , like

, like  . Given

. Given  and

and  , we will denote

, we will denote  .

Given

.

Given  and

and  ,

we also denote

,

we also denote  . We will

denote by

. We will

denote by  the type, whose elements are

references to elements of type

the type, whose elements are

references to elements of type  .

If

.

If  and

and  ,

then we assume that

,

then we assume that  .

.

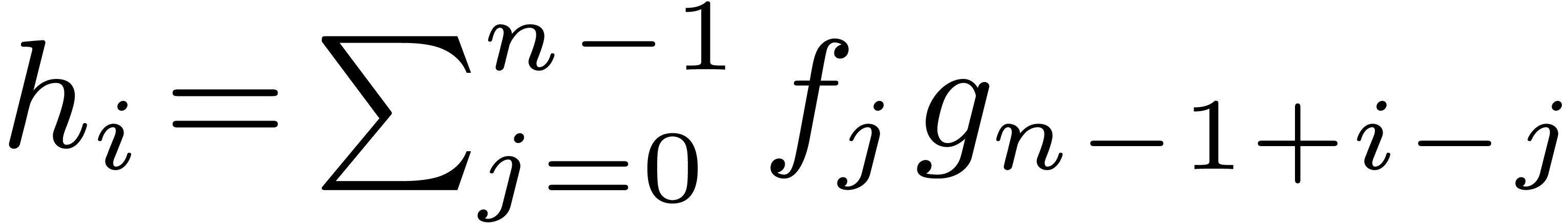

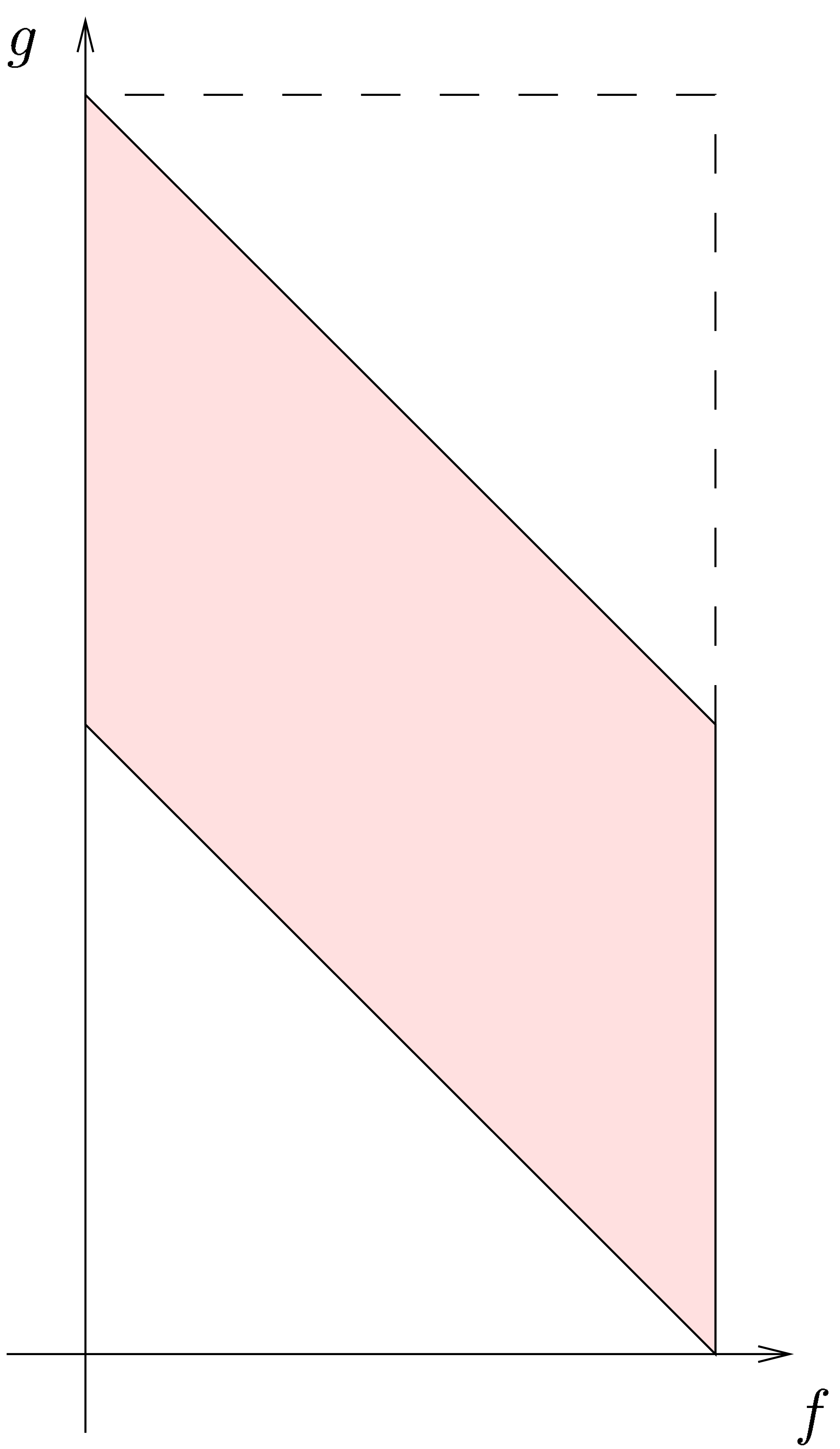

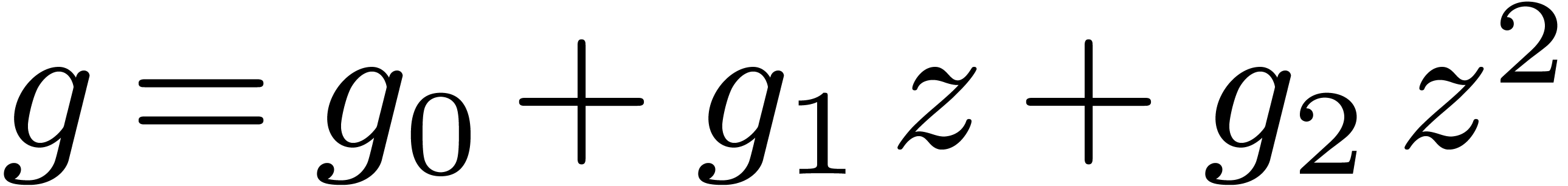

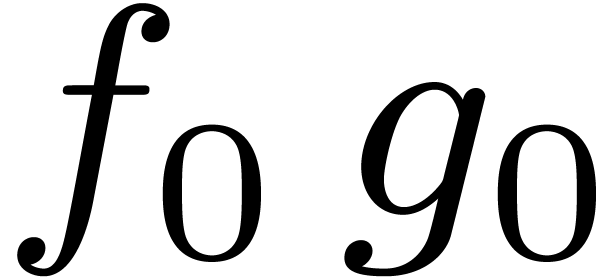

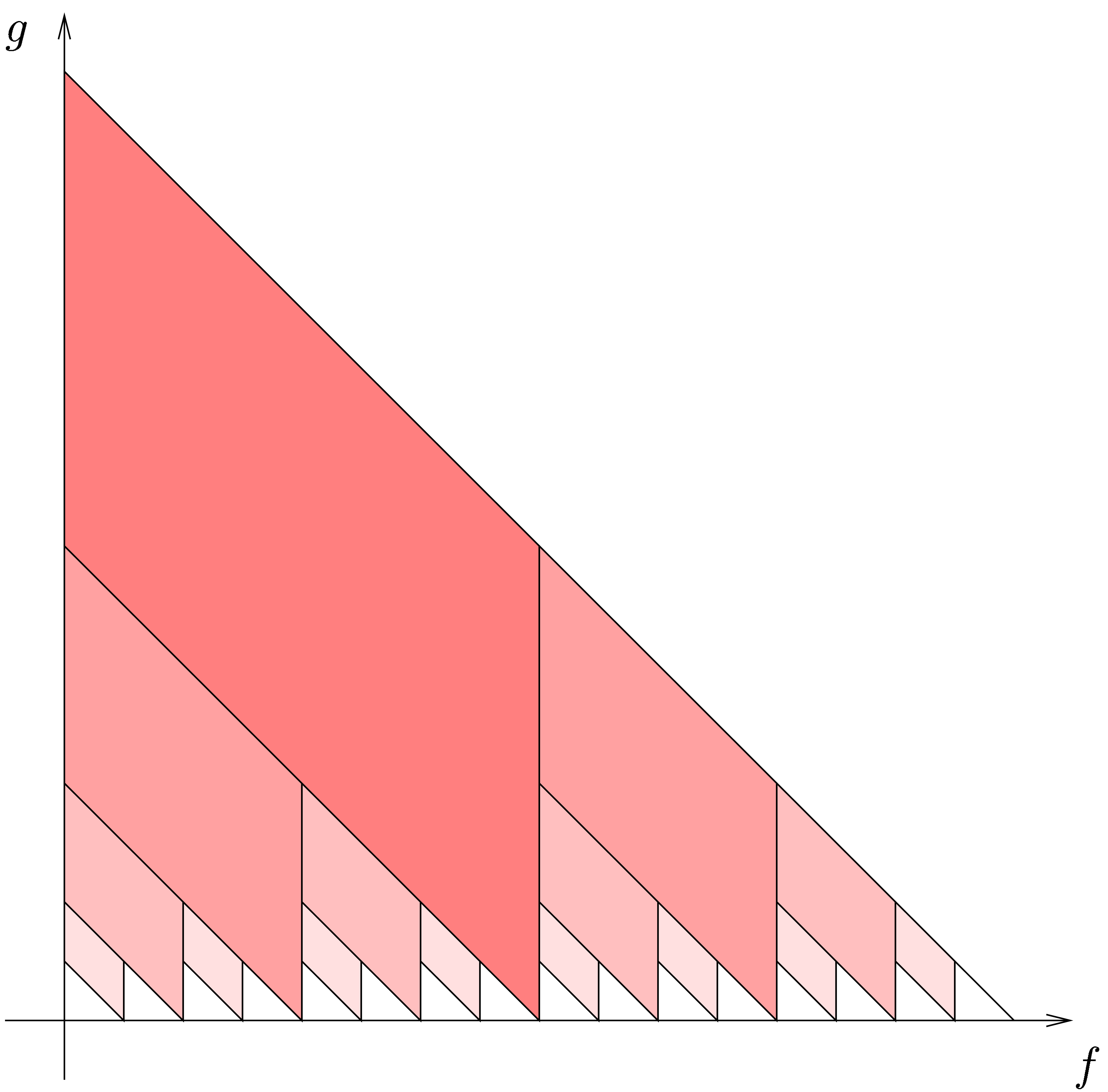

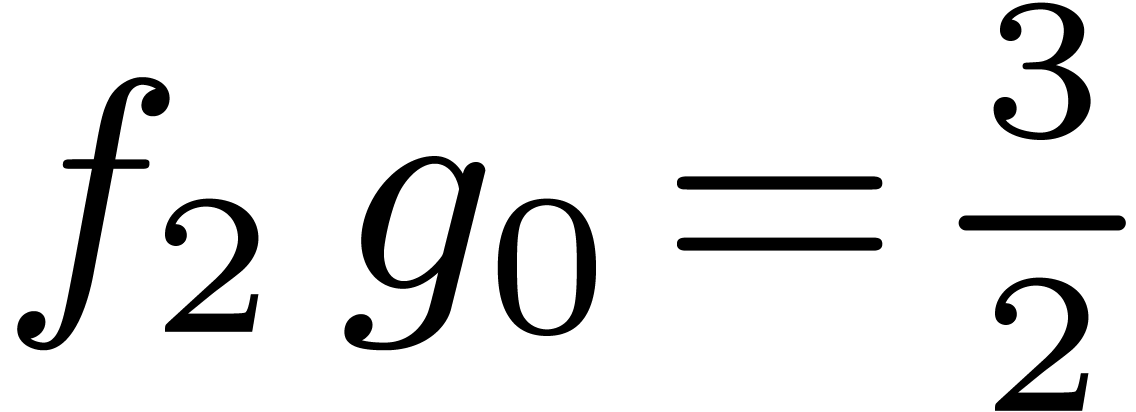

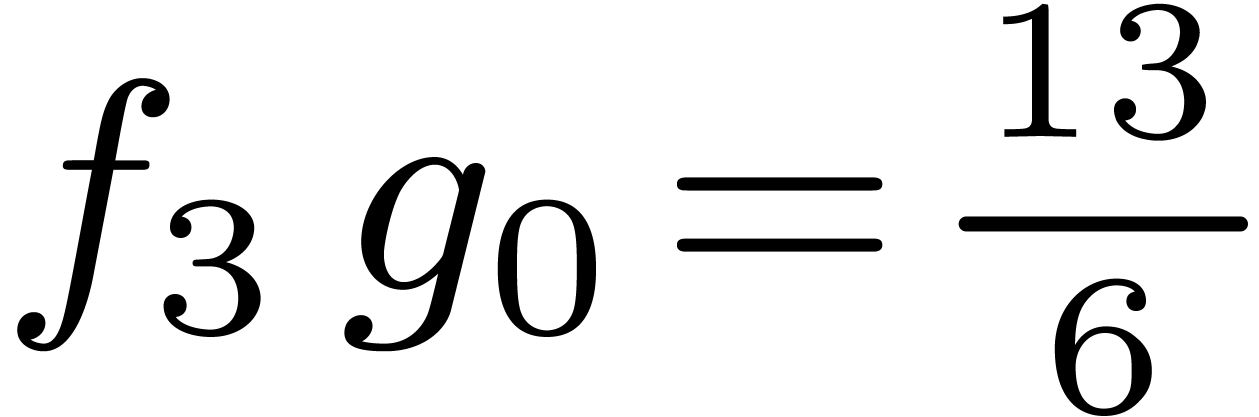

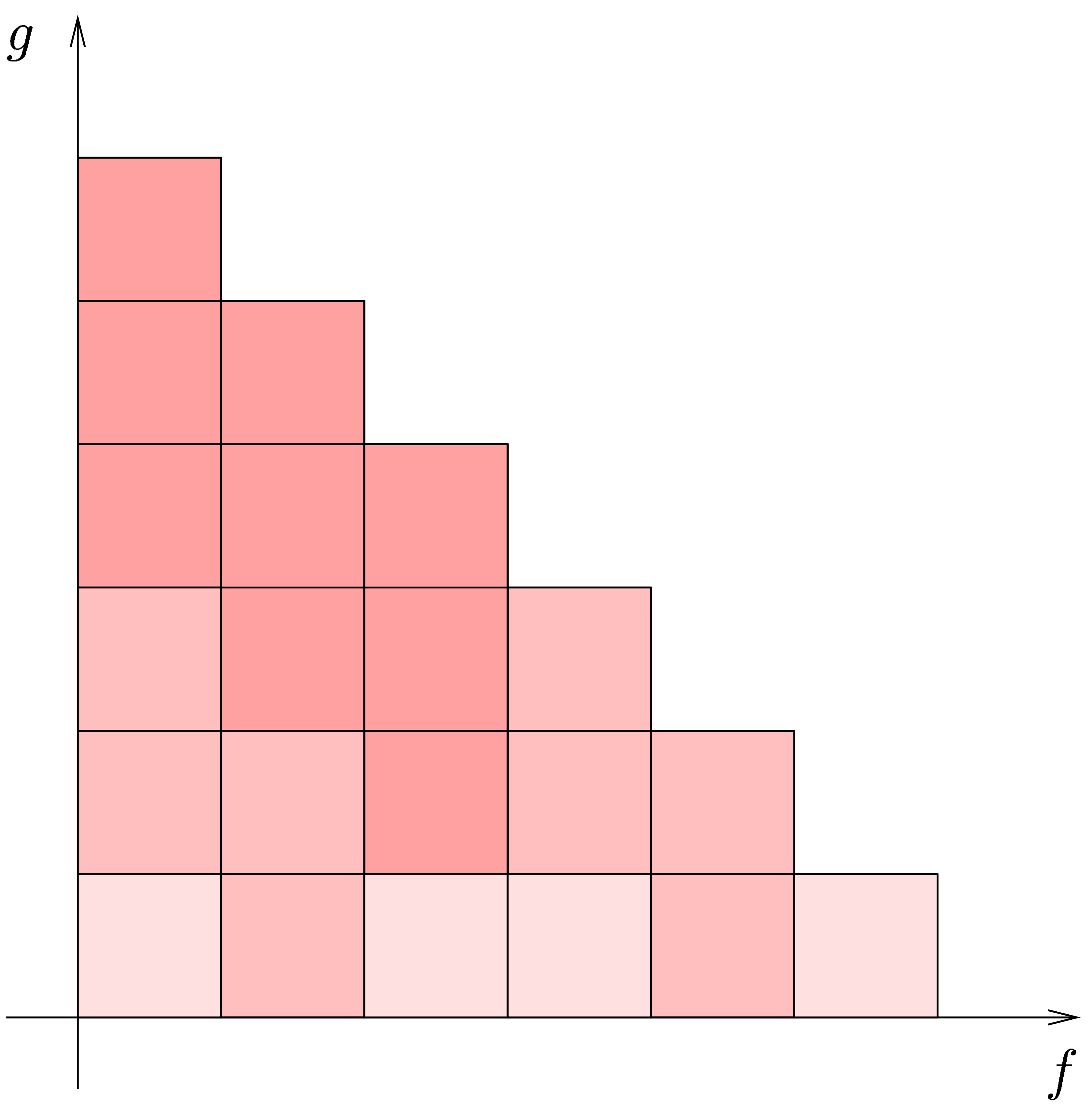

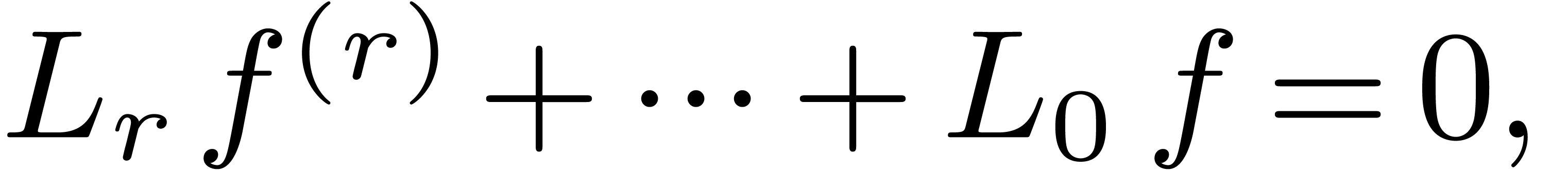

Let  and

and  be two truncated

power series at orders

be two truncated

power series at orders  resp.

resp.  . The middle product

. The middle product  and

and  is defined to be the

truncated power series

is defined to be the

truncated power series  of order

of order  , such that

, such that  for all

for all

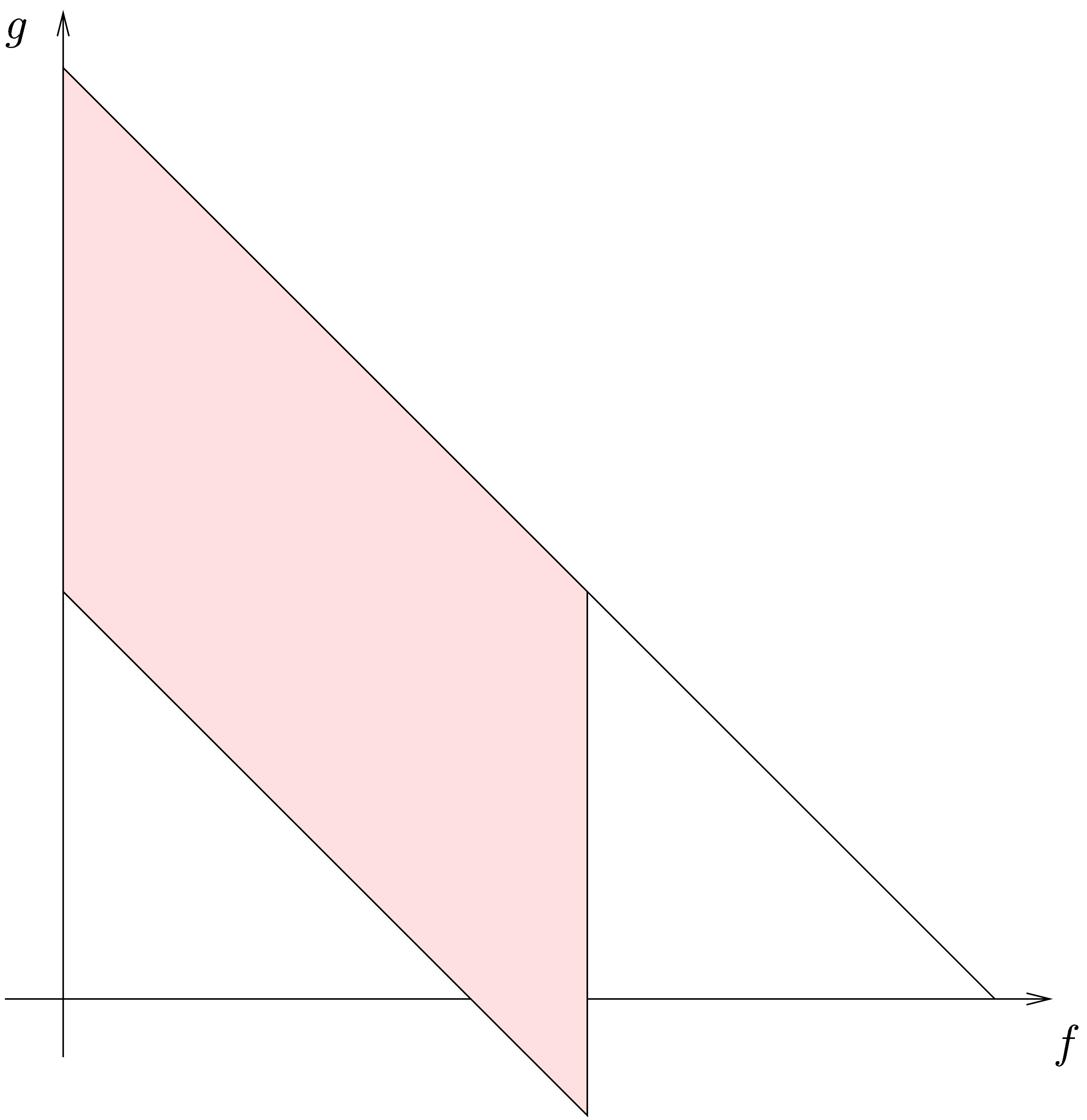

. In figure 1,

. In figure 1,

corresponds to the colored region.

corresponds to the colored region.

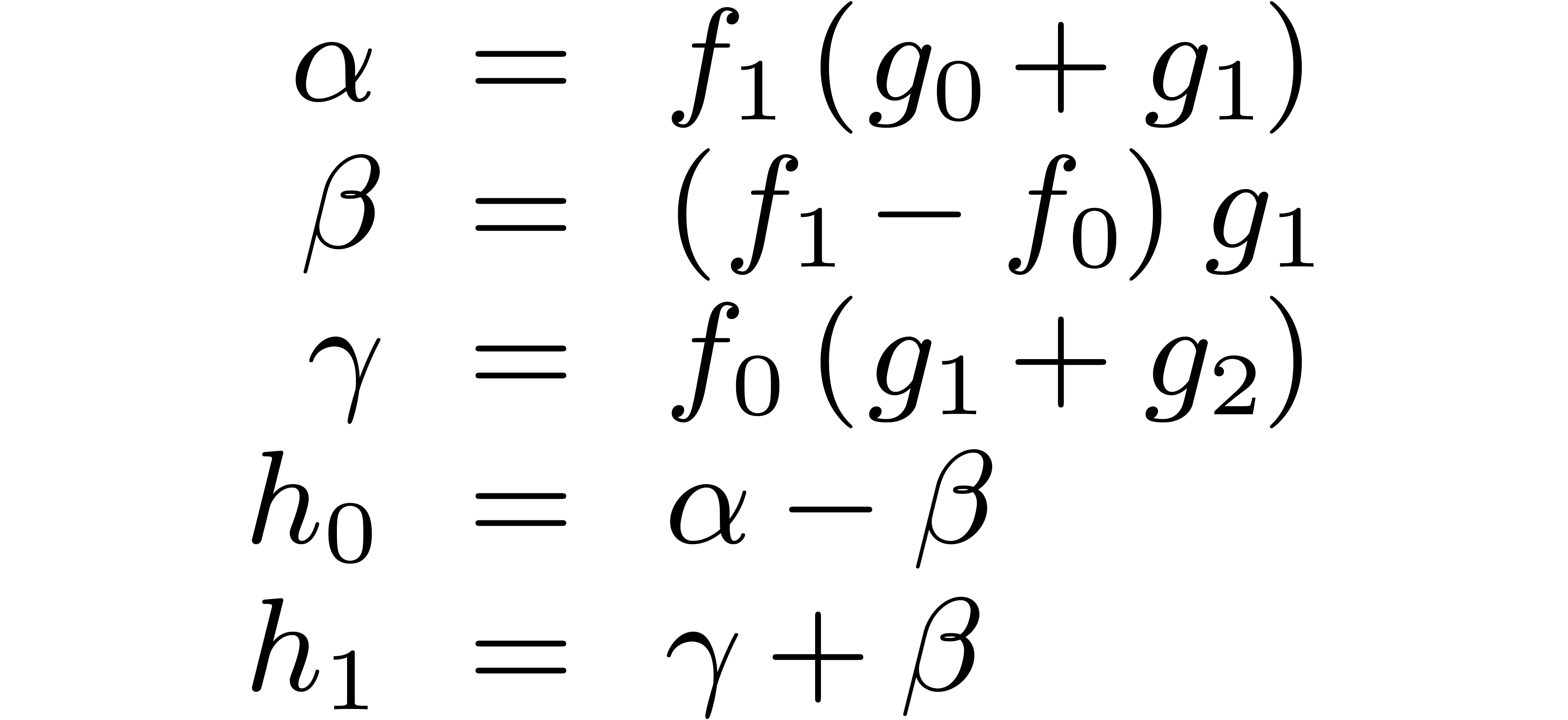

The middle product of  and

and  can be computed using only three multiplications, using the following

trick:

can be computed using only three multiplications, using the following

trick:

This trick may be applied recursively in order to yield an algorithm

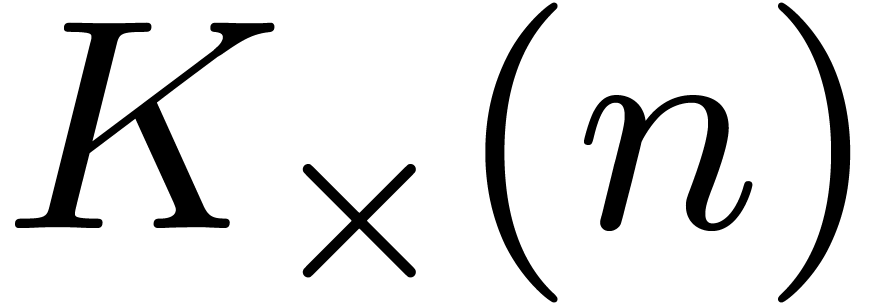

which needs exactly the same number of multiplications  as the divide and conquer algorithm for the computation of the product

of two polynomials of degree

as the divide and conquer algorithm for the computation of the product

of two polynomials of degree  .

More precisely, the following recursive algorithm comes from [HQZ00,

HQZ02].

.

More precisely, the following recursive algorithm comes from [HQZ00,

HQZ02].

Algorithm

Input  and

and

Output their middle product

then

return

then

return

is even

is even

then

else

In [HQZ02] it is also shown that, in the F.F.T. model, the middle product can still be computed in essentially the same time as the product of two polynomials.

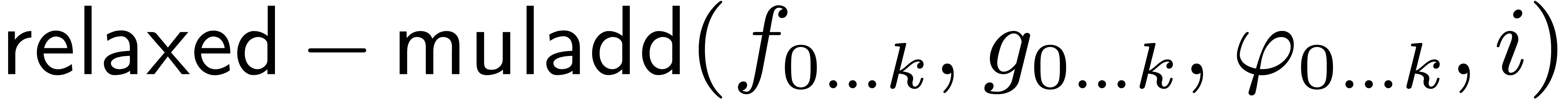

Let  and

and  be power series,

such that

be power series,

such that  is known up to order

is known up to order  . In this section, we present an algorithm

which computes the product

. In this section, we present an algorithm

which computes the product  up to order

up to order  . For each

. For each  , the algorithm outputs

, the algorithm outputs  as

soon as

as

soon as  are known.

are known.

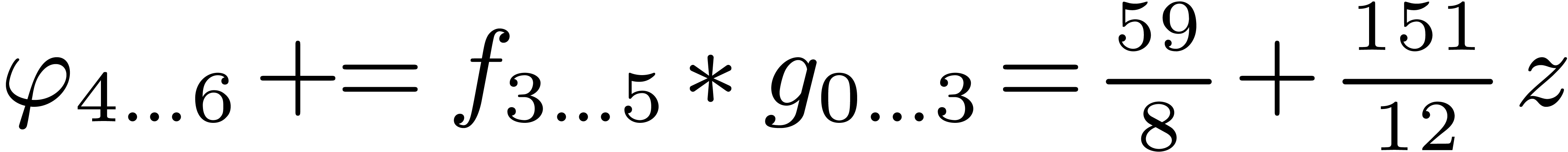

The idea of our algorithm is similar to the idea behind fast relaxed multiplication in [vdH97, vdH02] and based on a subdivision of the triangular area which corresponds to the computation of the truncated power series product. This subdivision is shown in figures 2 and 3, where each parallelogram corresponds to the computation of a middle product.

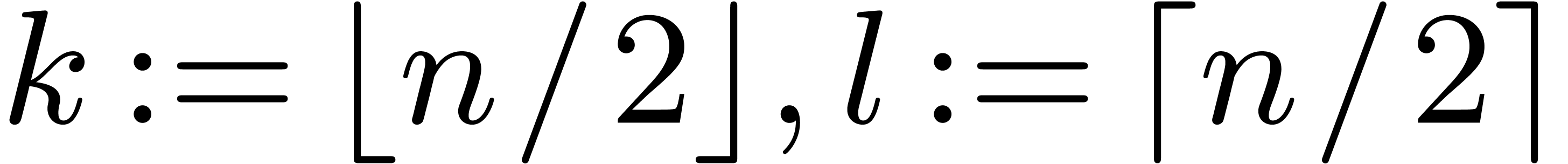

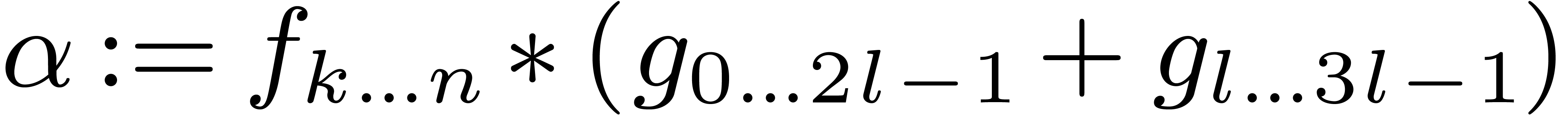

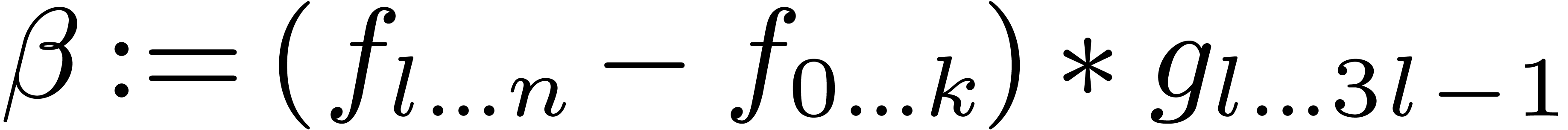

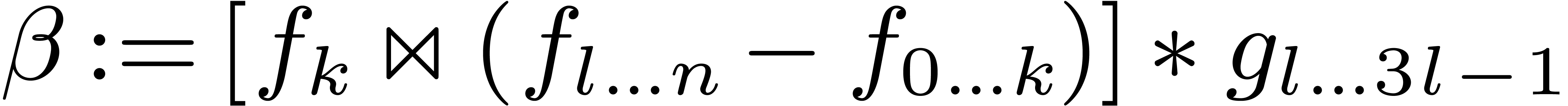

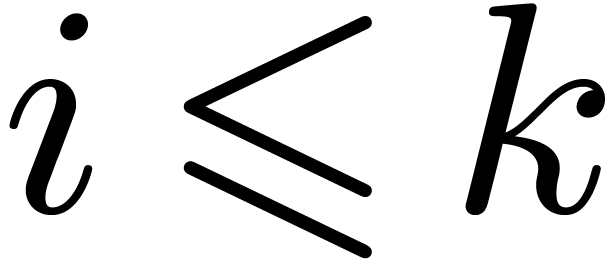

More precisely, let  and assume that

and assume that  are known. Then the contribution of

are known. Then the contribution of  to

to  may be computed using the middle product

algorithm from the previous section. The relaxed truncated products

may be computed using the middle product

algorithm from the previous section. The relaxed truncated products  and

and  may be computed

recursively.

may be computed

recursively.

In order to implement this idea, we will use an in-place algorithm,

which adds the result of  to a reference

to a reference  to an element of

to an element of  .

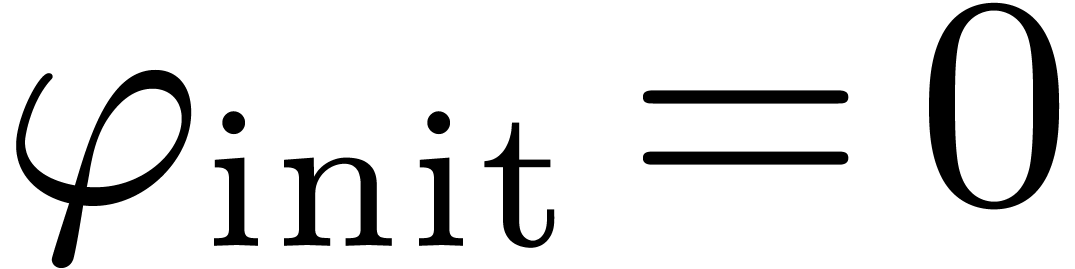

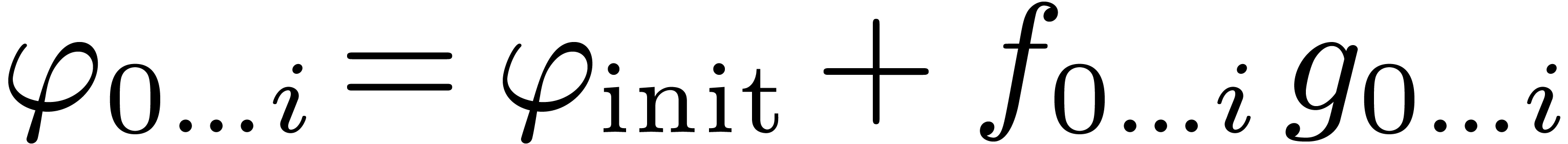

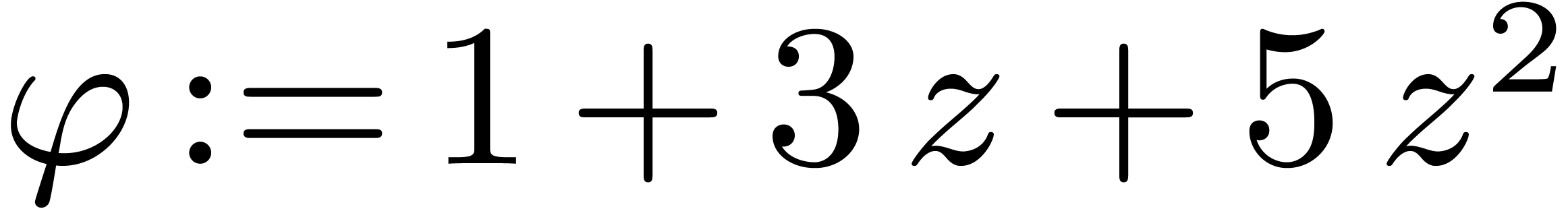

Denote by

.

Denote by  the initial value of

the initial value of  . Then the in-place algorithm should be called

successively for

. Then the in-place algorithm should be called

successively for  . After the

last call, we have

. After the

last call, we have  . Taking

. Taking

, the algorithm computes

, the algorithm computes

.

.

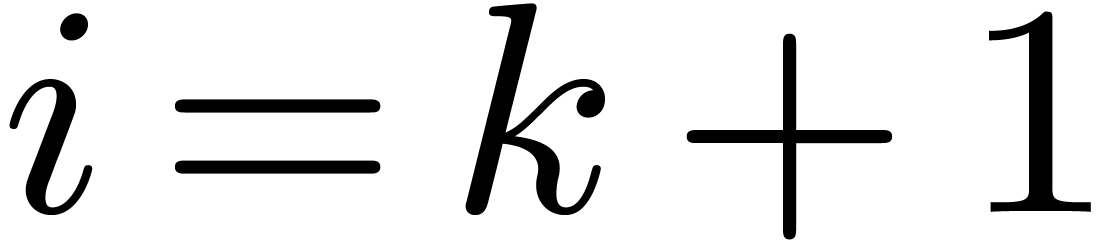

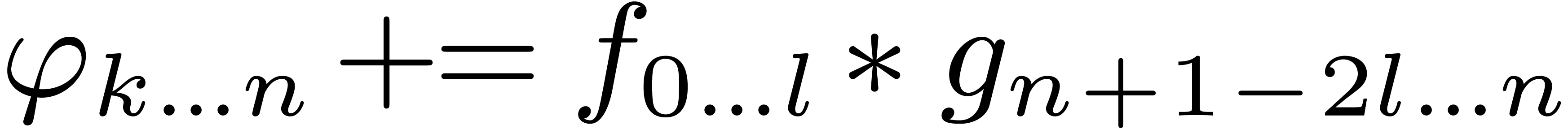

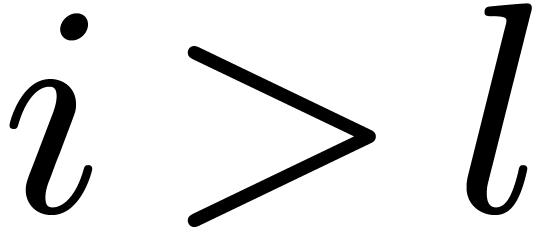

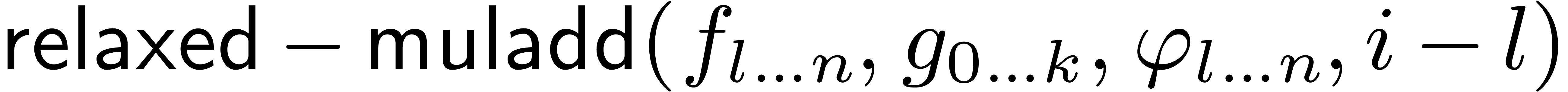

Algorithm

Input  ,

,

,

,  .

.

Action we have  on exit.

on exit.

then

then  and return

and return

then

then

then

then

then

then

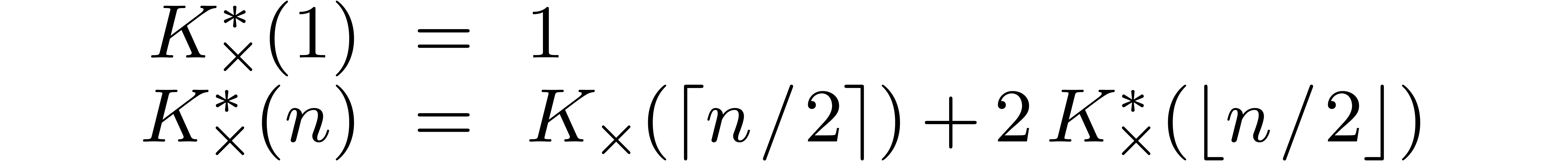

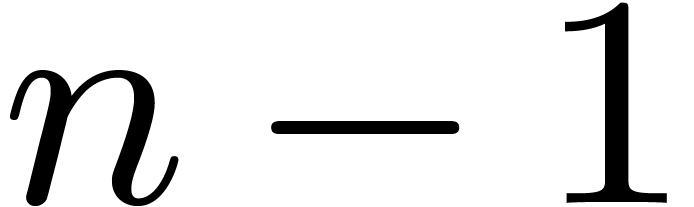

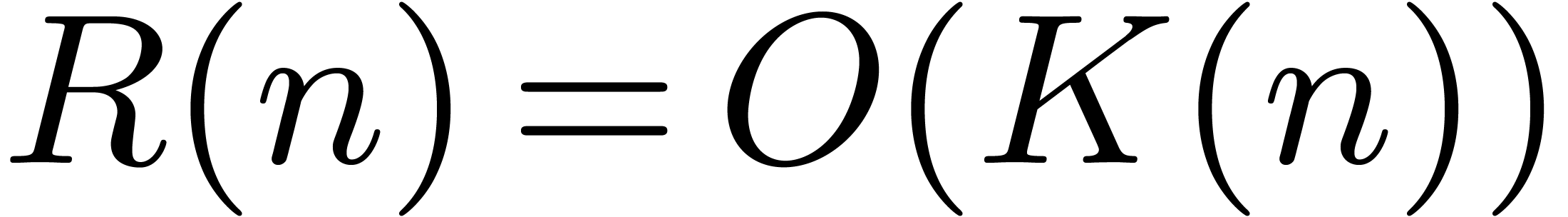

The number of multiplications  used by

used by  is determined by the relations

is determined by the relations

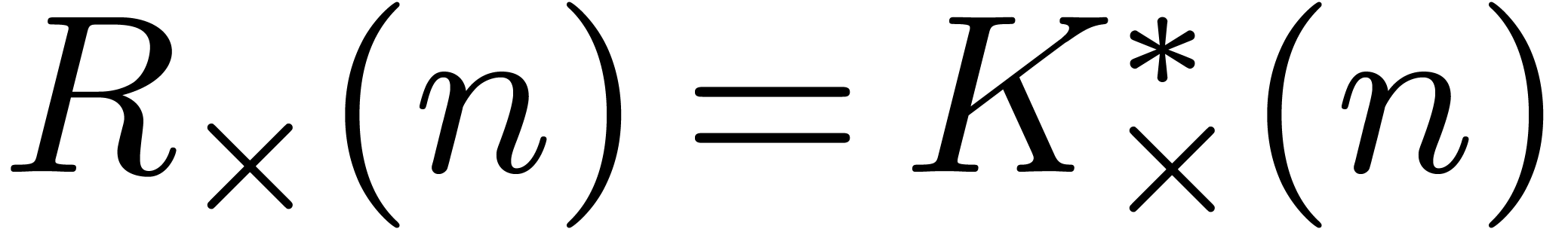

By induction, it follows that  .

The overall time complexity satisfies

.

The overall time complexity satisfies

so  . The algorithm being

in-place, its space complexity is clearly

. The algorithm being

in-place, its space complexity is clearly  .

This proves theorem 3.

.

This proves theorem 3.

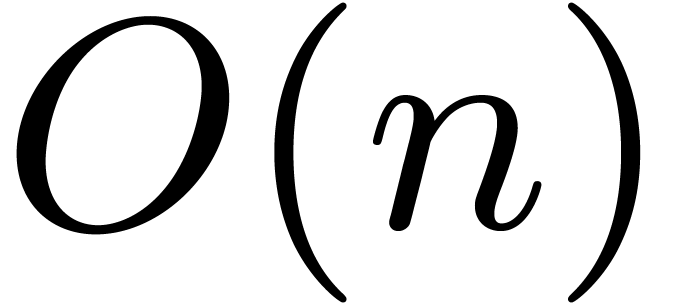

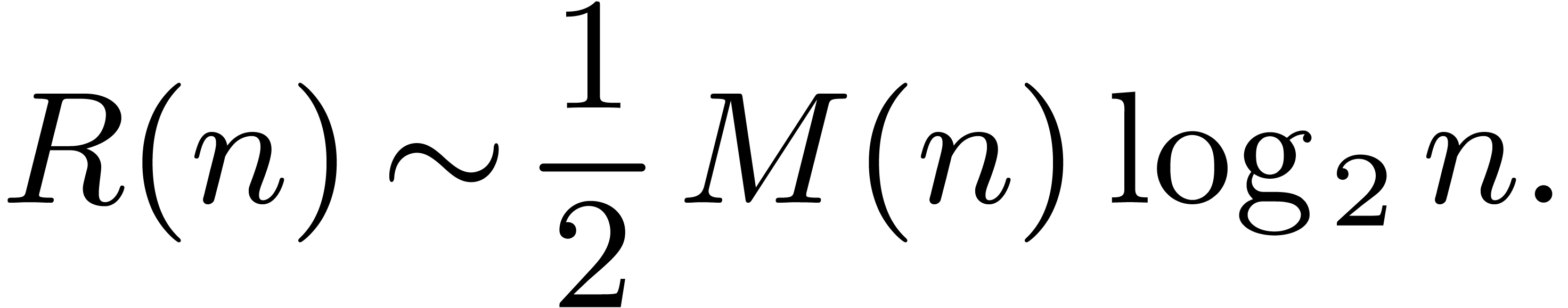

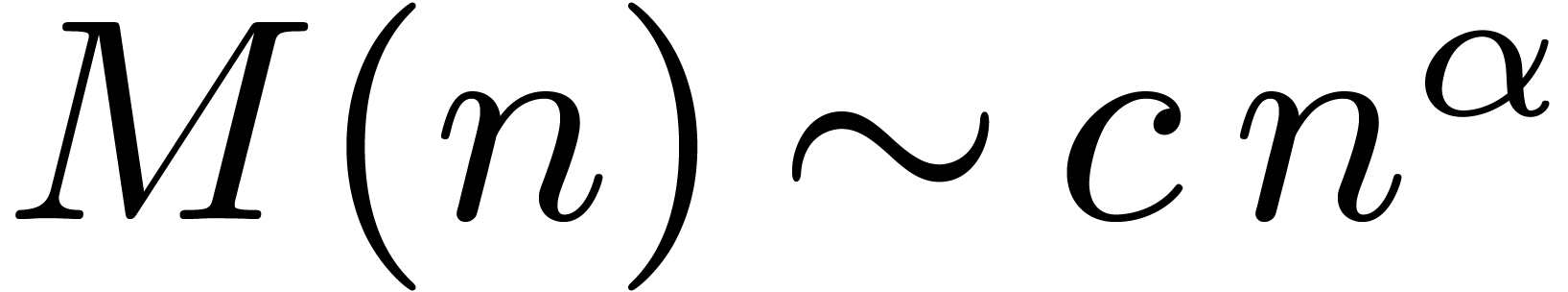

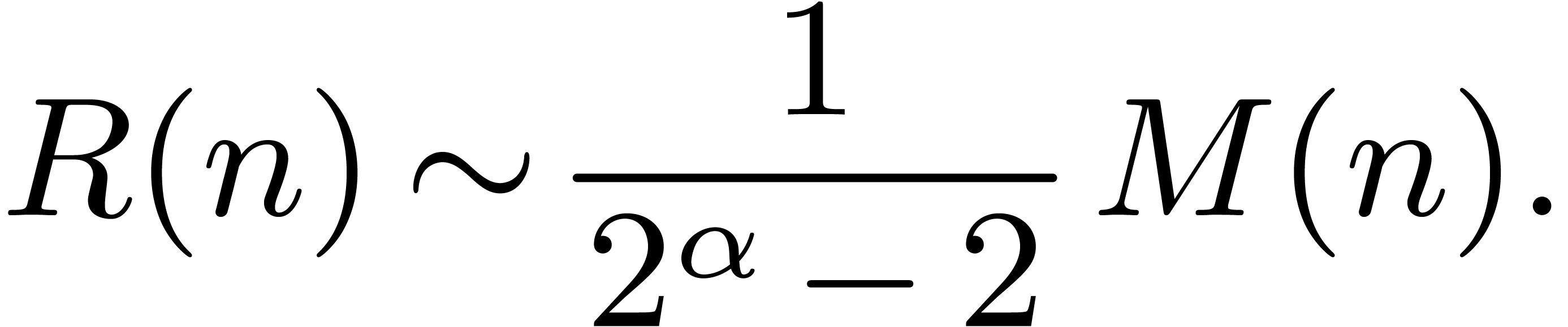

In it is also interesting to use the above algorithm in the F.F.T. model. We then have the estimation

for the asymptotic complexity  .

If

.

If  , this yields

, this yields

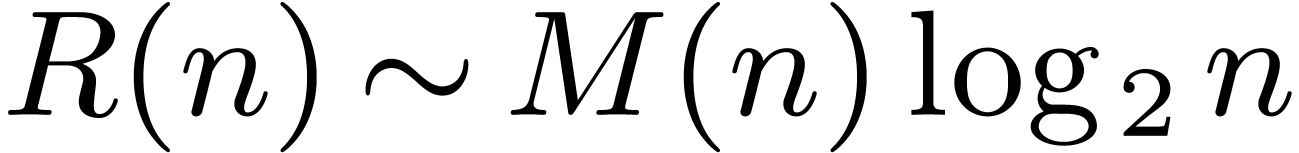

This should be compared with the complexity  of

the previously best algorithm and with the complexity

of

the previously best algorithm and with the complexity  of the standard fast relaxed multiplication algorithm.

of the standard fast relaxed multiplication algorithm.

Notice that we rarely obtain the complexity  in

practice. In the range where

in

practice. In the range where  ,

we obtain

,

we obtain

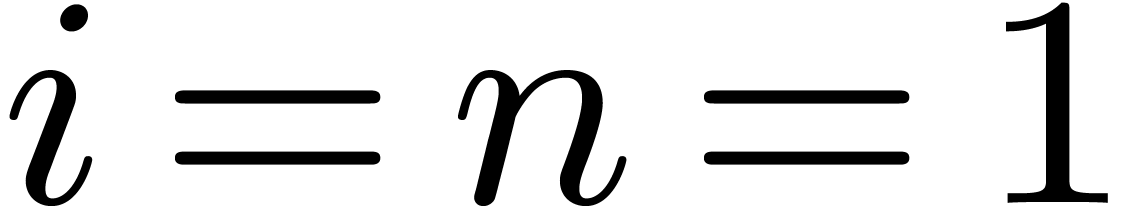

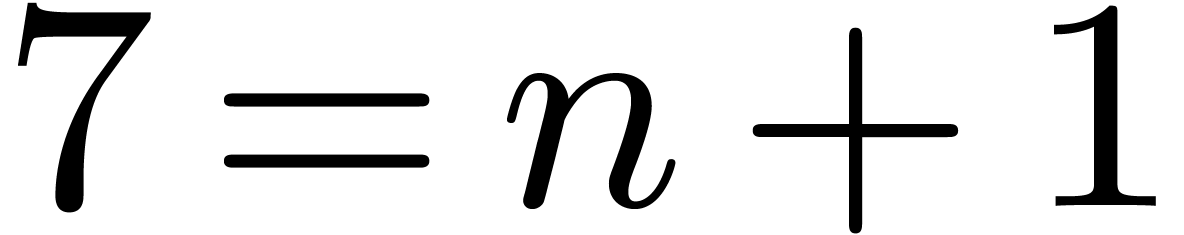

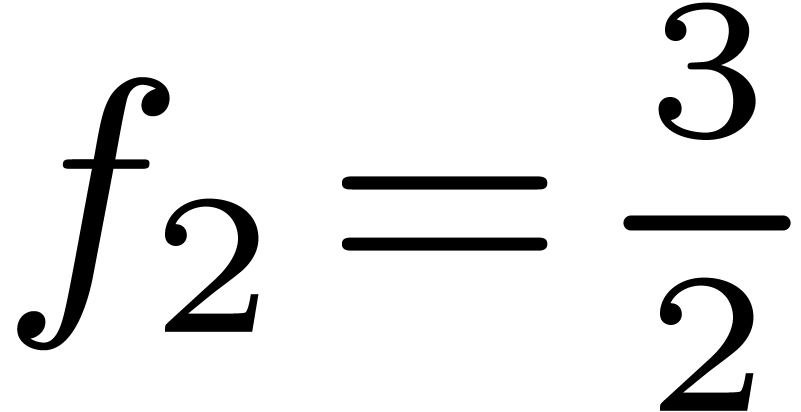

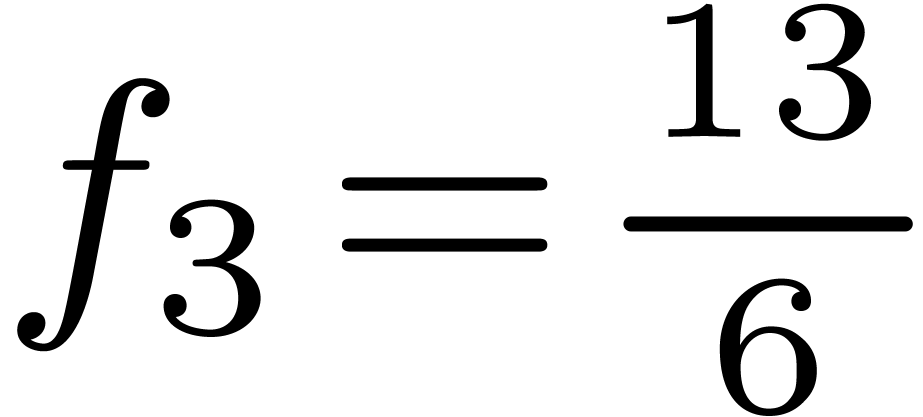

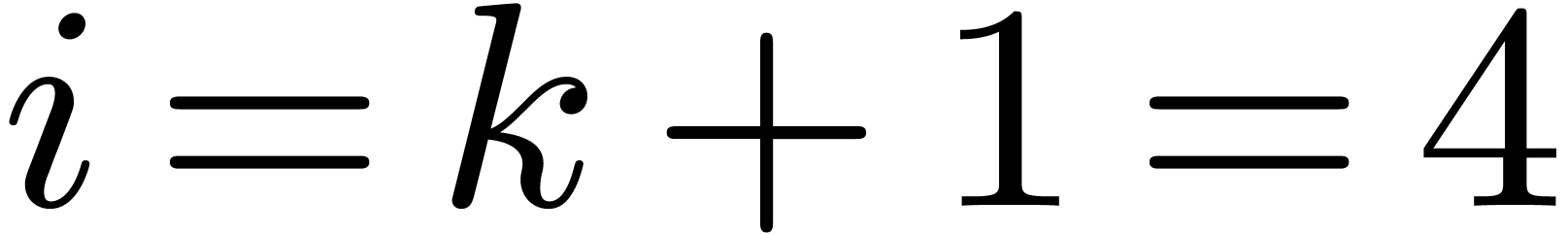

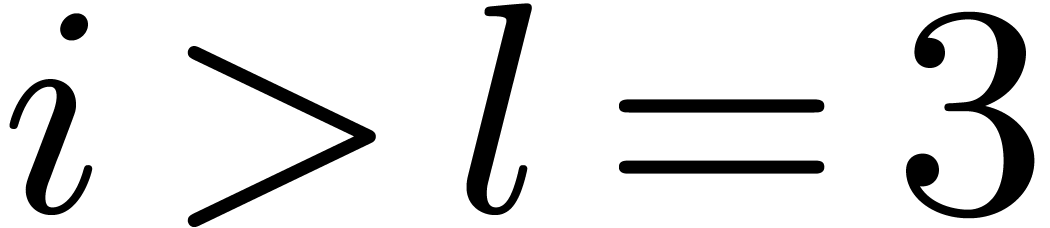

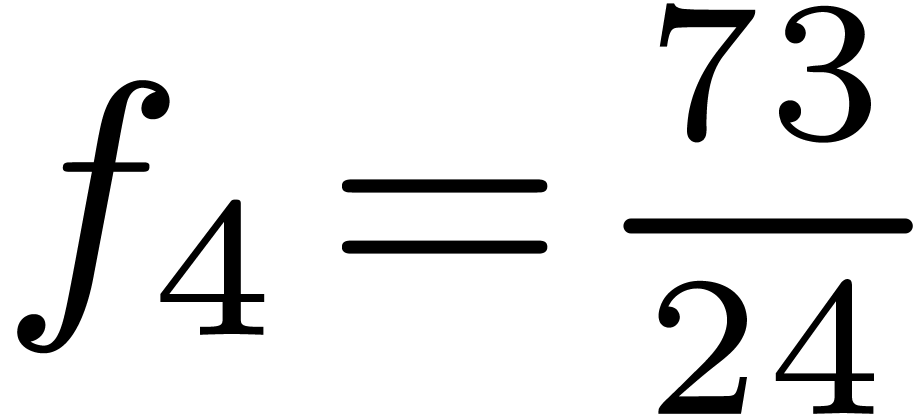

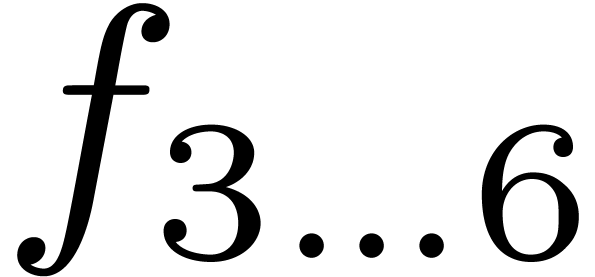

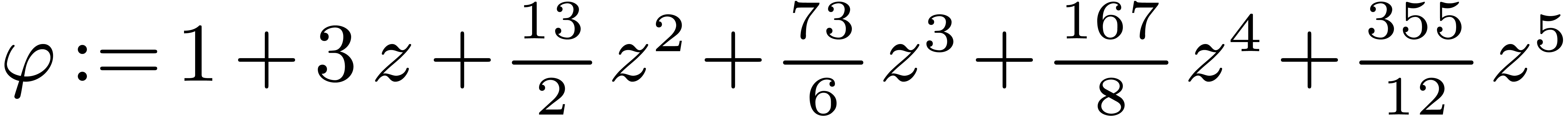

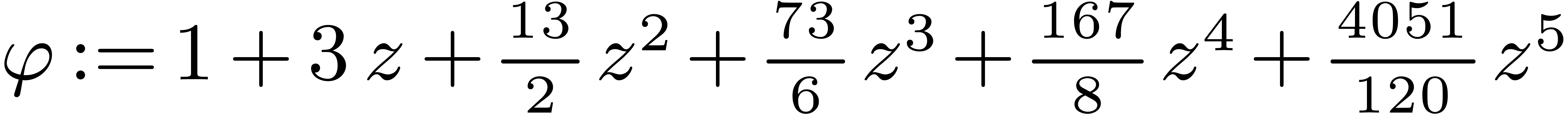

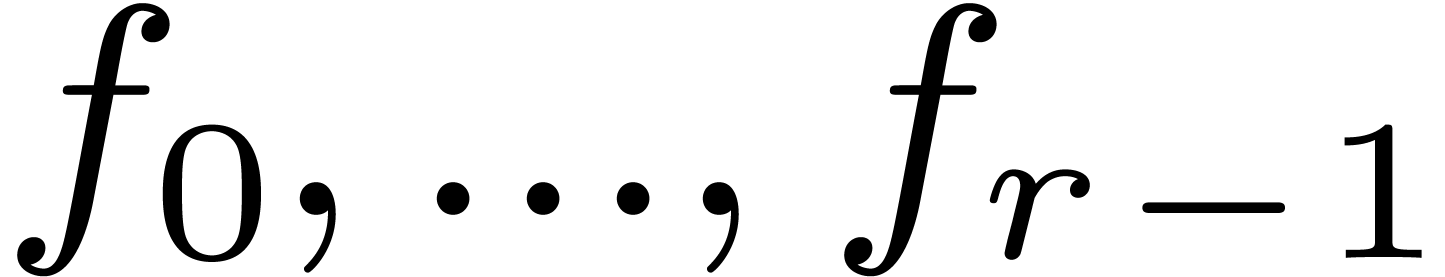

Let us consider the computation of  up till order

up till order

using our algorithm and the formula

using our algorithm and the formula

with  and

and  .

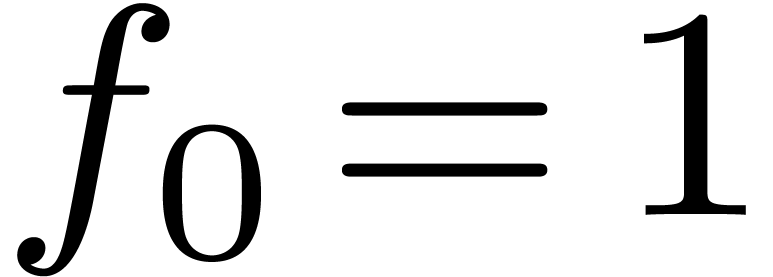

We start with

.

We start with  in

in  and

perform the following computations at successive calls for

and

perform the following computations at successive calls for  :

:

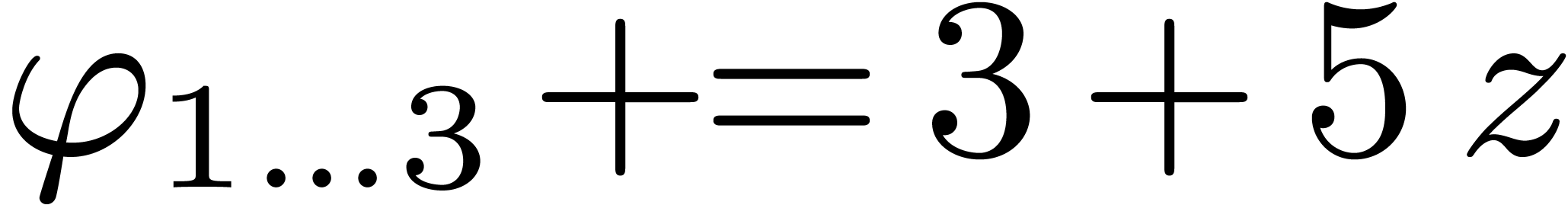

We set  , so that

, so that

and  .

.

We recursively apply  to

to  ,

,  ,

,

and

and  .

This requires the computation of

.

This requires the computation of  .

We thus increase

.

We thus increase  , so

that

, so

that

and  .

.

The two nested recursive calls to  now lead

to the increase of

now lead

to the increase of  by

by  , so that

, so that

and  .

.

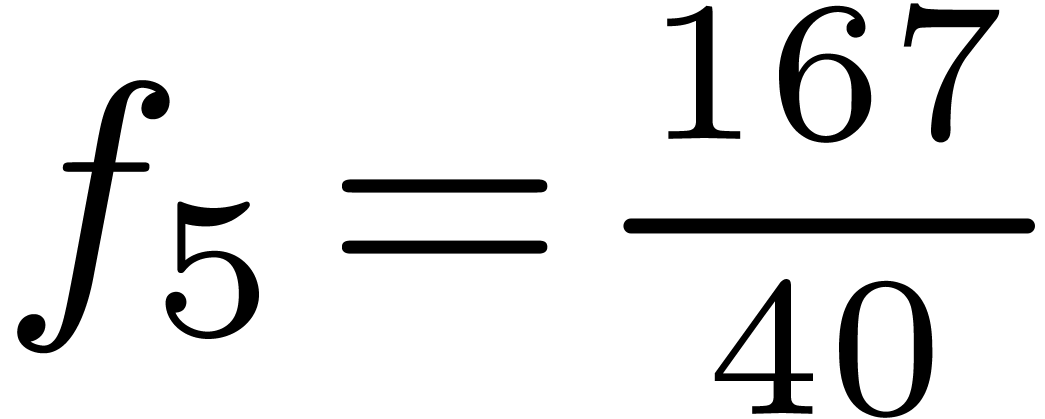

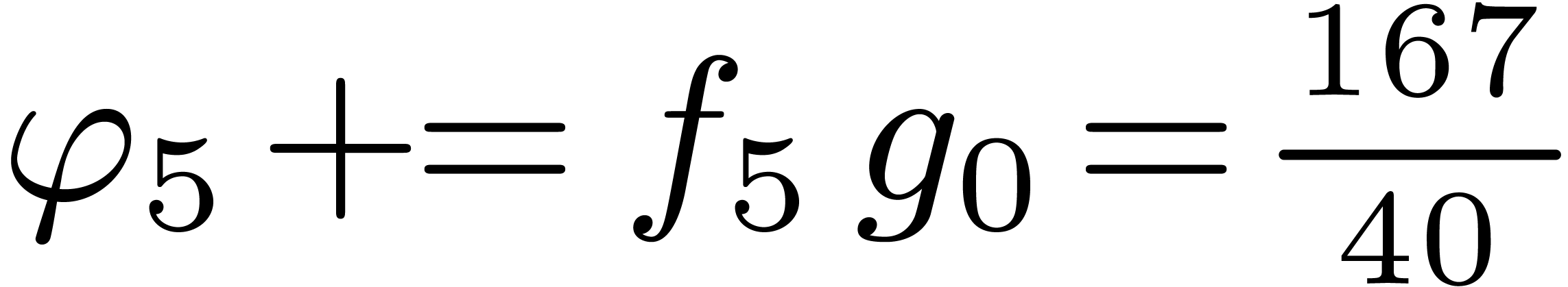

We now both have  and

and  . So we first compute

. So we first compute  and set

and set  . We next

recursively apply

. We next

recursively apply  to

to  ,

,  ,

,

and

and  ,

which leads to an increase of

,

which leads to an increase of  by

by  . Alltogether, we obtain

. Alltogether, we obtain

and  .

.

We recursively apply  to

to  ,

,  ,

,

and

and  .

This leads to the increase

.

This leads to the increase  ,

so that

,

so that

and  .

.

The two nested recursive calls lead to the increase  , so that

, so that

and  .

.

The entire computation is represented schematically in figure 3.

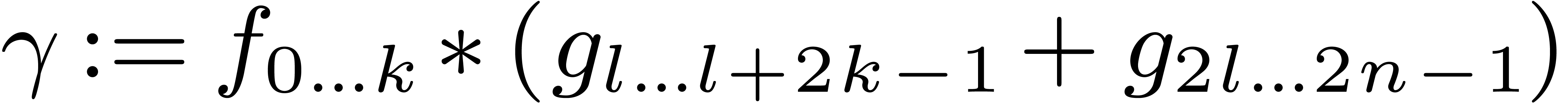

First of all, let us consider the problem of relaxed division by a fixed

power series. In other words, we are given two power series  and

and  , where

, where

is known up to order

is known up to order  and

and

. We want an algorithm for

the computation of

. We want an algorithm for

the computation of  up to order

up to order  , such that

, such that  is computed

as soon as

is computed

as soon as  are known for each

are known for each  . Now we have

. Now we have

where  . We may thus compute

. We may thus compute

in a relaxed way using the algorithm from the

previous section. Computing

in a relaxed way using the algorithm from the

previous section. Computing  up till

up till  terms will then necessitate

terms will then necessitate  multiplications in

multiplications in  .

.

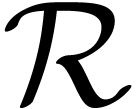

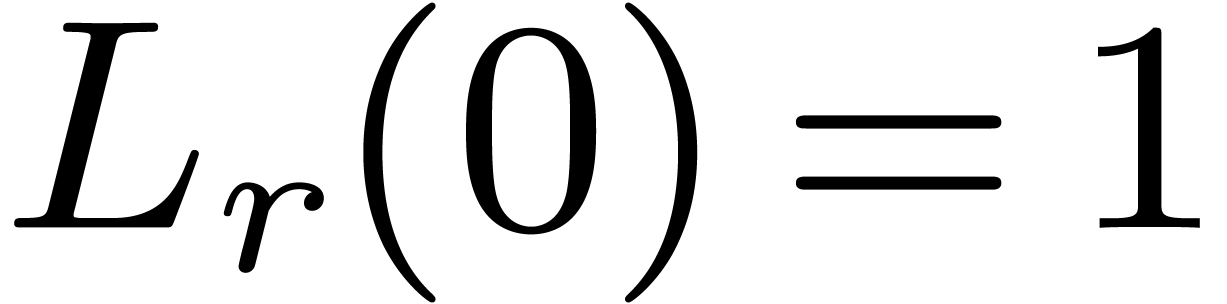

Let us next consider a linear differential equation

|

(2) |

with  and

and  .

Given initial conditions for

.

Given initial conditions for  ,

there exists a unique solution to this equation. We may compute this

solution using the relaxed algorithm from the previous section, the

above algorithm for relaxed division, and the formula

,

there exists a unique solution to this equation. We may compute this

solution using the relaxed algorithm from the previous section, the

above algorithm for relaxed division, and the formula

In order to compute  coefficients, we need to

perform

coefficients, we need to

perform  multiplications in

multiplications in  and

and  multiplications and divisions by integers.

If

multiplications and divisions by integers.

If  , then we only need

, then we only need  multiplications.

multiplications.

For instance, the exponential  of a series

of a series  with

with  satisfies the equation

satisfies the equation

so  can be computed using

can be computed using  multiplications, using the formula (1).

multiplications, using the formula (1).

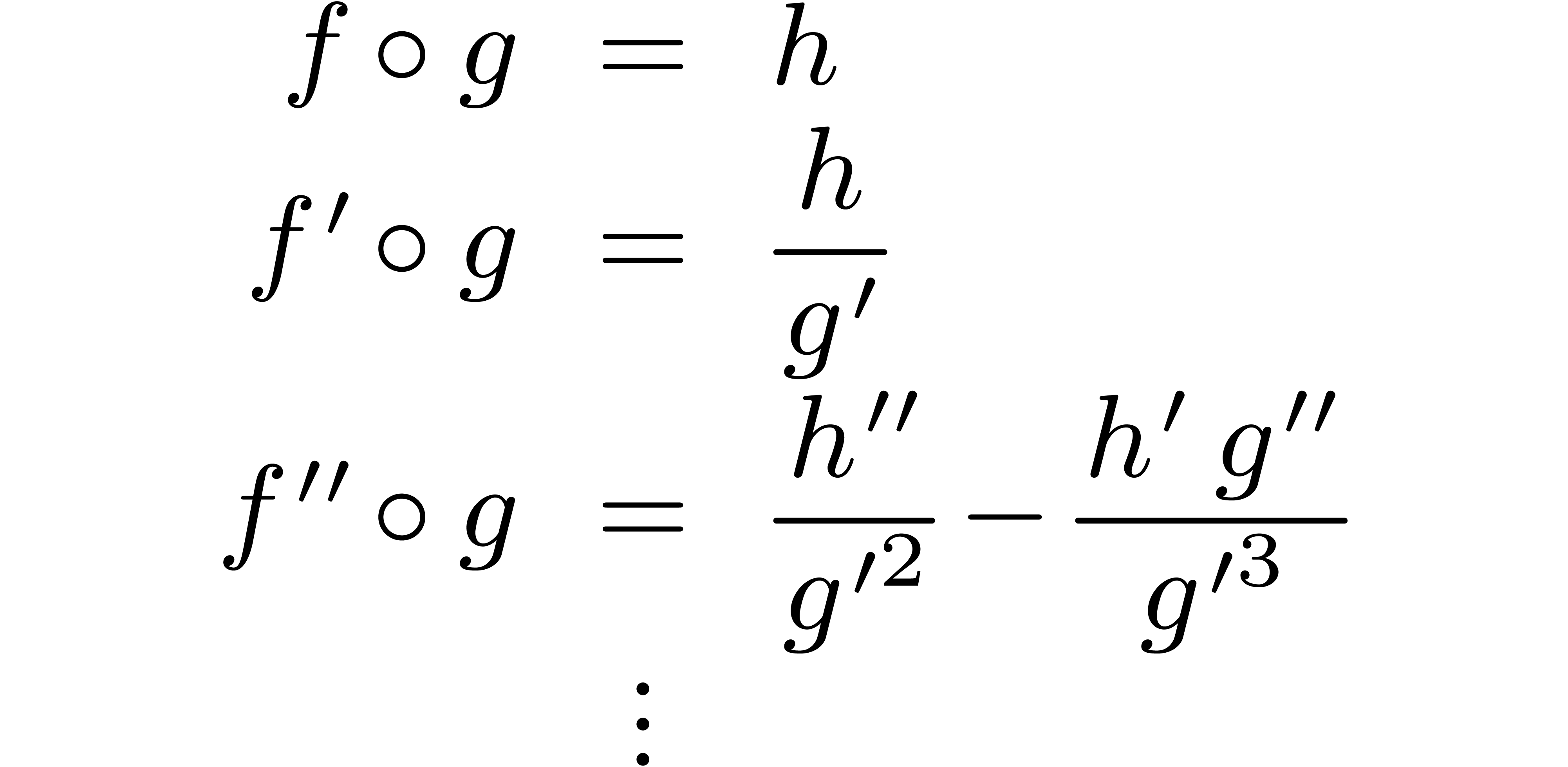

More generally, consider the solution to (2) with the

prescribed initial conditions, and let  be

another series with

be

another series with  . Then

the composition

. Then

the composition  again satisfies a linear

differential equation. Indeed, we have the relations

again satisfies a linear

differential equation. Indeed, we have the relations

Postcomposing (2) with  and using

these relations, we obtain a linear differential equation for

and using

these relations, we obtain a linear differential equation for  .

.

In fact, our algorithm may be used to solve far more general linear equations, such as linear partial differential equations, or linear differential-difference equations. In the case of difference equations, we notice that the relaxed multiplications in the algorithms from [vdH02] for relaxed right composition with a fixed series all have one fixed argument. So we may indeed apply the algorithm from section 3.

We finally notice that our algorithm can even be used in a non-linear

context. Indeed, after computing  coefficients of

a truncated relaxed product, the computation of the remaining products

reduces to the computation of two truncated relaxed products with one

fixed argument. Actually, this corresponds to an implicit application of

Newton's method.

coefficients of

a truncated relaxed product, the computation of the remaining products

reduces to the computation of two truncated relaxed products with one

fixed argument. Actually, this corresponds to an implicit application of

Newton's method.

We have presented a new algorithm for relaxed multiplication. Although the new algorithm does not yield a significant improvement from the asymptotic complexity point of view, we do expect it to be very useful for practical applications, such as the exponentiation of power series.

First of all, the algorithm is easy to implement. Secondly, it only needs a linear amount of memory in the range where divide and conquer multiplication is appropriate. In combination with F.F.T. multiplication, the algorithm yields a better constant factor in the asymptotic complexity.

When implementing a library for power series computations, it is interesting to incorporate a mechanism to automatically detect relaxed and fixed multiplicands in a complex computation. This is possible by examining the dependency graph. With such a mechanism, one may use the new algorithm whenever possible.

Some interesting questions remain open in the divide and conquer model:

can we apply Mulders' trick [Mul00, HZ02] for

the computation of “short products” in our setting while

maintaining the linear space complexity (see figure 4)? In

that case, we might improve the number of multiplications in theorem 3 to  .

.

In a similar vein, does there exist a relaxed multiplication algorithm

of time complexity  and linear space complexity?

This would be so, if the middle product algorithm could be made relaxed

in an in-place way (the algorithm is already “essentially

relaxed” in the sense of [vdH97, vdH02]

in the divide and conquer model).

and linear space complexity?

This would be so, if the middle product algorithm could be made relaxed

in an in-place way (the algorithm is already “essentially

relaxed” in the sense of [vdH97, vdH02]

in the divide and conquer model).

As it stands now, with the above questions still unanswered, the

original relaxed multiplication algorithm from theorem 1

remains best from the time complexity point of view in the divide and

conquer model. Moreover, Mulders' trick can be applied in this setting,

so as to yield a short relaxed multiplication algorithm of complexity

, or even better [HZ02].

, or even better [HZ02].

This has surprising consequences for the complexities of several

operations like short division and square roots: we obtain algorithms of

time complexities  and

and  when using

when using  space, while the best known

algorithms which use linear space have time complexities

space, while the best known

algorithms which use linear space have time complexities  and

and  . In order

to obtain the complexity of

. In order

to obtain the complexity of  in the case of

square roots, one should use a relaxed version of the fast squaring

algorithm from [HQZ02], which is based on middle products.

in the case of

square roots, one should use a relaxed version of the fast squaring

algorithm from [HQZ02], which is based on middle products.

We finally remark that this relaxed version of squaring using middle

products is also interesting in the F.F.T. model. In this

case, the relaxed middle product corresponds to a full relaxed product

with one fixed argument. Such products can be computed in time  , so that we obtain a relaxed

squaring algorithm of time complexity

, so that we obtain a relaxed

squaring algorithm of time complexity  .

This is twice as good as general relaxed multiplication. In the

non-relaxed setting, squares can be computed in a time between

.

This is twice as good as general relaxed multiplication. In the

non-relaxed setting, squares can be computed in a time between  and

and  , depending

on whether most time is spent on inner multiplications or fast Fourier

transforms respectively.

, depending

on whether most time is spent on inner multiplications or fast Fourier

transforms respectively.

D.G. Cantor and E. Kaltofen. On fast multiplication of polynomials over arbitrary algebras. Acta Informatica, 28:693–701, 1991.

J.W. Cooley and J.W. Tukey. An algorithm for the machine calculation of complex Fourier series. Math. Computat., 19:297–301, 1965.

Guillaume Hanrot, Michel Quercia, and Paul Zimmermann. Speeding up the division and square root of power series. Research Report 3973, INRIA, July 2000. Available from http://www.inria.fr/RRRT/RR-3973.html.

Guillaume Hanrot, Michel Quercia, and Paul Zimmermann. The middle product algorithm I. speeding up the division and square root of power series. Accepted for publication in AAECC, 2002.

Guillaume Hanrot and Paul Zimmermann. A long note on Mulders' short product. Research Report 4654, INRIA, December 2002. Available from http://www.loria.fr/ hanrot/Papers/mulders.ps.

D.E. Knuth. The Art of Computer Programming, volume 2: Seminumerical Algorithms. Addison-Wesley, 3-rd edition, 1997.

A. Karatsuba and J. Ofman. Multiplication of multidigit numbers on automata. Soviet Physics Doklady, 7:595–596, 1963.

T. Mulders. On short multiplication and division. AAECC, 11(1):69–88, 2000.

A. Schönhage and V. Strassen. Schnelle Multiplikation grosser Zahlen. Computing 7, 7:281–292, 1971.

J. van der Hoeven. Lazy multiplication of formal power series. In W. W. Küchlin, editor, Proc. ISSAC '97, pages 17–20, Maui, Hawaii, July 1997.

J. van der Hoeven. Relax, but don't be too lazy. JSC, 34:479–542, 2002.