From implicit to recursive

equations |

|

| August 30, 2018 |

|

. This work has

been supported by the ANR-09-JCJC-0098-01

. This work has

been supported by the ANR-09-JCJC-0098-01

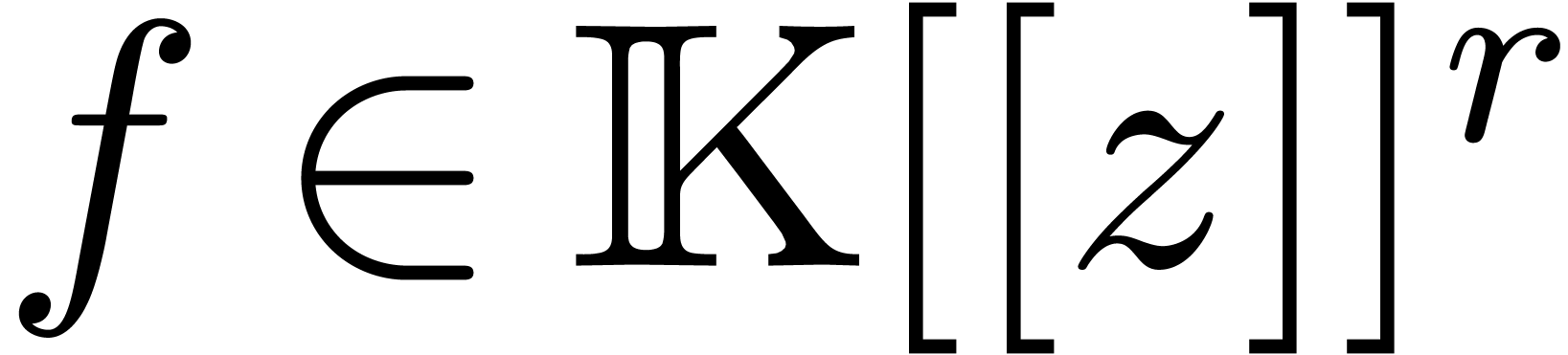

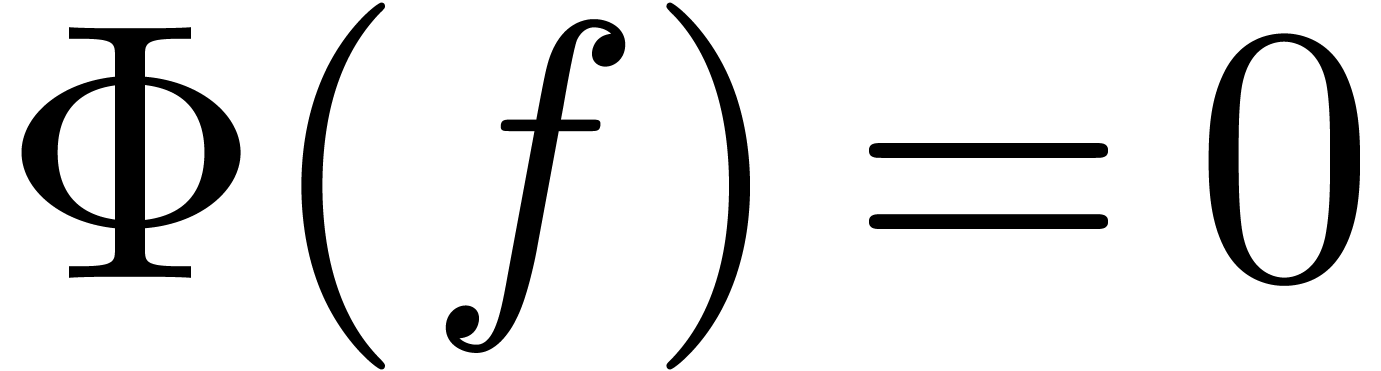

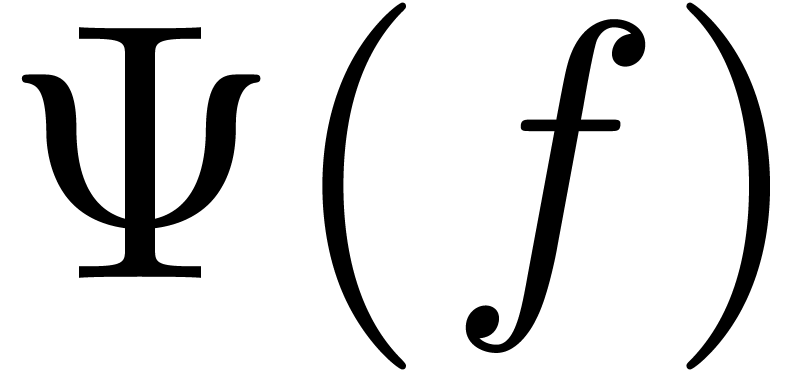

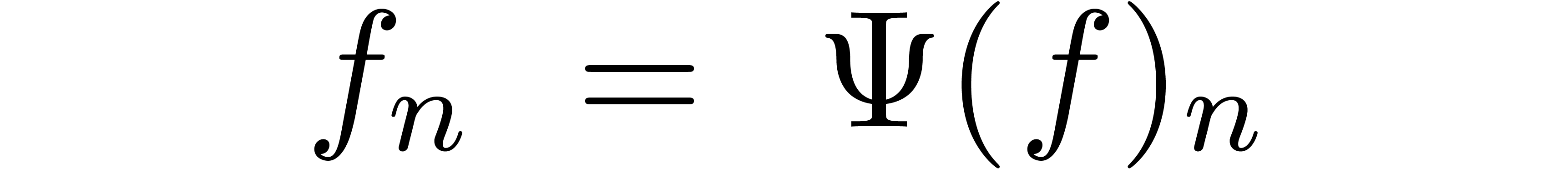

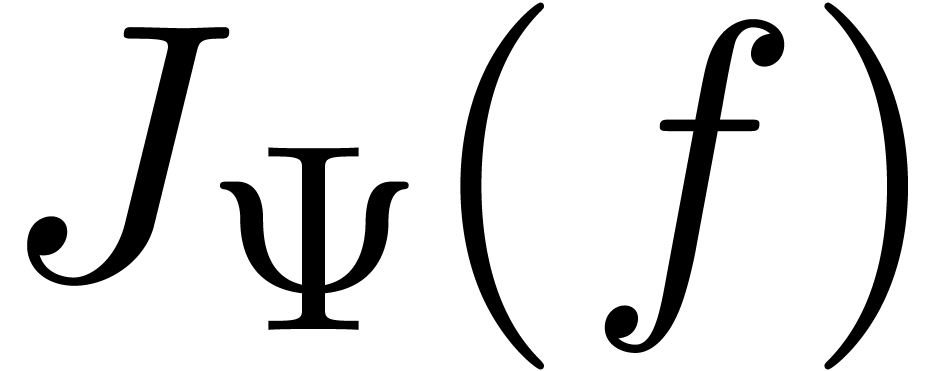

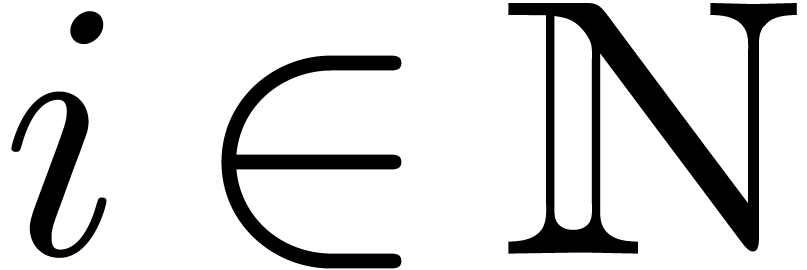

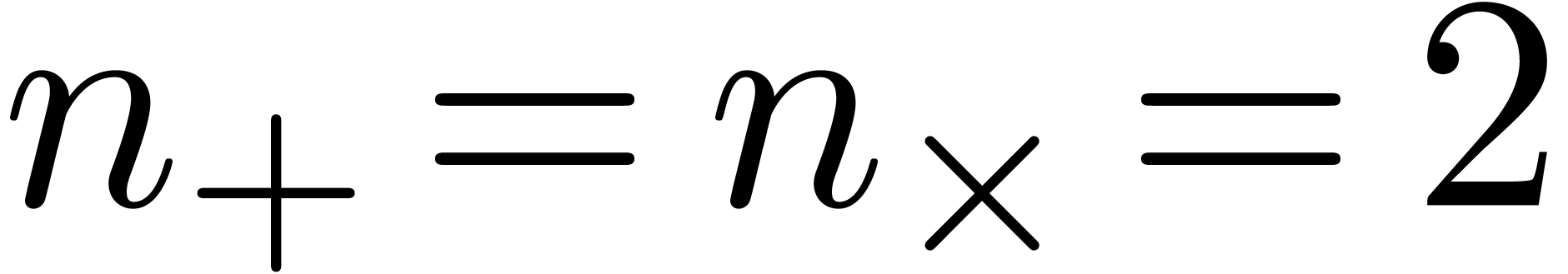

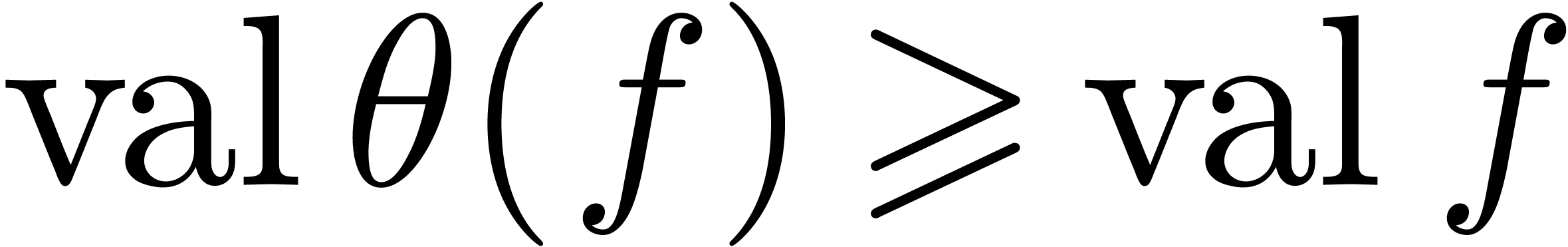

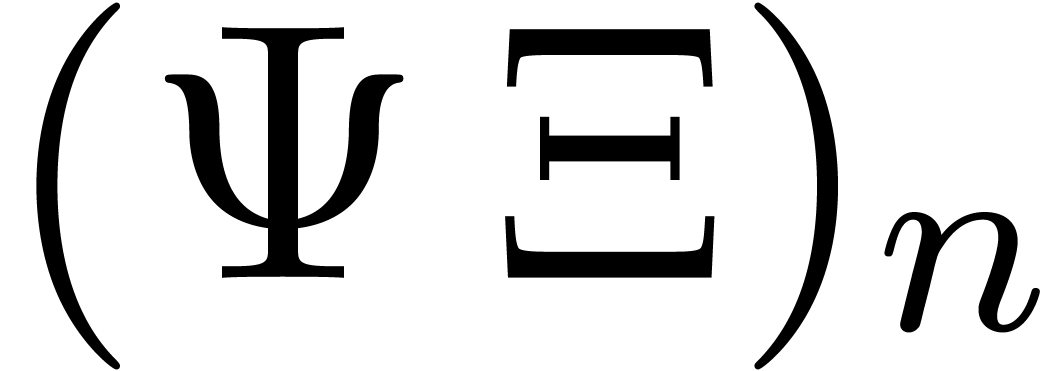

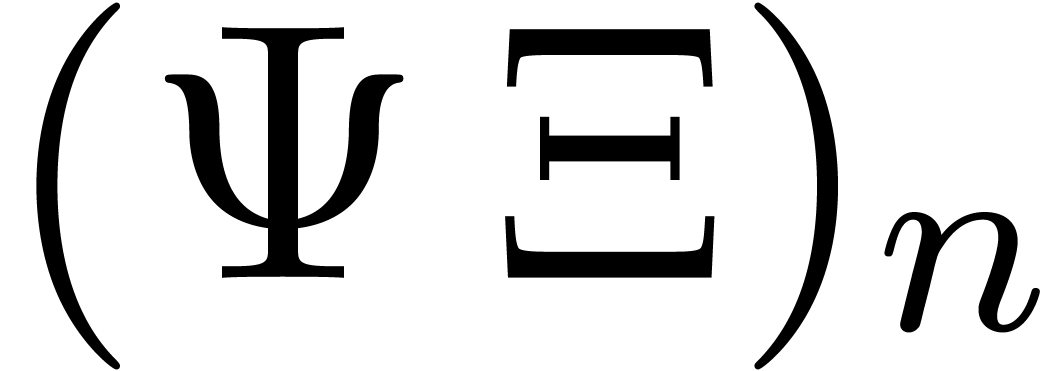

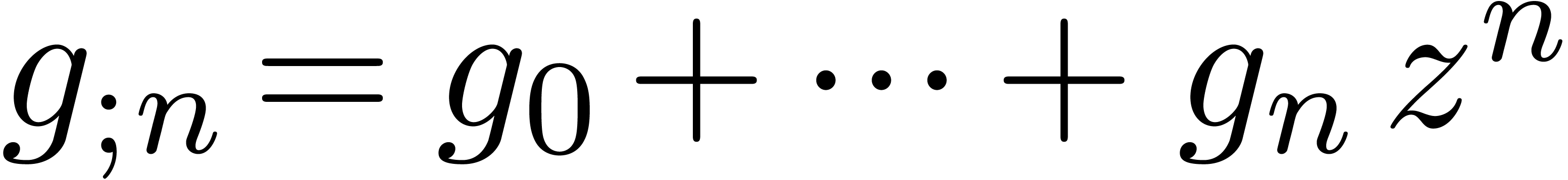

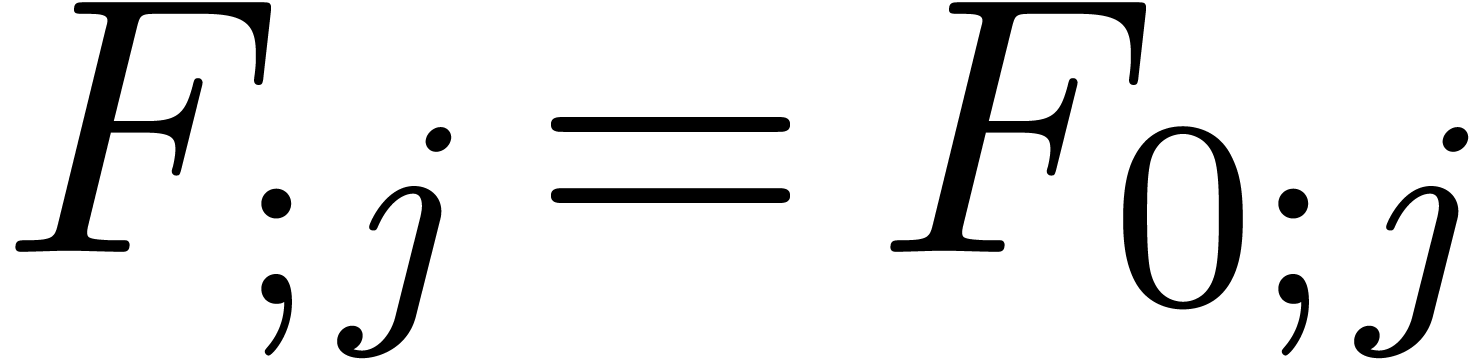

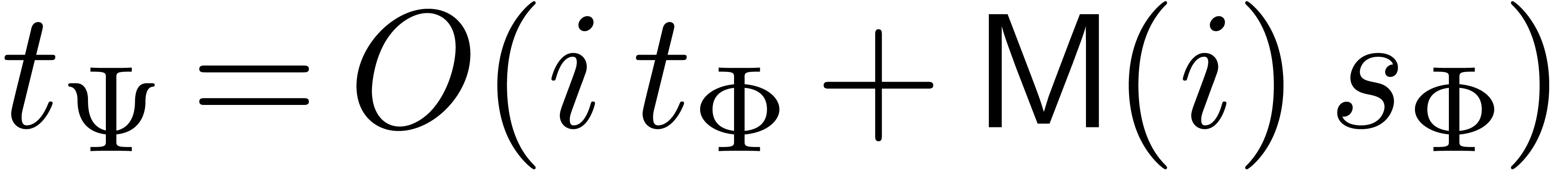

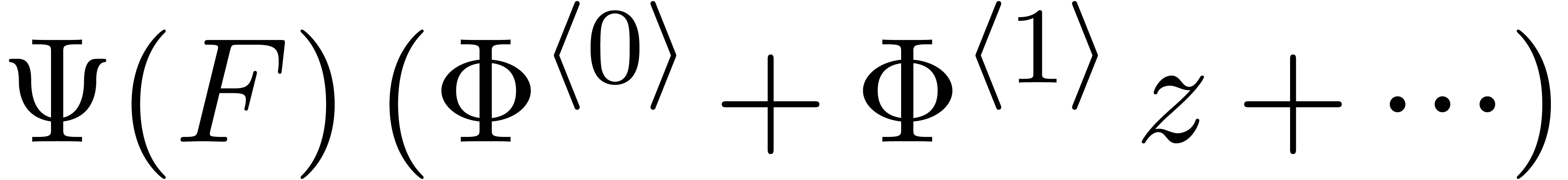

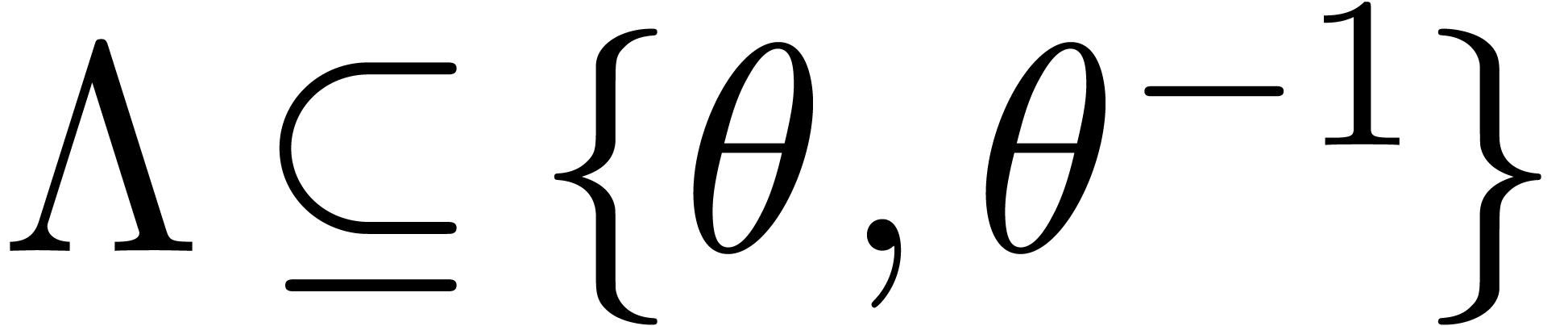

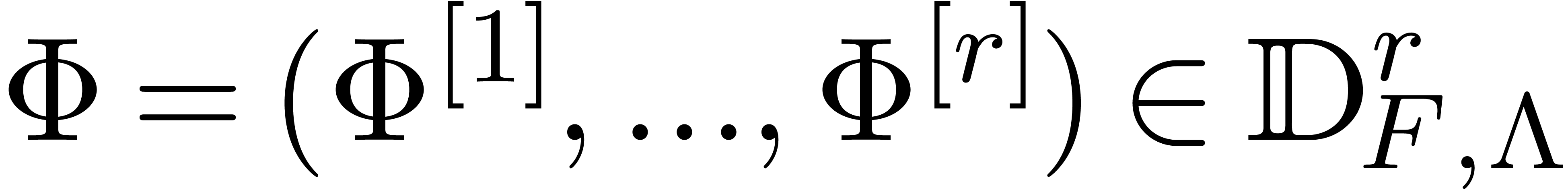

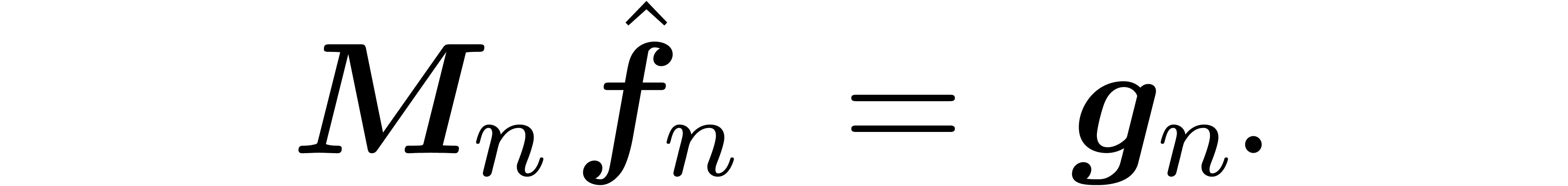

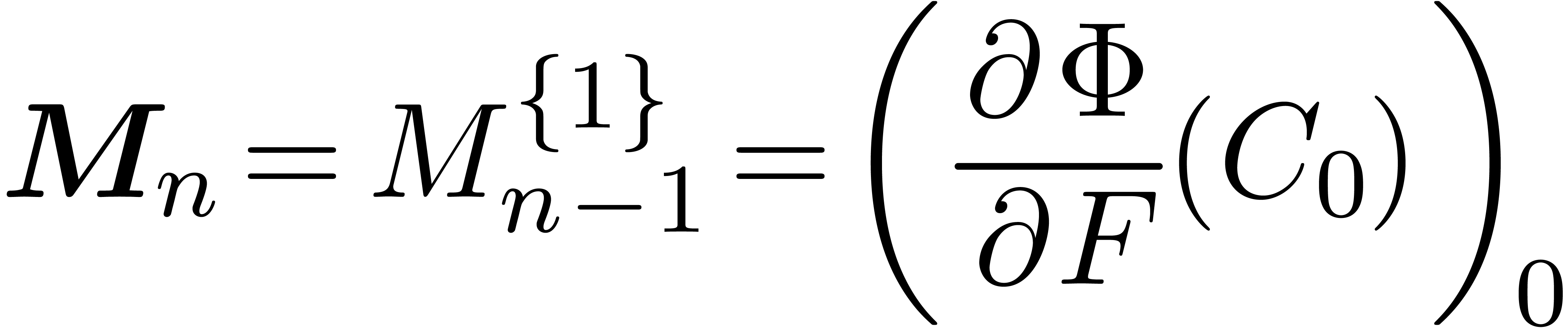

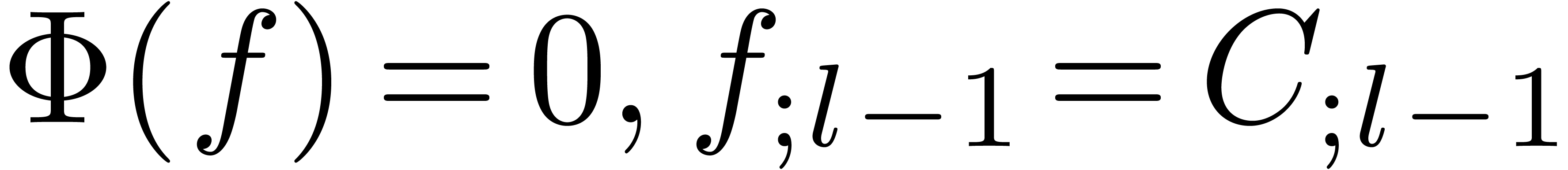

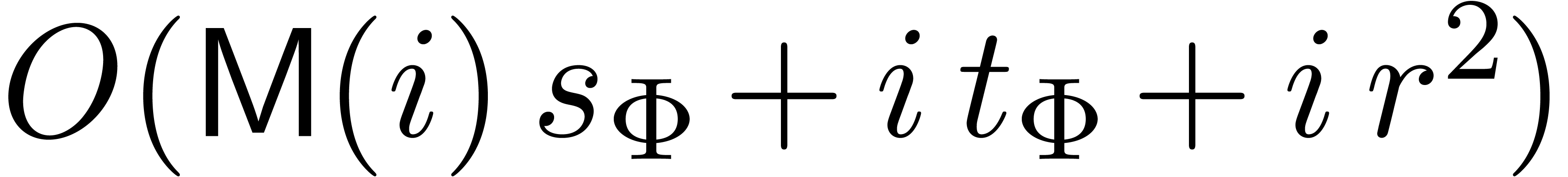

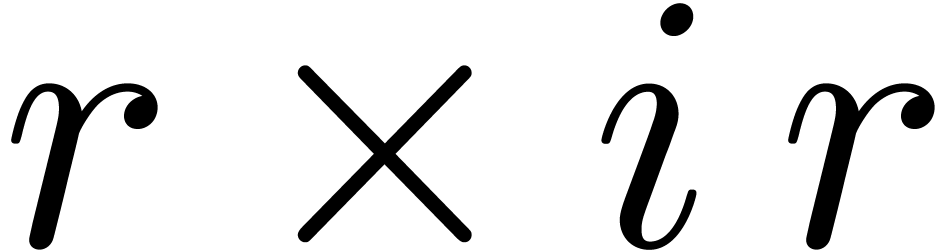

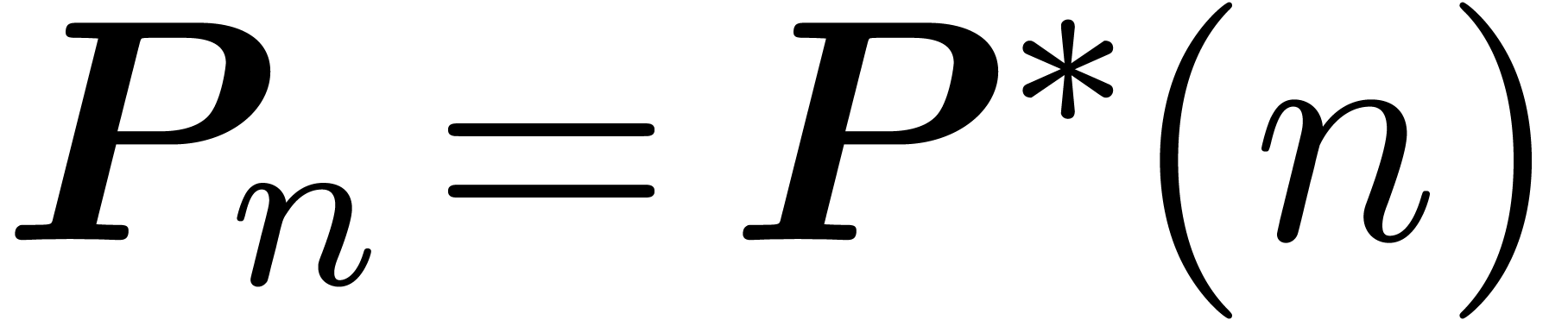

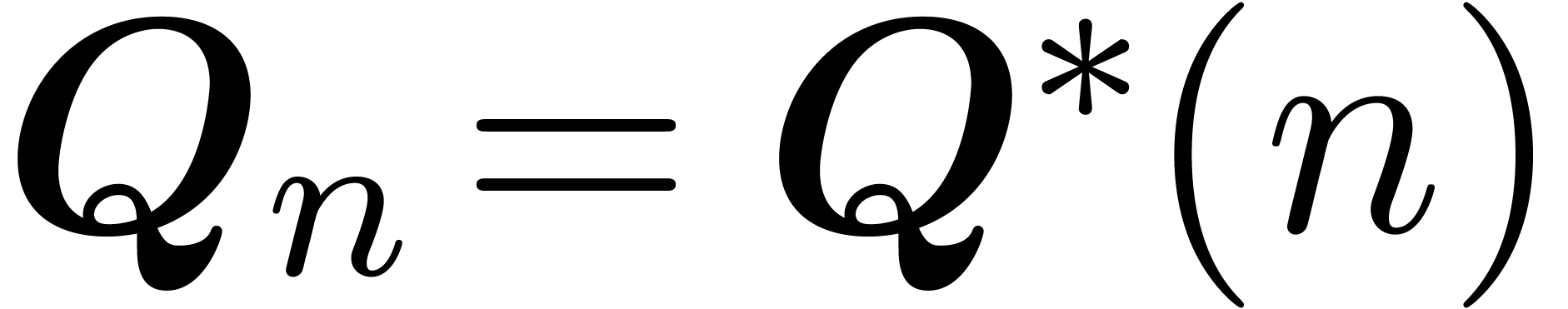

The technique of relaxed power series expansion provides an

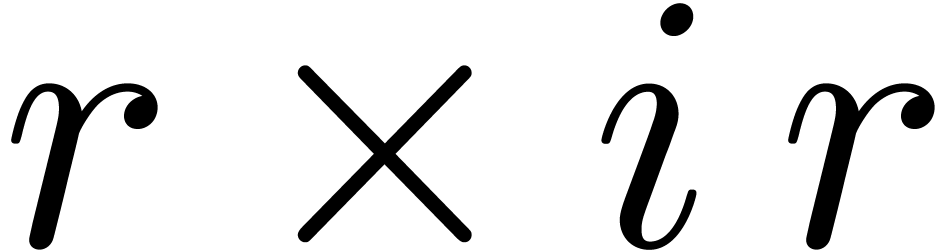

efficient way to solve so called recursive equations of the form

|

Let  be an effective field of constants of

characteristic zero. This means that elements in

be an effective field of constants of

characteristic zero. This means that elements in  can be encoded by data structures on a computer and that we have

algorithms for performing the field operations of

can be encoded by data structures on a computer and that we have

algorithms for performing the field operations of  .

.

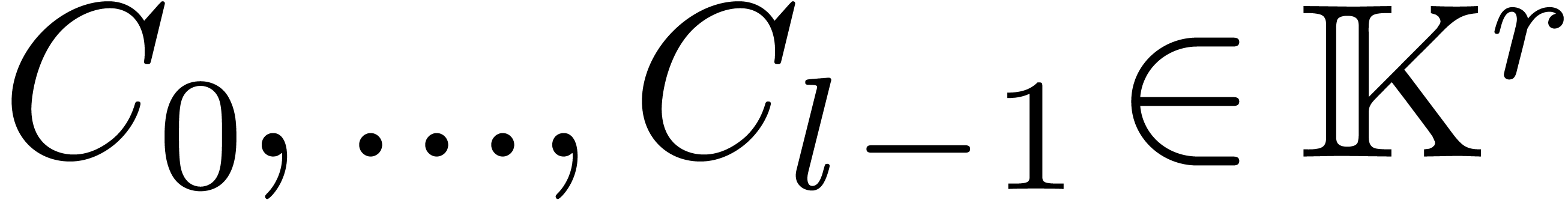

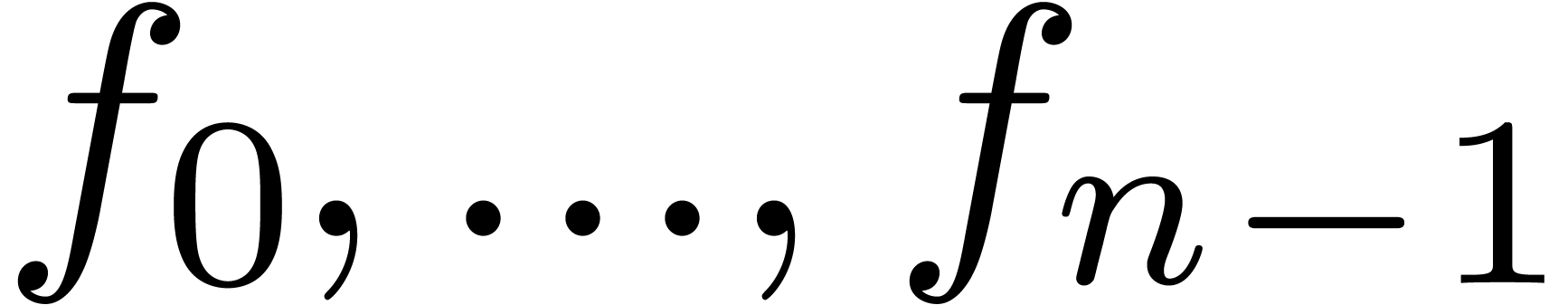

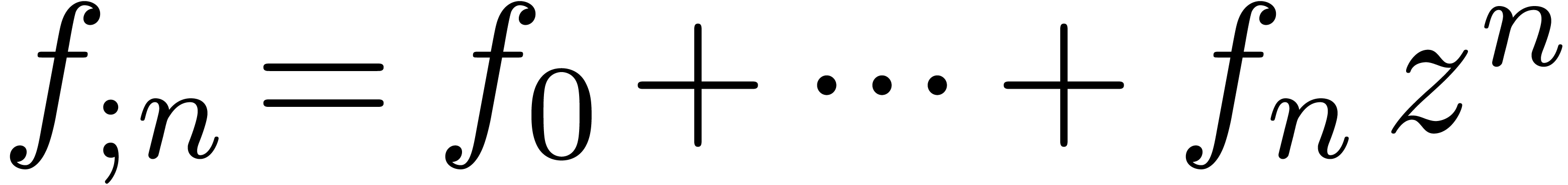

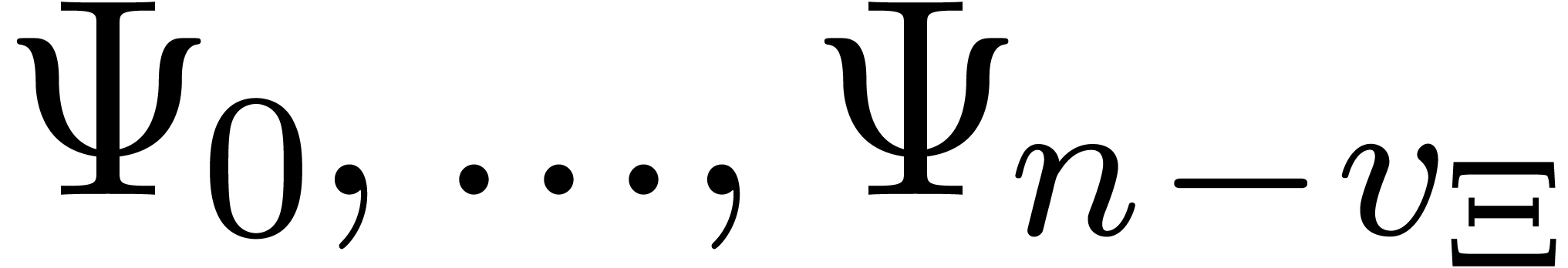

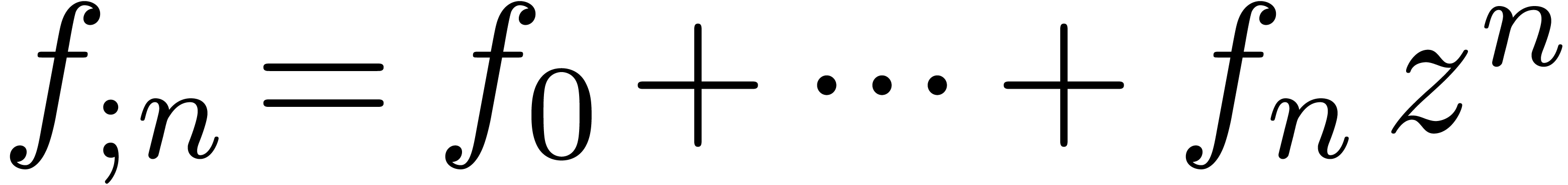

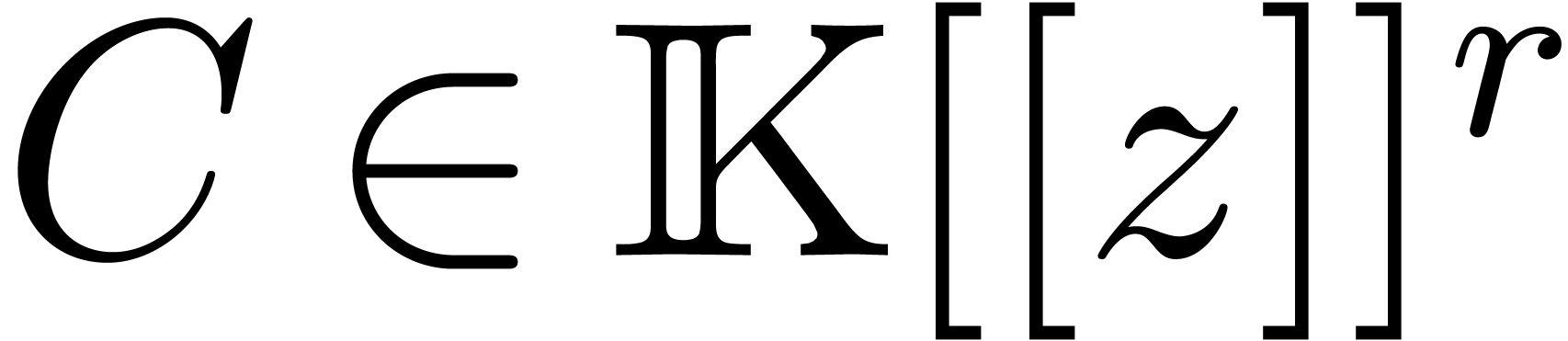

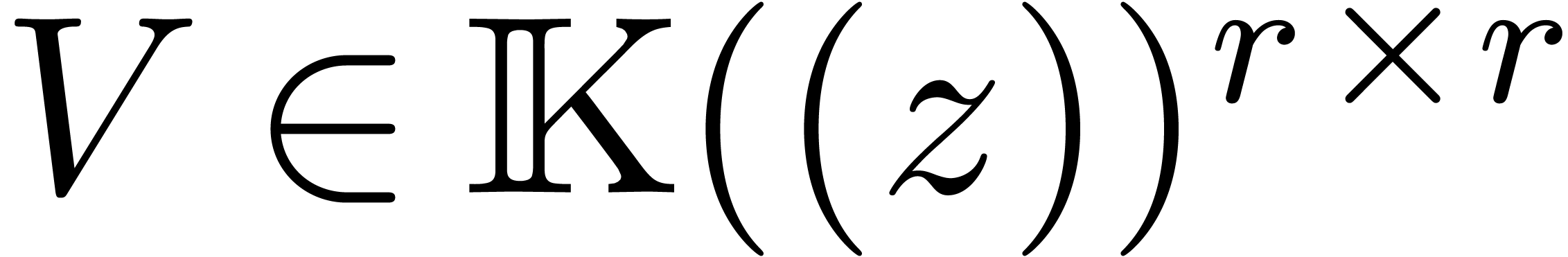

Let  be a column vector of

be a column vector of  indeterminate series in

indeterminate series in  . We

may also consider

. We

may also consider  as a power series

as a power series  . Let

. Let  be a column

vector of expressions built up from

be a column

vector of expressions built up from  ,

,

and constants in

and constants in  using

ring operations, differentiation and integration (with constant term

zero). Finally, let

using

ring operations, differentiation and integration (with constant term

zero). Finally, let  be a finite number of

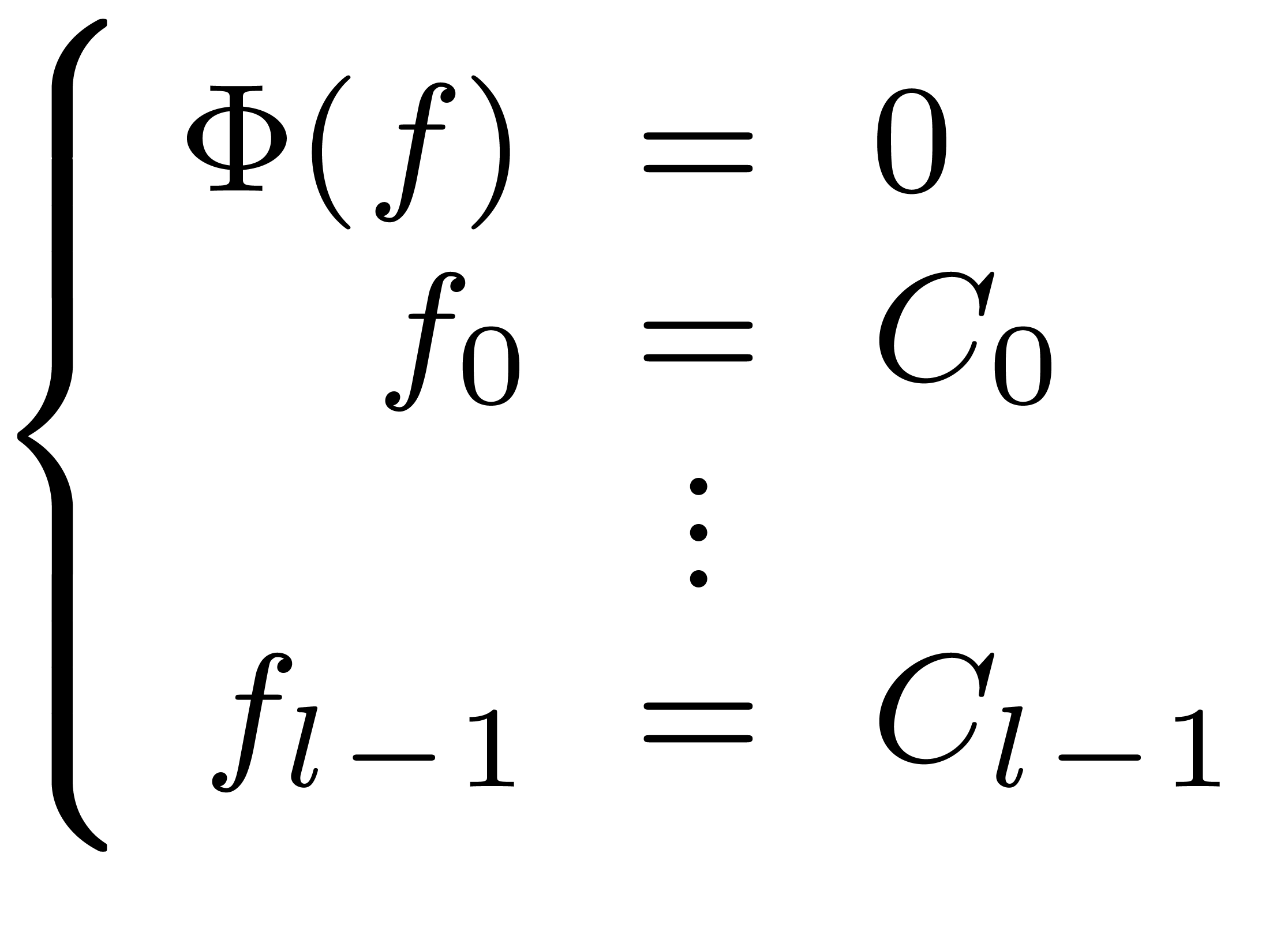

initial conditions. Assume that the system

be a finite number of

initial conditions. Assume that the system

|

(1) |

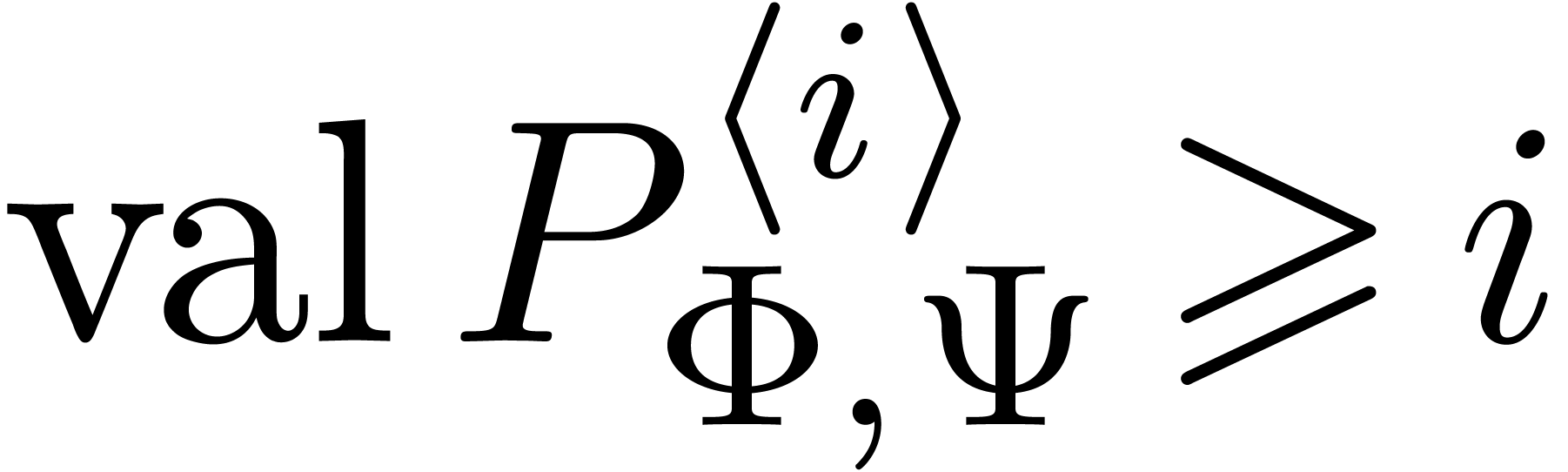

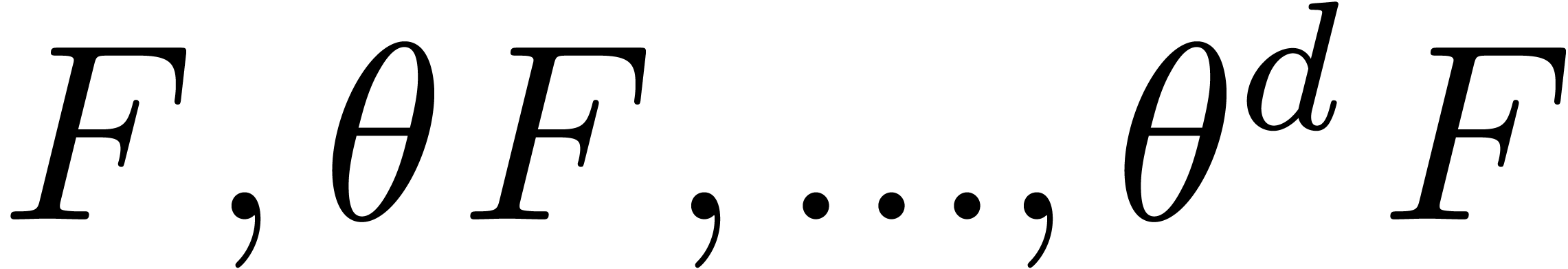

admits a unique solution  . In

this paper, we are interested in the efficient computation of this

solution up to a given order

. In

this paper, we are interested in the efficient computation of this

solution up to a given order  .

.

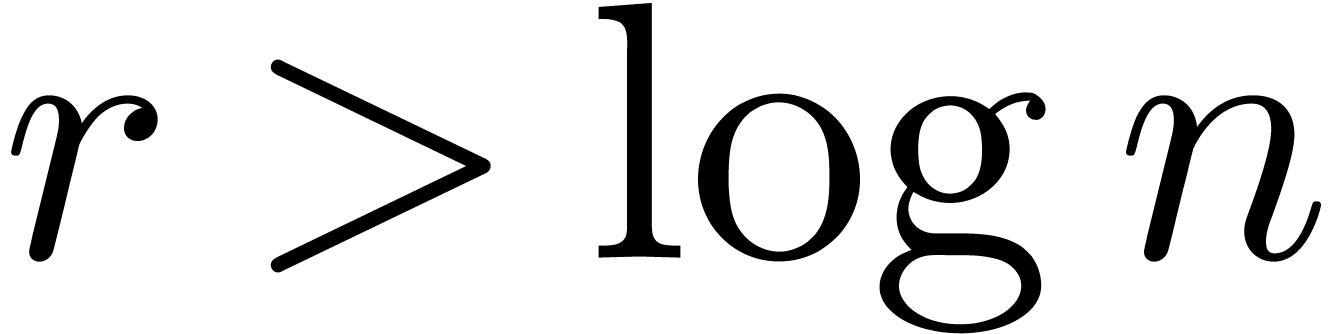

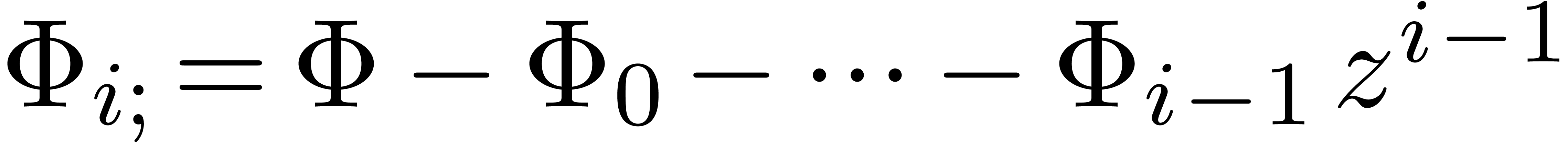

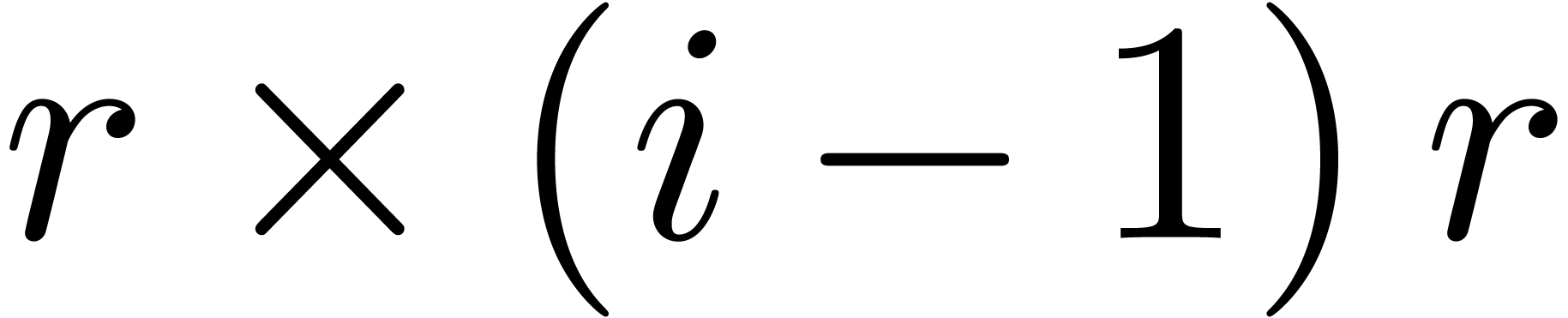

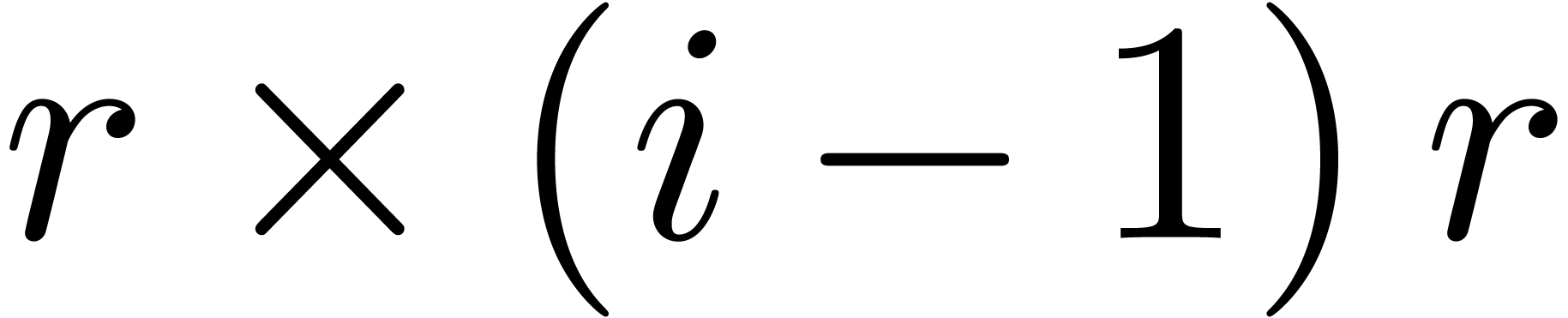

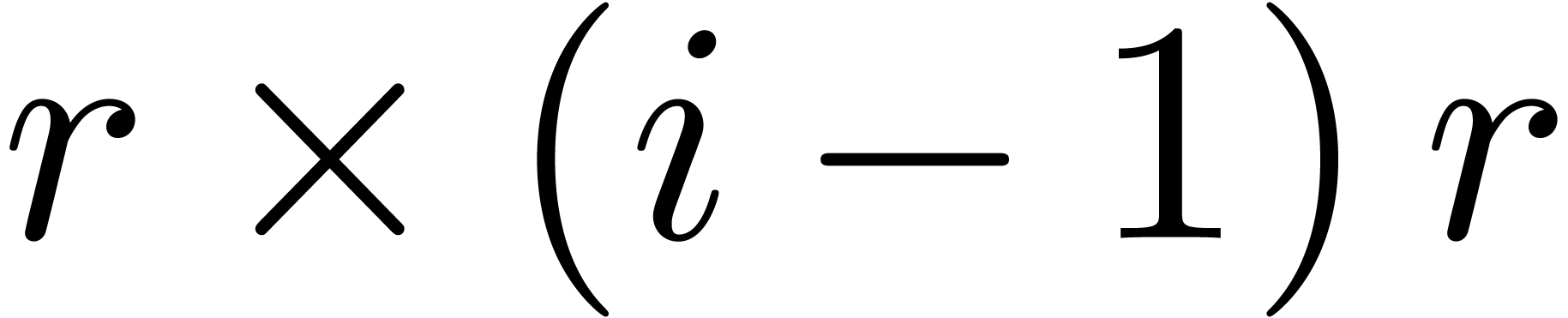

In the most favourable case, the equation  is of

the form

is of

the form

where the coefficient  of

of  in

in  only depends on earlier coefficients

only depends on earlier coefficients  of

of  , for

each

, for

each  . In that case,

. In that case,

actually provides us with a recurrence relation for the computation of

the solution. Using the technique of relaxed power series expansions [Hoe02, Hoe07], which will briefly be recalled in

section 2.4, it is then possible to compute the expansion

at order

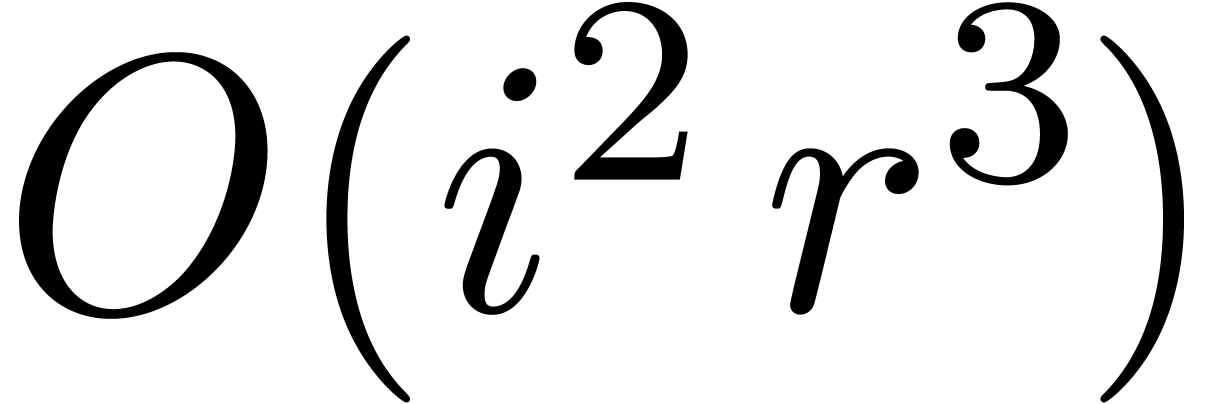

at order  in time

in time

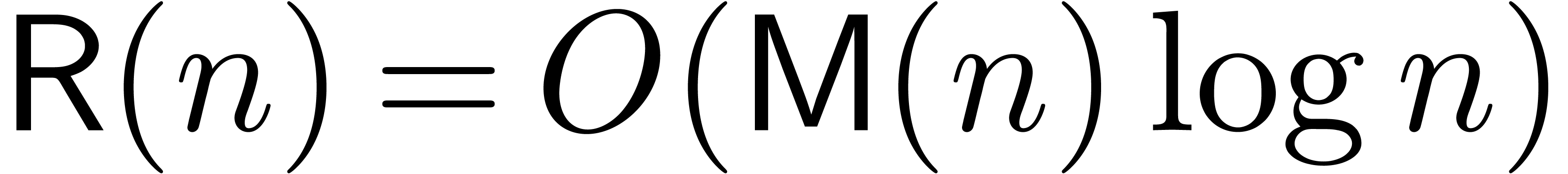

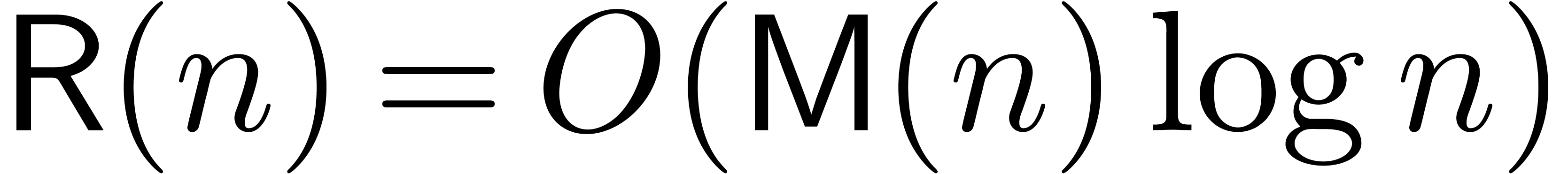

where  is the number of multiplications occurring

in

is the number of multiplications occurring

in  ,

,  is the total size of

is the total size of  as an expression, and

as an expression, and  denotes the complexity of relaxed multiplication of

two power series at order

denotes the complexity of relaxed multiplication of

two power series at order  .

Here we assume that

.

Here we assume that  is represented by a directed

acyclic graph, with possible common subexpressions. For large

is represented by a directed

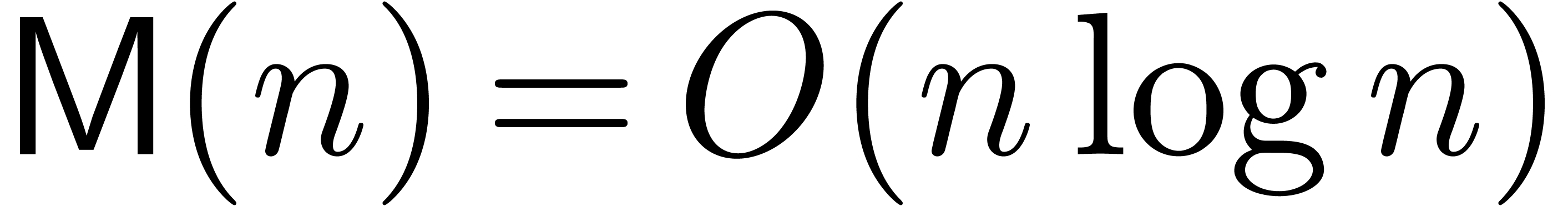

acyclic graph, with possible common subexpressions. For large  , it was shown in [Hoe02,

FS74, Hoe03, LS16] that

, it was shown in [Hoe02,

FS74, Hoe03, LS16] that  , where

, where  denotes the complexity [CT65, SS71, CK91]

of multiplying two polynomials of degrees

denotes the complexity [CT65, SS71, CK91]

of multiplying two polynomials of degrees  .

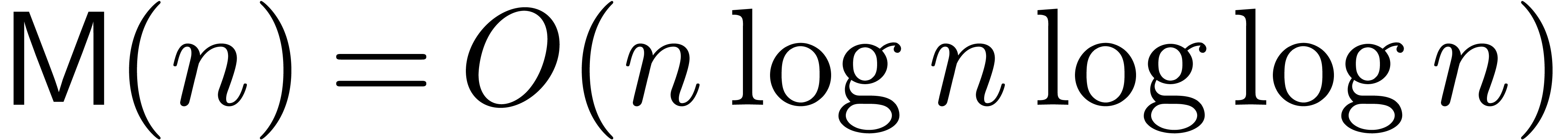

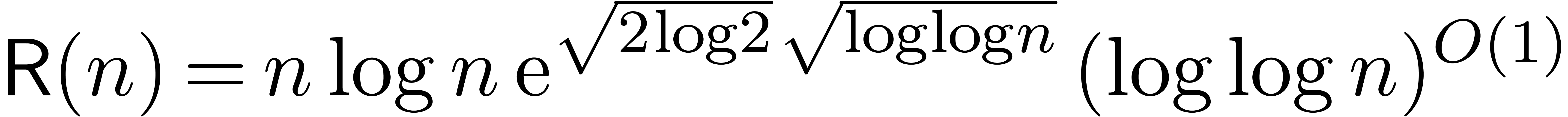

More recently, it has been shown [Hoe14, Hoe07]

that we even have

.

More recently, it has been shown [Hoe14, Hoe07]

that we even have  . For

moderate

. For

moderate  , when polynomial

multiplication is done naively or using Karatsuba's method, relaxed

multiplication is as efficient as the truncated multiplication of

polynomials at order

, when polynomial

multiplication is done naively or using Karatsuba's method, relaxed

multiplication is as efficient as the truncated multiplication of

polynomials at order  [Hoe97, Hoe02].

[Hoe97, Hoe02].

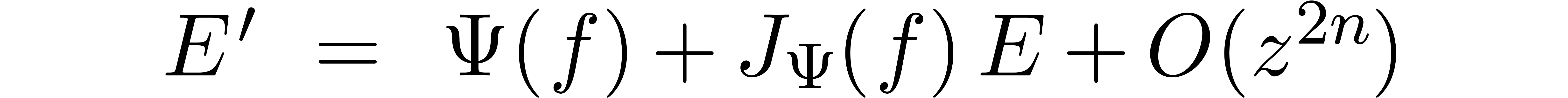

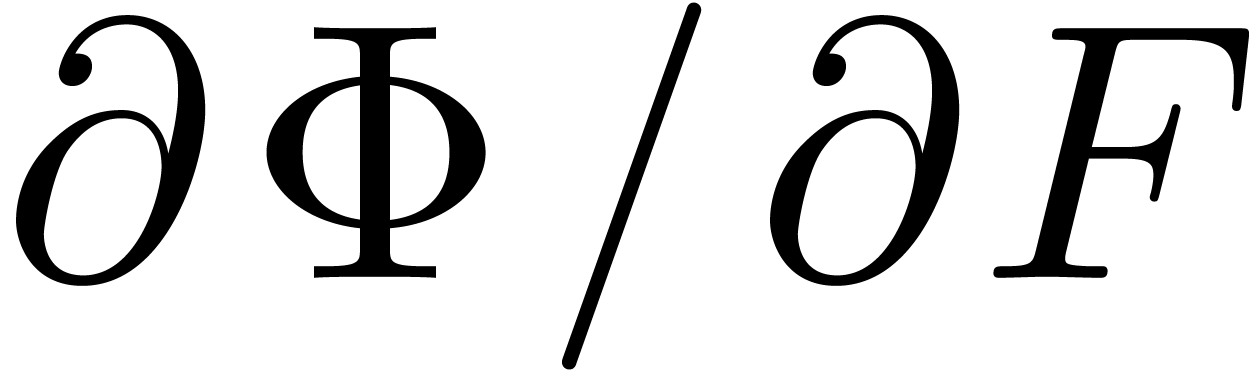

One particularly important example of an equation of the above type is the integration of a dynamical system

where  is algebraic (i.e. does not

involve differentiation or integration). In that case, given the

solution

is algebraic (i.e. does not

involve differentiation or integration). In that case, given the

solution  at order

at order  ,

we may consider the linearized system

,

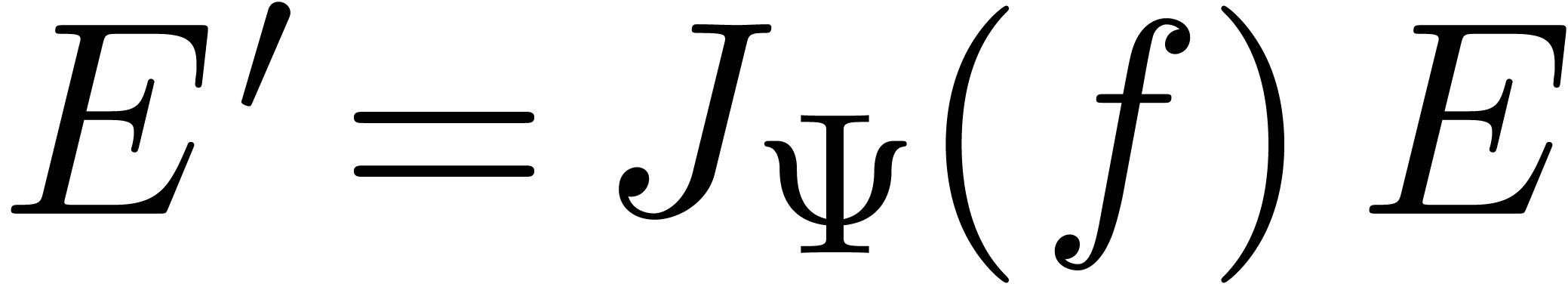

we may consider the linearized system

at order  , where

, where  stands for the Jacobian matrix associated to

stands for the Jacobian matrix associated to  at

at  . If we have

a fundamental system of solutions of

. If we have

a fundamental system of solutions of  at order

at order

, then one step of Newton's

method allows us to find the solution of (4) and a new

fundamental system of solutions of the linearized equation at order

, then one step of Newton's

method allows us to find the solution of (4) and a new

fundamental system of solutions of the linearized equation at order  [BK78, Sed01, BCO+07].

A careful analysis shows that this leads to an algorithm of time

complexity

[BK78, Sed01, BCO+07].

A careful analysis shows that this leads to an algorithm of time

complexity

In [Hoe10], this bound has been further improved to

under the assumptions that  admits sufficiently

many

admits sufficiently

many  -th roots of unity and

that

-th roots of unity and

that  .

.

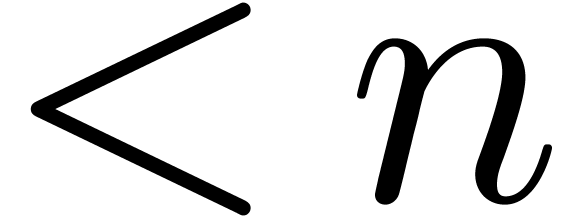

Although the complexity (5) is asymptotically better than

(3) for very large  ,

the relaxed approach often turns out to be more efficient in practice.

Indeed, Newton's method both suffers from a larger constant factor and

the fact that we profit less from the potential sparsity of the system.

In particular, if

,

the relaxed approach often turns out to be more efficient in practice.

Indeed, Newton's method both suffers from a larger constant factor and

the fact that we profit less from the potential sparsity of the system.

In particular, if  , then the

relaxed approach is generally faster. Moreover, as long as

multiplications are done in the naive or Karatsuba model, the relaxed

approach is optimal in the sense that the computation of the solution

takes roughly the same time as its verification. Another advantage of

the relaxed approach is that it generalizes to more general functional

equations and partial differential equations.

, then the

relaxed approach is generally faster. Moreover, as long as

multiplications are done in the naive or Karatsuba model, the relaxed

approach is optimal in the sense that the computation of the solution

takes roughly the same time as its verification. Another advantage of

the relaxed approach is that it generalizes to more general functional

equations and partial differential equations.

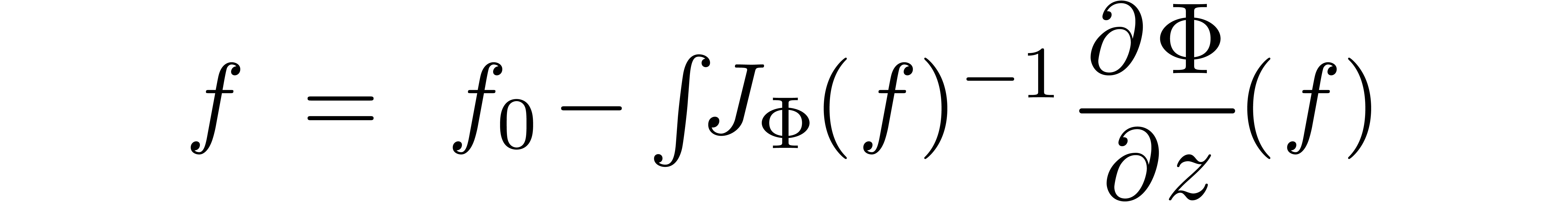

Let us now return to our original implicit system (1). If

is a system of differentially algebraic

equations, then we may also seek to apply Newton's method. For non

degenerate systems and assuming that we have computed the solution

is a system of differentially algebraic

equations, then we may also seek to apply Newton's method. For non

degenerate systems and assuming that we have computed the solution  and a fundamental system of solutions for the

linearized equation at order

and a fundamental system of solutions for the

linearized equation at order  ,

one step of Newton's method yields an extension of the solutions at

order

,

one step of Newton's method yields an extension of the solutions at

order  , for a fixed constant

, for a fixed constant

. From an asymptotic point of

view, this means that the complexities (5) and (6)

remain valid, modulo multiplication of

. From an asymptotic point of

view, this means that the complexities (5) and (6)

remain valid, modulo multiplication of  by the

differential order of the system in these bounds.

by the

differential order of the system in these bounds.

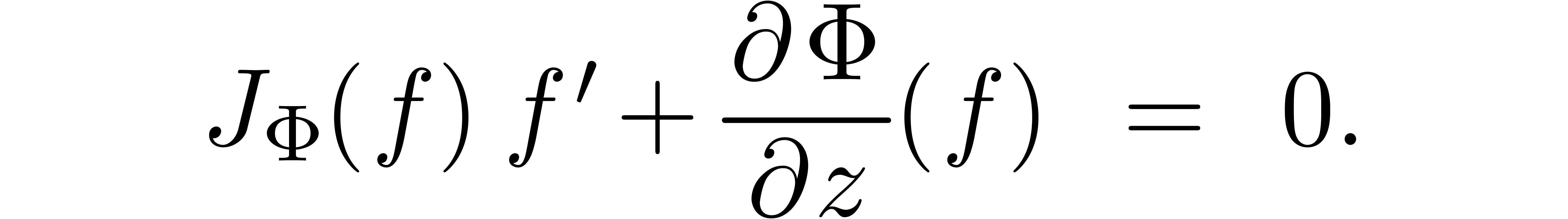

Another approach for the resolution of (1) is to keep

differentiating the system with respect to  until

it becomes equivalent to a system of the form (2). For

instance, if

until

it becomes equivalent to a system of the form (2). For

instance, if  is algebraic, then differentiation

of (1) yields

is algebraic, then differentiation

of (1) yields

Consequently, if  is invertible, then

is invertible, then

provides us with an equivalent system that can be solved by one of the previous methods. Unfortunately, this method requires the computation of the Jacobian, so we do not longer exploit the potential sparsity of the original system.

Yet another recent approach [Hoe09] is to consider not yet

computed coefficients of  as formal unknowns, and

solve the system of equations

as formal unknowns, and

solve the system of equations  for increasing

values of

for increasing

values of  . For large

. For large  , the system

, the system  usually reduces to a linear system of equations. In particular, the

coefficients of series with unknown coefficients are not polynomials but

merely linear combinations. Using the so called “substitution

product”, the multiplication of series with unknown coefficients

can be done while taking advantage of this linearity.

usually reduces to a linear system of equations. In particular, the

coefficients of series with unknown coefficients are not polynomials but

merely linear combinations. Using the so called “substitution

product”, the multiplication of series with unknown coefficients

can be done while taking advantage of this linearity.

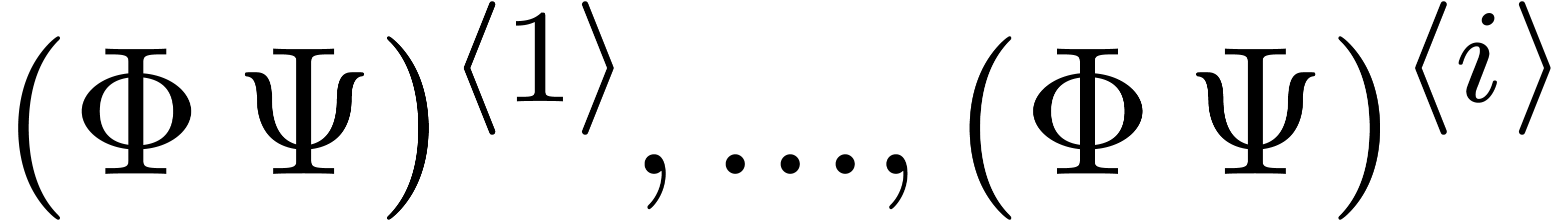

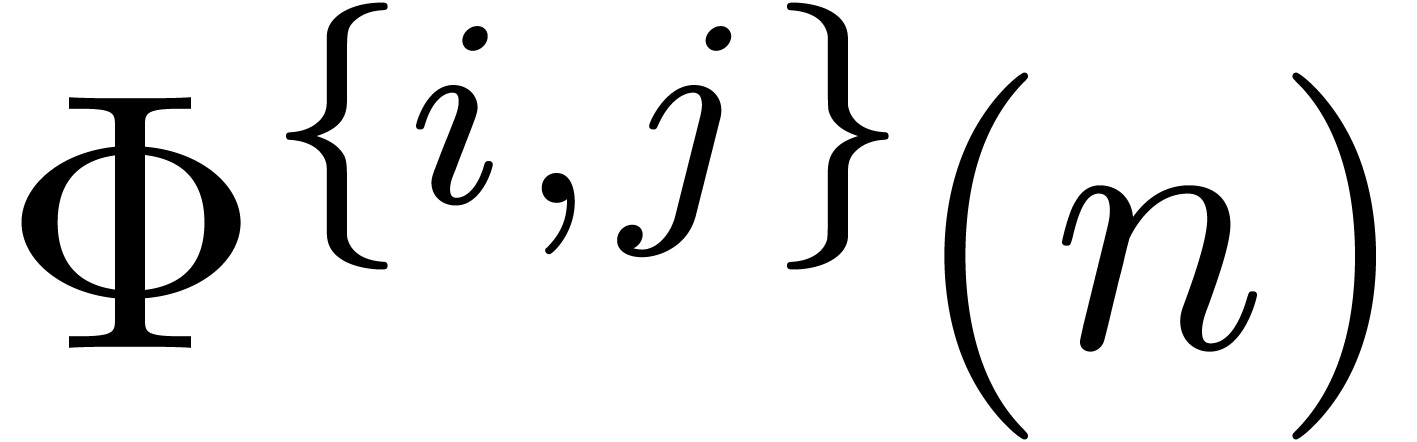

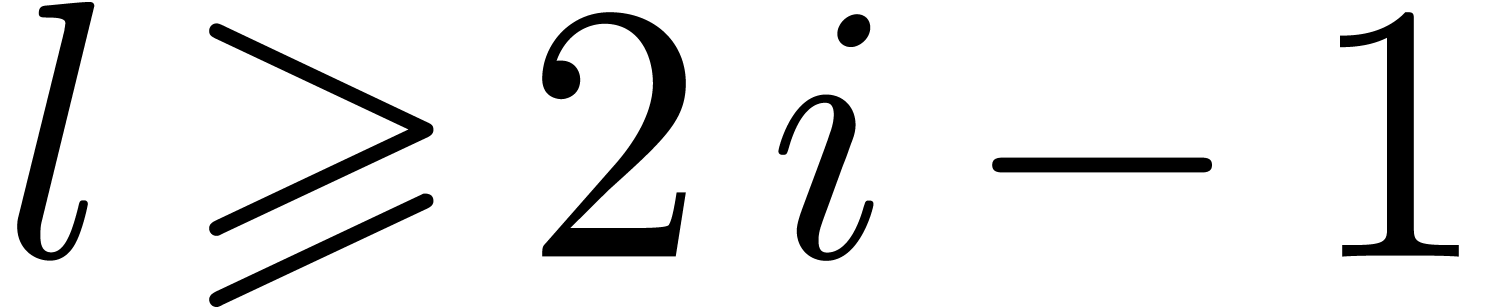

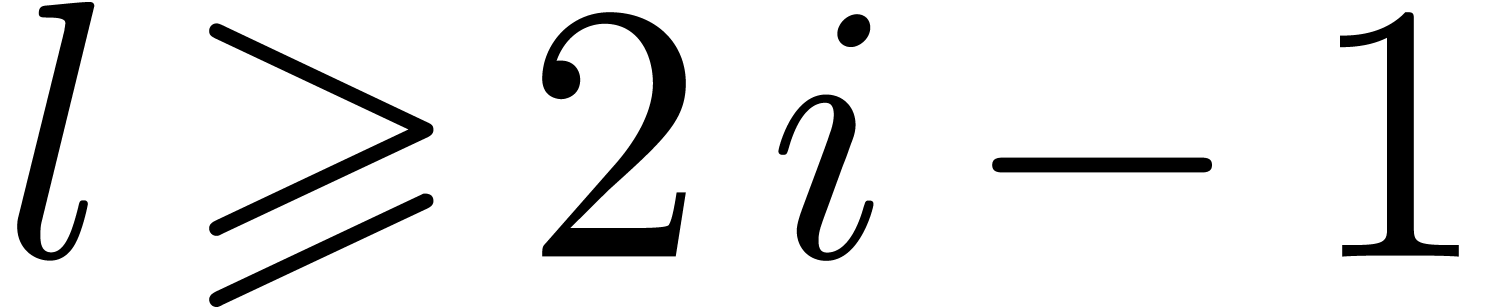

In this paper, we will present a variant of the approach of [Hoe09].

Roughly speaking, we reconsider the series with unknown coefficients as

vectors of partially unknown series. Technically speaking, this is done

via the concept of anticipators, which will be introduced in

section 3. Using this technique, and under mild

assumptions, we show in section 4.1 how to rewrite the

original system of equations into a new system that is both recursive

and not much larger in size. We may then apply a standard relaxed

algorithm for its resolution. In section 4.2 we show that

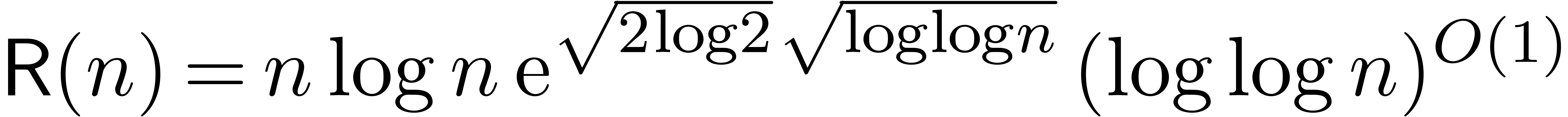

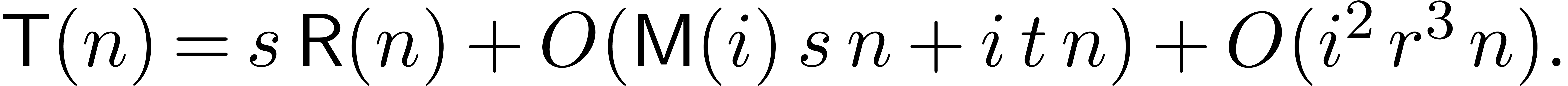

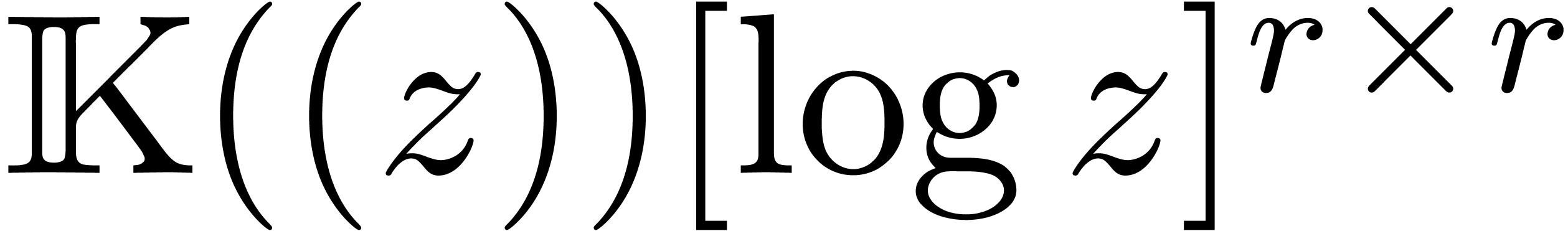

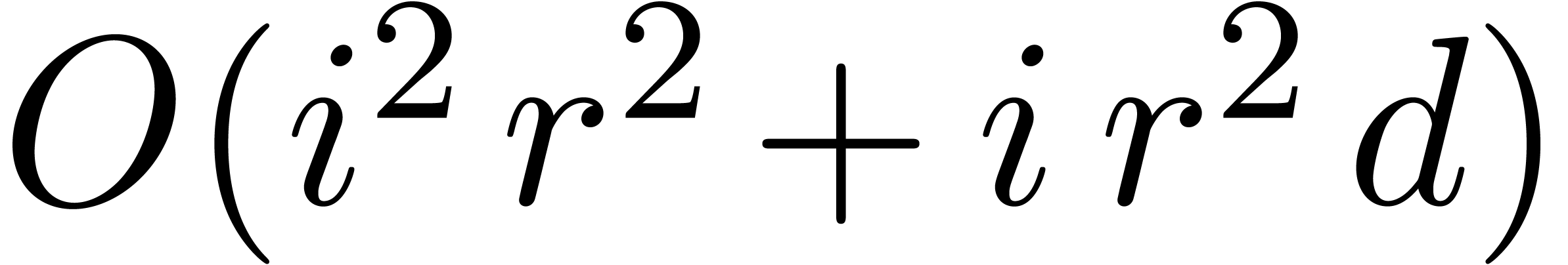

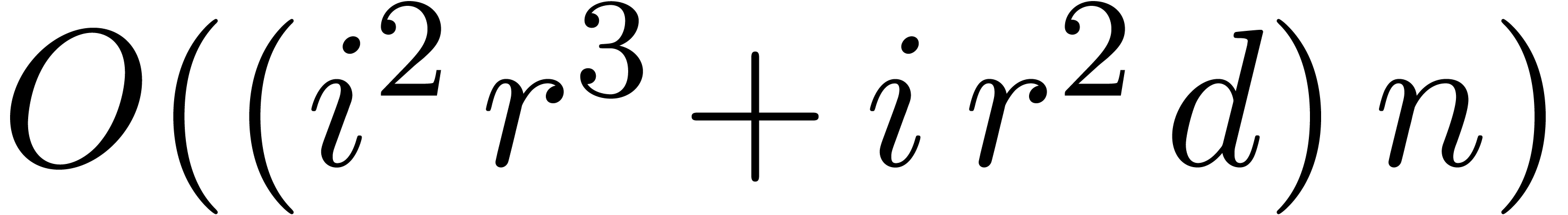

this leads to slightly sharper complexity bounds than those from [Hoe09]. Roughly speaking, in the case of a system of  differential equations of order

differential equations of order  that must be evaluated at order

that must be evaluated at order  in order to

determine the solution at order

in order to

determine the solution at order  ,

we prove the following generalization of (3):

,

we prove the following generalization of (3):

|

(7) |

Especially for larger values of  ,

this still compares favourably with respect to (5). Another

major benefit of the new technique is the fact that it allows for the

direct resolution of implicit equations using existing software for

recursive equations.

,

this still compares favourably with respect to (5). Another

major benefit of the new technique is the fact that it allows for the

direct resolution of implicit equations using existing software for

recursive equations.

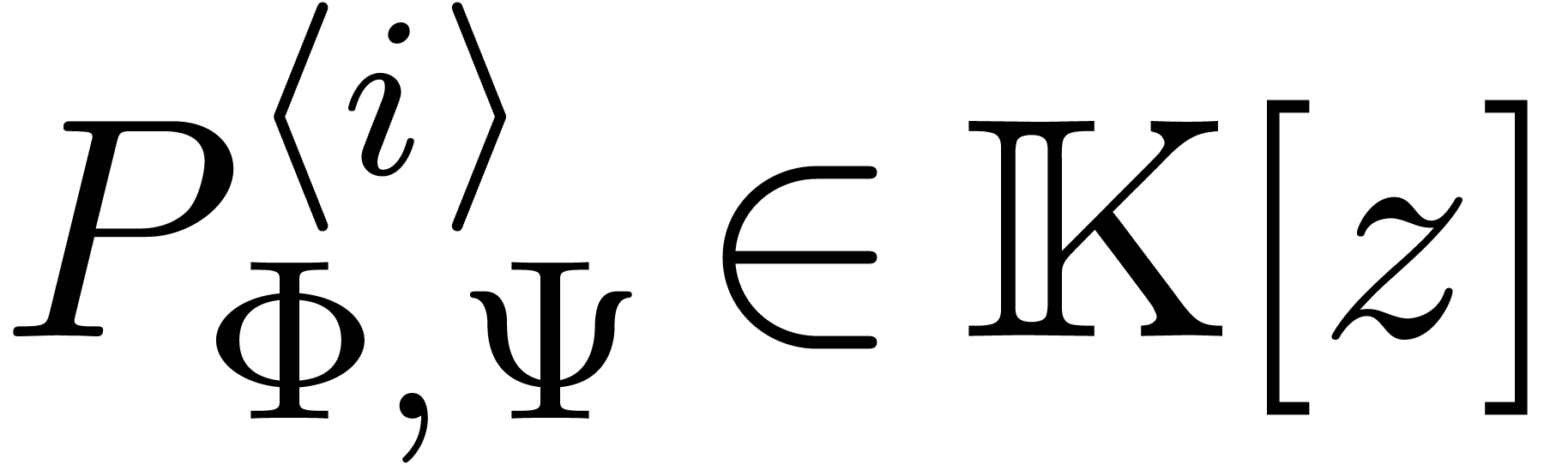

For algebraic equations over the  -adic

numbers, relaxed algorithms with similar complexities have been

developed in [BL12, Leb15]. The index

-adic

numbers, relaxed algorithms with similar complexities have been

developed in [BL12, Leb15]. The index  in (7) corresponds to the opposite of

the notion of shift in [Leb15]. We notice that a preprint

version of the present paper [Hoe11] appeared shortly

before [BL12], so the main ideas in the present paper were

not influenced by [BL12, Leb15]. Predecessors

of the algorithms in this paper were implemented in

in (7) corresponds to the opposite of

the notion of shift in [Leb15]. We notice that a preprint

version of the present paper [Hoe11] appeared shortly

before [BL12], so the main ideas in the present paper were

not influenced by [BL12, Leb15]. Predecessors

of the algorithms in this paper were implemented in

Our algorithm can for instance been used for the computation of power series solutions to differential-algebraic systems of equations. The well known example of a pendulum is treated in detail in section 5. The reader may wish to fast forward to this example when struggling through the more technical parts of this paper.

Acknowledgments. We wish to thank the referees for their careful reading and their comments and suggestions.

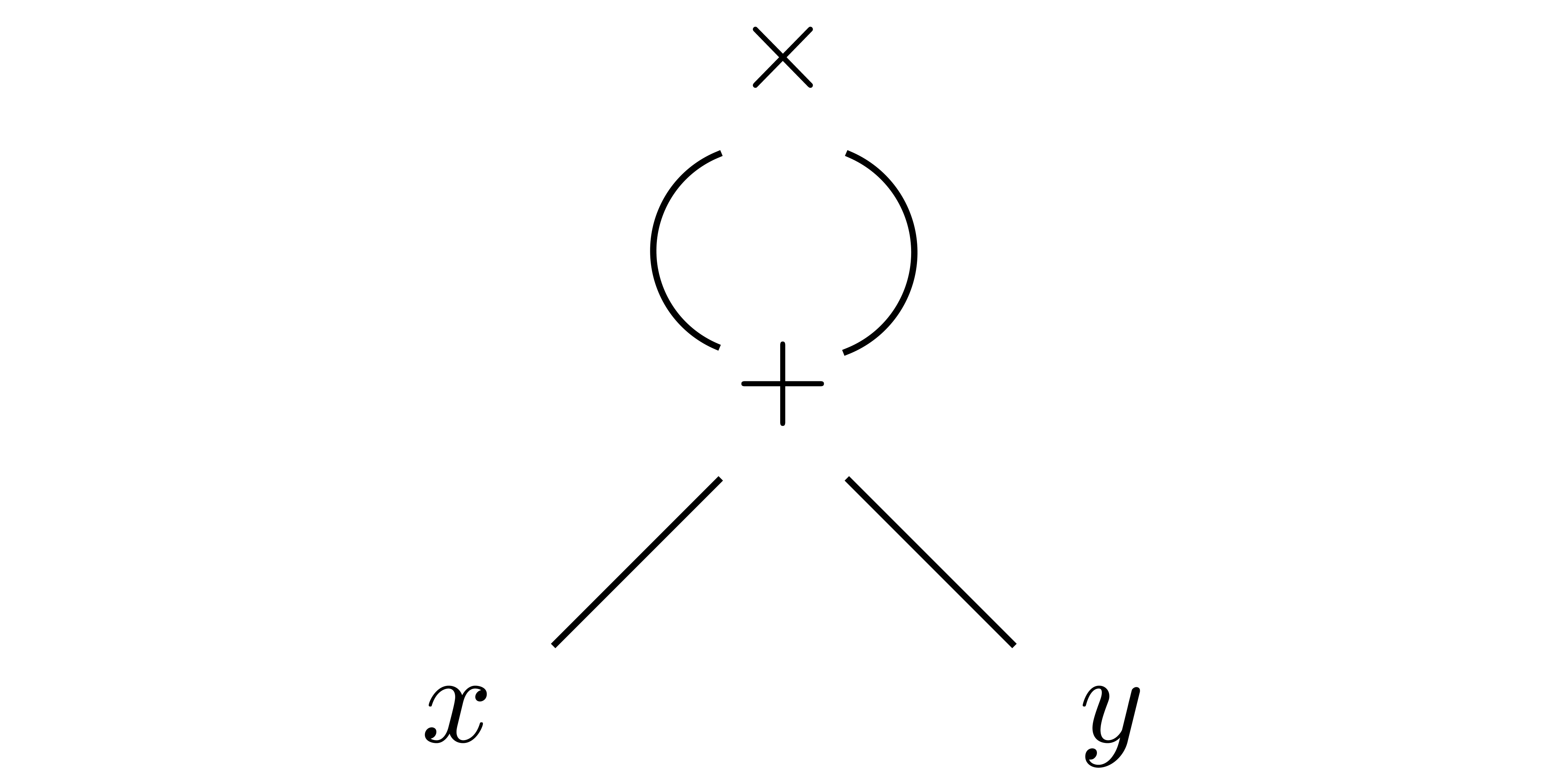

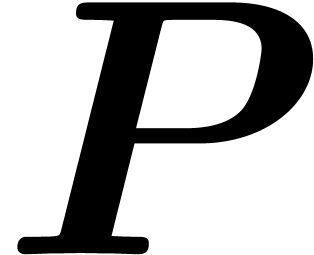

Assume that we have fixed a set  of function

symbols, together with an arity

of function

symbols, together with an arity  for each

for each  . Then a

dag over

. Then a

dag over  is a rooted finite

directed acyclic

is a rooted finite

directed acyclic  -labeled

graph, such that each

-labeled

graph, such that each  -labeled

node has

-labeled

node has  successors, together with an ordering

on the successors. For instance,

successors, together with an ordering

on the successors. For instance,

is a typical dag for the expression  ,

with

,

with  ,

,  and

and  . We will denote by

. We will denote by  the number of nodes of a dag

the number of nodes of a dag  (also called its size) and by

(also called its size) and by  its number of

multiplications (also called its multiplicative size). For our example

dag

its number of

multiplications (also called its multiplicative size). For our example

dag  , we thus have

, we thus have  and

and  . We will

denote by

. We will

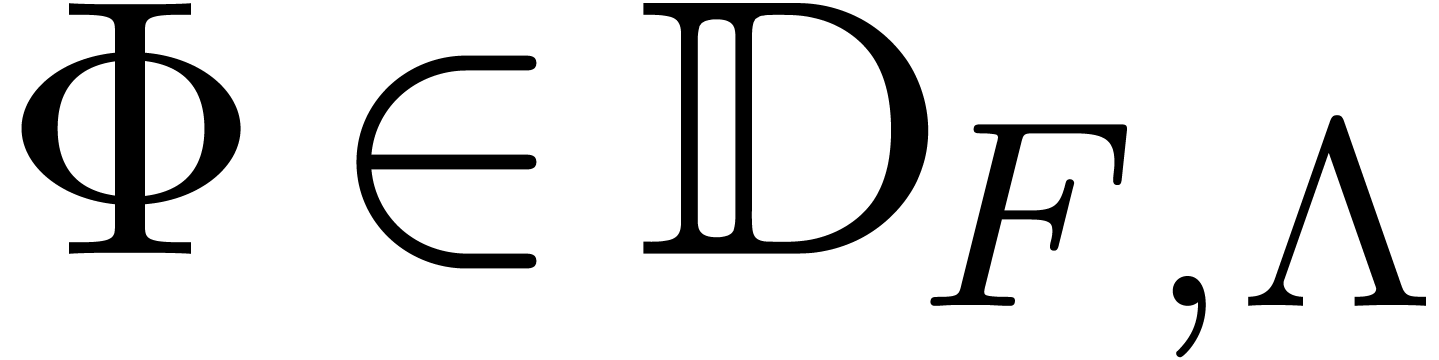

denote by  the set of dags over

the set of dags over  .

.

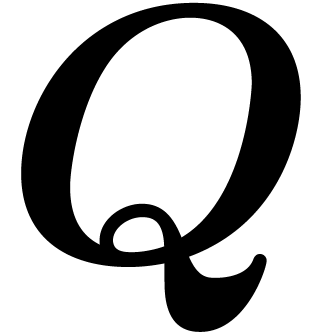

More generally, we may consider multivariate dags with an arbitrary number of roots, which again come with ordering. For instance,

is a bivariate dag that represents a vector of two expressions  and

and  . We will

denote by

. We will

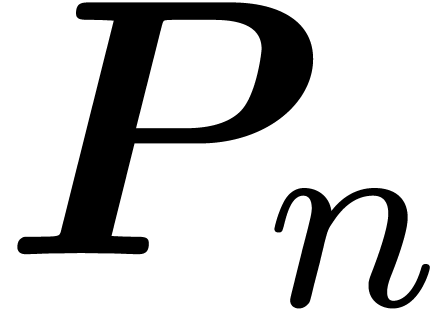

denote by  the number of roots of a multivariate

dag

the number of roots of a multivariate

dag  , which we will also call

its dimension. We will write

, which we will also call

its dimension. We will write  , where

, where  stands for the

subdag whose root is the

stands for the

subdag whose root is the  -th

root of

-th

root of  . We will denote by

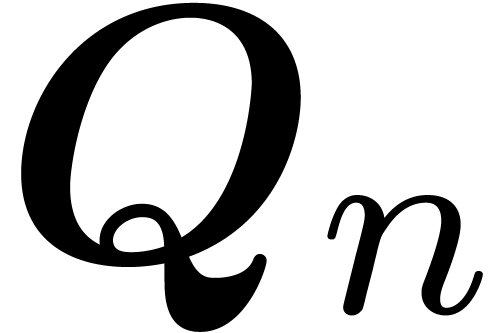

. We will denote by

the set of multivariate dags over

the set of multivariate dags over  of dimension

of dimension  and

and  .

.

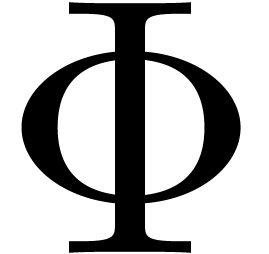

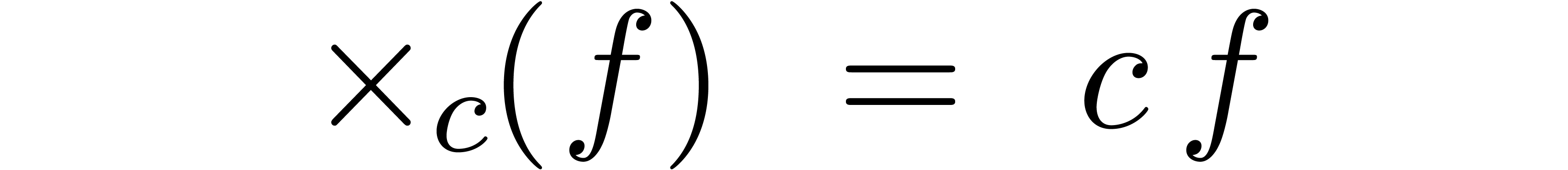

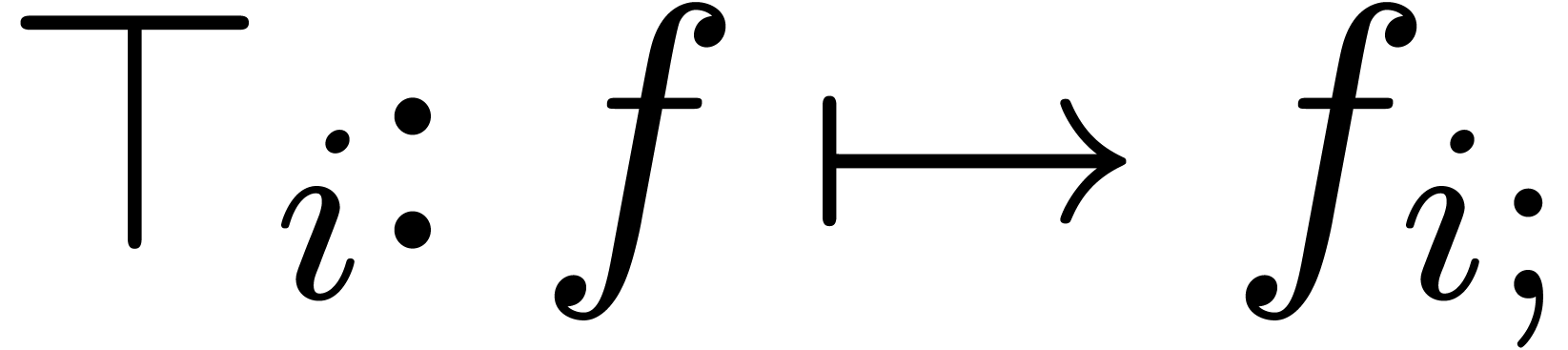

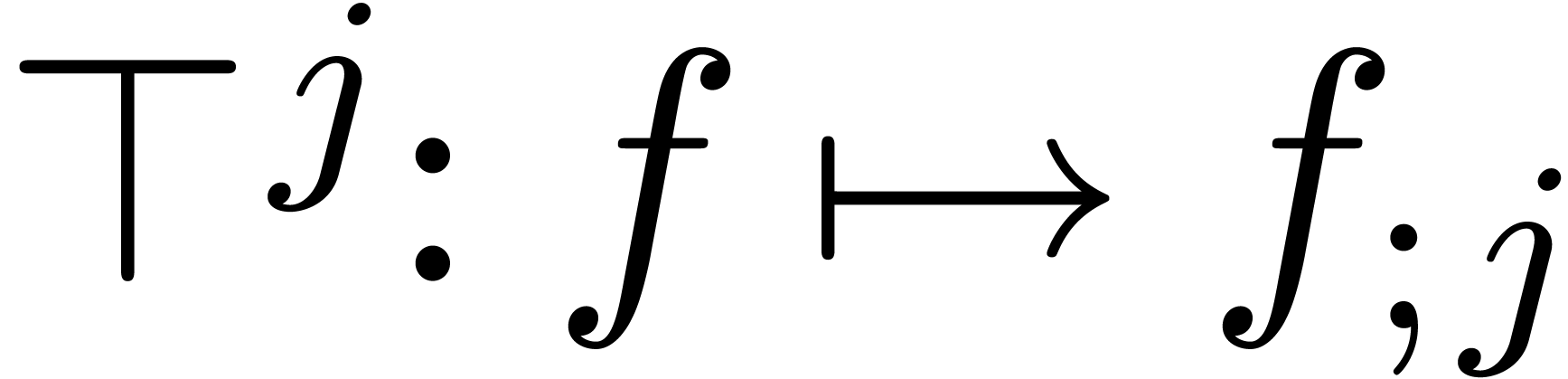

Consider a linear operator  .

We say that

.

We say that  is a

coefficientwise operator, if there exist fixed

constants

is a

coefficientwise operator, if there exist fixed

constants  such that

such that

for all  . For every

. For every  , the operator

, the operator  defined by

defined by

is an example of a coefficientwise operator. The truncation operators

,

,  and

and

defined by

defined by

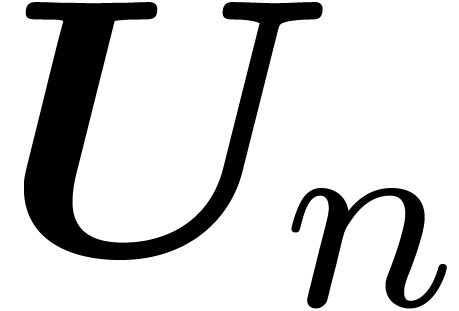

constitute another family of examples. We will denote by  and

and  the sets of all operators of

the form

the sets of all operators of

the form  with

with  resp.

resp.  with

with  . Finally, we define the coefficientwise operators

. Finally, we define the coefficientwise operators

and

and  by

by

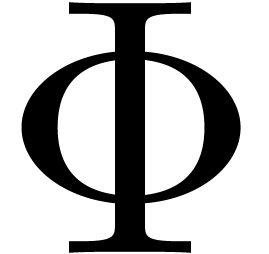

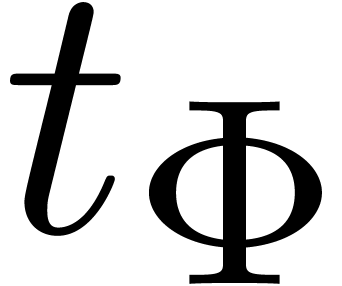

The operator  is called the Euler

derivation with respect to

is called the Euler

derivation with respect to  and we

notice that

and we

notice that  is the inverse of

is the inverse of  on

on  . The Euler derivation

admits the advantage with respect to the ordinary derivation that

. The Euler derivation

admits the advantage with respect to the ordinary derivation that  for all

for all  ,

where “

,

where “ ” stands

for the valuation in

” stands

for the valuation in  .

Nevertheless, any differential equation for the usual derivation can be

rewritten as a differential equation for the Euler derivation: it

suffices to multiply the equation by a sufficiently large power of

.

Nevertheless, any differential equation for the usual derivation can be

rewritten as a differential equation for the Euler derivation: it

suffices to multiply the equation by a sufficiently large power of  .

.

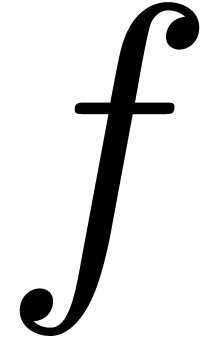

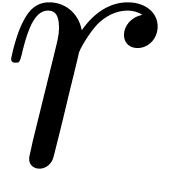

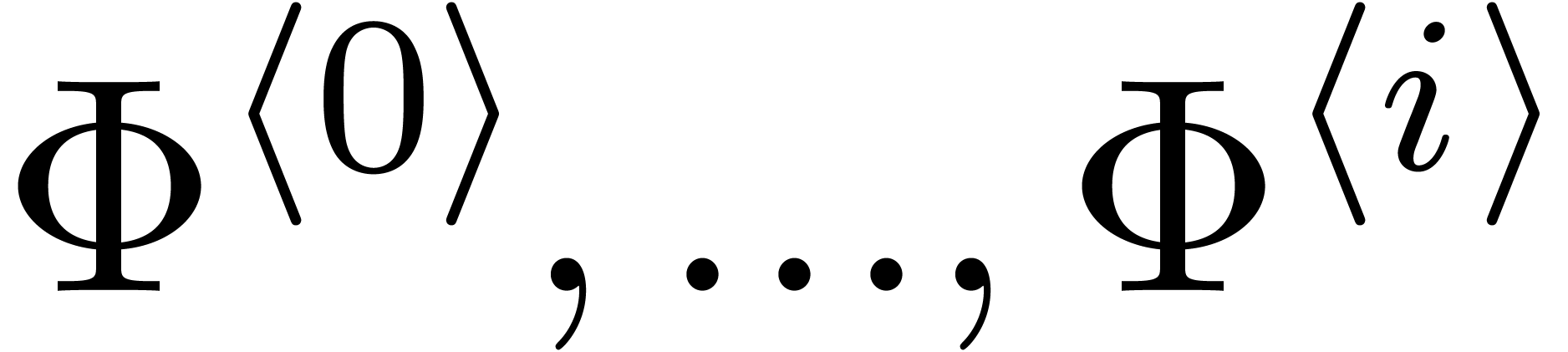

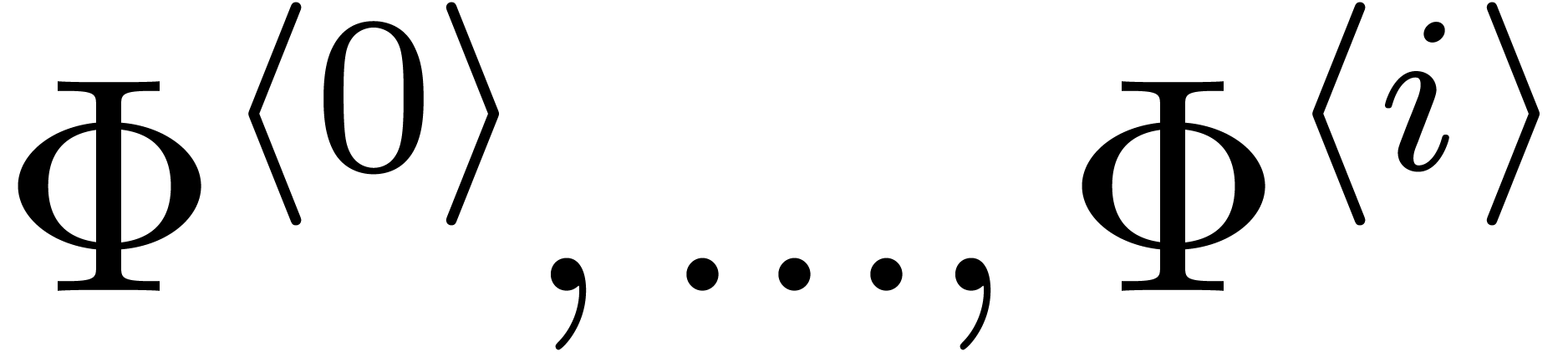

Let  be

be  “indeterminate series” in

“indeterminate series” in  .

We will sometimes consider

.

We will sometimes consider  as a series with

formal coefficients

as a series with

formal coefficients

Let  be a set of coefficientwise linear

operators. In what follows, we will take

be a set of coefficientwise linear

operators. In what follows, we will take

and denote by  the set of dags over

the set of dags over  . Similarly, we set

. Similarly, we set  and

and

. Dags in

. Dags in  ,

,  and

and  will respectively be called algebraic,

differential and integral. Notice that

polynomials in

will respectively be called algebraic,

differential and integral. Notice that

polynomials in  are regarded as dags of size

are regarded as dags of size

, independently of their

degree; this is motivated by the fact that coefficient extraction is

trivial for explicit polynomials.

, independently of their

degree; this is motivated by the fact that coefficient extraction is

trivial for explicit polynomials.

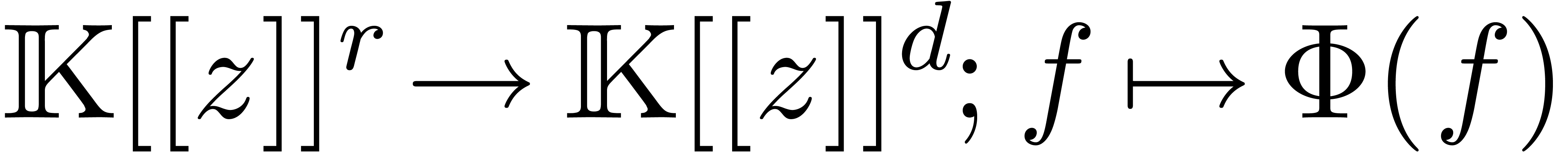

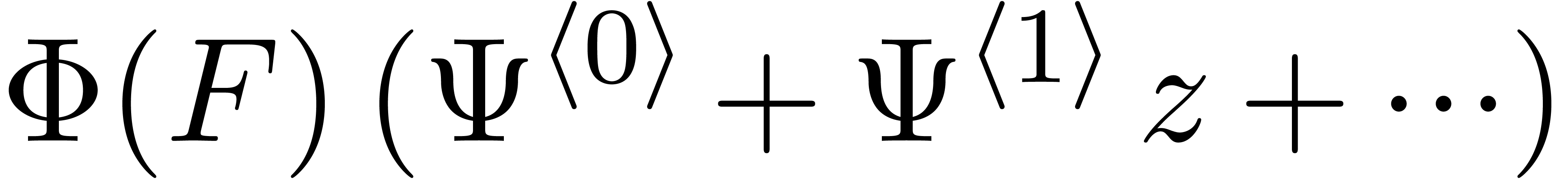

Clearly, any dag  can be considered as a function

can be considered as a function

. Given a small symbolic

perturbation

. Given a small symbolic

perturbation  , we may expand

, we may expand

as a Taylor series in

as a Taylor series in

and truncation at order  yields

yields

We claim that  can be regarded as a dag in

can be regarded as a dag in  . For instance, if

. For instance, if

then

In general, the claim is easily shown by induction over  .

.

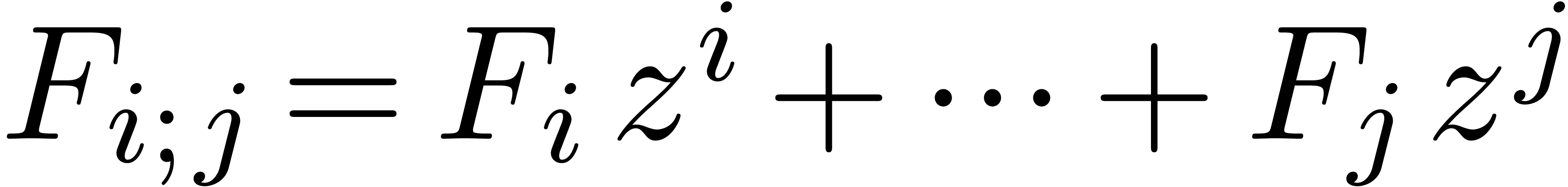

We claim that any dag  can be regarded as a

series in

can be regarded as a

series in

such that each coefficient  is a dag over

is a dag over

Indeed, by induction over the size of  ,

we first define the valuation

,

we first define the valuation  of

of  by

by

We next define the coefficients  by another

induction over the size of

by another

induction over the size of  .

If

.

If  , then we take

, then we take  . Otherwise, we take

. Otherwise, we take

As a result of the claim, we emphasize that  only

depends on the coefficients

only

depends on the coefficients  in

in  of

of  .

.

Remark  can be replaced by any algebraically equivalent

formula, as long as

can be replaced by any algebraically equivalent

formula, as long as  only depends on

only depends on  and

and  . Assuming

the concept of relaxed power series, to be introduced below, this allows

us to compute

. Assuming

the concept of relaxed power series, to be introduced below, this allows

us to compute  using the formula

using the formula

where we may use a relaxed algorithm for the multiplication  . From now on, we will assume that all products

. From now on, we will assume that all products

are expanded in this way.

are expanded in this way.

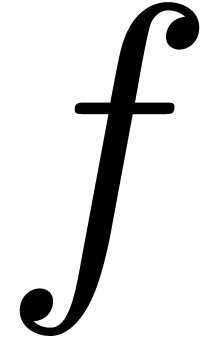

Let us briefly recall the technique of relaxed power series

computations, which is explained in more detail in [Hoe02].

In this computational model, a power series  is

regarded as a stream of coefficients

is

regarded as a stream of coefficients  .

When performing an operation

.

When performing an operation  on power series it

is required that the coefficient

on power series it

is required that the coefficient  of the result

is output as soon as sufficiently many coefficients of the inputs are

known, so that the computation of

of the result

is output as soon as sufficiently many coefficients of the inputs are

known, so that the computation of  does not

depend on the further coefficients. For instance, in the case of a

multiplication

does not

depend on the further coefficients. For instance, in the case of a

multiplication  , we require

that

, we require

that  is output as soon as

is output as soon as  and

and  are known. In particular, we may use the

naive formula

are known. In particular, we may use the

naive formula  for the computation of

for the computation of  .

.

The additional constraint on the time when coefficients should be output

admits the important advantage that the inputs may depend on the output,

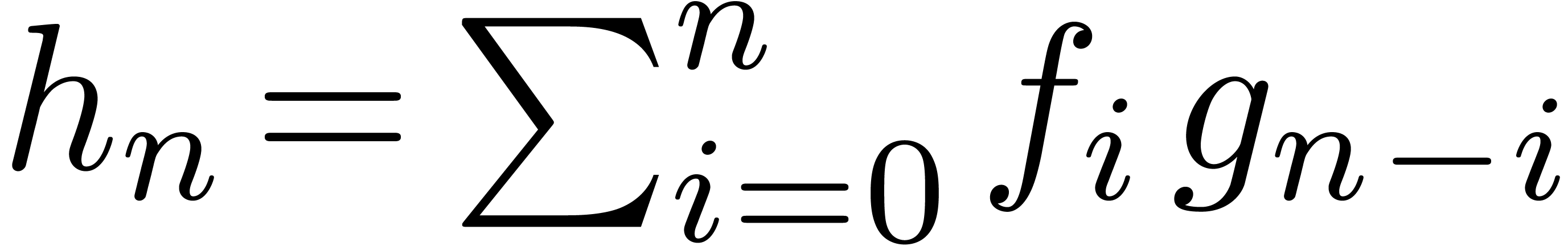

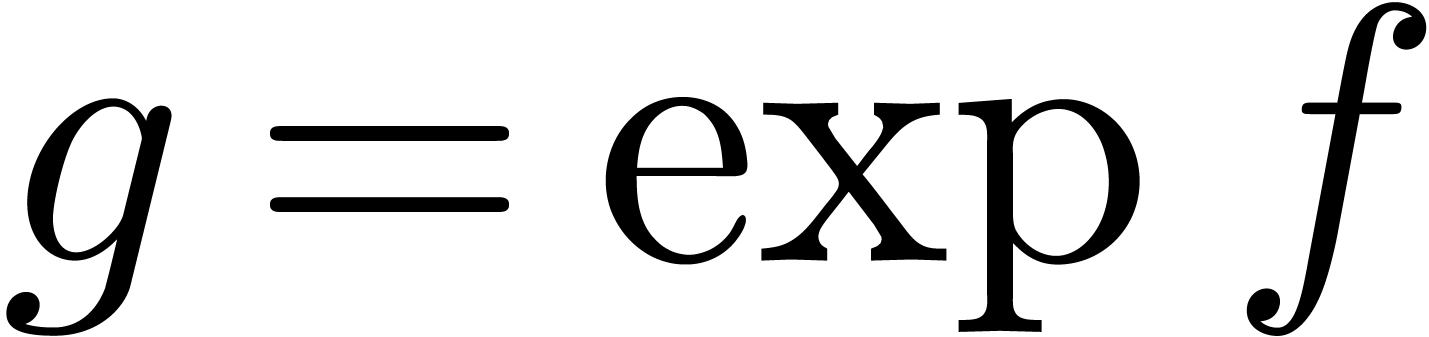

provided that we add a small delay. For instance, the exponential  of a power series

of a power series  may be

computed in a relaxed way using the formula

may be

computed in a relaxed way using the formula

Indeed, when using the naive formula for products, the coefficient  is given by

is given by

and the right-hand side only depends on the previously computed

coefficients  .

.

The main drawback of the relaxed approach is that we cannot directly use

fast algorithms on polynomials for computations with power series. For

instance, assuming that  has sufficiently many

has sufficiently many

-th roots of unity and that

field operations in

-th roots of unity and that

field operations in  can be done in time

can be done in time  , two polynomials of degrees

, two polynomials of degrees  can be multiplied in time

can be multiplied in time  , using FFT multiplication [CT65].

Given the truncations

, using FFT multiplication [CT65].

Given the truncations  and

and  at order

at order  of power series

of power series  , we may thus compute the truncated product

, we may thus compute the truncated product  in time

in time  as well. This is much

faster than the naive

as well. This is much

faster than the naive  relaxed multiplication

algorithm for the computation of

relaxed multiplication

algorithm for the computation of  .

However, the formula for

.

However, the formula for  when using FFT

multiplication depends on all input coefficients

when using FFT

multiplication depends on all input coefficients  and

and  , so the fast algorithm

is not relaxed. Fortunately, efficient relaxed multiplication algorithms

do exist:

, so the fast algorithm

is not relaxed. Fortunately, efficient relaxed multiplication algorithms

do exist:

be the

time complexity for the multiplication of polynomials of degrees

be the

time complexity for the multiplication of polynomials of degrees  in

in  .

Then there exists a relaxed multiplication algorithm for series in

.

Then there exists a relaxed multiplication algorithm for series in

of time complexity

of time complexity  .

.

.

.

In what follows, we will denote by  the

complexity of relaxed multiplication at order

the

complexity of relaxed multiplication at order  . Let us now consider a general equation of the form

. Let us now consider a general equation of the form

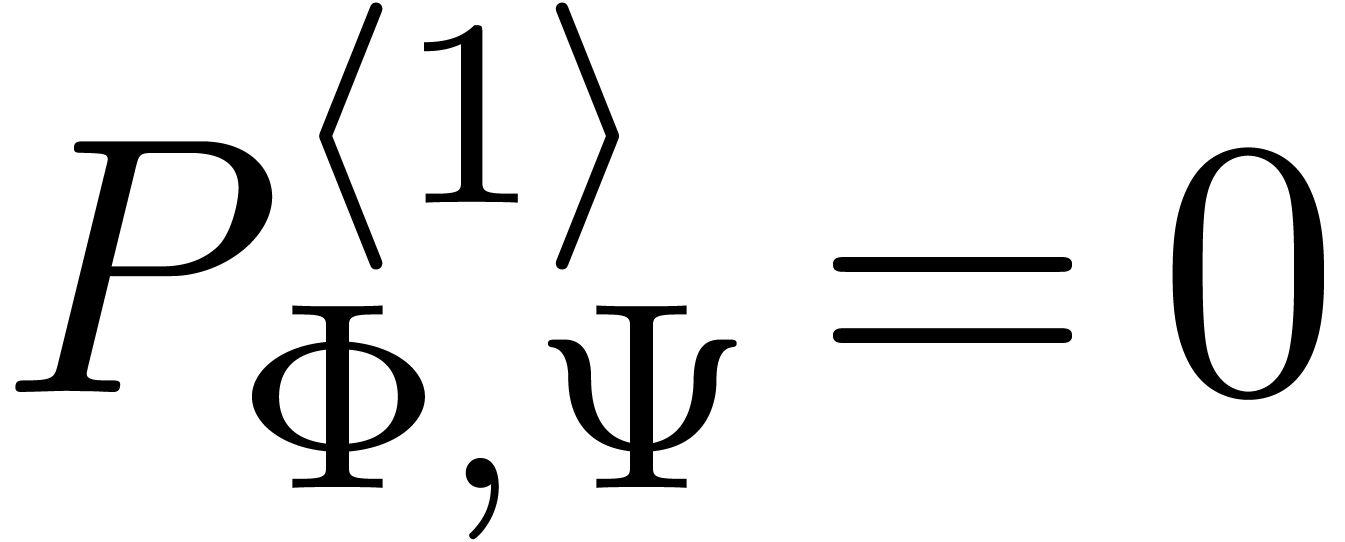

where  an

an  -dimensional

dag. We say that (8) is a recursive

equation, if each coefficient

-dimensional

dag. We say that (8) is a recursive

equation, if each coefficient  only

depends on earlier coefficients

only

depends on earlier coefficients  of

of  . That is,

. That is,  for all

for all

. In order to solve (8)

at order

. In order to solve (8)

at order  , we then need to

perform

, we then need to

perform  relaxed multiplications at order

relaxed multiplications at order  and

and  coefficientwise

operations

coefficientwise

operations  or

or  at order

at order

. This yields the following

complexity bound:

. This yields the following

complexity bound:

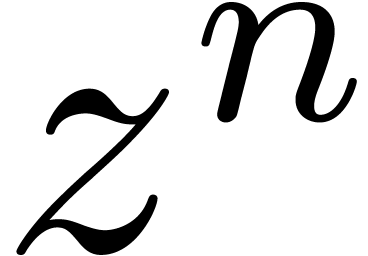

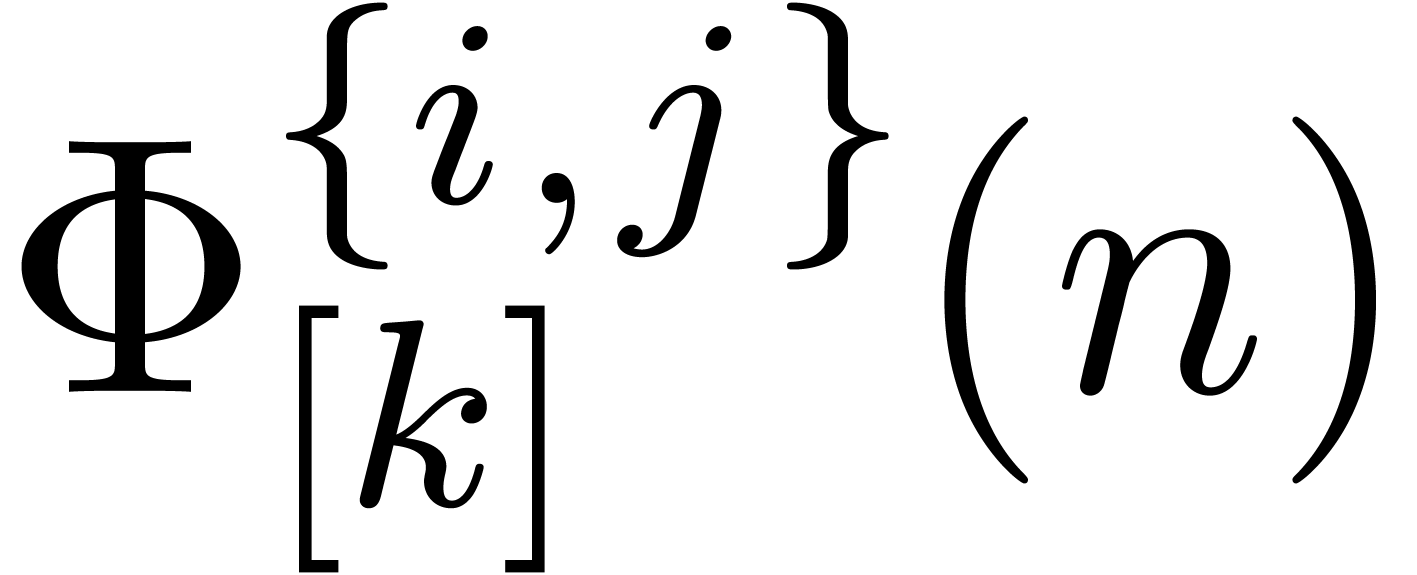

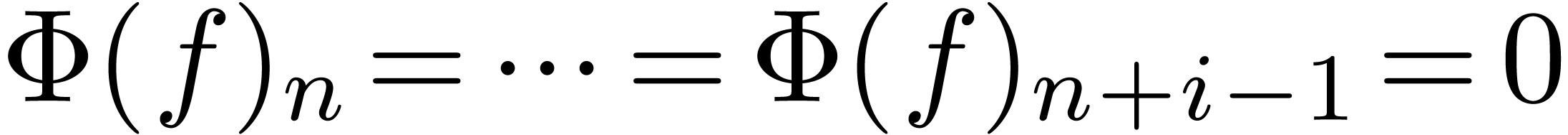

When solving an implicit equation in  using a

relaxed algorithm, the coefficients

using a

relaxed algorithm, the coefficients  are computed

only gradually. During the resolution process, it might happen that we

wish to evaluate dags at higher orders than the number of known

coefficients of

are computed

only gradually. During the resolution process, it might happen that we

wish to evaluate dags at higher orders than the number of known

coefficients of  . That is,

given

. That is,

given  and

and  ,

we might need

,

we might need  , even though

only

, even though

only  are known. In that case, we have a problem,

but we may still do the best we can, and compute

are known. In that case, we have a problem,

but we may still do the best we can, and compute  instead of

instead of  .

.

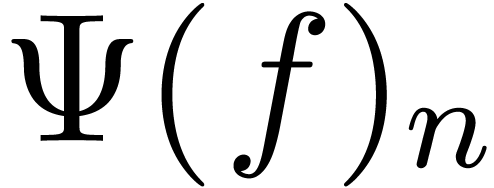

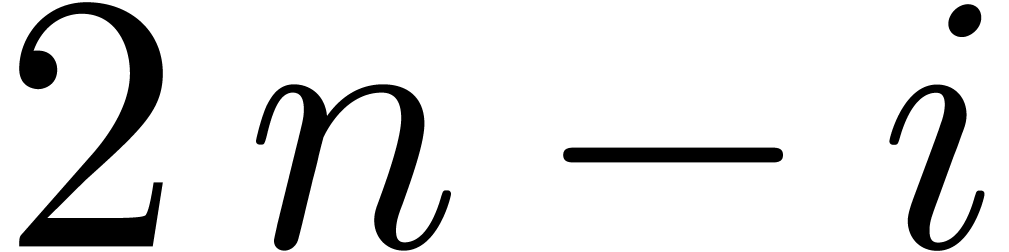

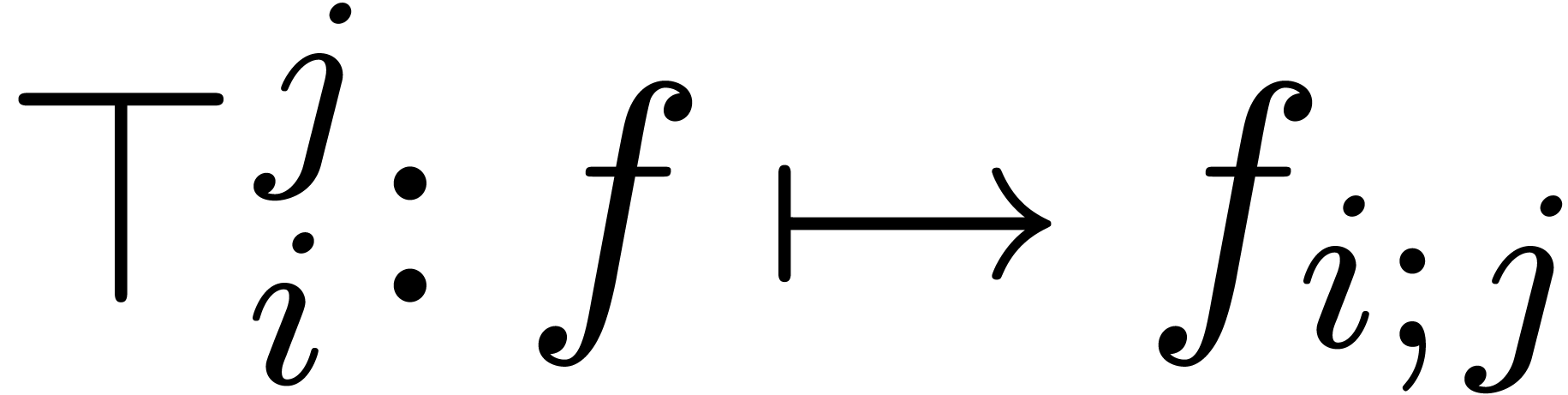

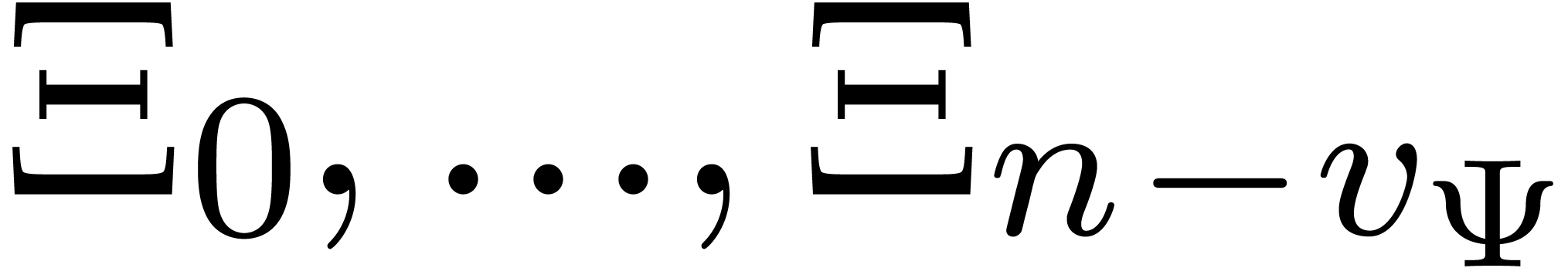

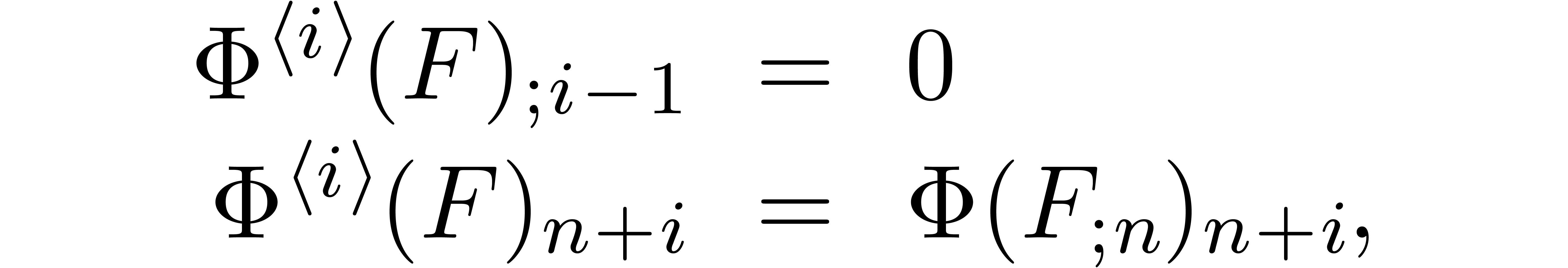

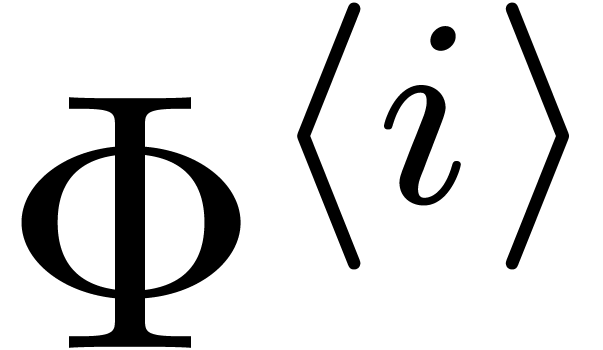

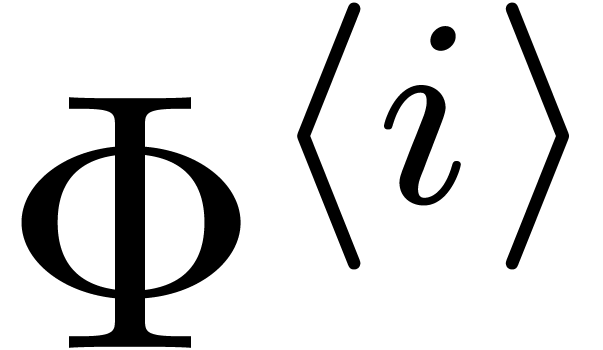

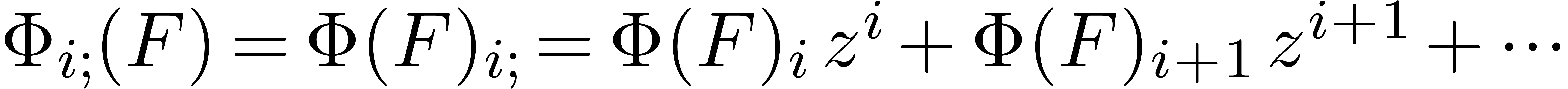

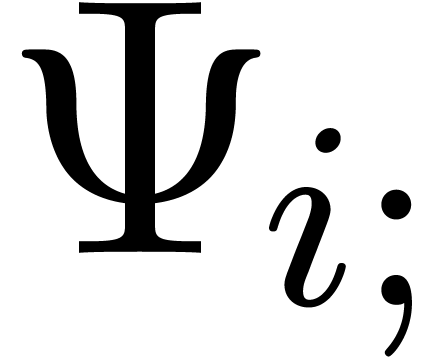

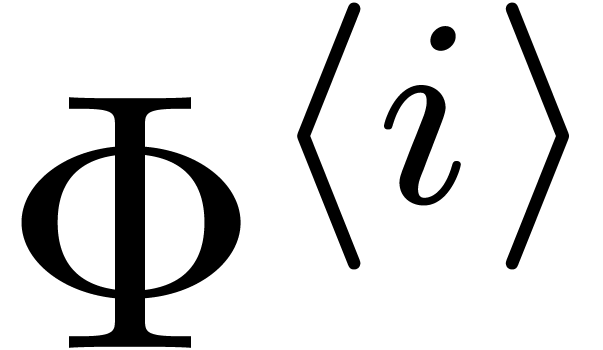

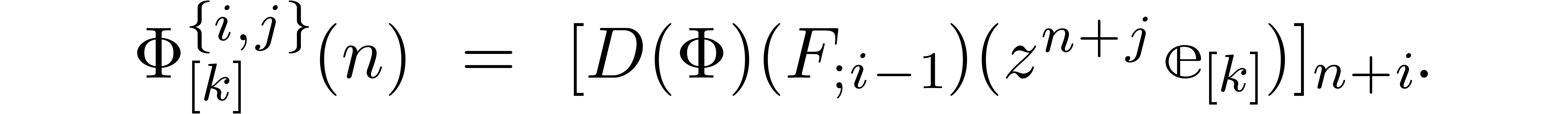

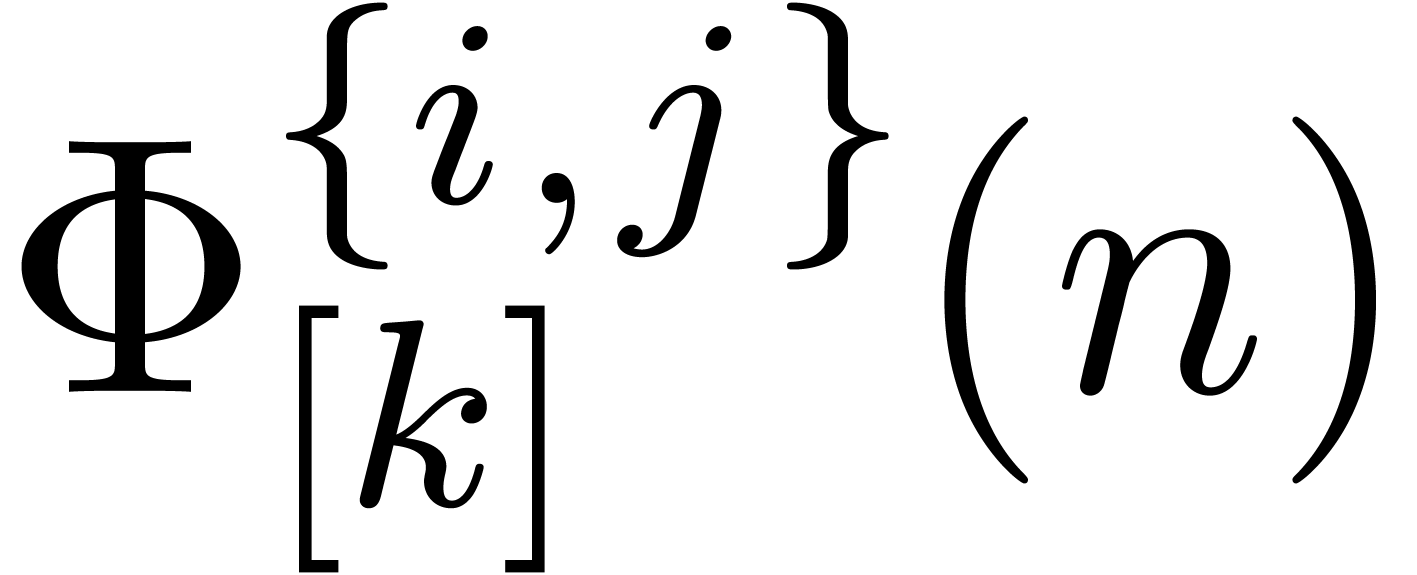

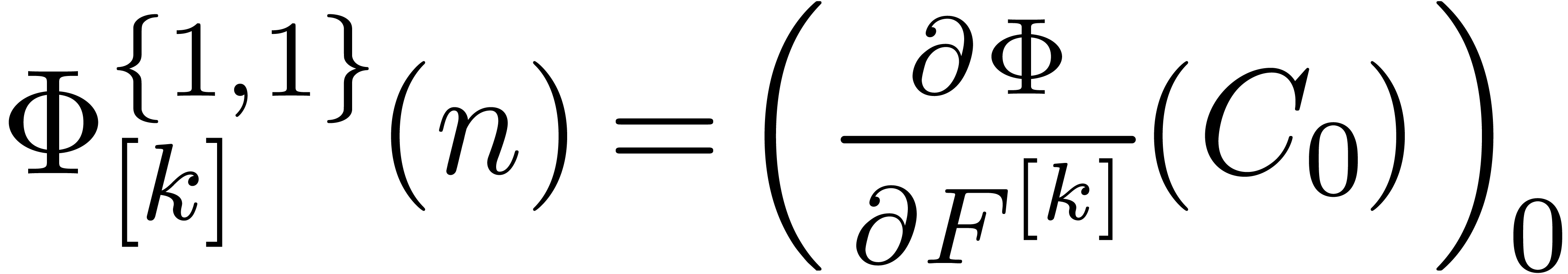

This motivates the introduction of the  -th

order anticipator

-th

order anticipator  of

of  by

by

where we recall that  and

and  . On the one hand, we will show in this section that

. On the one hand, we will show in this section that

can be computed simultaneously by a dag

can be computed simultaneously by a dag  of multiplicative size

of multiplicative size  and

total size

and

total size  . On the other

hand, we will show that

. On the other

hand, we will show that  is essentially a linear

perturbation of

is essentially a linear

perturbation of  , that can be

computed explicitly.

, that can be

computed explicitly.

as

a dag

as

a dag

Let us show how to compute a dag for  .

The following rules are straightforward:

.

The following rules are straightforward:

As to multiplication, for  ,

we have

,

we have

where  stands for the operator with

stands for the operator with  and similarly for

and similarly for  .

Consequently,

.

Consequently,

for some polynomial  with

with  and

and  (in particular,

(in particular,  ). Notice also that

). Notice also that

Now assume that  and

and  are

known. Then we may simultaneously compute

are

known. Then we may simultaneously compute  in the

following way:

in the

following way:

This computation involves one series product and  additions and scalar multiplications. For large

additions and scalar multiplications. For large  , we may further reduce the cost to

, we may further reduce the cost to  since the computation of

since the computation of  really comes down to

the computation of two truncated power series products

really comes down to

the computation of two truncated power series products  and

and  at order

at order  .

In summary, we obtain

.

In summary, we obtain

as a perturbation of

as a perturbation of

Since  only depends on

only depends on  , we notice that

, we notice that

In general, for  and

and  , we may expand

, we may expand

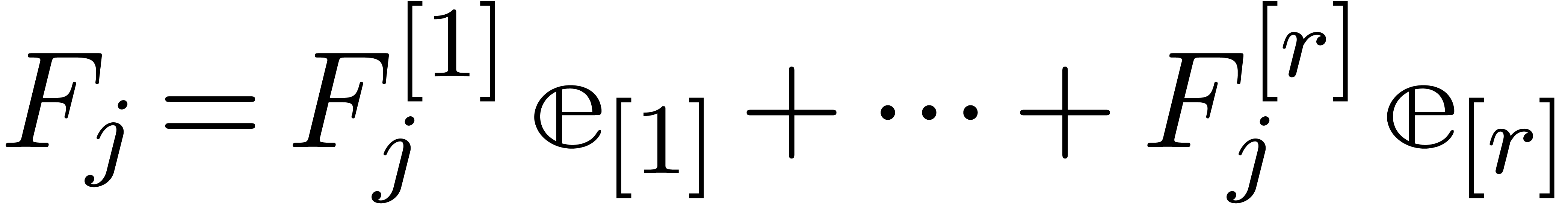

Let  denote the

denote the  -th

basis element of

-th

basis element of  , so that

, so that

for all

for all  .

When considering

.

When considering  as a column vector, it follows

by linearity that

as a column vector, it follows

by linearity that

where  is a row matrix whose

is a row matrix whose  -th entry is given by

-th entry is given by

Notice that  depends on

depends on  , but

, but  does not. Let us

investigate the functions

does not. Let us

investigate the functions  more closely for some

important examples.

more closely for some

important examples.

Example  is algebraic, then we have

is algebraic, then we have

whence

In particular,  is actually constant.

is actually constant.

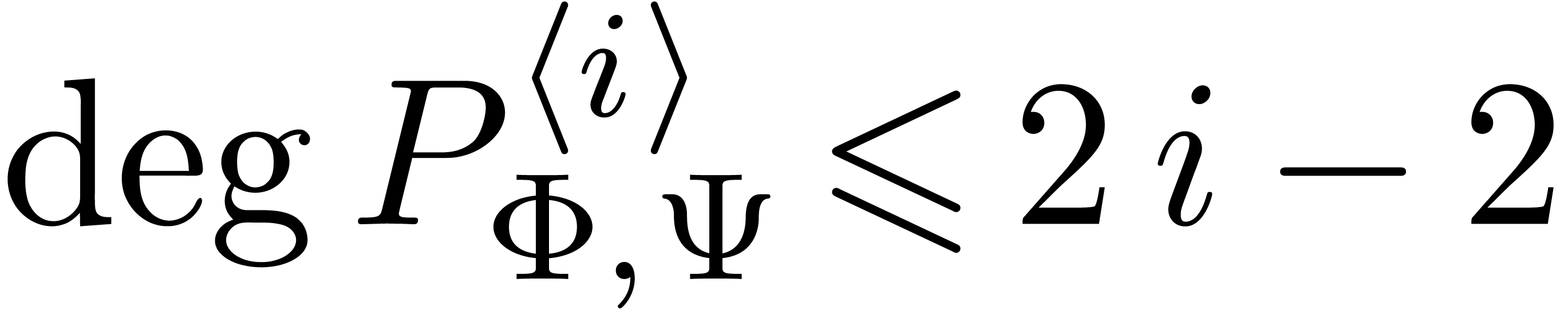

Example  is differential, of differential order

is differential, of differential order  (this means that

(this means that  is the maximal

number of

is the maximal

number of  -nodes on a path

from the root of

-nodes on a path

from the root of  to a leaf), then, considering

to a leaf), then, considering

as a differential polynomial in

as a differential polynomial in  , we have

, we have

whence

|

is a polynomial of degree at most  .

.

Example  is algebraic in

is algebraic in  ,

where

,

where  , then

, then

whence

Consequently, there exists a polynomial  of

degree

of

degree  with

with

for all  .

.

Example  , it

can be checked by induction over the size of

, it

can be checked by induction over the size of  that

that  is still a rational function in

is still a rational function in  , that remains bounded for

, that remains bounded for  , and whose denominator has integer

coefficients. Similarly, for any dag

, and whose denominator has integer

coefficients. Similarly, for any dag  where

where  , the expression

, the expression  is a rational function in

is a rational function in  ,

whose denominator has integer coefficients.

,

whose denominator has integer coefficients.

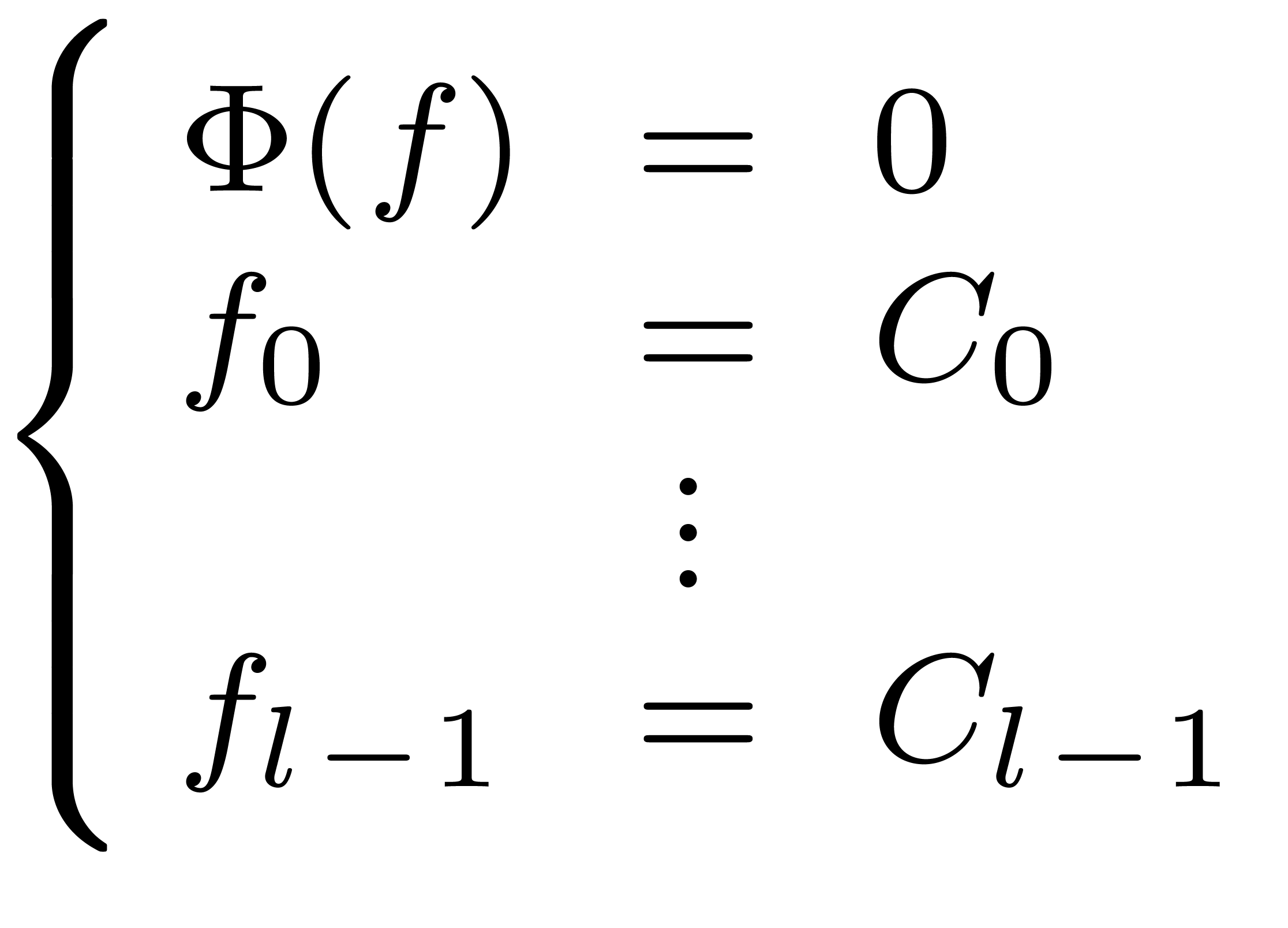

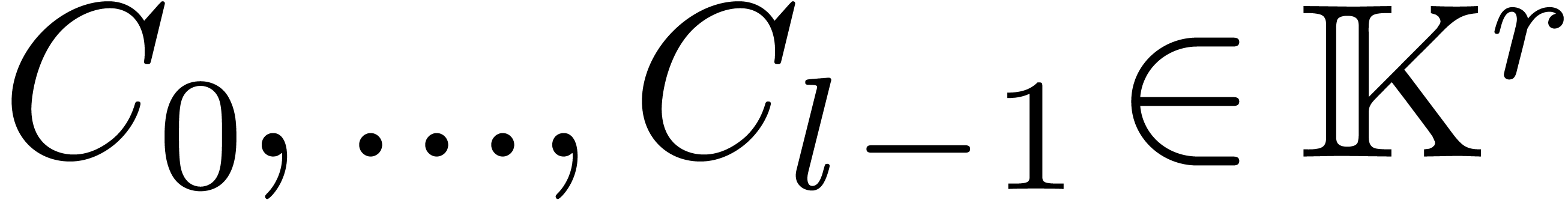

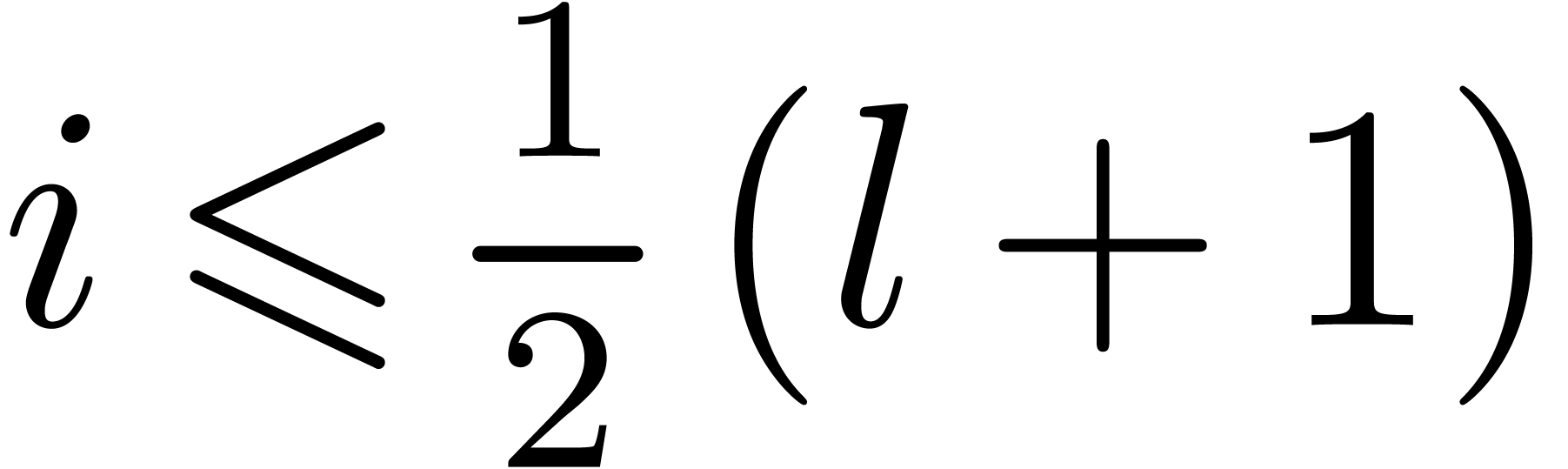

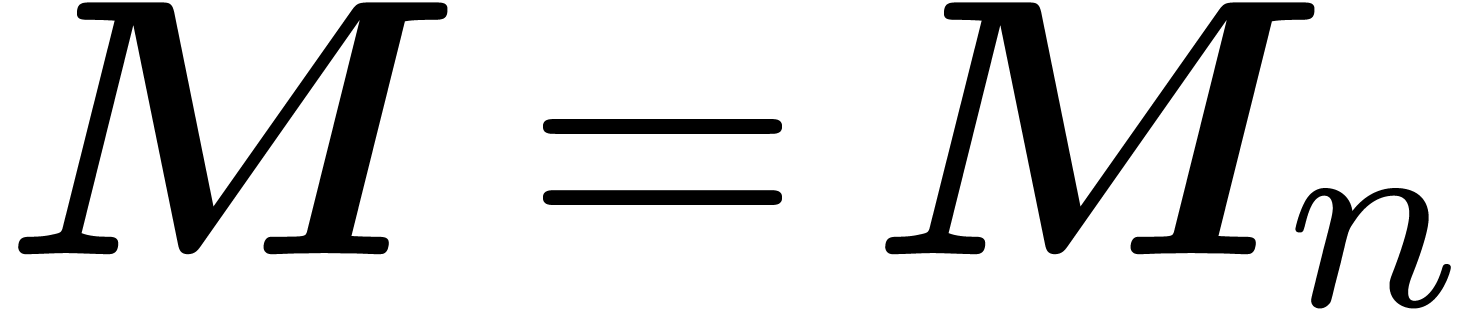

Assume now that we want to solve a system of power series equations

|

(15) |

where  is a vector of dags and

is a vector of dags and  a finite number of initial conditions. For definiteness, it is also

important that (15) admits a unique solution

a finite number of initial conditions. For definiteness, it is also

important that (15) admits a unique solution  . This will be guaranteed by an even stronger

technical assumption to be detailed below. Roughly speaking, for a given

index

. This will be guaranteed by an even stronger

technical assumption to be detailed below. Roughly speaking, for a given

index  , we

will assume that each coefficient

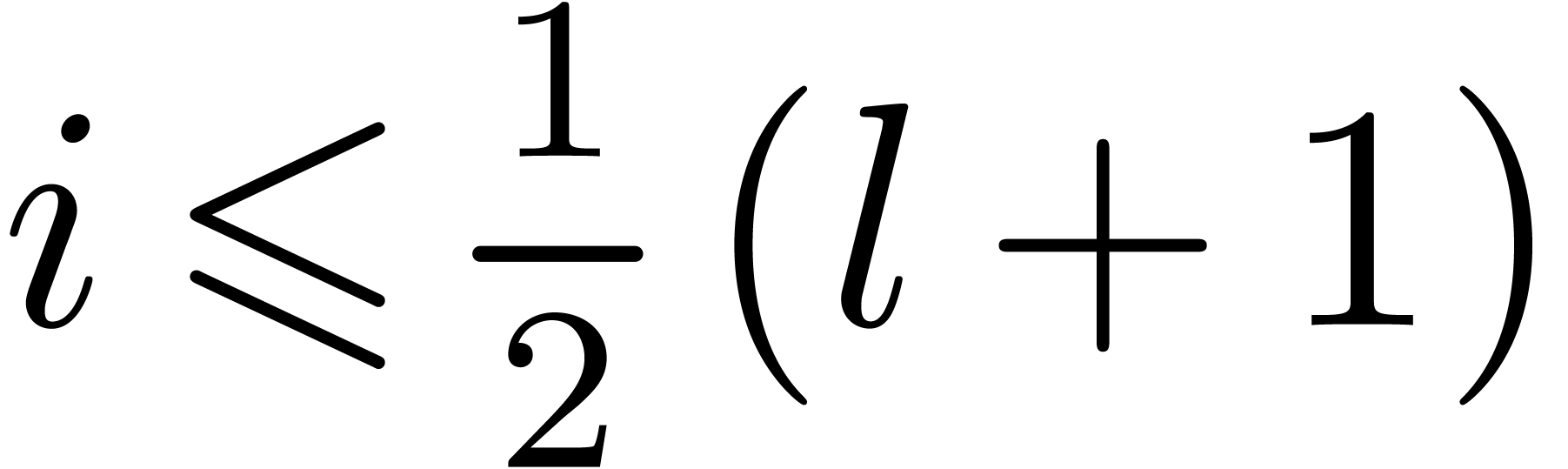

, we

will assume that each coefficient  with

with  can be determined as a function of the previous

coefficients

can be determined as a function of the previous

coefficients  using only the equations

using only the equations  . In fact, we will only use the

equations

. In fact, we will only use the

equations  , which requires us

to assume that

, which requires us

to assume that  in order to determine

in order to determine  via (11); this explains why we need

via (11); this explains why we need

initial conditions.

initial conditions.

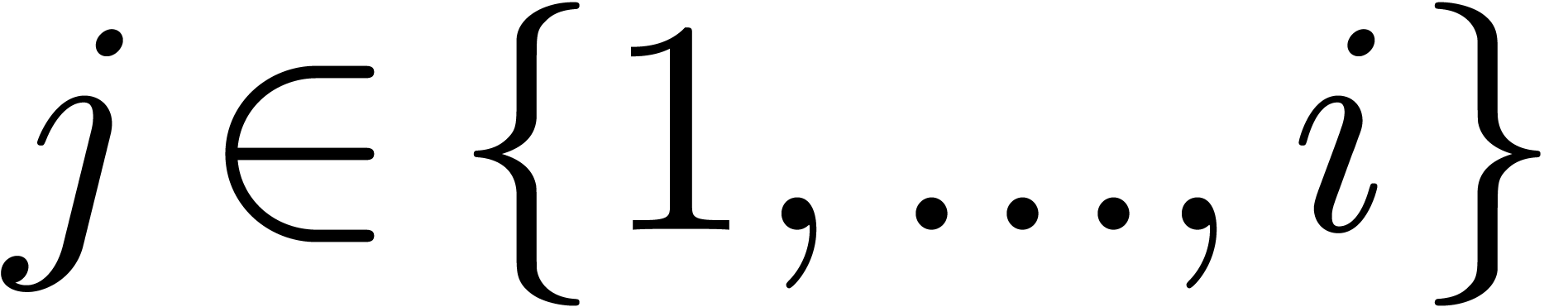

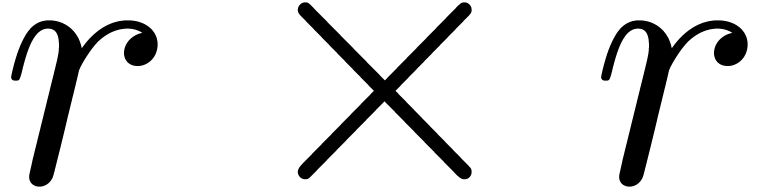

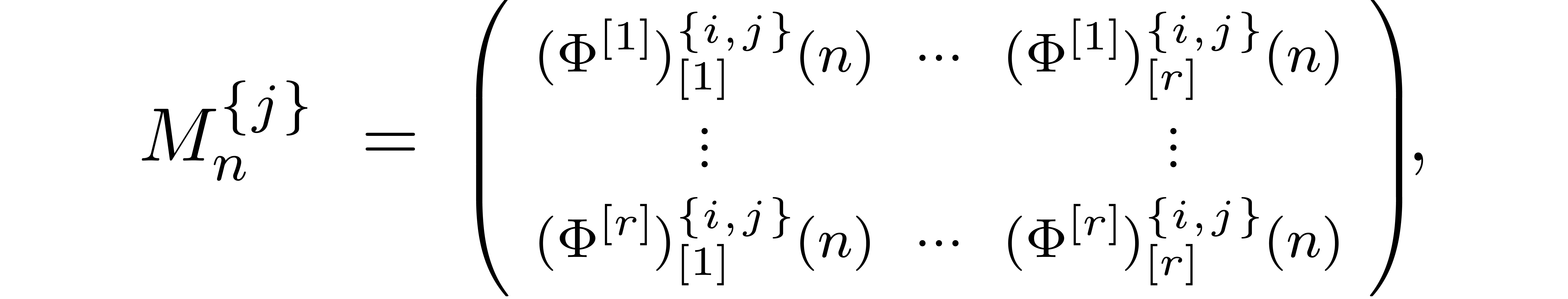

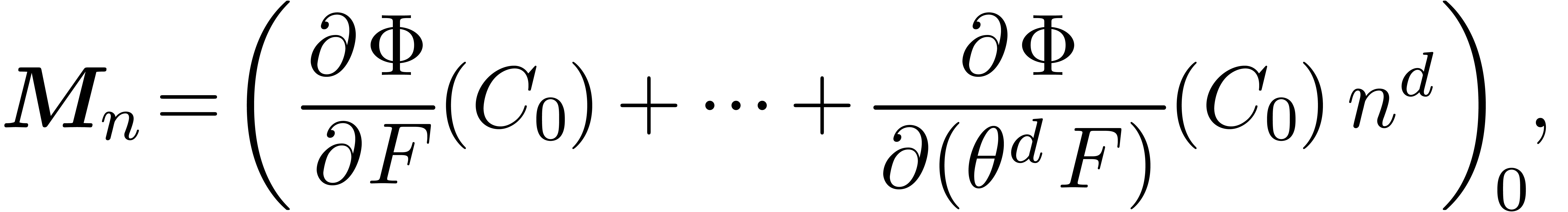

Let  . For each

. For each  and

and  , we

introduce the

, we

introduce the  matrix

matrix

the  block matrix

block matrix

the  ,

,  and

and  block column vectors

block column vectors

and the  column vector

column vector

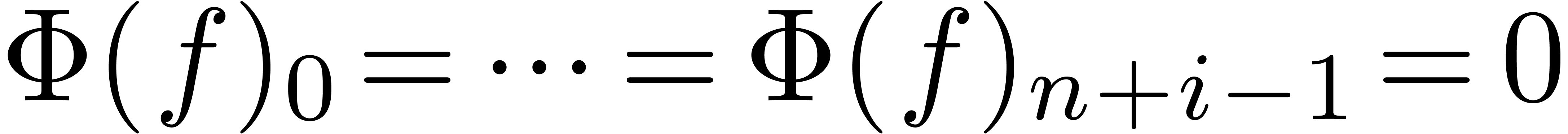

In view of (11), the equations  then

translate into

then

translate into

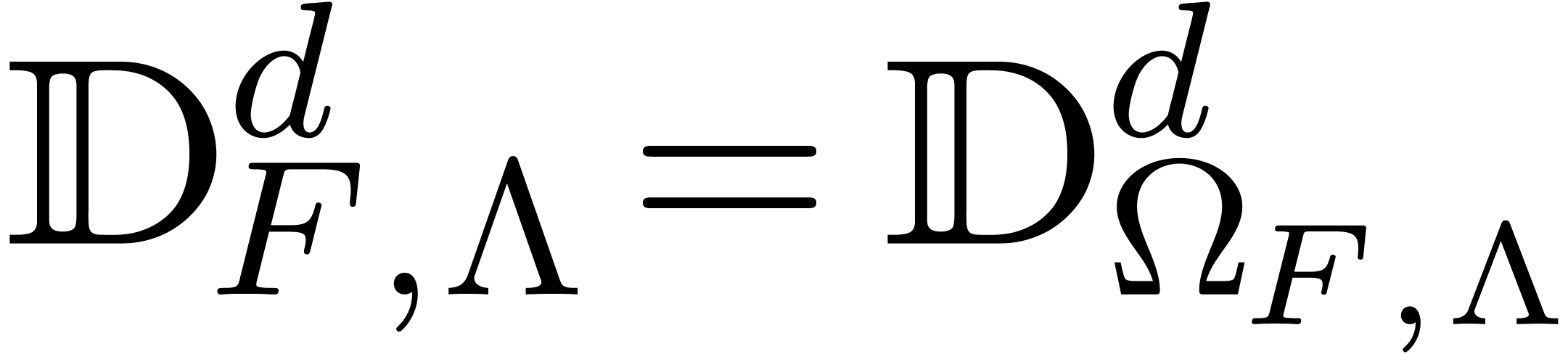

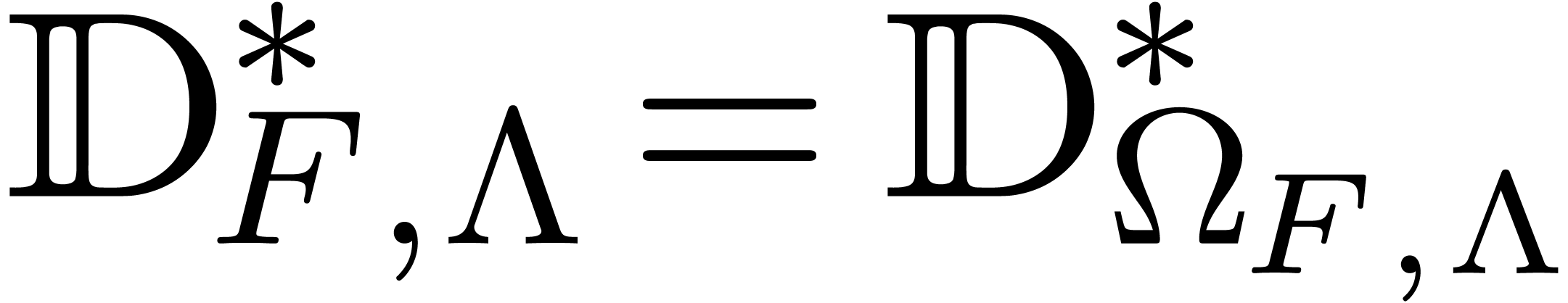

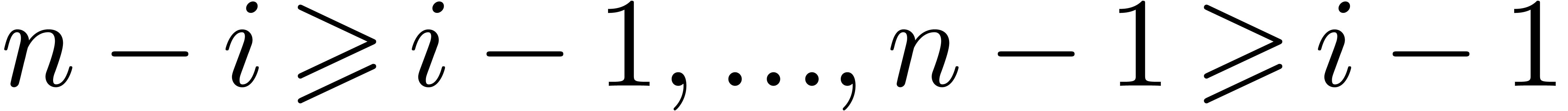

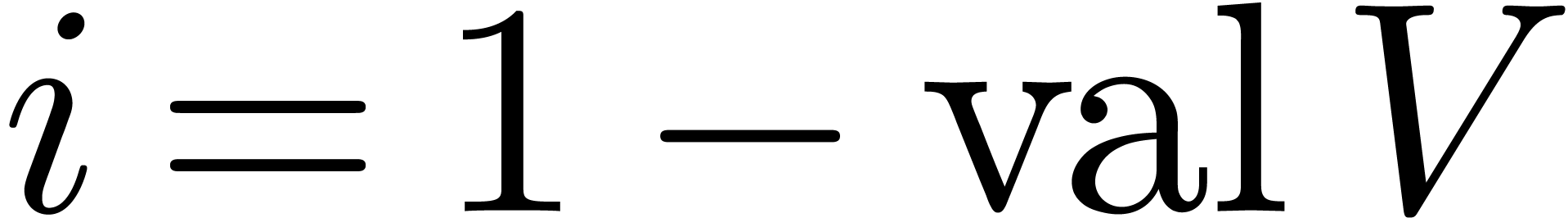

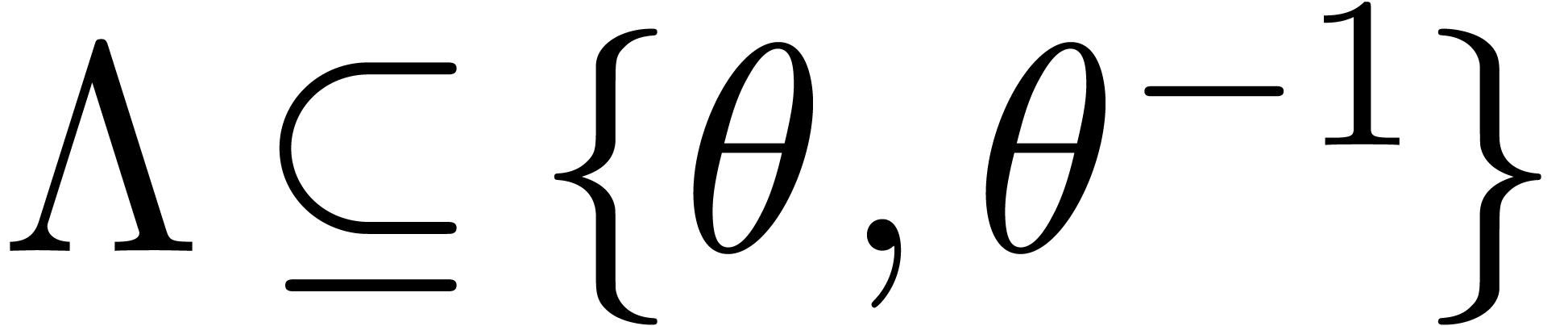

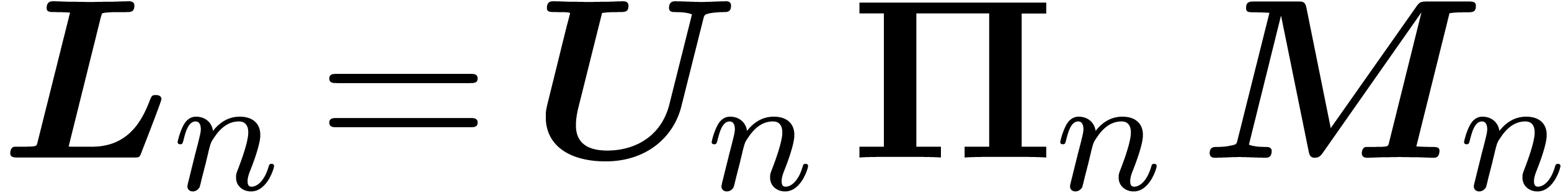

We will say that (15) is  -predictive or predictive

of index

-predictive or predictive

of index  , if, for all

, if, for all  , there exist

, there exist  and

and  matrices

matrices  and

and  , such that

, such that

In that case, we have

whence

provides us with an explicit formula for  .

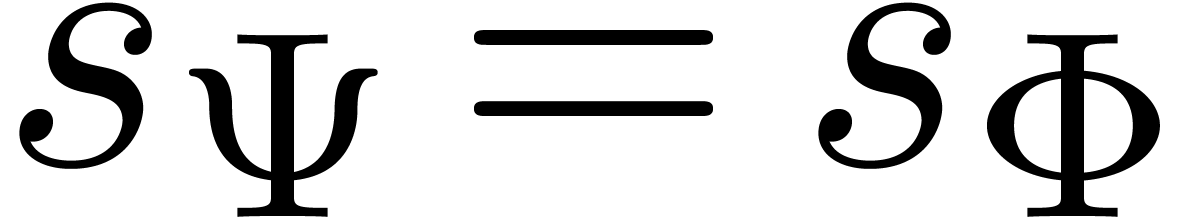

Now let

.

Now let  and

and  be the

operators on vectors of power series

be the

operators on vectors of power series  with the

property that

with the

property that  and

and  .

Then we may rewrite (17) into

.

Then we may rewrite (17) into

for a suitable vector  of polynomials in

of polynomials in  of degree

of degree  .

This is the desired recursive equation for

.

This is the desired recursive equation for  .

.

Example  is algebraic, then we recall from Example 6 that

is algebraic, then we recall from Example 6 that

for all  . If

. If  , then it follows that the matrix

, then it follows that the matrix

does not depend on  and that it simply equals the

evaluation of the Jacobian matrix of

and that it simply equals the

evaluation of the Jacobian matrix of  with

respect to

with

respect to  at the “initial

condition”

at the “initial

condition”  . In

particular, the equation (15) is

. In

particular, the equation (15) is  -predictive if and only if this matrix is

invertible.

-predictive if and only if this matrix is

invertible.

Example  is of differential

order

is of differential

order  . If

. If  , then it follows in a similar way as above

that

, then it follows in a similar way as above

that

so we may regard  as the evaluation at

as the evaluation at  of a matrix

of a matrix  in

in  of degree

of degree  in

in  .

In particular, the equation (15) is

.

In particular, the equation (15) is  -predictive for a sufficiently large value of

-predictive for a sufficiently large value of  if and only if

if and only if  admits an

inverse in

admits an

inverse in  . More precisely,

if

. More precisely,

if  is invertible, then we need

is invertible, then we need  to be larger than each of the poles of

to be larger than each of the poles of  (which

are finite in number).

(which

are finite in number).

Example  is algebraic and

is algebraic and  is such that the

Jacobian matrix

is such that the

Jacobian matrix  evaluated at

evaluated at  admits an inverse

admits an inverse  with Laurent series

coefficients, then it can be shown that the system

with Laurent series

coefficients, then it can be shown that the system  is

is  -predictive for

-predictive for  , whenever

, whenever  . Notice that Example 10 is a special

case of this situation when

. Notice that Example 10 is a special

case of this situation when  and

and  . The observation actually generalizes to more

general dags

. The observation actually generalizes to more

general dags  , for a suitable

interpretation of the inverse

, for a suitable

interpretation of the inverse  as a matrix of

operators acting on

as a matrix of

operators acting on  and assuming that

and assuming that  is taken sufficiently large. This also means that the

method of this paper can be applied to the same class of equations as a

suitable generalization of Newton's method. However, the details behind

these claims are beyond the scope of this paper.

is taken sufficiently large. This also means that the

method of this paper can be applied to the same class of equations as a

suitable generalization of Newton's method. However, the details behind

these claims are beyond the scope of this paper.

Remark

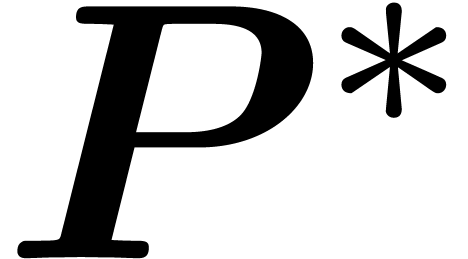

Let us first consider the case of an algebraic dag  . In that case, the matrix

. In that case, the matrix  does not depend on

does not depend on  and its coefficients are

explicitly given by (12). We may now determine

and its coefficients are

explicitly given by (12). We may now determine  and

and  matrices

matrices  and

and  with

with

using Gaussian elimination in order, and whenever such matrices exist.

The equation  -predictive, if and only if this is indeed

possible.

-predictive, if and only if this is indeed

possible.

-predictive

equation

-predictive

equation  is algebraic. Then we may compute

is algebraic. Then we may compute  terms of its unique solution

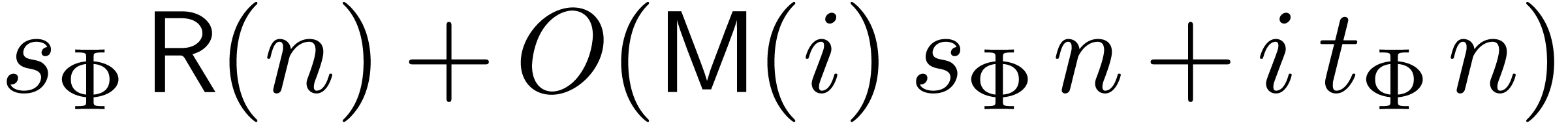

terms of its unique solution  in time

in time

Proof. By what precedes, the operators  and

and  in (18) are

really the constant matrices from (19). By lemma 5,

the size of the righthand side of (18) as a dag is

therefore bounded by

in (18) are

really the constant matrices from (19). By lemma 5,

the size of the righthand side of (18) as a dag is

therefore bounded by  and its multiplicative size

is exactly

and its multiplicative size

is exactly  . The result thus

follows from proposition 4.

. The result thus

follows from proposition 4.

Assume now that  . Then we

claim that there exists an algorithms for checking

. Then we

claim that there exists an algorithms for checking  -predictivity and constructing a general

formula for the corresponding matrices

-predictivity and constructing a general

formula for the corresponding matrices  and

and  . Indeed, we recall from section 3.2 that

. Indeed, we recall from section 3.2 that  is the evaluation at

is the evaluation at  of a matrix

of a matrix  with entries in

with entries in

and denominators in

and denominators in  . We may thus use Gaussian elimination in order to

compute

. We may thus use Gaussian elimination in order to

compute  and

and  matrices

matrices

and

and  with entries in

with entries in  and

and

whenever such matrices exist. For those  that are

not positive integer roots of one of the denominators of the entries of

that are

not positive integer roots of one of the denominators of the entries of

, we now have

, we now have  and

and  . For each

of the finite number of integer roots

. For each

of the finite number of integer roots  ,

we may directly compute the matrices

,

we may directly compute the matrices  and

and  by Gaussian elimination over

by Gaussian elimination over  , whenever such matrices exist.

, whenever such matrices exist.

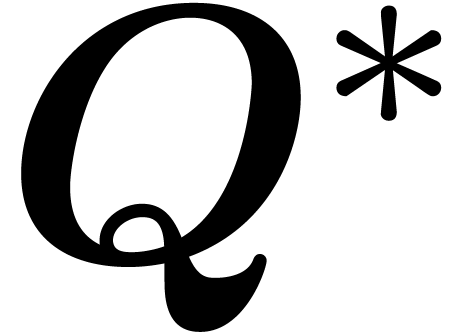

-predictive

equation

-predictive

equation  be the maximal degree of an entry of

be the maximal degree of an entry of  . Then we may compute

. Then we may compute  terms of the solution

terms of the solution  to

to

Proof. The computation of  (and the finite number of exceptional

(and the finite number of exceptional  for which

for which

is a root of one of the denominators) is a

precomputation. The determination of every next

is a root of one of the denominators) is a

precomputation. The determination of every next  can be done in time

can be done in time  , via a

diagonal translation of

, via a

diagonal translation of  and evaluation of the

and evaluation of the

rational functions that are the entries of the

bottom

rational functions that are the entries of the

bottom  submatrix. Now assume that we maintain

upper and lower triangular matrices

submatrix. Now assume that we maintain

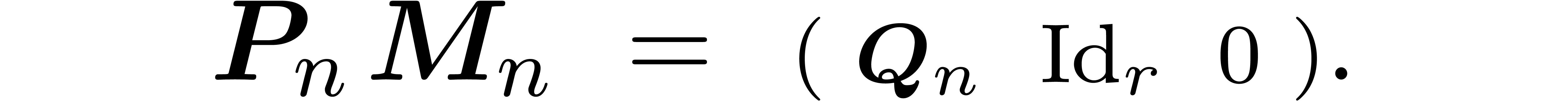

upper and lower triangular matrices  ,

,

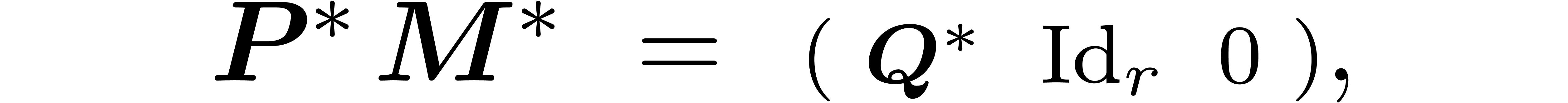

and a permutation matrix

and a permutation matrix  at each stage such that

at each stage such that  .

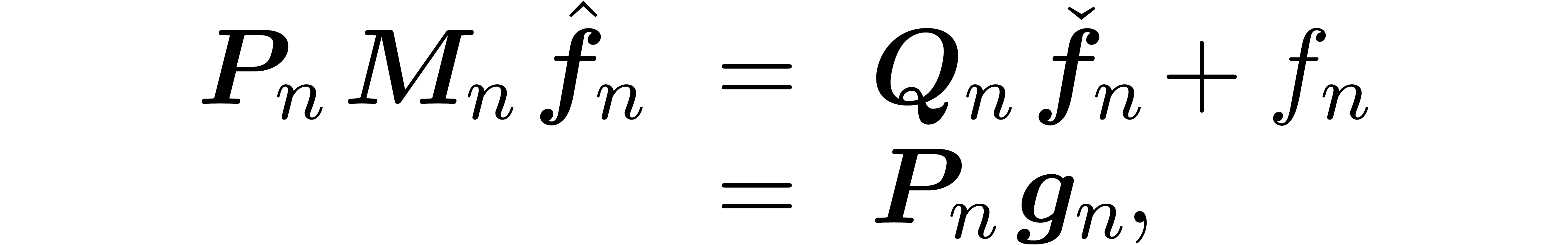

Then the determination of

.

Then the determination of  ,

,

and

and  as a function of

as a function of

and

and  can be done in time

can be done in time

using naive linear algebra. The determination of

using naive linear algebra. The determination of

and

and  from

from  ,

,  and

and  can again be done in time

can again be done in time  .

Consequently, the cost of applying the operators

.

Consequently, the cost of applying the operators  and

and  during the relaxed resolution of (18)

at order

during the relaxed resolution of (18)

at order  is bounded by

is bounded by  . The cost of the evaluation of the remaining dag is

bounded by

. The cost of the evaluation of the remaining dag is

bounded by  , as in the

algebraic case.

, as in the

algebraic case.

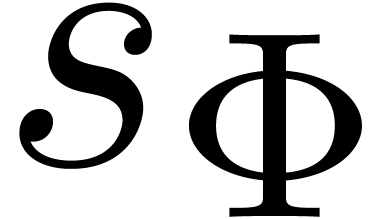

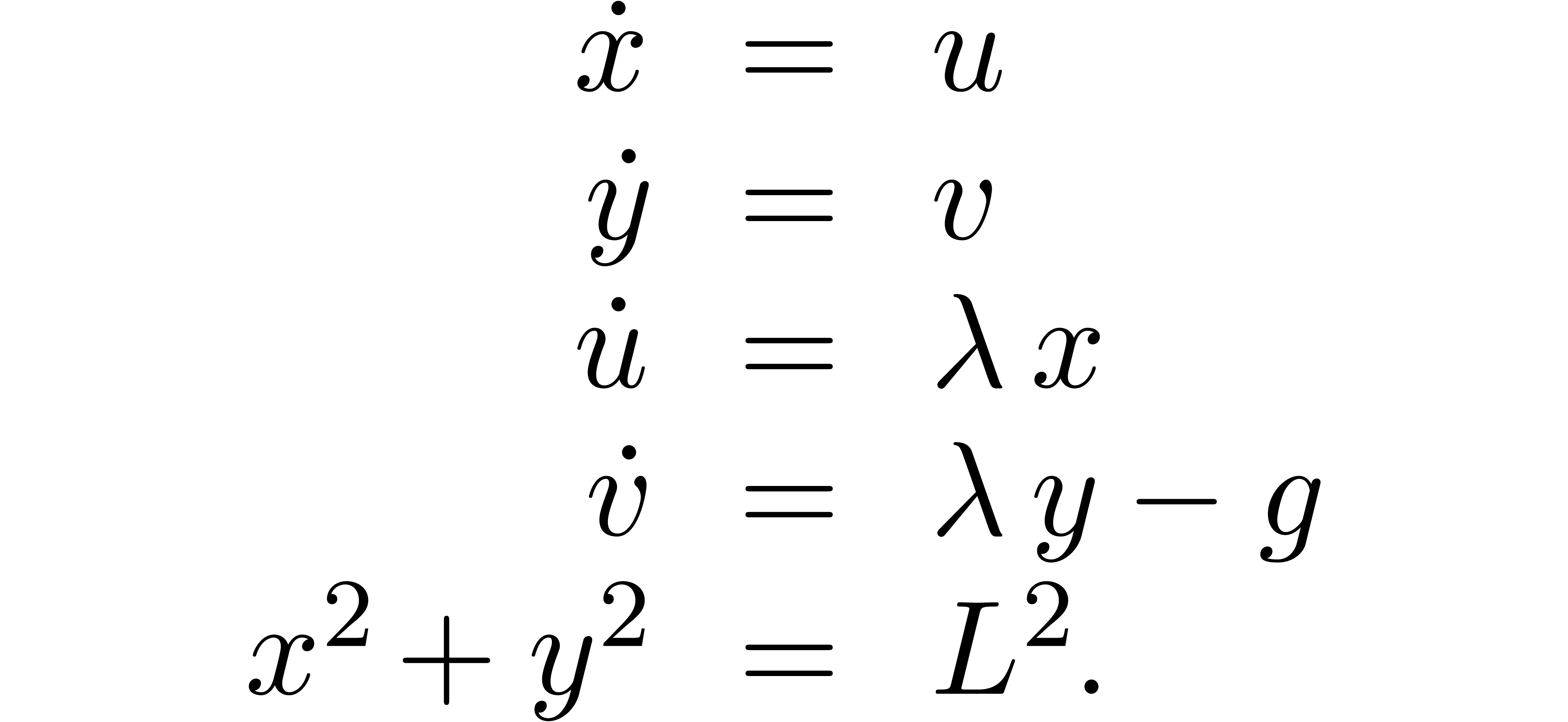

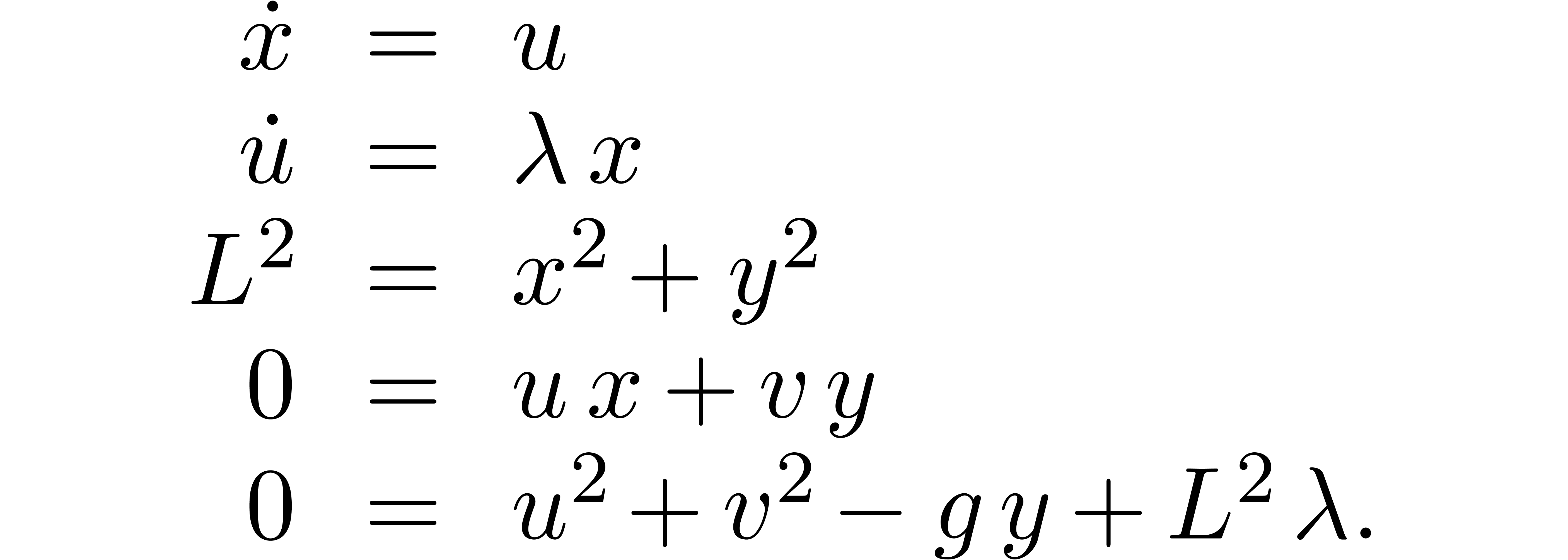

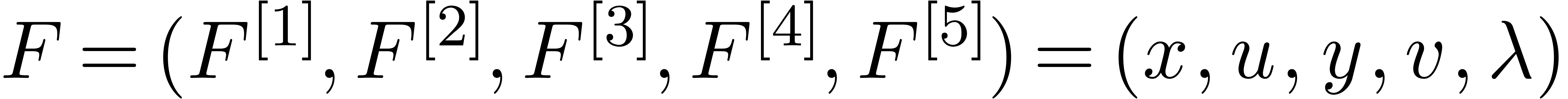

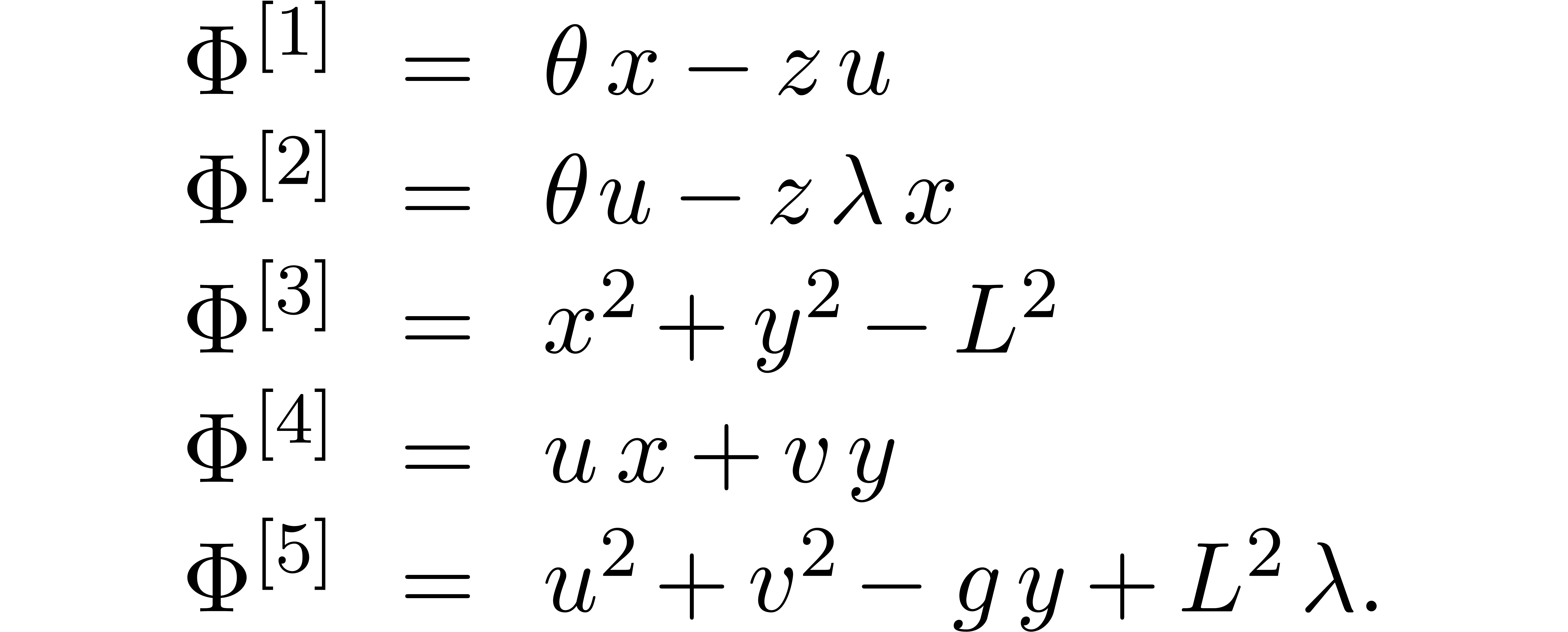

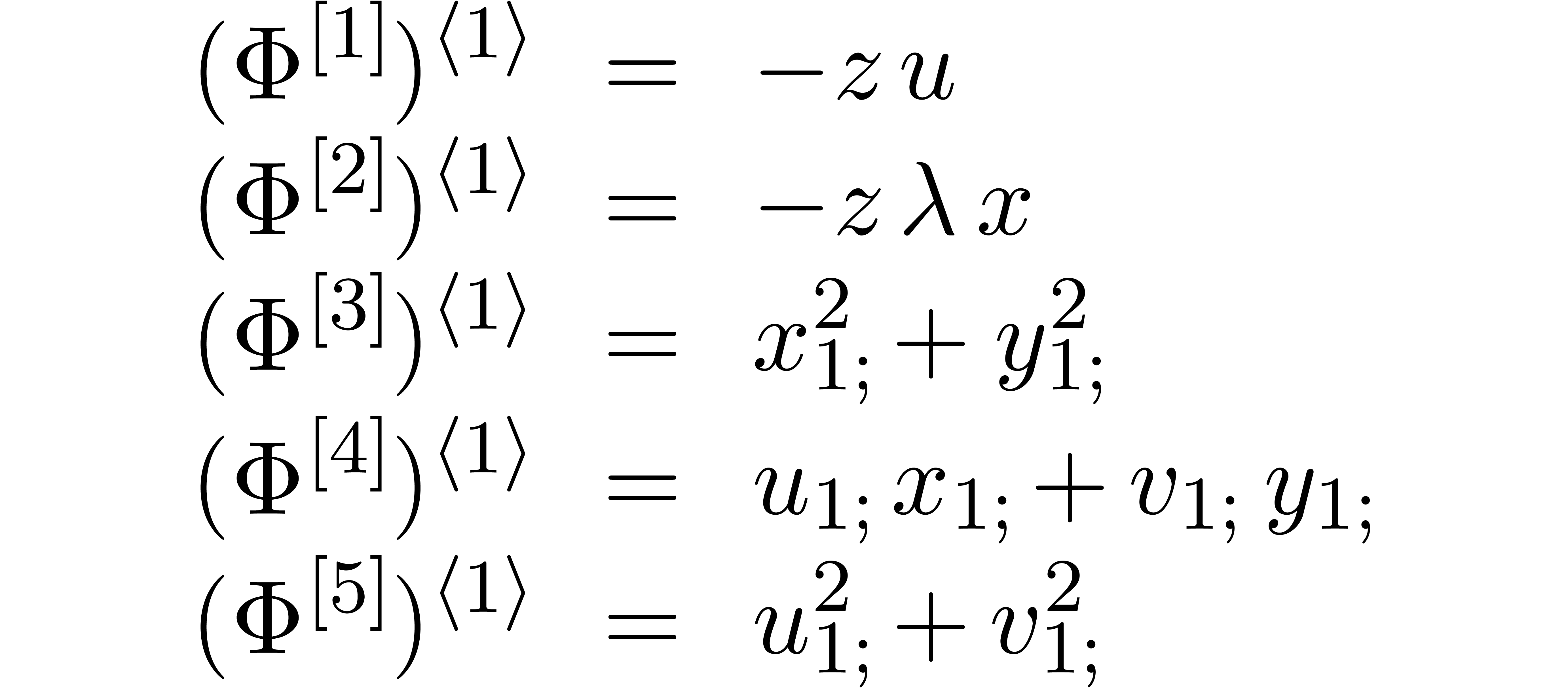

The prototype application of the techniques in this paper is the integration of differential-algebraic systems of equations. Let us consider the traditional example of a pendulum, whose equations are given by

Here

,

,

,

,

,

,

,

,

are the unknowns and

are the unknowns and

,

,

are constant parameters; see Figure

1

.

are constant parameters; see Figure

1

.

:

:

Setting  , the latter system

corresponds the following system

, the latter system

corresponds the following system  using our

notations:

using our

notations:

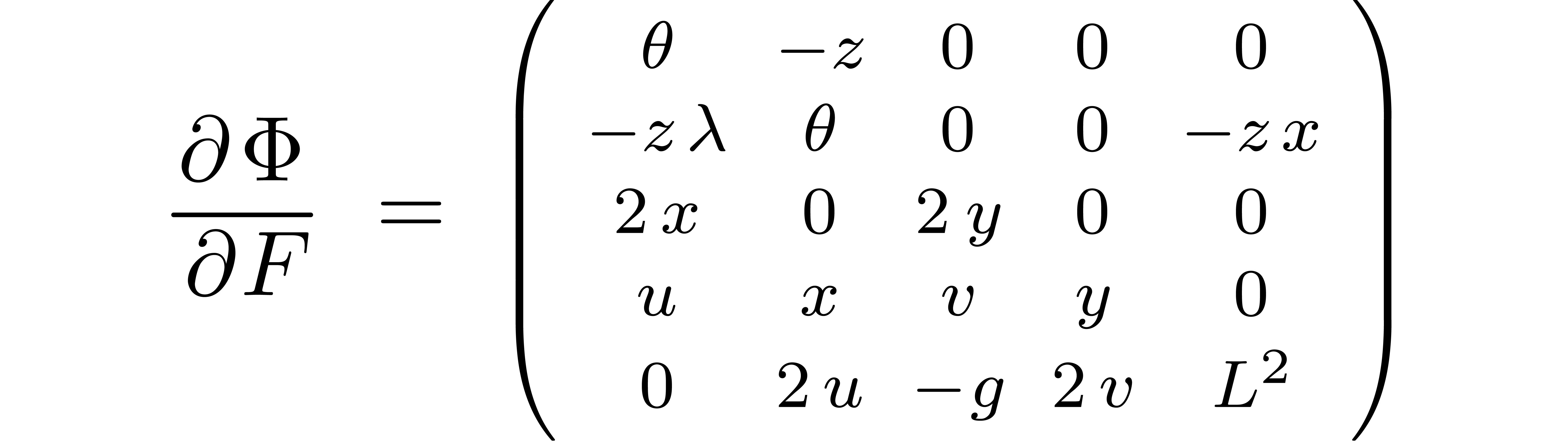

The order one anticipators of these equations are given by

and the Jacobian matrix of  is given by

is given by

For the initial conditions, one typically takes  ,

,  ,

,  , and

, and  .

For these initial conditions, the formula for the Jacobian implies

.

For these initial conditions, the formula for the Jacobian implies

For every  , this matrix

admits the inverse

, this matrix

admits the inverse

Now consider the operator

This operator is the coefficientwise operator with  for all

for all  and

and  .

The desired recursive equation (18) for

.

The desired recursive equation (18) for  is therefore given by

is therefore given by

or, equivalently,

One may now use any traditional relaxed evaluation algorithm in order to compute the power series solution:

This shows how our algorithm applies to the derived index  formulation of the equations of the pendulum. In fact, our

algorithm can also be applied directly to the original system of index

formulation of the equations of the pendulum. In fact, our

algorithm can also be applied directly to the original system of index

, which we indeed regard as a

major advantage of the method. However, the resulting matrices

, which we indeed regard as a

major advantage of the method. However, the resulting matrices  have size

have size  ,

which makes this variant less suitable for pedagogic purposes.

,

which makes this variant less suitable for pedagogic purposes.

A. Bostan, F. Chyzak, F. Ollivier, B. Salvy, É. Schost, and A. Sedoglavic. Fast computation of power series solutions of systems of differential equations. In Proceedings of the 18th ACM-SIAM Symposium on Discrete Algorithms, pages 1012–1021. New Orleans, Louisiana, U.S.A., January 2007.

R. P. Brent and H. T. Kung. Fast algorithms for manipulating formal power series. Journal of the ACM, 25:581–595, 1978.

J. Berthomieu and R. Lebreton. Relaxed  -adic hensel lifting for algebraic

systems. In J. van der Hoeven and M. van Hoeij, editors,

Proc. ISSAC '12, pages 59–66. Grenoble, France,

July 2012.

-adic hensel lifting for algebraic

systems. In J. van der Hoeven and M. van Hoeij, editors,

Proc. ISSAC '12, pages 59–66. Grenoble, France,

July 2012.

D. G. Cantor and E. Kaltofen. On fast multiplication of polynomials over arbitrary algebras. Acta Informatica, 28:693–701, 1991.

J. W. Cooley and J. W. Tukey. An algorithm for the machine calculation of complex Fourier series. Math. Computat., 19:297–301, 1965.

M. J. Fischer and L. J. Stockmeyer. Fast on-line integer multiplication. Proc. 5th ACM Symposium on Theory of Computing, 9:67–72, 1974.

J. van der Hoeven. Lazy multiplication of formal power series. In W. W. Küchlin, editor, Proc. ISSAC '97, pages 17–20. Maui, Hawaii, July 1997.

J. van der Hoeven. Relax, but don't be too lazy. JSC, 34:479–542, 2002.

J. van der Hoeven. Relaxed multiplication using the middle product. In Manuel Bronstein, editor, Proc. ISSAC '03, pages 143–147. Philadelphia, USA, August 2003.

J. van der Hoeven. New algorithms for relaxed multiplication. JSC, 42(8):792–802, 2007.

J. van der Hoeven. Relaxed resolution of implicit equations. Technical Report, HAL, 2009. http://hal.archives-ouvertes.fr/hal-00441977.

J. van der Hoeven. Newton's method and FFT trading. JSC, 45(8):857–878, 2010.

J. van der Hoeven. From implicit to recursive equations. Technical Report, HAL, 2011. http://hal.archives-ouvertes.fr/hal-00583125.

J. van der Hoeven. Faster relaxed multiplication. In Proc. ISSAC '14, pages 405–412. Kobe, Japan, July 2014.

R. Lebreton. Relaxed hensel lifting of triangular sets. JSC, 68(2):230–258, 2015.

R. Lebreton and É. Schost. A simple and fast online power series multiplication and its analysis. JSC, 72:231–251, 2016.

A. Sedoglavic. Méthodes seminumériques en algèbre différentielle ; applications à l'étude des propriétés structurelles de systèmes différentiels algébriques en automatique. PhD thesis, École polytechnique, 2001.

A. Schönhage and V. Strassen. Schnelle Multiplikation großer Zahlen. Computing, 7:281–292, 1971.