Towards a library for straight-line

programs  |

|

| Preliminary version of May 20, 2025 |

| This work is dedicated to the memory of Joos Heintz. |

|

. This work has

been supported by an ERC-2023-ADG grant for the ODELIX project

(number 101142171).

. This work has

been supported by an ERC-2023-ADG grant for the ODELIX project

(number 101142171).

Funded by the European Union. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Research Council Executive Agency. Neither the European Union nor the granting authority can be held responsible for them. |

|

. This article has

been written using GNU TeXmacs [65].

. This article has

been written using GNU TeXmacs [65].

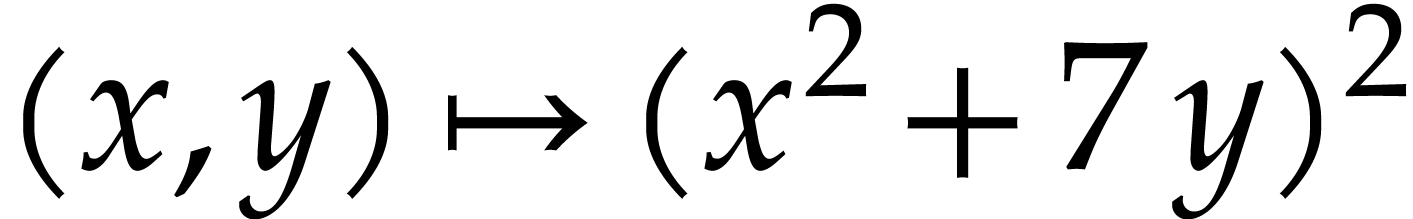

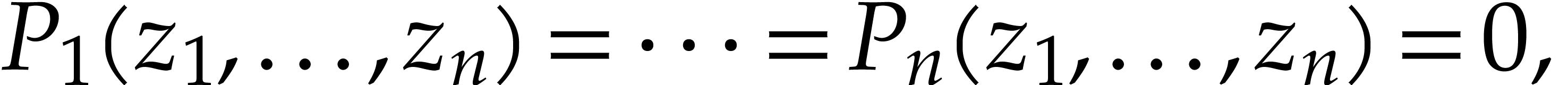

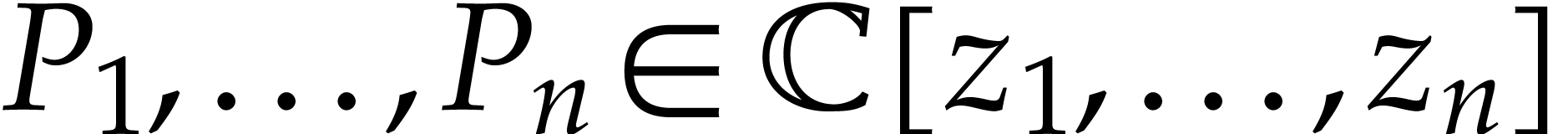

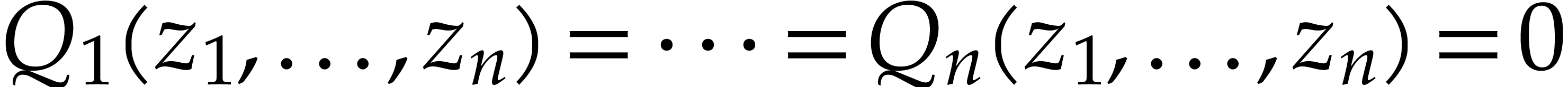

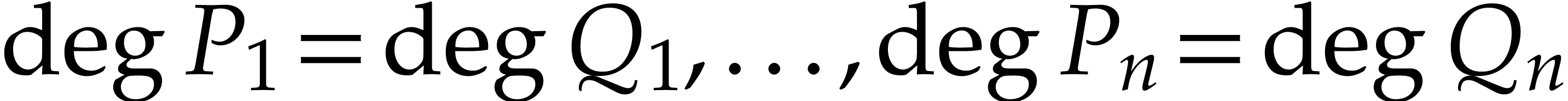

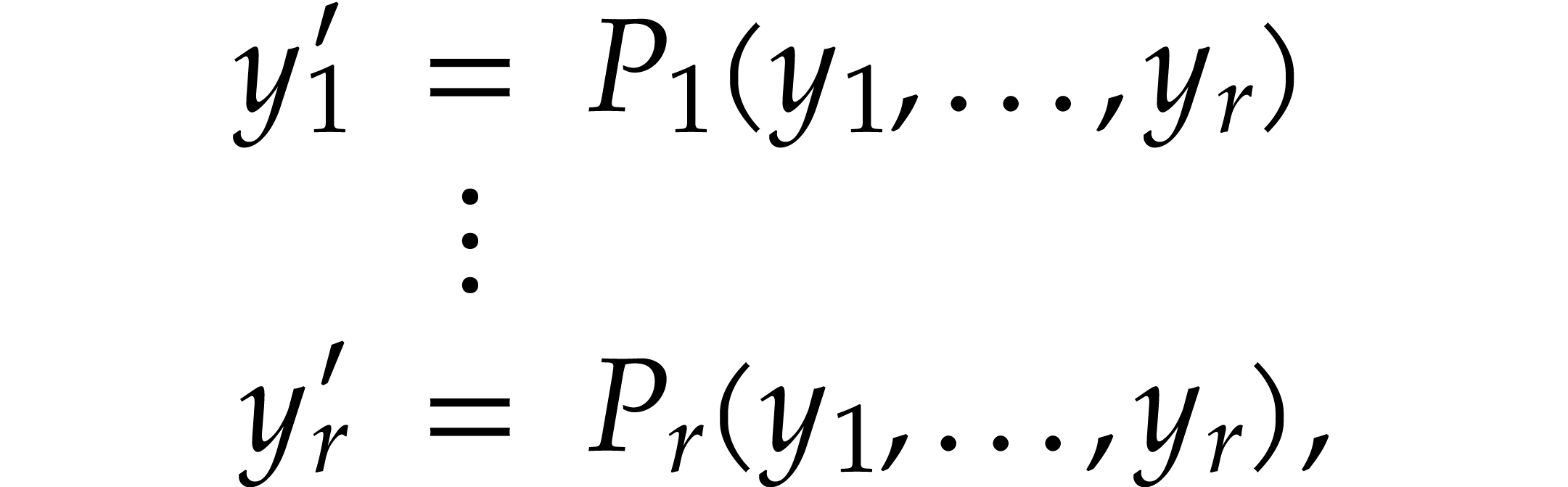

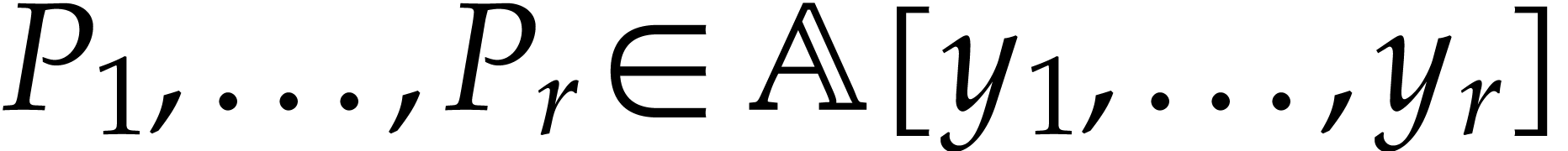

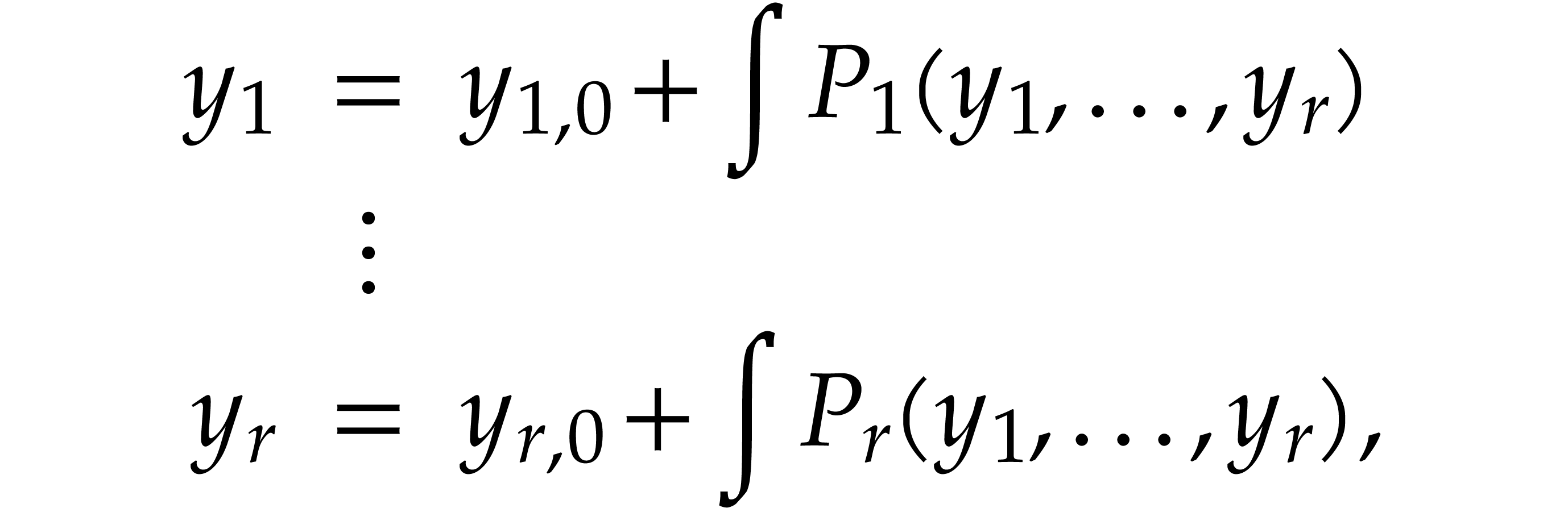

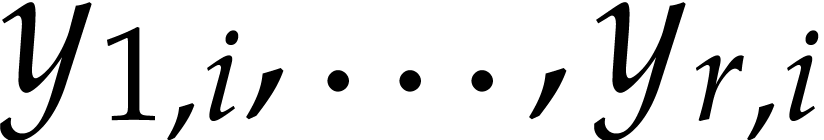

Straight-line programs have proved to be an extremely useful framework both for theoretical work on algebraic complexity and for practical implementations. In this paper, we expose ideas for the development of high performance libraries dedicated to straight-line programs, with the hope that they will allow to fully leverage the theoretical advantages of this framework for practical applications. |

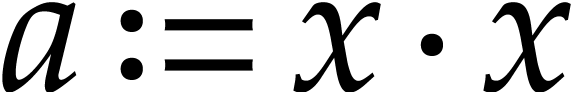

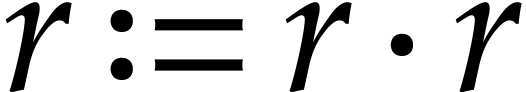

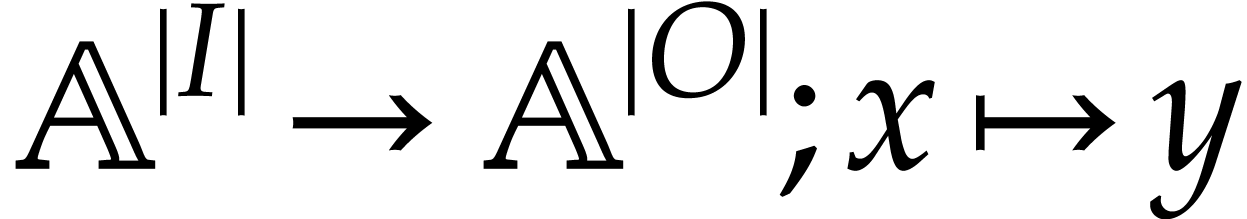

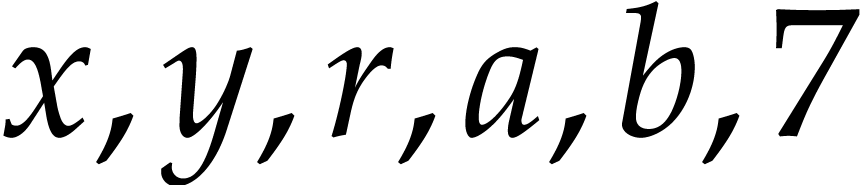

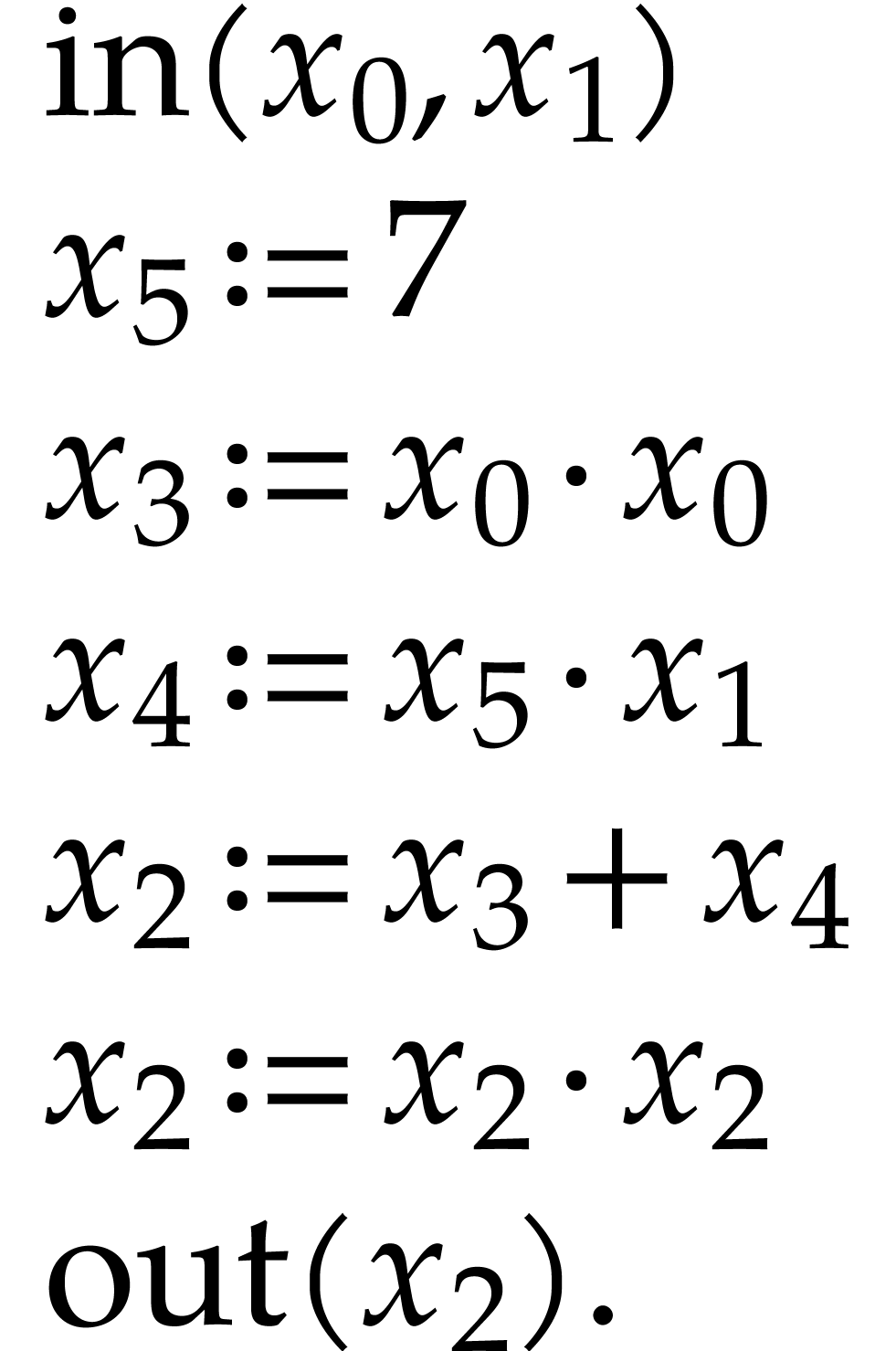

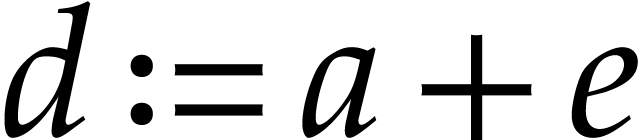

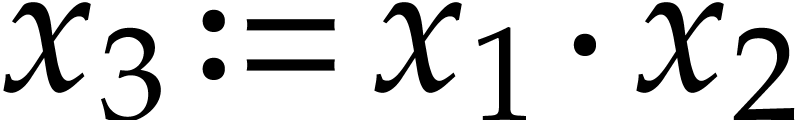

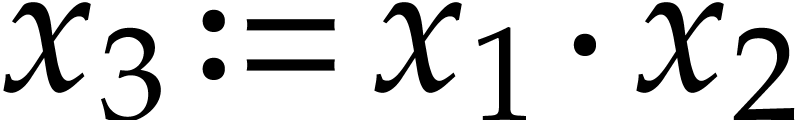

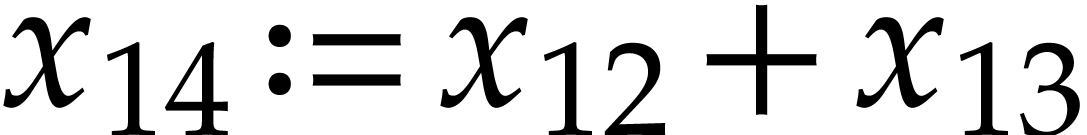

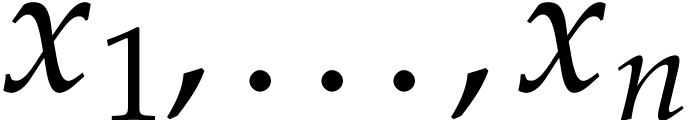

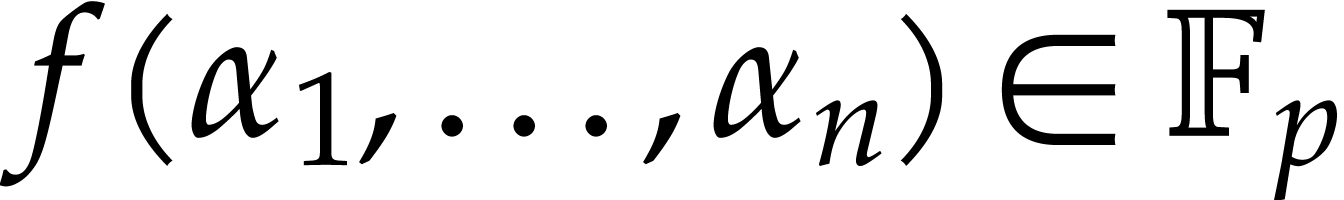

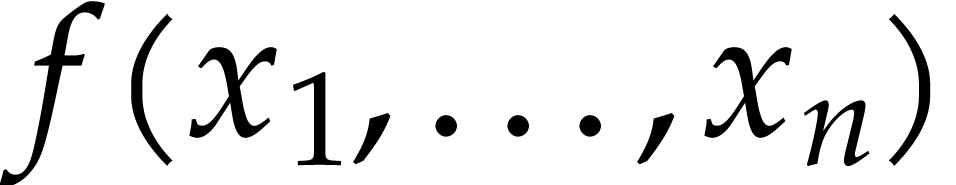

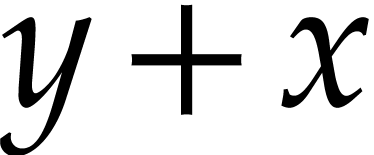

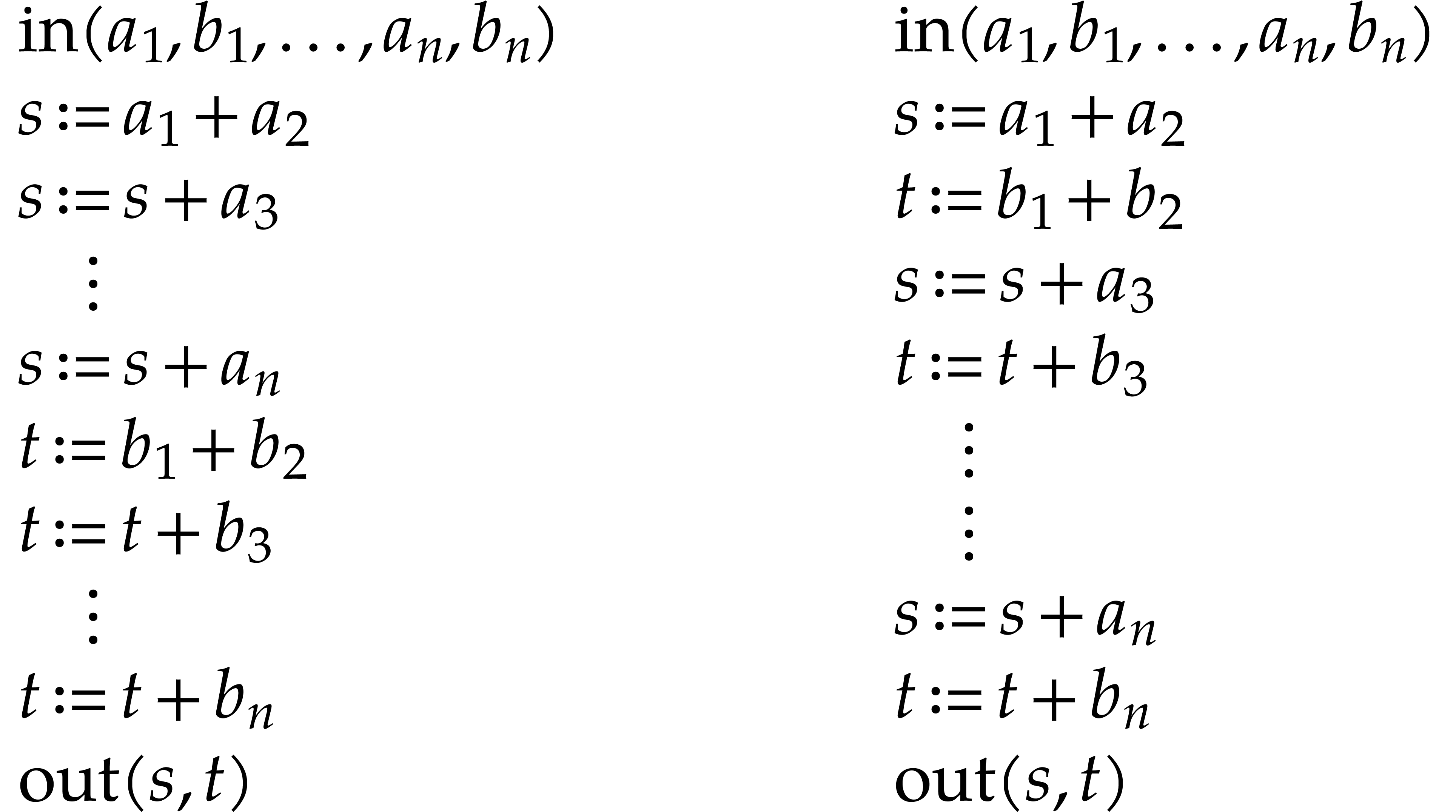

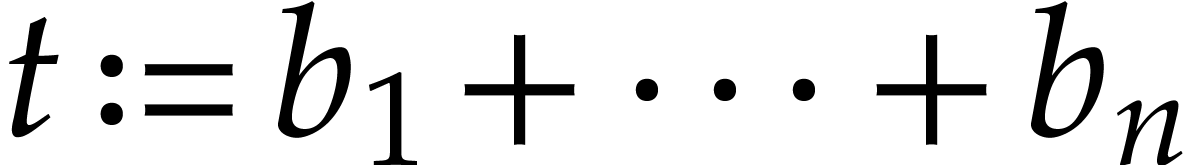

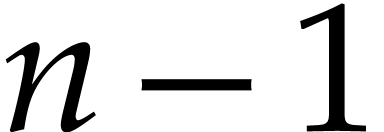

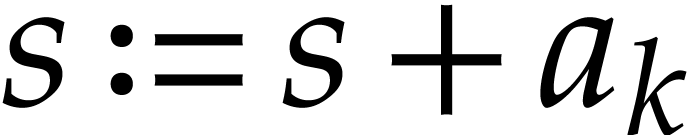

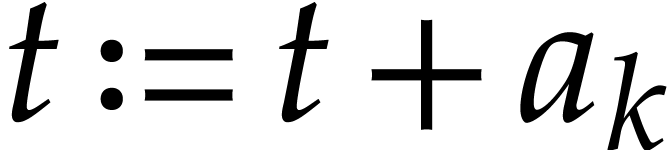

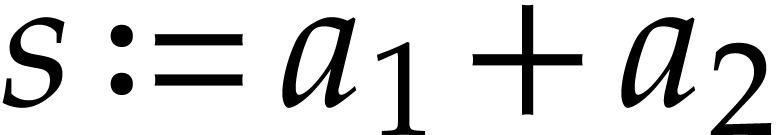

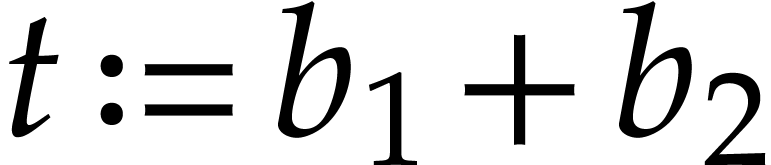

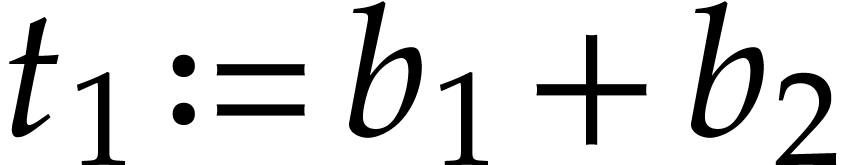

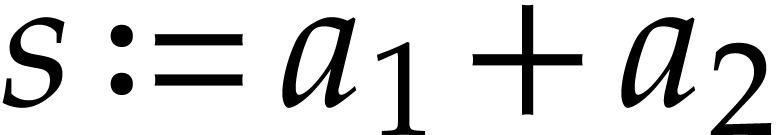

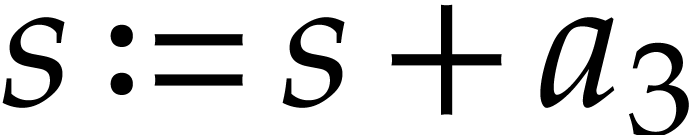

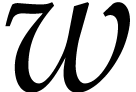

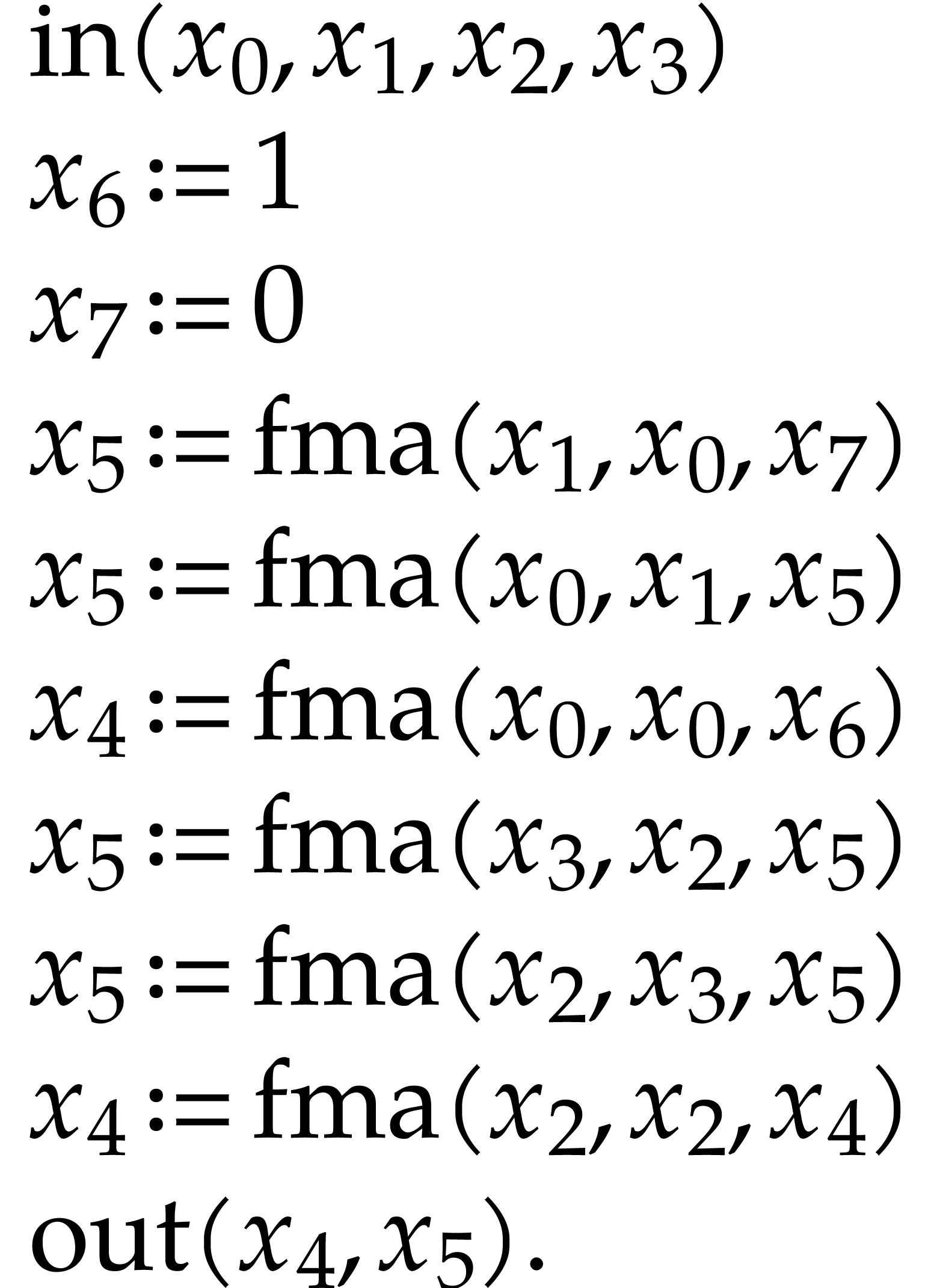

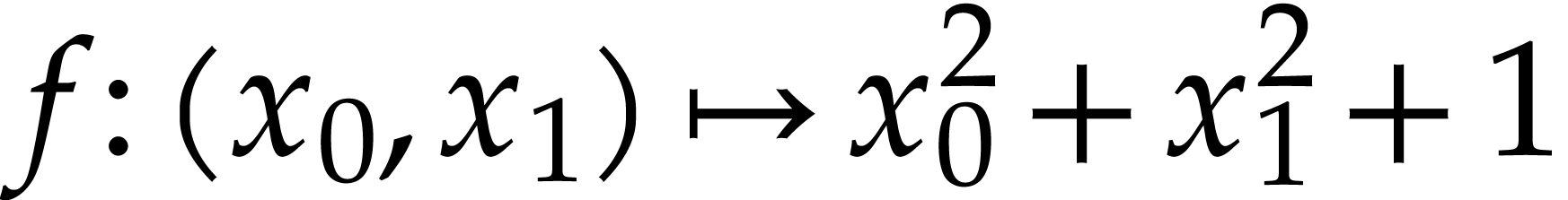

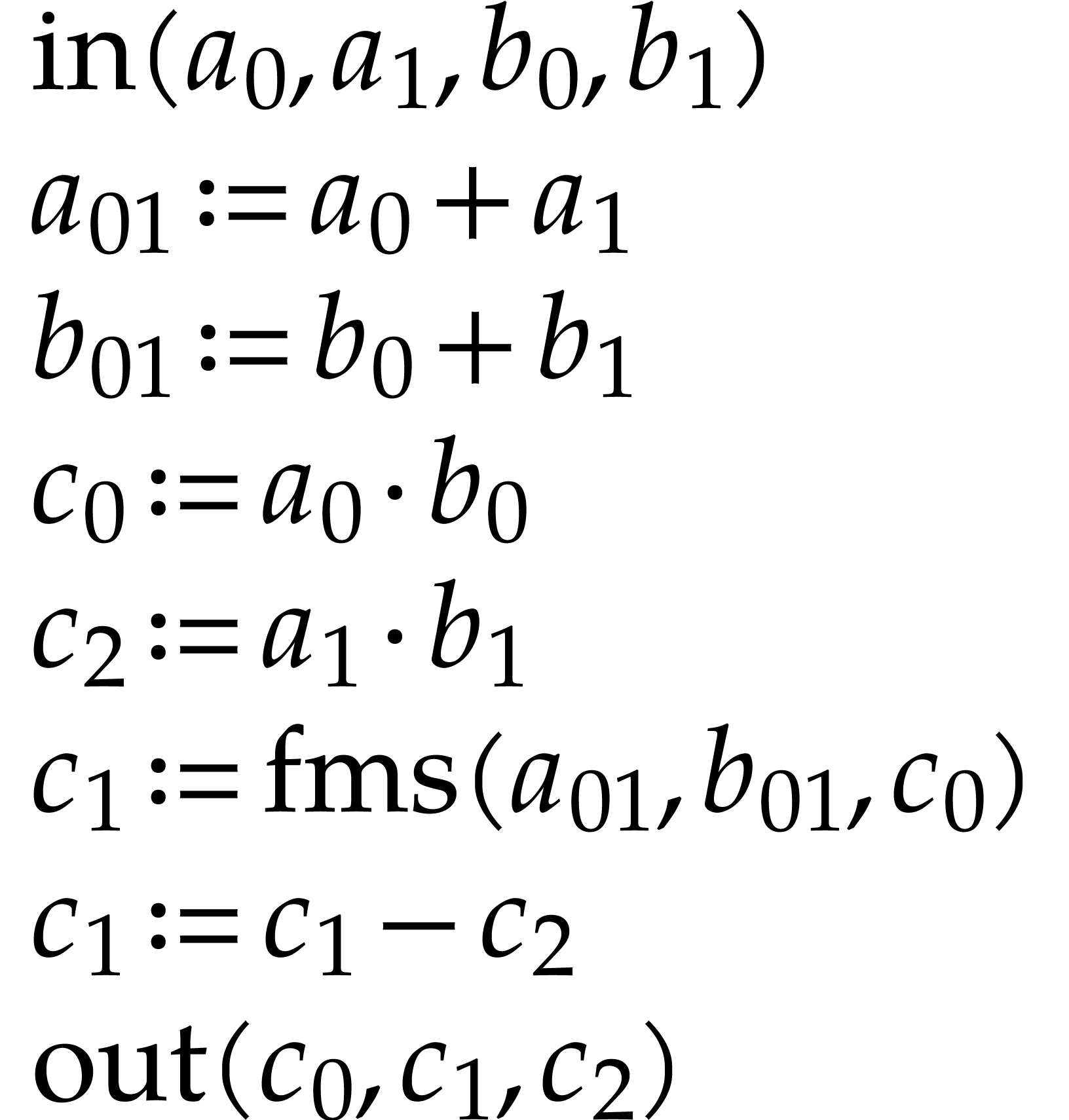

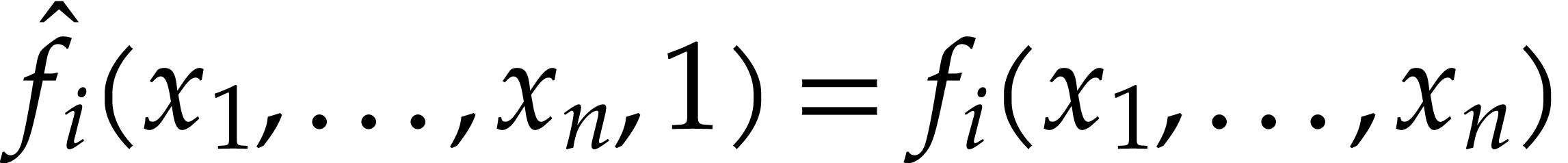

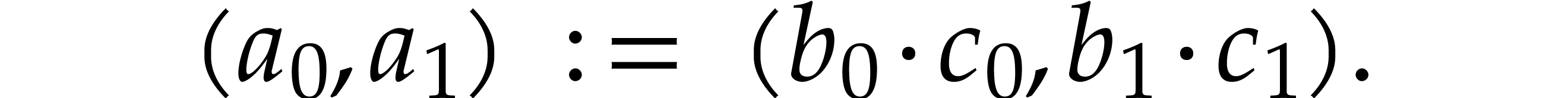

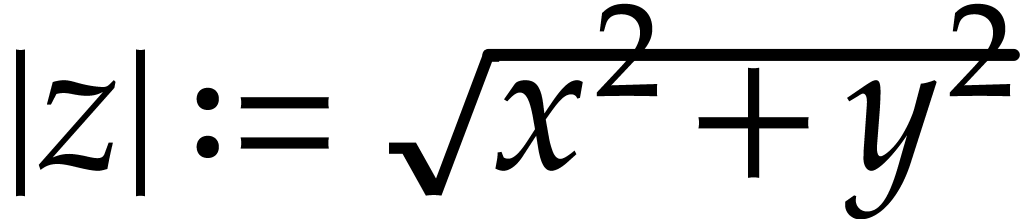

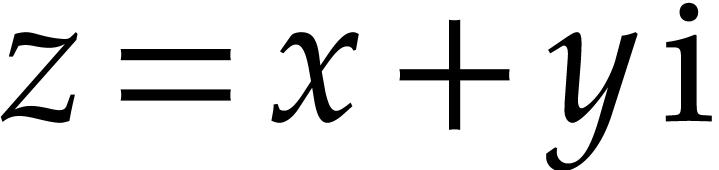

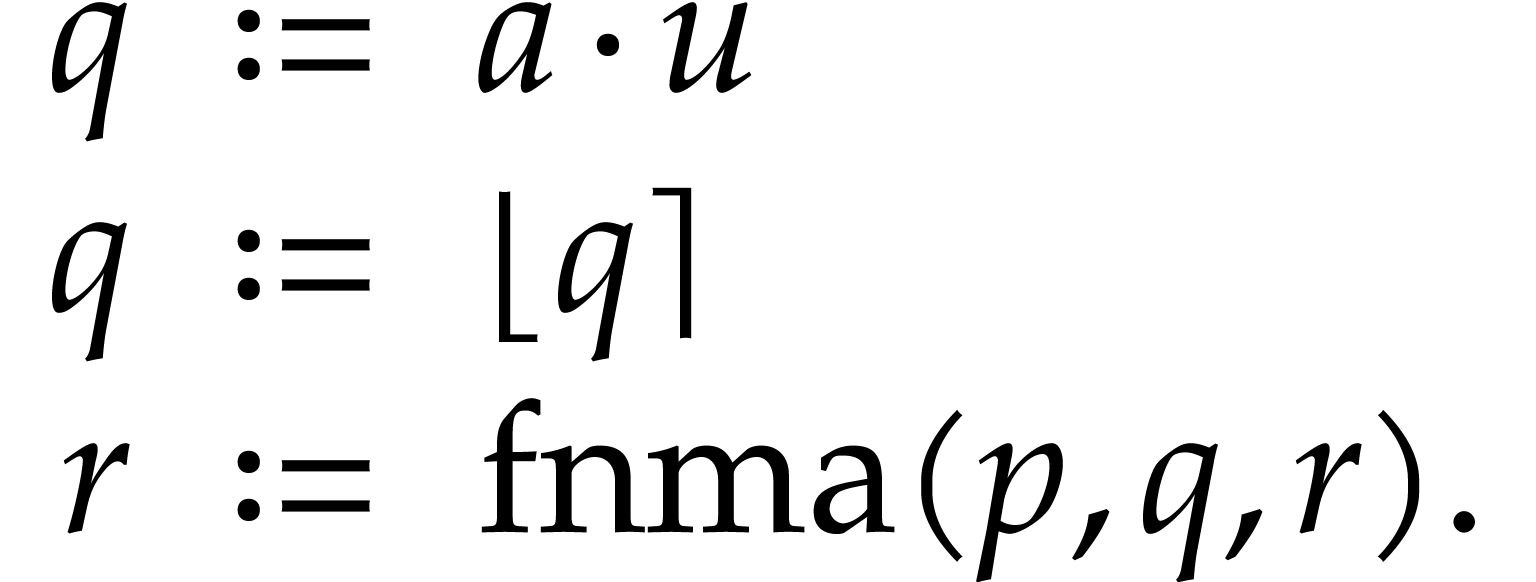

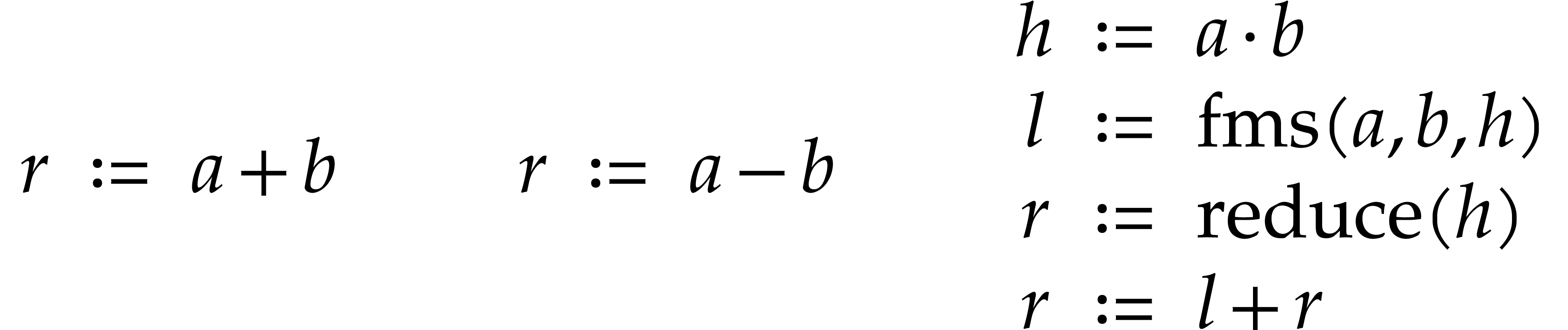

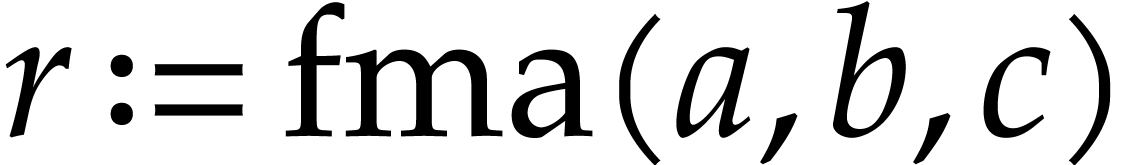

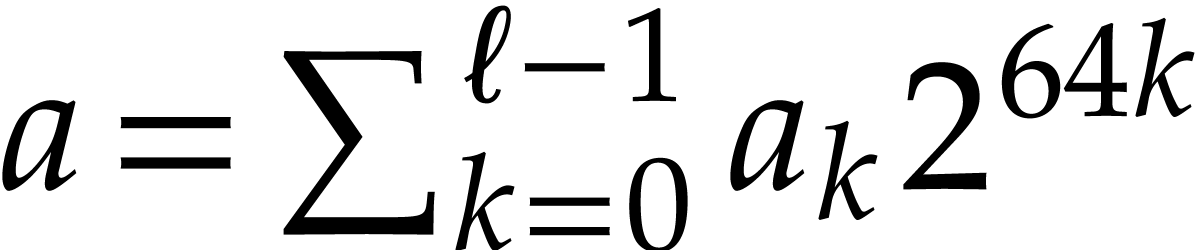

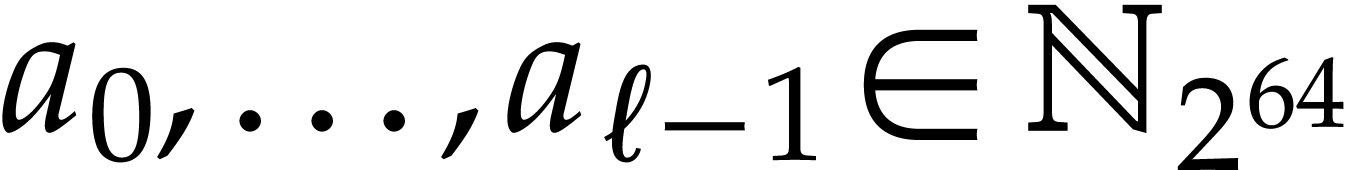

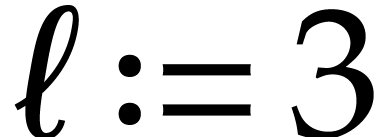

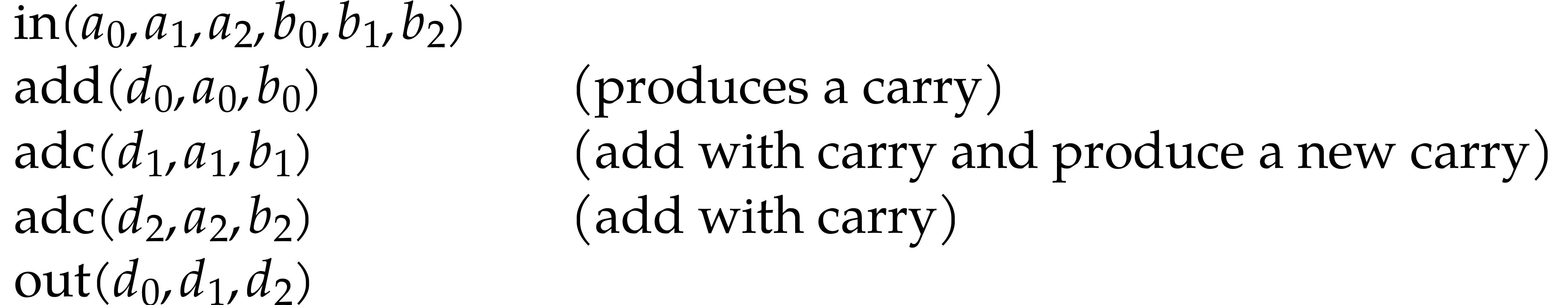

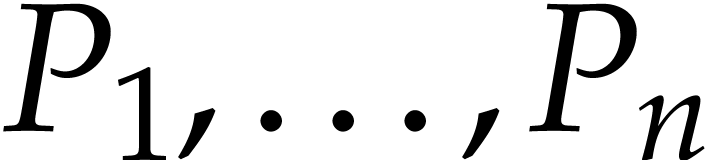

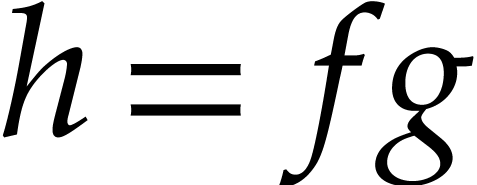

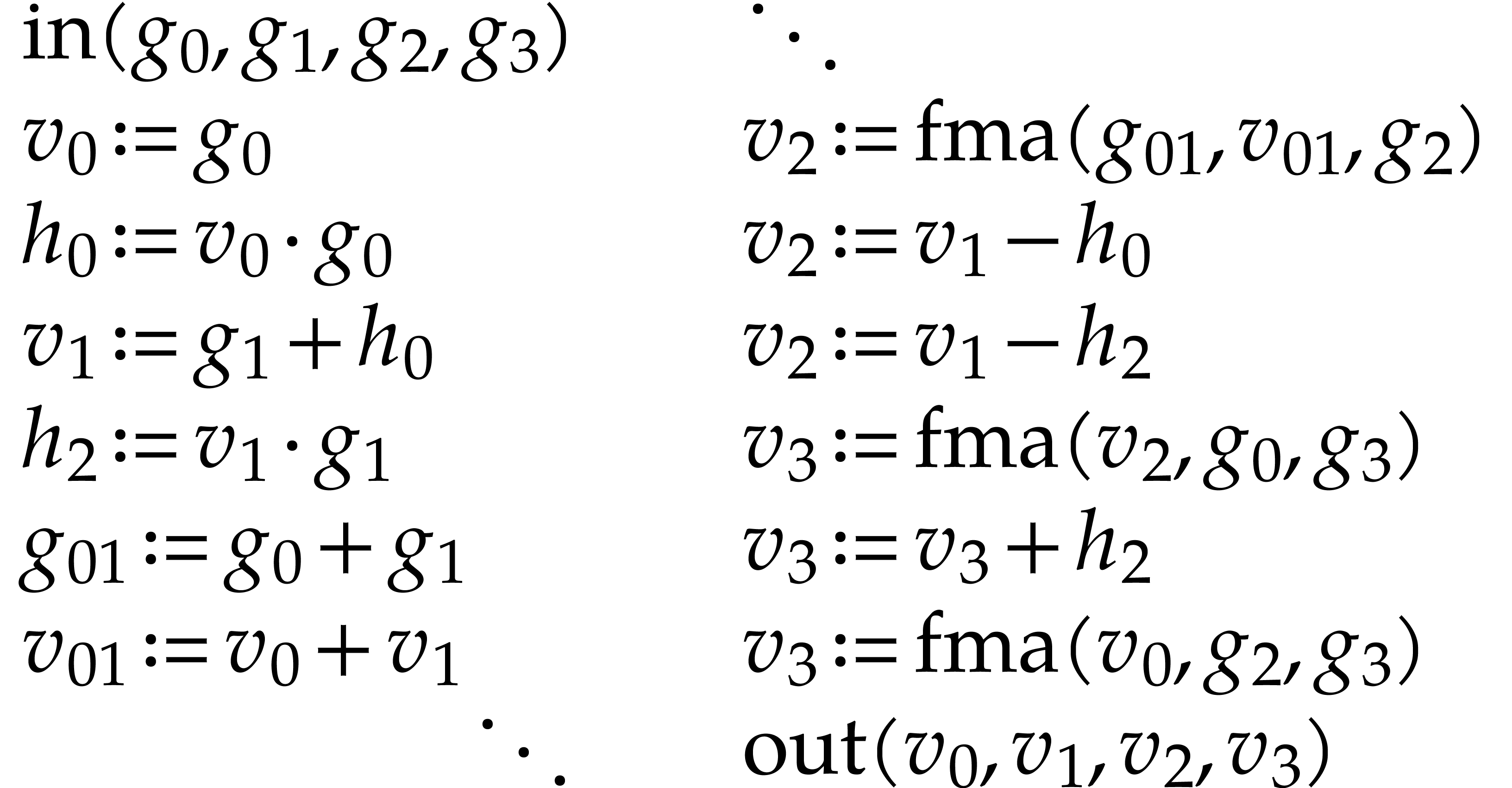

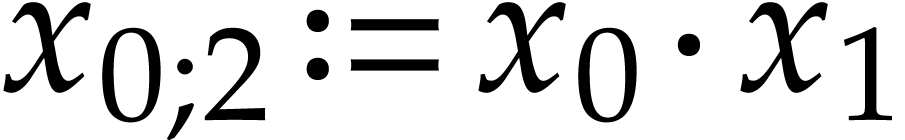

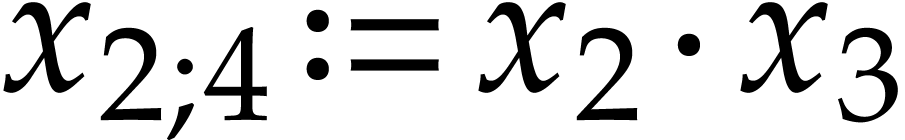

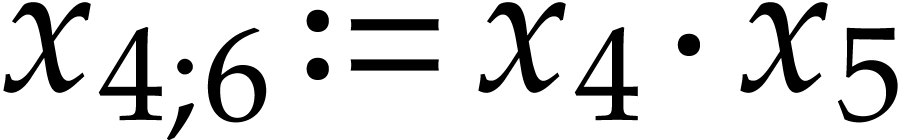

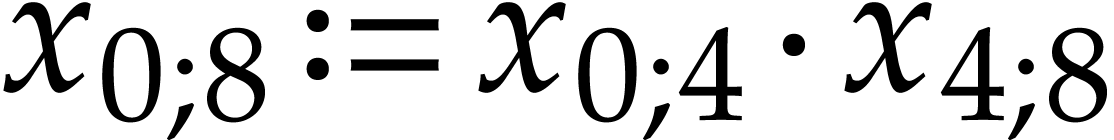

A straight-line program (or SLP) is a program that takes a finite number

of input arguments, then executes a sequence of simple arithmetic

instructions, and finally returns a finite number of output values. For

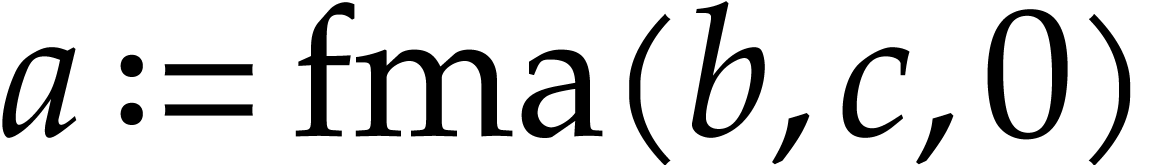

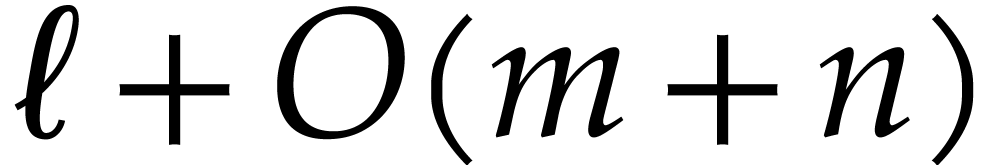

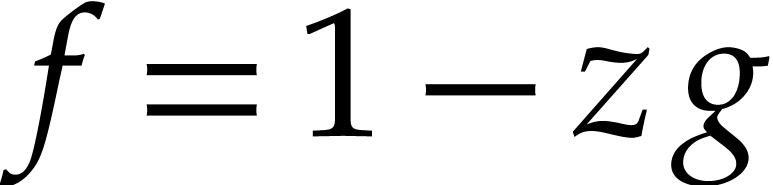

instance, the function  can be computed using the

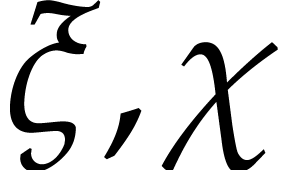

following SLP:

can be computed using the

following SLP:

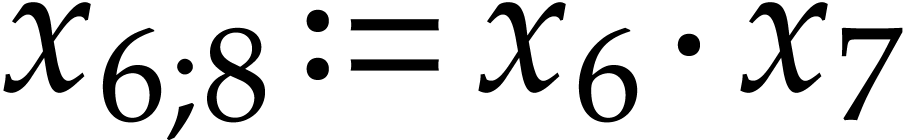

|

(1.1) |

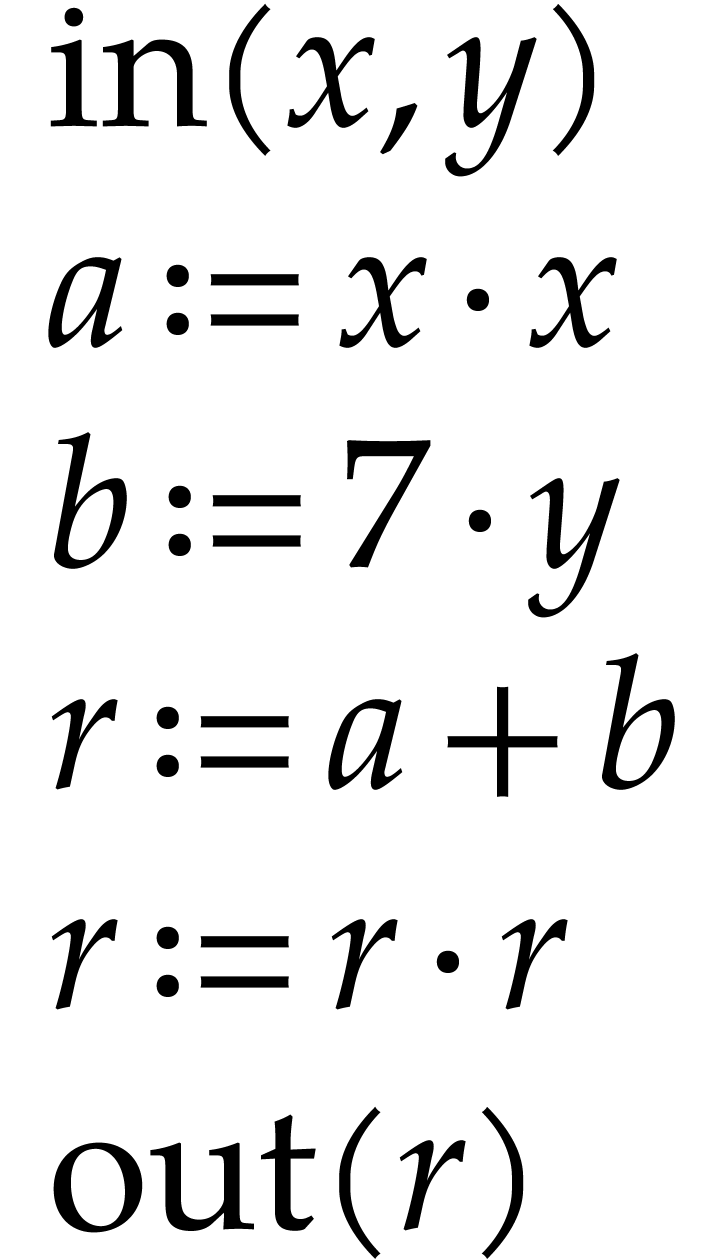

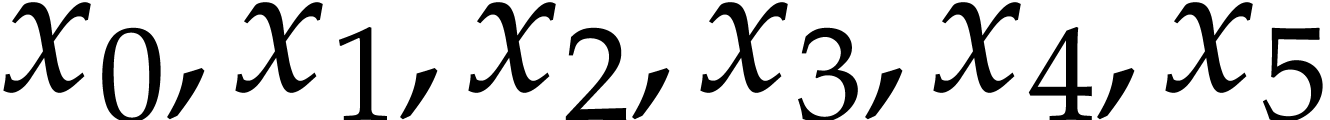

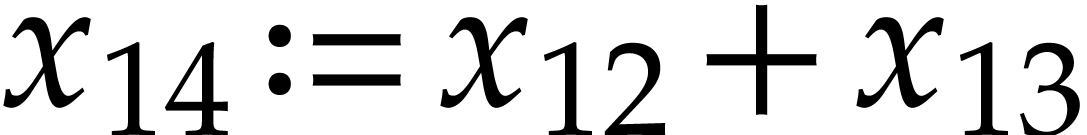

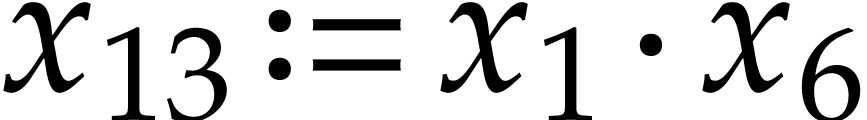

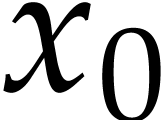

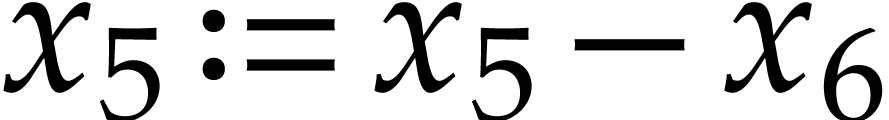

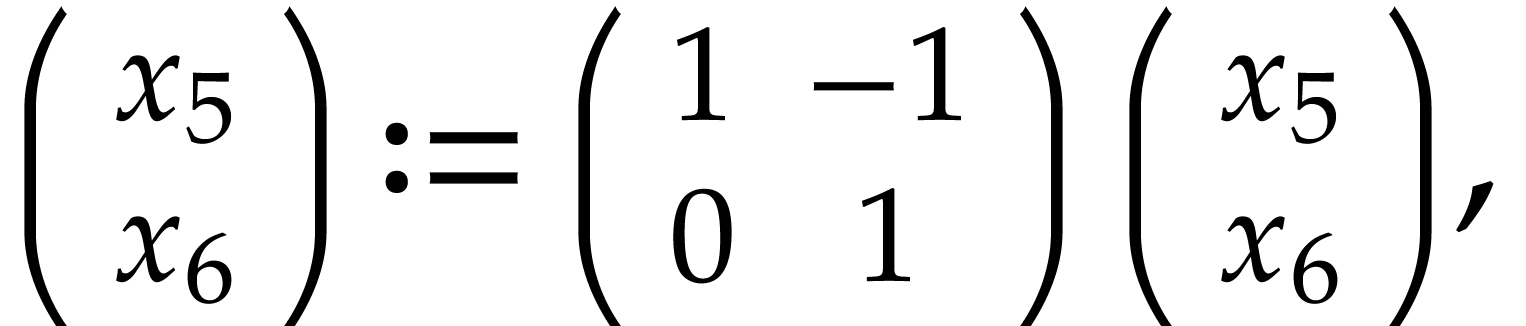

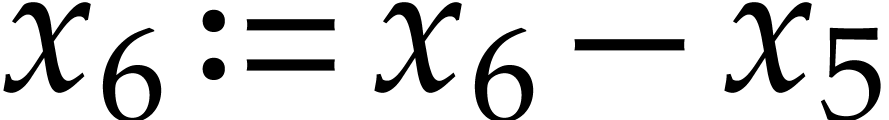

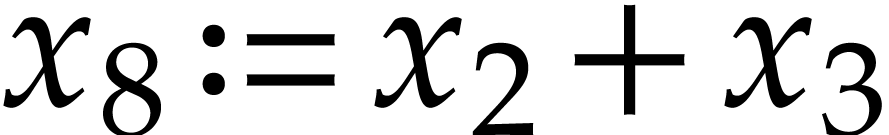

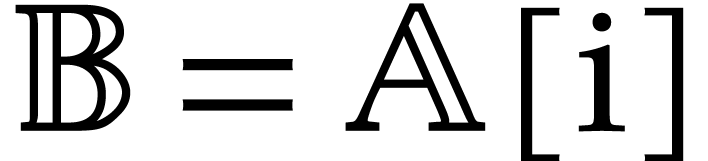

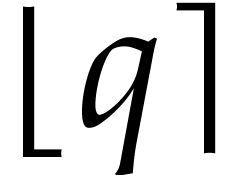

The instructions operate on a finite list of variables (here  ) and constants (here

) and constants (here  ). There are no restrictions on how variables

are allowed to be reused: they can be reassigned several times (like

). There are no restrictions on how variables

are allowed to be reused: they can be reassigned several times (like

) and they may occur multiple

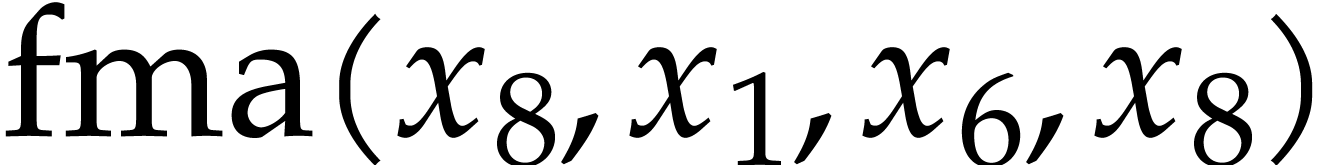

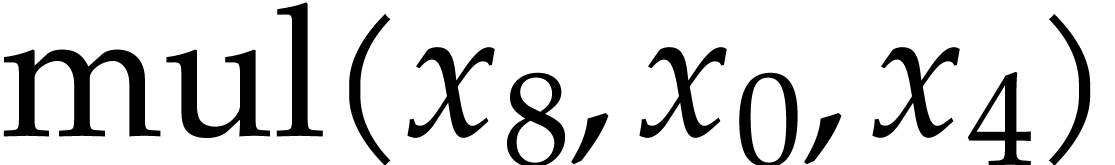

times in the input and output of an operation (like in the operations

) and they may occur multiple

times in the input and output of an operation (like in the operations

and

and  ).

).

SLPs have a profoundly dual nature as programs and data. As programs, SLPs are eligible for a range of operations and techniques from the theory of compilation [3]: constant folding, common subexpression elimination, register allocation, etc. Although the expressive power of SLPs is very limited, their simplicity can be turned into an advantage: many program optimizations that are hard to implement for general purpose programs become simpler and more efficient when restricted to SLPs. In particular, most classical program transformations can be done in linear time. Traditional general purpose compilation techniques also rarely exploit mathematical properties (beyond basic algebraic rules that are useful during simplifications). In the SLP world, algebraic transformations such as automatic differentiation [14] and transposition [17] are easy to implement; more examples will be discussed in this paper.

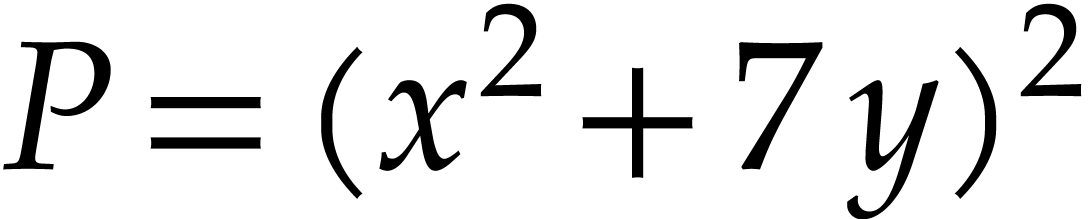

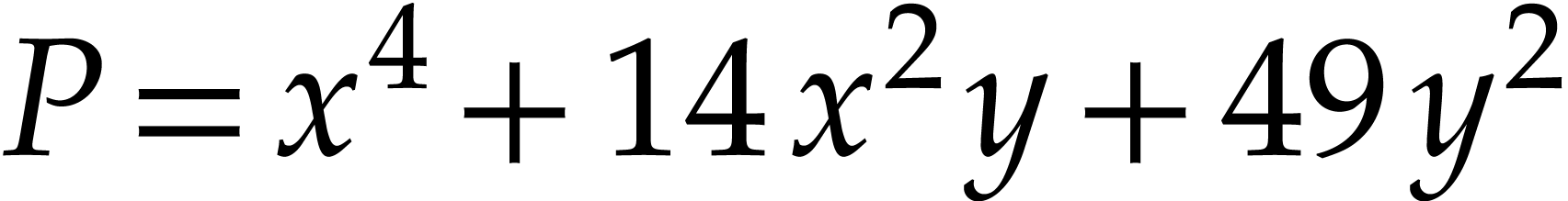

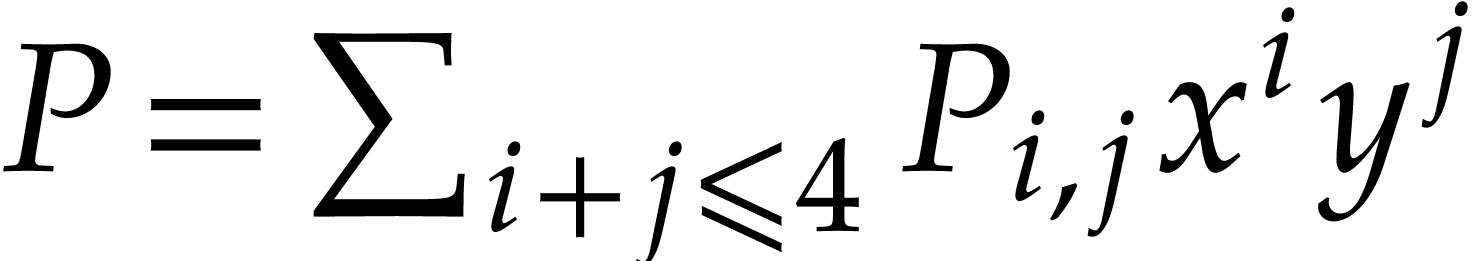

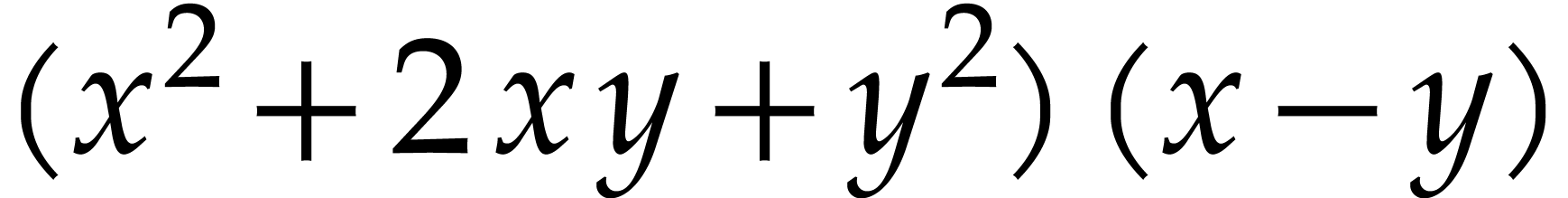

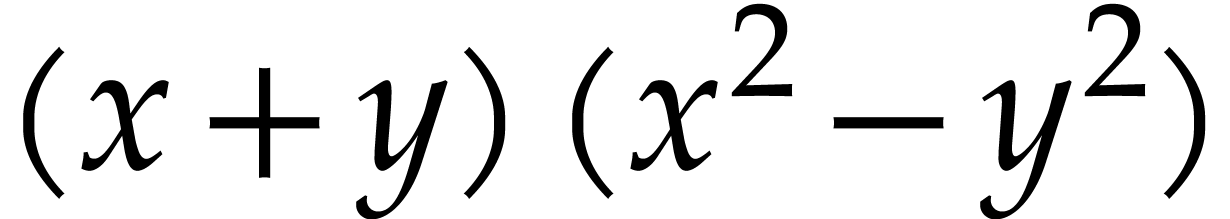

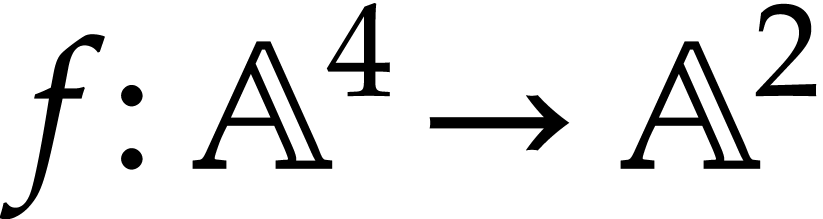

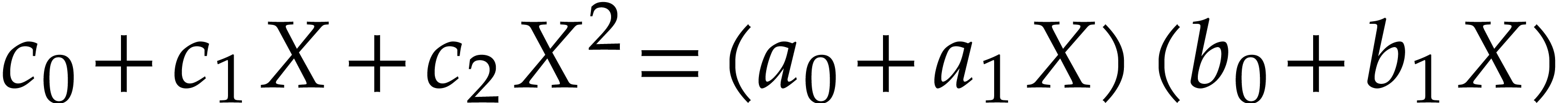

Let us now turn to the data side. The example SLP (1.1) can

be regarded as a polynomial  .

In this sense, SLPs constitute a data structure for the representation

of multivariate polynomials. Note that this representation is more

compact than the (expanded) sparse representation

.

In this sense, SLPs constitute a data structure for the representation

of multivariate polynomials. Note that this representation is more

compact than the (expanded) sparse representation  and way more compact than the dense “total degree”

representation

and way more compact than the dense “total degree”

representation  . If our main

goal is to evaluate a given polynomial many times, then the SLP

representation tends to be particularly efficient. However, for explicit

computations on polynomials, other representations may be

preferred: for instance, the dense representation allows for FFT

multiplication [40, section 8.2] and the sparse

representation also allows for special evaluation-interpolation

techniques [101].

. If our main

goal is to evaluate a given polynomial many times, then the SLP

representation tends to be particularly efficient. However, for explicit

computations on polynomials, other representations may be

preferred: for instance, the dense representation allows for FFT

multiplication [40, section 8.2] and the sparse

representation also allows for special evaluation-interpolation

techniques [101].

The above efficiency considerations explain why SLPs have become a central framework in the area of algebraic complexity [21]. Joos Heintz has been an early proponent of polynomial SLPs in computer algebra. Together with Schnorr, he designed a polynomial time algorithm to test if an SLP represents the zero function or not, up to precomputations that only depend on the size of the SLP [55]. Later, Giusti and Heintz proposed an efficient algorithm to compute the dimension of the solution set of a system of homogeneous polynomials given by SLPs [43]. Then, Heintz and his collaborators showed that the polynomials involved in the Nullstellensatz had good evaluation properties, and could therefore be better represented by SLPs [47, 33] than by dense polynomials. These results led to a new method, called geometric resolution, which allows polynomial systems to be solved in a time that is polynomial in intrinsic geometric quantities [41, 44, 46, 51, 87].

In order to implement the geometric resolution algorithm, it was

necessary to begin with programming efficient evaluation data

structures. The first steps were presented at the TERA'1996 conference

held in Santander (Spain) by Aldaz, and by Castaño, Llovet, and

Martìnez [22]. Later on, a

There are a few other concepts that are very similar to SLPs. Trees and directed acyclic graphs (DAGs) are other popular structures for the representation of mathematical expressions. Conversions to and from SLPs are rather straightforward, but SLPs have the advantage that they expose more low-level execution details. Since performance is one of our primary concerns, we therefore prefer the framework of SLPs in this paper.

Another related concept is the blackbox representation. Blackbox

programs can be evaluated, but their “source code” is not

available. This representation is popular in numerical analysis and the

complexity of various numerical methods [98, Chapters 4, 9,

10, and 17] can be expressed in terms of the required number of function

evaluations (the length of an SLP corresponds to the cost of one

function evaluation in this context). The blackbox representation is

also popular in computer algebra, especially in the area of sparse

interpolation [81, 26, 101].

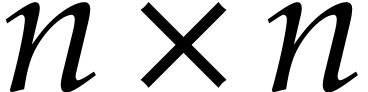

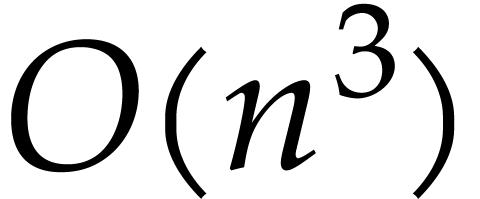

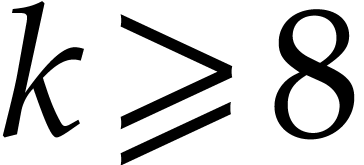

However, for programs that we write ourselves, it is unnatural to

disallow opening the blackboxes. This restriction makes it artificially

hard to perform many algebraic transformations such as modular

reduction, automatic differentiation, etc. On the other hand, computing

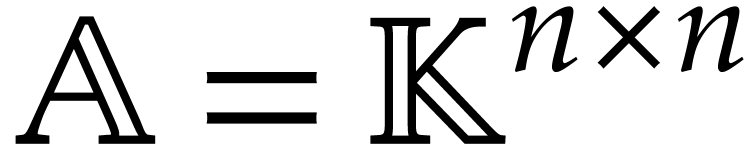

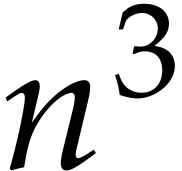

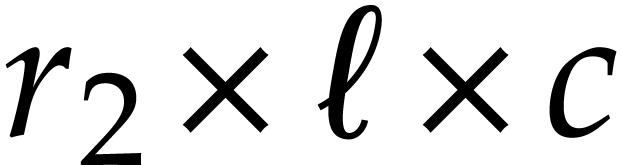

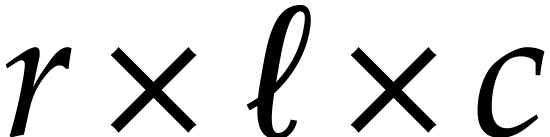

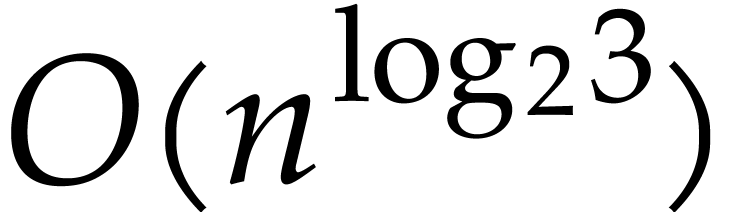

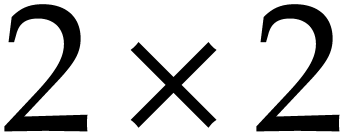

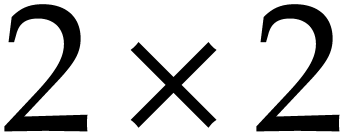

the determinant of an  matrix can easily be done

in time

matrix can easily be done

in time  by a blackbox using Gaussian

elimination. This task is less trivial for SLPs, since branching to find

appropriate pivots is forbidden.

by a blackbox using Gaussian

elimination. This task is less trivial for SLPs, since branching to find

appropriate pivots is forbidden.

Despite the importance of SLPs for theoretical and practical purposes,

they have not yet been the central target of a dedicated high

performance library, up to our knowledge. (We refer to [36,

22, 20] for early packages for polynomial

SLPs.) In contrast, such libraries exist for integer and rational

numbers (

In 2015, we started the development of such a dedicated library for

SLPs, called

Performance is a primary motivation behind our work. Both operations on SLPs and their execution should be as efficient as possible. At the same time, we wish to be able to fully exploit the generality of the SLP framework. This requires a suitable representation of SLPs that is both versatile and efficient to manipulate. As will be detailed in section 2, we will represent SLPs using a suitable low-level encoding of pseudo-code as in (1.1), while allowing its full semantic richness to be expressed. For instance, the ordering of the instructions in (1.1) is significant, as well as the ability to assign values multiple times to the same variables. One may also specify a precise memory layout.

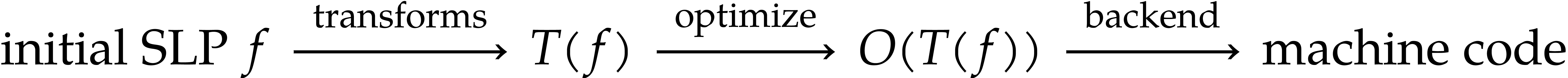

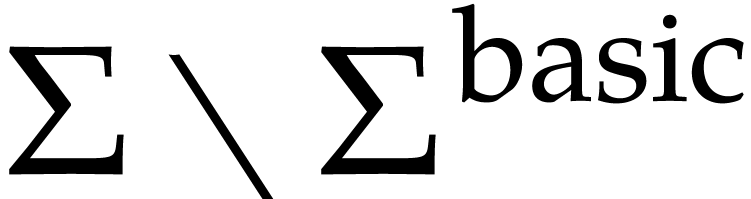

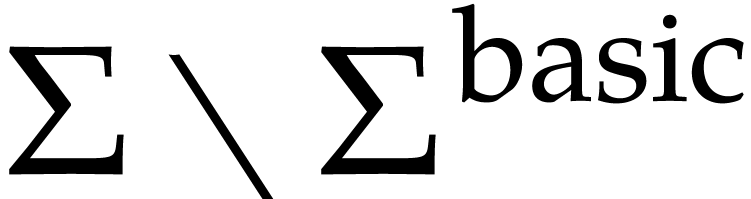

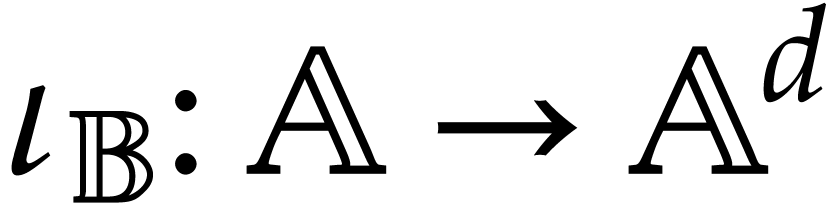

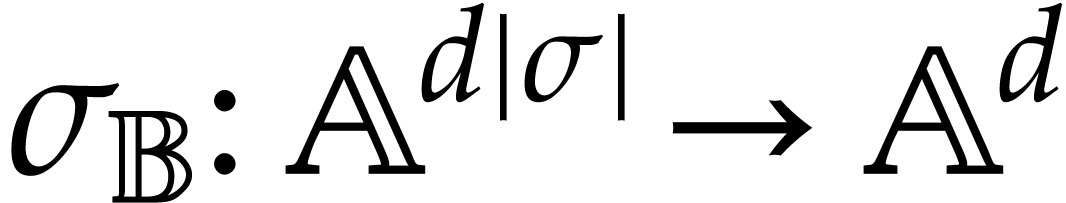

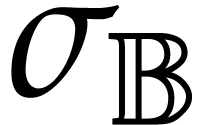

One typical workflow for SLP libraries is the following:

|

(1.2) |

For instance, we might start with an SLP  that

computes the determinant of a

that

computes the determinant of a  matrix

multiplication, then compute its gradient using automatic

differentiation, then optimize this code, and finally generate

executable machine code.

matrix

multiplication, then compute its gradient using automatic

differentiation, then optimize this code, and finally generate

executable machine code.

The initial SLP can be specified directly using low-level pseudo-code.

But this is usually not most convenient, except maybe for very simple

SLPs. In section 3, we describe a higher level recording

mechanism. Given an existing, say, C++ template implementation for the

computation of determinants of matrices, we may specialize this routine

for a special “recorder” type and run it on a  matrix with generic entries. The recording mechanism will

then be able to recover a trace of this computation and produce an SLP

for determinants of

matrix with generic entries. The recording mechanism will

then be able to recover a trace of this computation and produce an SLP

for determinants of  matrices.

matrices.

In section 4, we turn to common program transformations like simplification, register allocation, etc. Although this is part of the classical theory of compilation, the SLP framework gives rise to several twists. Since our libraries are required to be very efficient, they allow us to efficiently generate SLPs on the fly. Such SLPs become disposable as soon as they are no longer needed. Consequently, our SLPs can be very large, which makes it crucial that transformations on SLPs run in linear time. The philosophy is to sacrifice expensive optimizations that require more time, but to make up for this by generating our SLPs in such a way that cheaper optimizations already produce almost optimal code. That being said, there remains a trade-off between speed of compilation and speed of execution: when a short SLP needs to be executed really often, it could still make sense to implement more expensive optimizations. But the primary focus of our libraries is on transformations that run in linear time.

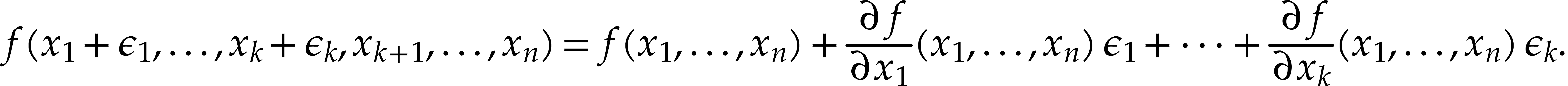

When considering SLPs over specific algebraic domains such as integers, modular integers, floating point numbers, polynomials, matrices, balls, etc., it may be possible to exploit special mathematical properties of these domains in order to generate more efficient SLPs. This type of transformations, which can be problematic to implement in general purpose compilers, are the subject of section 5.

Popular examples from computer algebra are automatic differentiation [14] and transposition [17]. Another example was described in [70]: ball arithmetic allows us to lift any numerical algorithm into a reliable one that also produces guaranteed error bounds for all results. However, rounding errors need to be taken into account when implementing a generic ball arithmetic, which leads to a non-trivial overhead. When the algorithm is actually an SLP, this overhead can be eliminated through a static analysis. Similarly, assume given an SLP over arbitrary precision integers, together with a bound for the inputs. Then one may statically compute bounds for all intermediate results and generate a dedicated SLP that eliminates the need to examine the precisions during runtime. This may avoid many branches and subroutine calls.

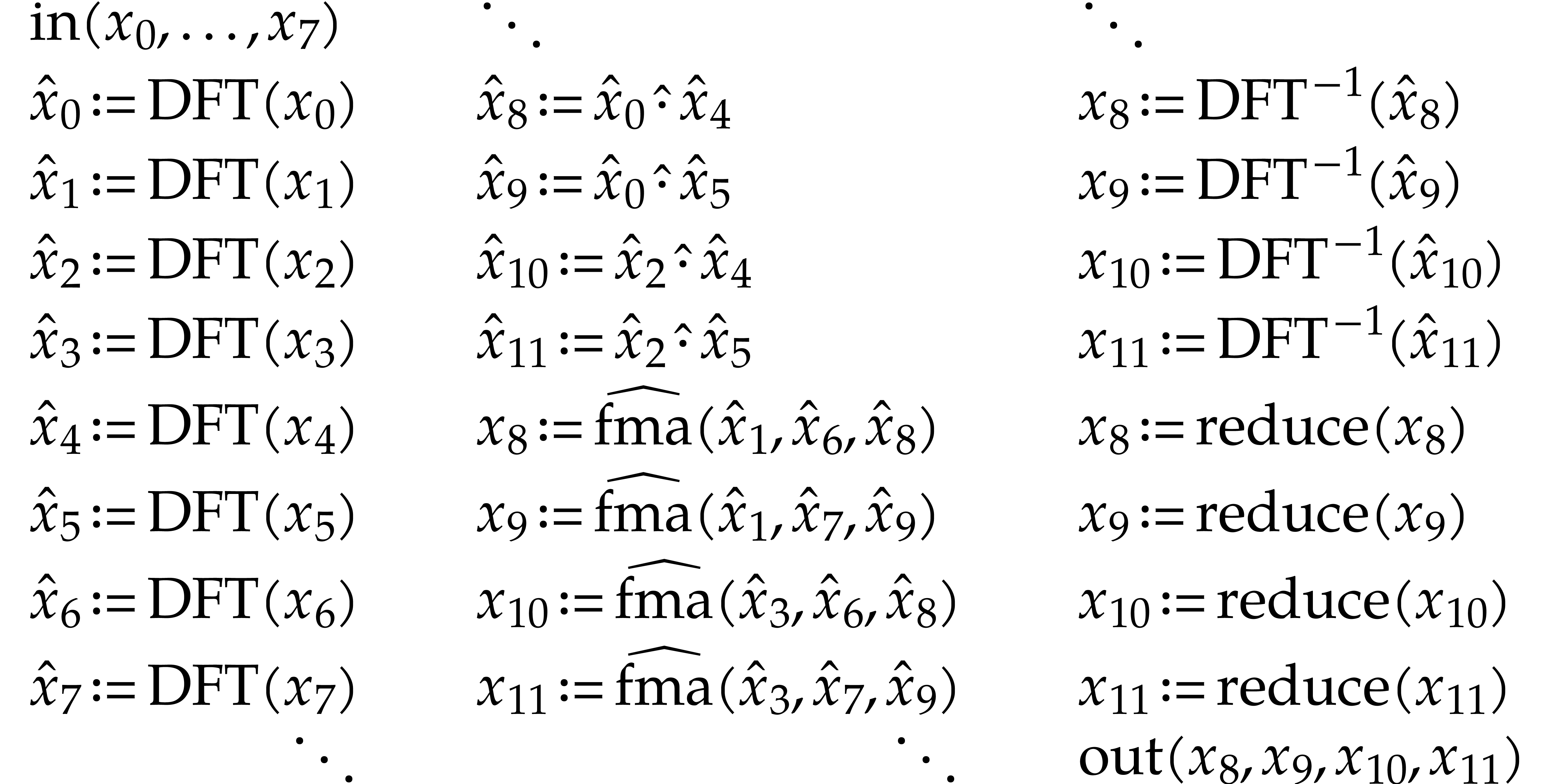

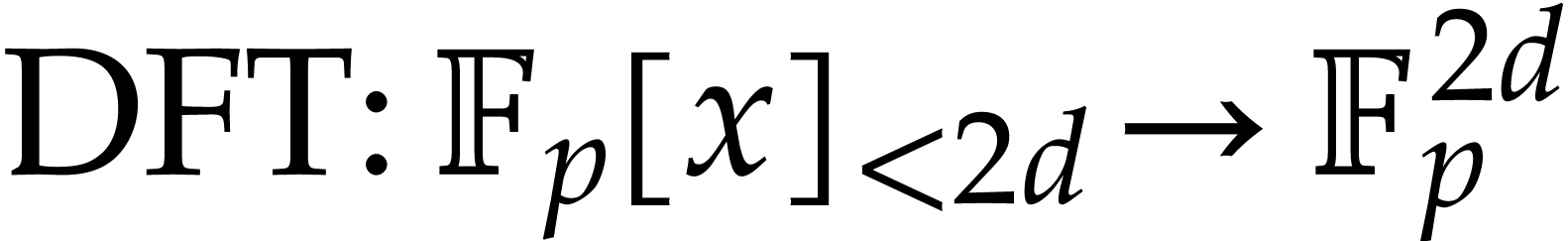

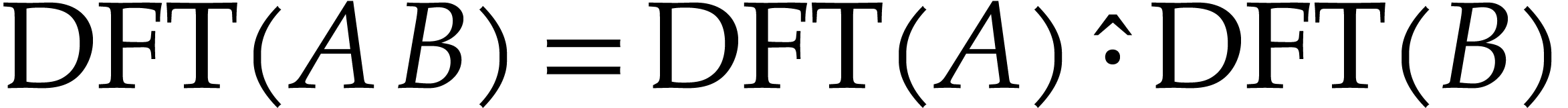

Several applications that motivated our work are discussed in section 6. From the perspective of a high level application, the SLP library is a mixture of a compiler and a traditional computer algebra library. Instead of directly calling a high level function like a matrix product or a discrete Fourier transform (DFT), the idea is rather to compute a function that is able to efficiently compute matrix products or DFTs of a specified kind (i.e. over a particular coefficient ring and for particular dimensions). In this scenario, the library is used as a tool to accelerate critical operations.

High level master routines for matrix products and DFTs may automatically compile and cache efficient machine code for matrices and DFTs of small or moderate sizes. Products and DFTs of large sizes can be reduced to these base cases. It is an interesting question whether these master routines should also be generated by the SLP library. In any case, the SLP framework allows for additional flexibility: instead of statically instantiating C++ templates for a given type, we may generate these instantiations dynamically. Moreover, using the techniques from section 5, this can be done in a way that exploits mathematical properties of the type, which are invisible for the C++ compiler.

More specifically, we will discuss applications to numerical homotopy continuation, discrete Fourier transforms, power series computations, differential equations, integer arithmetic, and geometric resolution of polynomial systems. There are many other applications, starting with linear algebra, but our selection will allow us to highlight various particular advantages and challenges for the SLP framework.

The last section 7 is dedicated to benchmarks. Some timings

for the

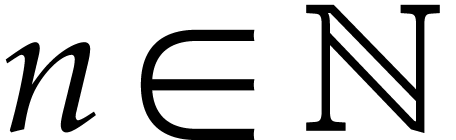

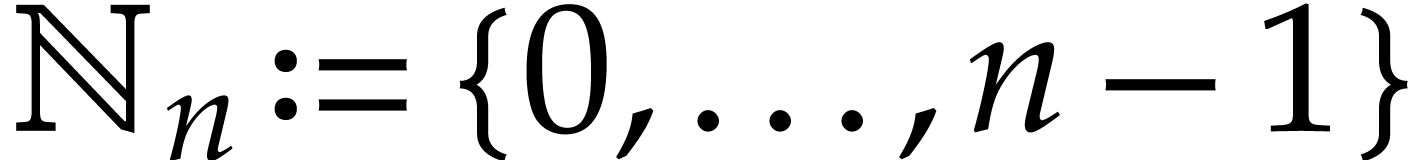

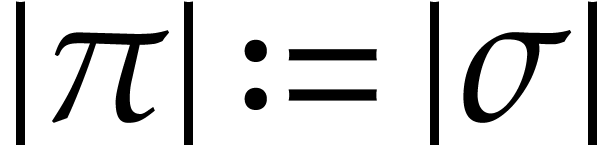

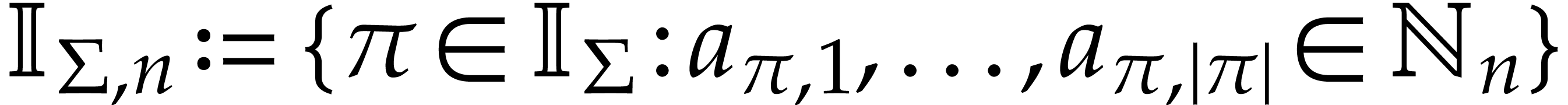

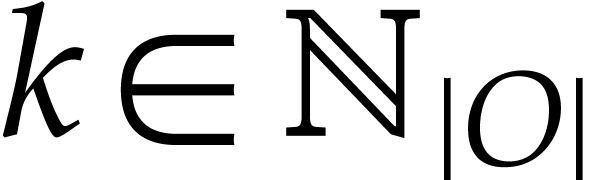

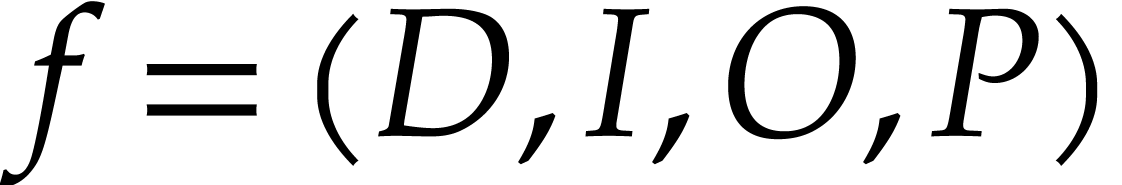

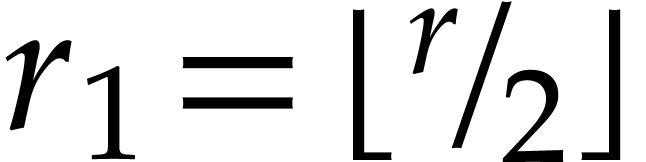

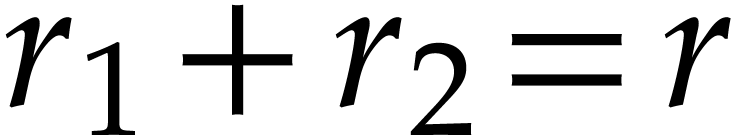

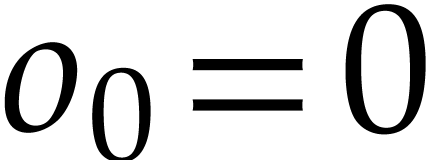

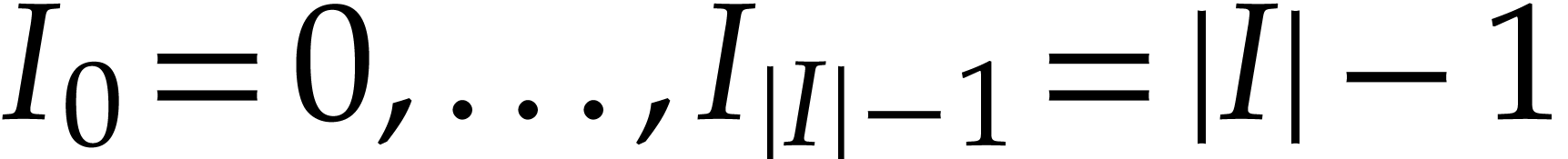

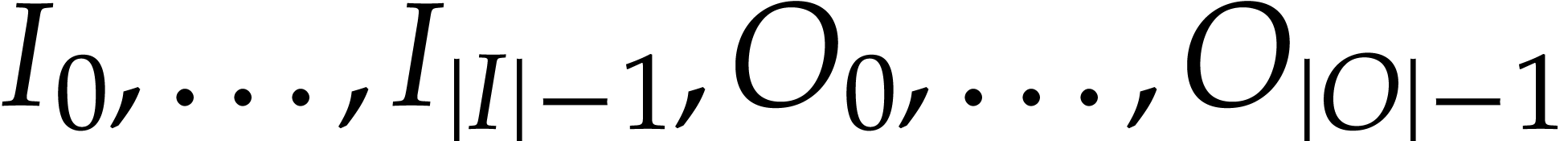

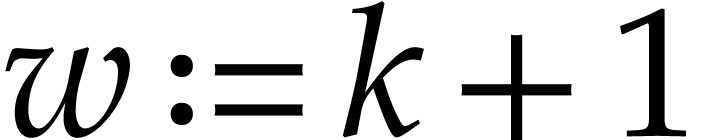

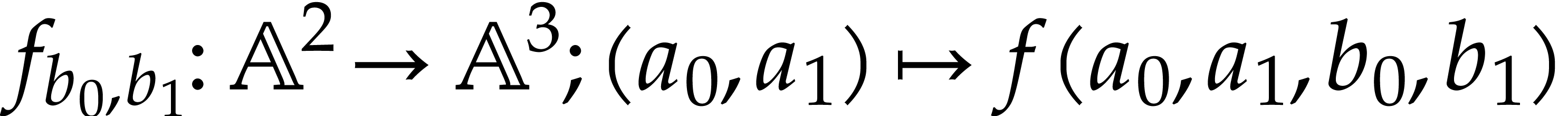

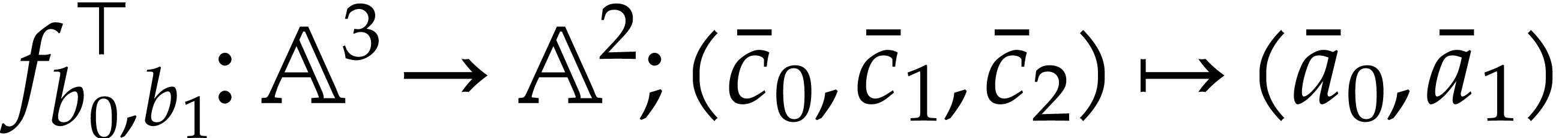

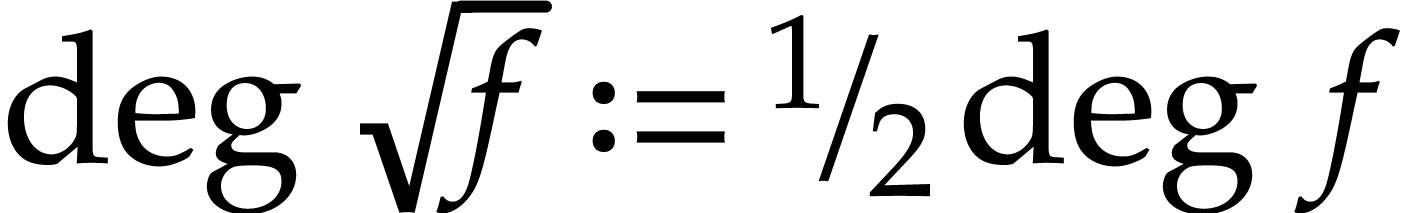

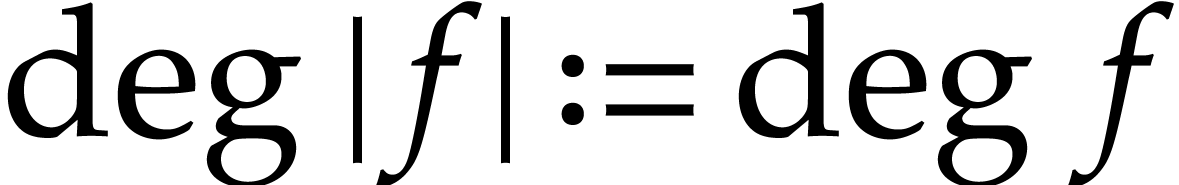

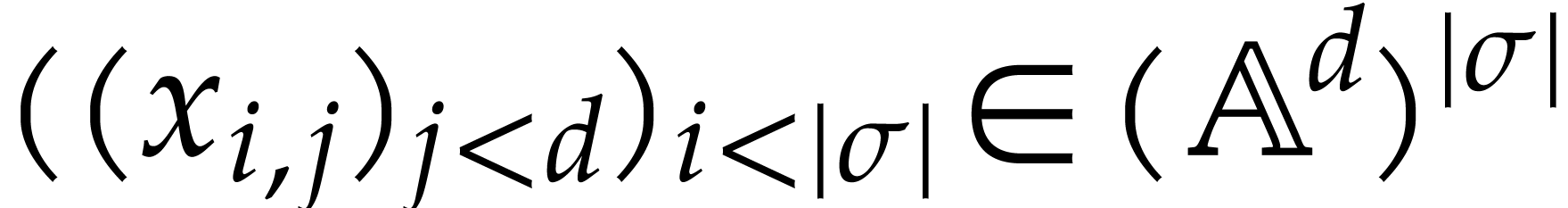

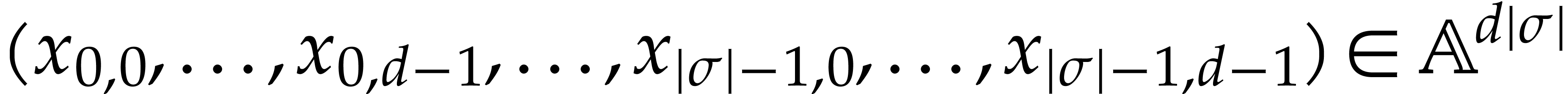

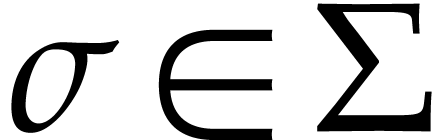

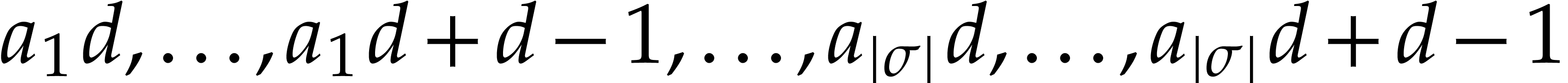

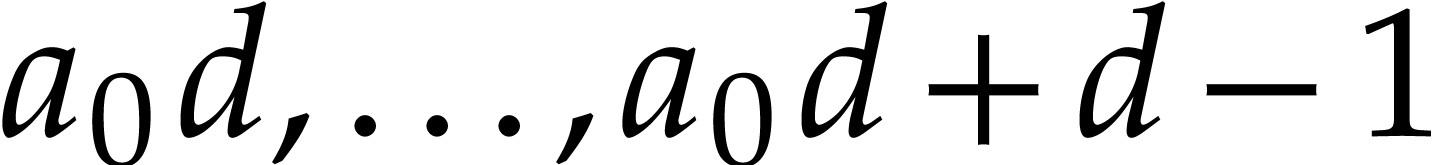

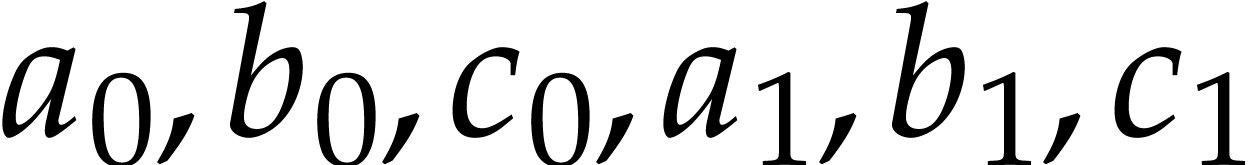

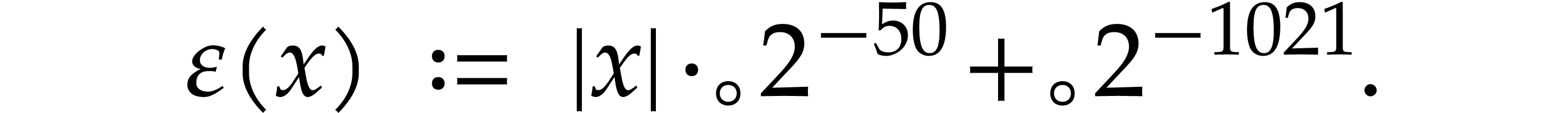

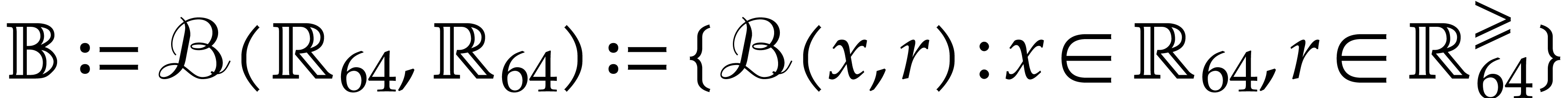

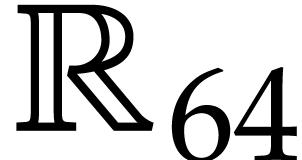

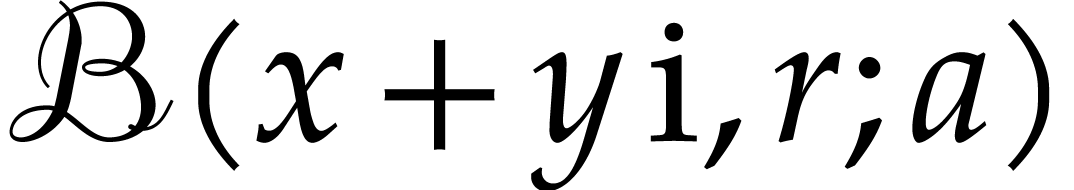

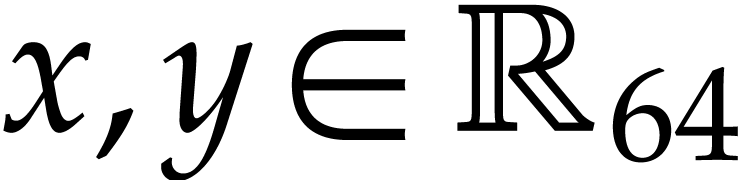

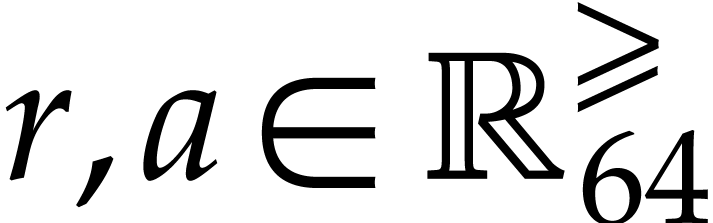

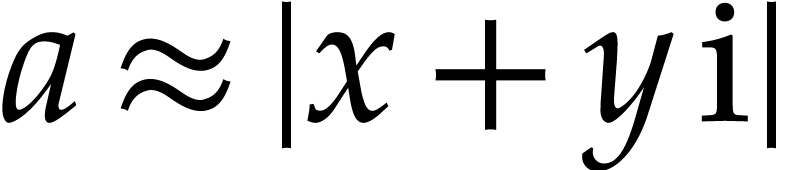

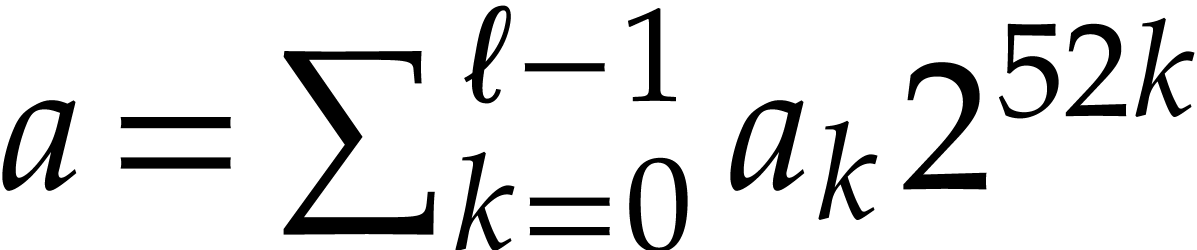

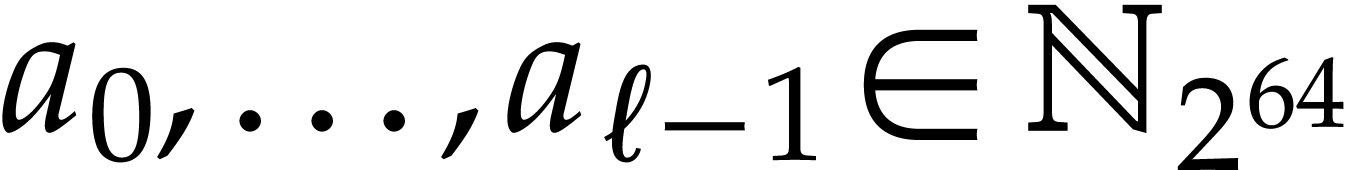

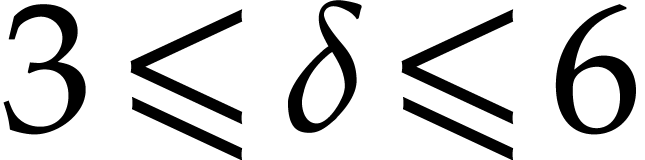

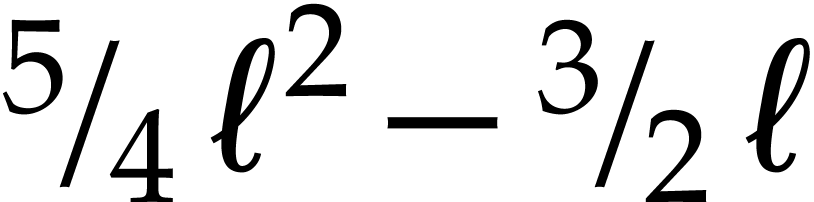

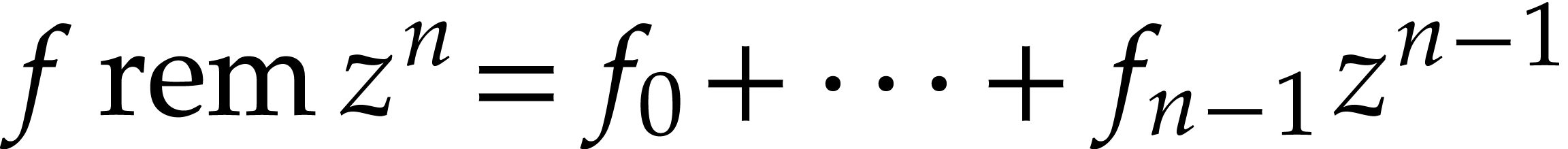

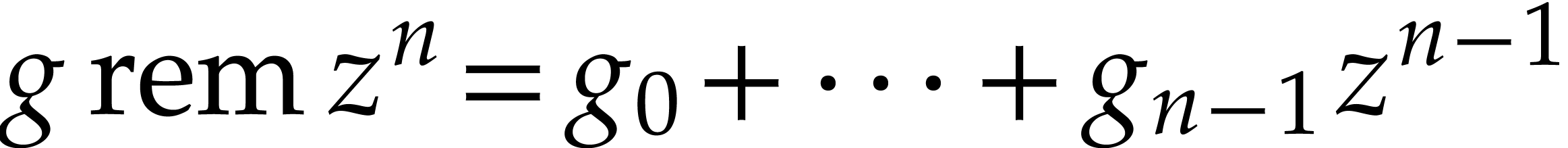

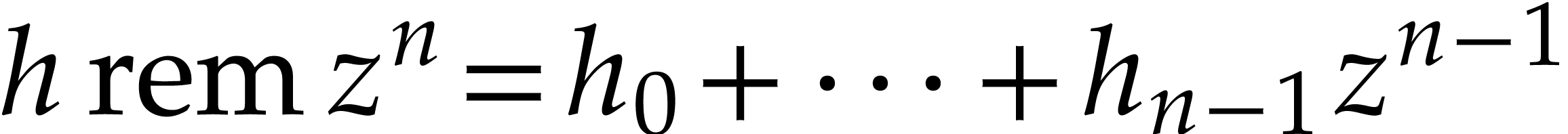

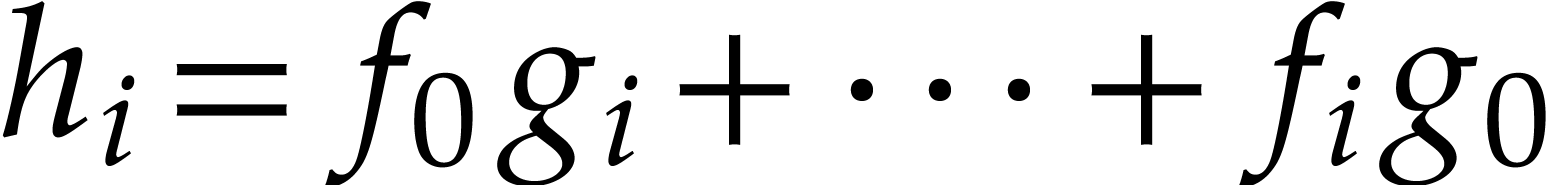

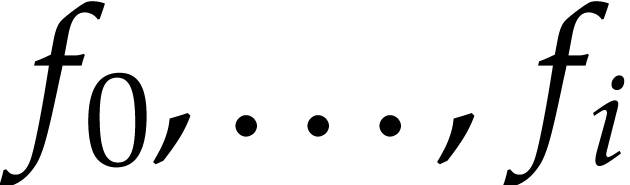

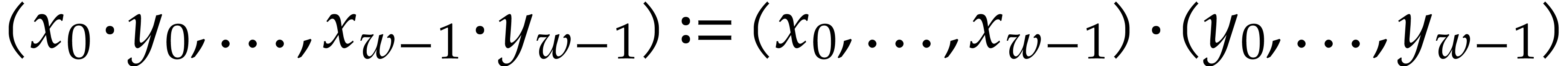

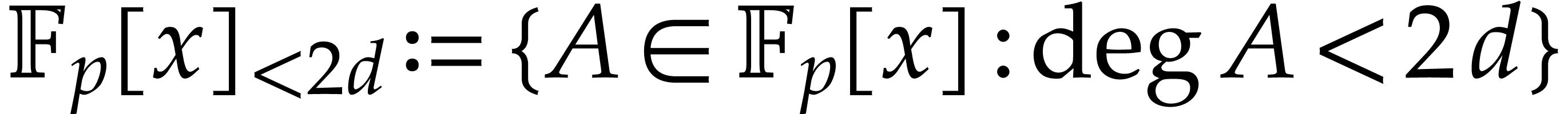

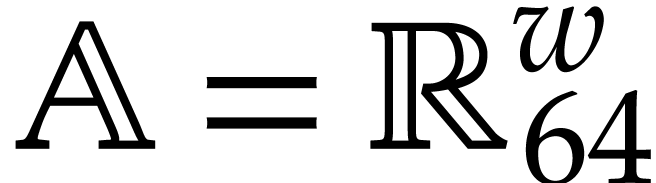

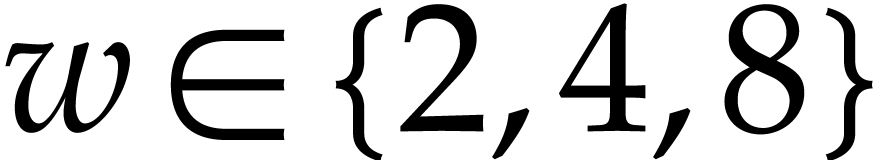

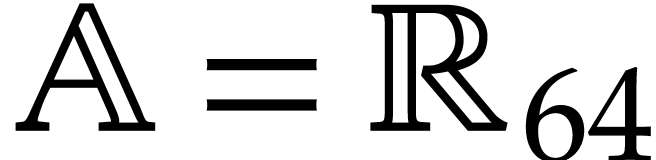

Notation. Throughout this paper, we denote  . For every

. For every  ,

we also define

,

we also define  .

.

Acknowledgments. We wish to thank Albin

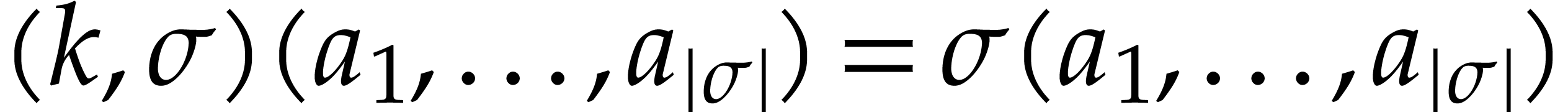

We start this section with an abstract formalization of SLPs. Our presentation is slightly different from [21] or [70], in order to be somewhat closer to the actual machine representation that we will describe next.

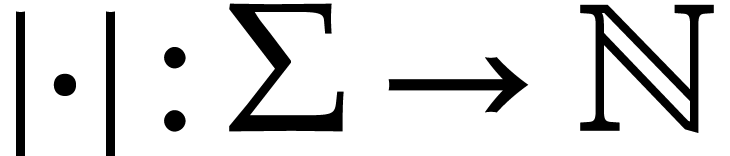

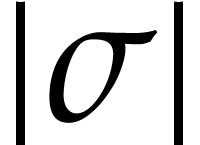

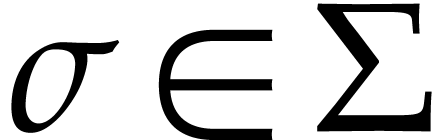

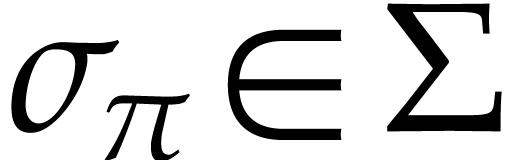

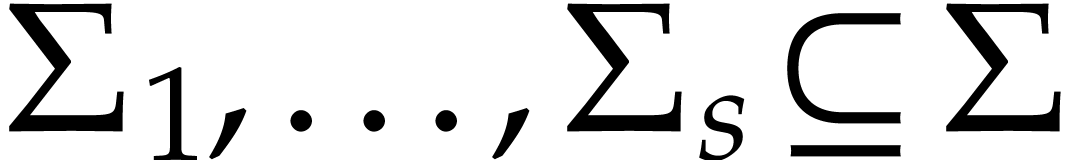

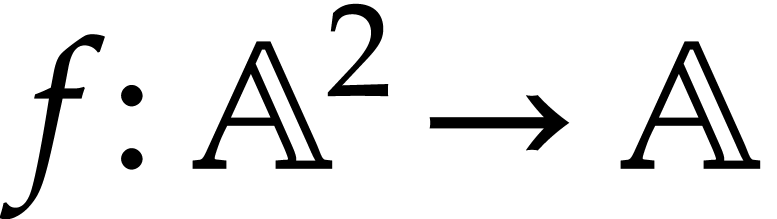

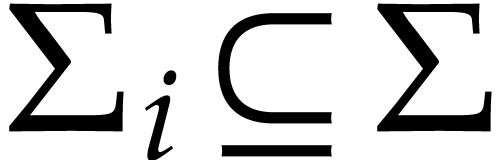

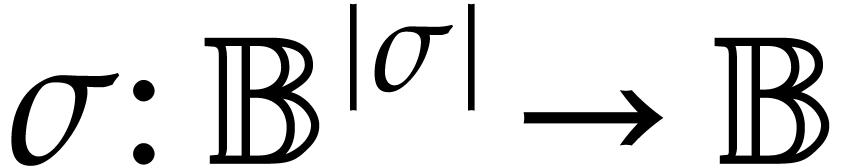

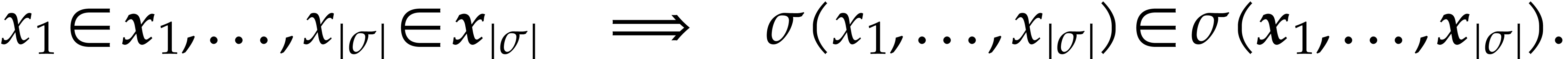

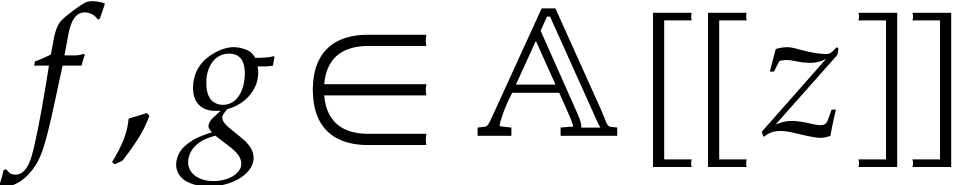

Let  be a set of operation symbols together with

a function

be a set of operation symbols together with

a function  . We call

. We call  a signature and

a signature and  the

arity of

the

arity of  , for any

operation

, for any

operation  . A domain

with signature

. A domain

with signature  is a set

is a set  together with a function

together with a function  for every

for every  . We often write

. We often write  instead of

instead of  if no confusion can arise. The domain

if no confusion can arise. The domain

is said to be effective if we have data

structures for representing the elements of

is said to be effective if we have data

structures for representing the elements of  and

and

on a computer and if we have programs for

on a computer and if we have programs for  and

and  , for

all

, for

all  .

.

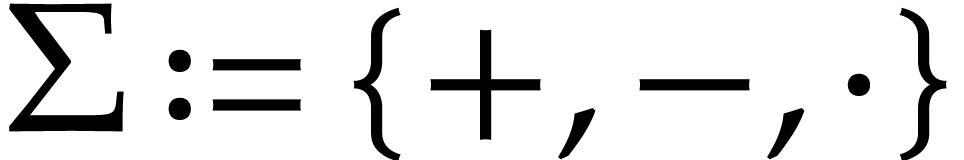

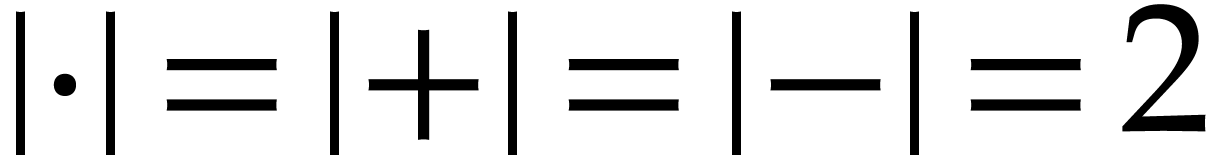

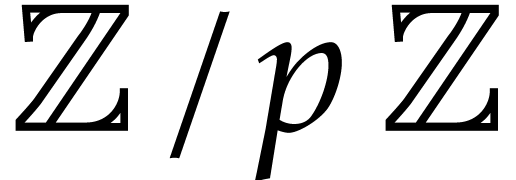

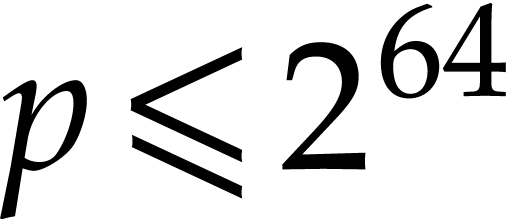

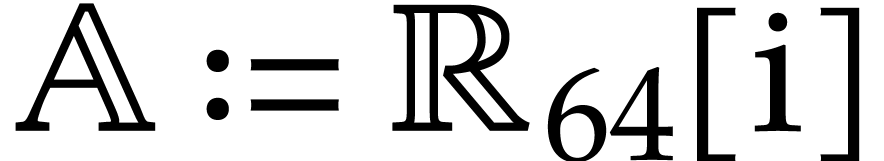

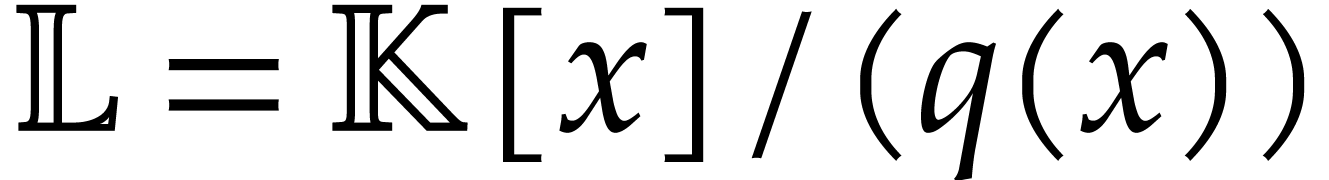

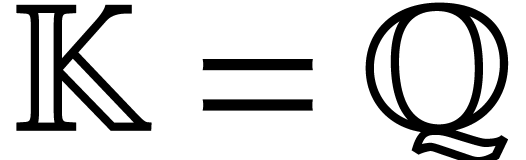

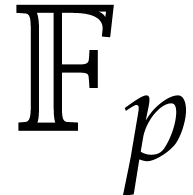

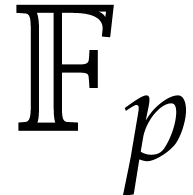

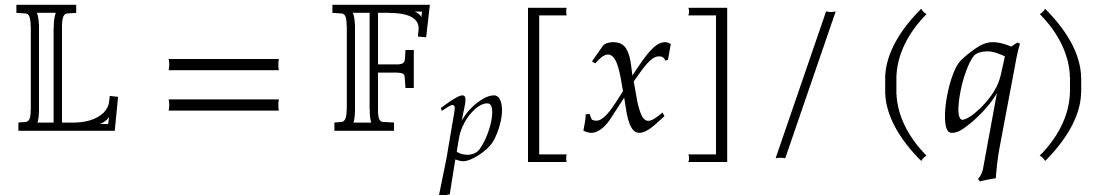

Example  to be the signature of rings with

to be the signature of rings with  and

and  to be the ring

to be the ring  or

or

.

.

Remark  is also

called a language (without relation symbols) and

is also

called a language (without relation symbols) and  a structure for this language; see, e.g., [7, Appendix B]. For simplicity, we restricted ourselves to

one-sorted languages, but extensions to the multi-sorted case are

straightforward.

a structure for this language; see, e.g., [7, Appendix B]. For simplicity, we restricted ourselves to

one-sorted languages, but extensions to the multi-sorted case are

straightforward.

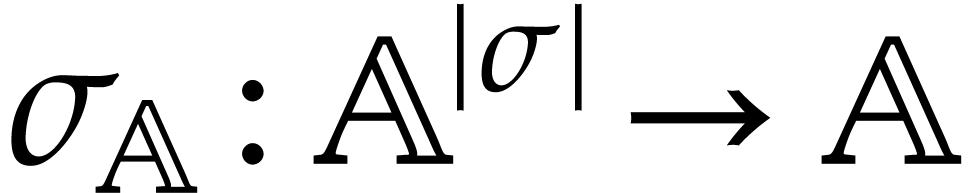

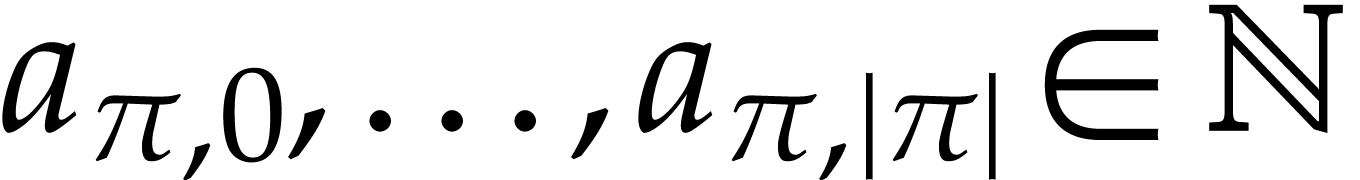

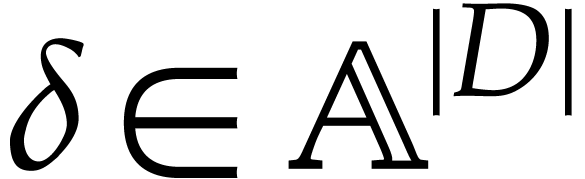

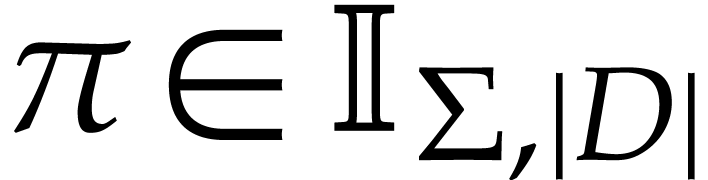

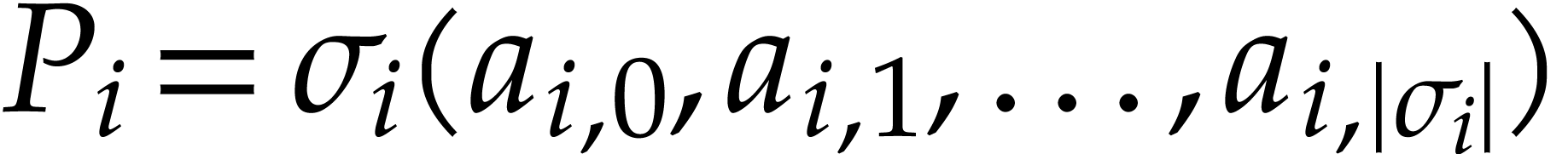

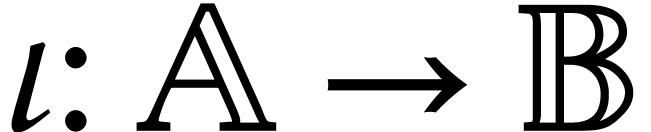

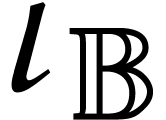

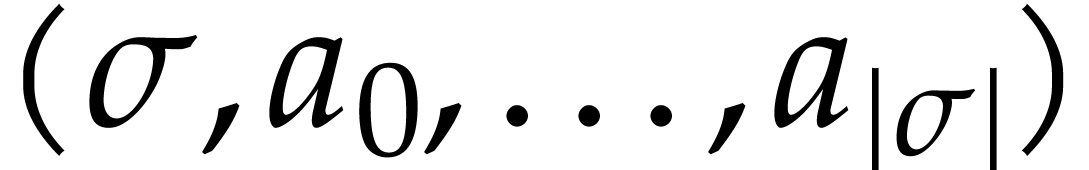

Let  be an effective domain with signature

be an effective domain with signature  . An instruction is a

tuple

. An instruction is a

tuple

with  and arguments

and arguments  , where

, where  .

We call

.

We call  the destination argument or

operand and

the destination argument or

operand and  the source

arguments or operands. We denote by

the source

arguments or operands. We denote by  the set of such instructions. Given

the set of such instructions. Given  ,

we also let

,

we also let  . A

straight-line program (SLP) over

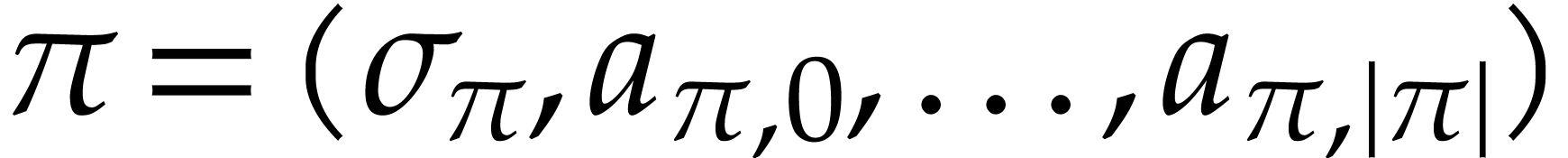

. A

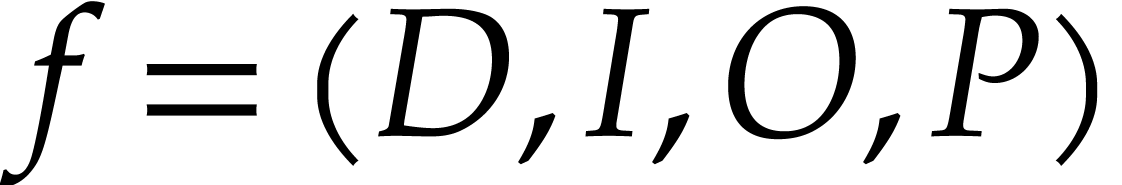

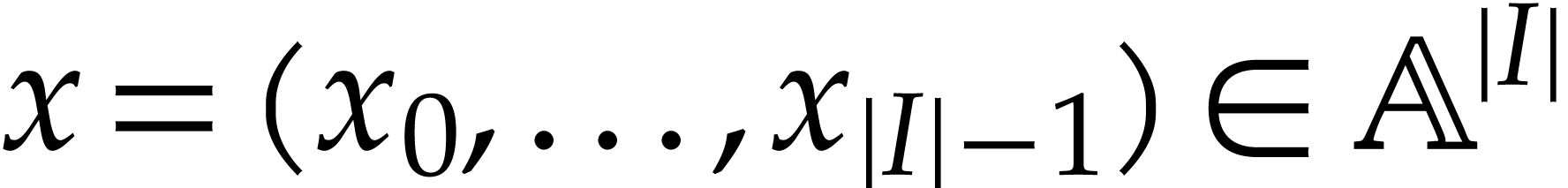

straight-line program (SLP) over  is a quadruple

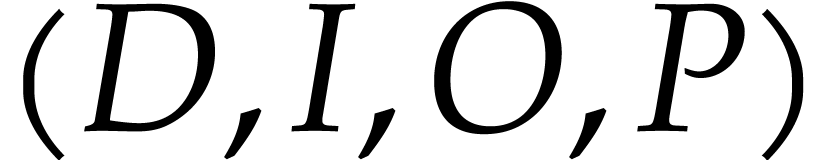

is a quadruple  , where

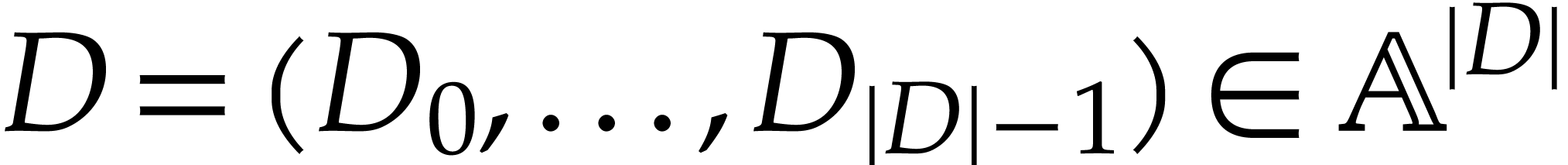

, where

is a tuple of data fields,

is a tuple of data fields,

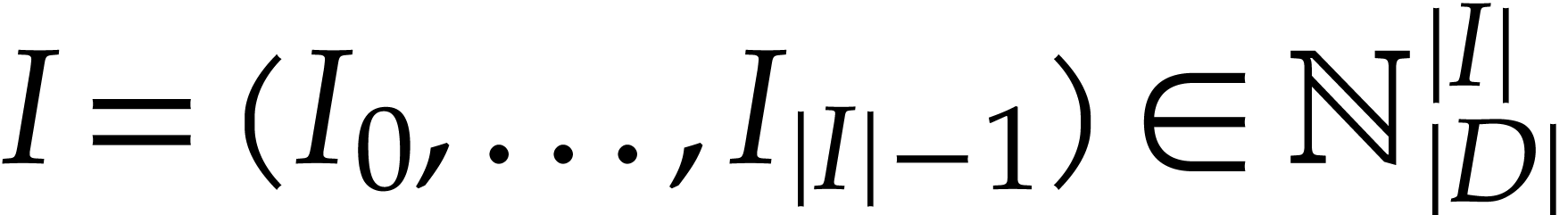

is a tuple of pairwise distinct input

locations,

is a tuple of pairwise distinct input

locations,

is a tuple of output locations,

is a tuple of output locations,

is a tuple of instructions.

is a tuple of instructions.

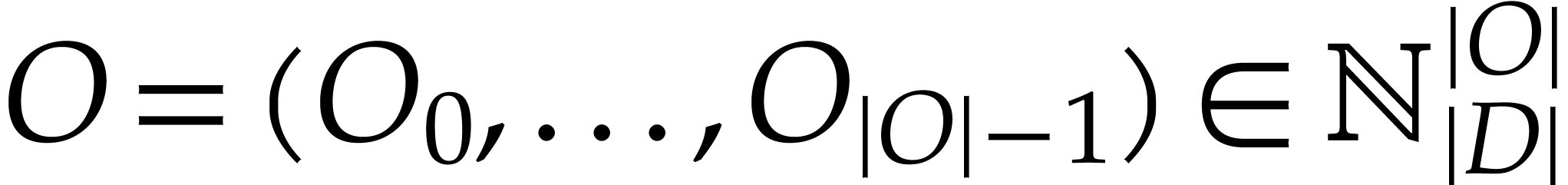

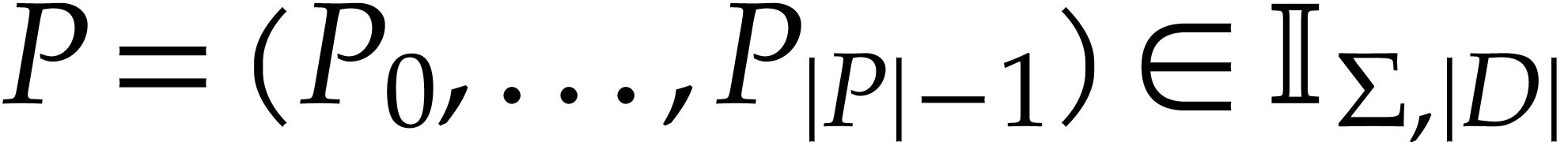

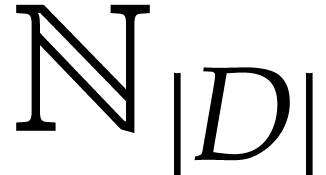

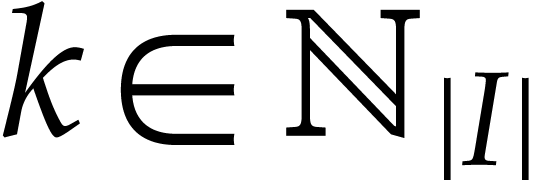

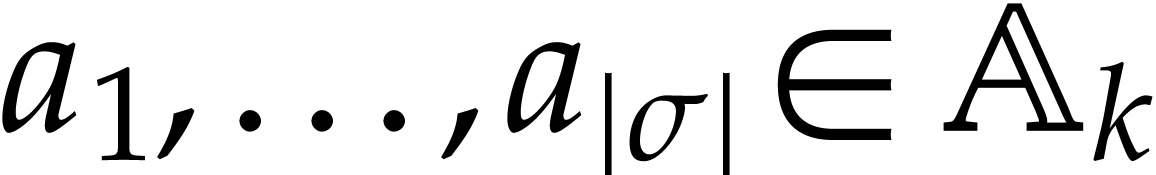

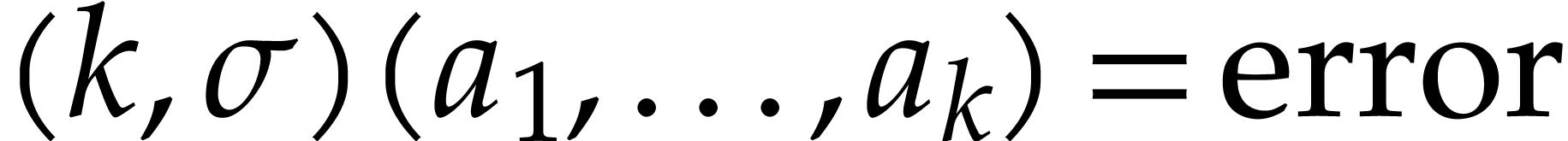

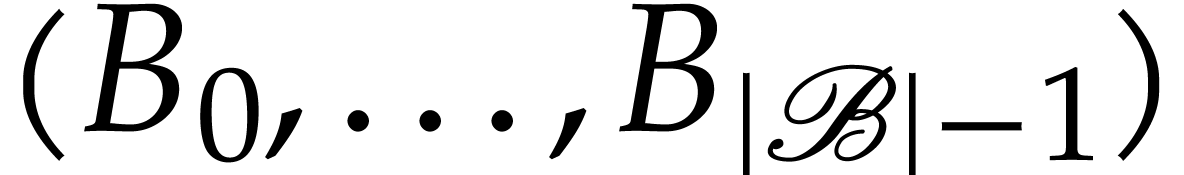

We regard  as the working space for our SLP. Each

location in this working space can be represented using an integer in

as the working space for our SLP. Each

location in this working space can be represented using an integer in

and instructions directly operate on the working

space as we will describe now.

and instructions directly operate on the working

space as we will describe now.

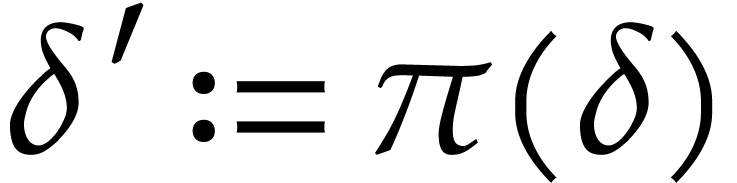

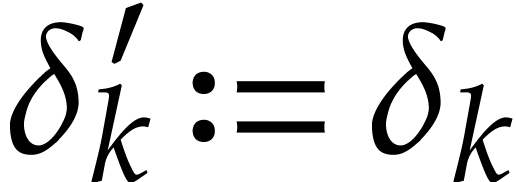

Given a state  of our working space, the

execution of an instruction

of our working space, the

execution of an instruction  gives rise

to a new state

gives rise

to a new state  where

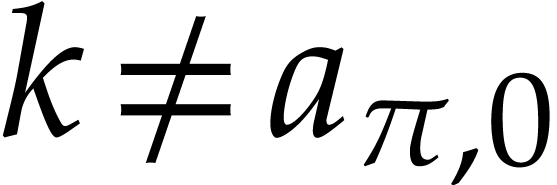

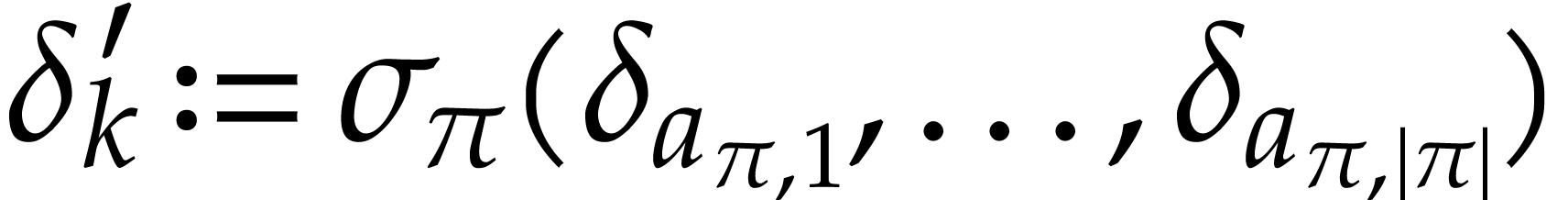

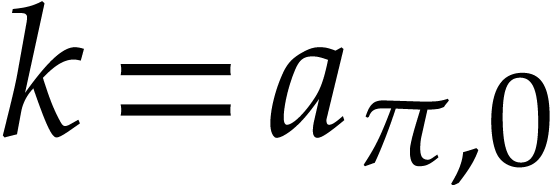

where  if

if

and

and  if

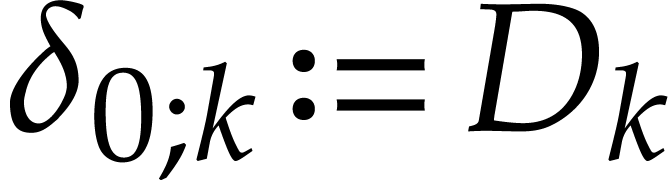

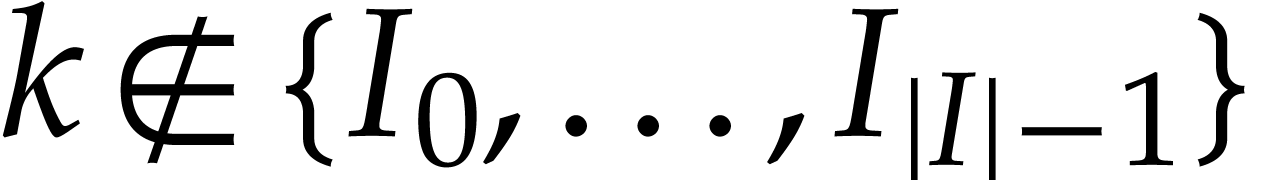

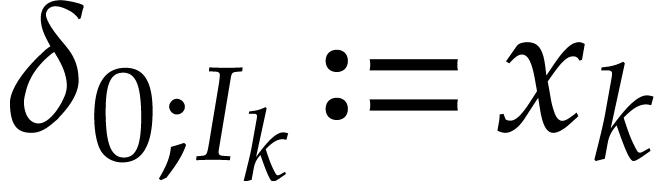

if  . Given input values

. Given input values  , the corresponding begin state

, the corresponding begin state  of our SLP is given by

of our SLP is given by  if

if  and

and  for

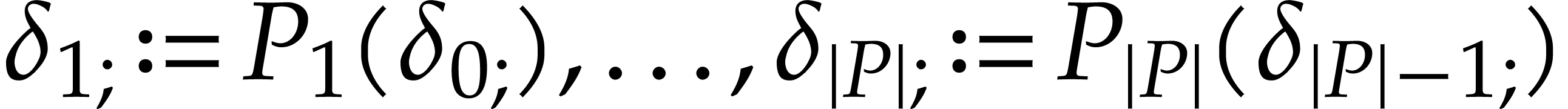

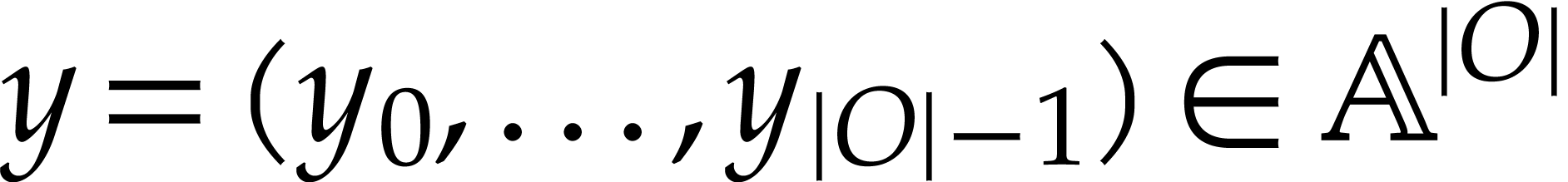

for  . Execution of the SLP next gives rise to a

sequence of states

. Execution of the SLP next gives rise to a

sequence of states  . The

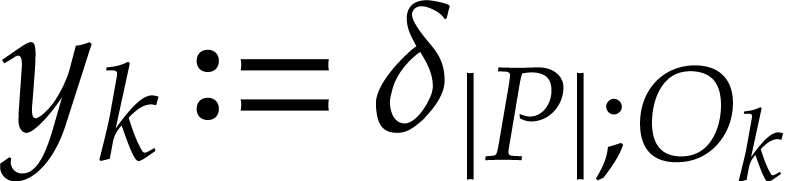

output values

. The

output values  of our SLP are now given

by

of our SLP are now given

by  for

for  .

In this way our SLP

.

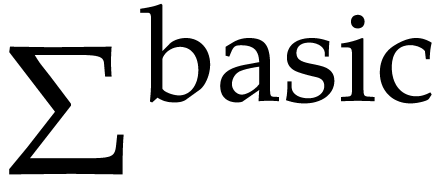

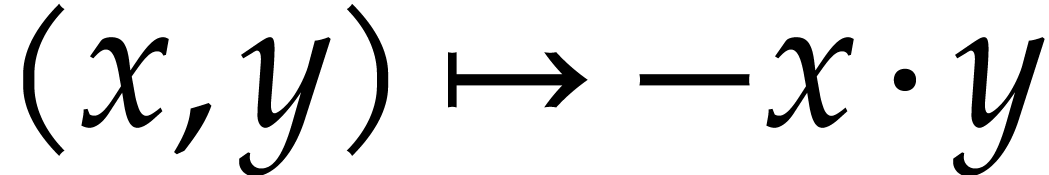

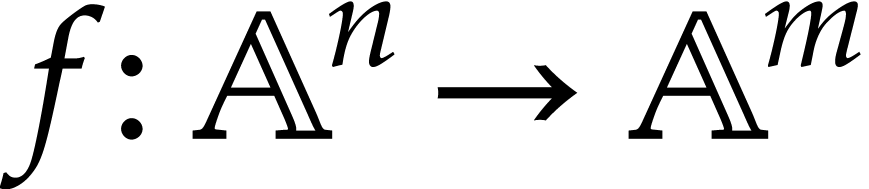

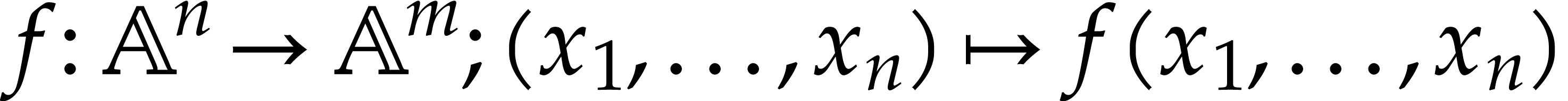

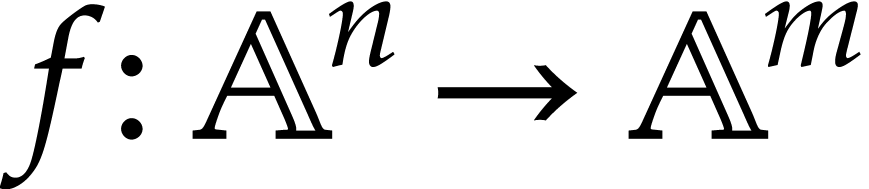

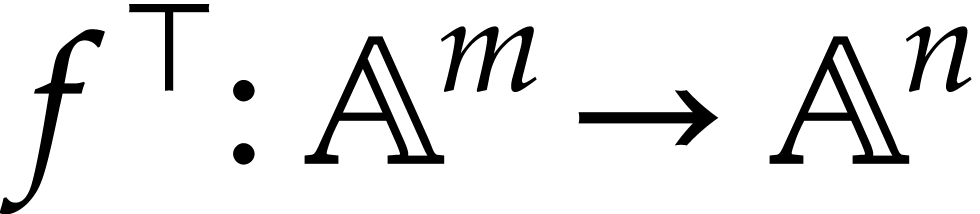

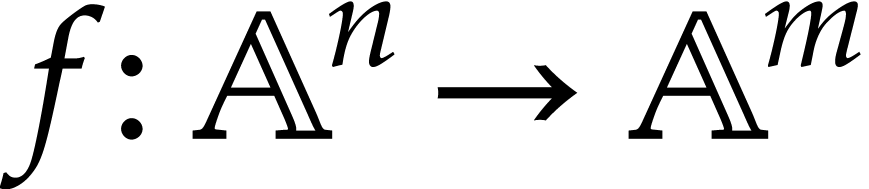

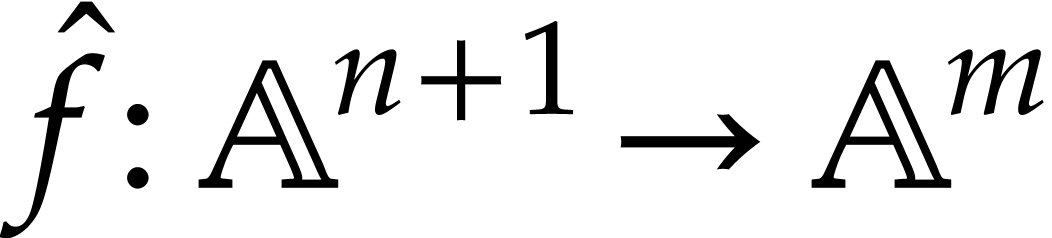

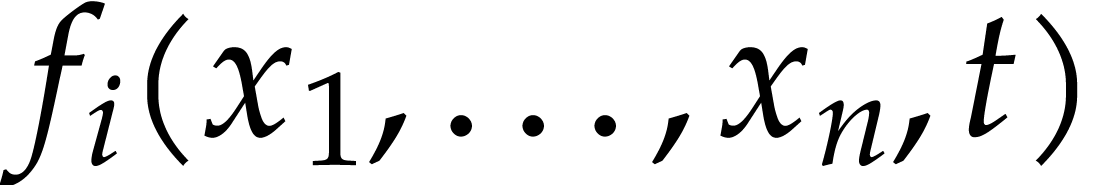

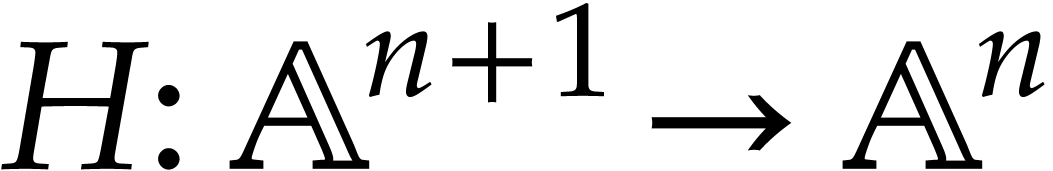

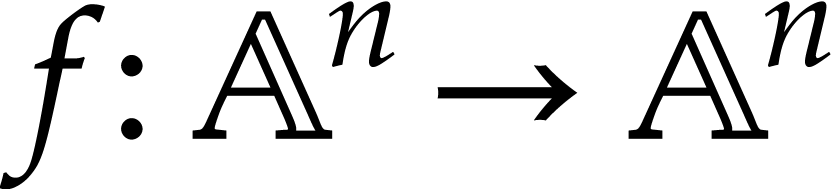

In this way our SLP  gives rise to a function

gives rise to a function

that we will also denote by

that we will also denote by  . Two SLPs will be said to be

equivalent if they compute the same function.

. Two SLPs will be said to be

equivalent if they compute the same function.

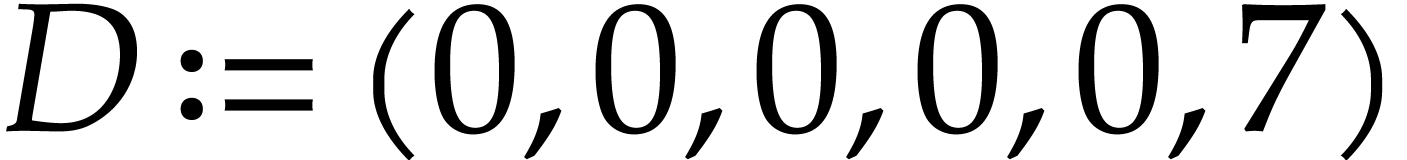

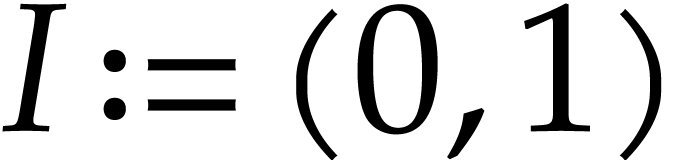

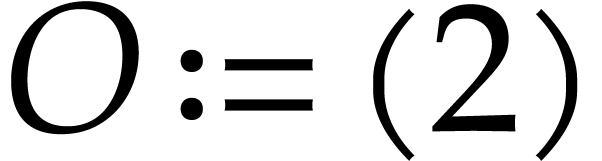

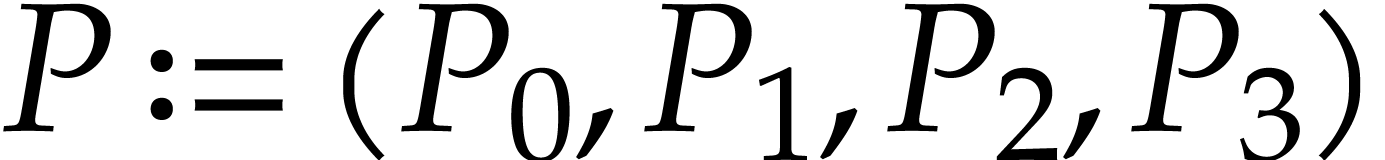

Example  and

and  as in Example 2.1,

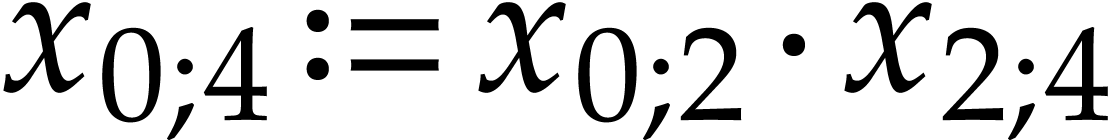

our introductory example (1.1) can be represented as

follows:

as in Example 2.1,

our introductory example (1.1) can be represented as

follows:  ,

,  ,

,  ,

and

,

and  with

with

One may check that this SLP indeed computes the intended function when

using the above semantics. Note that we may chose arbitrary values for

the input fields  , the output

field

, the output

field  , and the auxiliary

data fields

, and the auxiliary

data fields  ,

,  . So we may just as well take them to be zero,

as we did.

. So we may just as well take them to be zero,

as we did.

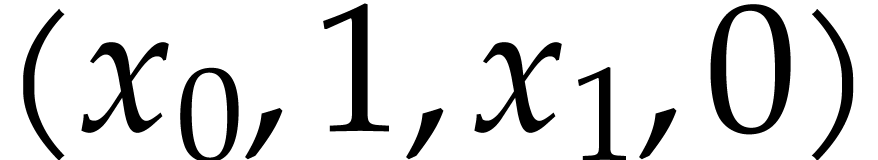

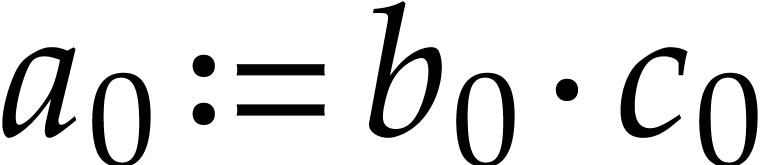

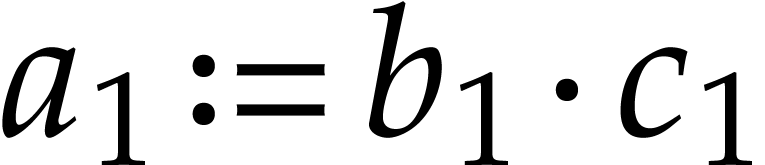

For the sake of readability, we will continue to use the notation (1.1) instead (2.1), the necessary rewritings

being clear. If we wish to make the numbering of registers precise in

(1.1), then we will replace  by

by

:

:

|

(2.2) |

Note that  is not really an instruction, but

rather a way to indicate that the data field

is not really an instruction, but

rather a way to indicate that the data field  contains the constant

contains the constant  .

.

It will be useful to call elements of  variables and denote them by more intelligible symbols like

variables and denote them by more intelligible symbols like

whenever convenient. The entries of

whenever convenient. The entries of  and

and  are called input and

output variables, respectively. Non-input variables that also

do not occur as destination operands of instructions in

are called input and

output variables, respectively. Non-input variables that also

do not occur as destination operands of instructions in  are called constants. An auxiliary variable is a

variable that is neither an input variable, nor an output variable, nor

a constant. Given a variable

are called constants. An auxiliary variable is a

variable that is neither an input variable, nor an output variable, nor

a constant. Given a variable  the corresponding

data field is

the corresponding

data field is  .

Input variables, output variables, and constants naturally correspond to

input fields, output fields, and constant

fields.

.

Input variables, output variables, and constants naturally correspond to

input fields, output fields, and constant

fields.

Remark  of the SLP as small

as possible.

of the SLP as small

as possible.

Remark

One crucial design decision concerns the choice of the signature  and the way how operations are represented.

and the way how operations are represented.

On the one hand, we do not want to commit ourselves to one specific

signature, so it is important to plan future extensions and even allow

users to specify their own dynamic signatures. This kind of flexibility

requires no extra implementation effort for many

“signature-agnostic” operations on SLPs, such as common

subexpression elimination, register allocation, etc. On the other hand,

the precise signature does matter for operations like simplification,

automatic differentiation, etc. We need our code to be as efficient as

possible for the most common operations in  ,

while providing the possibility to smoothly extend our implementations

to more exotic operations whenever needed.

,

while providing the possibility to smoothly extend our implementations

to more exotic operations whenever needed.

In the  by abstract expressions.

Exploiting pattern matching facilities inside

by abstract expressions.

Exploiting pattern matching facilities inside

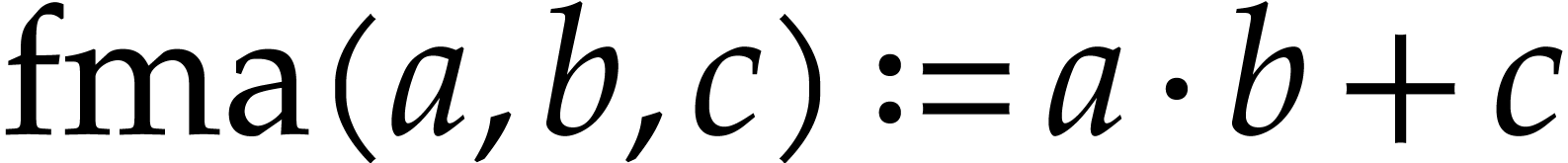

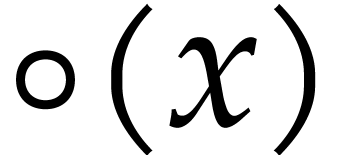

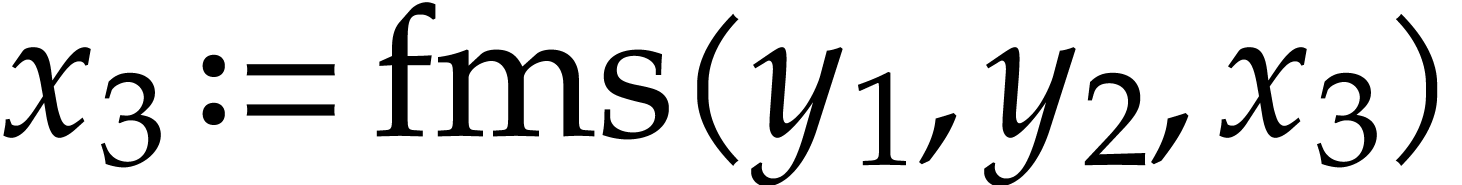

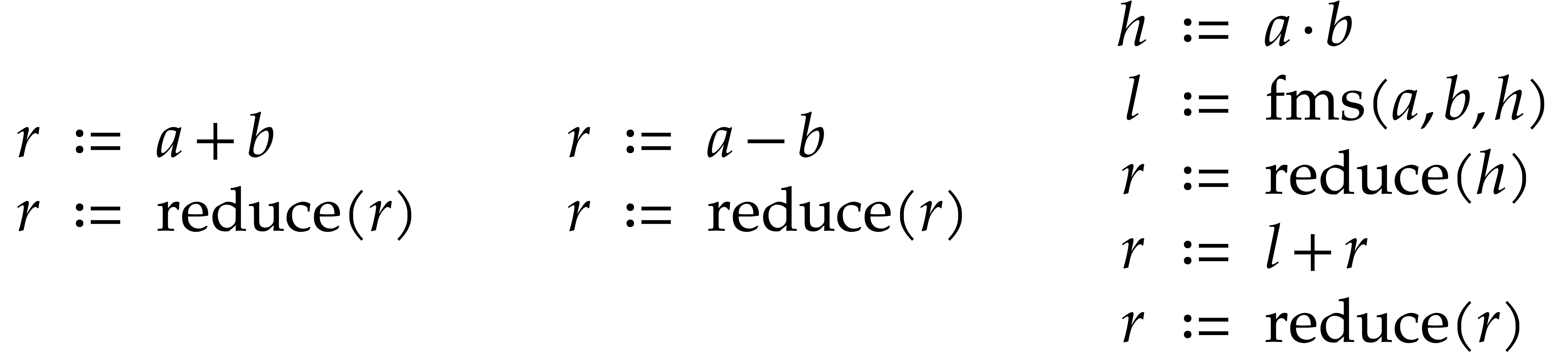

What about the choice of a subsignature  of

operations that we deem to be “most common” and that should

benefit from particularly efficient support in the library? At the end

of the day, we wish to turn our SLPs into efficient machine code. It is

therefore useful to draw inspiration from the instruction sets of modern

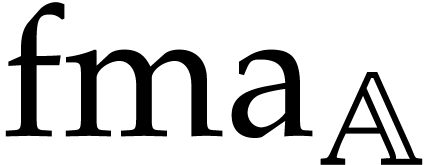

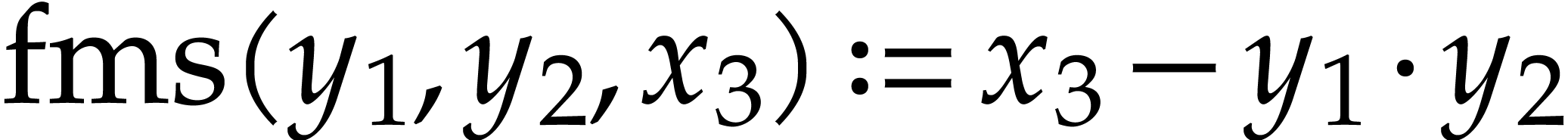

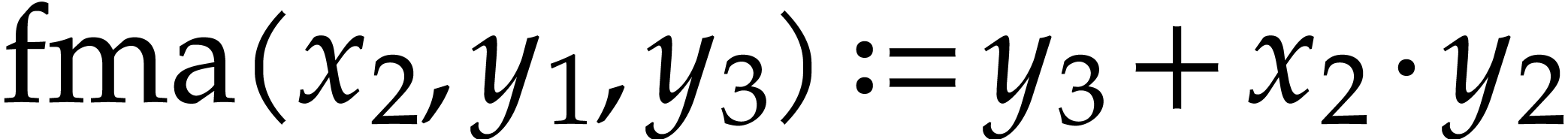

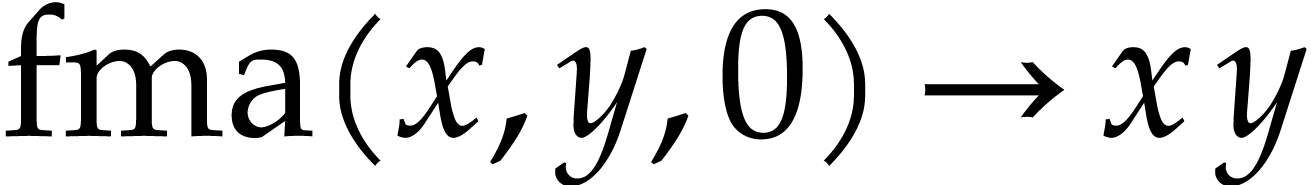

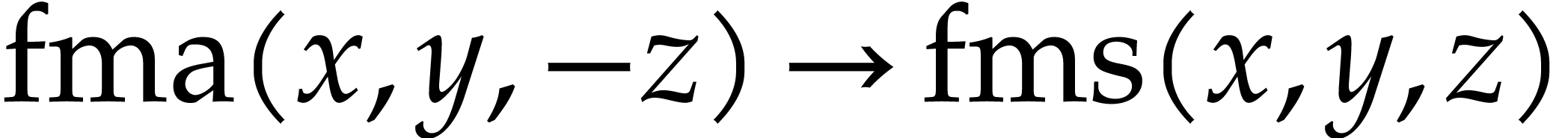

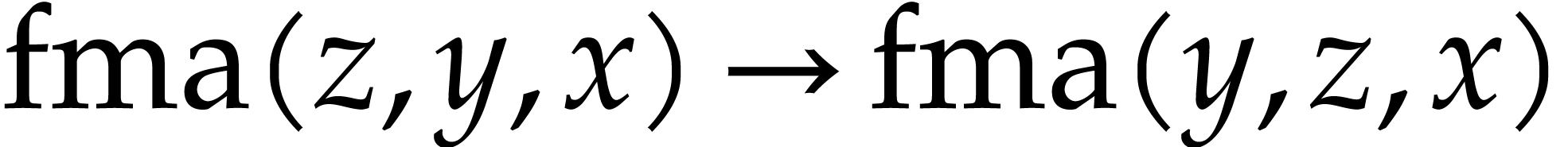

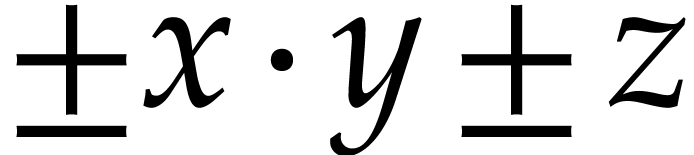

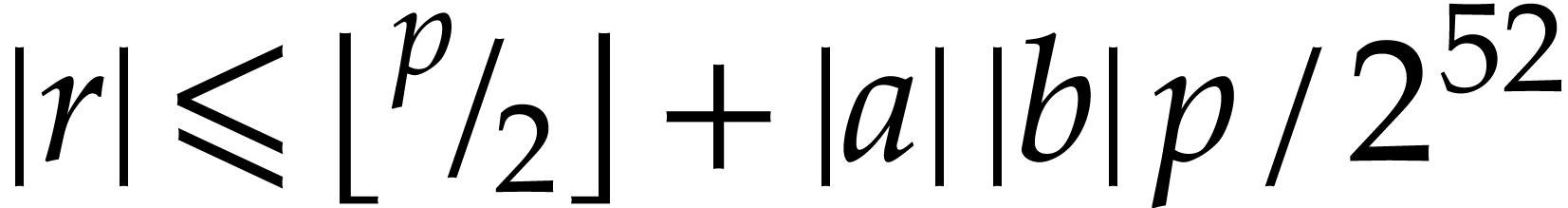

CPUs and GPUs. For example, this motivated our decision to include the

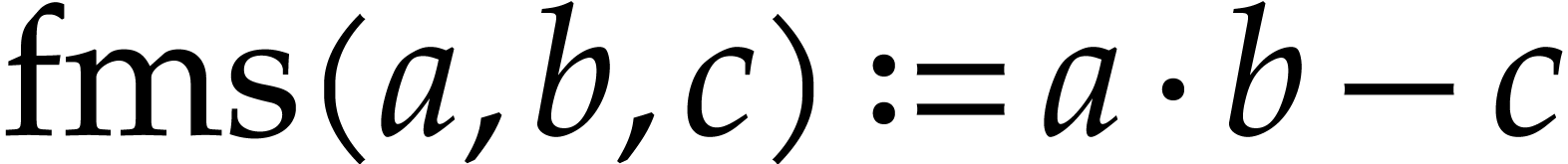

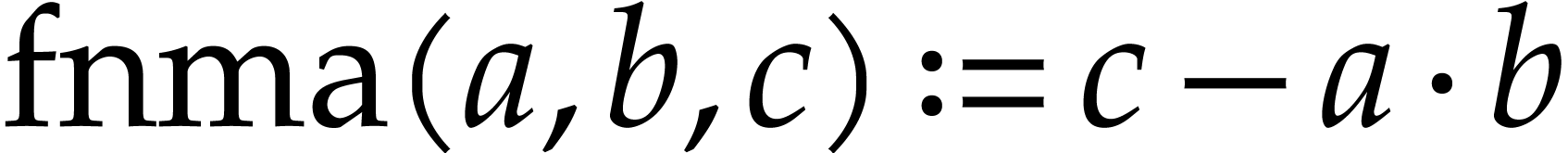

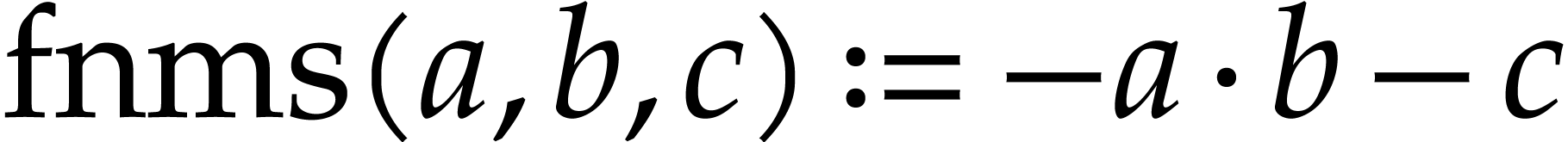

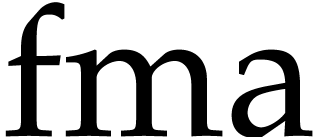

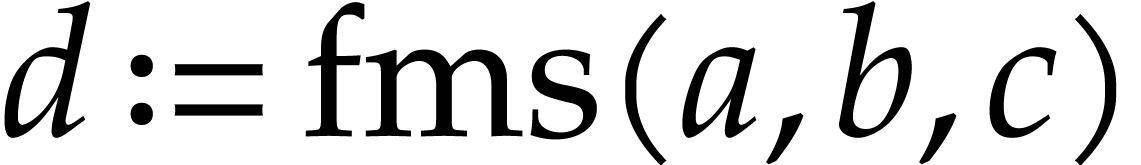

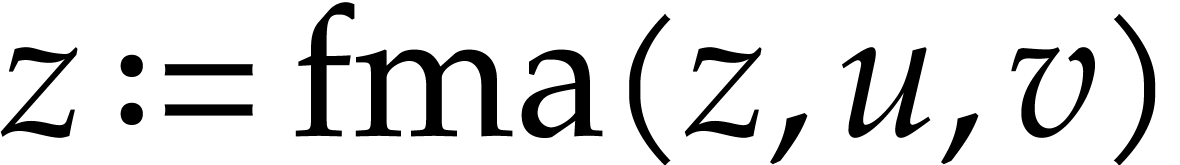

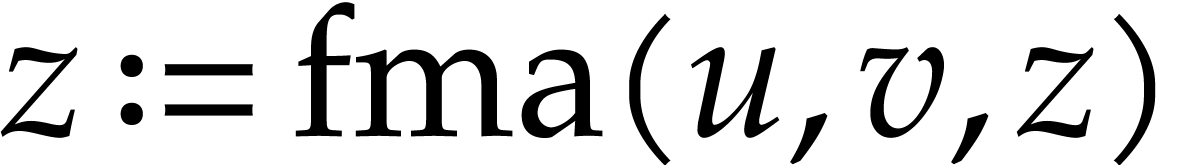

fused multiply add (FMA) operation

of

operations that we deem to be “most common” and that should

benefit from particularly efficient support in the library? At the end

of the day, we wish to turn our SLPs into efficient machine code. It is

therefore useful to draw inspiration from the instruction sets of modern

CPUs and GPUs. For example, this motivated our decision to include the

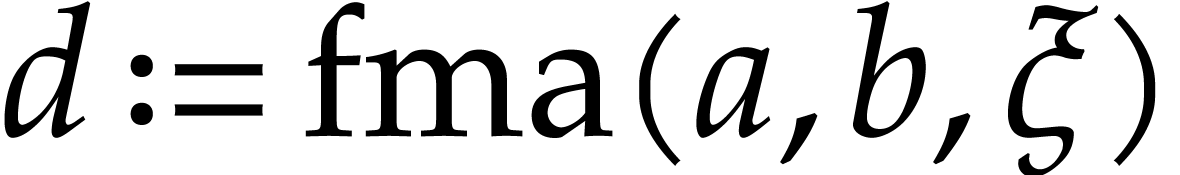

fused multiply add (FMA) operation  in

in

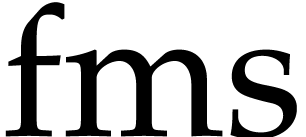

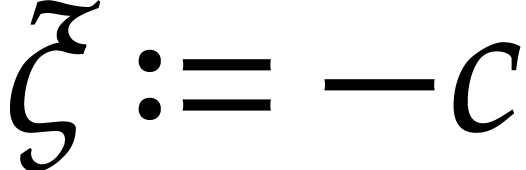

, together with its signed

variants

, together with its signed

variants  ,

,  , and

, and  .

Although this adds to the complexity of

.

Although this adds to the complexity of  (why not

emulate FMA using multiplication and addition?), this decision tends to

result in better machine code (more about this below; see also section

5.7).

(why not

emulate FMA using multiplication and addition?), this decision tends to

result in better machine code (more about this below; see also section

5.7).

However, the instructions sets of modern processors are very large. In order to keep our SLP library as simple as possible, it would be unreasonable to directly support all these instructions. Much of the complexity of modern instruction sets is due to multiple instances of the same instruction for different data types, SIMD vector widths, immediate arguments, addressing modes, guard conditions, etc. In the SLP setting it is more meaningful to implement general mechanisms for managing such variants. Modern processors also tend to provide a large number of exotic instructions, which are only needed at rare occasions, and which can typically be emulated using simpler instructions.

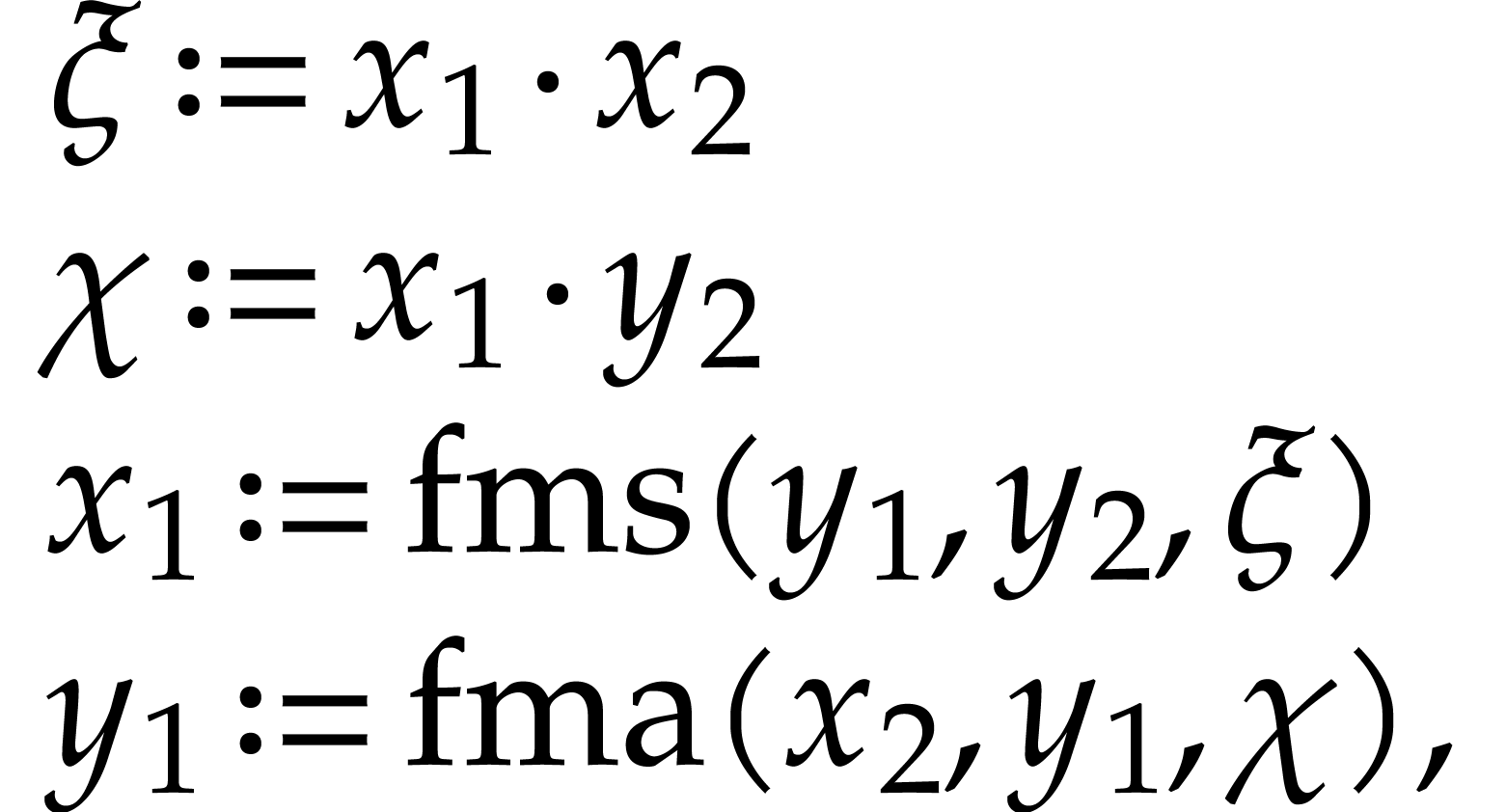

One useful criterion for deciding when and where to support particular operations is to ask the question how easily the operation can be emulated, while resulting in final machine code of the same quality. Let us illustrate this idea on a few examples.

Imagine that our hardware supports FMA, but that we did not include this

operation in  . In order to

exploit this hardware feature, we will need to implement a special

post-processing transformation on SLPs for locating and replacing pairs

of instructions like

. In order to

exploit this hardware feature, we will need to implement a special

post-processing transformation on SLPs for locating and replacing pairs

of instructions like  and

and  by FMAs, even when they are separated by several independent

instructions. This is doable, but not so easy. Why bother, if it

suffices to add FMA to our signature and generate high quality code with

FMAs from the outset? Maybe it will require more implementation efforts

to systematically support FMAs inside our routines on SLPs, but we won't

need a separate and potentially expensive post-processing step.

by FMAs, even when they are separated by several independent

instructions. This is doable, but not so easy. Why bother, if it

suffices to add FMA to our signature and generate high quality code with

FMAs from the outset? Maybe it will require more implementation efforts

to systematically support FMAs inside our routines on SLPs, but we won't

need a separate and potentially expensive post-processing step.

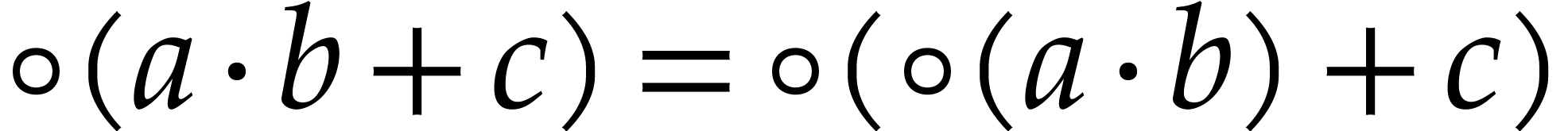

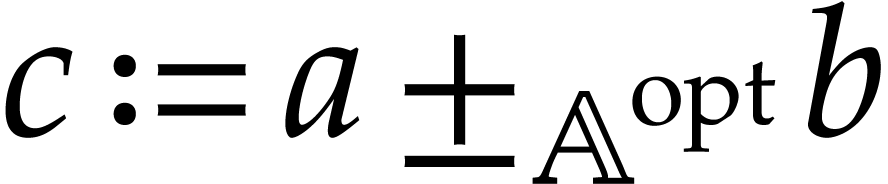

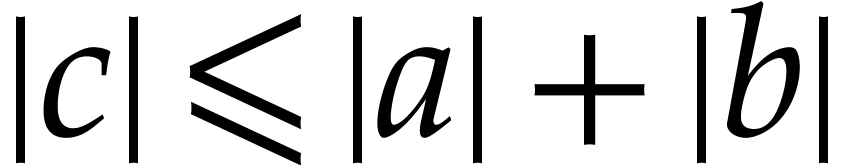

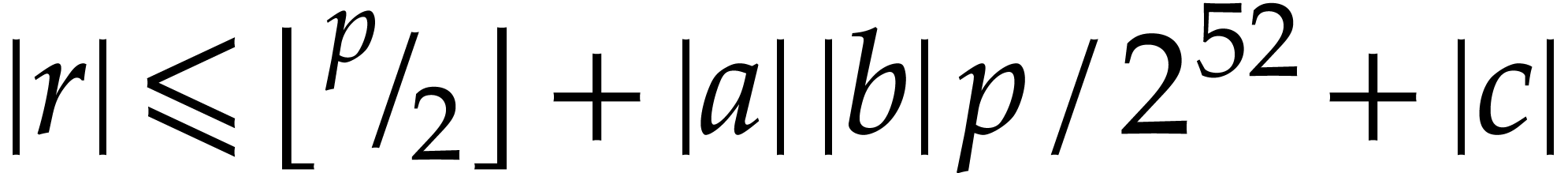

In fact, for floating point numbers that follow the IEEE 754 standard

[5], the FMA instruction cannot be emulated using one

multiplication and one addition. This is due to the particular semantics

of correct rounding: if  denotes the double

precision number that is nearest to a real number

denotes the double

precision number that is nearest to a real number  , then we do not always have

, then we do not always have  . The IEEE 754 rounding semantics can be exploited

for the implementation of extended precision arithmetic [25,

99, 69, 77] and modular

arithmetic [13, 73]. This provides additional

motivation for including FMA in our basic signature

. The IEEE 754 rounding semantics can be exploited

for the implementation of extended precision arithmetic [25,

99, 69, 77] and modular

arithmetic [13, 73]. This provides additional

motivation for including FMA in our basic signature  .

.

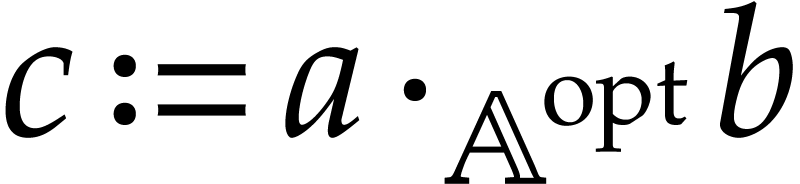

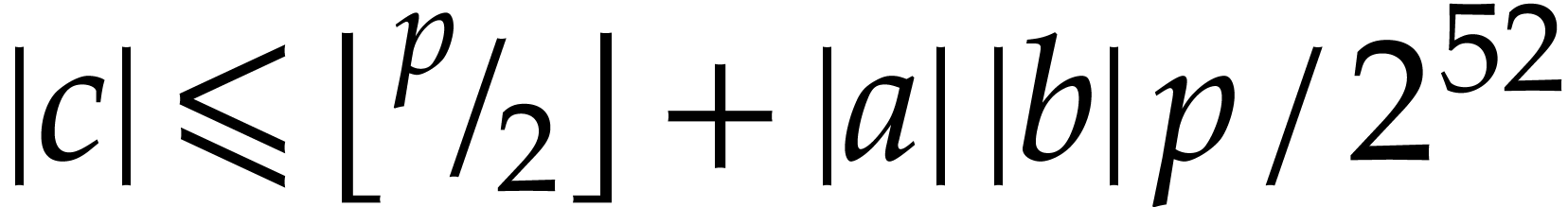

We do not only have flexibility regarding the signature  , but also when it comes to the domains

, but also when it comes to the domains  that we wish to support. For the selection of

operations in

that we wish to support. For the selection of

operations in  , it is also

important to consider their implementations for higher level domains,

such as modular integers, matrices, truncated power series, etc. Now the

implementation of

, it is also

important to consider their implementations for higher level domains,

such as modular integers, matrices, truncated power series, etc. Now the

implementation of  is often simpler than the

combined implementation of

is often simpler than the

combined implementation of  and

and  . For instance, if

. For instance, if  is

the domain of

is

the domain of  matrices over a field, then

matrices over a field, then  can be implemented using

can be implemented using  FMAs

over

FMAs

over  , whereas its emulation

via

, whereas its emulation

via  and

and  would require

would require

instructions. One may argue that the better code

should be recoverable using a suitable post-processing step as described

above. This is true for matrix multiplication, but turns out to become

more problematic for other domains, such as modular integers that are

implemented using floating point FMAs. Once more, the inclusion of FMA

into our signature

instructions. One may argue that the better code

should be recoverable using a suitable post-processing step as described

above. This is true for matrix multiplication, but turns out to become

more problematic for other domains, such as modular integers that are

implemented using floating point FMAs. Once more, the inclusion of FMA

into our signature  tends to facilitate the

generation of high quality machine code.

tends to facilitate the

generation of high quality machine code.

Let us finish with an example of some operations that we do not

wish to include in  .

Instruction sets of CPUs allow us to work with integers and floating

point numbers of various precisions. How can we do computations with

mixed types in our framework? Instead of mimicking the CPUs instruction

set within

.

Instruction sets of CPUs allow us to work with integers and floating

point numbers of various precisions. How can we do computations with

mixed types in our framework? Instead of mimicking the CPUs instruction

set within  , the idea is

rather to provide a general mechanism to dynamically enrich

, the idea is

rather to provide a general mechanism to dynamically enrich  . Here we may exploit the fact that our

framework made very few assumptions on

. Here we may exploit the fact that our

framework made very few assumptions on  and

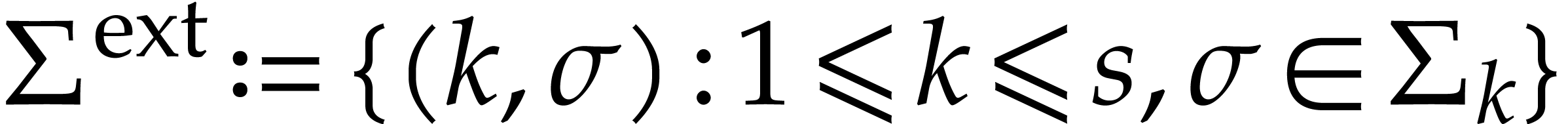

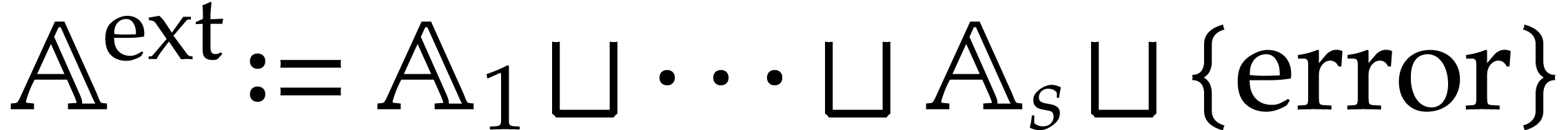

and  . Given domains

. Given domains  of signatures

of signatures  , the idea is

to dynamically create a signature

, the idea is

to dynamically create a signature  and a domain

and a domain

such that

such that  operates on

operates on

via

via  if

if  and

and  otherwise. We regard

otherwise. We regard  and

and  as being disjoint, which allows us to

dynamically add

as being disjoint, which allows us to

dynamically add  to

to  .

.

In this way, it is easy to emulate computations with mixed types and signatures in our abstract SLP framework (and thereby support multi-sorted structures as in Remark 2.2). This kind of emulation comes with some overhead though. But we consider this to be a small price to pay for the simplicity that we gain by avoiding the management of multiple types and signatures in the framework itself.

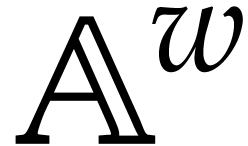

The SLP framework is more general than it may seem at first sight due to

the fact that we are completely free to chose the signature  and the underlying domain

and the underlying domain  .

In principle, this allows for all data types found in computer algebra

systems. Nonetheless, for our library it is wise to include privileged

support for a more restricted set of domains, similarly to what we did

with

.

In principle, this allows for all data types found in computer algebra

systems. Nonetheless, for our library it is wise to include privileged

support for a more restricted set of domains, similarly to what we did

with  in the case of signatures.

in the case of signatures.

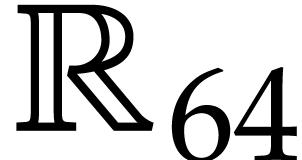

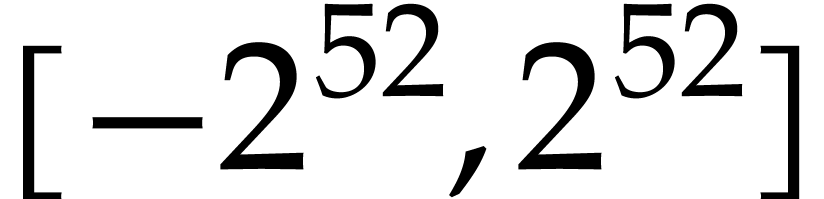

First of all, we clearly wish to be able to have domains for all the

machines types that can be found in hardware. In traditional CPUs, these

types were limited to floating point numbers and (signed and unsigned)

integers of various bit-sizes. In modern CPUs and GPUs, one should add

SIMD vector versions of these types for various widths and possibly a

few more special types like matrices and polynomials over  of several sizes and degrees.

of several sizes and degrees.

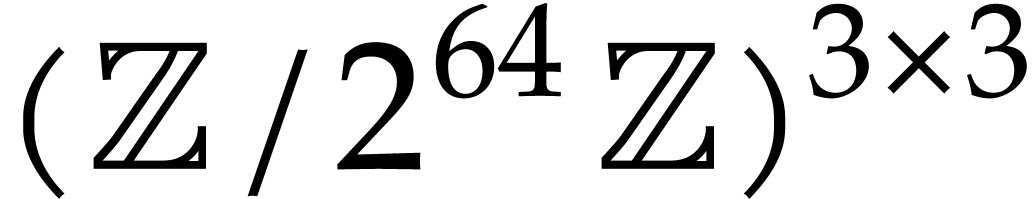

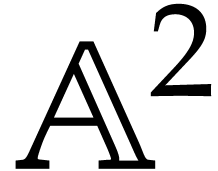

On top of the machine types, we next require support for basic

arithmetical domains such as vectors, matrices, polynomials, jets,

modular integers and polynomials, extended precision numbers, intervals,

balls, etc. Such functionality is commonplace in computer algebra

systems or specialized HPC libraries such as  of

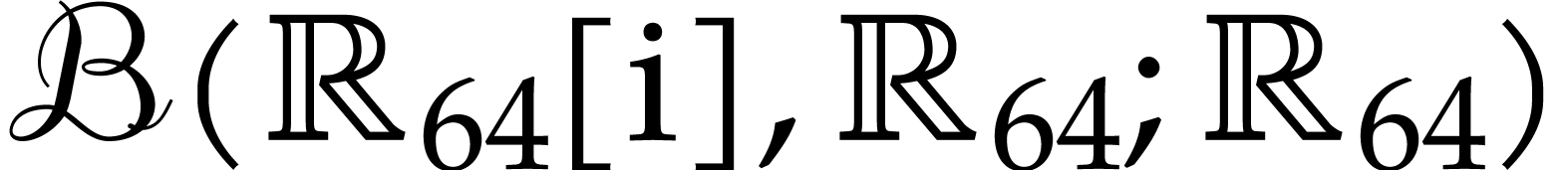

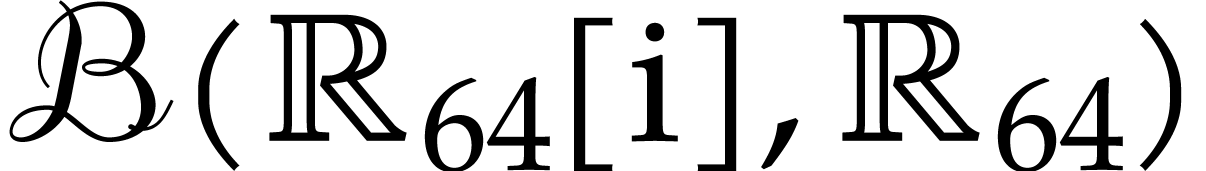

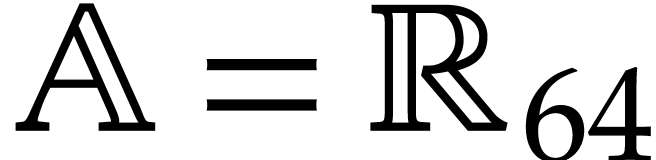

of  matrices with 64 bit integer coefficients in a typical

example of an SLP algebra. One advantage is that instances can always be

represented as dense vectors of a fixed size.

matrices with 64 bit integer coefficients in a typical

example of an SLP algebra. One advantage is that instances can always be

represented as dense vectors of a fixed size.

In the  of our domain must be specified. In our implementation, we use

of our domain must be specified. In our implementation, we use  as the signature, while agreeing that operations in

as the signature, while agreeing that operations in  that are not implemented for a given domain simply

raise an error. We also provide default implementations for operations

like FMA that can be emulated using other operations. In order to

achieve full extensibility, it suffices to add a generic operation to

our signature for all operations in

that are not implemented for a given domain simply

raise an error. We also provide default implementations for operations

like FMA that can be emulated using other operations. In order to

achieve full extensibility, it suffices to add a generic operation to

our signature for all operations in  ;

the arguments of this generic operation are the concrete operation in

;

the arguments of this generic operation are the concrete operation in

and the (variable number of) arguments of this

operation.

and the (variable number of) arguments of this

operation.

In our lower level C++ implementation in  gives rise to a

virtual method for this type which can carry out the operation for the

concrete domain at hand. An element of a domain is represented as one

consecutive block of data inside memory. The size of this block is fixed

and can be retrieved using another virtual method of the domain

type. This mechanism is essentially the same as the one from the

gives rise to a

virtual method for this type which can carry out the operation for the

concrete domain at hand. An element of a domain is represented as one

consecutive block of data inside memory. The size of this block is fixed

and can be retrieved using another virtual method of the domain

type. This mechanism is essentially the same as the one from the

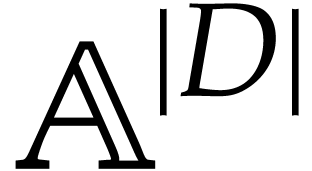

Given an abstract SLP  , it is

natural to represent

, it is

natural to represent  ,

,  , and

, and  as

actual vectors in contiguous memory. In the cases of

as

actual vectors in contiguous memory. In the cases of  and

and  , these vectors have

machine integer coefficients. If elements of

, these vectors have

machine integer coefficients. If elements of  can

themselves be represented by vectors of a fixed size, then one may use

the additional optimization to concatenate all entries of

can

themselves be represented by vectors of a fixed size, then one may use

the additional optimization to concatenate all entries of  into a single vector, which we do in the

into a single vector, which we do in the

For the representation of programs  ,

we have several options. In the

,

we have several options. In the  , so that

, so that  is simply

represented as a vector of instructions. In

is simply

represented as a vector of instructions. In  and the arguments of

instructions are represented by machine integers. Consequently,

instructions in

and the arguments of

instructions are represented by machine integers. Consequently,

instructions in  can be represented by machine

integer vectors. This again makes it possible to concatenate all

instructions into a single machine integer vector, which can be thought

of as “byte code”.

can be represented by machine

integer vectors. This again makes it possible to concatenate all

instructions into a single machine integer vector, which can be thought

of as “byte code”.

The systematic concatenation of vector entries of vectors in  is required in order to build a table

with the offsets of the individual instructions. Alternatively, we could

have imposed a maximal arity for the operations in

is required in order to build a table

with the offsets of the individual instructions. Alternatively, we could

have imposed a maximal arity for the operations in  , which would have allowed us to represent all

instructions by vectors of the same length. This alternative

representation is less compact but has the advantage that individual

instructions can be addressed more easily. It is also not so clear what

would be a good maximal arity.

, which would have allowed us to represent all

instructions by vectors of the same length. This alternative

representation is less compact but has the advantage that individual

instructions can be addressed more easily. It is also not so clear what

would be a good maximal arity.

The  and

and  should really be considered as output

streams rather than vectors. Moreover, it is almost always possible to

give a simple and often tight a priori bound for the lengths of

should really be considered as output

streams rather than vectors. Moreover, it is almost always possible to

give a simple and often tight a priori bound for the lengths of

and

and  at the end of this

streaming process. This makes it possible to allocate these vectors once

and for all and directly stream the entries without being preoccupied by

range checks or reallocations of larger memory blocks.

at the end of this

streaming process. This makes it possible to allocate these vectors once

and for all and directly stream the entries without being preoccupied by

range checks or reallocations of larger memory blocks.

We have considered but discarded a few alternative design options. All

operations in  have a single output value in our

implementation. Since SLPs are allowed to have multiple output values,

it might be interesting to allow the same thing for operations in

have a single output value in our

implementation. Since SLPs are allowed to have multiple output values,

it might be interesting to allow the same thing for operations in  . Some instructions in CPUs are

indeed of this kind, like long multiplication (x86) or loading several

registers from memory (ARM). However, this elegant feature requires an

additional implementation effort for almost all operations on SLPs. This

also incurs a performance penalty, even for the vast majority of SLPs

that do not need this feature.

. Some instructions in CPUs are

indeed of this kind, like long multiplication (x86) or loading several

registers from memory (ARM). However, this elegant feature requires an

additional implementation effort for almost all operations on SLPs. This

also incurs a performance penalty, even for the vast majority of SLPs

that do not need this feature.

Note finally that it is still possible to emulate instructions with multiple outputs by considering single vector outputs and then introducing extra instructions for extracting entries of these vectors. Unfortunately however, this is really inelegant, because it forces us to use more convoluted non-fixed-sized vector domains. It is also non-trivial to recombine these multiple instructions into single machines instruction when building the final machine code.

Similar considerations apply for in-place semantics like in the C

instruction  and

and  are not

required to be disjoint. But it is also easy to emulate an instruction

like

are not

required to be disjoint. But it is also easy to emulate an instruction

like

Yet another interesting type of alternative design philosophy would be to systematically rule out redundant computations by carrying out common subexpression elimination during the very construction of SLPs. However, for cache efficiency, it is sometimes more efficient to recompute a value rather than retrieving it from memory. Our representation provides greater control over what is stored where and when. The systematic use of common subexpression elimination through hash tables also has a price in performance.

Recall that two SLPs are equivalent if they compute the same mathematical function. Among two equivalent SLPs, the shorter one is typically most efficient. But the length of an SLP is not the only factor for its practical performance. It is also important to keep an eye on dependency chains, data locality, and cache performance. Besides performance, “readability” of an SLP may also be an issue: we often change the numbering of data fields in a way that makes the SLP more readable.

We catch all these considerations under the concept of quality. This concept is deliberately vague, because the practical benefits of SLPs of “higher quality” depend greatly on our specific hardware. Operations on SLPs should be designed carefully in order to avoid needless quality deteriorations. This can often be achieved by touching the input SLPs “as least as possible” and only in “straightforward ways”.

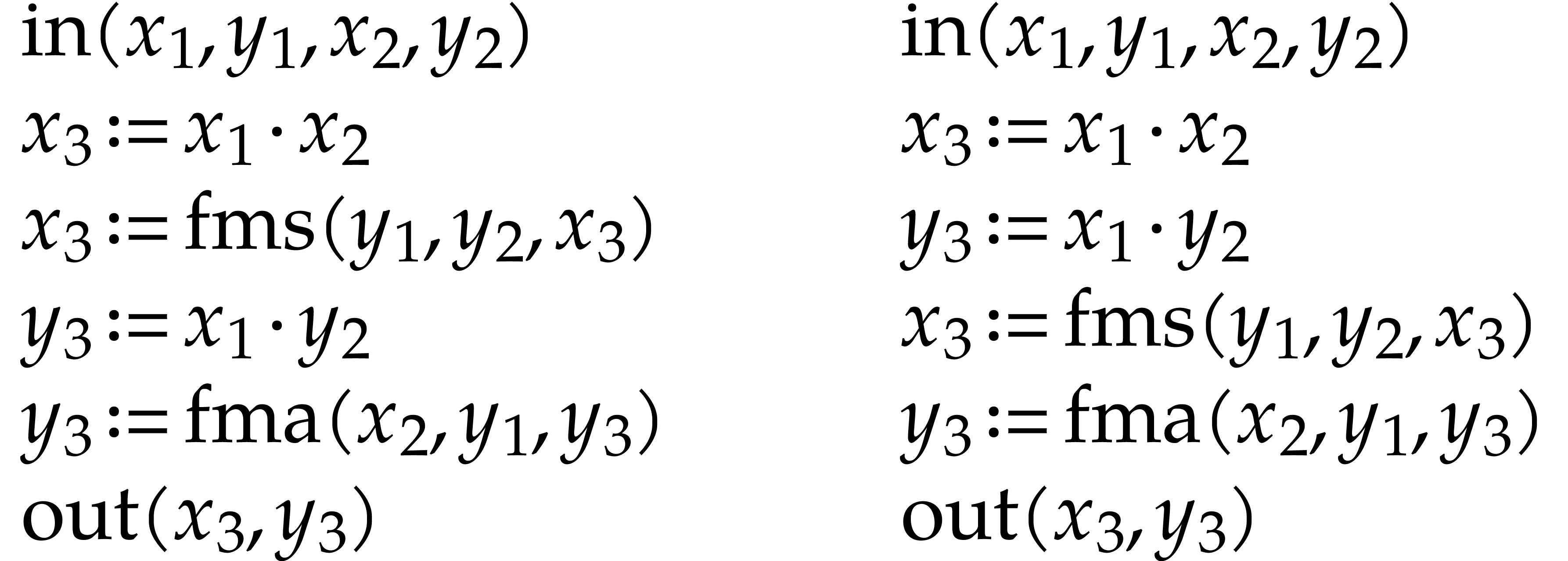

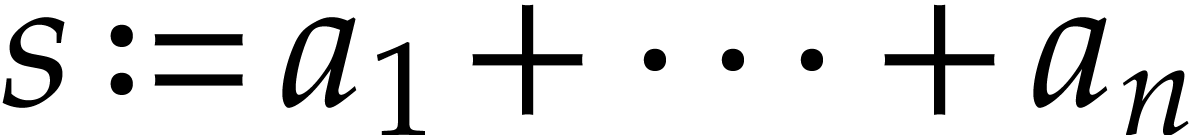

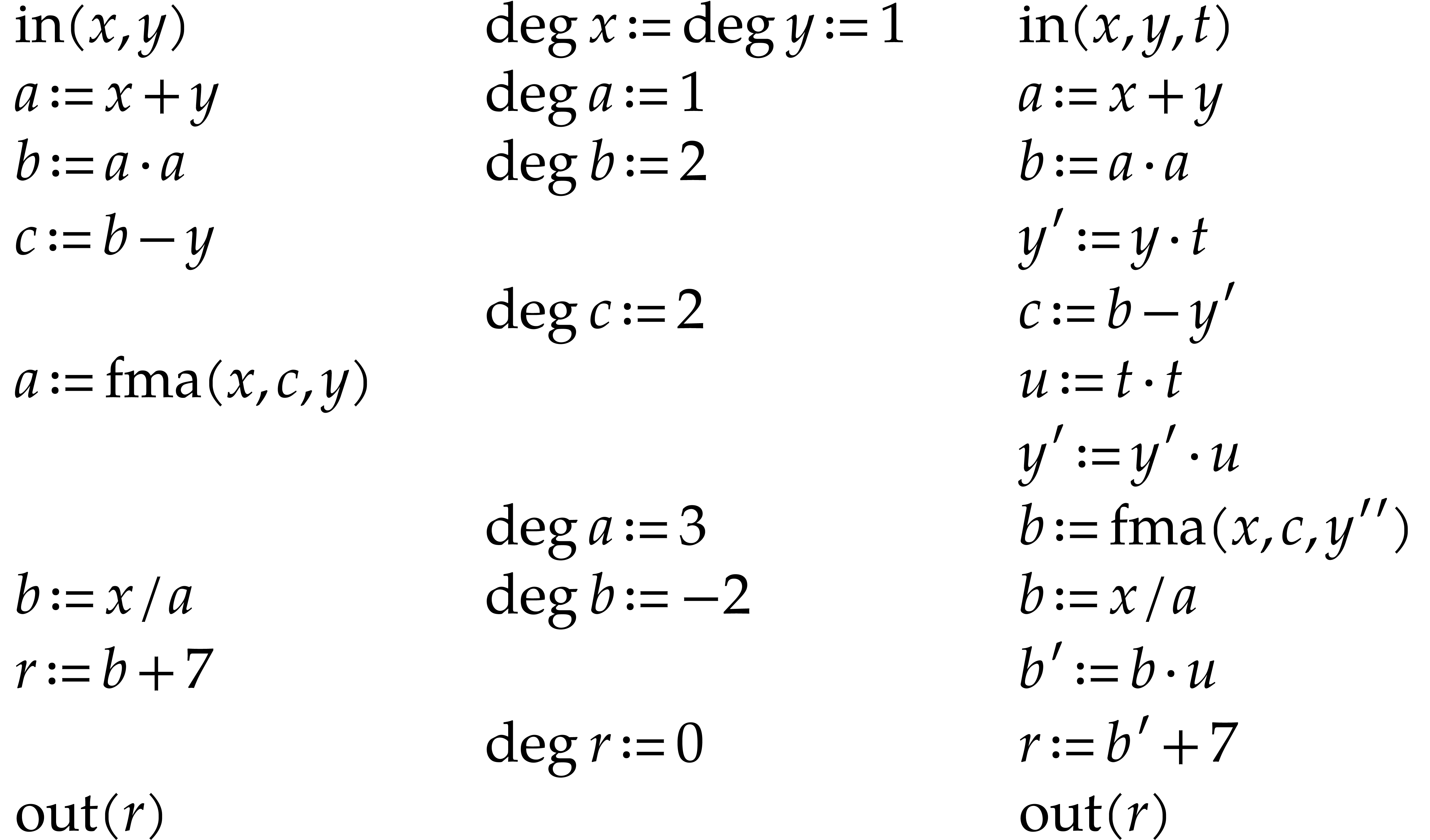

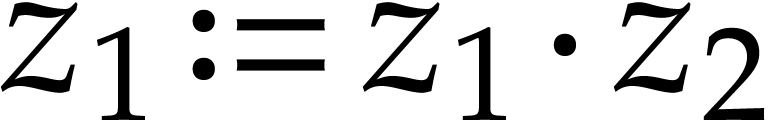

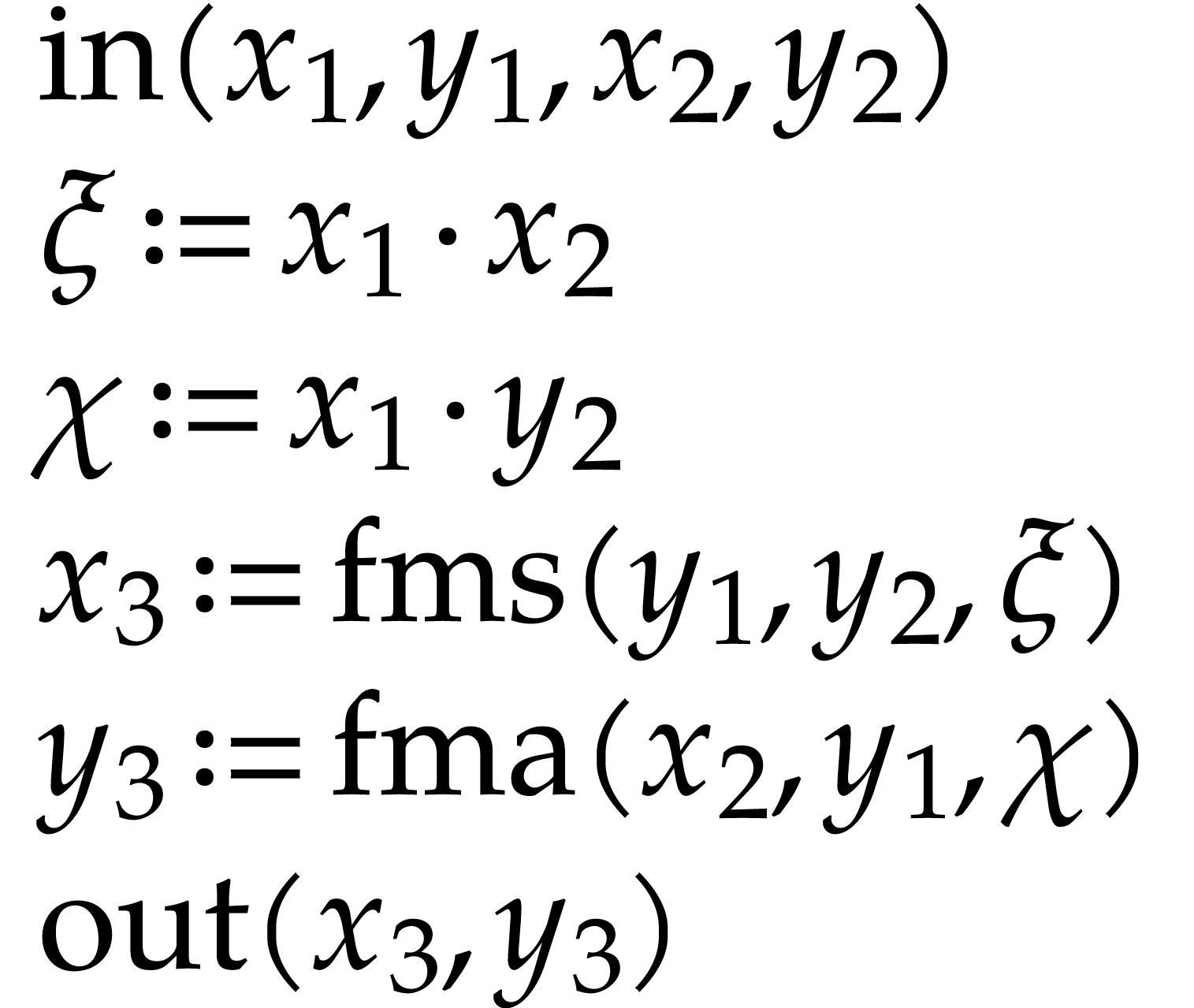

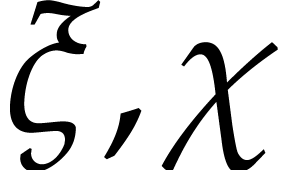

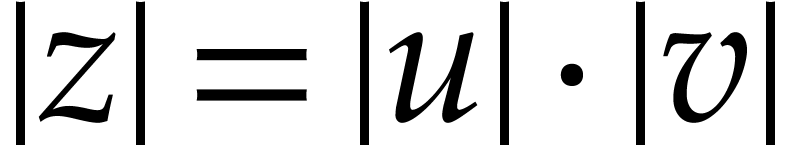

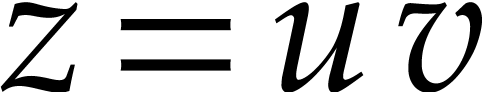

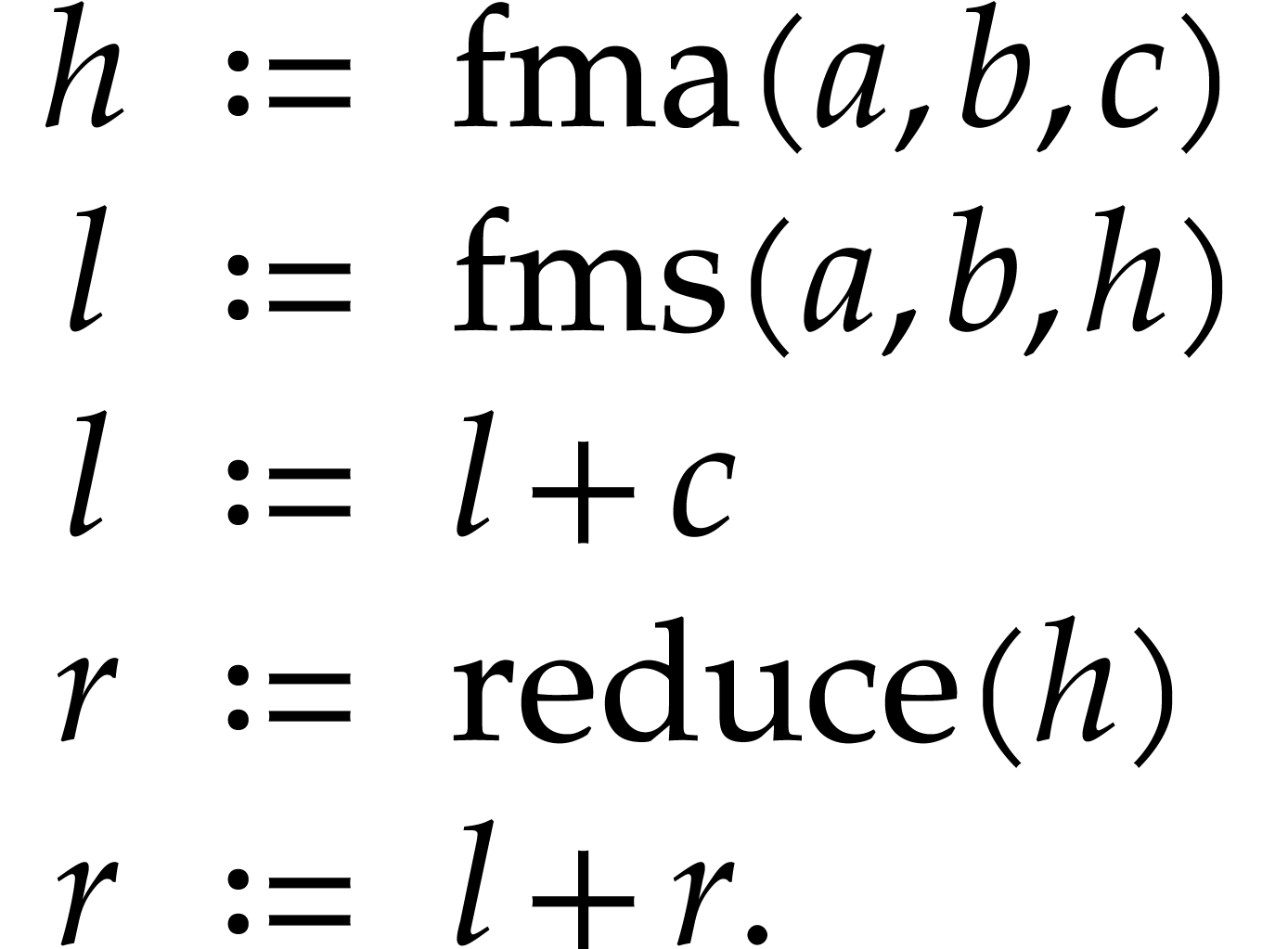

Let us briefly review how dependency chains and data layout may affect the performance. Consider the following two SLPs for complex multiplication:

|

(2.3) |

Here  stands for “fused multiply

subtract” and

stands for “fused multiply

subtract” and  for “fused multiply

add”. Modern hardware tends to contain multiple execution

units for additions, multiplications, fused multiplications, etc.,

which allow for out of order execution [75, 6, 34]. In the right SLP, this means that the

instruction

for “fused multiply

add”. Modern hardware tends to contain multiple execution

units for additions, multiplications, fused multiplications, etc.,

which allow for out of order execution [75, 6, 34]. In the right SLP, this means that the

instruction  can be dispatched to a

second multiplication unit while

can be dispatched to a

second multiplication unit while  is still being

executed by a first unit. However, in the left SLP, the instruction

is still being

executed by a first unit. However, in the left SLP, the instruction  has to wait the for the outcome of

has to wait the for the outcome of  . Whereas the fused multiplications in both

SLPs depend on the result of one earlier multiplication, these

dependencies are better spread out in time in the right SLP, which

typically leads to better performance.

. Whereas the fused multiplications in both

SLPs depend on the result of one earlier multiplication, these

dependencies are better spread out in time in the right SLP, which

typically leads to better performance.

Let us now turn to the numbering of our data fields in  . When generating the final machine code for

our SLP, we typically cut

. When generating the final machine code for

our SLP, we typically cut  into a small number of

contiguous blocks, which are then mapped into contiguous chunks in

memory. For instance, we might put the input, output, auxiliary, and

constant fields in successive blocks of

into a small number of

contiguous blocks, which are then mapped into contiguous chunks in

memory. For instance, we might put the input, output, auxiliary, and

constant fields in successive blocks of  and map

them to separate chunks in memory.

and map

them to separate chunks in memory.

Now memory accesses are most efficient when done per cache line. For

instance, if cache lines are 1024 bits long and if we are working over

64 bit double precision numbers, then fetching  from memory will also fetch

from memory will also fetch  .

The cache performance of an SLP will therefore be best when large

contiguous portions of the program

.

The cache performance of an SLP will therefore be best when large

contiguous portions of the program  access only a

proportionally small number of data fields

access only a

proportionally small number of data fields  ,

preferably by chunks of 16 contiguous fields. The theoretical notion of

cache oblivious algorithms [39] partially captures

these ideas. Divide and conquer algorithms tend to be cache oblivious

and thereby more efficient. Computing the product of two

,

preferably by chunks of 16 contiguous fields. The theoretical notion of

cache oblivious algorithms [39] partially captures

these ideas. Divide and conquer algorithms tend to be cache oblivious

and thereby more efficient. Computing the product of two  matrices using a naive triple loop is not cache oblivious

(for large

matrices using a naive triple loop is not cache oblivious

(for large  ) and can

therefore be very slow. In the terminology of this subsection, high

quality SLP should in particular be cache oblivious.

) and can

therefore be very slow. In the terminology of this subsection, high

quality SLP should in particular be cache oblivious.

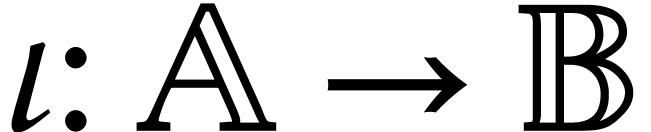

Where does the “initial SLP”  come

from in the workflow (1.2)? It is not very convenient to

oblige users to specify

come

from in the workflow (1.2)? It is not very convenient to

oblige users to specify  using pseudo-code like

(1.1), (2.1), or (2.2). Now we

often already have some existing implementation of

using pseudo-code like

(1.1), (2.1), or (2.2). Now we

often already have some existing implementation of  , albeit not in our SLP framework. In this

section we explain how to derive an SLP for

, albeit not in our SLP framework. In this

section we explain how to derive an SLP for  from

an existing implementation, using a mechanism that records the trace of

a generic execution of

from

an existing implementation, using a mechanism that records the trace of

a generic execution of  .

.

Modulo the creation of appropriate wrappers for recording, this

mechanism applies for any existing code in any language, as long as the

code is either generic or templated by a domain  that implements at most the signature

that implements at most the signature  .

By “at most”, we mean that the only allowed operations on

elements from

.

By “at most”, we mean that the only allowed operations on

elements from  are the ones from

are the ones from  .

.

Recording can be thought of as a more powerful version of inlining,

albeit restricted to the SLP setting: instead of using complex

compilation techniques for transforming the existing code, it suffices

to implement a cheap wrapper that records the trace of an execution.

Hence the names

Let us describe how to implement the recording mechanism for existing C

or C++ code over a domain  .

For this we introduce a type recorder, which could

either be a template with

.

For this we introduce a type recorder, which could

either be a template with  as a parameter, or a

generic but slower recorder type that works over all domains. In order

to record a function, say

as a parameter, or a

generic but slower recorder type that works over all domains. In order

to record a function, say  ,

we proceed as follows:

,

we proceed as follows:

|

In this code we use global variables for the state of the recorder. This

state mainly consists of the current quadruple  , where

, where  are thought of as

output streams (rather than vectors).

are thought of as

output streams (rather than vectors).

When starting the recorder, we reset  to being

empty. Instances of the recorder type represent indices

of data fields in

to being

empty. Instances of the recorder type represent indices

of data fields in  .

.

The instruction  , adds

the corresponding index (

, adds

the corresponding index ( in this case) to

in this case) to  , and next stores it in

, and next stores it in  ,

adds the corresponding index

,

adds the corresponding index  to

to  , and then stores it in

, and then stores it in

We next execute the actual function  on our input

data. For this, the data type recorder should implement

a default constructor, a constructor from

on our input

data. For this, the data type recorder should implement

a default constructor, a constructor from  ,

and the operations from

,

and the operations from  (as well as copy and

assignment operators to comply with C++). The default constructor adds a

new auxiliary data field to

(as well as copy and

assignment operators to comply with C++). The default constructor adds a

new auxiliary data field to  ,

whereas the constructor from

,

whereas the constructor from  adds a new constant

field. An operation like multiplication of

adds a new constant

field. An operation like multiplication of  and

and

creates a new auxiliary destination field

creates a new auxiliary destination field  in

in  and streams the

instruction

and streams the

instruction  into

into  .

.

At the end, the instruction  and

and  .

.

Remark

Remark  , the above recording

algorithm will put them in separate data fields. Another useful

optimization is to maintain a table of constants (as part of the global

state) and directly reuse constants that where allocated before.

, the above recording

algorithm will put them in separate data fields. Another useful

optimization is to maintain a table of constants (as part of the global

state) and directly reuse constants that where allocated before.

matrix

multiplication

matrix

multiplication

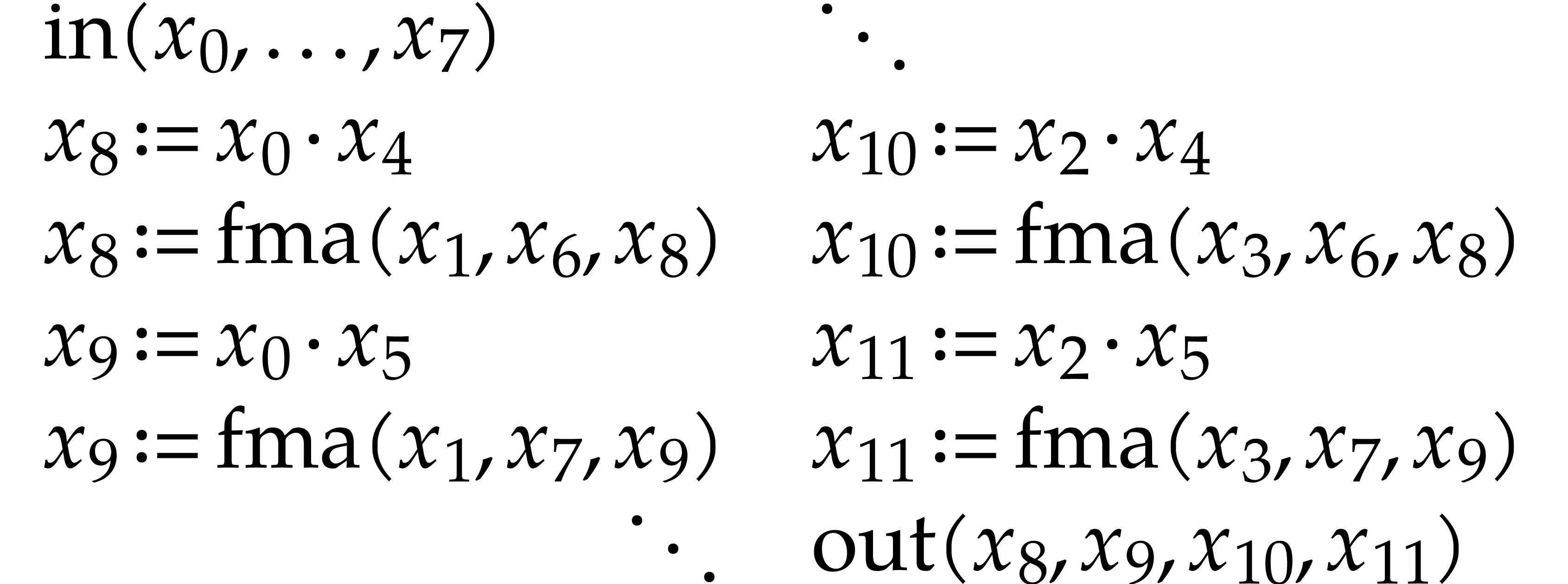

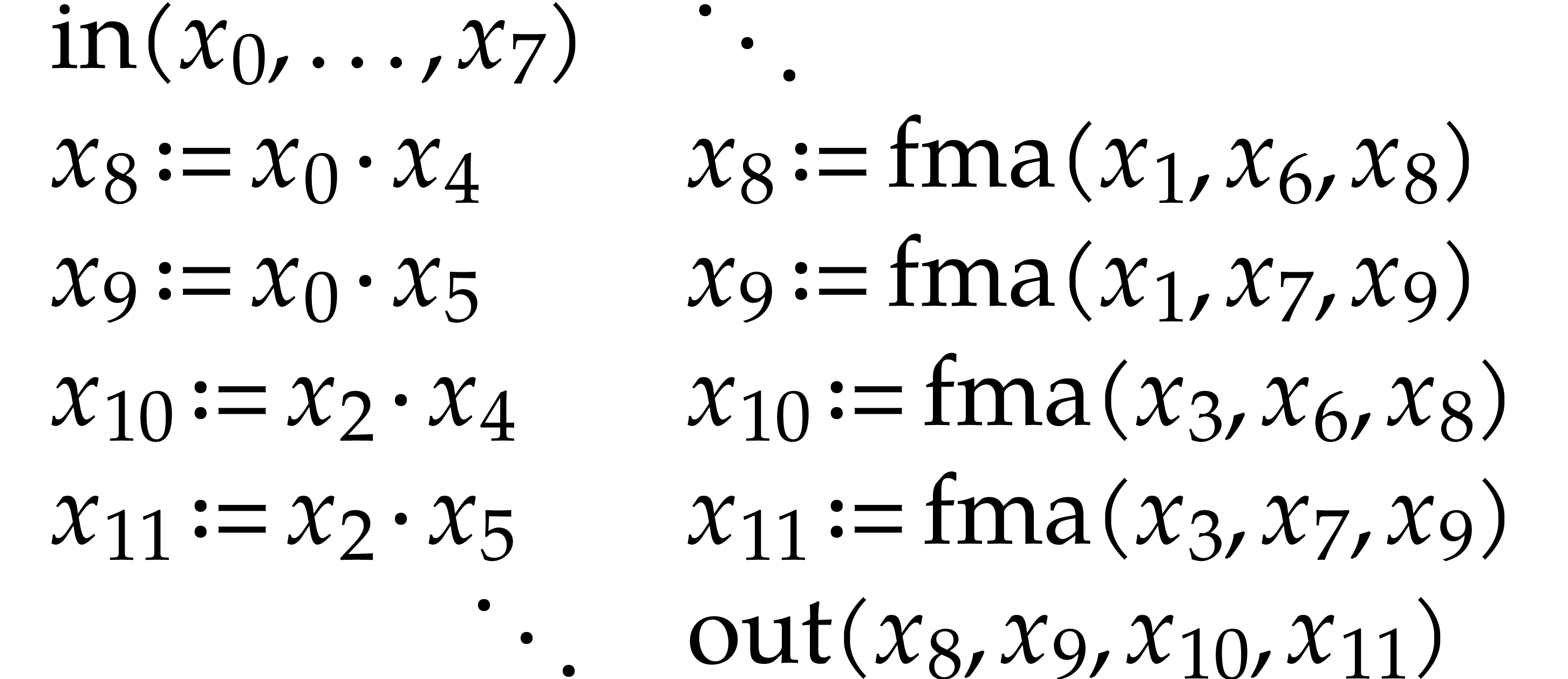

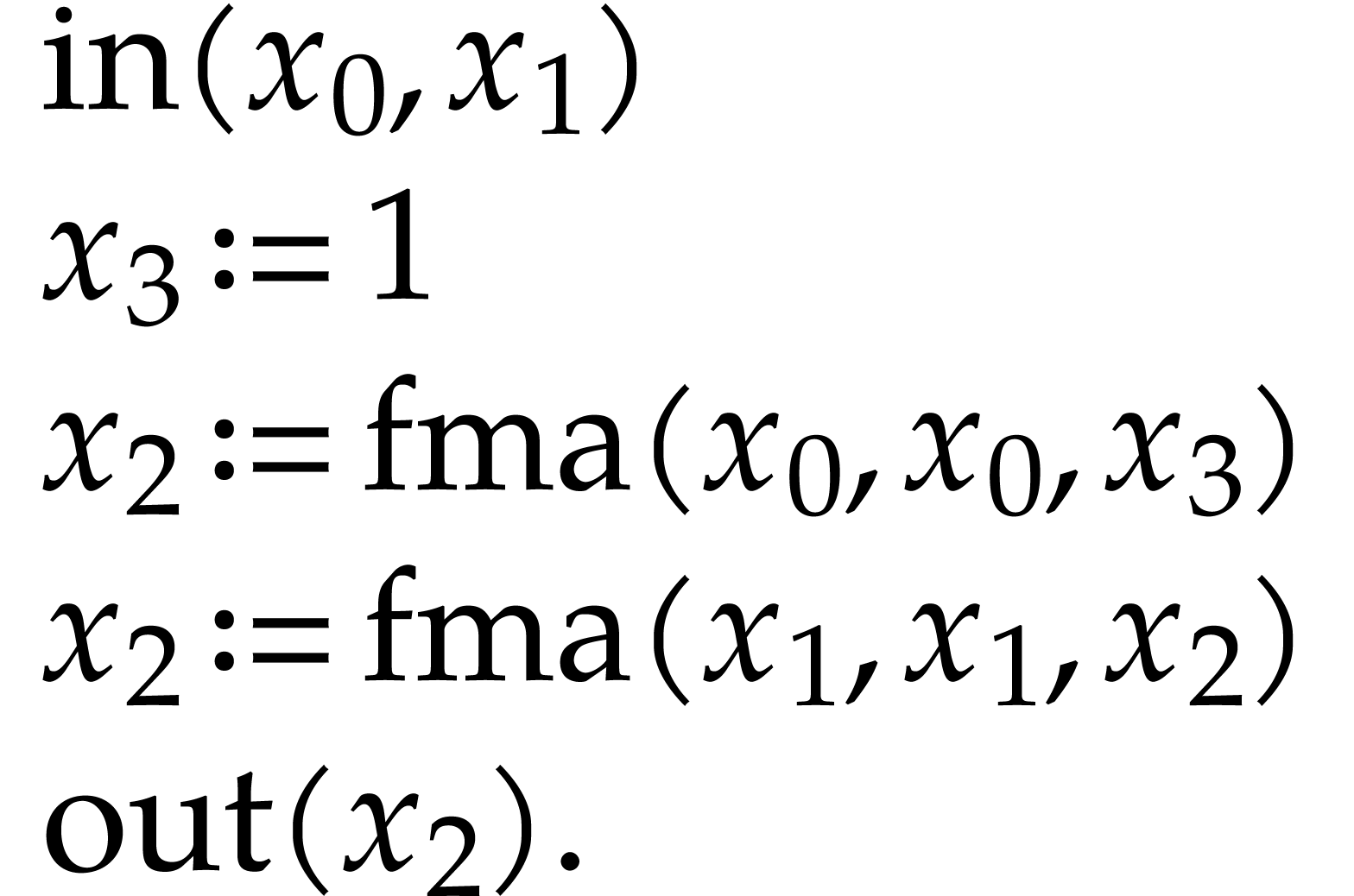

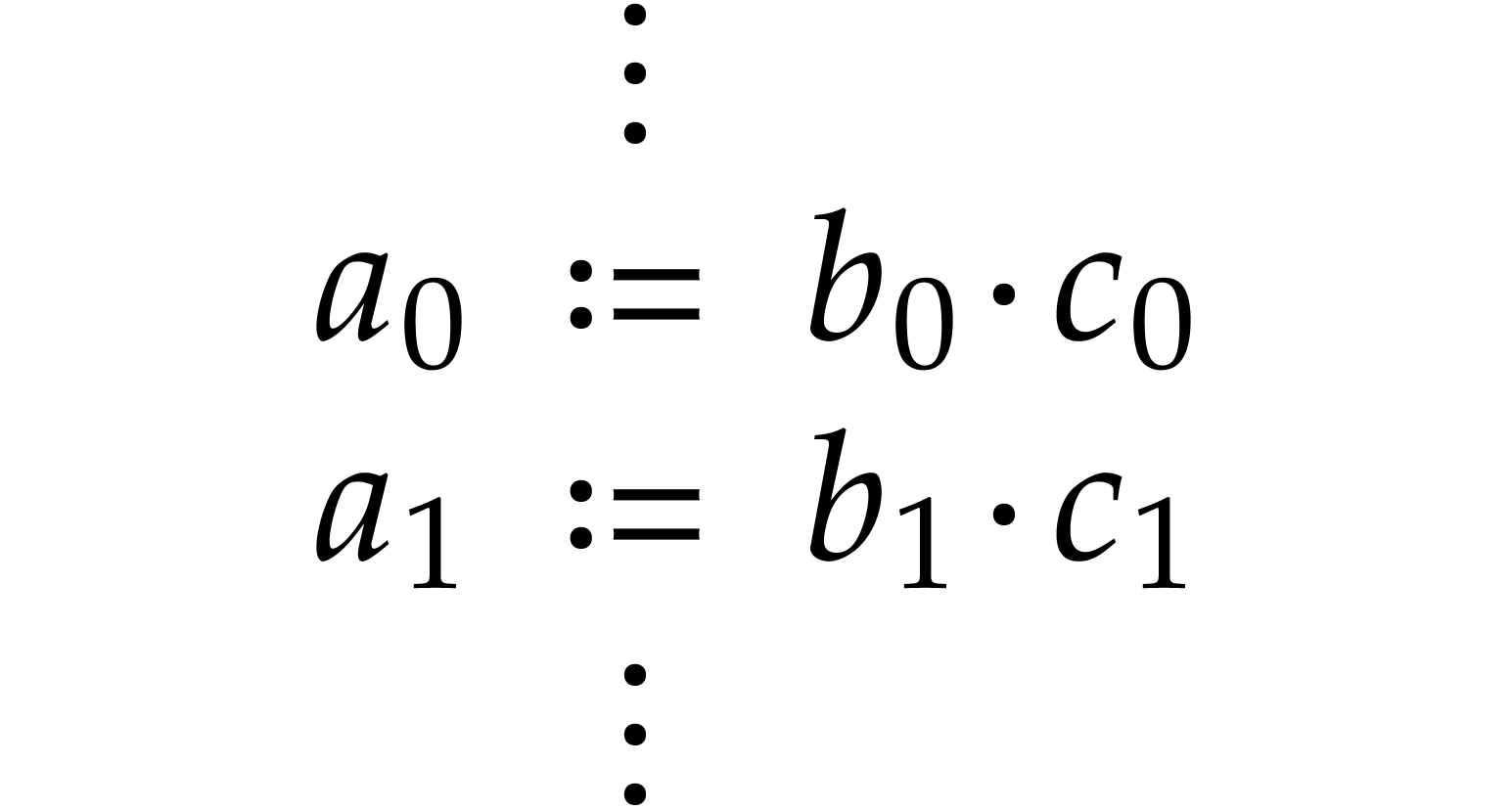

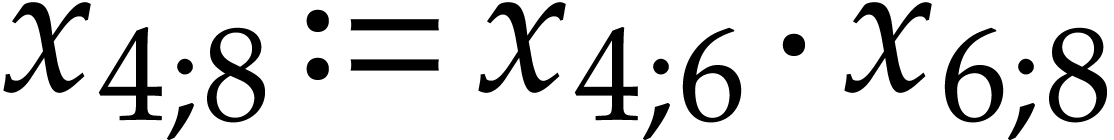

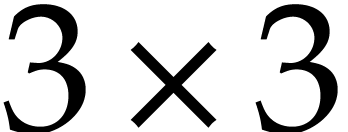

Let us investigate in detail what kind of SLPs are obtained through

recording on the example of  matrix

multiplication. Assume that matrices are implemented using a C++

template type matrix<C> with the expected

constructors and accessors. Assume that matrix multiplication is

implemented in the following way:

matrix

multiplication. Assume that matrices are implemented using a C++

template type matrix<C> with the expected

constructors and accessors. Assume that matrix multiplication is

implemented in the following way:

|

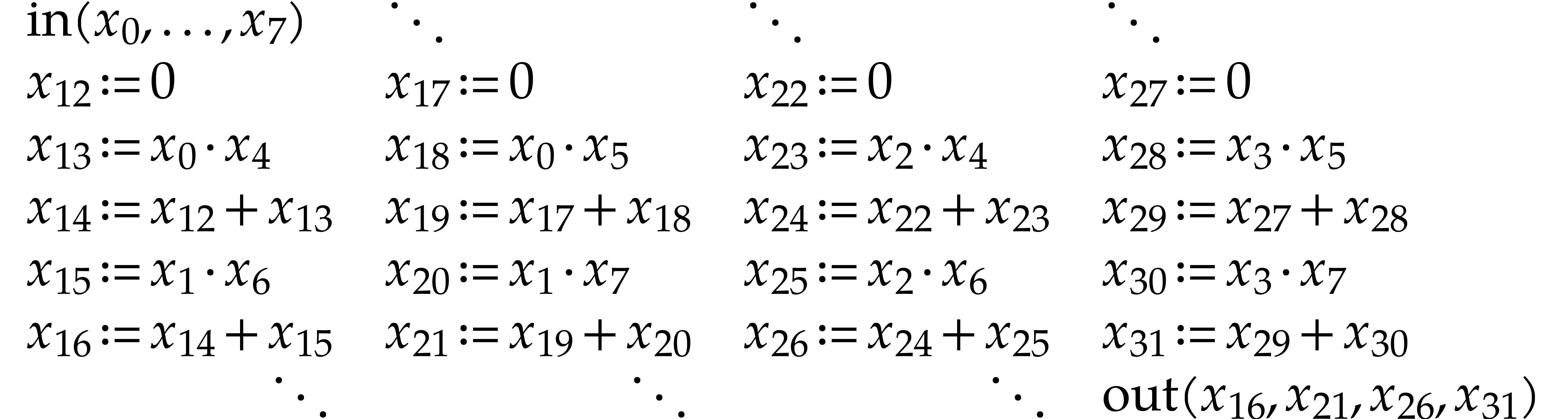

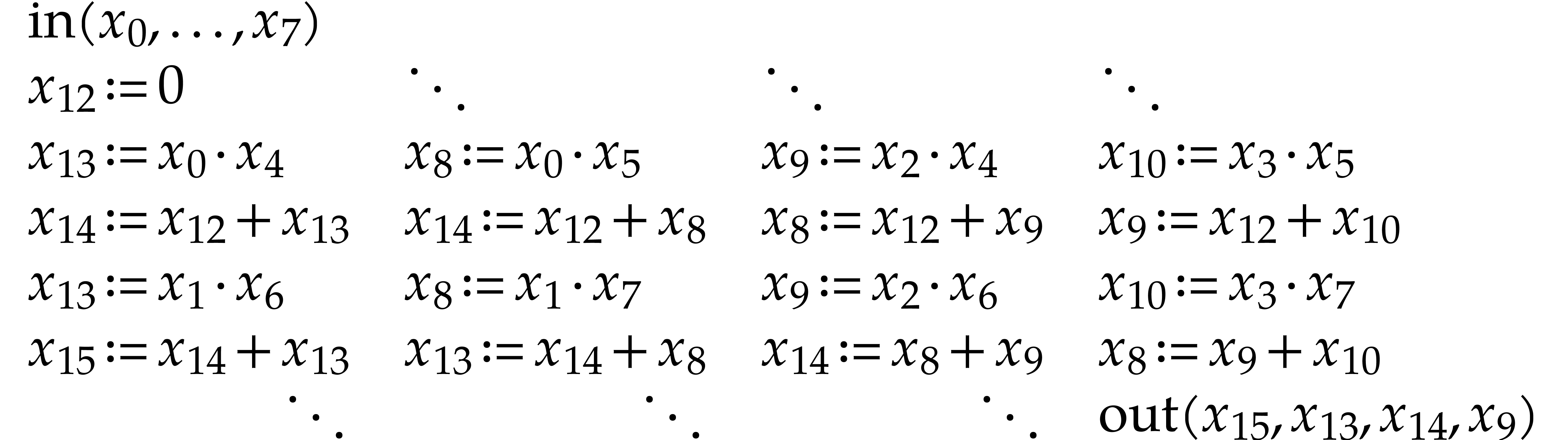

When applying this code for two generic  input

matrices in a similar way as in the previous subsection, without the

optimizations from Remarks 3.1 and 3.2, we

obtain the following SLP:

input

matrices in a similar way as in the previous subsection, without the

optimizations from Remarks 3.1 and 3.2, we

obtain the following SLP:

|

(3.1) |

(The order of this code is column by column from left to right.) In

order to understand the curious numbering here, one should remind how

constructors work: the declaration of  but never used. The constructor

of sum next creates the constant

but never used. The constructor

of sum next creates the constant  , which is put in

, which is put in  .

We then record the successive instructions, the results of which are all

put in separate data fields. Note that

.

We then record the successive instructions, the results of which are all

put in separate data fields. Note that  are not

really instructions, but rather a convenient way to indicate the

constant fields

are not

really instructions, but rather a convenient way to indicate the

constant fields  all contain zero.

all contain zero.

When applying the optimizations from Remarks 3.1 and 3.2, the generated code becomes as follows:

|

(3.2) |

The numbering may look even odder (although we managed to decrease the

length of  ). This is due to

the particular order in which auxiliary data fields are freed and

reclaimed via the stack.

). This is due to

the particular order in which auxiliary data fields are freed and

reclaimed via the stack.

The example from the previous subsection shows that the recording mechanism does not generate particularly clean SLPs. This is not per se a problem, because right after recording a function we typically simplify the resulting SLP, as will be detailed in section 4.4 below. However, the simplifier may not be able to correct all problems that we introduced when doing the recording in a naive manner. Simplification also tends to be faster for shorter and cleaner SLPs. Simplification might not even be needed at all.

The advantage of the mechanism from the previous section is that it can be applied to an external template C++ library over which we have no control. One reason for the somewhat messy results (3.1) and (3.2) is that C++ incites us to create classes with nice high level mathematical interfaces. Behind the scene, this gives rise to unnecessary temporary variables and copying of data. We may avoid these problems by using a lower level in-place interface for C and matrix<C>. For instance:

|

When recording our SLP using this kind of code, we obtain a much cleaner result:

|

(3.3) |

For this reason, the

Remark

It is a pain because the above multiplication algorithm becomes incorrect in this situation. Fortunately there is the easy remedy to copy colliding arguments into auxiliary variables. Testing for collisions induces additional overhead when recording the code, but the generated SLP is not impacted if no collisions occur.

What is more, if collisions do occur, then we actually have the opportunity to generate an even better SLP. This is for instance interesting for discrete Fourier transforms (DFTs), which can either be done in-place or out-of-place. In our framework, we may implement a single routine which tests in which case we are and then uses the best code in each case.

The shifted perspective from efficiency of execution to quality of recorded code cannot be highlighted enough.

One consequence is that the overhead of control structures only concerns

the cost of recording the SLP, but not the cost of executing the SLP

itself. For example, in Remark 3.3, this holds for the cost

of checking whether there are collisions between input and output

arguments. But the same holds for the overhead of recursive function

calls. For instance, one may implement matrix multiplication

recursively, by reducing an  product into an

product into an  and an

and an  product with

product with  and

and  (or using a similar

decomposition for

(or using a similar

decomposition for  or

or  if

these are larger). The advantage of such a recursive strategy is that it

tends to have a better cache efficiency. Now traditional implementations

typically recurse down only until a certain threshold, like

if

these are larger). The advantage of such a recursive strategy is that it

tends to have a better cache efficiency. Now traditional implementations

typically recurse down only until a certain threshold, like  , because of the overhead of the recursive

calls. But this overhead disappears when the multiplication is recorded

as an SLP, so we may just as well recurse down to

, because of the overhead of the recursive

calls. But this overhead disappears when the multiplication is recorded

as an SLP, so we may just as well recurse down to  .

.

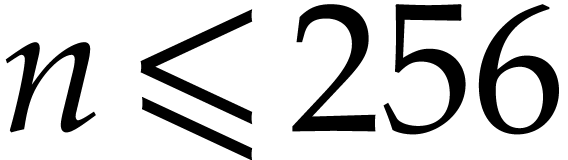

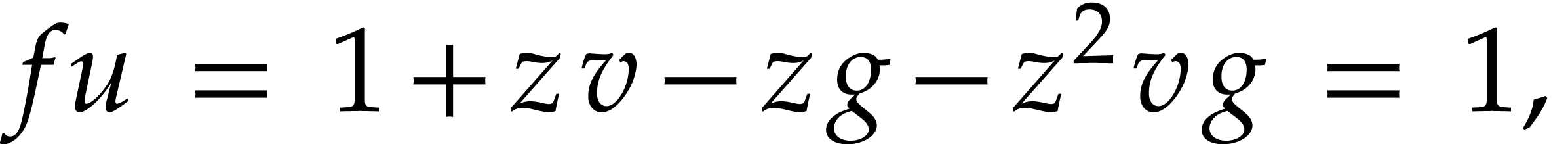

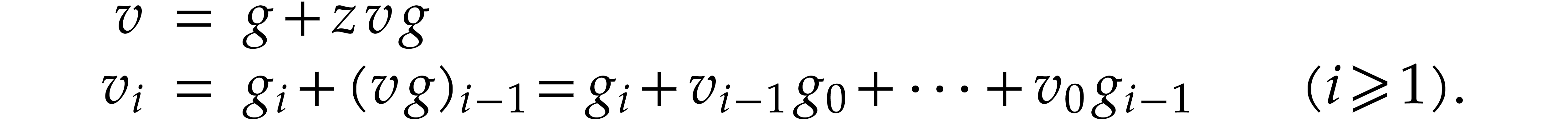

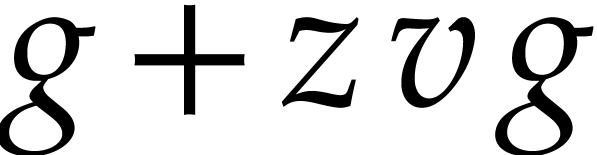

An even more extreme example is relaxed power series arithmetic.

Consider basic arithmetic operations on truncated power series such as

inversion, exponentiation, etc. If the series are truncated at a fixed

order  that is not too large (say

that is not too large (say  ), then lazy or relaxed algorithms are

particularly efficient (see [57] and section 6.3

below). However, they require highly non-conventional control

structures, which explains that they are not as widely used in computer

algebra systems as they might be. This disadvantage completely vanishes

when these operations are recorded as SLPs: there is still a little

overhead while recording an SLP, but not when executing it.

), then lazy or relaxed algorithms are

particularly efficient (see [57] and section 6.3

below). However, they require highly non-conventional control

structures, which explains that they are not as widely used in computer

algebra systems as they might be. This disadvantage completely vanishes

when these operations are recorded as SLPs: there is still a little

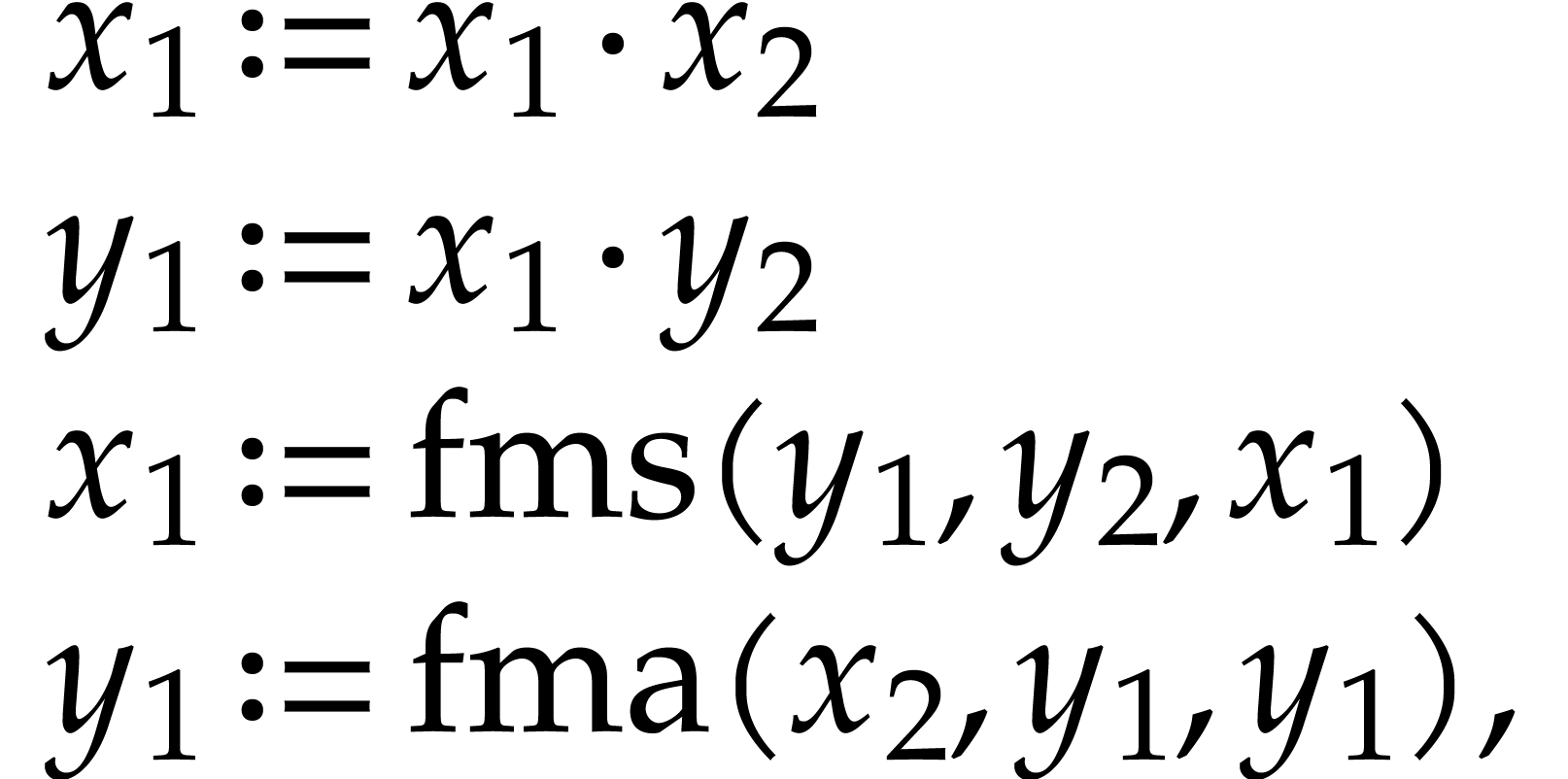

overhead while recording an SLP, but not when executing it.

The shifted perspective means that we should focus our attention on the

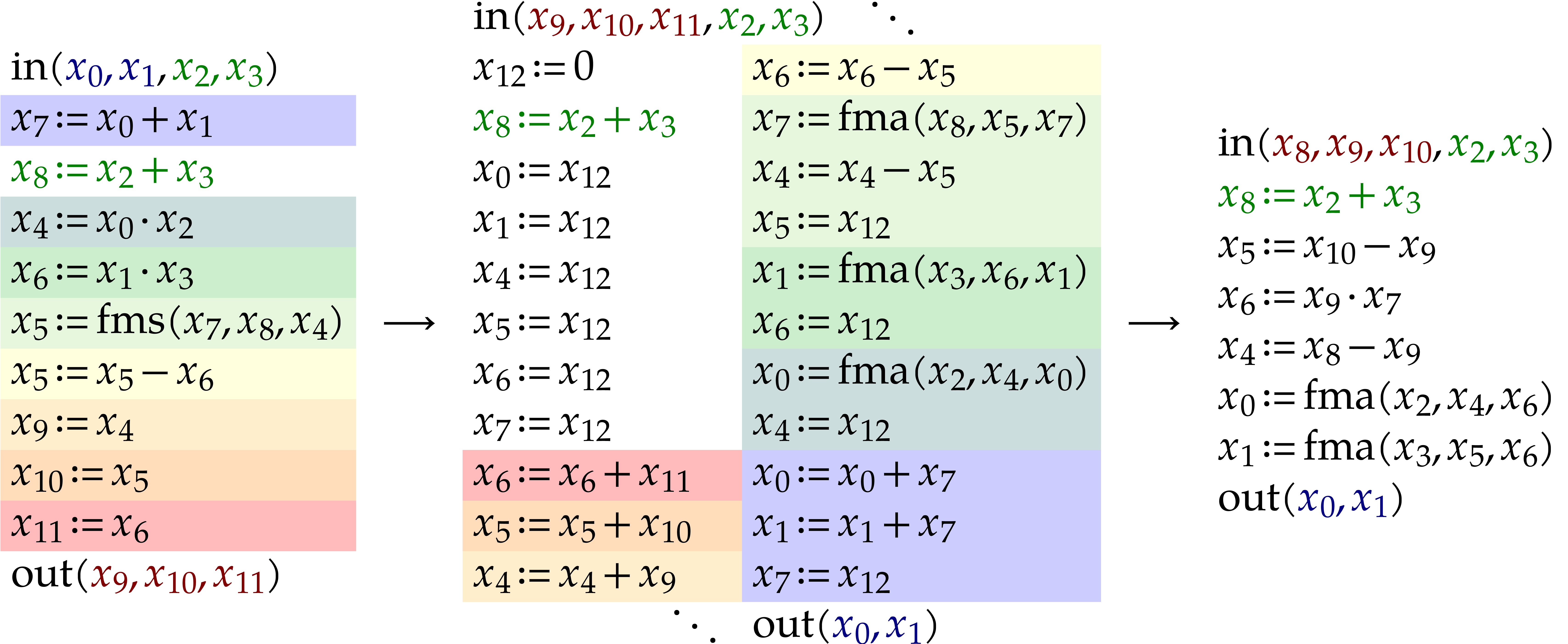

SLP that we wish to generate, not on the way that we generate it. For

instance, the SLP (3.3) is still not satisfactory, because

it contains several annoying dependency chains: the execution of the

instruction  cannot start before completing the

previous instruction

cannot start before completing the

previous instruction  and similarly for the other

FMAs. For CPUs with at least 12 registers, the following SLP would

behave better:

and similarly for the other

FMAs. For CPUs with at least 12 registers, the following SLP would

behave better:

|

(3.4) |

Again, the SLP (3.3) could have been rewritten into this

form automatically, using an appropriate optimization that detects

dependency chains and reschedules instructions. Another observation is

that the dependency chains were not present in (3.1), so

they were introduced by the optimizations from Remark 3.1;

this problem can be mitigated by replacing the first-in-first-out

“stack allocator” by one that disallows the last  freed registers to be immediately reused. But then again,

it is even better to design the routines that we wish to record in such

a way that none of these remedies are needed. For instance, when doing

freed registers to be immediately reused. But then again,

it is even better to design the routines that we wish to record in such

a way that none of these remedies are needed. For instance, when doing

matrix products in the above recursive fashion,

it suffices to recurse on

matrix products in the above recursive fashion,

it suffices to recurse on  or

or  when

when  and

and  .

.

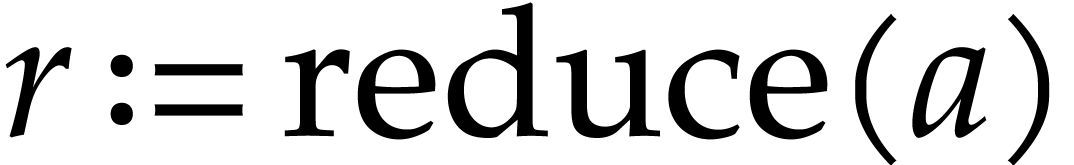

The

This section is dedicated to classical program transformations like common subexpression elimination, simplification, register allocation, etc. As explained in the introduction, this is part of the traditional theory of compilation, but the SLP framework gives rise to several twists. SLPs can become very large and should therefore be disposable as soon as they are no longer needed. Our resulting constraint is that all algorithms should run in linear time (or in expected linear time if hash tables are required).

We recall that another important general design goal is to preserve the “quality” of an SLP as much as possible during transformations. The example SLPs (3.1), (3.2), (3.3), and (3.4) from the previous subsection are all equivalent, but their quality greatly differs in terms of code length, data locality, cache efficiency, dependency chains, and general elegance. It is the responsibility of the user to provide initial SLPs of high quality in (1.2). But it is on the SLP library to not deteriorate this quality during transformations, or, at least, to not needlessly do so. An extreme case of this principle is when the input SLP already accomplishes the task that a transformation is meant to achieve. Ideally speaking, the transformation should then simply return the input SLP. One typical example is the simplification of an already simplified SLP.

For the sake of completeness, we first describe with some very basic and straightforward operations on SLPs that are essentially related to their representation. These operations are often used as building blocks inside more complex transformations.

We start with the extraction of useful information about a given SLP.

Concerning the variables in  ,

we may build boolean lookup tables of length

,

we may build boolean lookup tables of length  for

the following predicates:

for

the following predicates:

Is  an input variable?

an input variable?

Is  an output variables?

an output variables?

Is  a constant?

a constant?

Is  an auxiliary variable?

an auxiliary variable?

Is  modified (i.e. does it occur

as the destination of an instruction)?

modified (i.e. does it occur

as the destination of an instruction)?

Is  used (i.e. does it occur as

the argument of an instruction)?

used (i.e. does it occur as

the argument of an instruction)?

Does  occur in the SLP at all

(i.e. as an input/output or left/right hand side)?

occur in the SLP at all

(i.e. as an input/output or left/right hand side)?

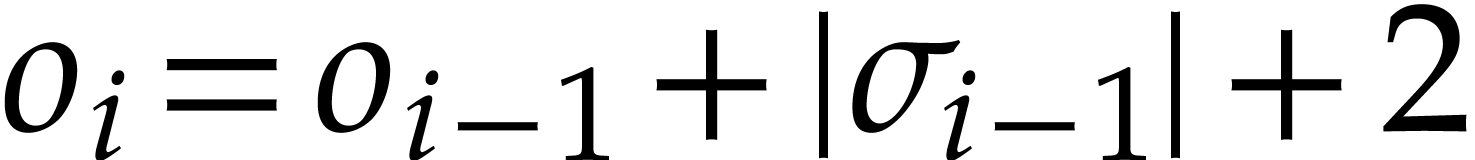

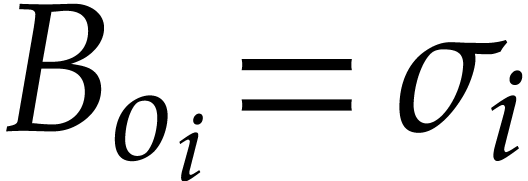

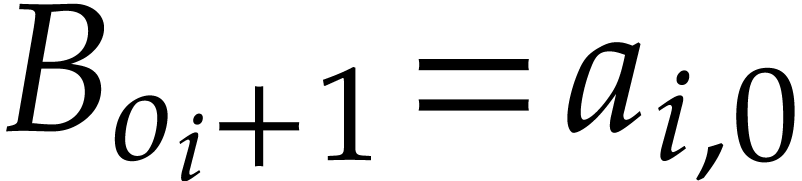

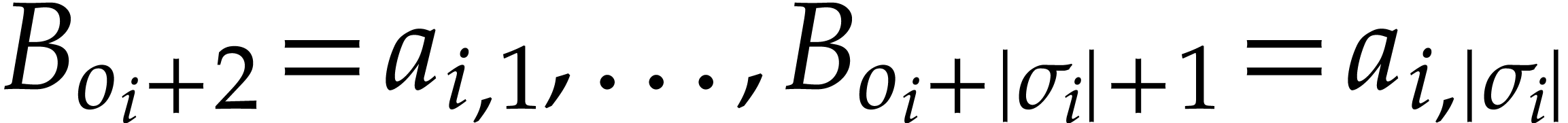

We may also build lookup tables to collect information about the program

itself. Let us focus on

itself. Let us focus on  for the internal low level representation of

for the internal low level representation of  . Every instruction

. Every instruction  thus

corresponds to a part of the byte code: for some offset

thus

corresponds to a part of the byte code: for some offset  (with

(with  and

and  otherwise), we

have

otherwise), we

have  ,

,  ,

,  . For

this representation, the following information is typically useful:

. For

this representation, the following information is typically useful:

An index table for the offsets  .

.

For every destination or source argument  , the next location in the byte code where it is

being used. Here we use the special values

, the next location in the byte code where it is

being used. Here we use the special values  and

and  to indicate when it is not reused at all

or when it is only used for the output. We also understand that an

argument is never “reused” as soon as it gets modified.

to indicate when it is not reused at all

or when it is only used for the output. We also understand that an

argument is never “reused” as soon as it gets modified.

For every destination or source argument  , the next location in the byte code where it is

being assigned a new value or

, the next location in the byte code where it is

being assigned a new value or  if this never

happens.

if this never

happens.

For every instruction, the maximal length of a dependency chain ending with this instruction.

Clearly, all these types of information can easily be obtained using one

linear pass through the program  .

.

We discussed several design options for the representations of SLPs in section 2. The options that we selected impose few restrictions, except for the one that all instructions have a single destination operand. This freedom allows us to express low level details that matter for cache efficiency and instruction scheduling. However, it is sometimes useful to impose additional constraints on the representation. We will now describe several basic operations on SLPs for this purpose.

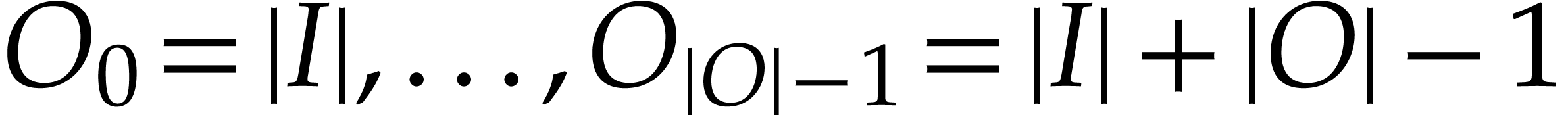

The function computed by an SLP clearly does not depend on the precise

numbering of the data fields in  .

If the input and output variables are pairwise distinct, then we may

always impose them to be numbered as

.

If the input and output variables are pairwise distinct, then we may

always impose them to be numbered as  and

and  : for this it suffices to permute

the data fields.

: for this it suffices to permute

the data fields.

Similarly, we may require that all variables actually occur in the

input, the output, or in the program  itself: if

itself: if

are the variables that occur in the SLP, then it

suffices to renumber

are the variables that occur in the SLP, then it

suffices to renumber  into

into  , for

, for  .

When applied to the SLP from (3.1), we have

.

When applied to the SLP from (3.1), we have  for

for  and

and  for

for  , so we suppress

, so we suppress  useless data fields.

useless data fields.

A stronger natural condition that we may impose on an SLP is that every

instruction participates in the computation of the output. This can be

achieved using classical dead code optimization, which does one linear

pass through  in reverse direction. When combined

with the above “dead data” optimization, we thus make sure

that our SLPs contain no obviously superfluous instructions or data

fields.

in reverse direction. When combined

with the above “dead data” optimization, we thus make sure

that our SLPs contain no obviously superfluous instructions or data

fields.

Our representation of SLPs allows the same variables to be reassigned

several times. This is useful in order to reduce memory consumption and

improve cache efficiency, but it may give rise to unwanted dependency

chains. For instance, the SLP (3.2) contains more

dependency chains than (3.1): the instruction  must be executed before

must be executed before  ,

but the instructions

,

but the instructions  and

and  can be swapped. In order to remove all dependency chains due to

reassignments, we may impose the strong condition that all destination

operands in our SLP are pairwise distinct. This can again easily be

achieved through renumbering. Note that the non-optimized recording

strategy from section 3.1 has this property.

can be swapped. In order to remove all dependency chains due to

reassignments, we may impose the strong condition that all destination

operands in our SLP are pairwise distinct. This can again easily be

achieved through renumbering. Note that the non-optimized recording

strategy from section 3.1 has this property.

In Remark 3.3, we discussed the in-place calling

convention that is allowed by our formalism and we saw that aliasing may

negatively impact the correctness of certain algorithms. For some

applications, one may therefore wish to forbid aliasing, which can

easily be achieved through numbering. This can be strengthened further

by imposing all input and output variables  to be

pairwise distinct.

to be

pairwise distinct.

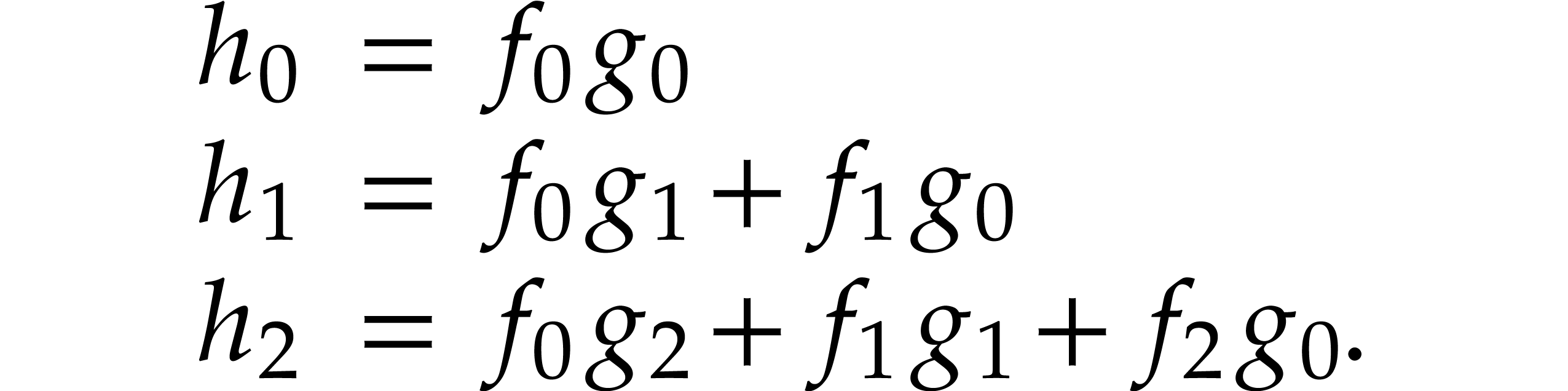

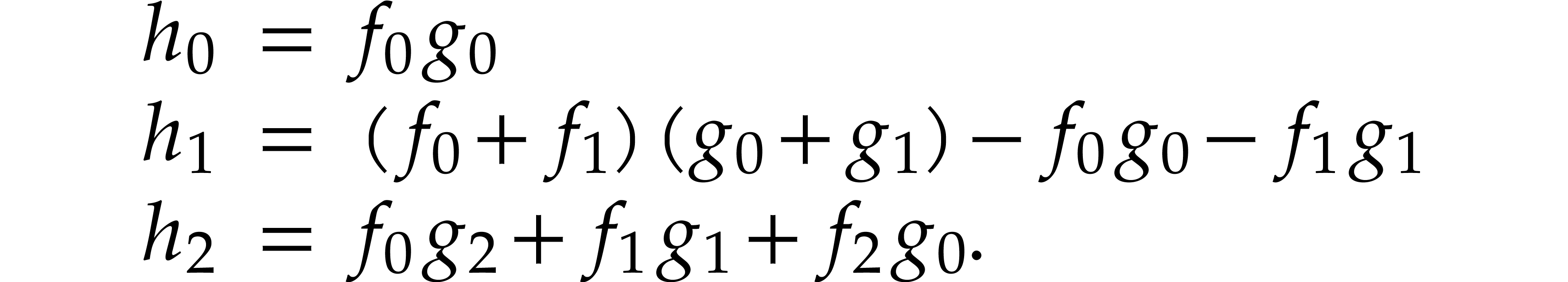

A powerful optimization in order to avoid superfluous computations is

common subexpression elimination (CSE). The result of each instruction

in  can be regarded as a mathematical expression

in the input variables of our SLP. We may simply discard instructions

that recompute the same expression as an earlier instruction. In order

to do this, it suffices to build a hash table with all expressions that

we encountered so far, using one linear pass through

can be regarded as a mathematical expression

in the input variables of our SLP. We may simply discard instructions

that recompute the same expression as an earlier instruction. In order

to do this, it suffices to build a hash table with all expressions that

we encountered so far, using one linear pass through  .

.

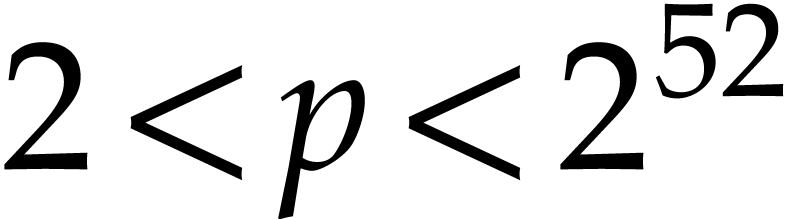

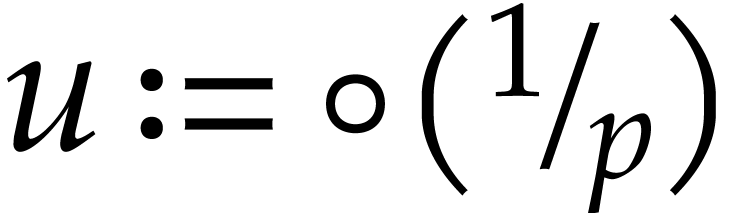

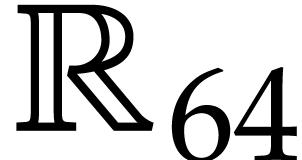

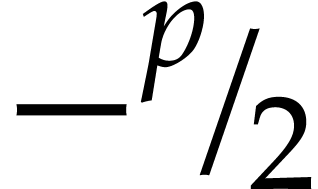

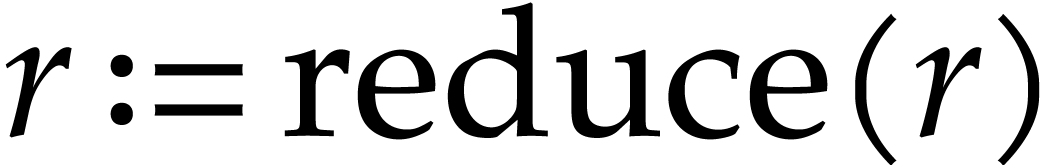

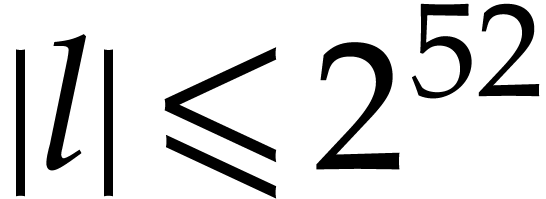

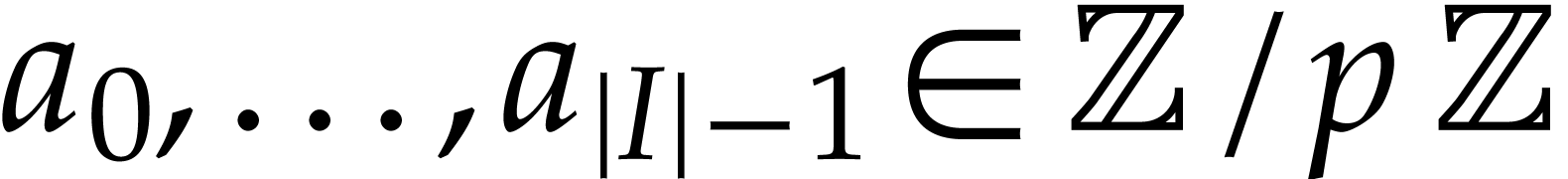

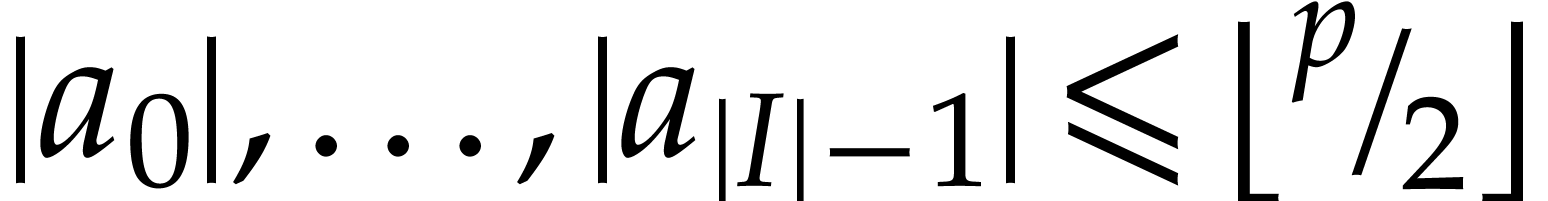

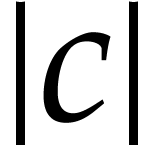

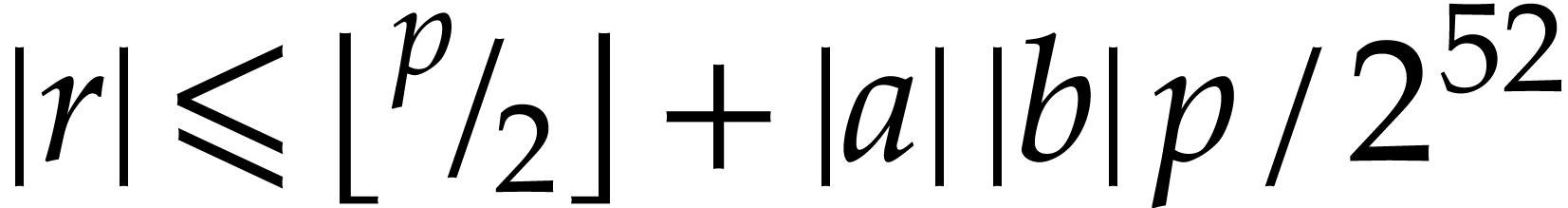

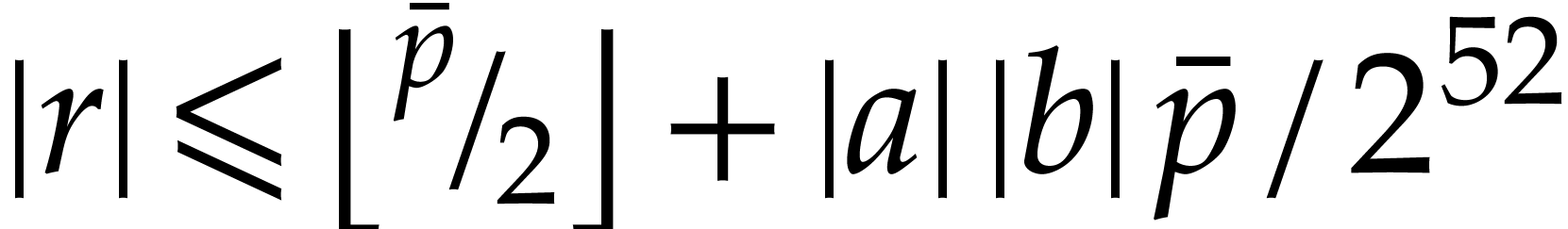

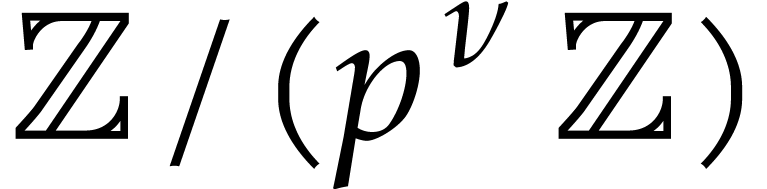

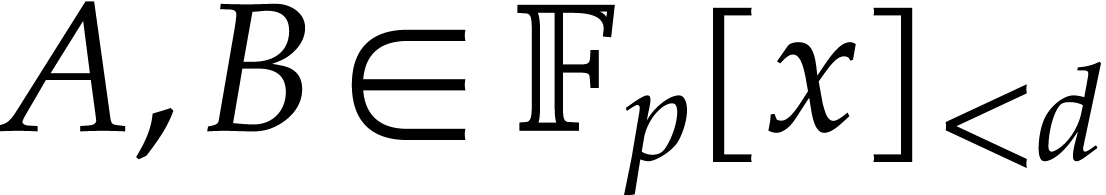

In our more algebraic setting, this mechanism can actually be improved,

provided that our domain  admits an algebraic

hash function. Assume for instance that

admits an algebraic

hash function. Assume for instance that  and let

and let

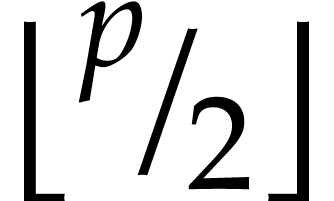

be a large prime number. Let

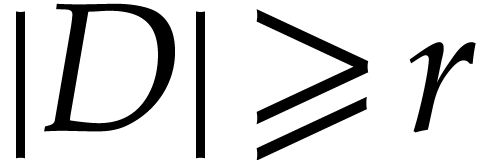

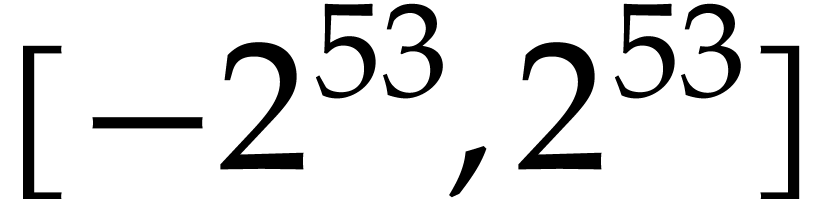

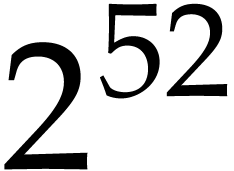

be a large prime number. Let  be the arguments of the function computed by our SLP and let

be the arguments of the function computed by our SLP and let  be uniformly random elements of

be uniformly random elements of  , regarded as a subset of the finite field

, regarded as a subset of the finite field  . Given any polynomial expression

. Given any polynomial expression

, we may use

, we may use  as an algebraic hash value for

as an algebraic hash value for  .

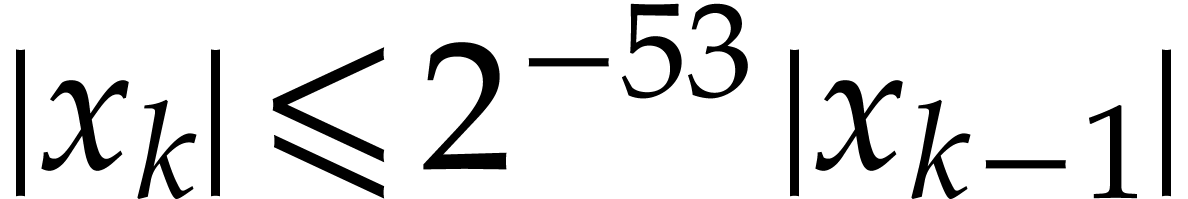

The probability that two distinct expressions have the same hash value

is proportional to

.

The probability that two distinct expressions have the same hash value

is proportional to  , and can

therefore be made exponentially small in the bit-size of

, and can

therefore be made exponentially small in the bit-size of  and the cost of hashing.

and the cost of hashing.

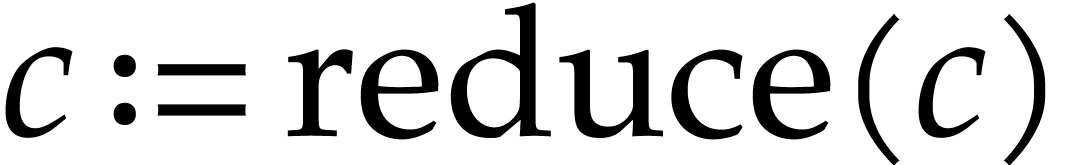

When using this algebraic hash function for common subexpression

elimination (both for hashing and equality testing), mathematically

equivalent expressions (like  and

and  or

or  and

and  ) will be considered as equal, which may result in

the elimination of more redundant computations. However, this algorithm

is only “Monte Carlo probabilistic” in the sense that it may

fail, albeit with a probability that can be made arbitrarily (and

exponentially) small. Due to its probabilistic nature, we do not use

this method by default in our libraries. The approach also applies when

) will be considered as equal, which may result in

the elimination of more redundant computations. However, this algorithm

is only “Monte Carlo probabilistic” in the sense that it may

fail, albeit with a probability that can be made arbitrarily (and

exponentially) small. Due to its probabilistic nature, we do not use

this method by default in our libraries. The approach also applies when

, in which case divisions by

zero (in

, in which case divisions by

zero (in  but not in

but not in  ) only arise with exponentially small probability.

) only arise with exponentially small probability.

When implementing CSE in a blunt way, it is natural to use a separate data field for every distinct expression that we encounter. However, this heavily deteriorates the cache efficiency and reuse of data in registers. In order to preserve the quality of SLPs, our implementation first generates a blunt SLP with separate fields for every expression (but without removing dead code). This SLP has exactly the same shape as the original one (same inputs, outputs, and instructions, modulo renumbering). During a second pass, we renumber the new SLP using numbers of the original one, and remove all superfluous instructions.

Simplification is an optimization that is even more powerful than common subexpression elimination. Mathematically speaking, an SLP always computes a function, but different SLPs may compute the same function. In particular, the output of CSE computes the same function as the input. But there might be even shorter and efficient SLPs with this property.

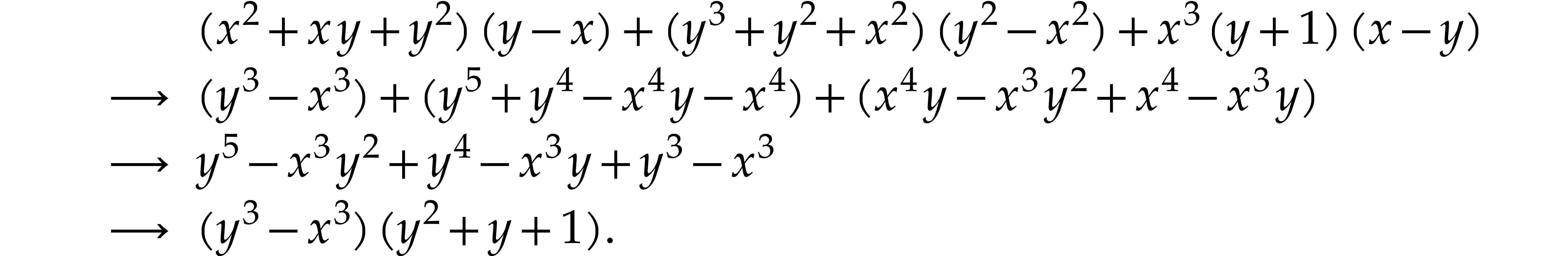

In full generality, finding the most efficient SLP for a given task is a difficult problem [21, 103, 93]. This is mainly due to the fact that an expression may first need to be expanded before an actual simplification occurs:

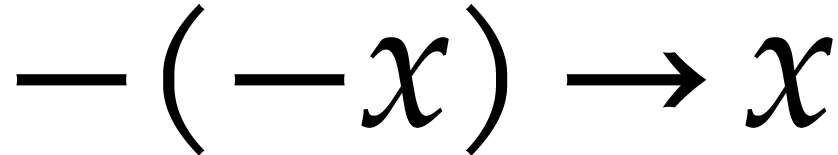

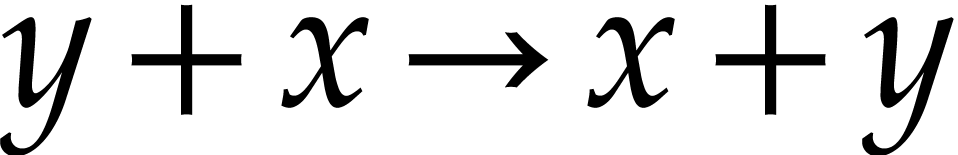

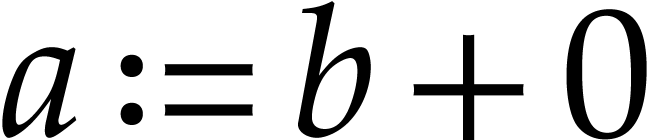

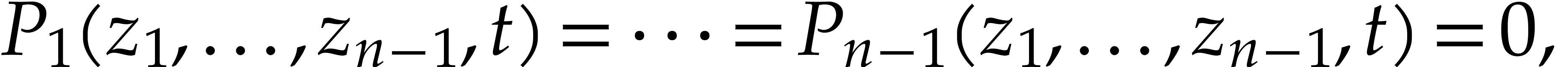

In our implementation, we therefore limited ourselves to simple algebraic rules that never increase the size of expressions and that can easily be applied in linear time using a single pass through the input SLP:

Constant folding: whenever an expression has only constant arguments, then we replace it by its evaluation.

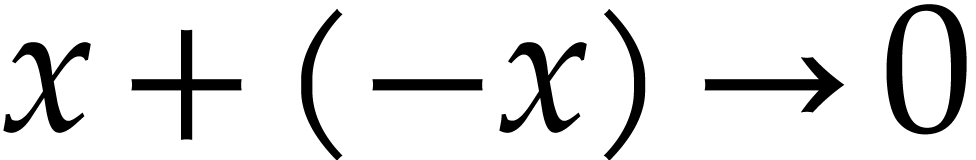

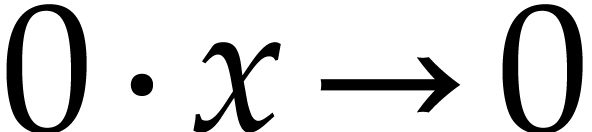

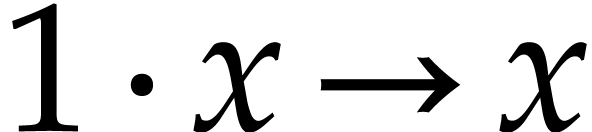

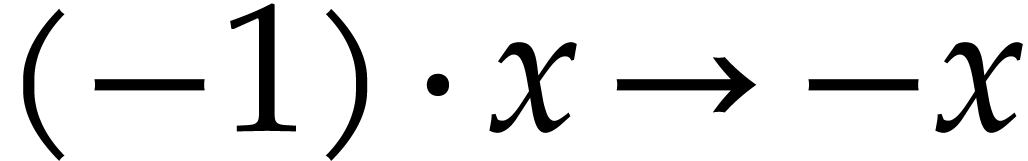

Basic algebraic simplifications like  ,

,

,

,  ,

,  ,

,

,

,  ,

,  ,

,

, etc.

, etc.

Imposing an ordering on the arguments of commutative operations:

,

,  , etc. In addition to this rewriting rule, we

also use a hash function for CSE that returns the same value for

expressions like

, etc. In addition to this rewriting rule, we

also use a hash function for CSE that returns the same value for

expressions like  and

and  .

.

Simplification is a particularly central operation of the

In the  of basic

operations with the set of simplification rules by introducing all

possible versions of signed multiplication: besides the four versions

of basic

operations with the set of simplification rules by introducing all

possible versions of signed multiplication: besides the four versions

of signed FMAs, our signature

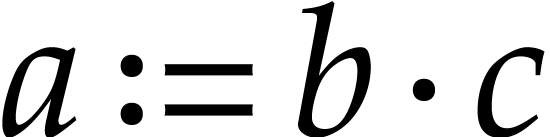

of signed FMAs, our signature  also contains the operation

also contains the operation  .

The resulting uniform treatment of signs is particularly beneficial for

complex arithmetic, linear algebra (when computing determinants),

polynomial and series division, automatic differentiation, and various

other operations that make intensive use of signs.

.

The resulting uniform treatment of signs is particularly beneficial for

complex arithmetic, linear algebra (when computing determinants),

polynomial and series division, automatic differentiation, and various

other operations that make intensive use of signs.

Just before generating machine code for our SLP in the workflow (1.2), it is useful to further “massage” our SLP while taking into account specific properties of the target hardware. Register allocation is a particularly crucial preparatory operation.

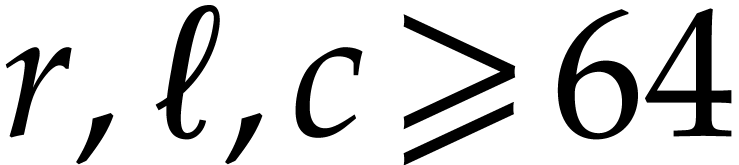

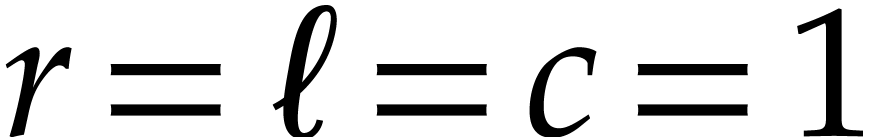

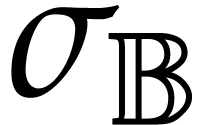

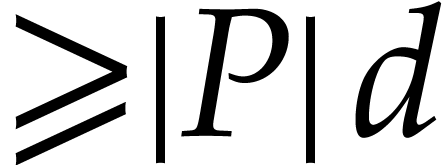

Consider a target CPU or GPU with  registers. In

our SLP formalism, the goal of register allocation is to transform an

input SLP into an equivalent SLP with the following properties:

registers. In

our SLP formalism, the goal of register allocation is to transform an

input SLP into an equivalent SLP with the following properties:

and the first

and the first  data

fields of

data

fields of  are reserved for

“registers”.

are reserved for

“registers”.

For all operations in  except

“move”, all arguments are registers.

except

“move”, all arguments are registers.

Moving registers to other data fields “in memory” or vice versa are thought of as expensive operations that should be minimized in number.

In our implementations, we also assume that  for

all input/output variables and constants, but this constraint can easily

be relaxed if needed.

for

all input/output variables and constants, but this constraint can easily

be relaxed if needed.

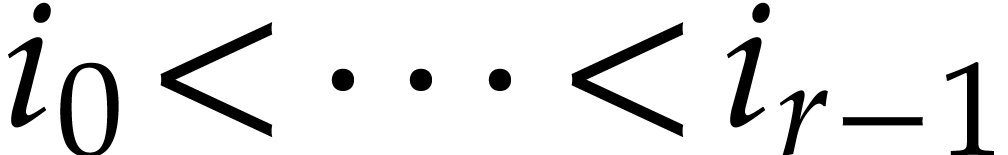

There is a rich literature on register allocation. The problem of finding an optimal solution is NP-complete [24], but practically efficient solutions exist, based on graph coloring [24, 23, 19] and linear scanning [96]. The second approach is more suitable for us, because it is guaranteed to run in linear time, so we implemented a variant of it.

More precisely, using one linear pass in backward direction, for each

operand of an instruction, we first determine the next location where it

is used (if anywhere). We next use a greedy algorithm that does one more

forward pass through  . During

this linear pass, we maintain lookup tables with the correspondence

between data fields and the registers they got allocated to. Whenever we

need to access a data field that is not in one of the registers, we

search for a register that is not mapped to a data field or whose

contents are no longer needed. If such a register exists, then we take

it. Otherwise, we determine the register whose contents are needed

farthest in the future. We then save its contents in the corresponding

data field, after which the register becomes available to hold new data.

. During

this linear pass, we maintain lookup tables with the correspondence

between data fields and the registers they got allocated to. Whenever we

need to access a data field that is not in one of the registers, we

search for a register that is not mapped to a data field or whose

contents are no longer needed. If such a register exists, then we take

it. Otherwise, we determine the register whose contents are needed

farthest in the future. We then save its contents in the corresponding

data field, after which the register becomes available to hold new data.

This simple strategy efficiently produces reasonably good (and sometimes very good) code on modern hardware. In the future, we might still implement some of the alternative strategies based on graph coloring, for cases when it is reasonable to spend more time on optimization.