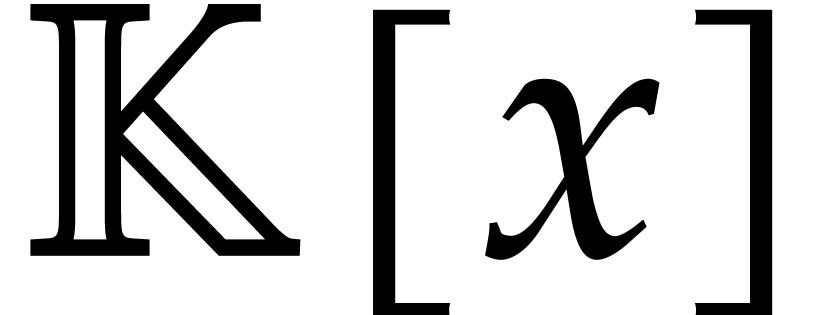

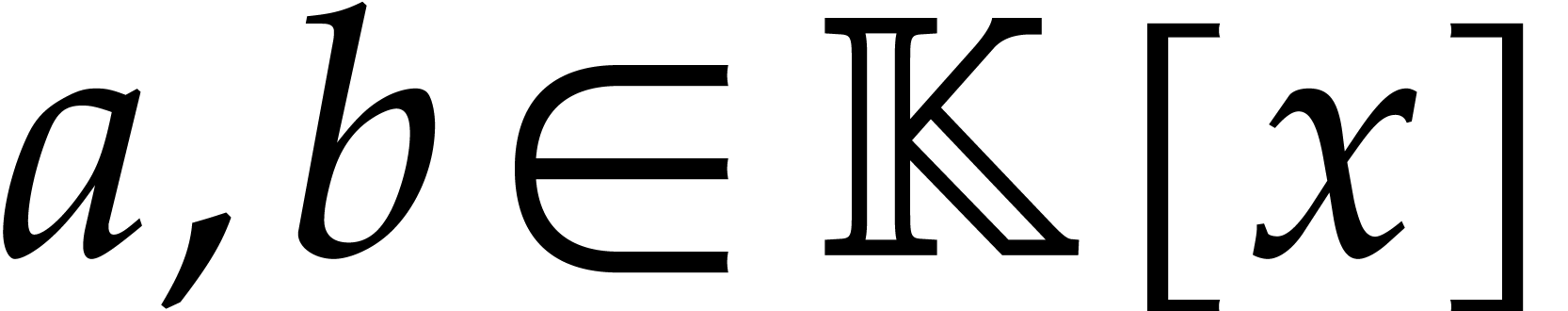

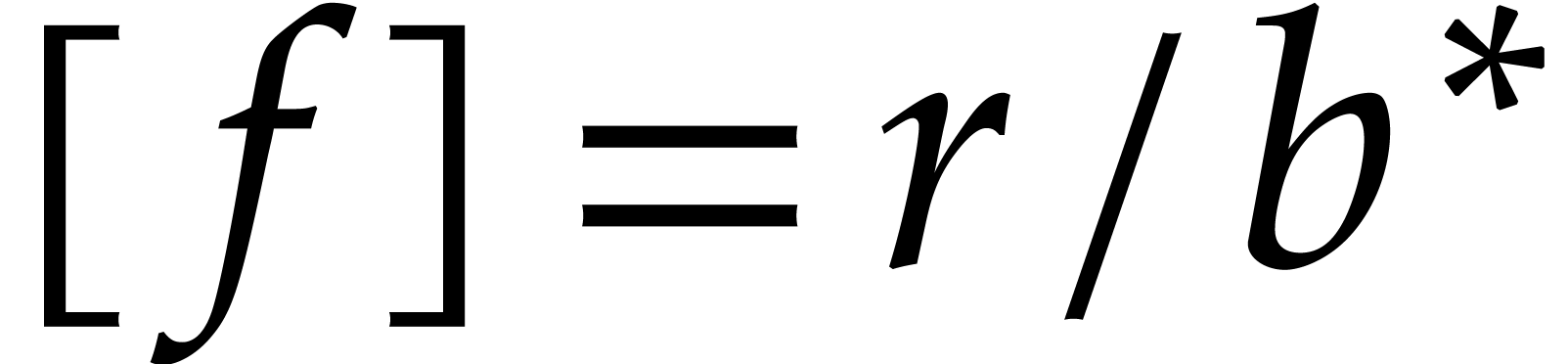

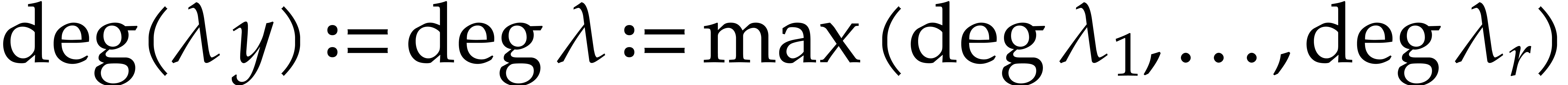

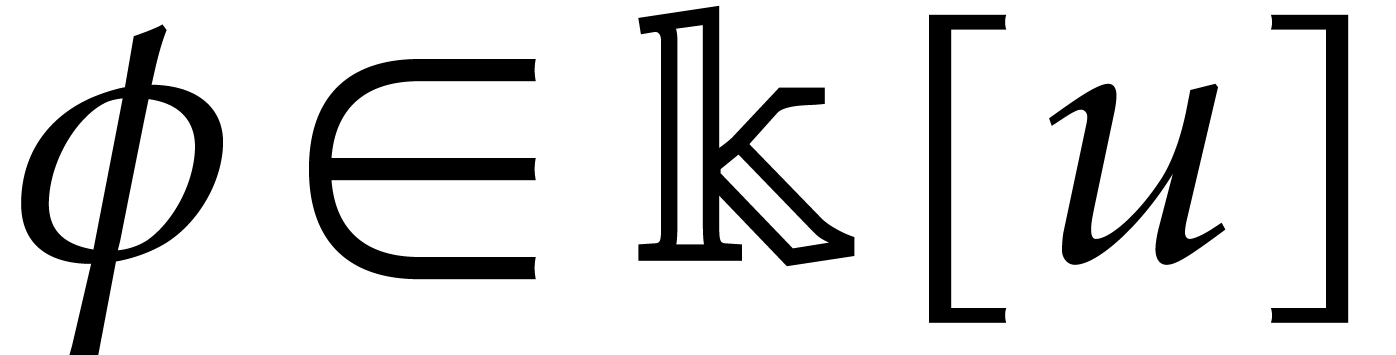

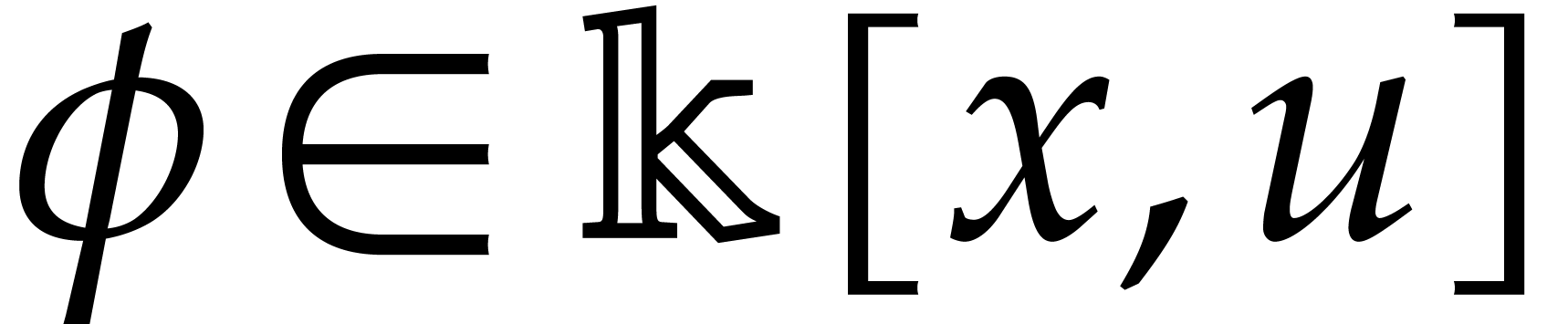

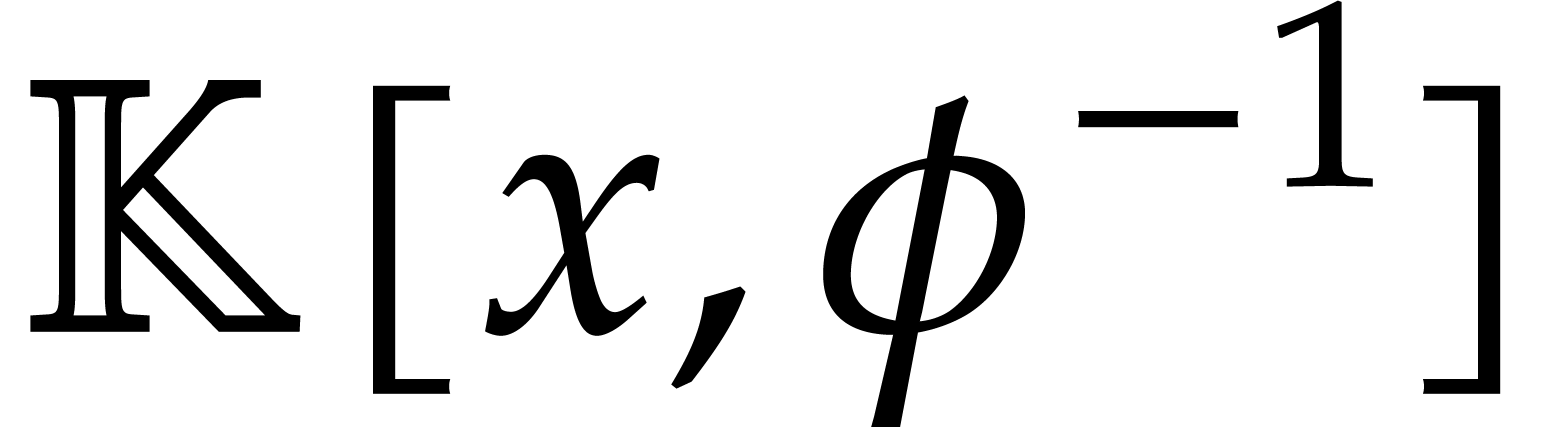

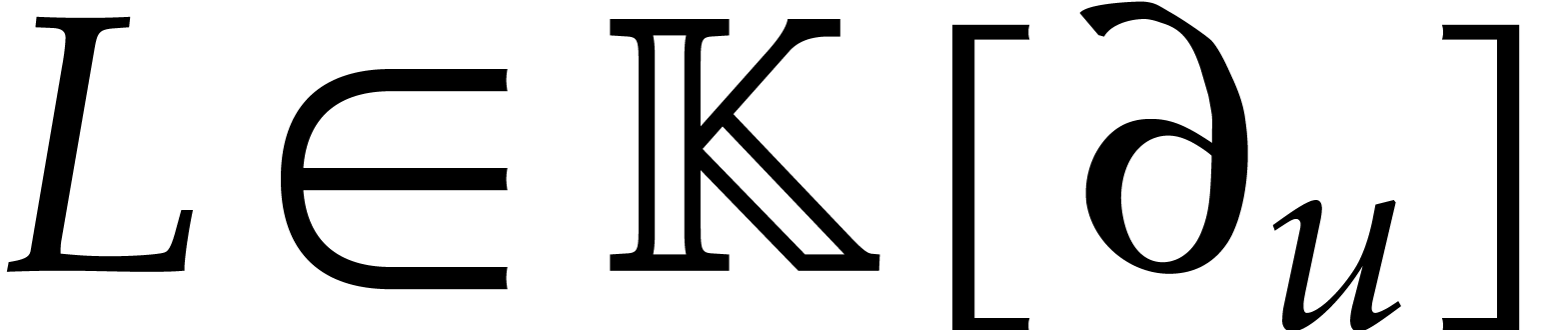

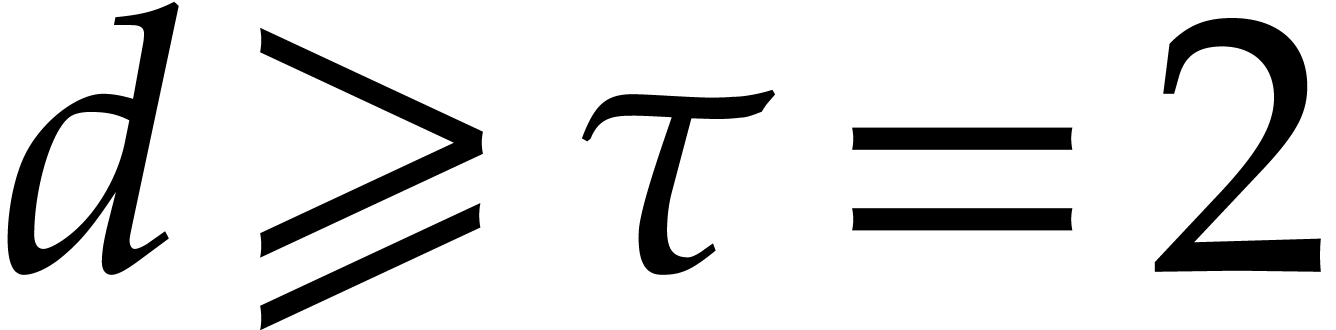

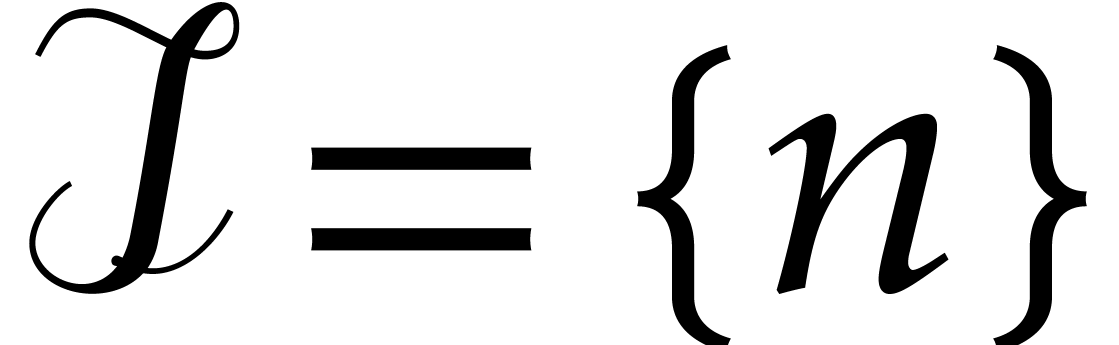

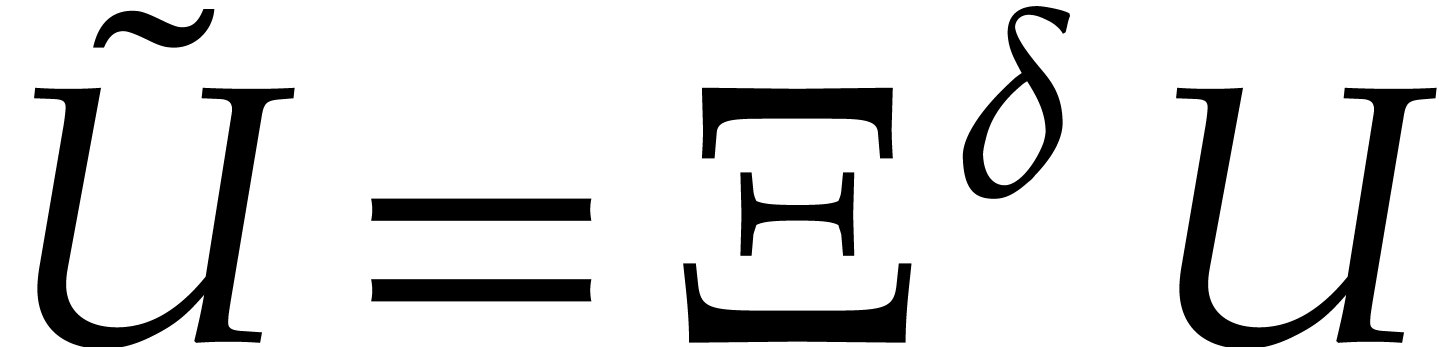

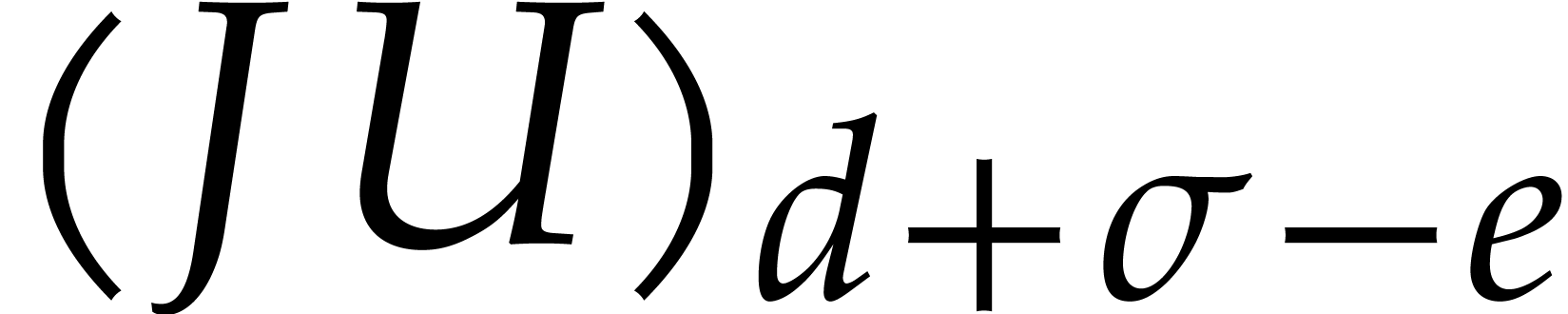

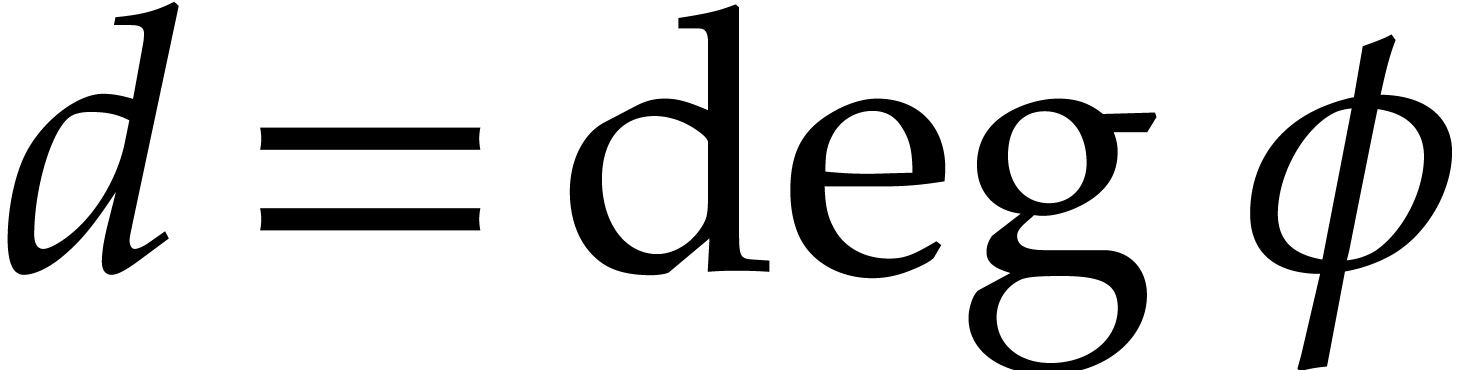

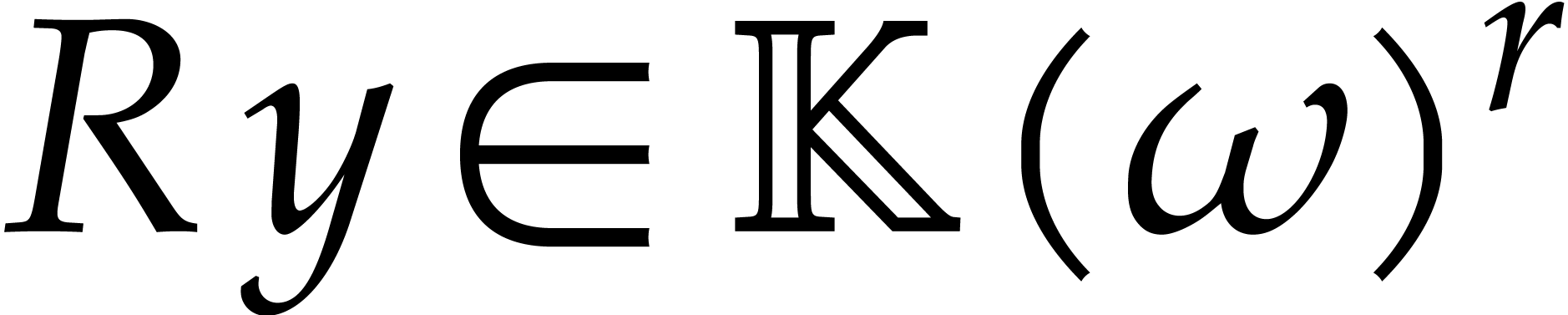

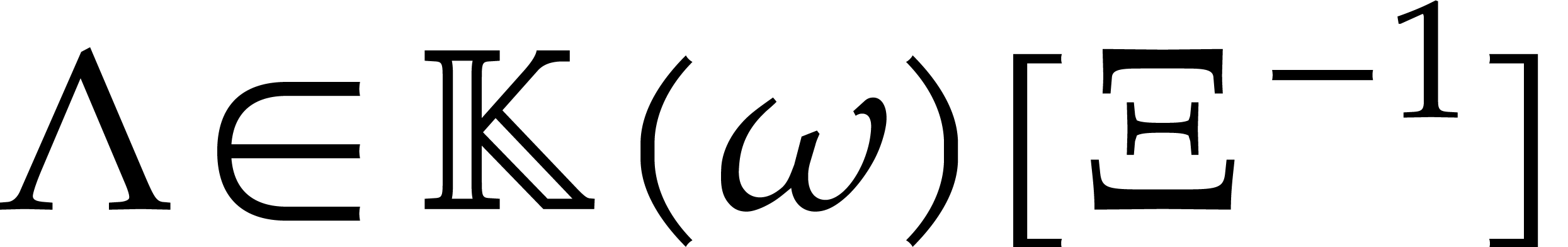

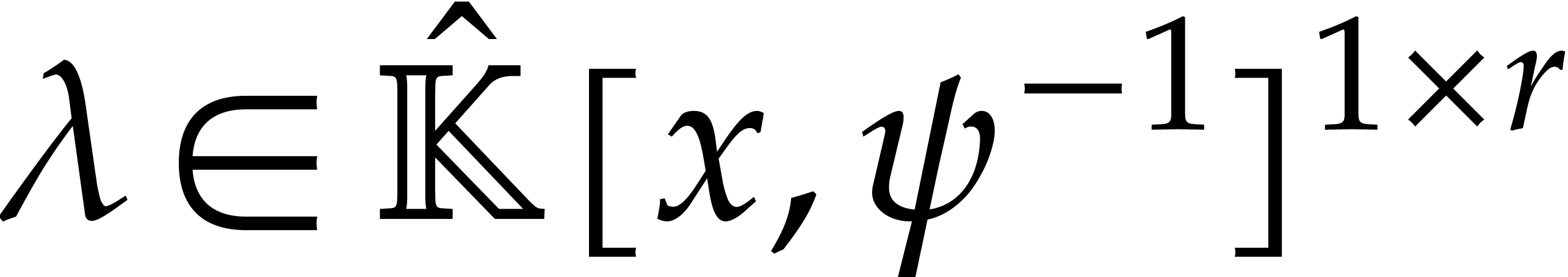

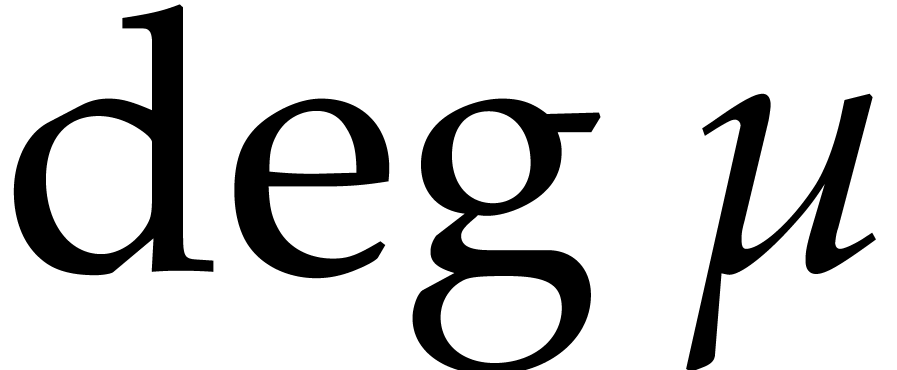

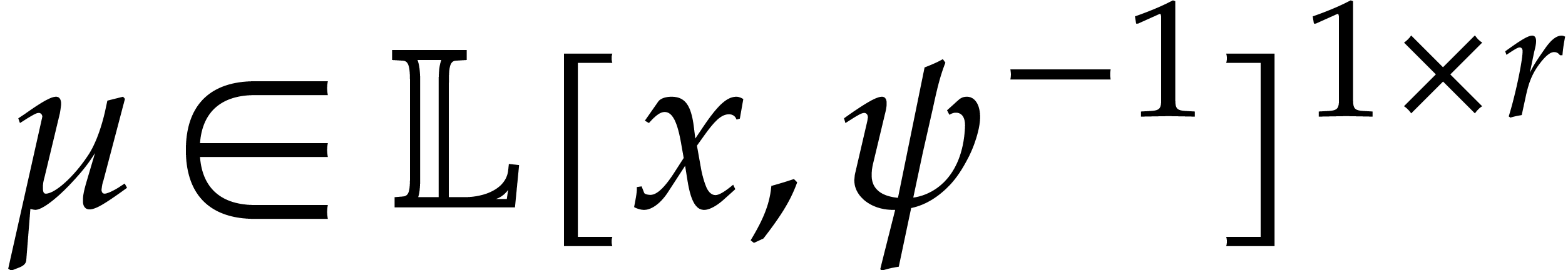

be an effective field of characteristic zero

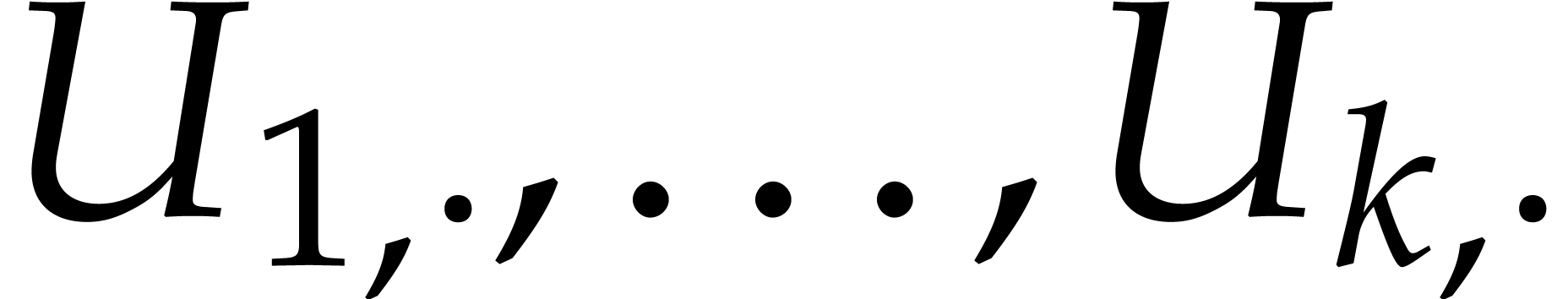

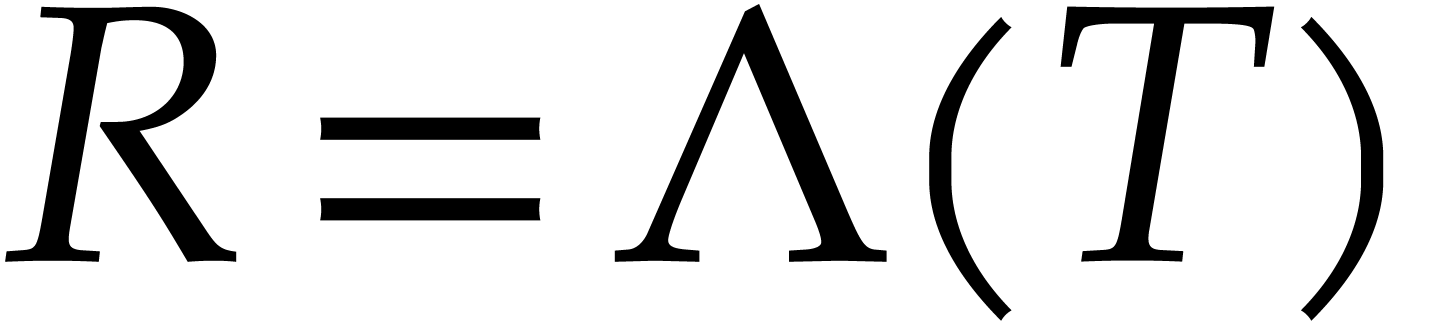

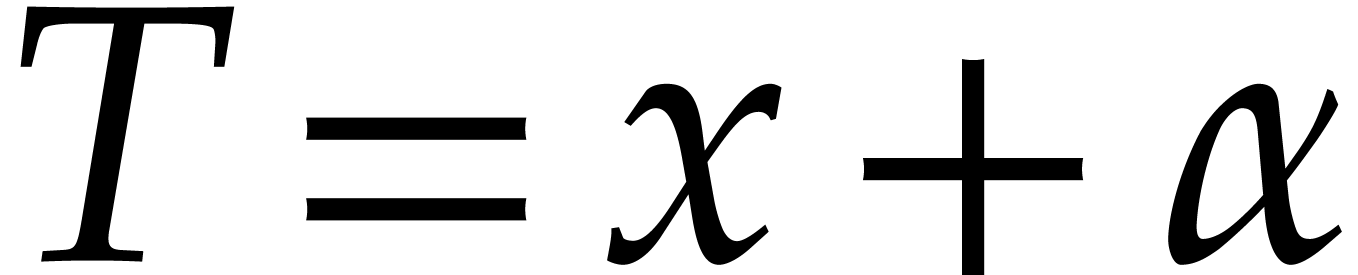

and let

be an effective field of characteristic zero

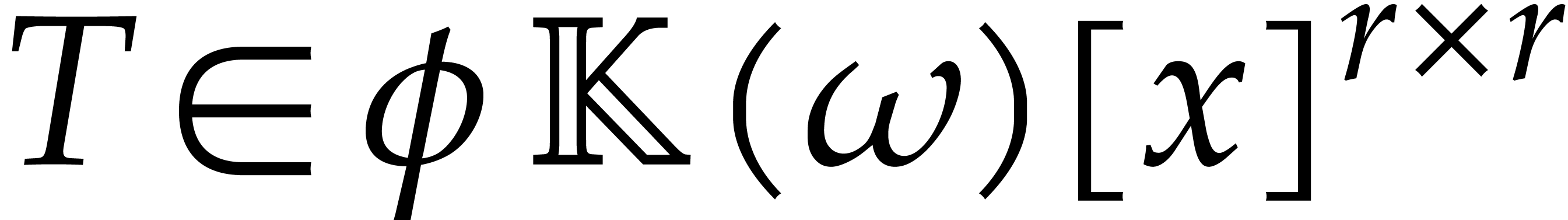

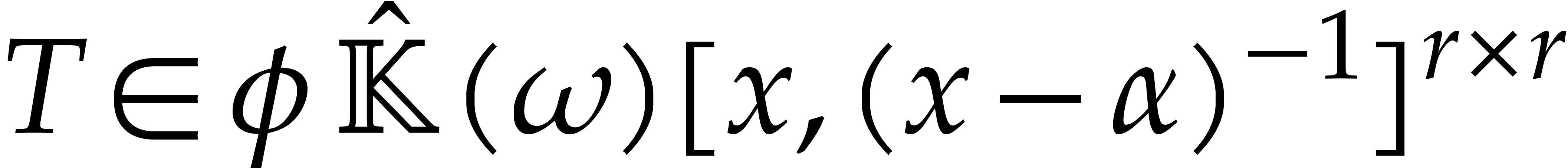

and let  be a non-zero polynomial. Consider the

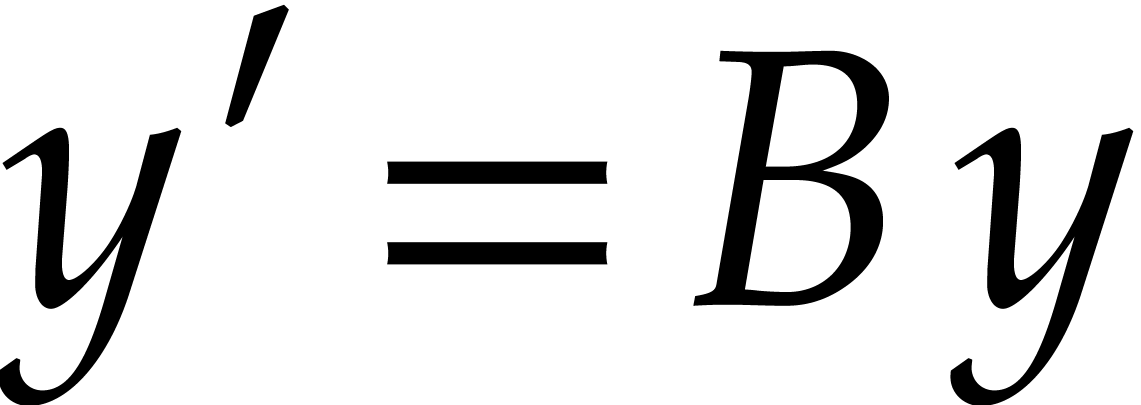

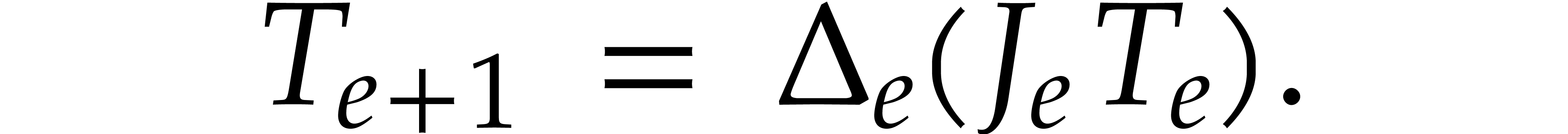

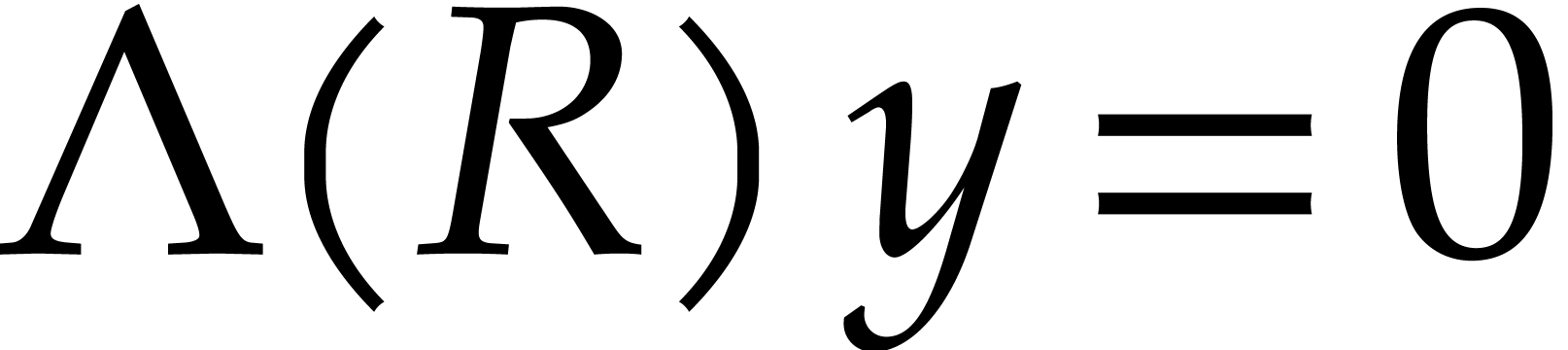

system of linear differential equations

be a non-zero polynomial. Consider the

system of linear differential equations

|

The class of reduction-based algorithms was introduced recently as a new approach towards creative telescoping. Starting with Hermite reduction of rational functions, various reductions have been introduced for increasingly large classes of holonomic functions. In this paper we show how to construct reductions for general holonomic functions, in the purely differential setting.

I.1.2 Algebraic algorithms |

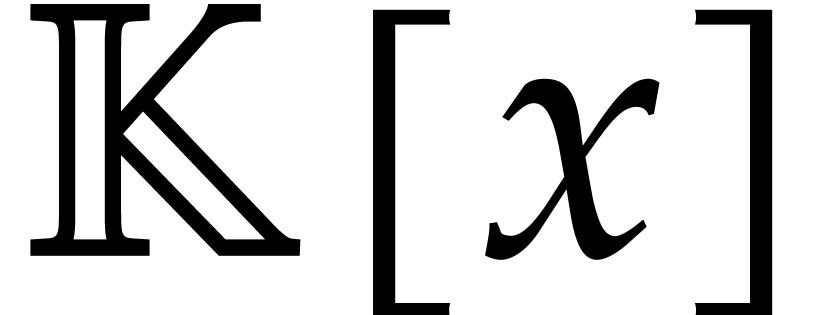

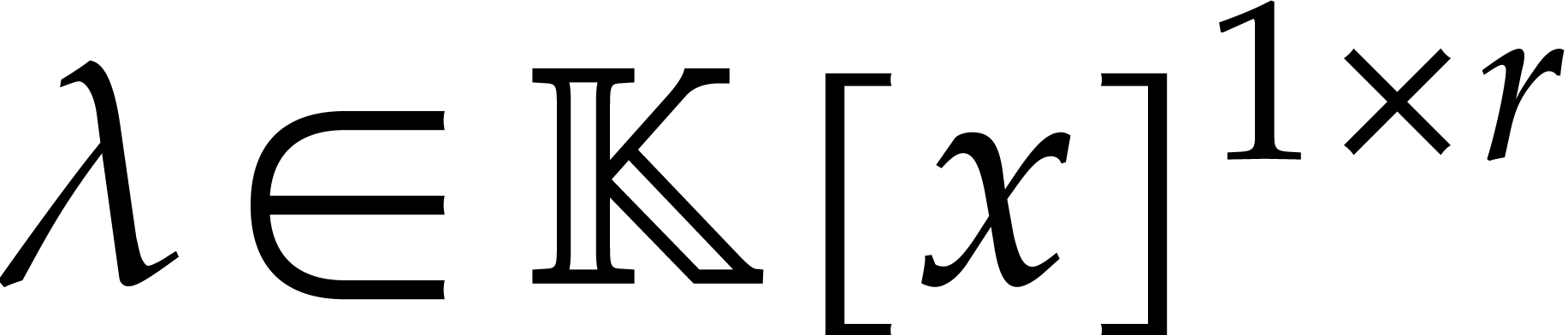

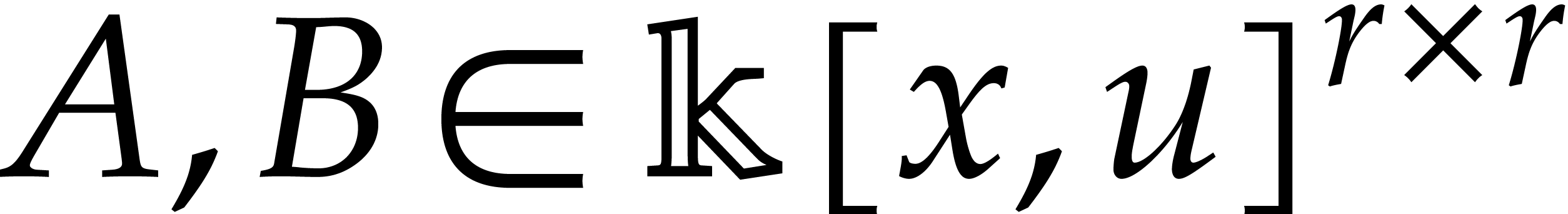

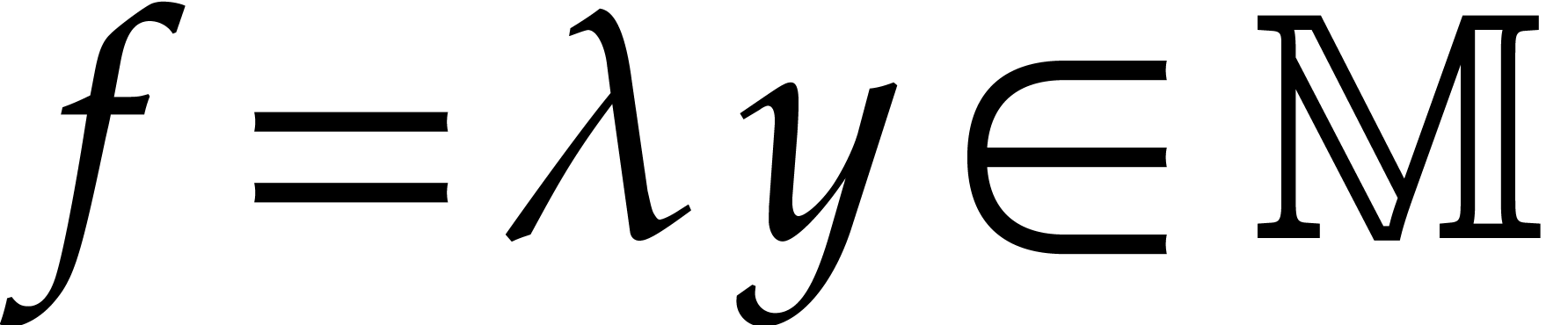

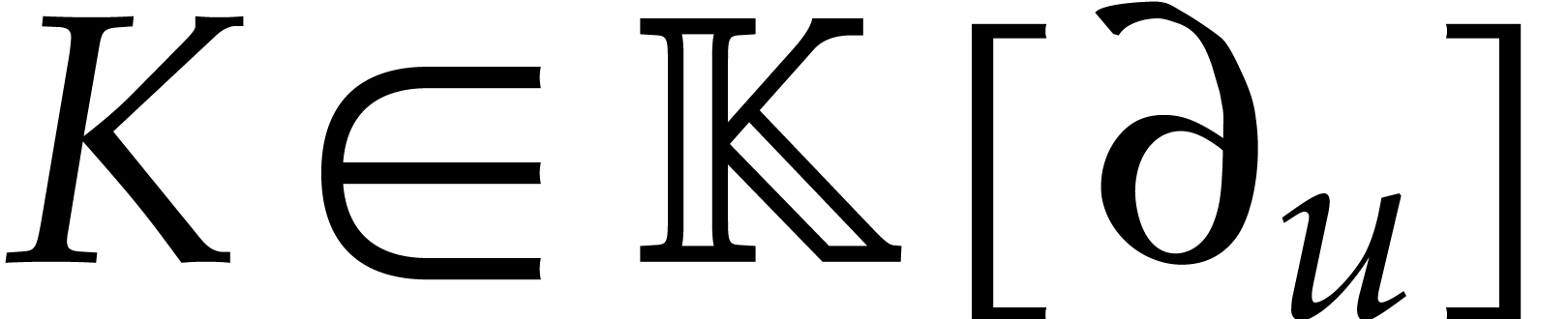

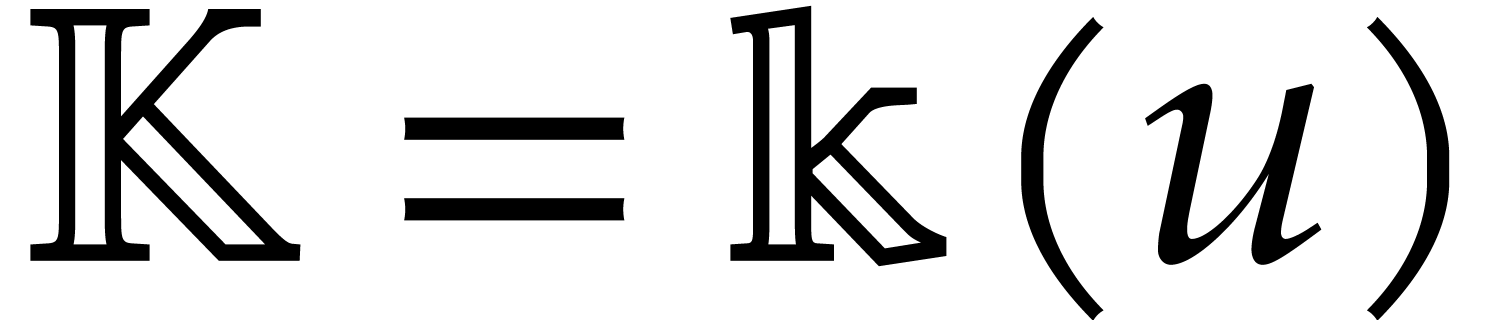

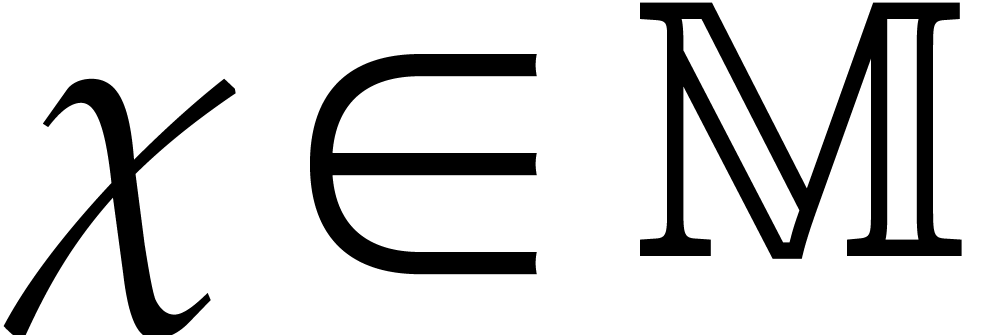

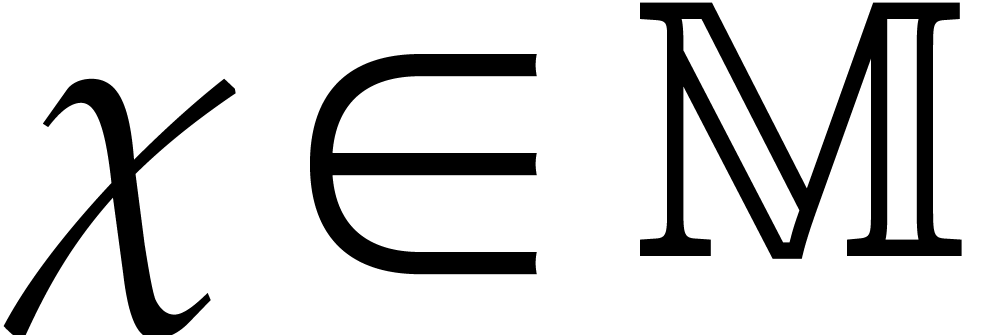

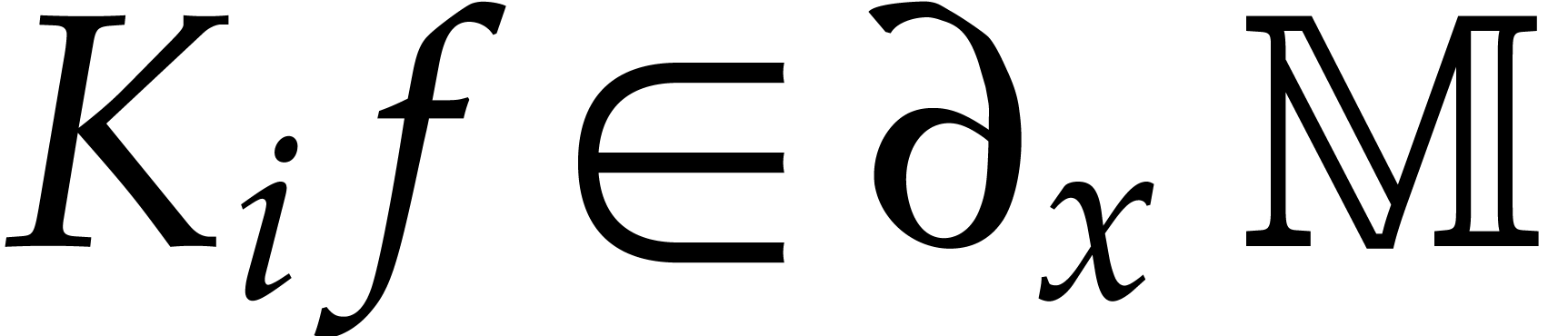

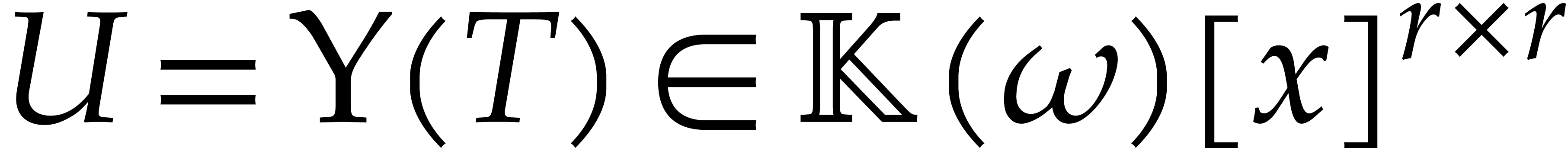

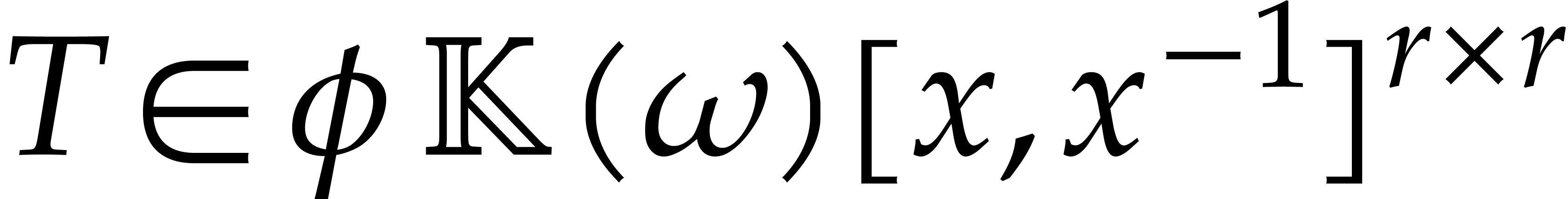

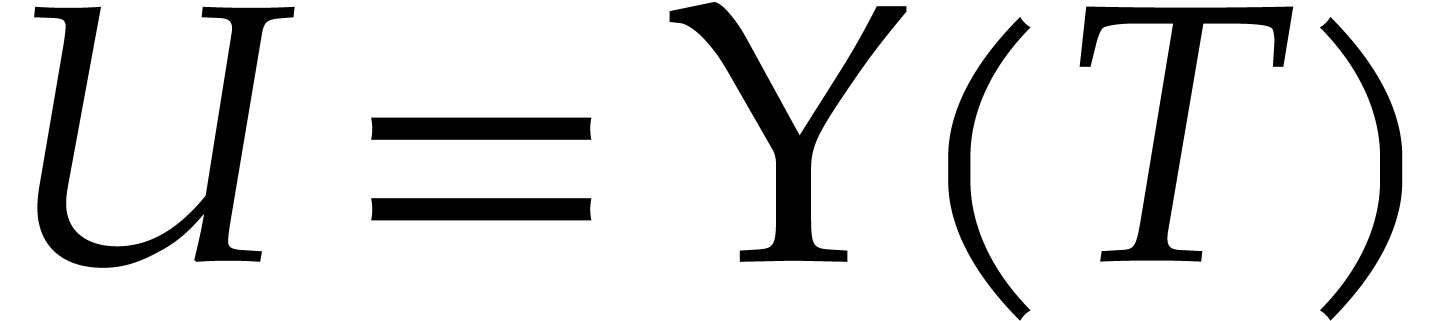

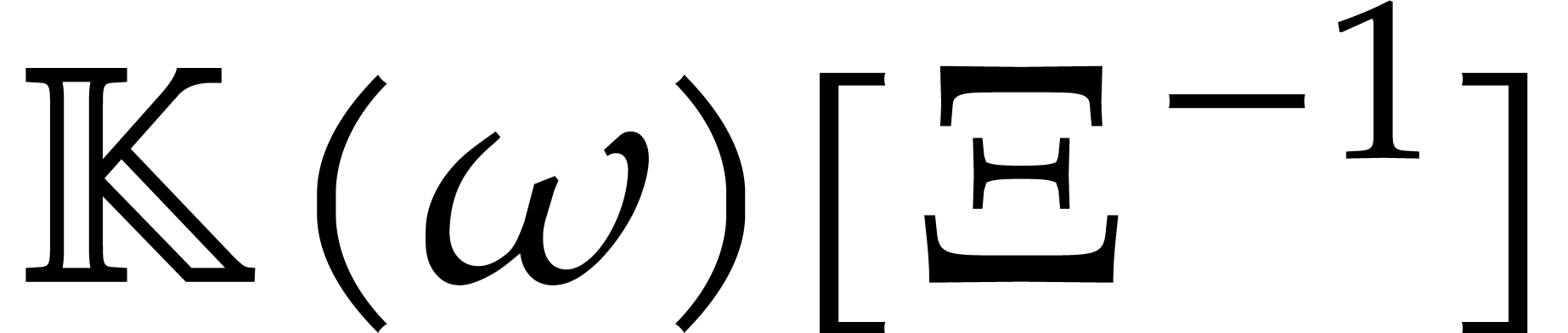

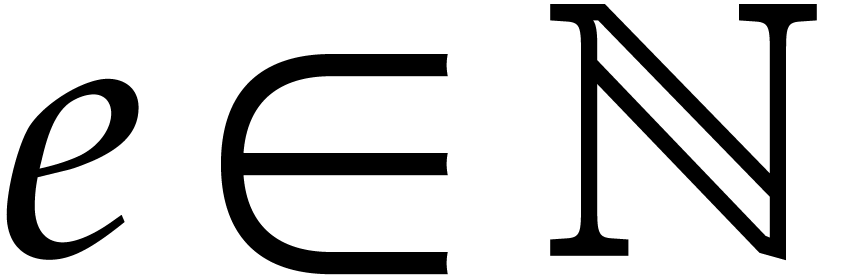

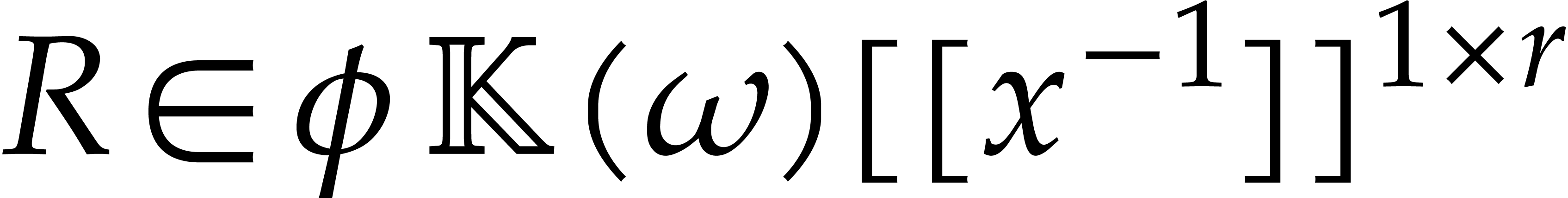

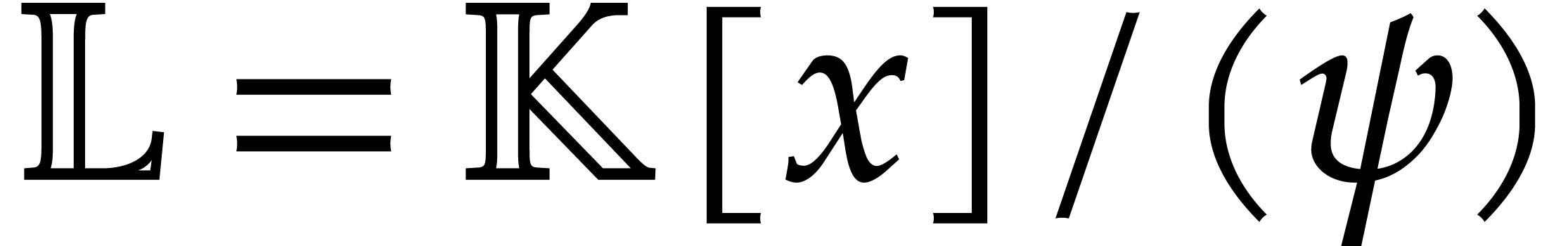

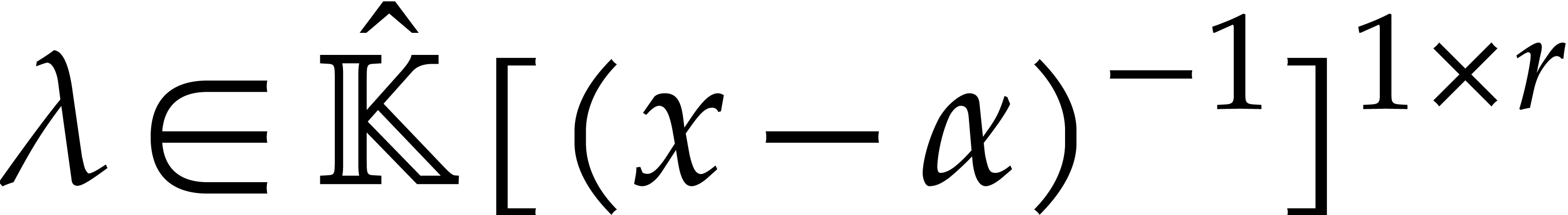

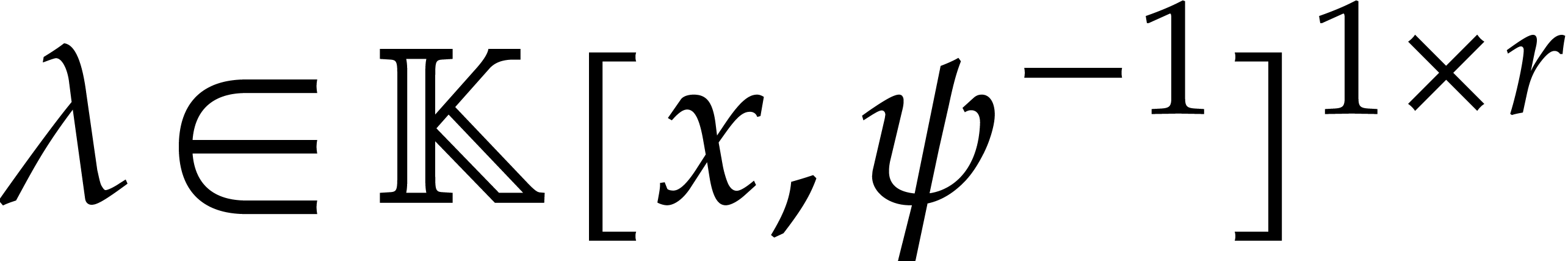

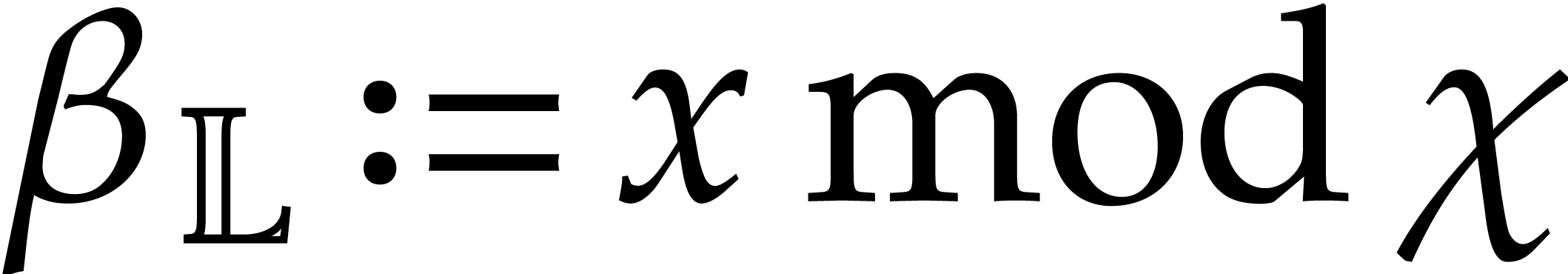

Let  be an effective field of characteristic zero

and let

be an effective field of characteristic zero

and let  be a non-zero polynomial. Consider the

system of linear differential equations

be a non-zero polynomial. Consider the

system of linear differential equations

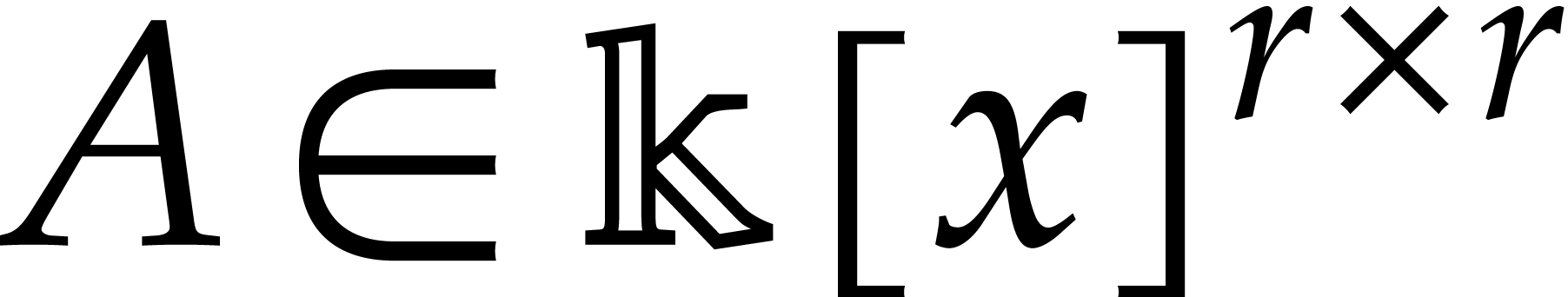

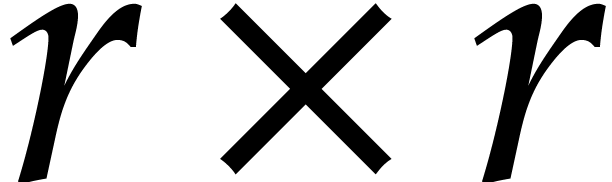

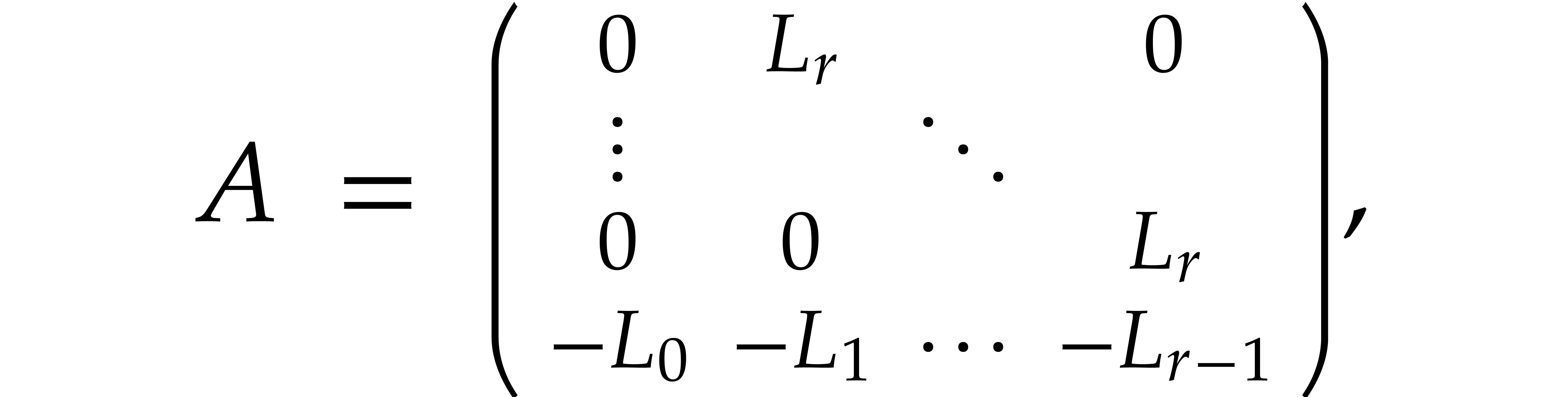

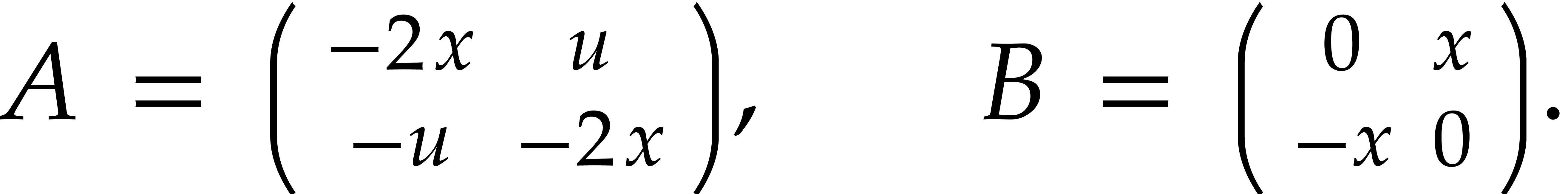

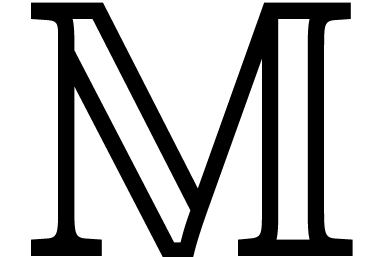

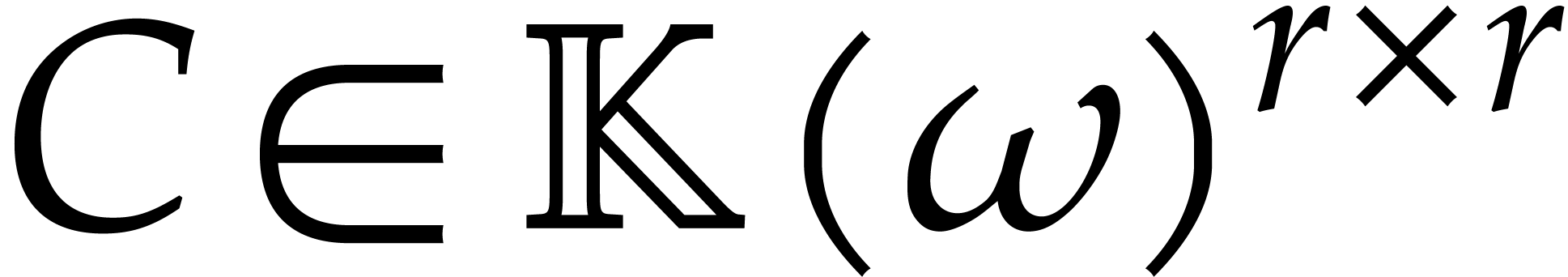

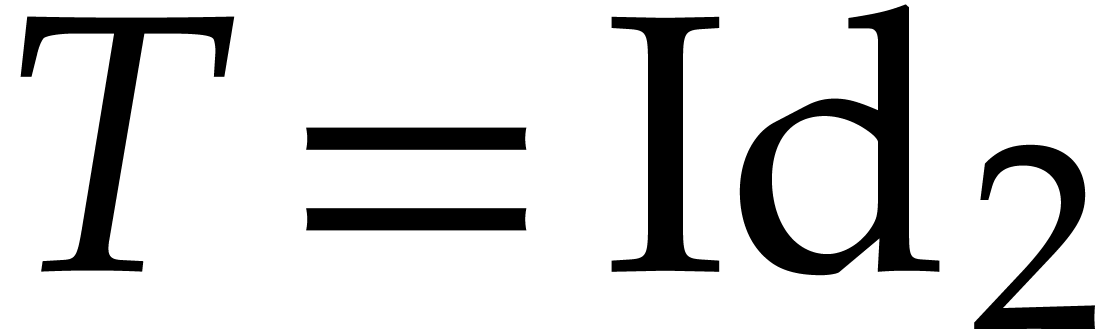

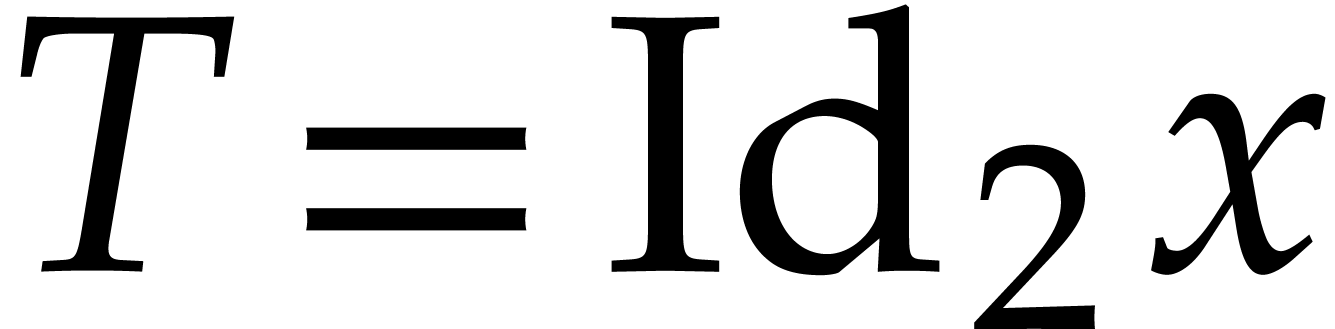

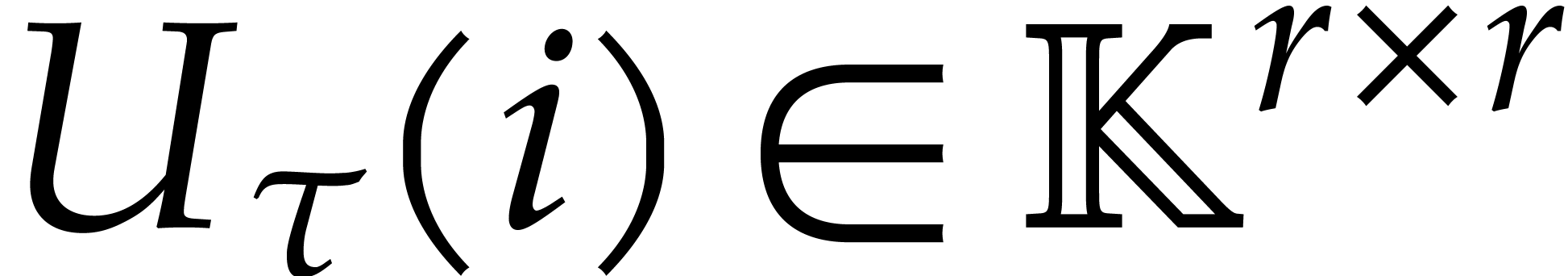

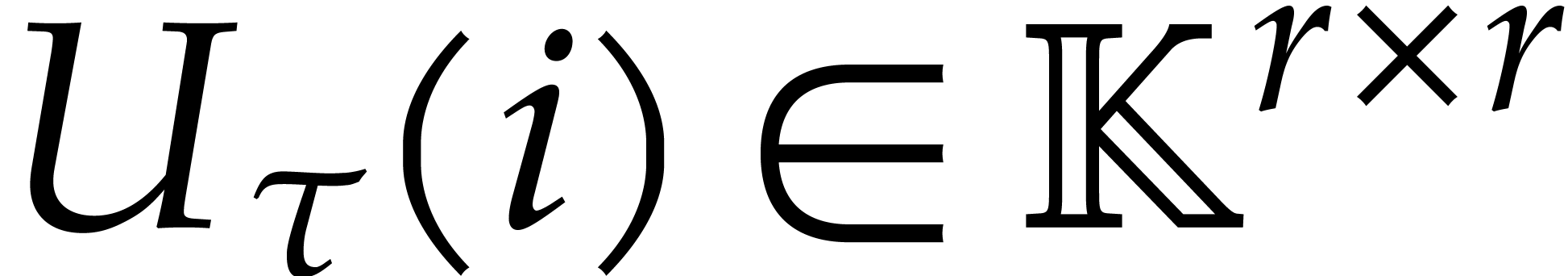

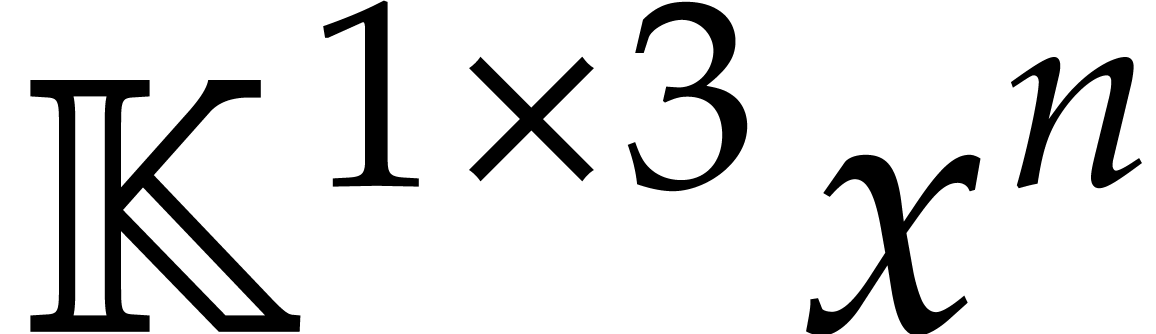

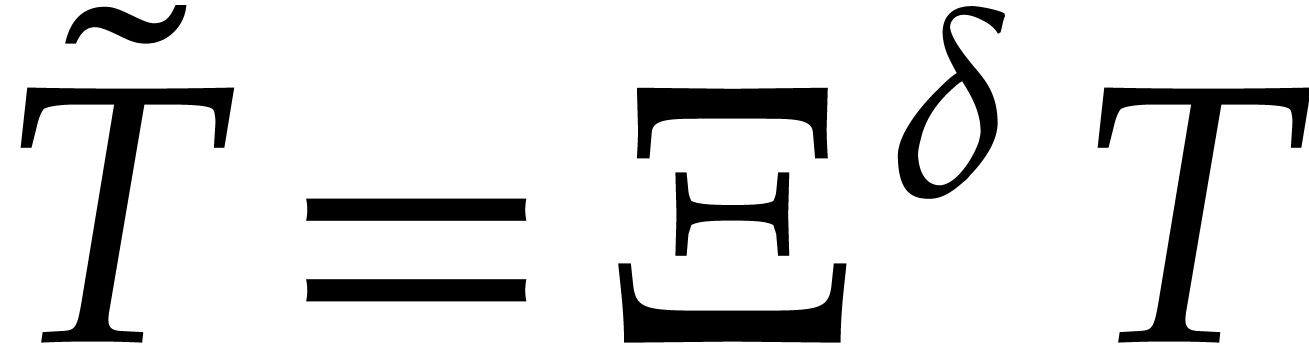

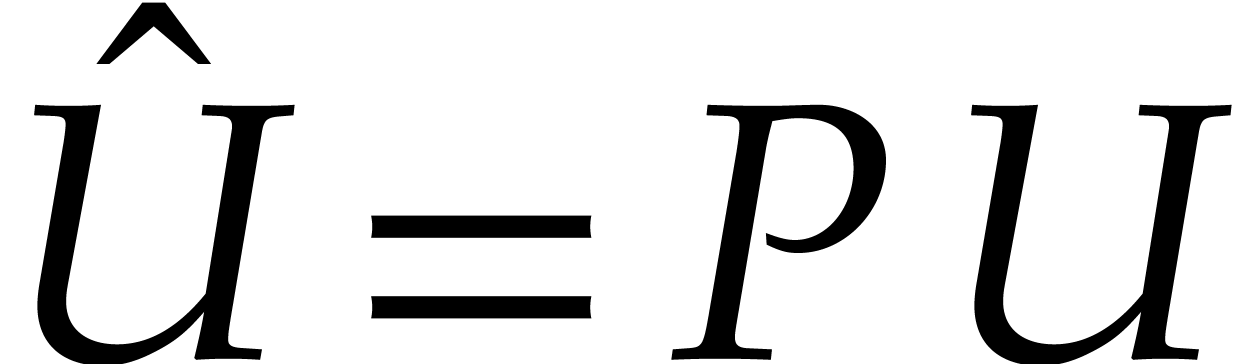

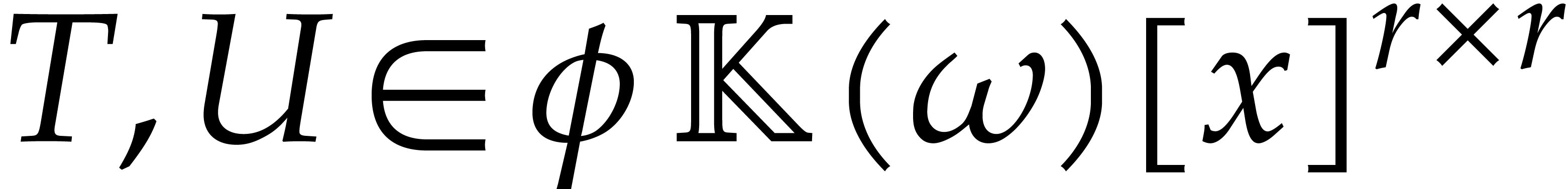

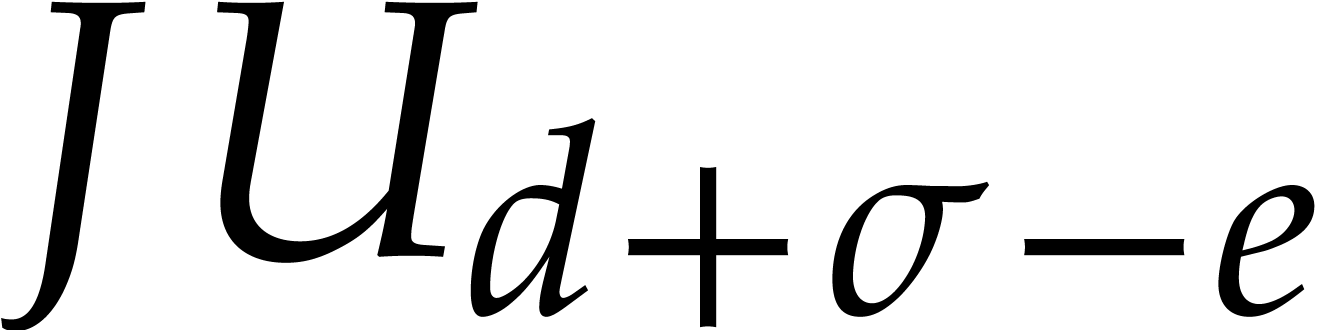

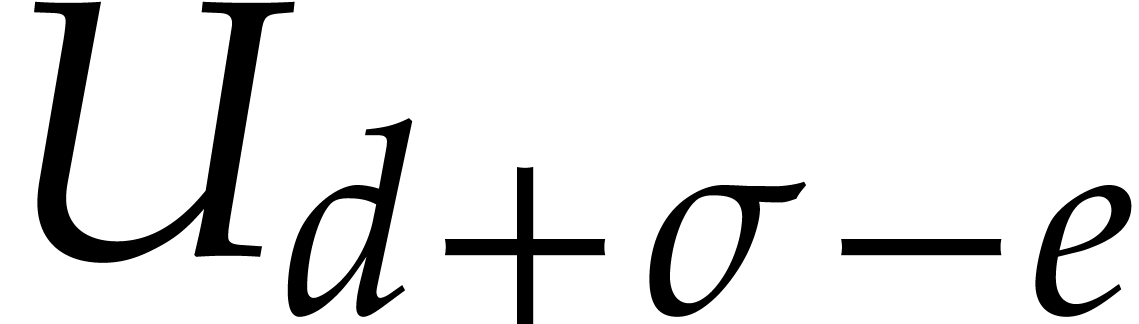

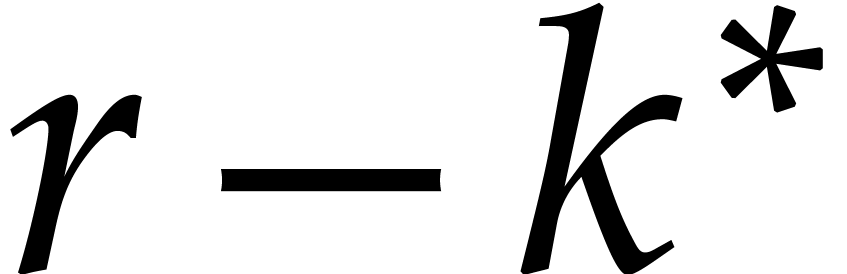

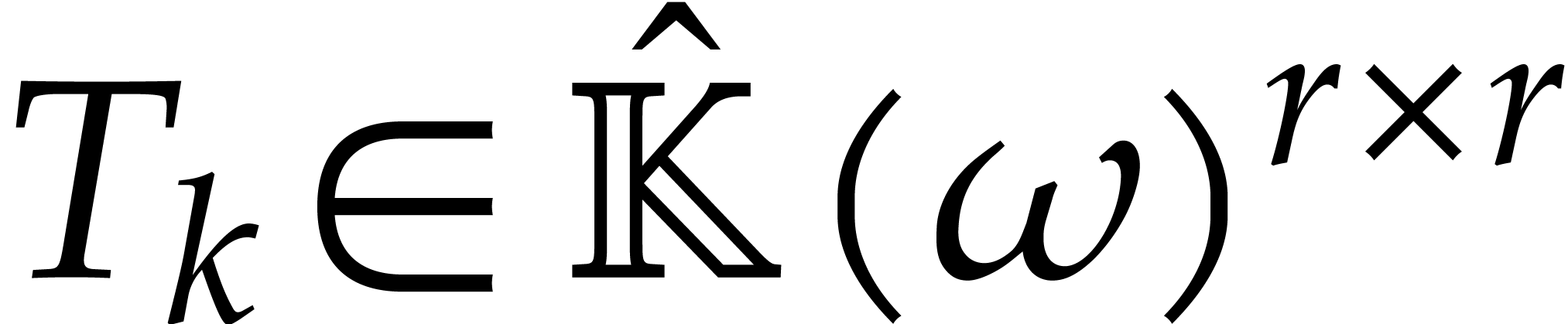

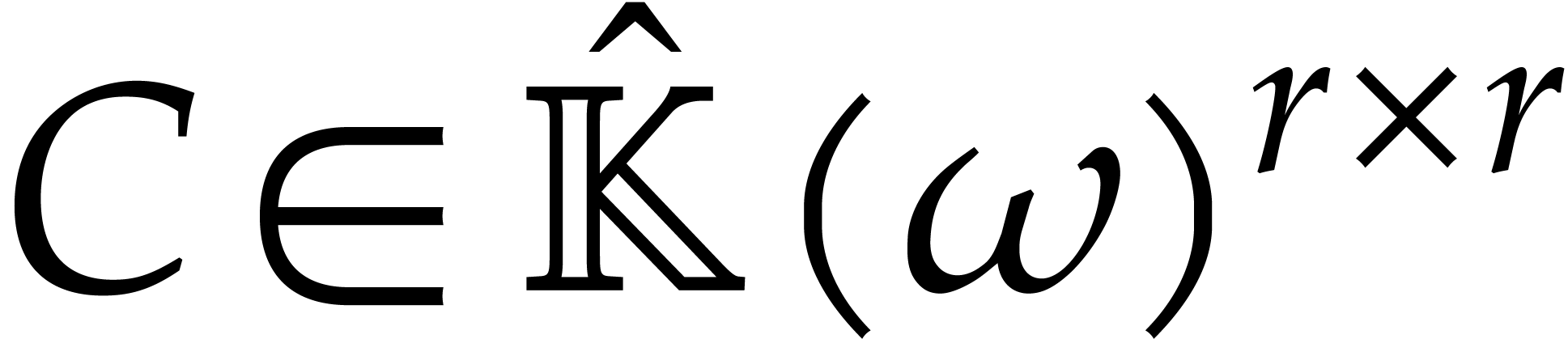

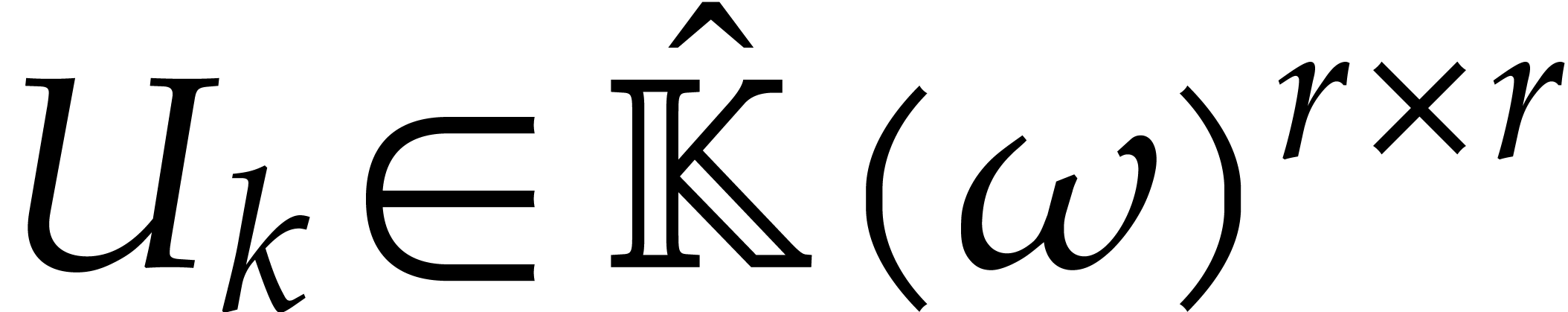

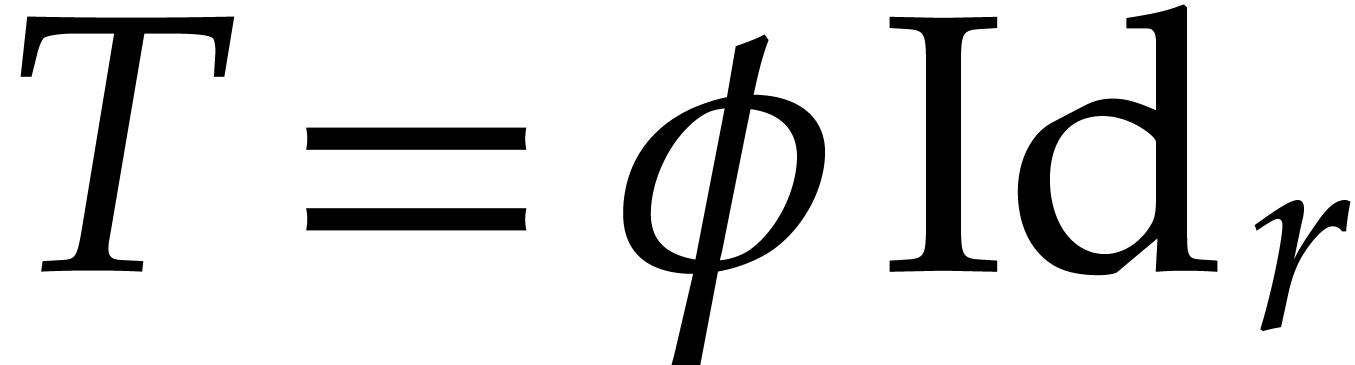

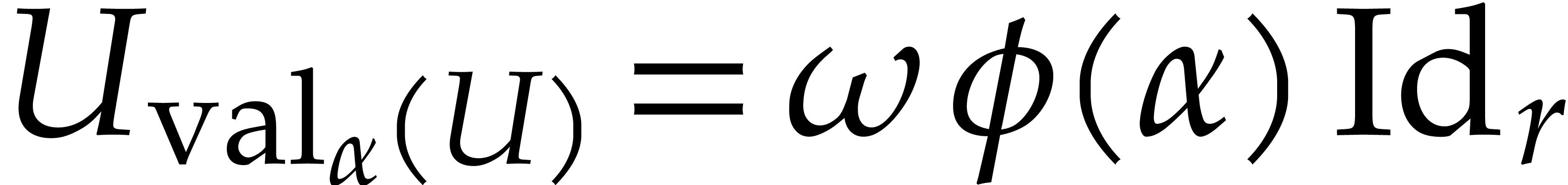

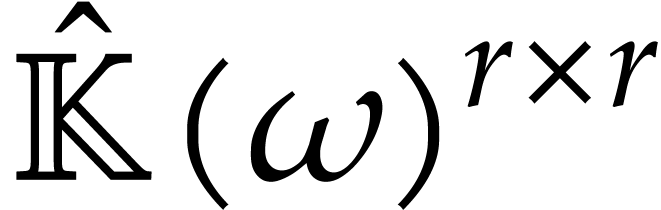

where  is an

is an  matrix with

entries in

matrix with

entries in  and

and  is a

column vector of

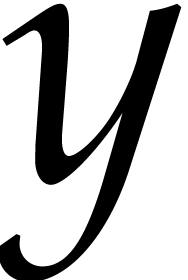

is a

column vector of  unknown functions. Notice that

any system of linear differential equations

unknown functions. Notice that

any system of linear differential equations  with

with

can be rewritten in this form by taking

can be rewritten in this form by taking  to be a multiple of all denominators.

to be a multiple of all denominators.

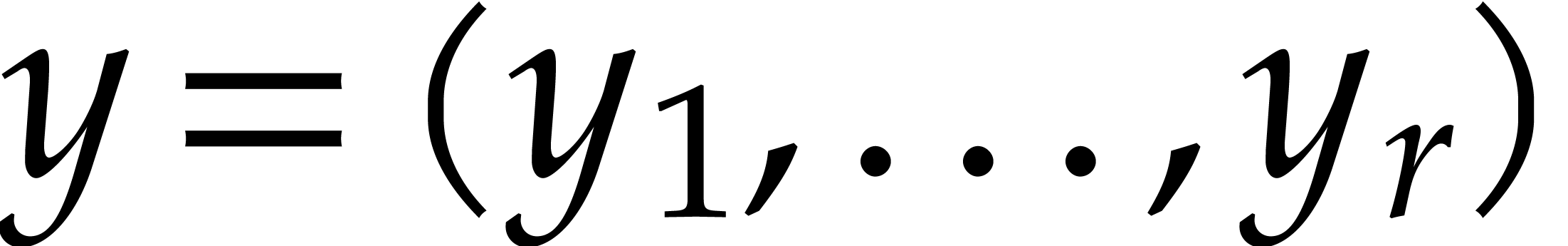

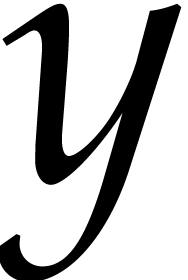

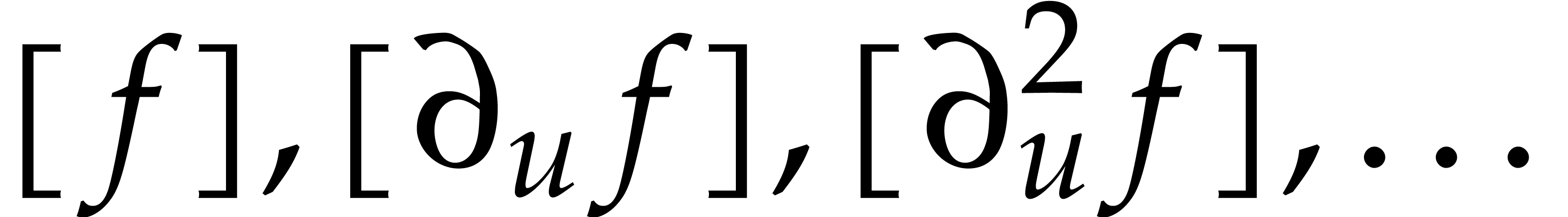

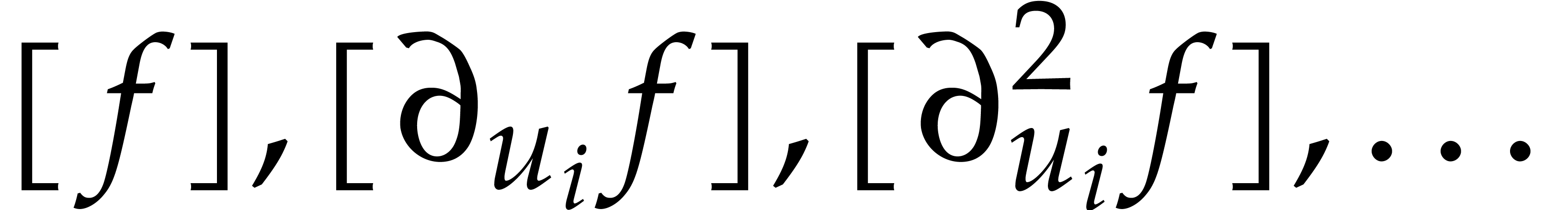

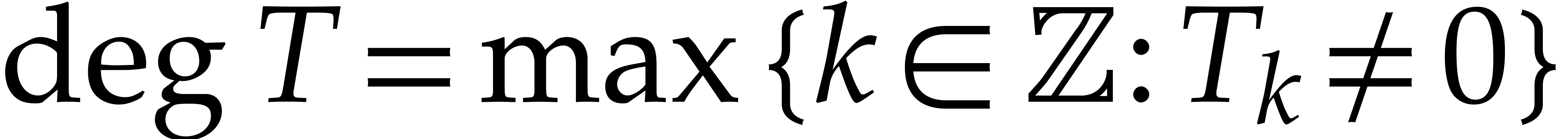

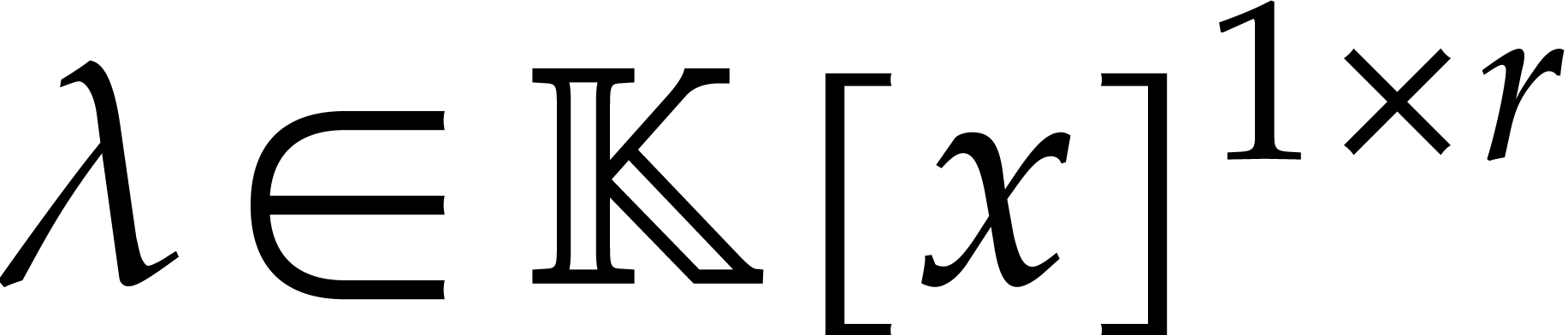

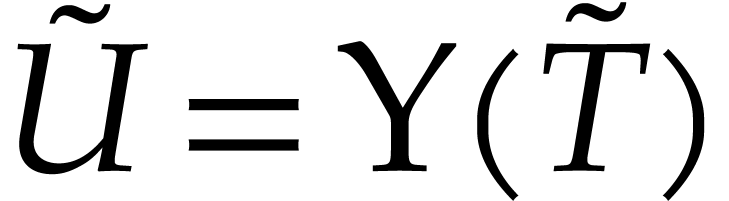

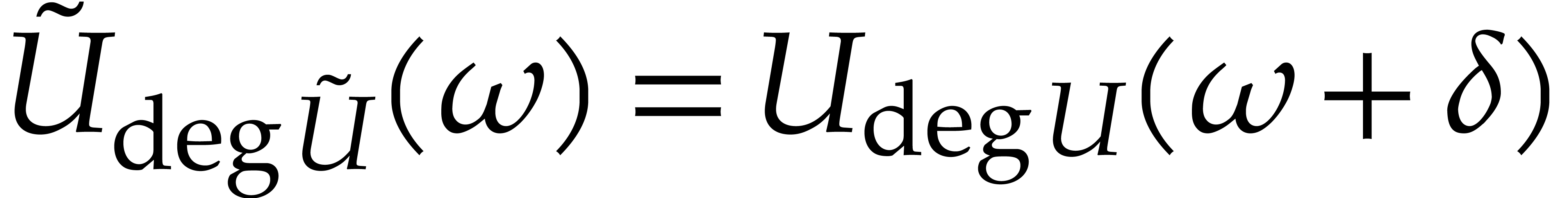

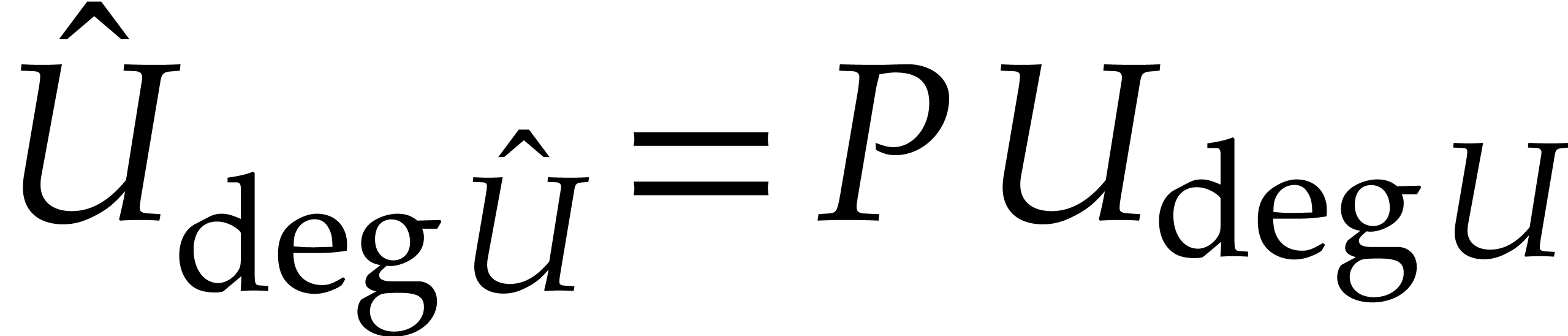

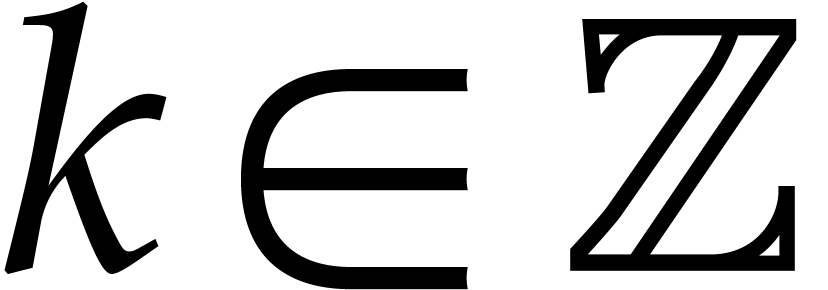

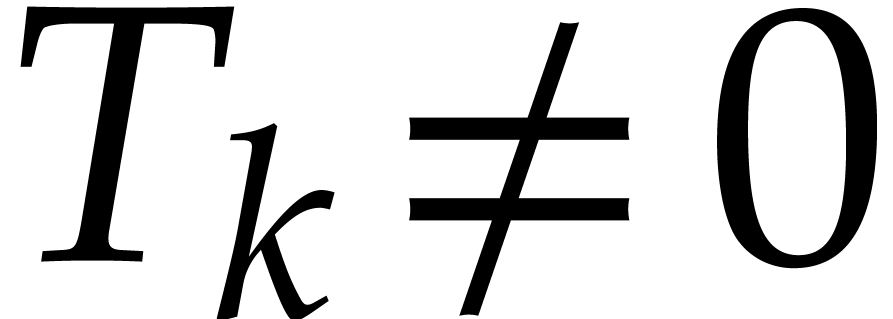

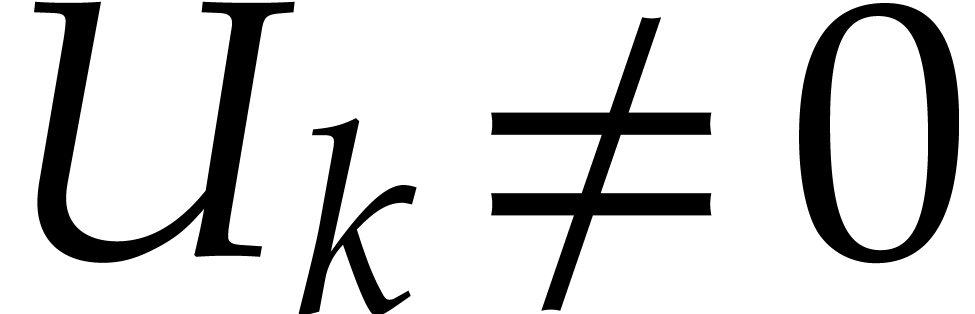

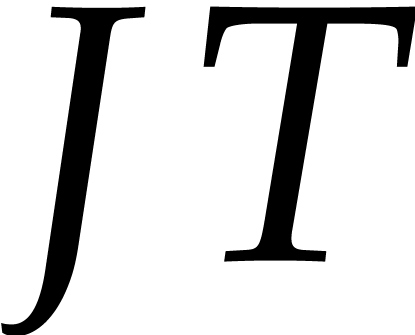

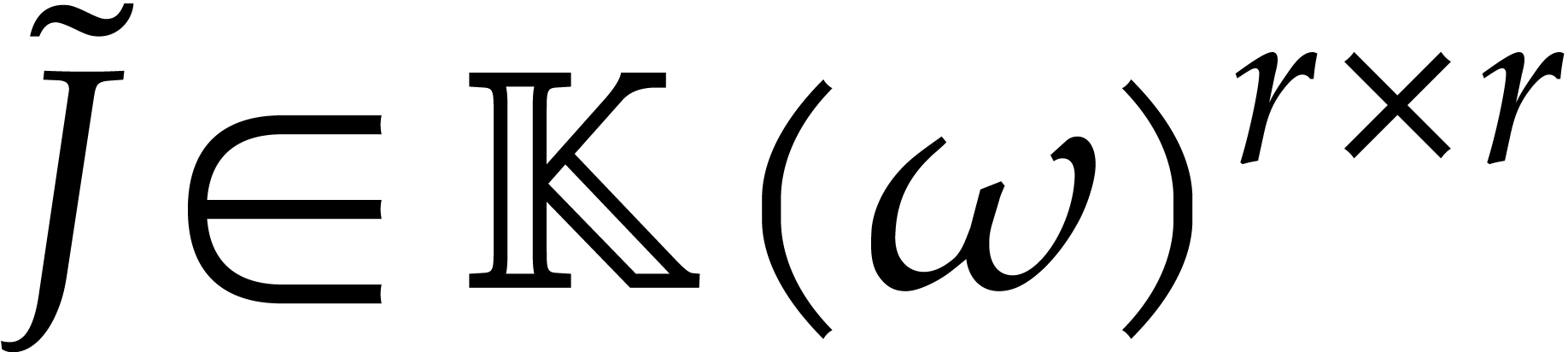

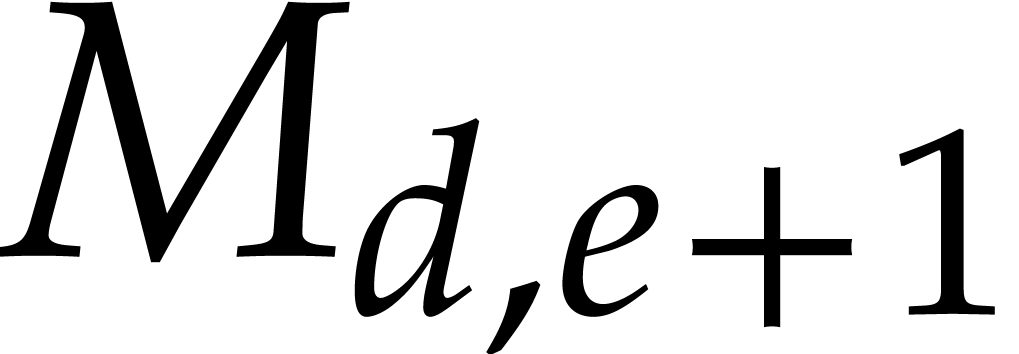

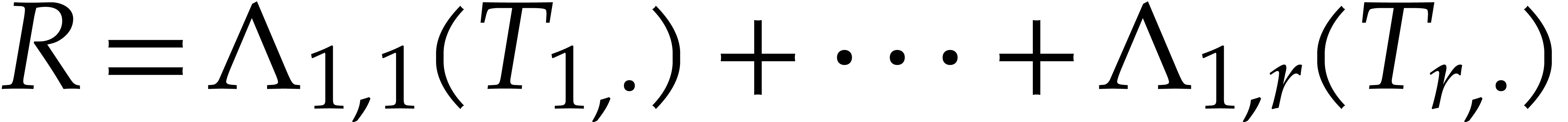

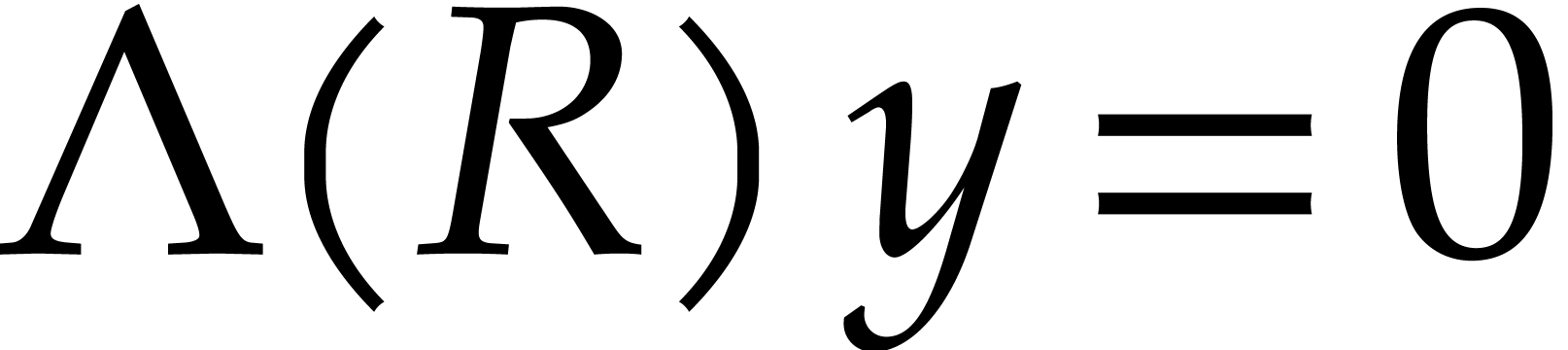

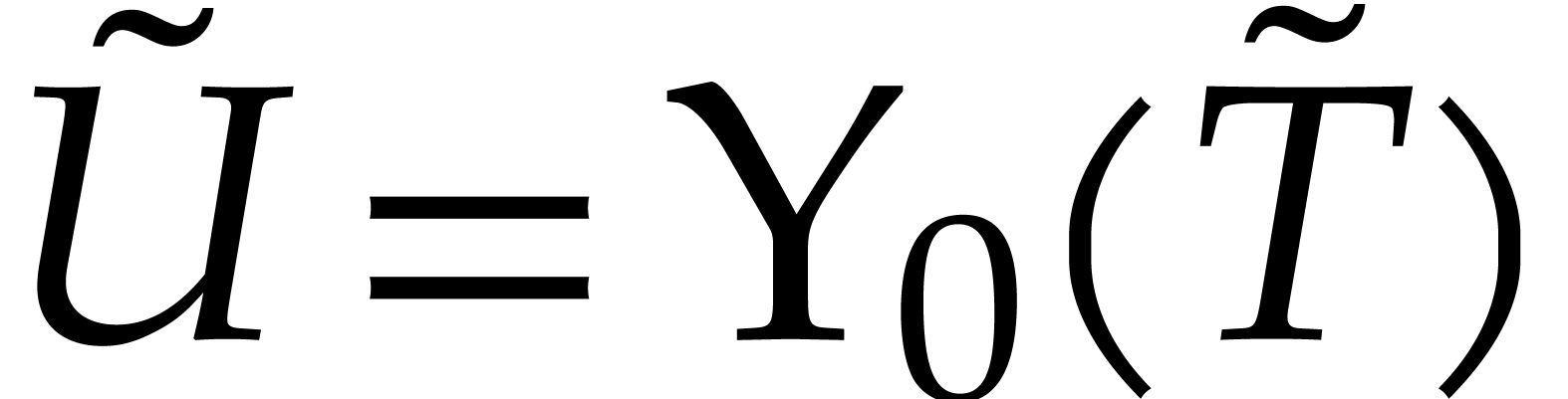

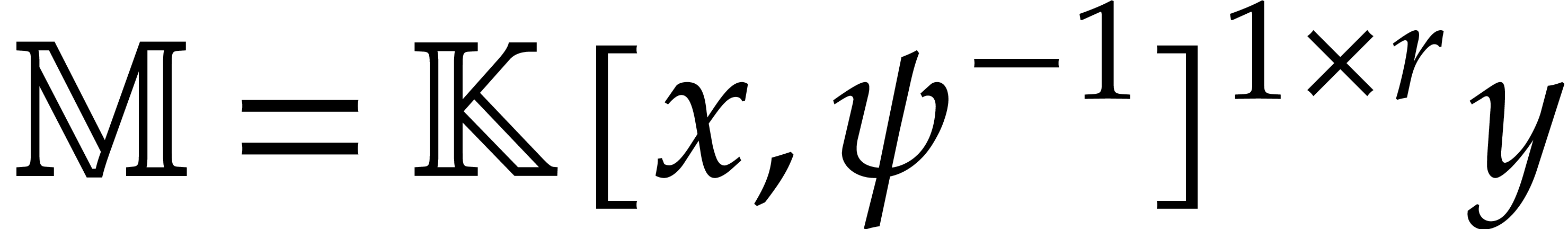

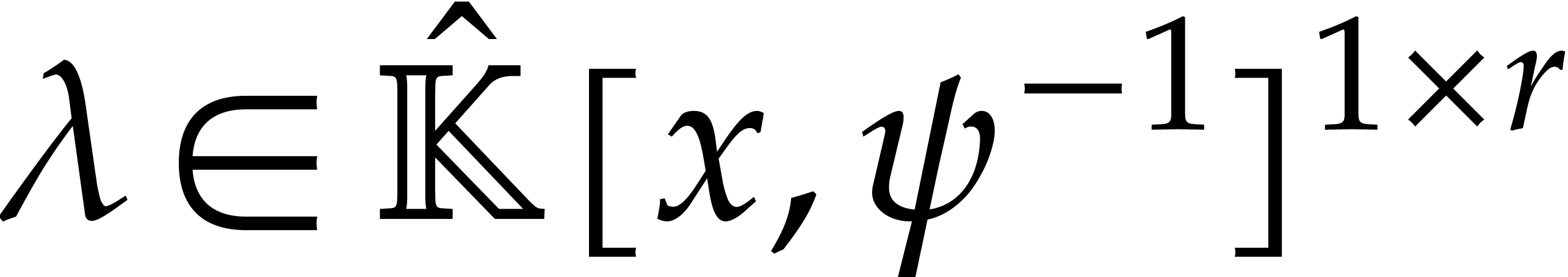

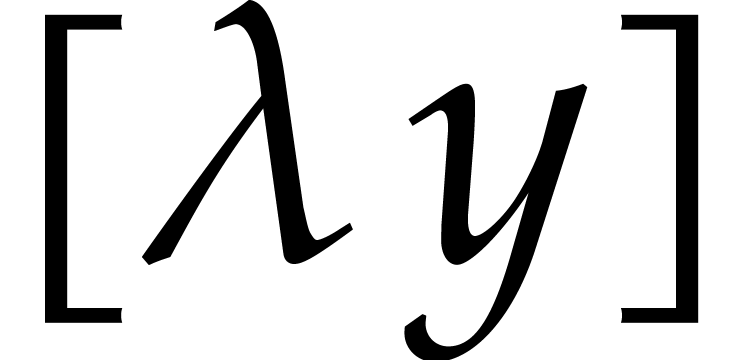

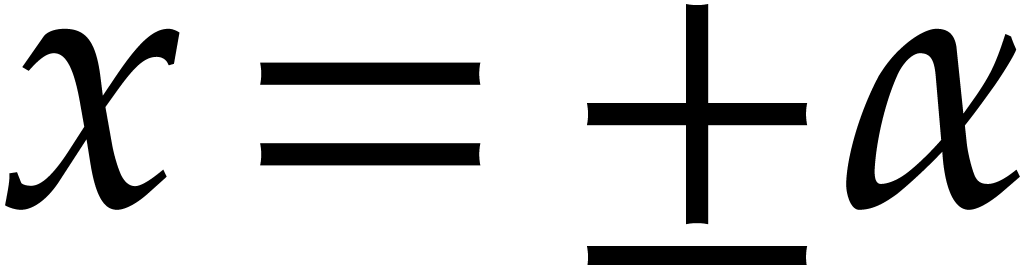

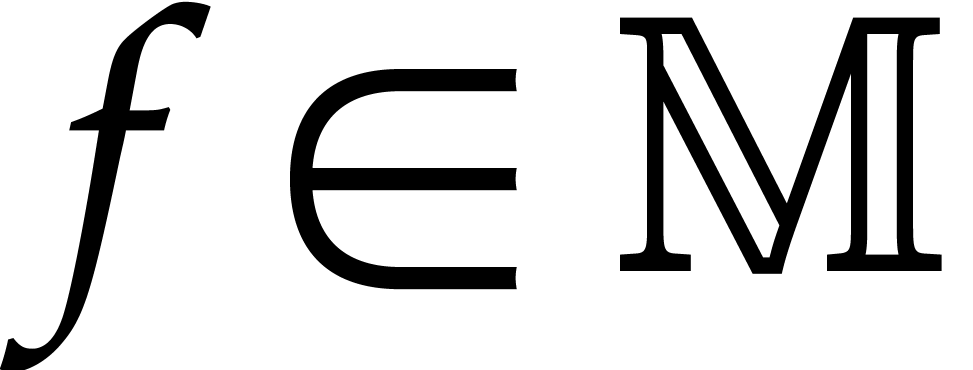

Let  be a formal solution of (1.1)

and consider the

be a formal solution of (1.1)

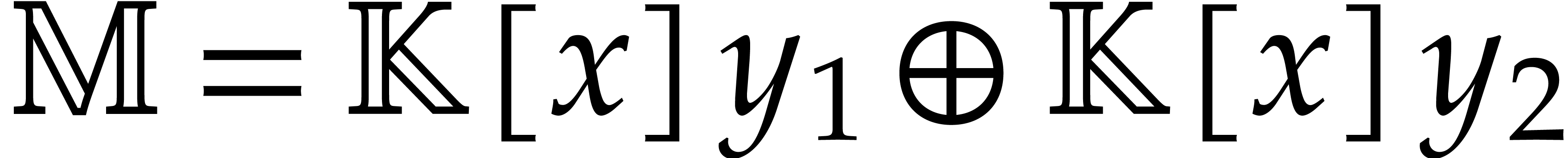

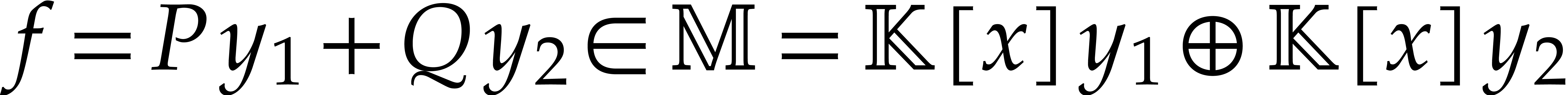

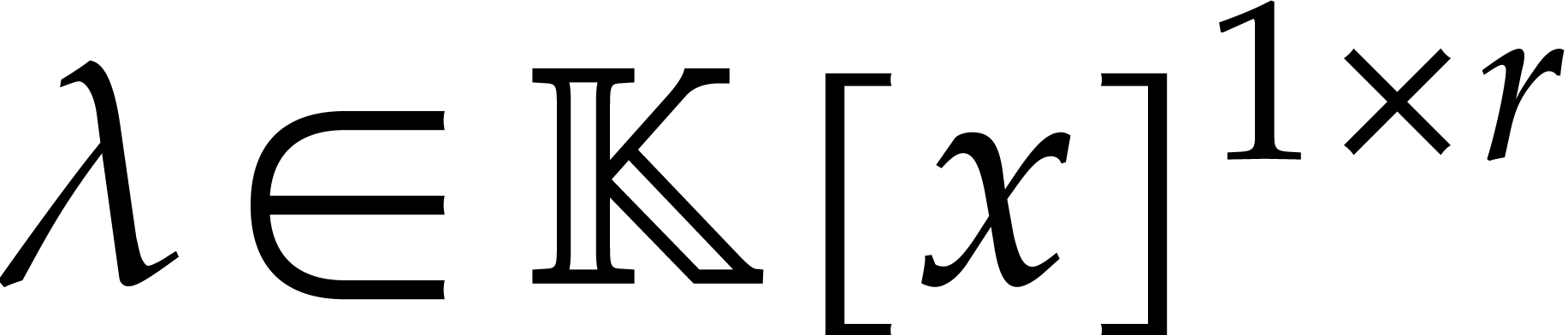

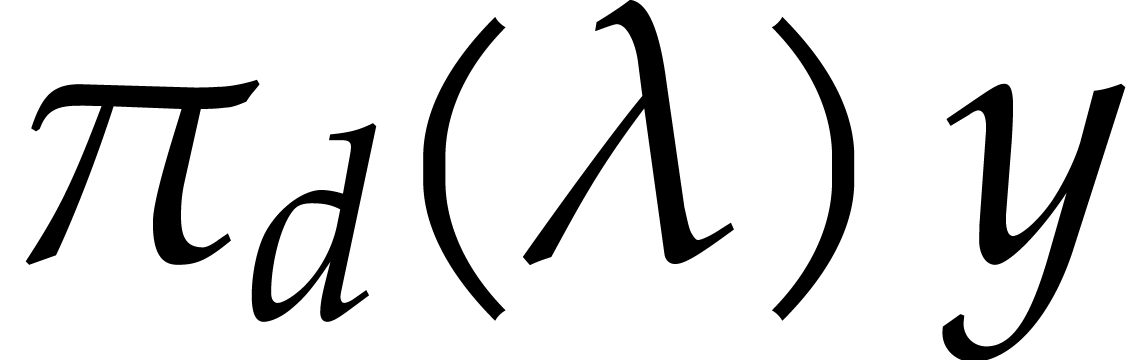

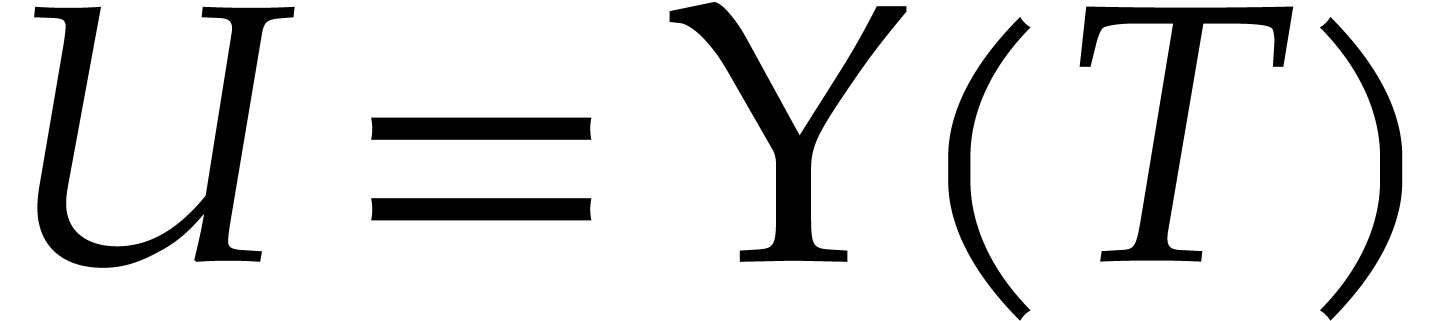

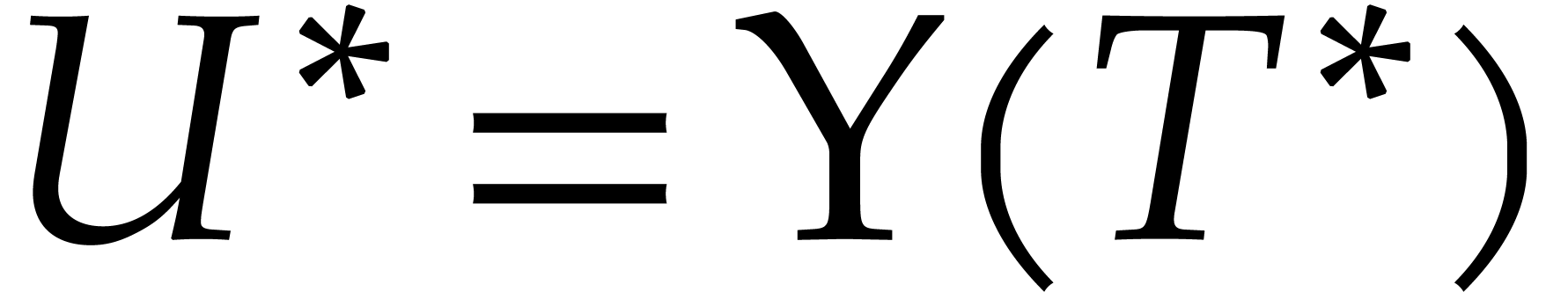

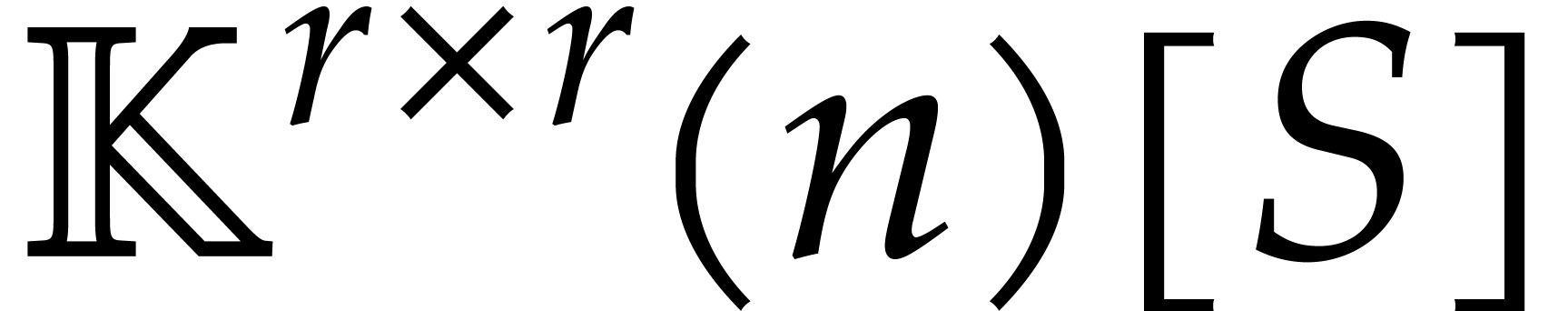

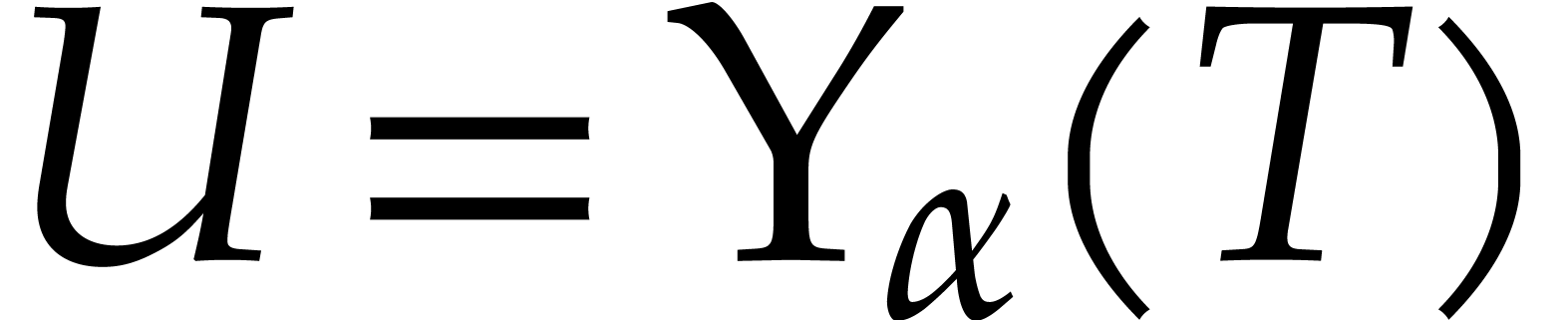

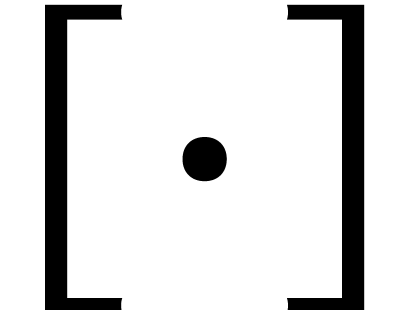

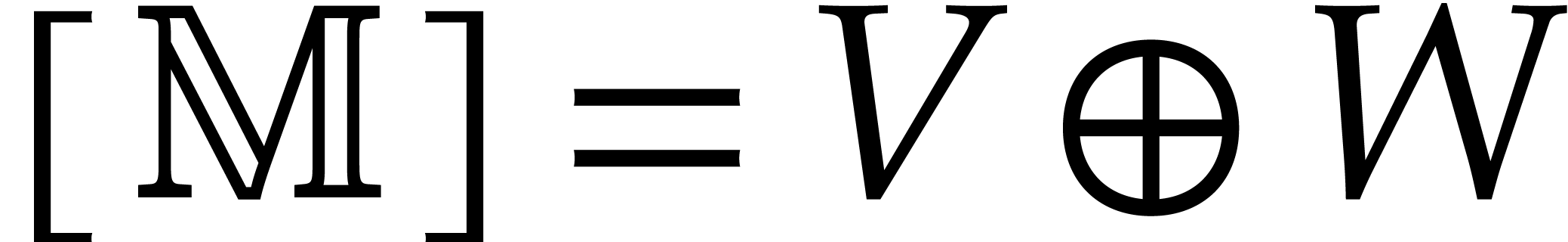

and consider the  -module

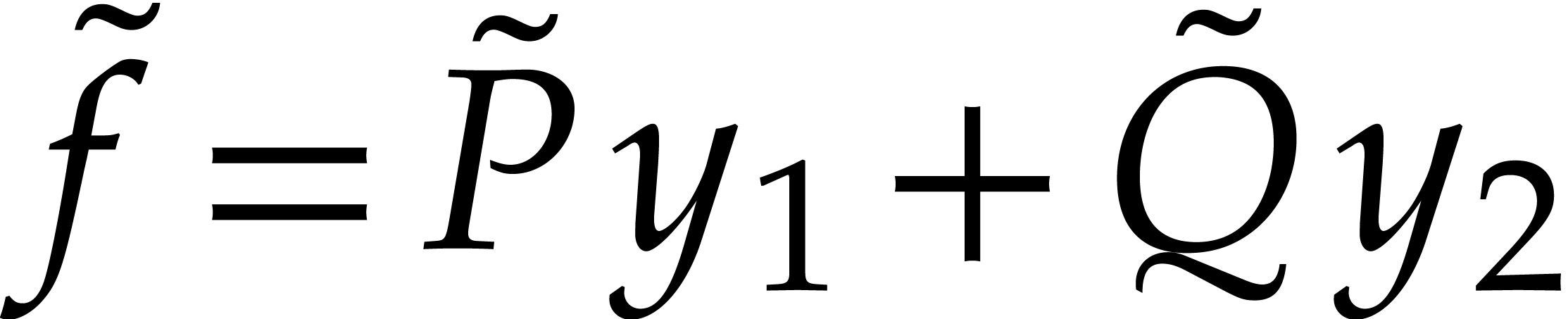

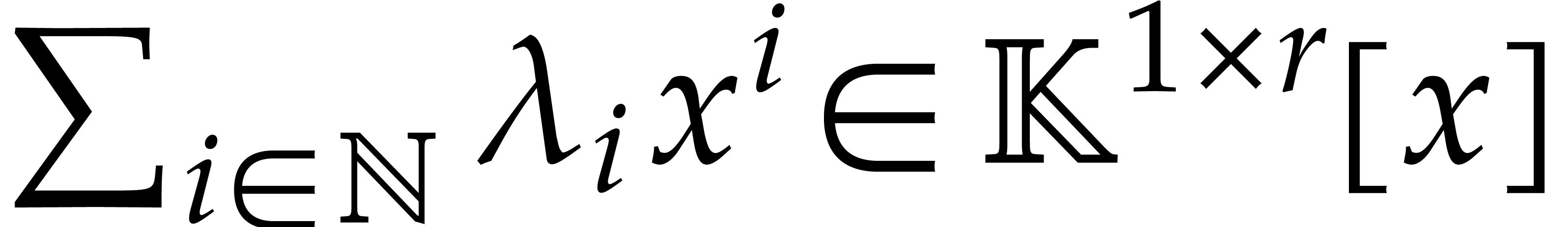

-module  of linear combinations

of linear combinations  where

where

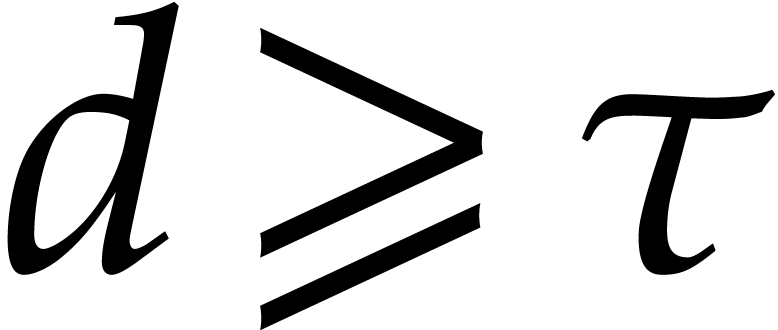

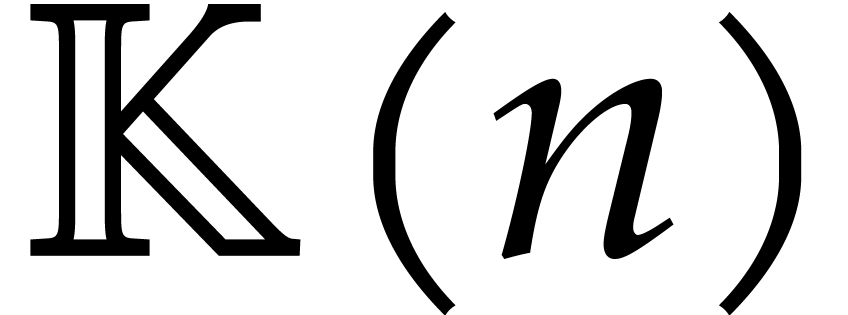

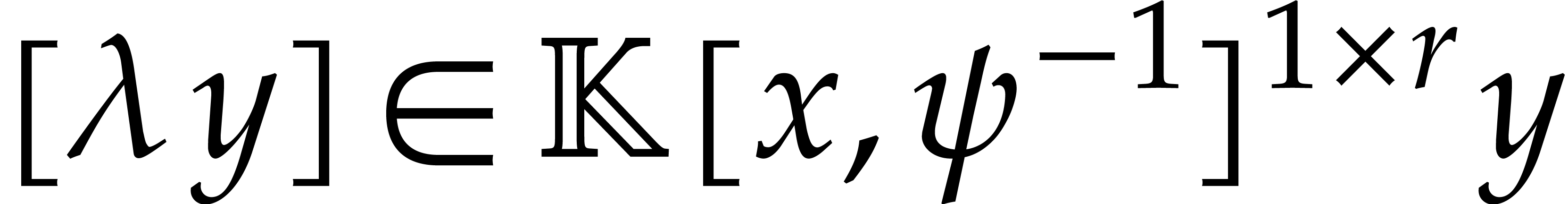

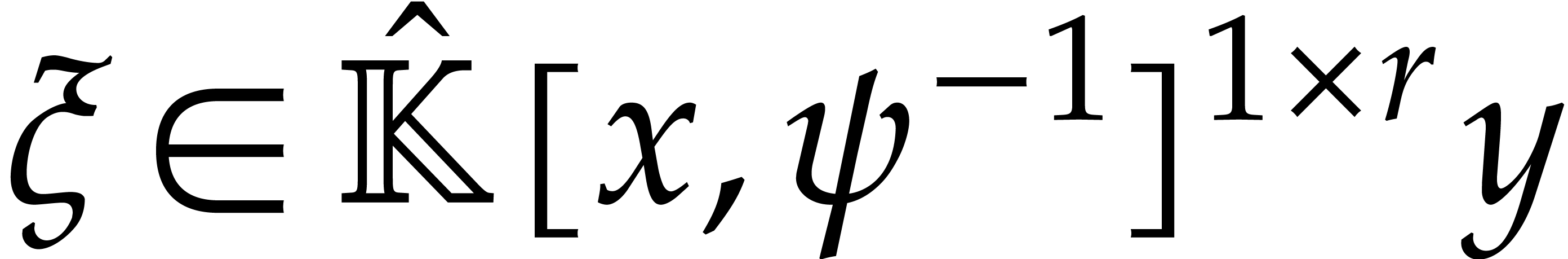

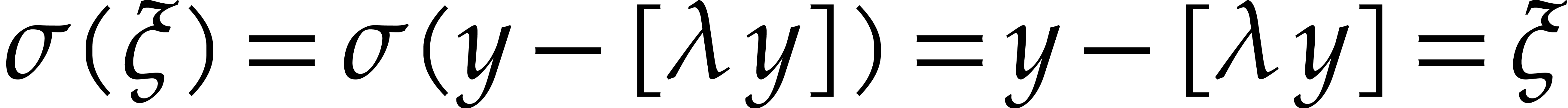

is a row vector. Then

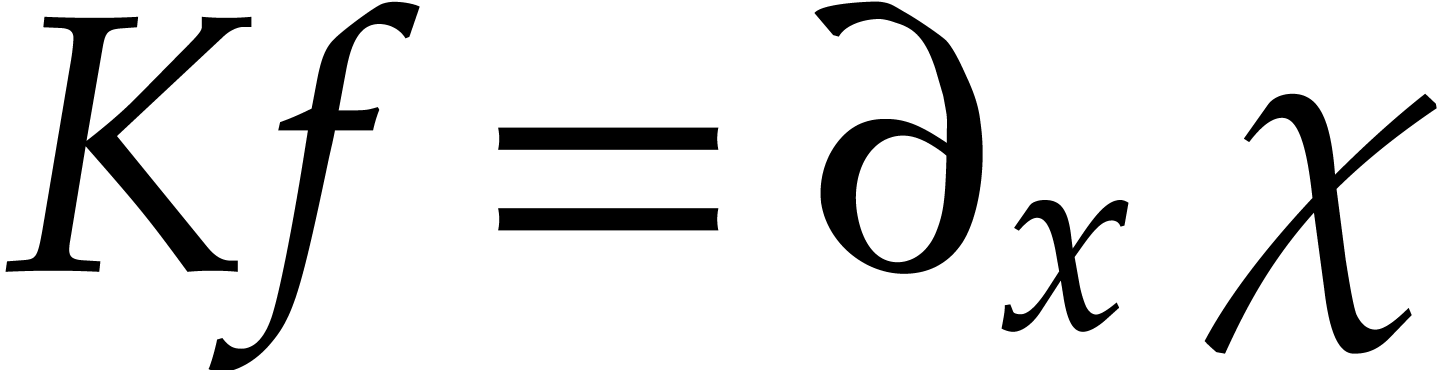

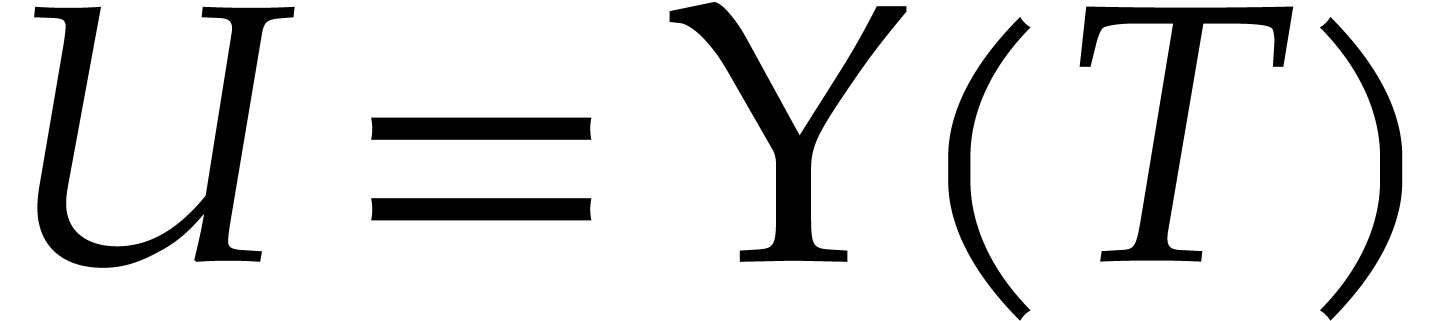

is a row vector. Then  has the natural structure of a D-module for the derivation

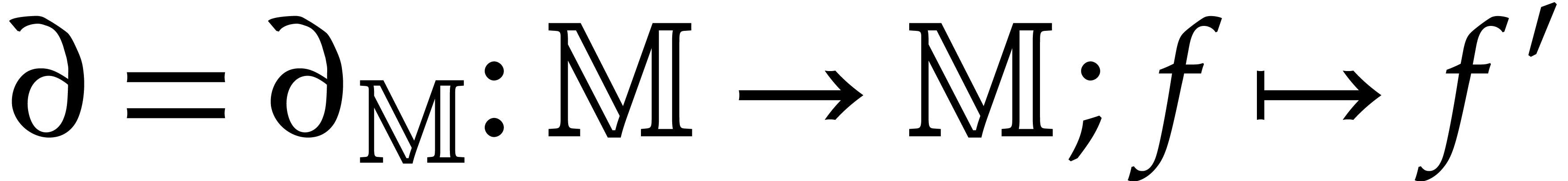

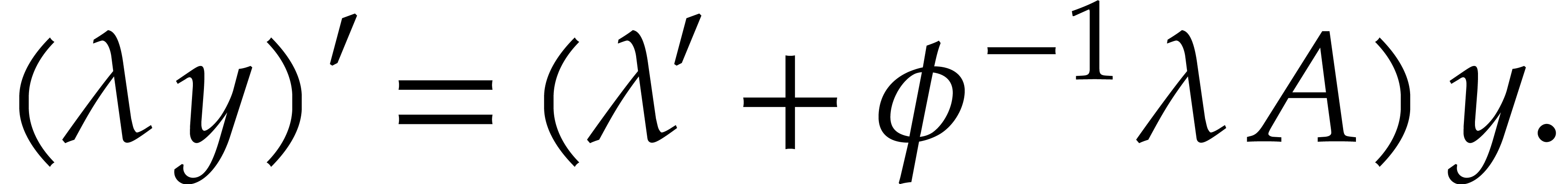

has the natural structure of a D-module for the derivation  defined by

defined by

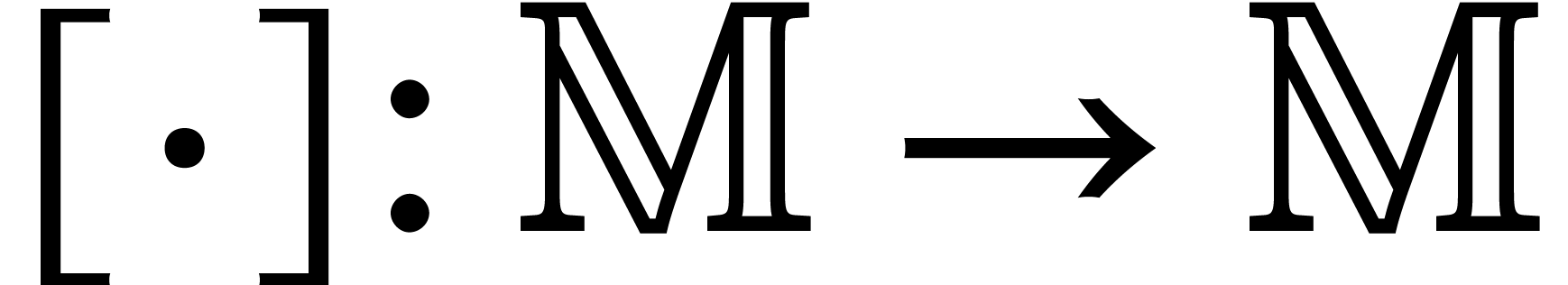

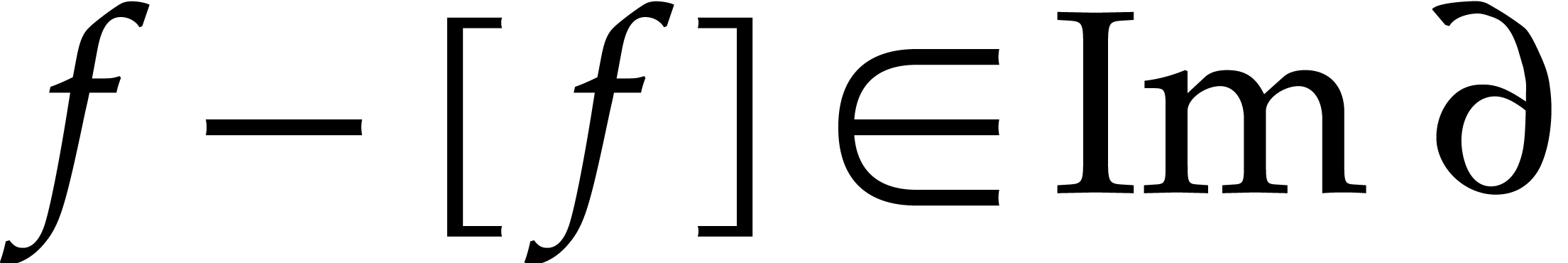

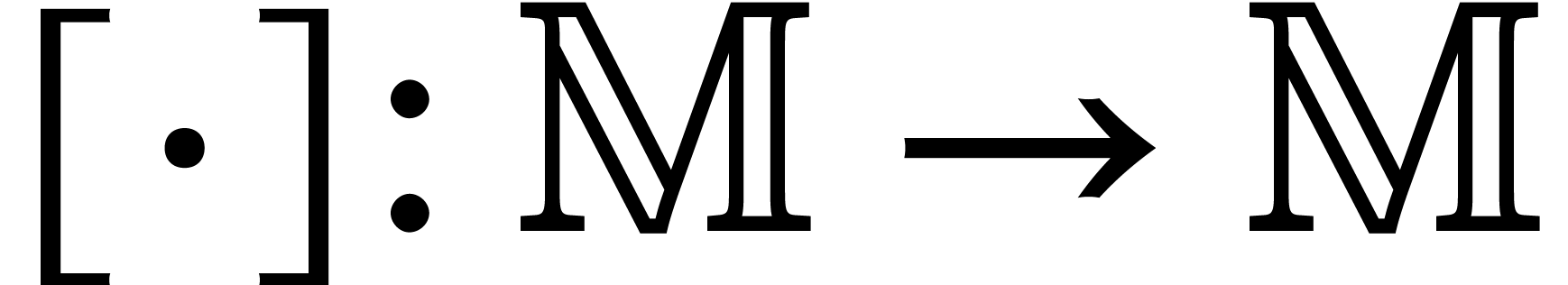

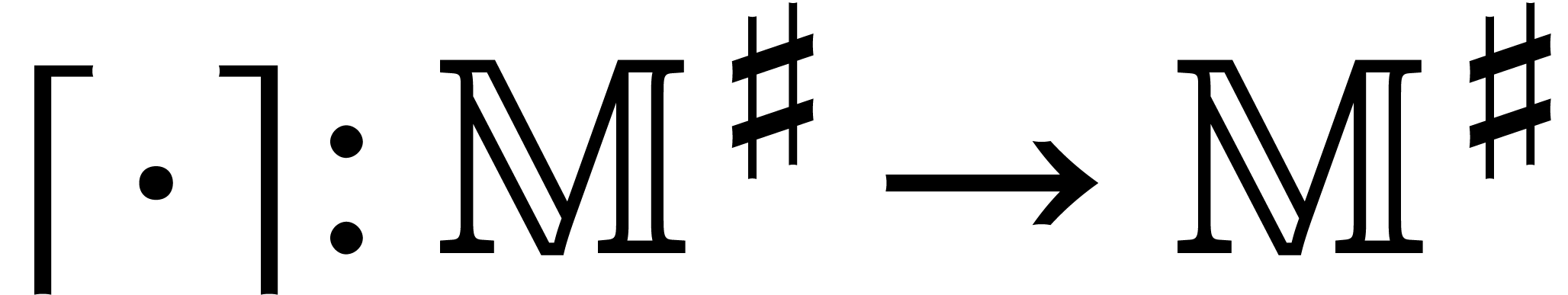

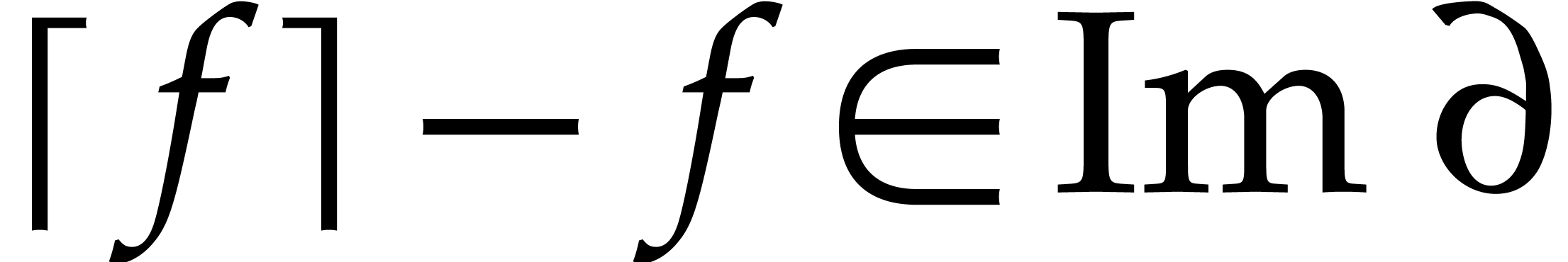

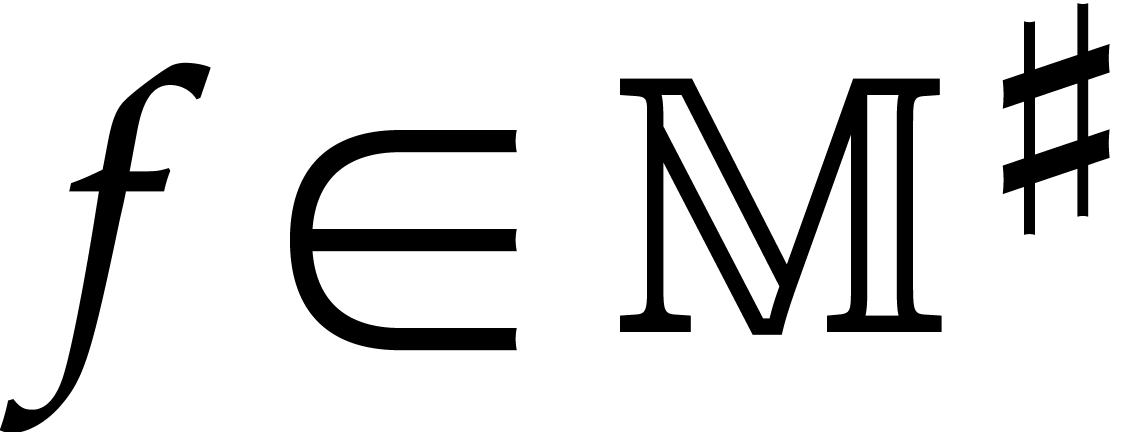

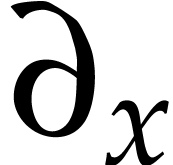

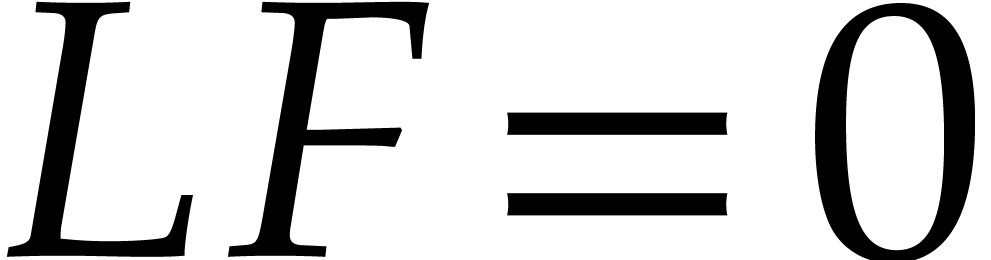

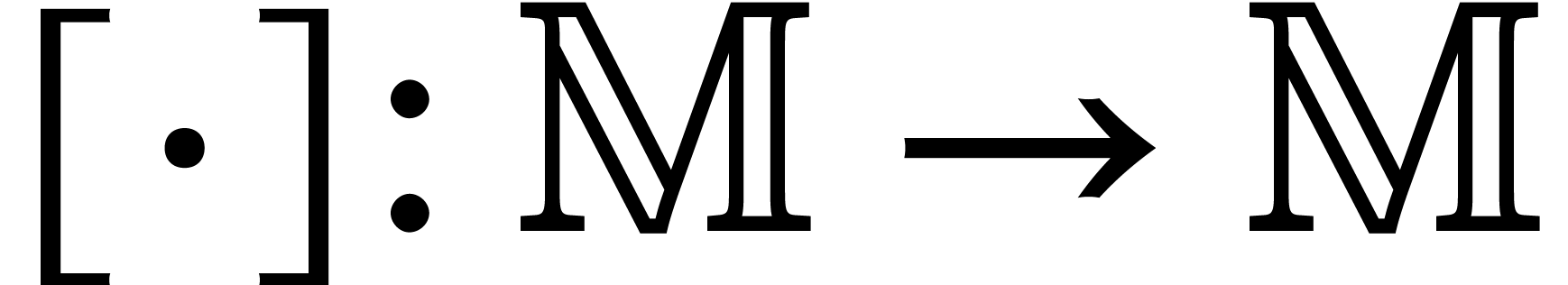

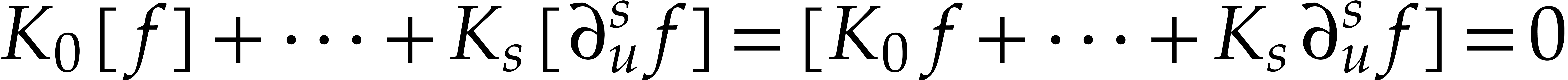

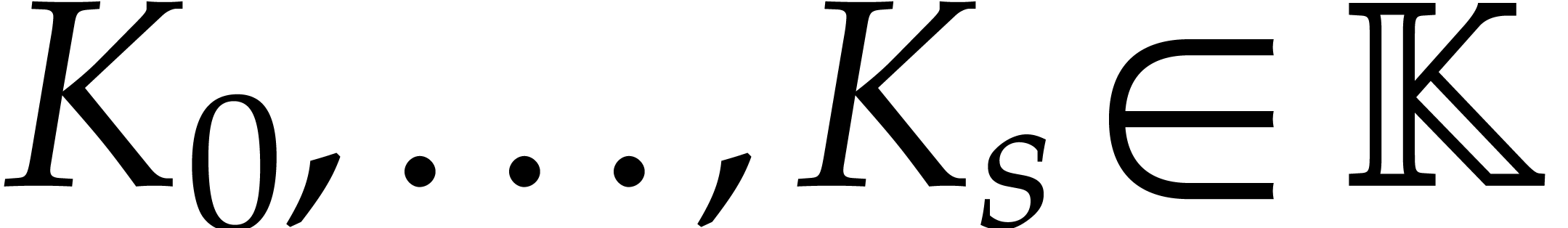

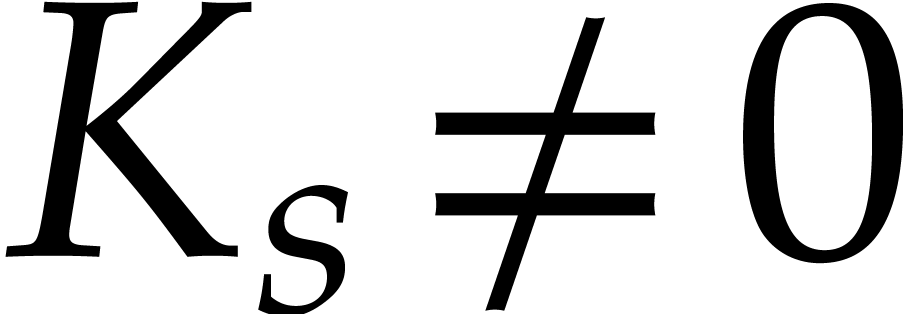

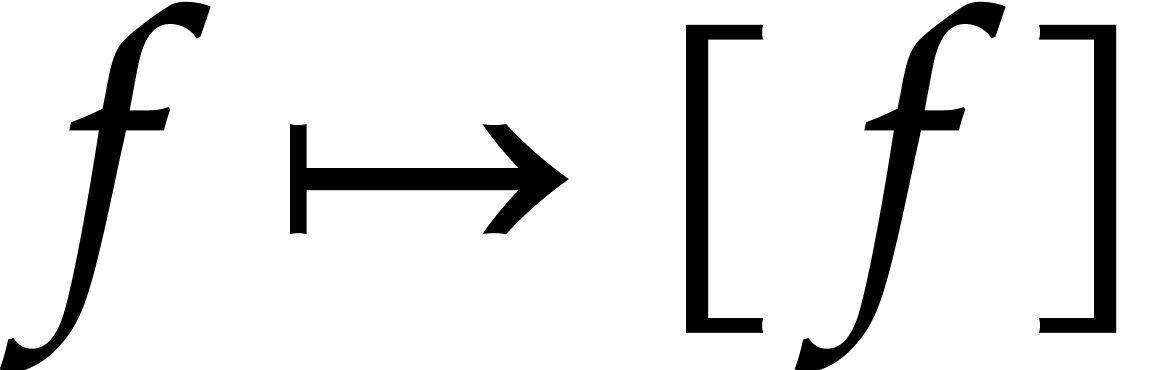

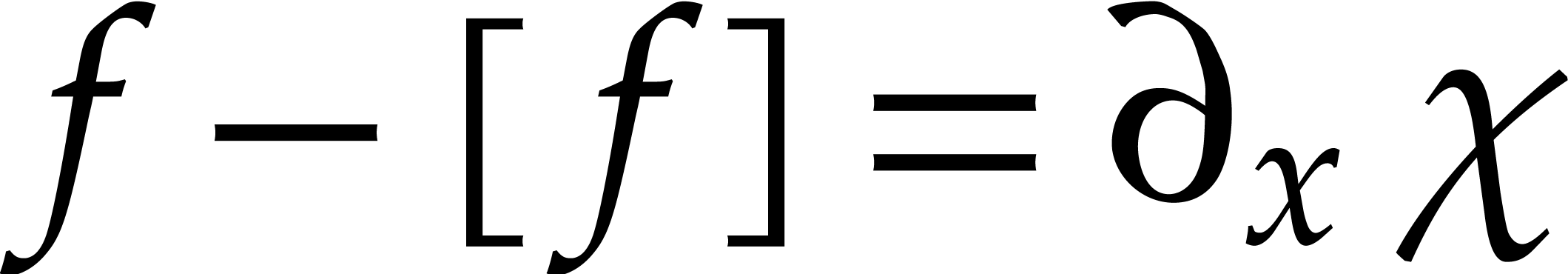

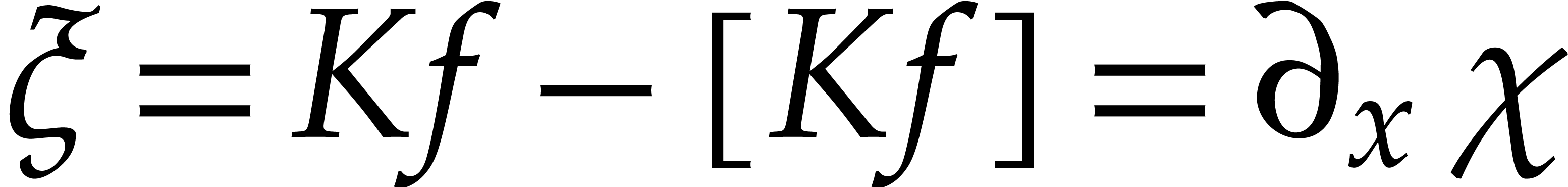

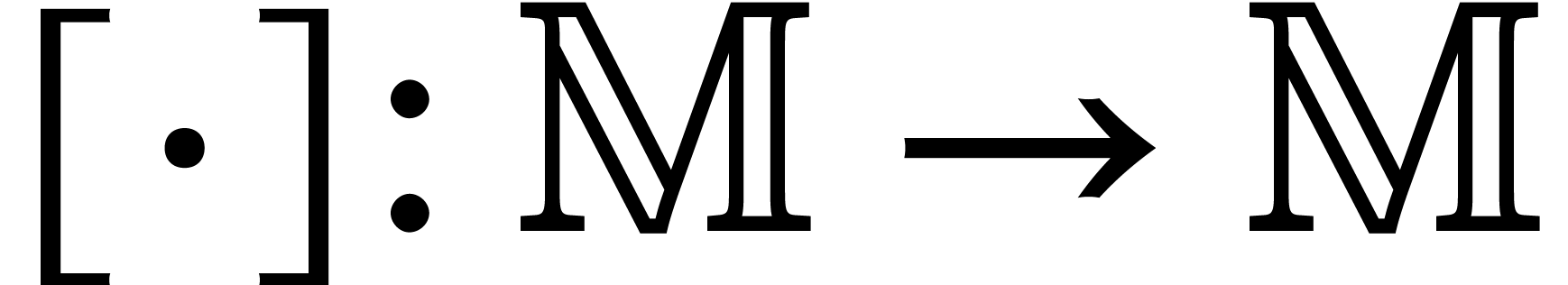

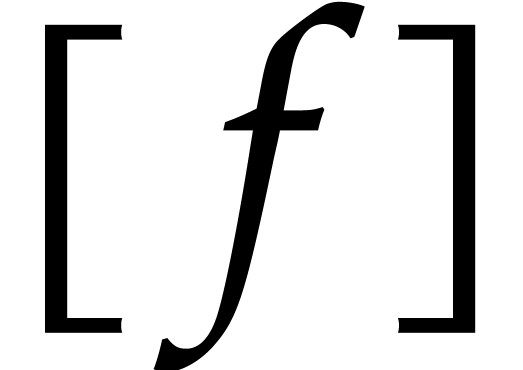

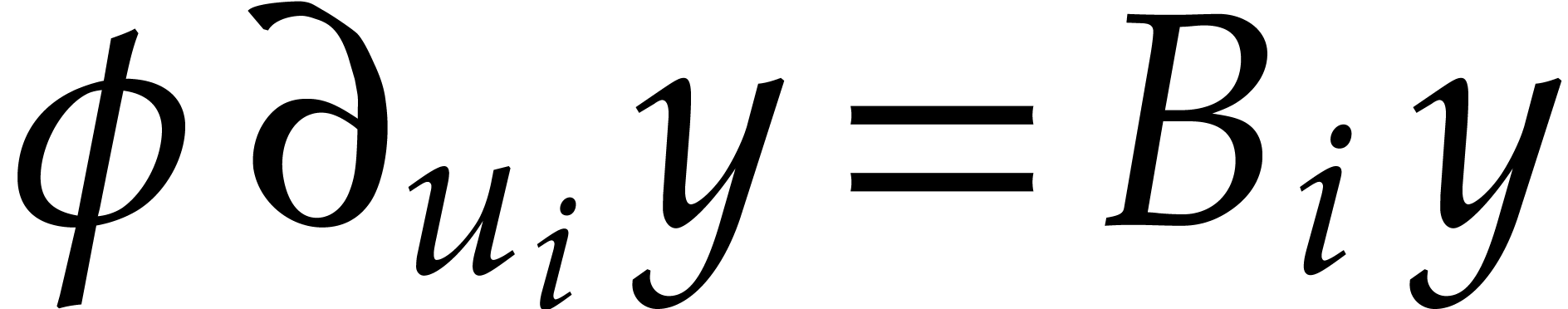

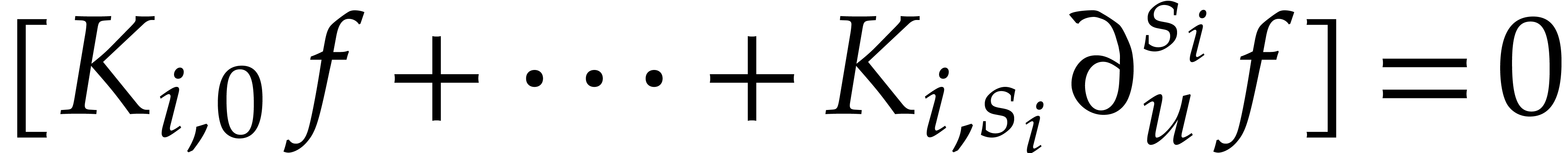

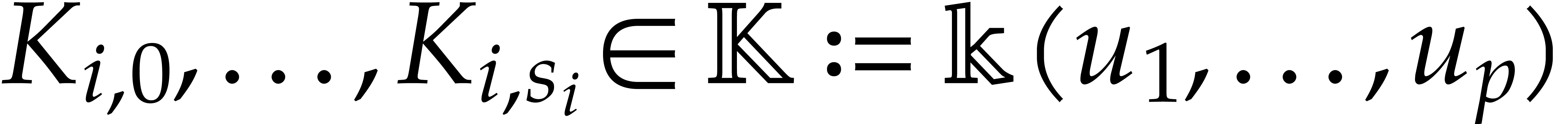

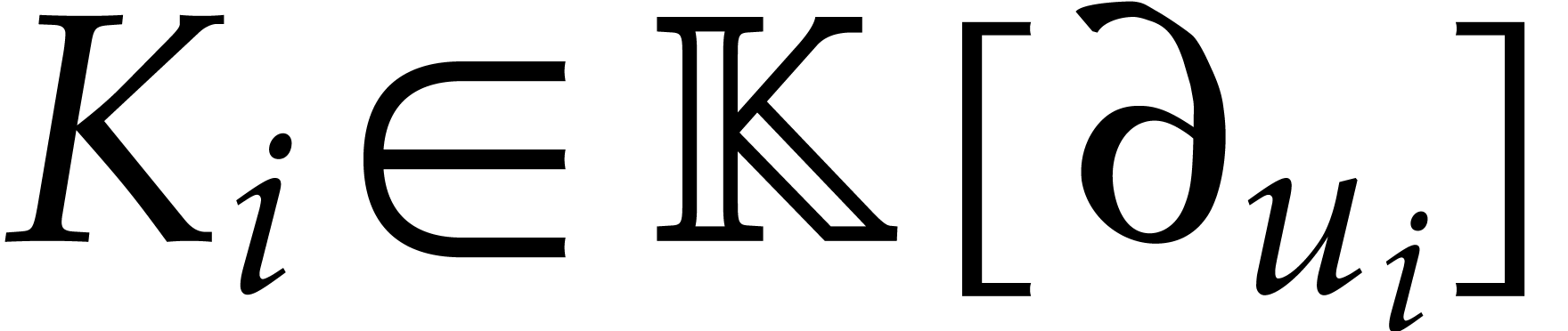

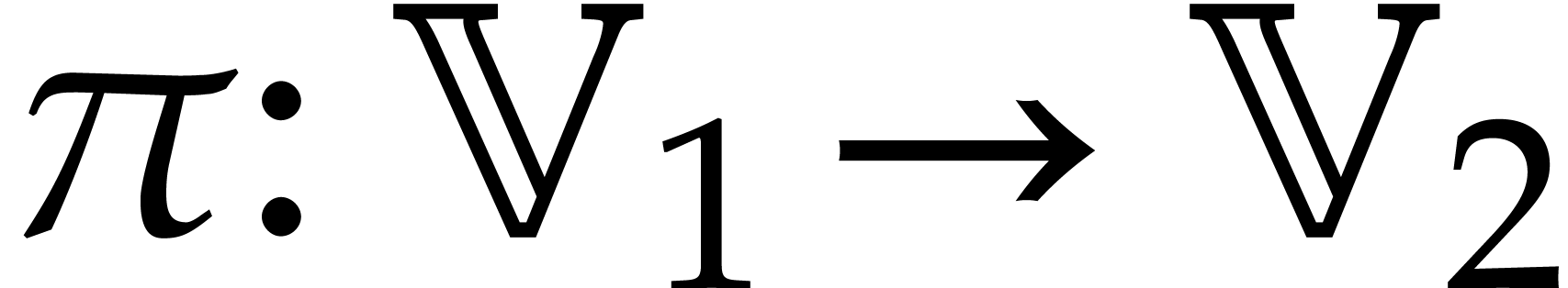

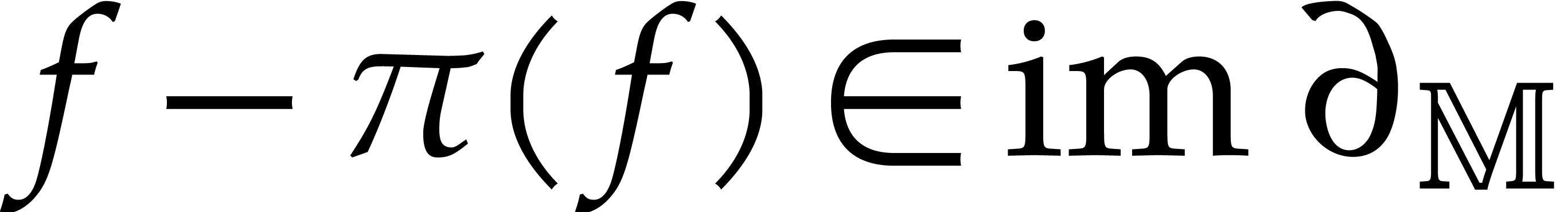

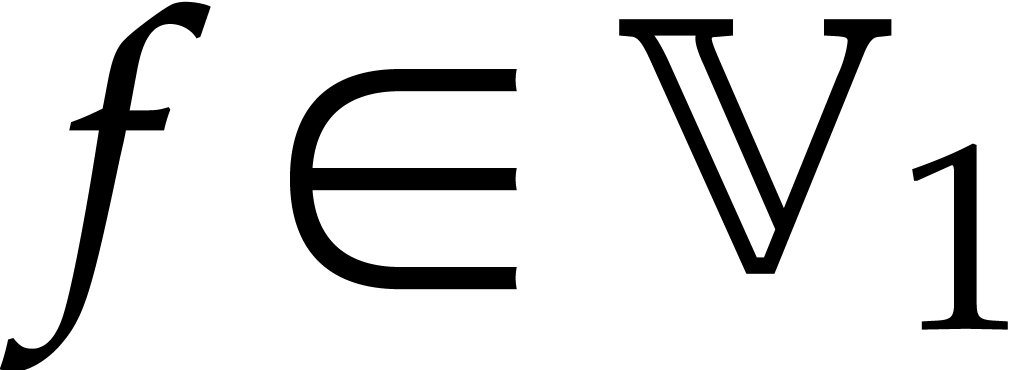

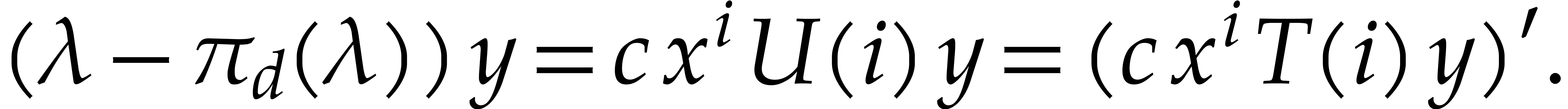

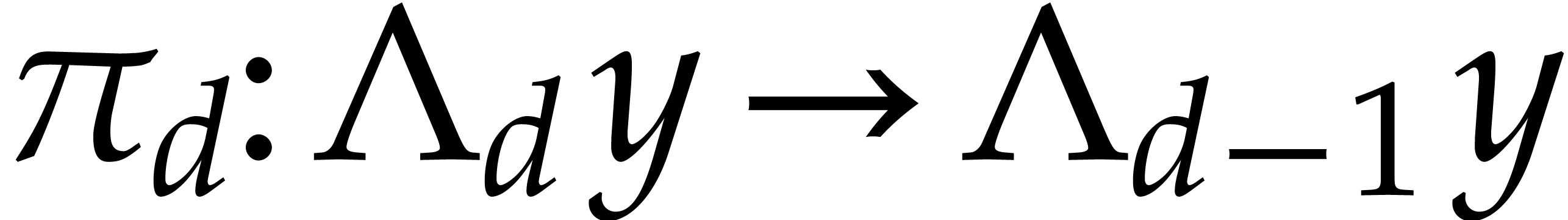

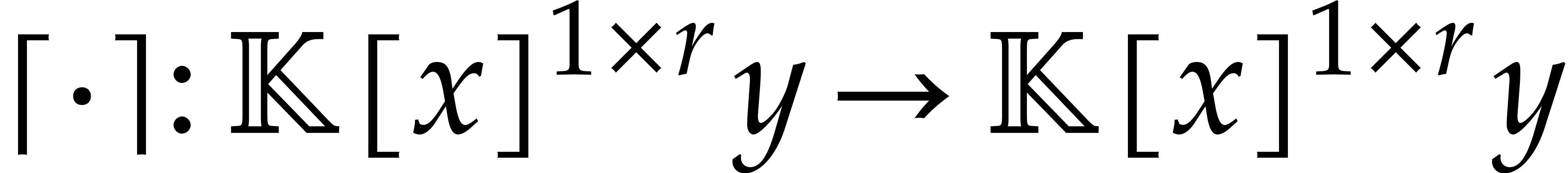

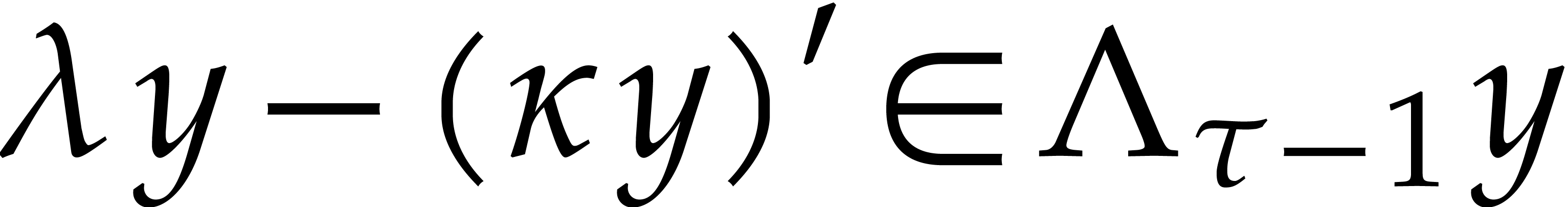

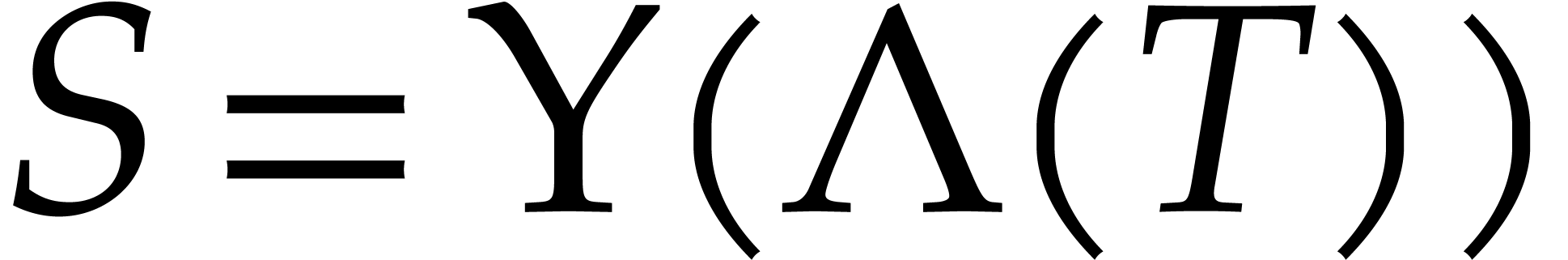

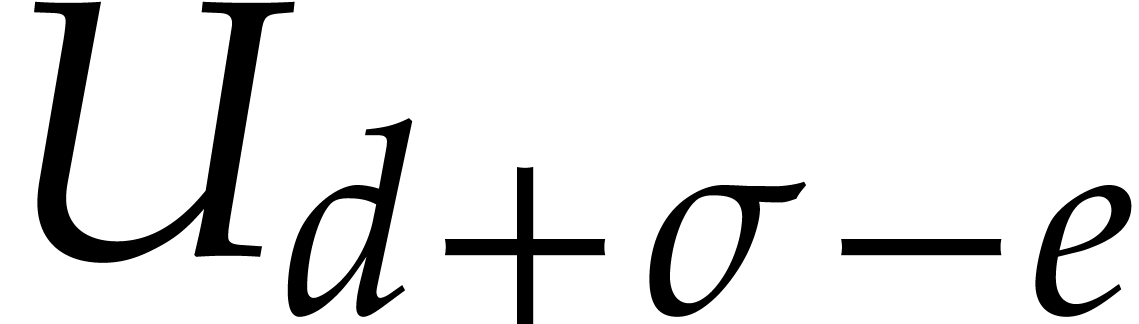

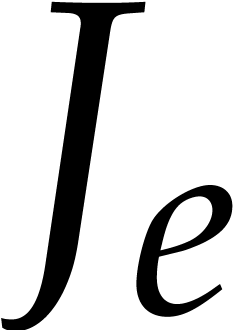

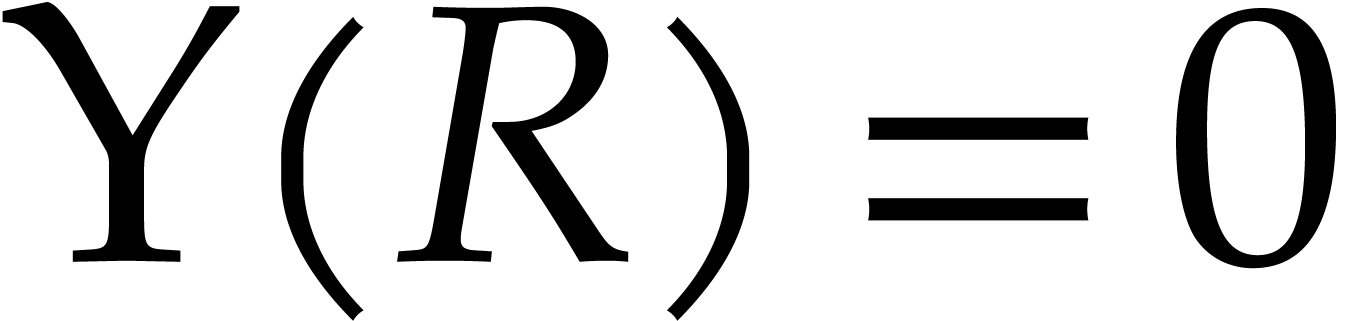

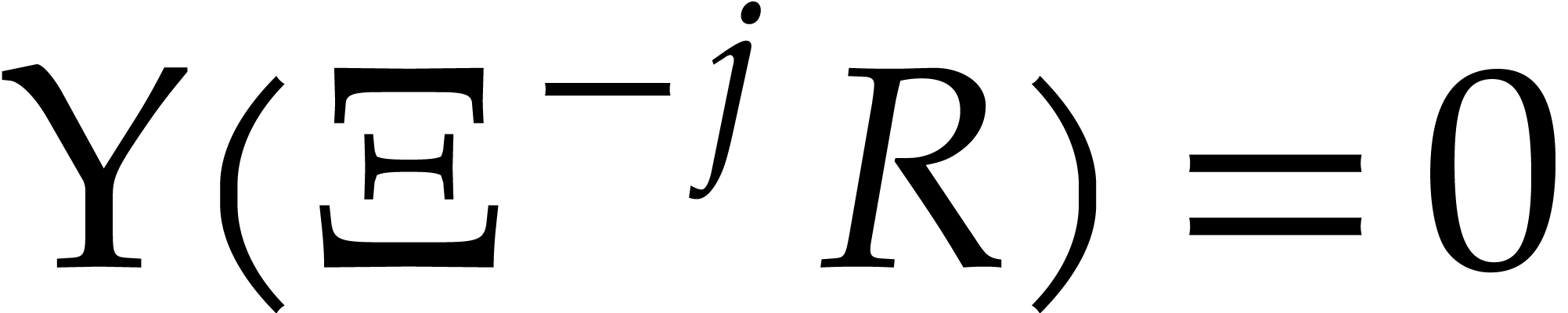

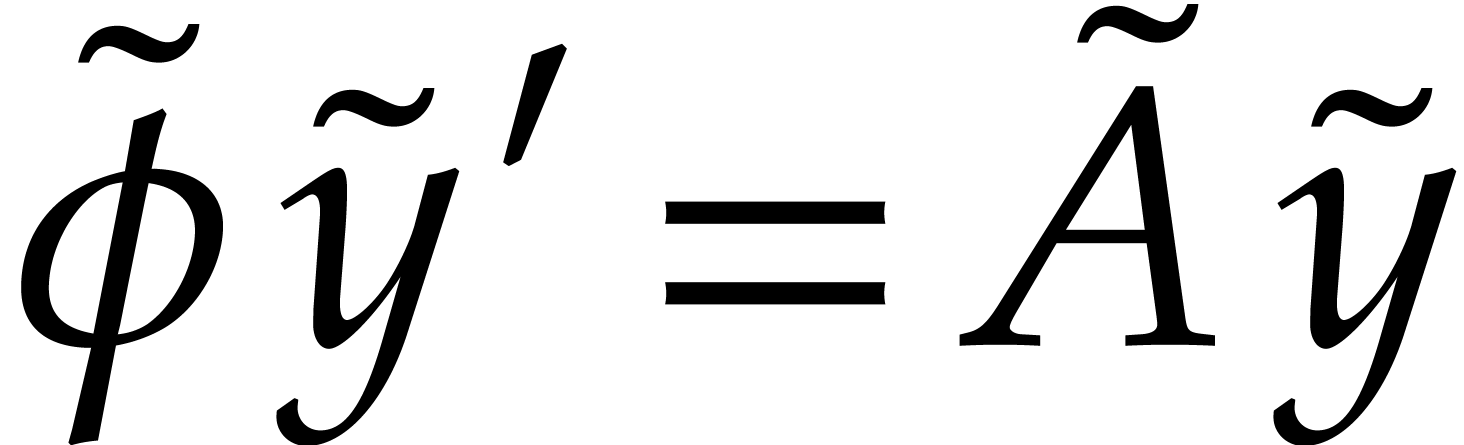

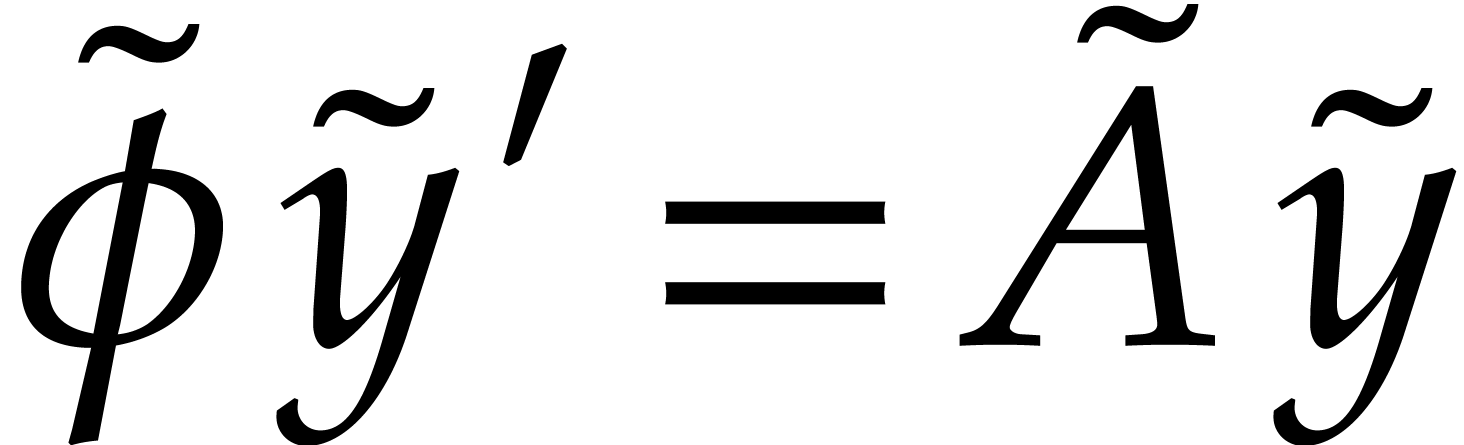

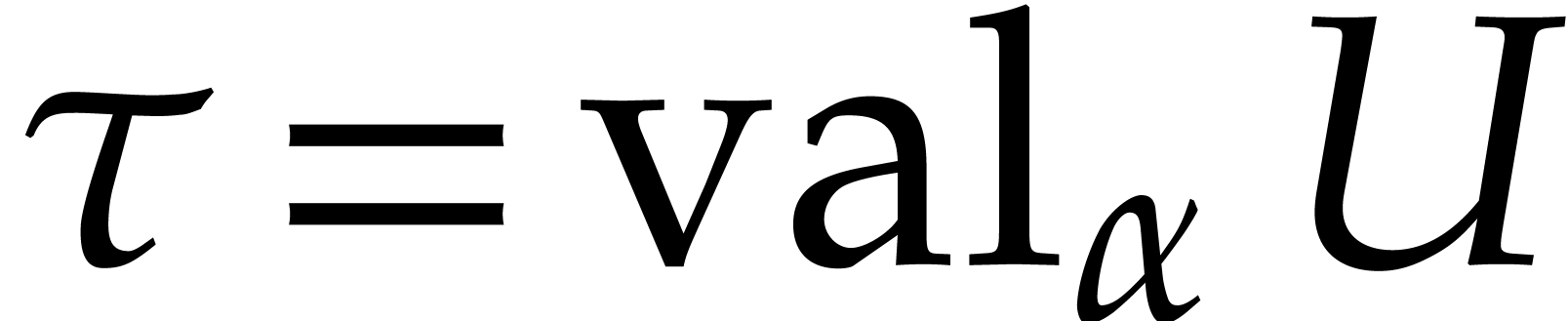

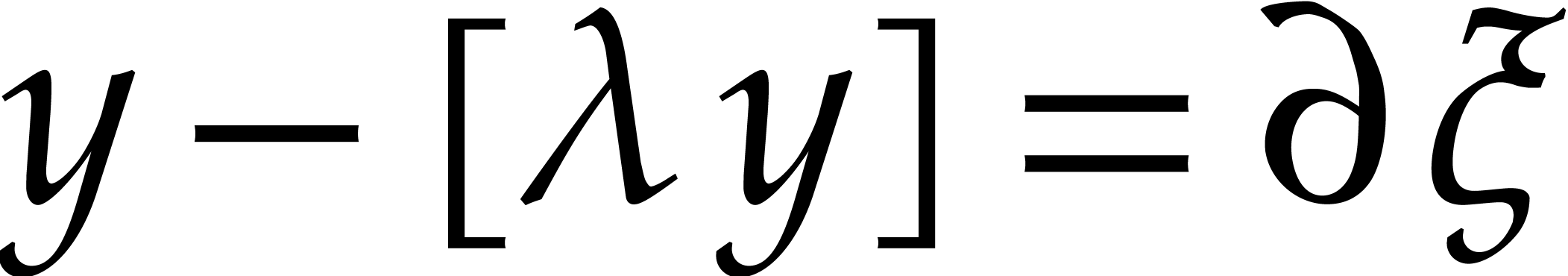

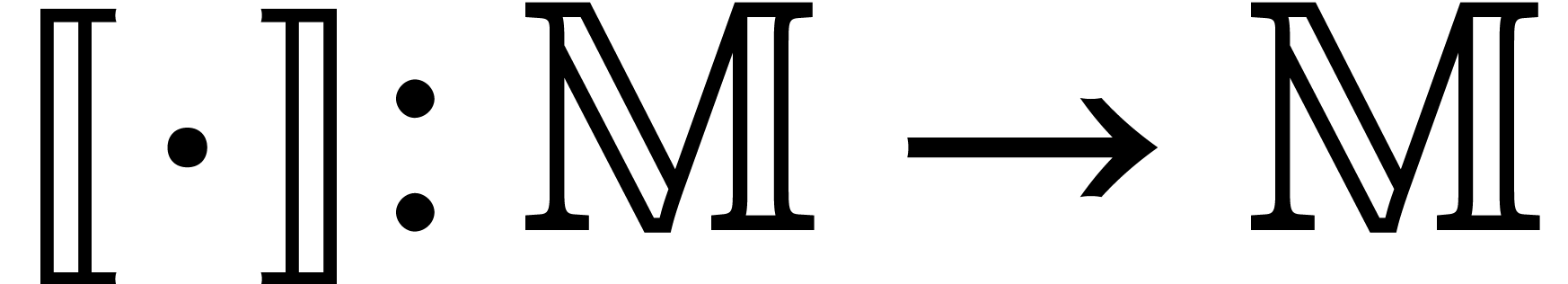

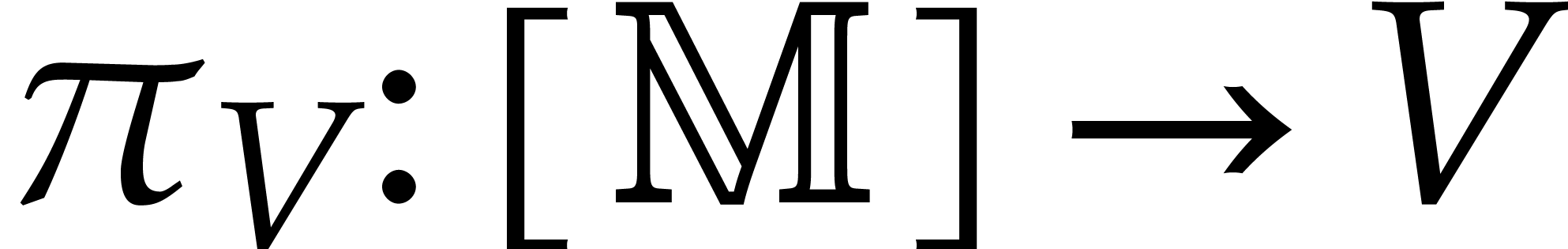

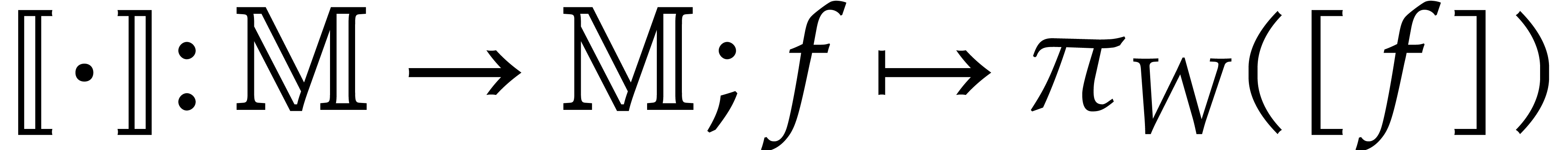

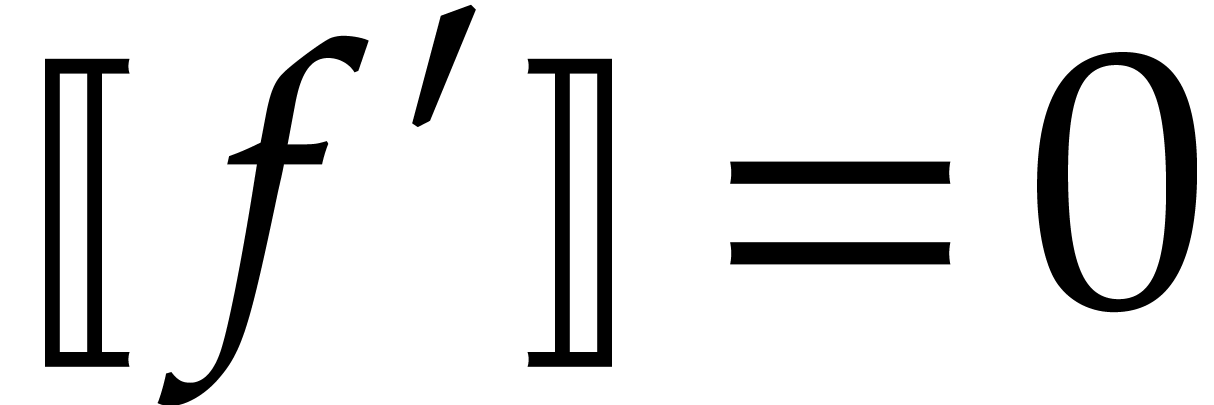

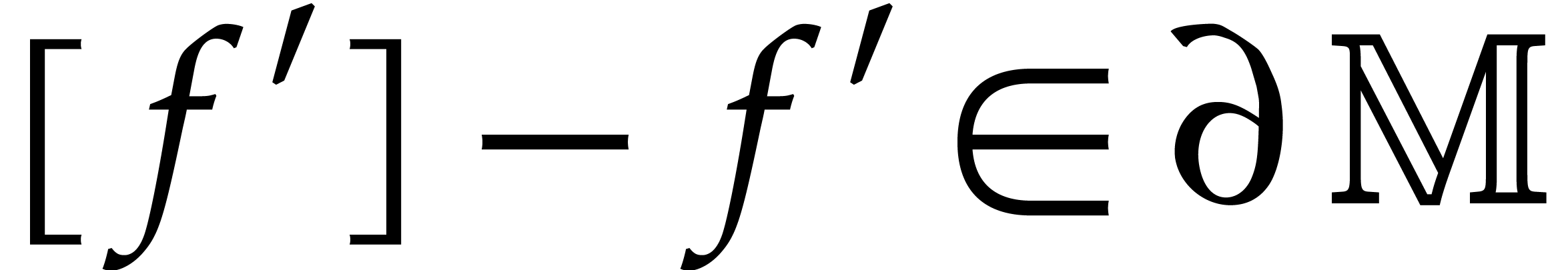

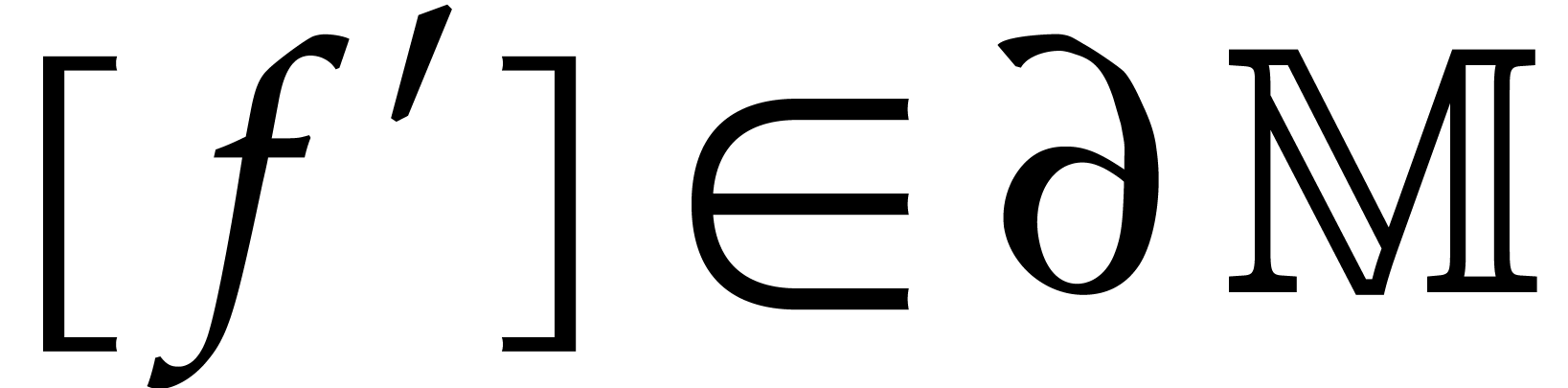

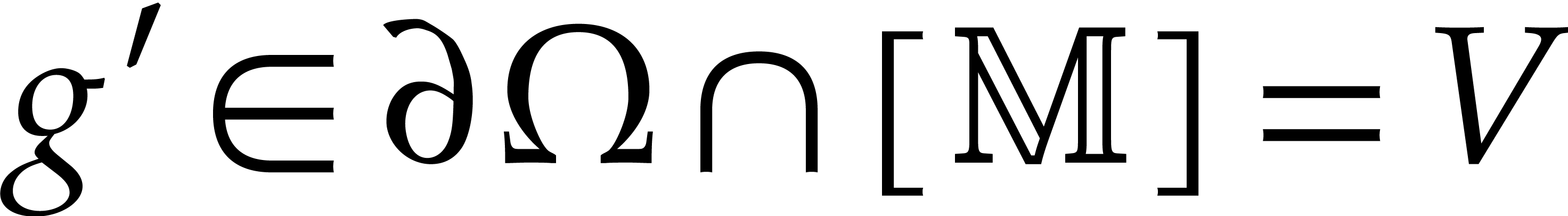

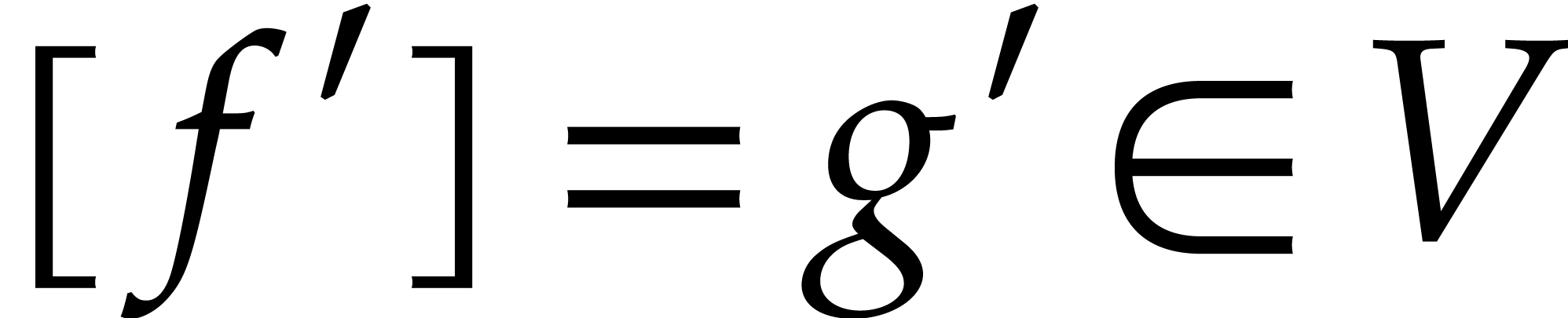

A  -linear mapping

-linear mapping  is said to be a reduction for (1.1)

if

is said to be a reduction for (1.1)

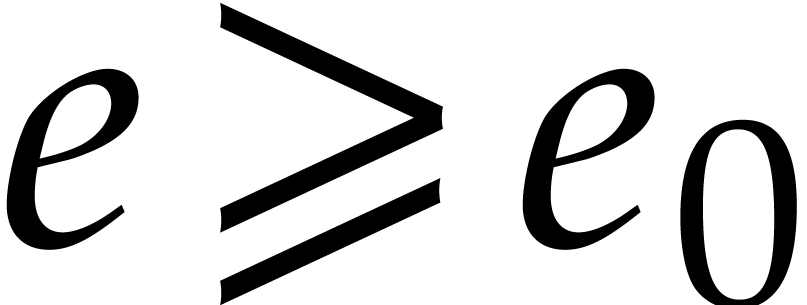

if  for all

for all  .

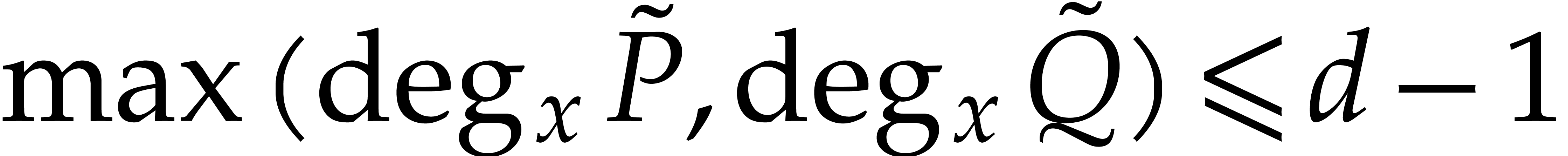

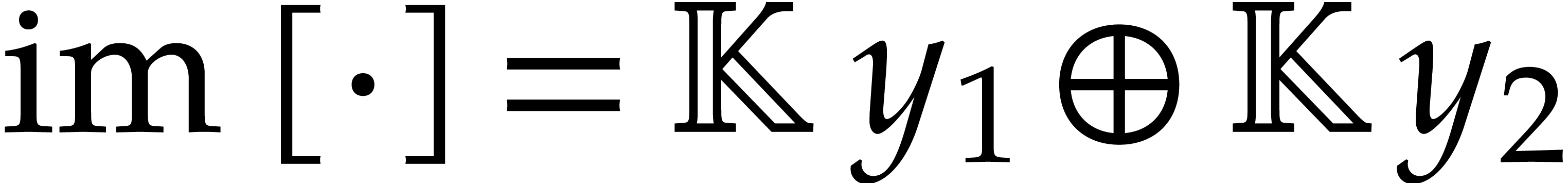

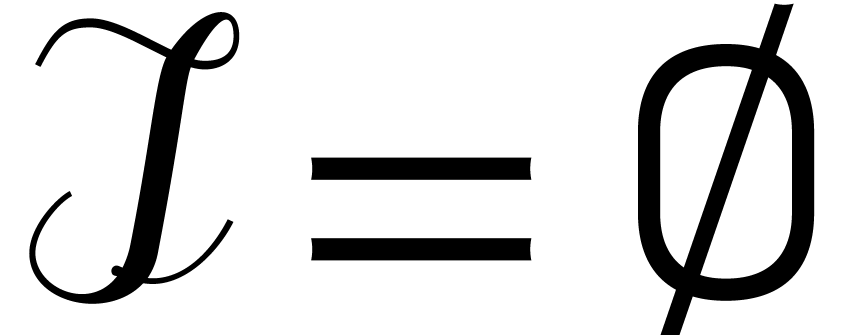

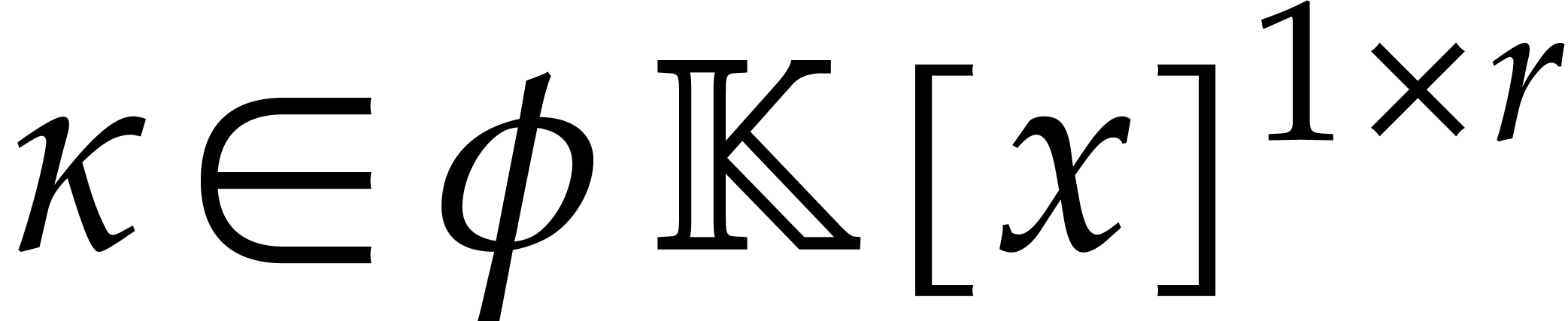

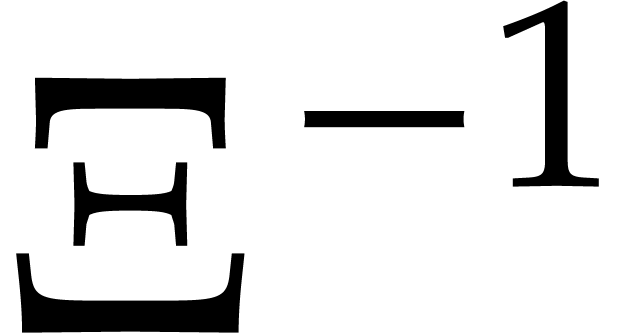

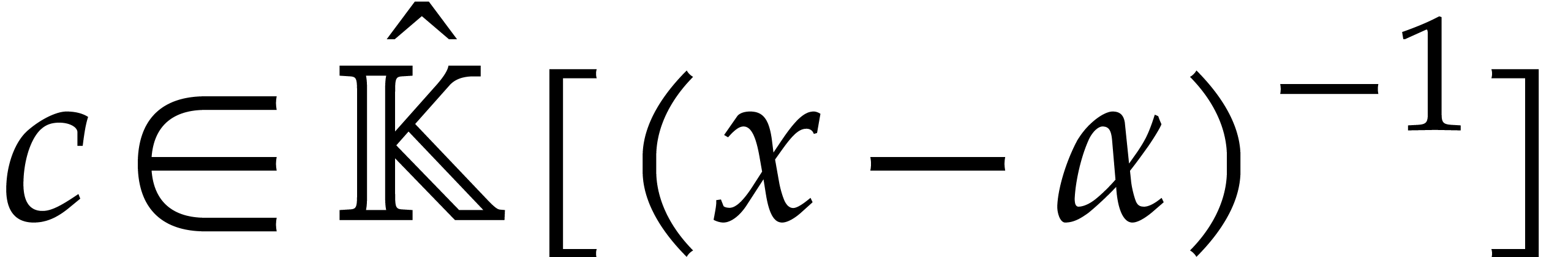

Such a reduction is said to be confined if its image is a

finite dimensional subspace of

.

Such a reduction is said to be confined if its image is a

finite dimensional subspace of  over

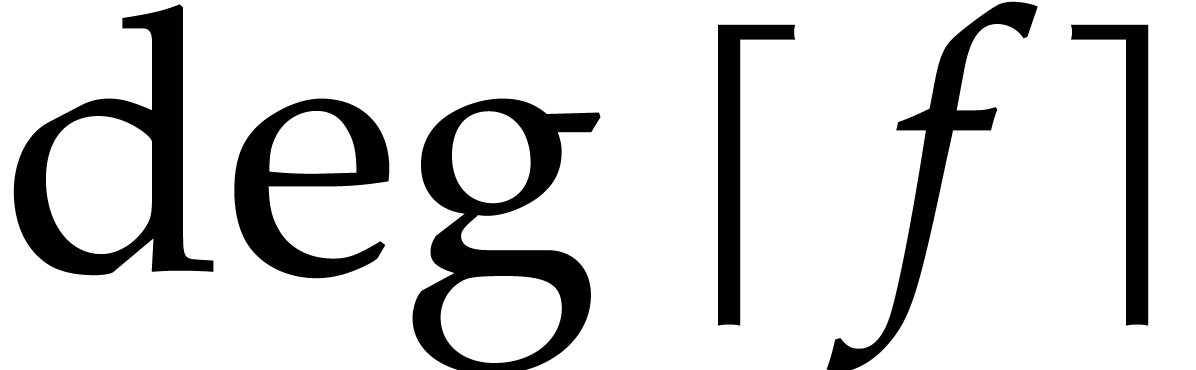

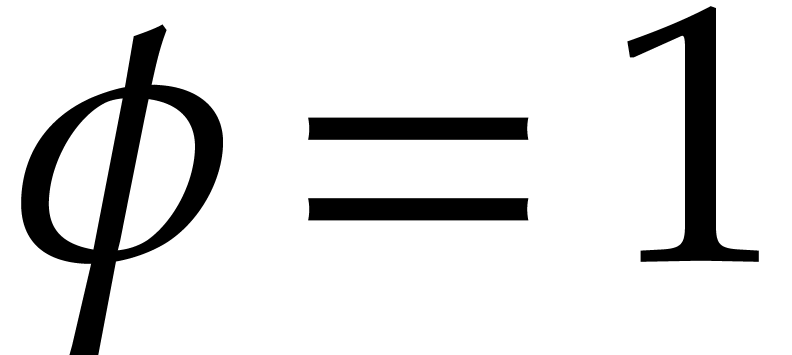

over  and normal if

and normal if  for all

for all

. In this paper, we propose a

solution to the following problem:

. In this paper, we propose a

solution to the following problem:

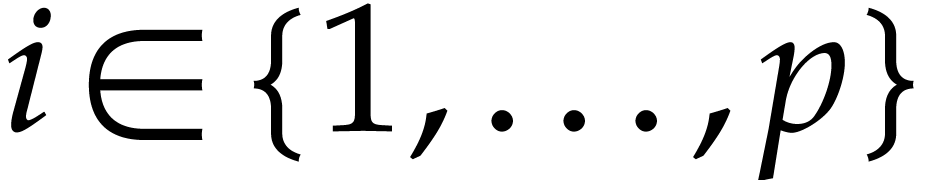

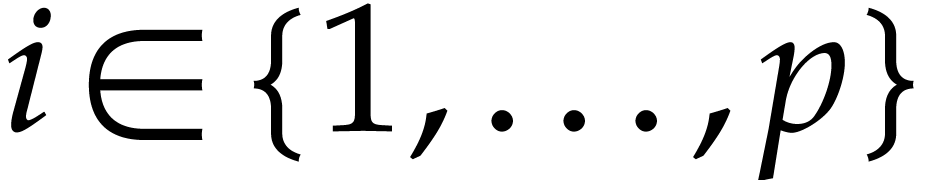

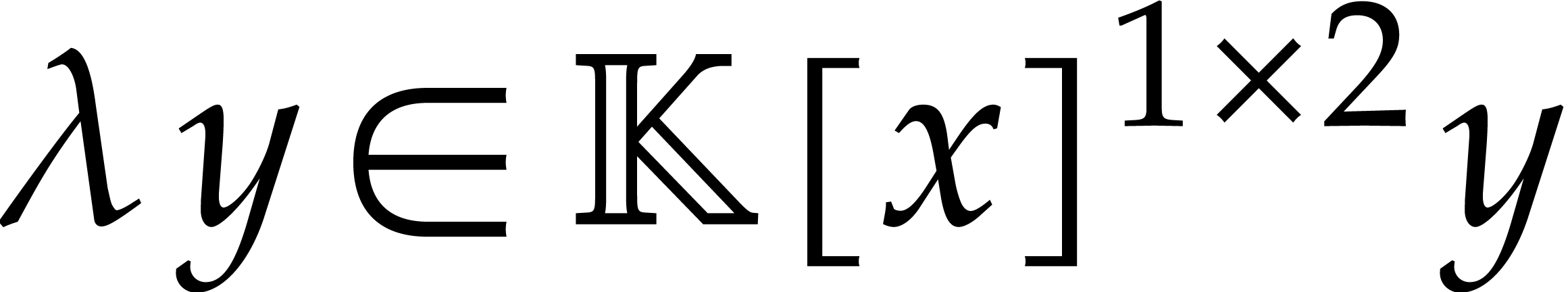

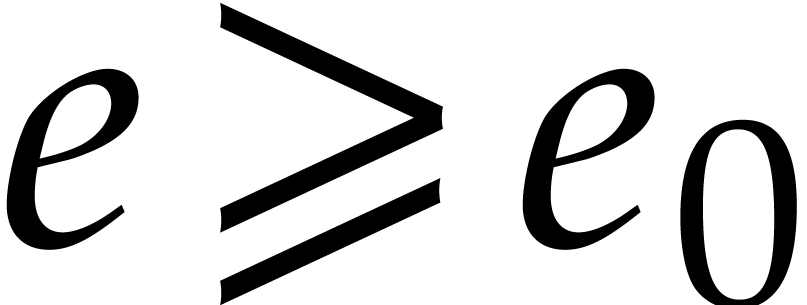

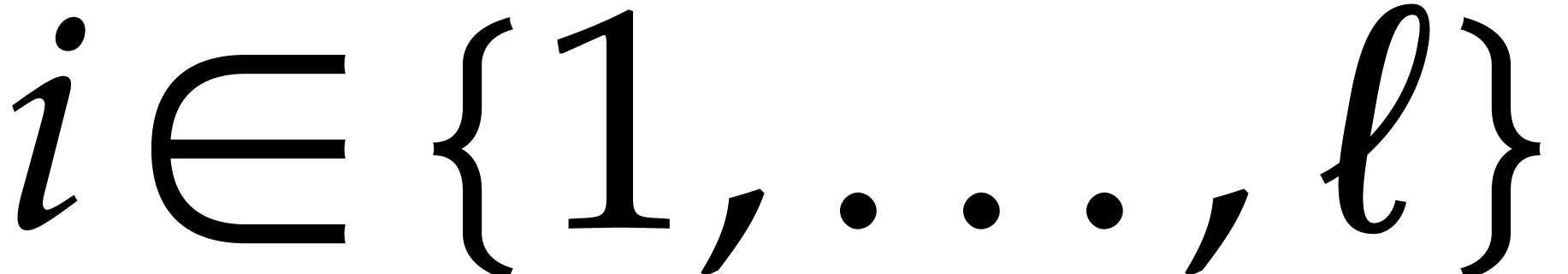

for

for  .

.

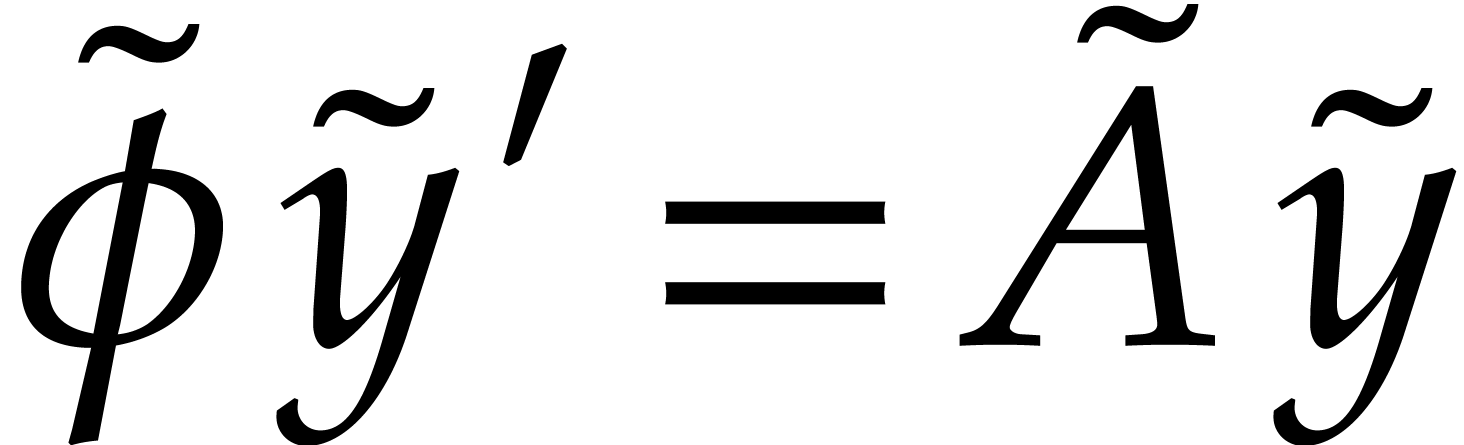

Confined reductions are interesting for their application to creative telescoping. After its introduction by Zeilberger in [23], the theory of creative telescoping has known a rapid development. For a brief history of the topic and further references, we point the reader to [12]. In section 2 we recall how confined reductions can be used for the computation of so-called telescopers; see also [7, 14]. It is worth to notice that Problem 1.1 concerns univariate differential equations, whereas creative telescoping is a priori a multivariate problem.

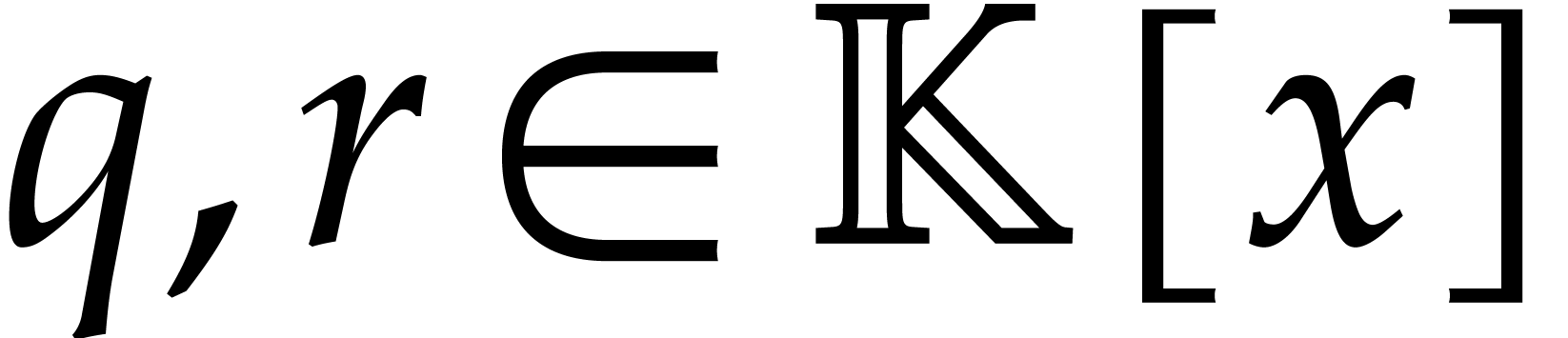

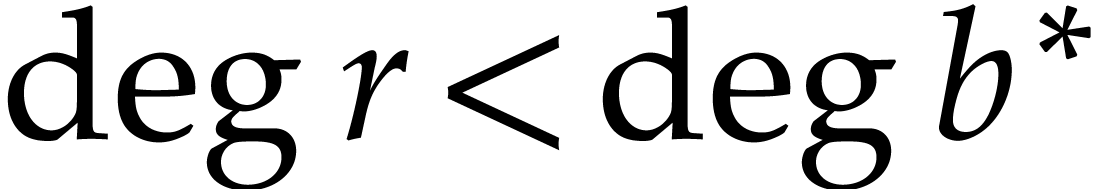

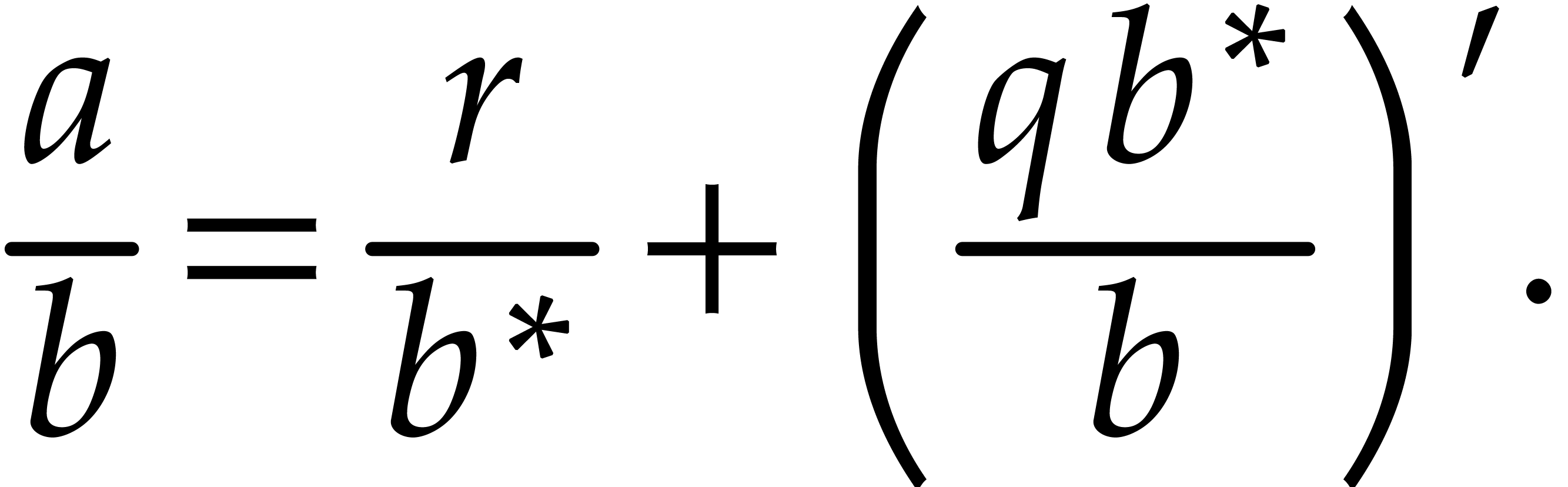

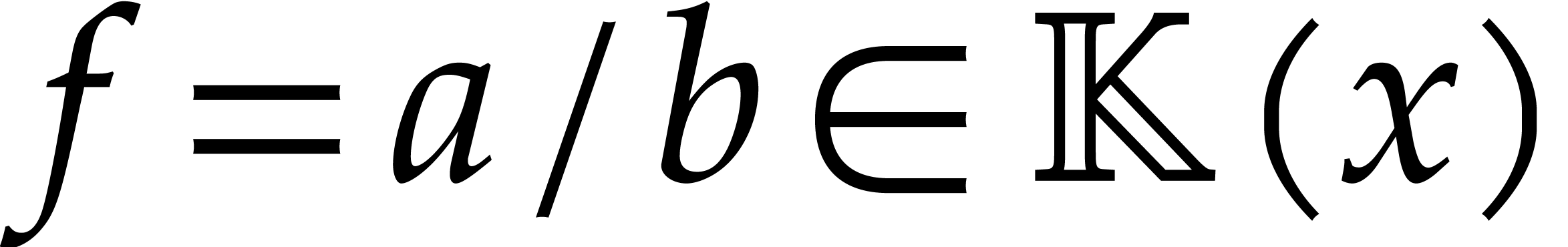

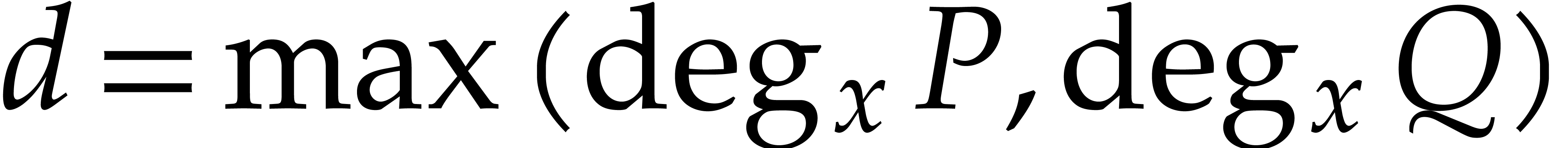

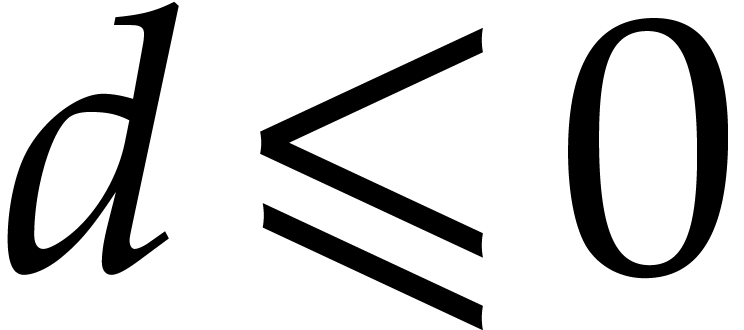

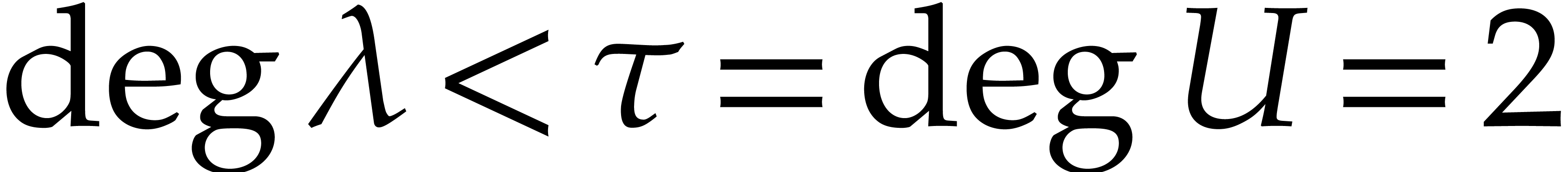

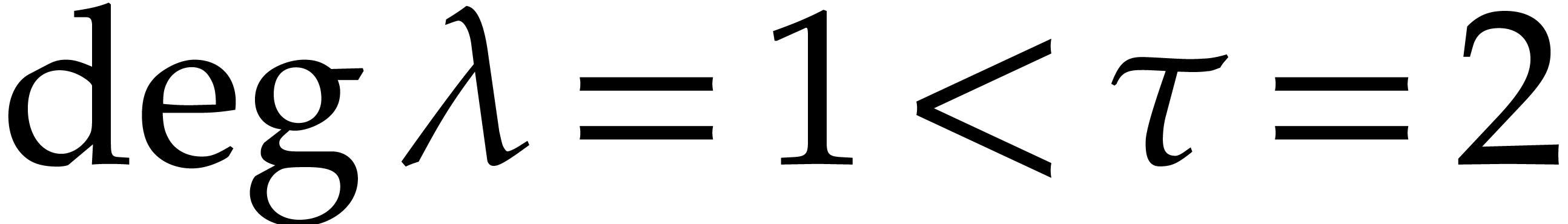

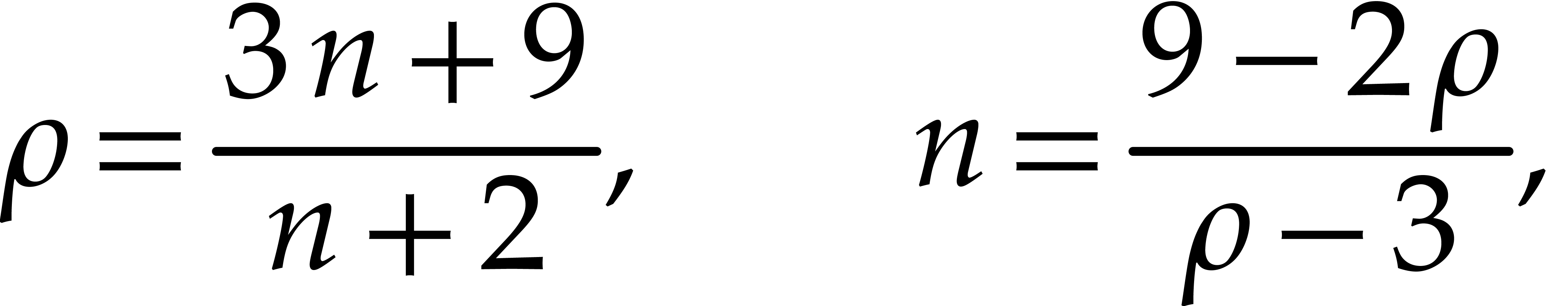

Reduction-based algorithms have appeared recently as a particularly

promising approach in order to make creative telescoping more efficient

and to understand its complexity. The simplest kind of reduction is

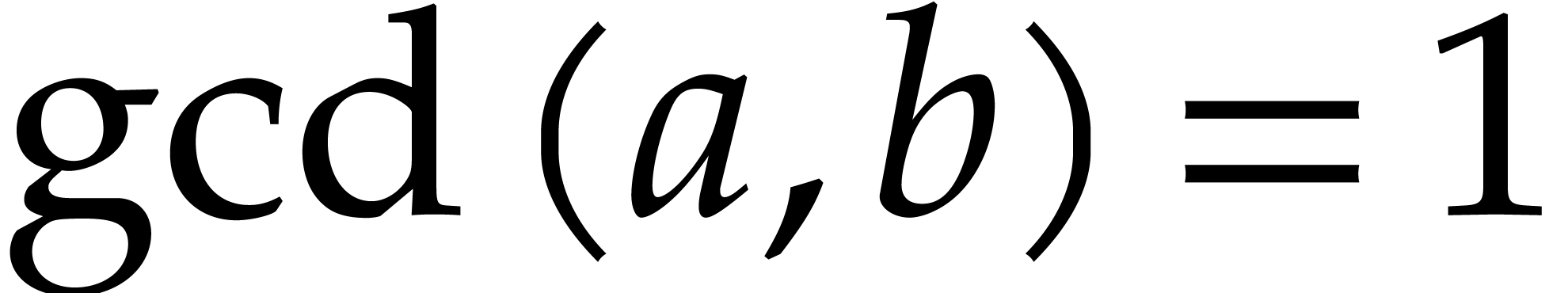

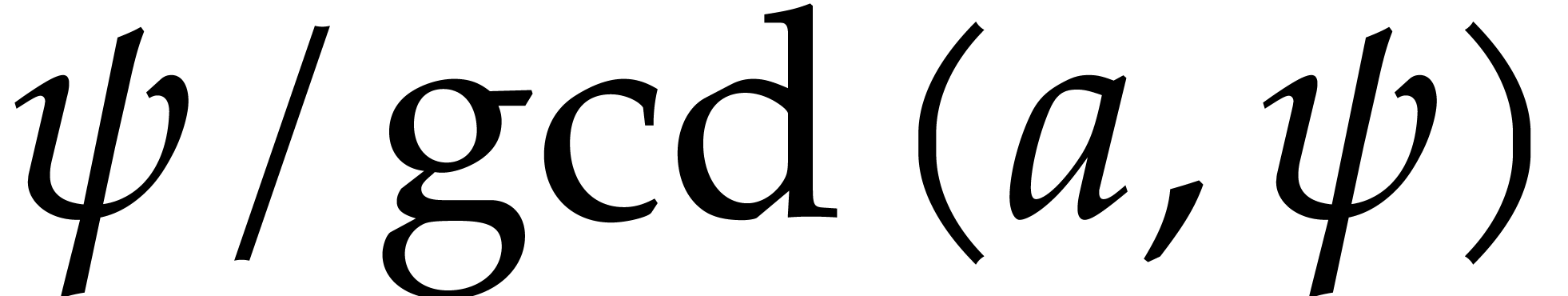

Hermite reduction [22, 16, 2, 7], in which case  and

and  . More precisely [14, Proposition

21], given

. More precisely [14, Proposition

21], given  with

with  ,

and writing

,

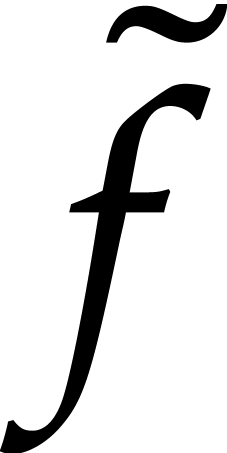

and writing  for the square-free part of

for the square-free part of  , there exist unique

, there exist unique  with

with  such that

such that

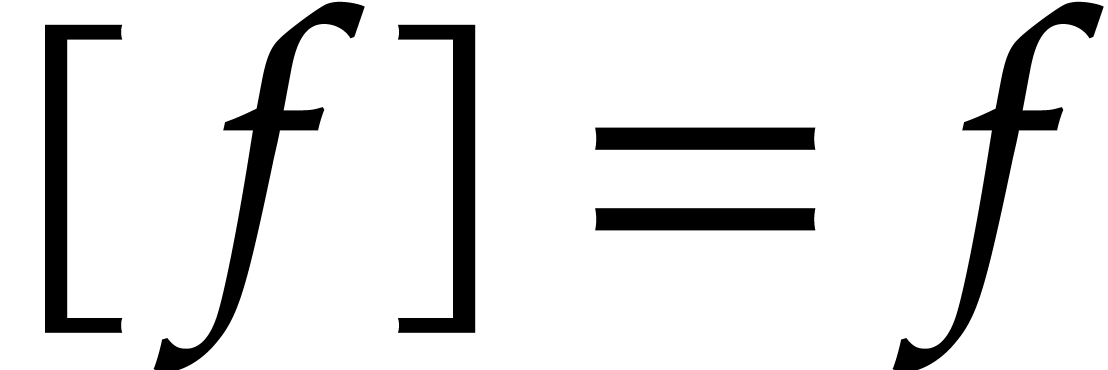

Then the Hermite reduction of  is defined by

is defined by

. Confined reductions have

been constructed in increasingly general cases: hyperexponential

functions [3] (see also [15]), hypergeometric

terms [9, 20], mixed

hypergeometric-hyperexponential terms [5], algebraic

functions [11], multivariate rational functions [6],

and Fuchsian differential equations [8].

. Confined reductions have

been constructed in increasingly general cases: hyperexponential

functions [3] (see also [15]), hypergeometric

terms [9, 20], mixed

hypergeometric-hyperexponential terms [5], algebraic

functions [11], multivariate rational functions [6],

and Fuchsian differential equations [8].

The existence of a reduction-based algorithm for general differential

equations was raised as open Problem 2.2 in [10]. Problem

1.1 is essentially a more precise form of this problem, by

specifying the space  on which the reduction

acts. From a cohomological point of view, reductions can be regarded as

an explicit way to exhibit elements in cokernels. An abstract proof of

the fact that the cokernel of the derivation on

on which the reduction

acts. From a cohomological point of view, reductions can be regarded as

an explicit way to exhibit elements in cokernels. An abstract proof of

the fact that the cokernel of the derivation on  is finite-dimensional was given in [21]. Our solution to

Problem 1.1 in particular yields a new constructive proof

of this fact.

is finite-dimensional was given in [21]. Our solution to

Problem 1.1 in particular yields a new constructive proof

of this fact.

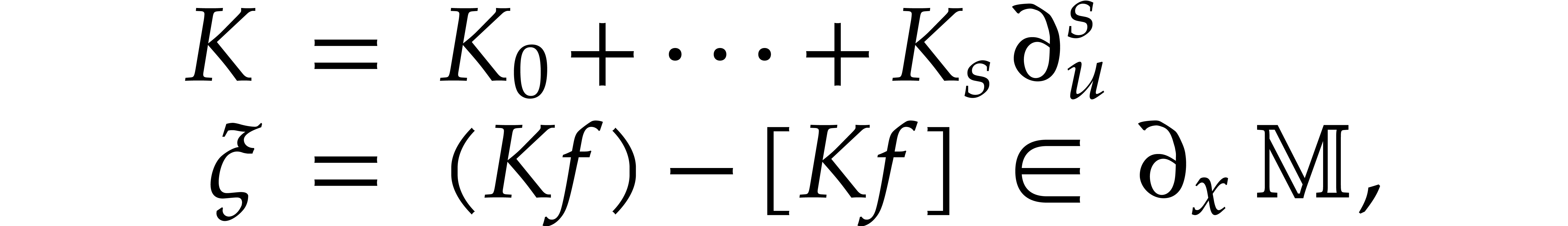

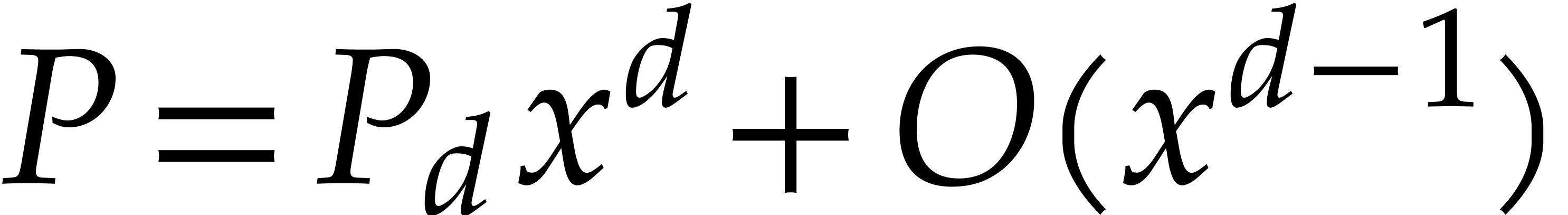

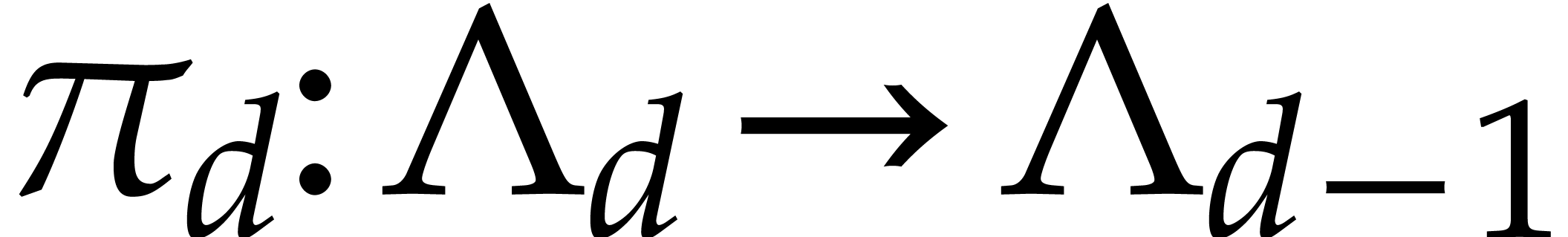

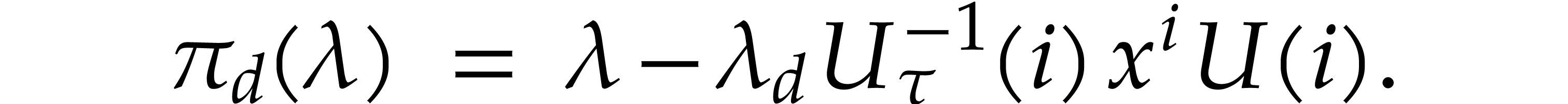

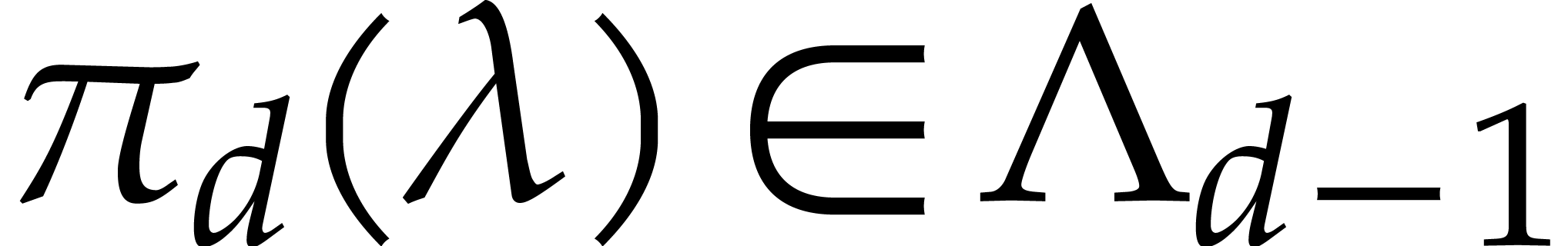

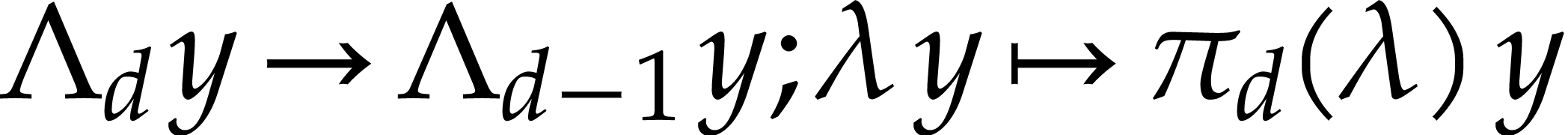

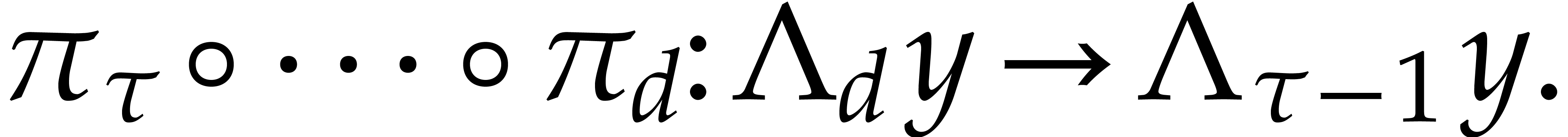

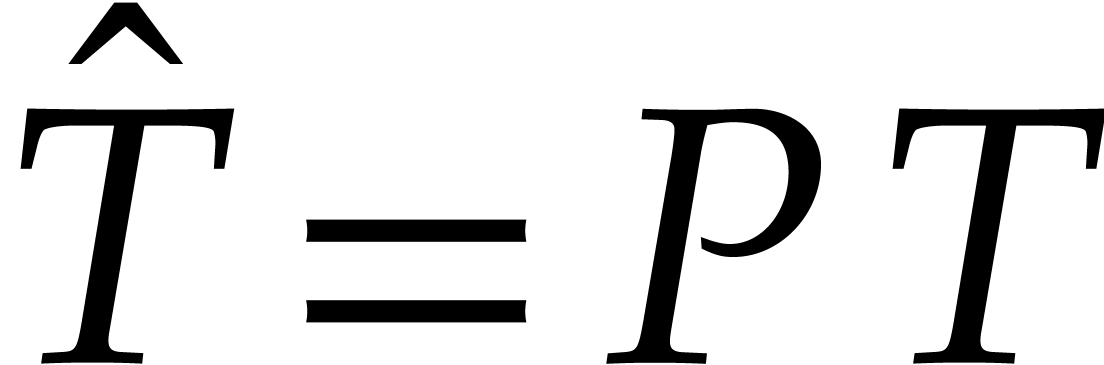

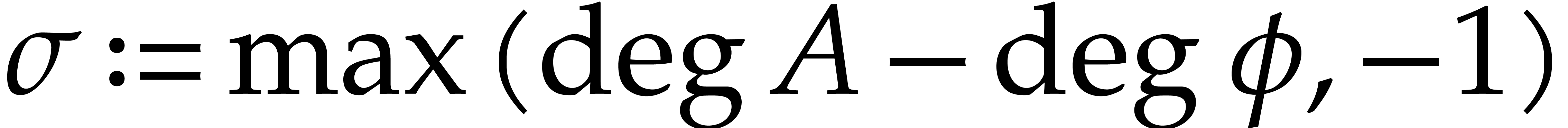

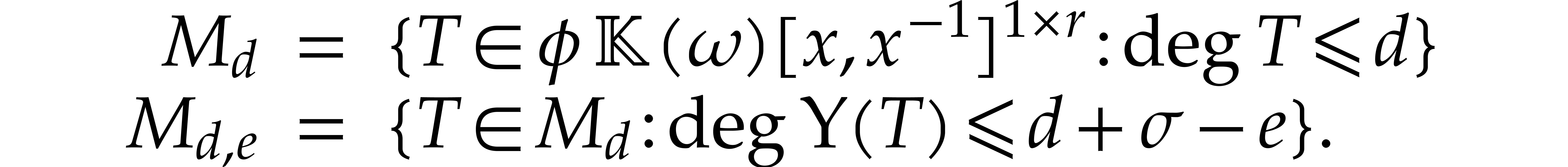

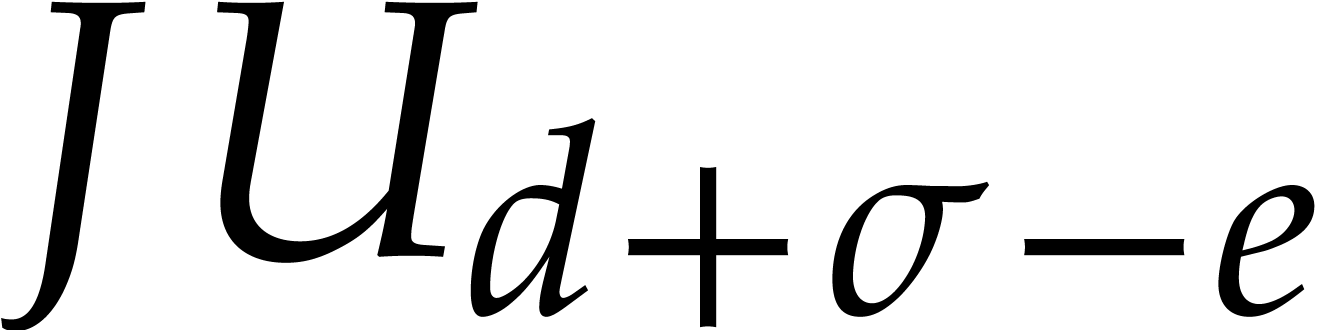

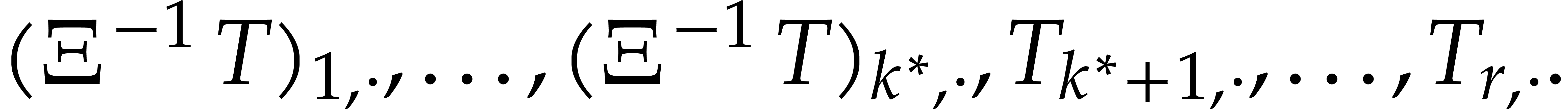

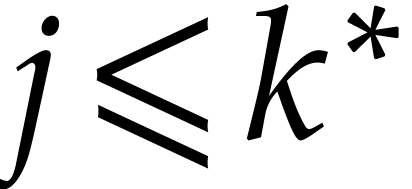

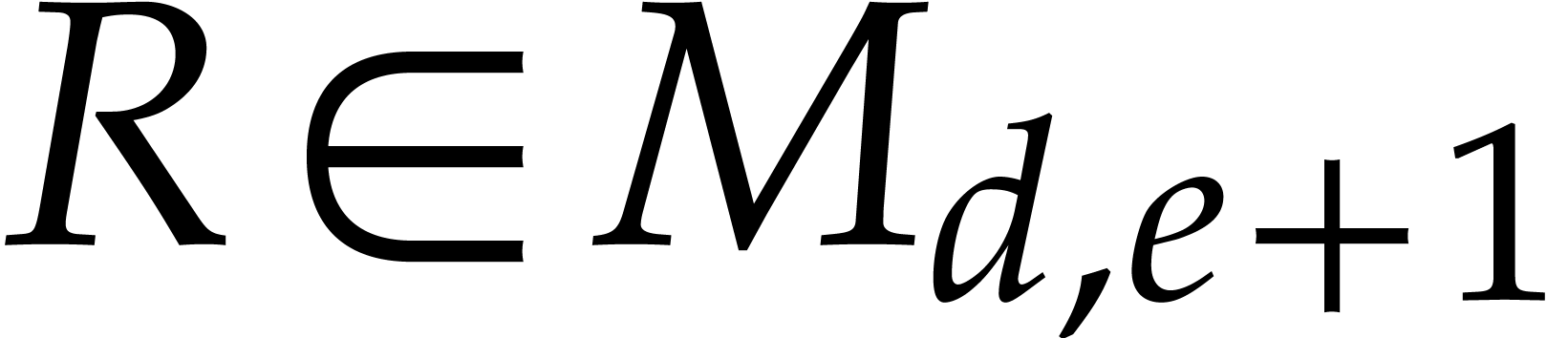

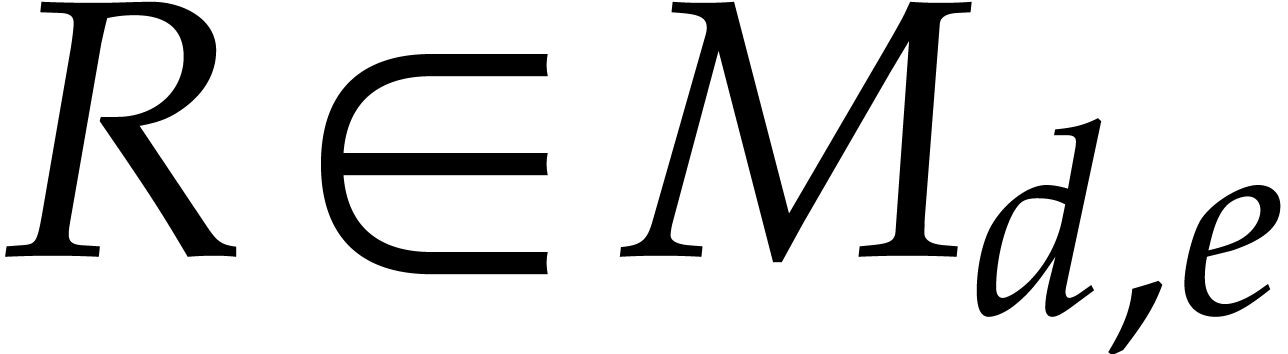

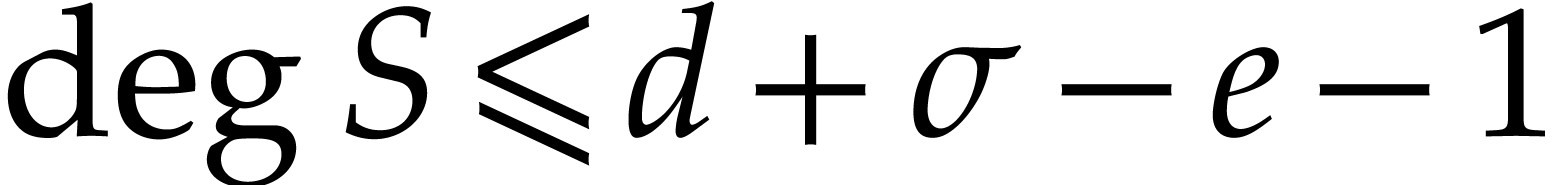

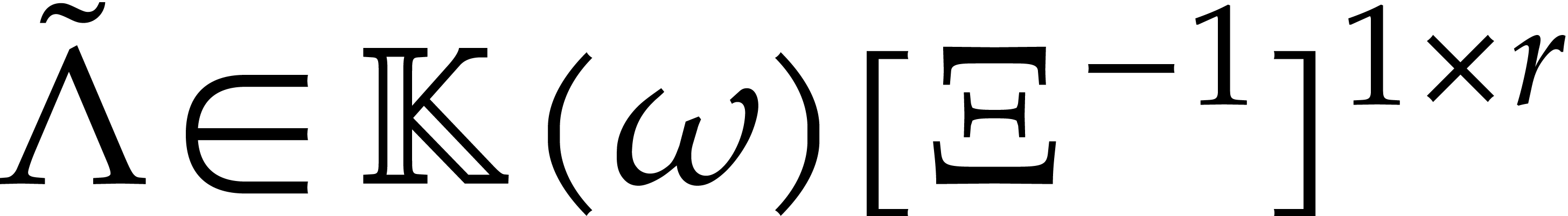

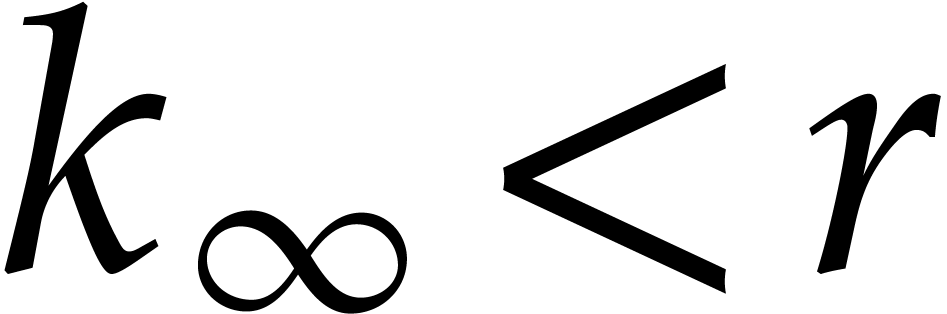

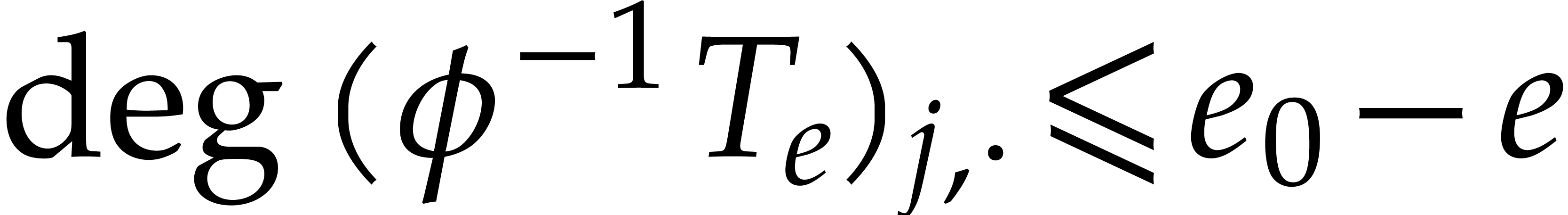

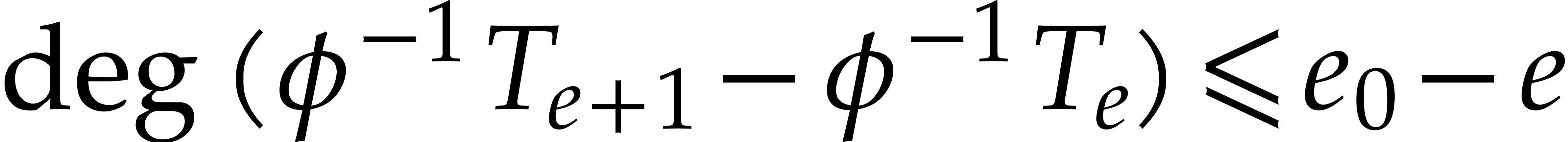

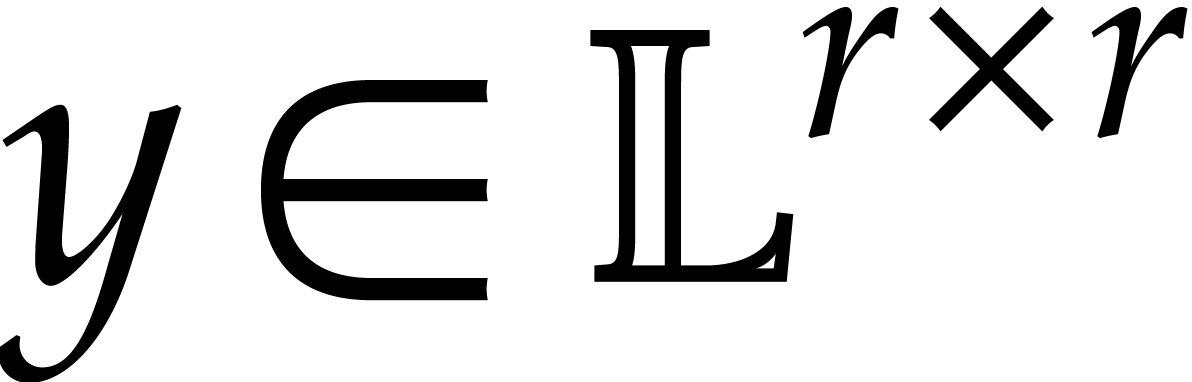

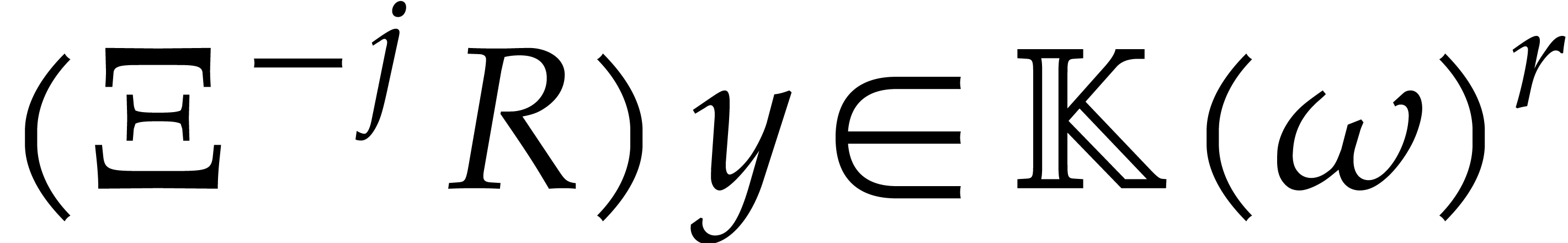

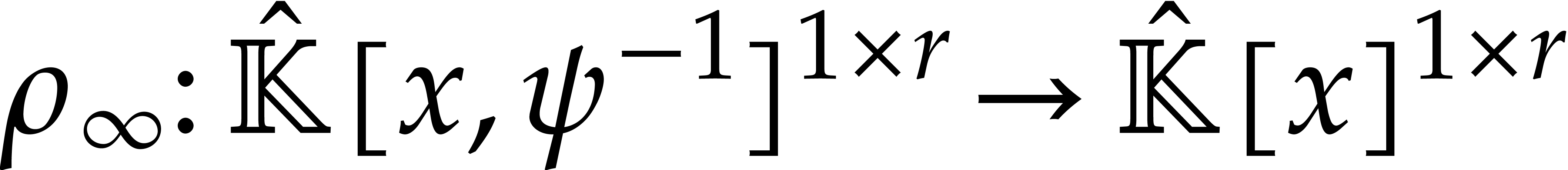

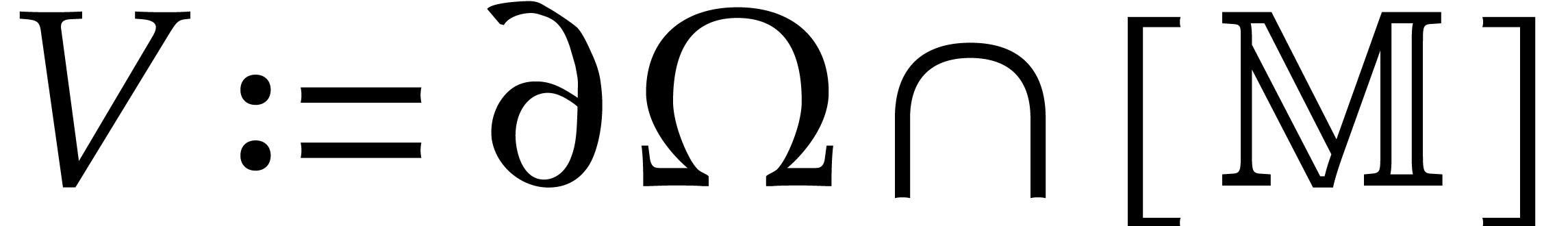

After section 2, we will leave applications to the theory

of creative telescoping aside and focus on the construction of confined

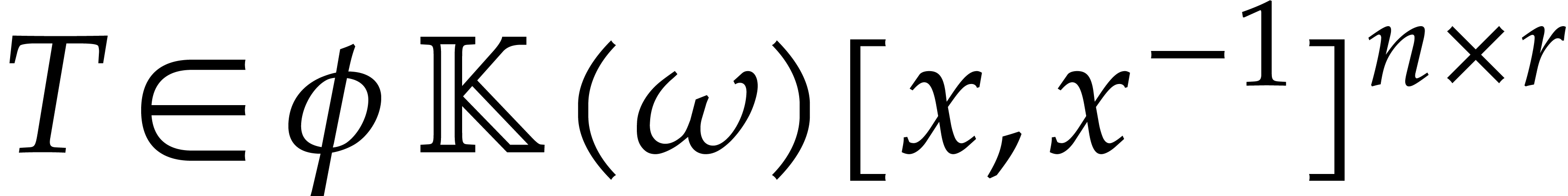

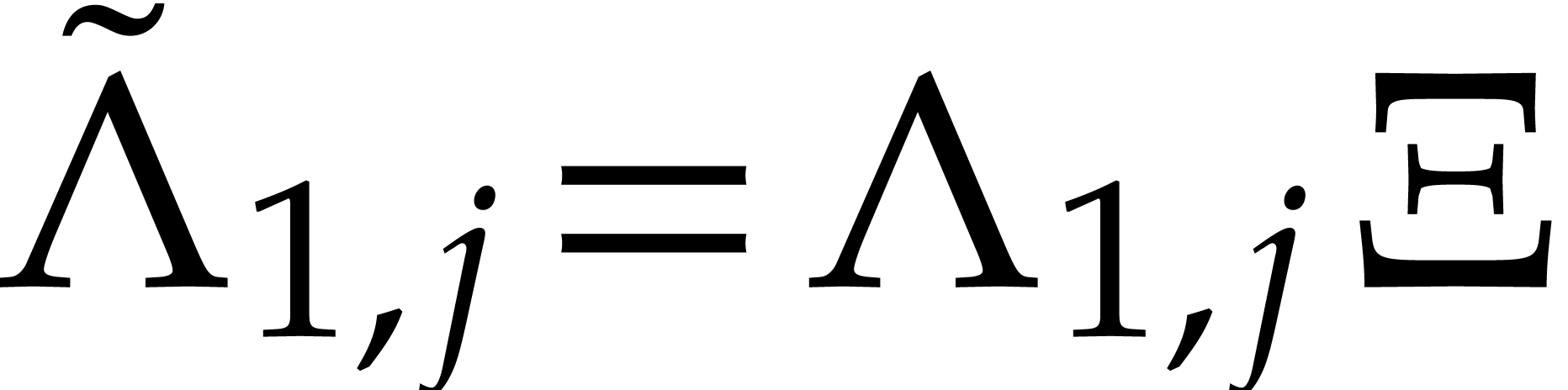

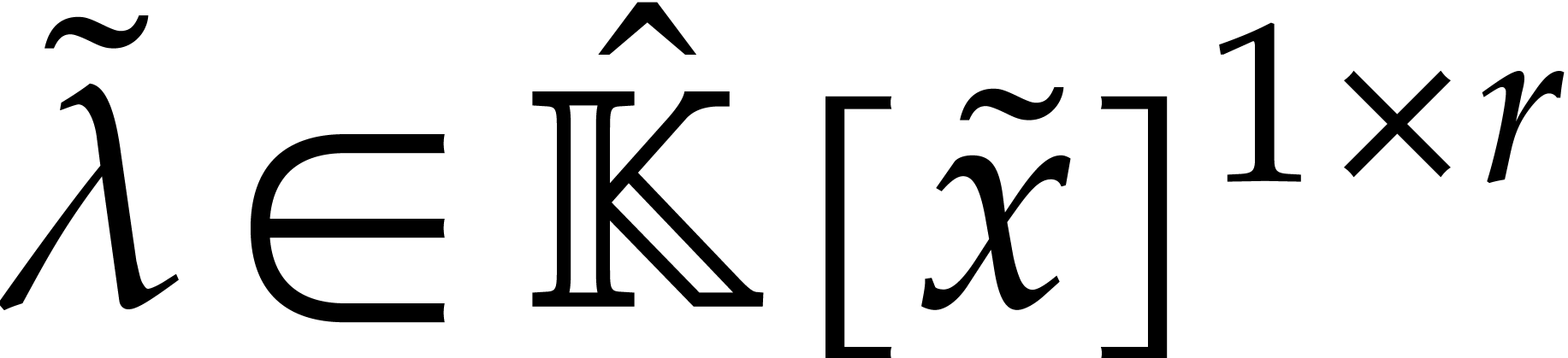

reductions. This construction proceeds in two stages. In section 3, we first consider the  -submodule

-submodule

of

of  of linear

combinations

of linear

combinations  with

with  .

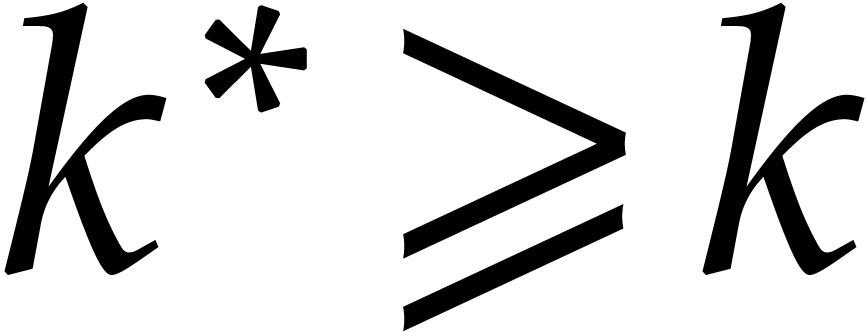

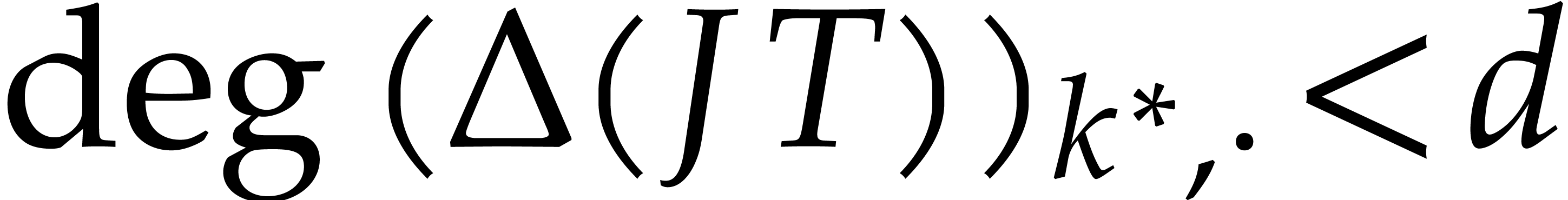

We will construct a

.

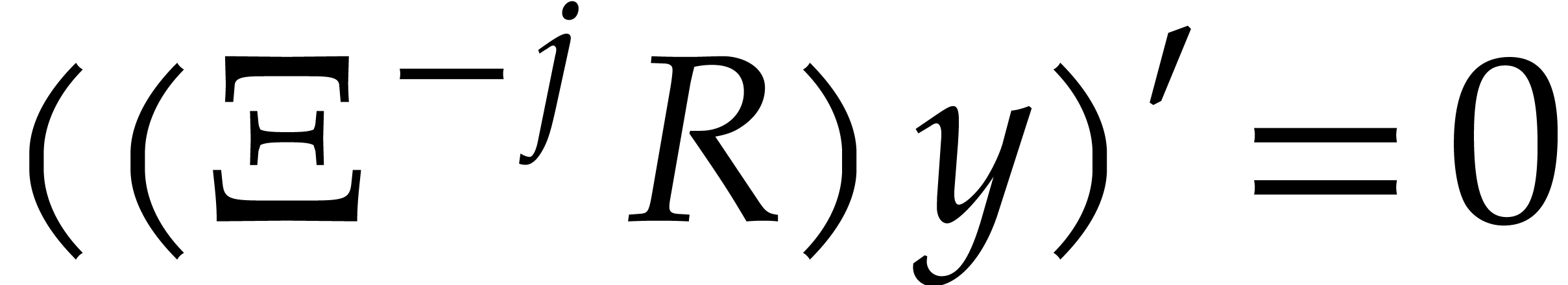

We will construct a  -linear

head reduction

-linear

head reduction  such that

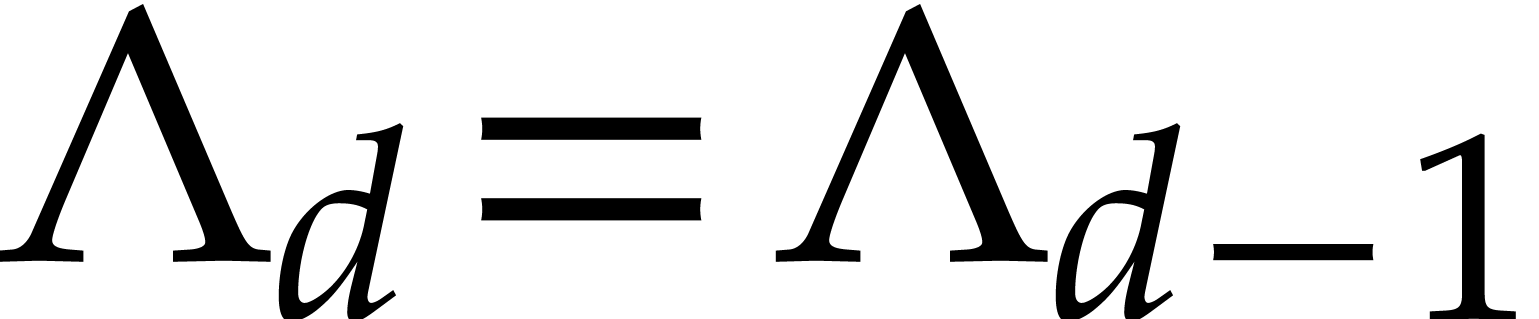

such that  and

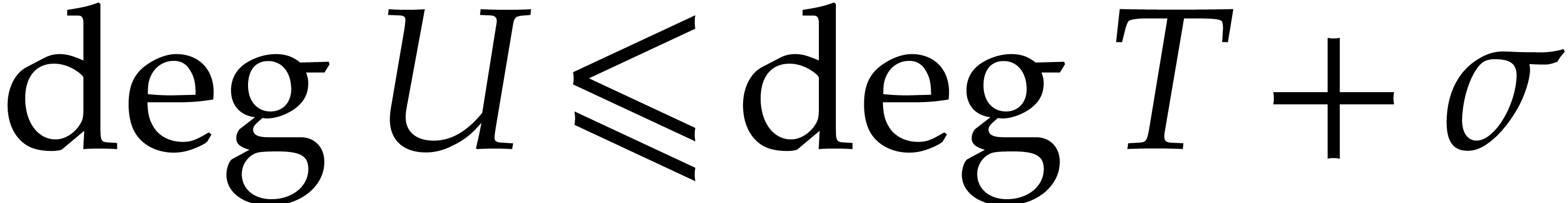

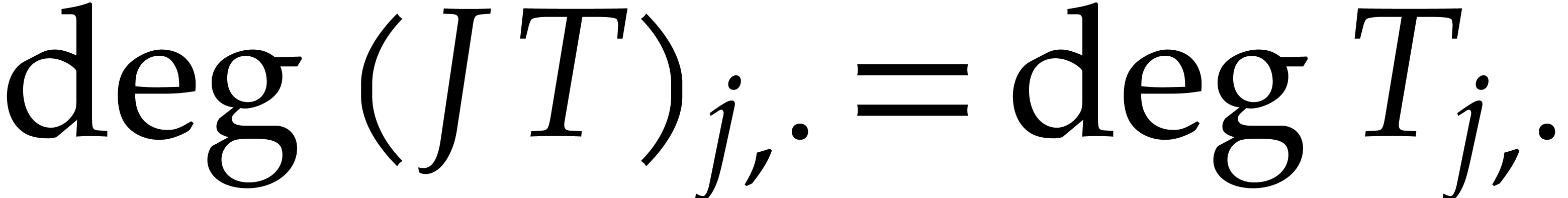

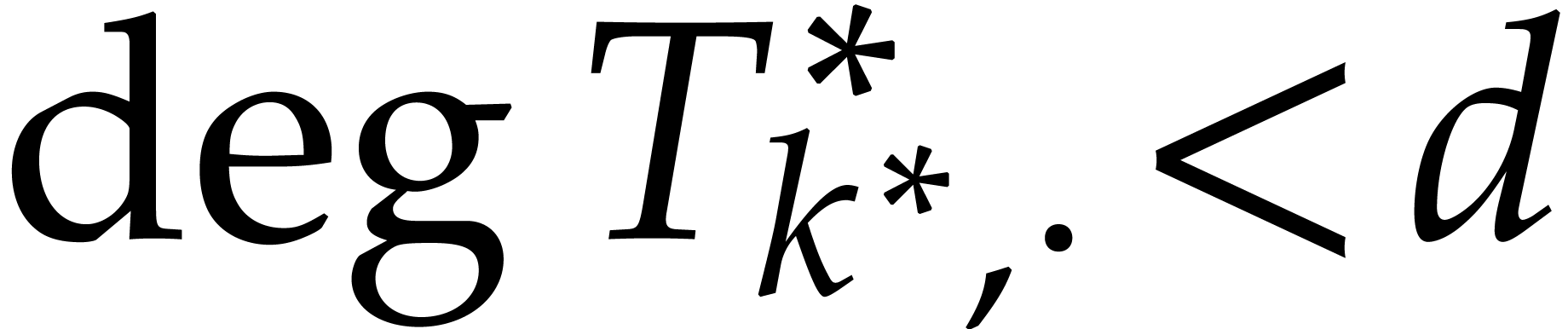

and  is bounded from above for all

is bounded from above for all

. Here we understand that

. Here we understand that

for all

for all  .

The head reduction procedure relies on the computation of a head

chopper using an algorithm that will be detailed in section 5. We also need a variant of row echelon forms that will be

described in section 4.

.

The head reduction procedure relies on the computation of a head

chopper using an algorithm that will be detailed in section 5. We also need a variant of row echelon forms that will be

described in section 4.

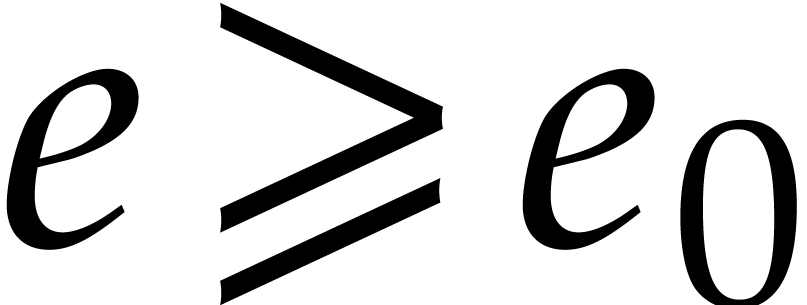

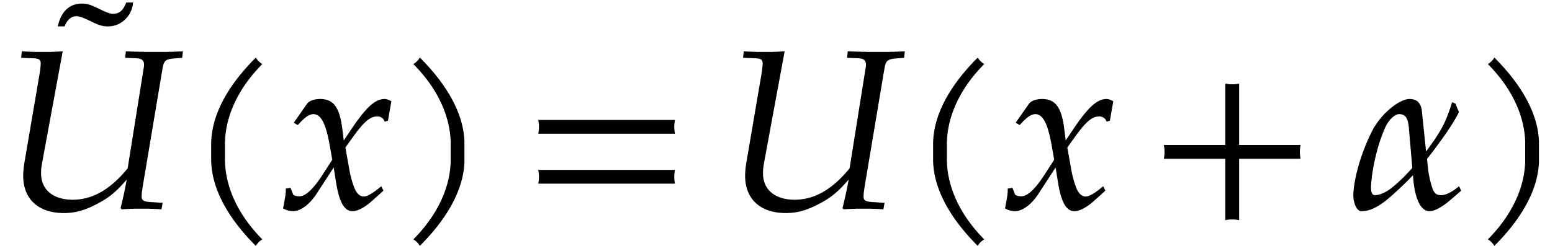

The head reduction may also be regarded as a way to reduce the valuation

of  in

in  ,

at the point at infinity. In section 6 we turn to tail

reductions, with the aim to reduce the valuation of

,

at the point at infinity. In section 6 we turn to tail

reductions, with the aim to reduce the valuation of  at all other points in

at all other points in  and its algebraic closure

and its algebraic closure

. This is essentially similar

to head reduction via a change of variables, while allowing

ourselves to work in algebraic extensions of

. This is essentially similar

to head reduction via a change of variables, while allowing

ourselves to work in algebraic extensions of  . In the last section 7, we show how to

combine the head reduction and the tail reductions at each of the roots

of

. In the last section 7, we show how to

combine the head reduction and the tail reductions at each of the roots

of  into a global confined reduction on

into a global confined reduction on  . Using straightforward linear

algebra and suitable valuation bounds, one can further turn this

reduction into a normal one, as will be shown in section 7.3.

. Using straightforward linear

algebra and suitable valuation bounds, one can further turn this

reduction into a normal one, as will be shown in section 7.3.

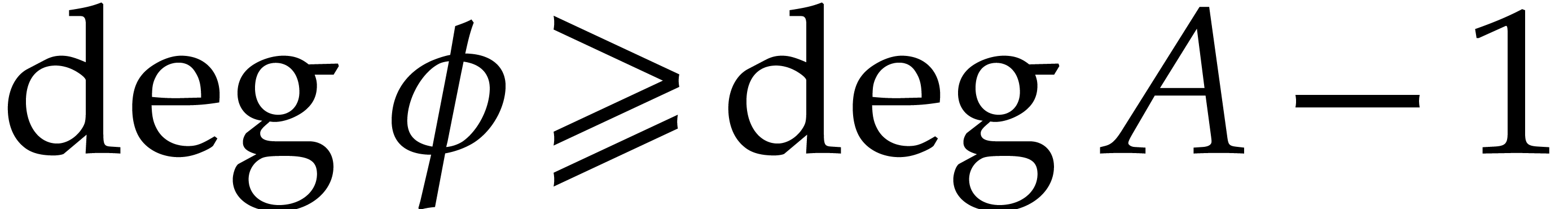

Our solution to Problem 1.1 is made precise in Theorems 5.9, 3.3, and 7.1. As far as we

aware of, these results are new, and provide a positive answer to [10, Problem 2.2]. The application to creative telescoping is

well known; see for instance [14, section 1.2.1]. Some of

the techniques that we use are similar to existing ones. First of all,

the construction of head choppers bears some similarities with Abramov's

EG-eliminations [1]. Our procedure for head reduction is

reminiscent of Euclidean division and classical algorithms for computing

formal power series solutions to differential equations: first find the

leading term and then continue with the remaining terms. In [11,

section 5], a similar “polynomial reduction” procedure has

been described in the particular case when  .

Finally, the idea to glue “local reductions” together into a

global one is also common in this area [3, 5,

8].

.

Finally, the idea to glue “local reductions” together into a

global one is also common in this area [3, 5,

8].

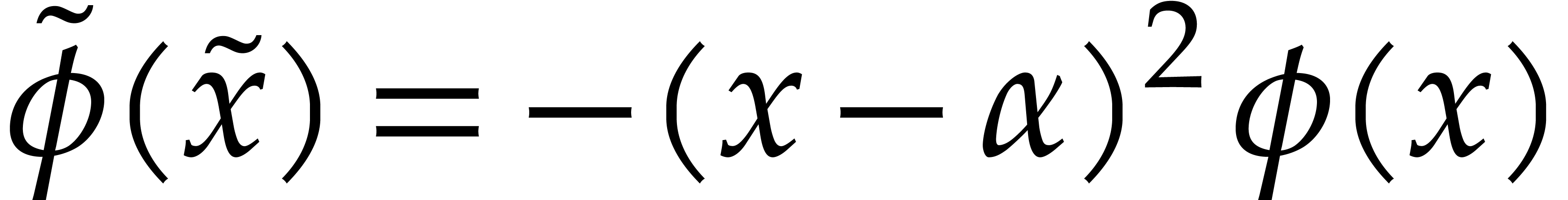

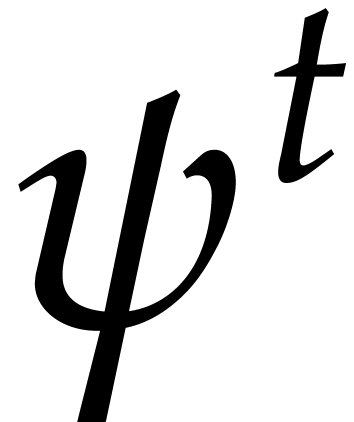

Subsequently to the publication of a preprint version of this paper [18], the results have been further sharpened and generalized.

In [4], an analogue algorithm was proposed for the case of

higher order linear differential equations instead of first order matrix

equations. This paper is mostly based on similar techniques, but also

introduced a new tool: the Lagrange identity. In the

terminology of the present paper, this makes it possible to avoid

introducing the formal parameter  ,

after which the operator

,

after which the operator  from section 5

simply becomes multiplication with

from section 5

simply becomes multiplication with  .

Such simplifications make it easier to extend the theory beyond the

setting of differential equations (1.1): see [19]

for generalizations to difference equations. The original preprint

version of this paper [18] also contained degree and

valuation bounds for head and tail choppers; one of our motivations was

to use these to derive polynomial complexity bounds for creative

telescoping. Using the Lagrange identity technique from [4],

it is possible to prove even sharper bounds. We refer to the follow-up

paper [19] for more information on degree and valuation

bounds and how to exploit them for proving polynomial complexity bounds.

.

Such simplifications make it easier to extend the theory beyond the

setting of differential equations (1.1): see [19]

for generalizations to difference equations. The original preprint

version of this paper [18] also contained degree and

valuation bounds for head and tail choppers; one of our motivations was

to use these to derive polynomial complexity bounds for creative

telescoping. Using the Lagrange identity technique from [4],

it is possible to prove even sharper bounds. We refer to the follow-up

paper [19] for more information on degree and valuation

bounds and how to exploit them for proving polynomial complexity bounds.

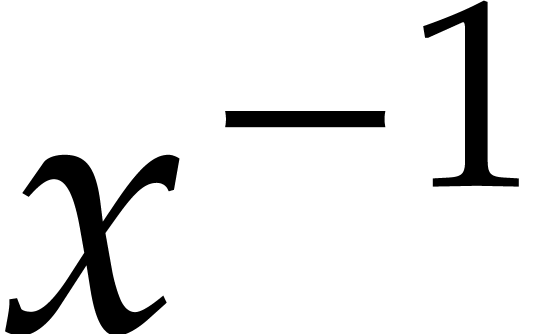

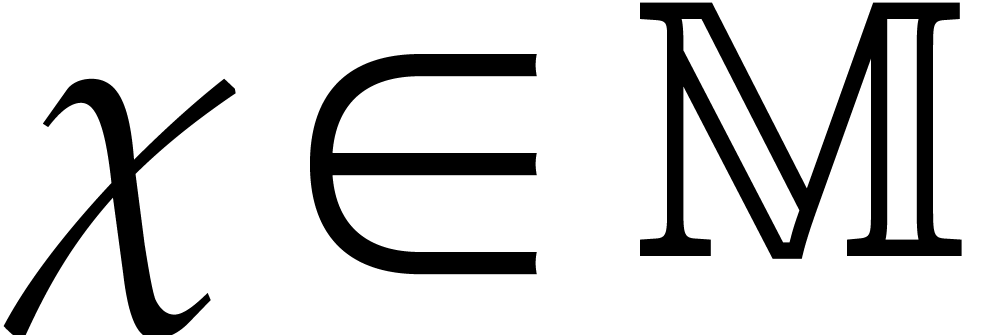

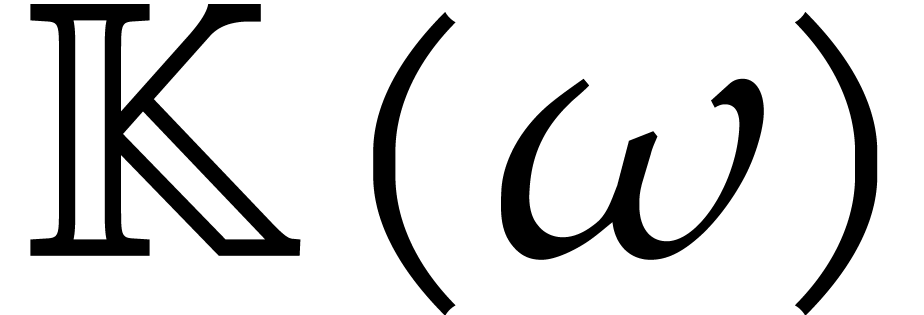

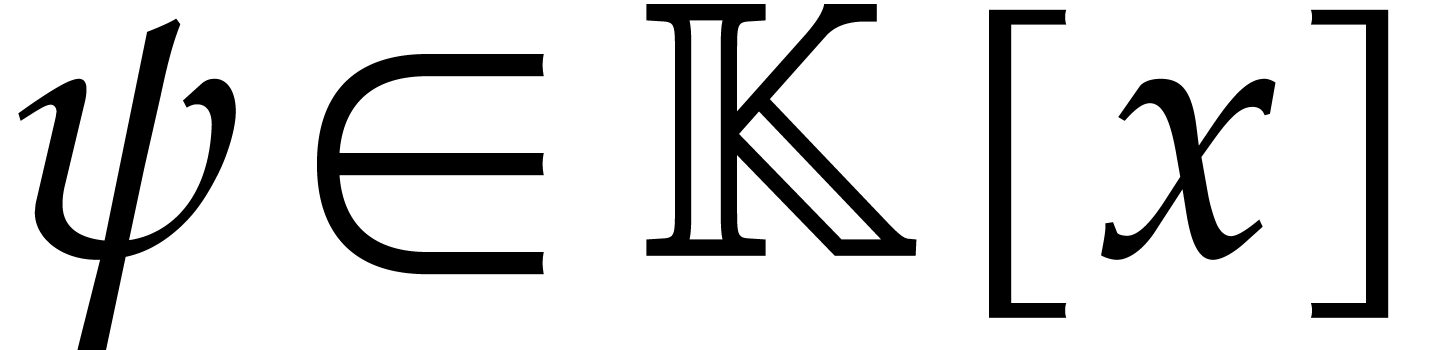

Let  be a subfield of

be a subfield of  . An analytic function

. An analytic function  on

some non-empty open subset of

on

some non-empty open subset of  is said to be

holonomic (or D-finite) over

is said to be

holonomic (or D-finite) over  if it satisfies a linear differential equation

if it satisfies a linear differential equation

where  are rational functions and

are rational functions and  . Modulo multiplication by the common

denominator, we may assume without loss of generality that

. Modulo multiplication by the common

denominator, we may assume without loss of generality that  are actually polynomials. Many, if not most, special

functions are holonomic. Examples include

are actually polynomials. Many, if not most, special

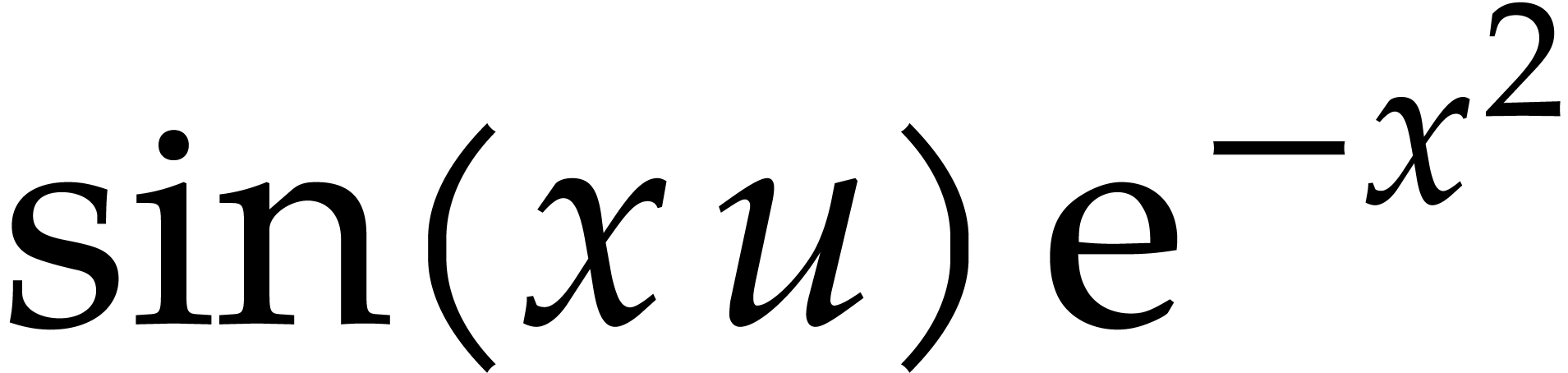

functions are holonomic. Examples include  ,

,

,

,  ,

,  ,

Bessel functions, hypergeometric functions, polylogarithms, etc.

,

Bessel functions, hypergeometric functions, polylogarithms, etc.

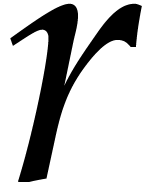

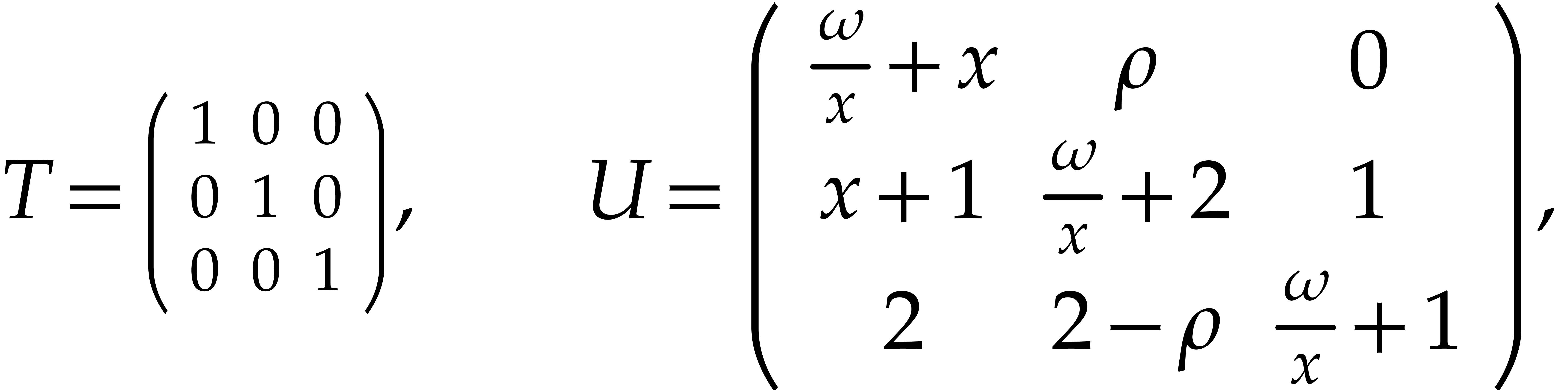

Instead of higher order scalar equations such as (2.1), it is also possible to consider first order linear differential systems

where  is a non-zero polynomial and

is a non-zero polynomial and  an

an  matrix with polynomial

coefficients. Given a column vector

matrix with polynomial

coefficients. Given a column vector  of analytic

solutions to (2.2) on some non-empty open subset of

of analytic

solutions to (2.2) on some non-empty open subset of  , it is well-known that each

component

, it is well-known that each

component  is a holonomic function. Conversely,

taking

is a holonomic function. Conversely,

taking  and

and

any solution  to (2.1) corresponds

to a unique solution

to (2.1) corresponds

to a unique solution  of (2.1).

of (2.1).

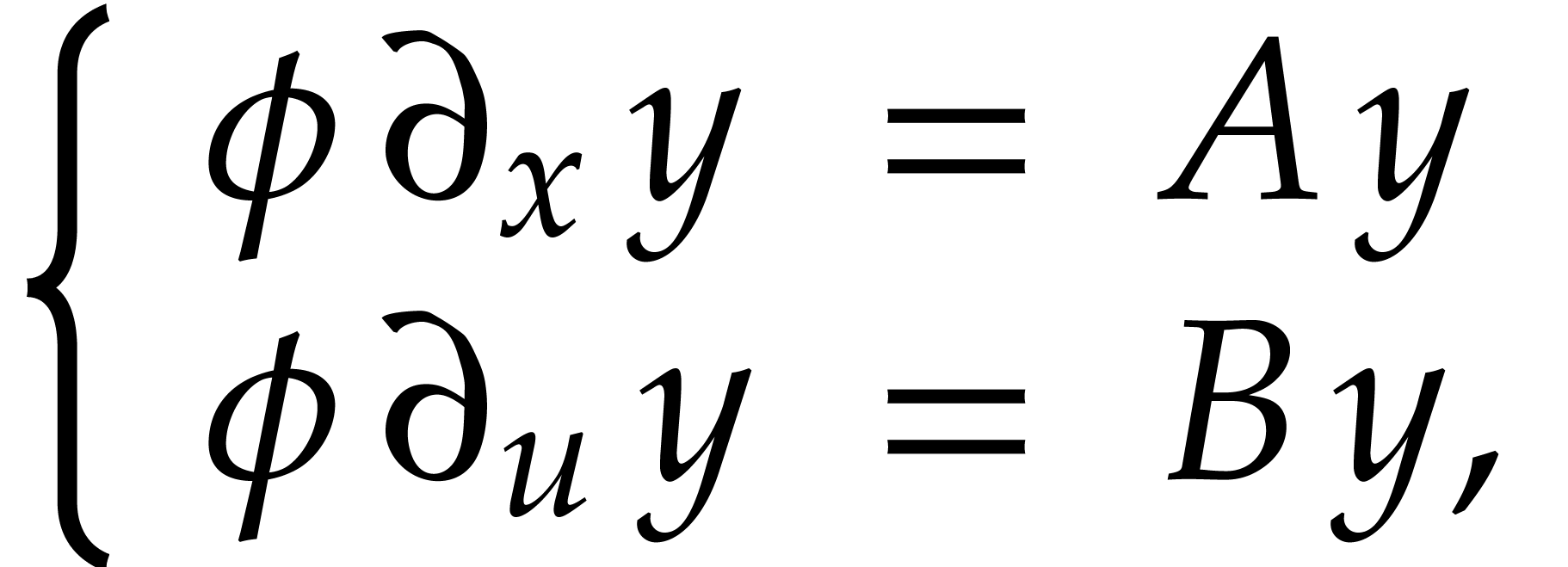

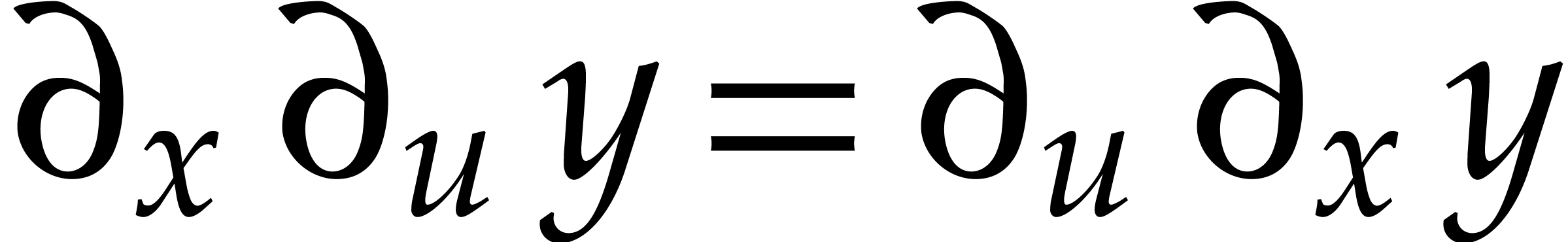

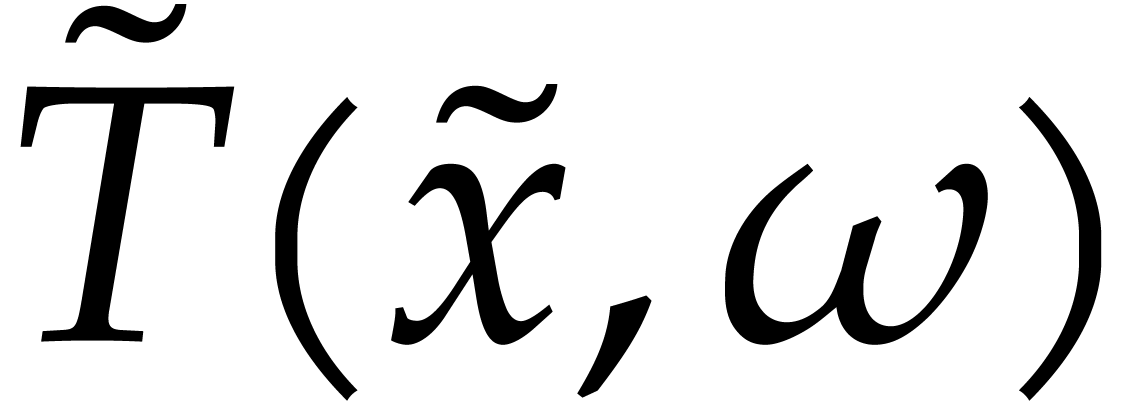

The concept of holonomy extends to multivariate functions. There are

again two equivalent formalizations that are respectively based on

higher order scalar equations and first order systems. Let us focus on

the bivariate case and let  and

and  denote the partial derivatives with respect to

denote the partial derivatives with respect to  and

and  . Consider a system of

linear differential equations

. Consider a system of

linear differential equations

|

(2.3) |

where  is non-zero and

is non-zero and  are such that

are such that

A holonomic function in two variables is defined to be a component of a

solution to such a system. The compatibility relation (2.4)

corresponds to the requirement  ,

under the assumption that

,

under the assumption that  satisfies (2.3).

satisfies (2.3).

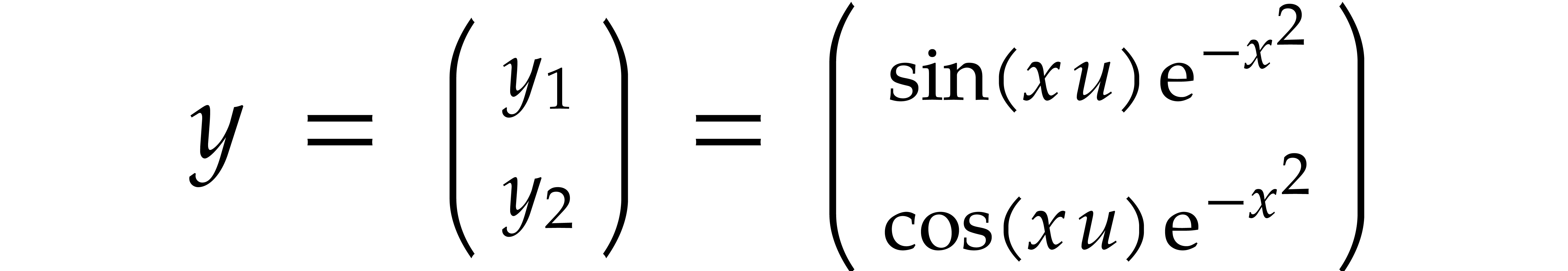

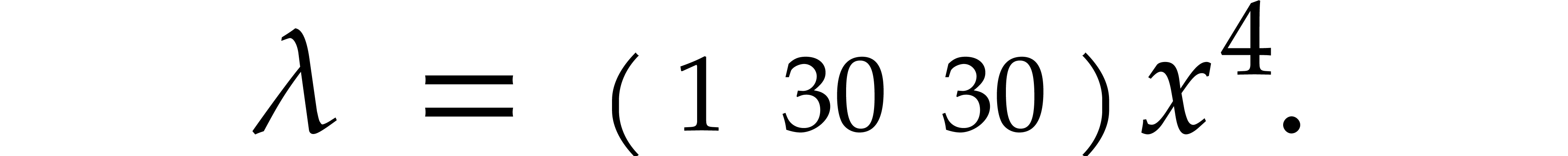

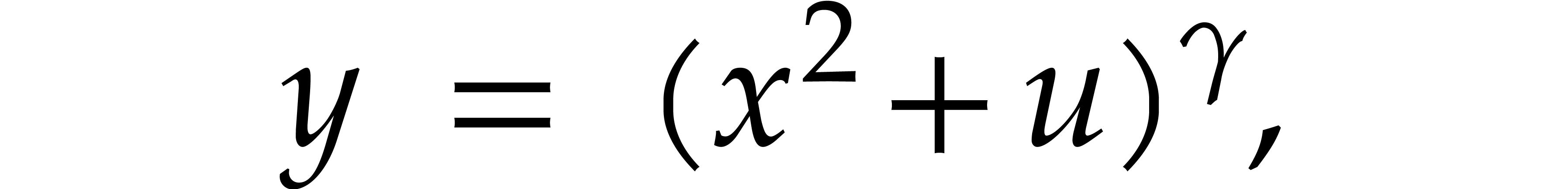

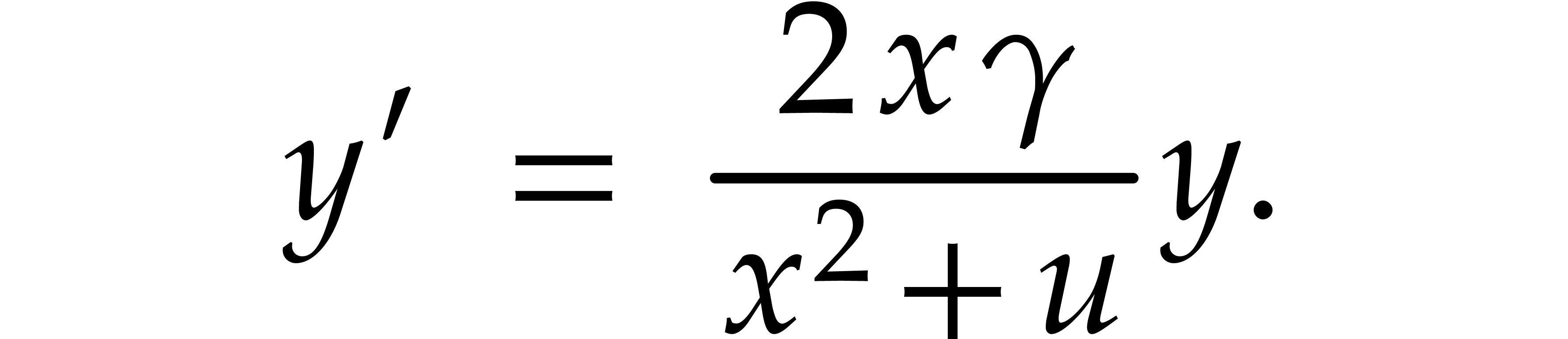

Example

satisfies the system (2.3) for  and

and

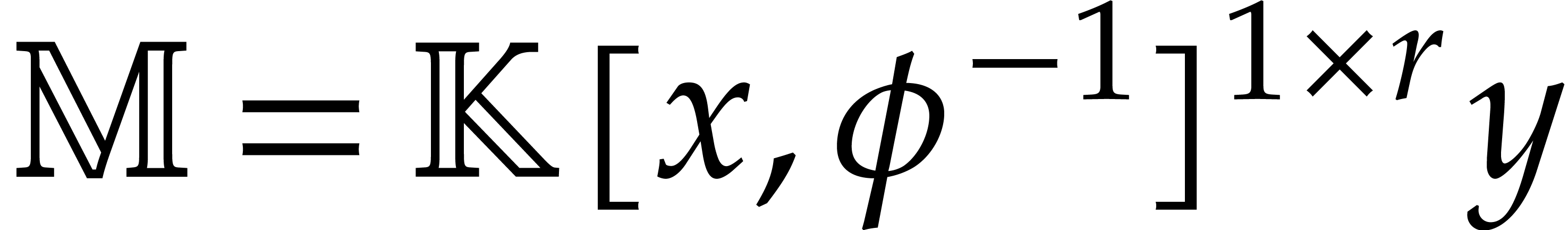

Assume that  is an effective subfield of

is an effective subfield of  and let

and let  be a complex analytic

solution of (2.3). By Cauchy-Kowalevski's theorem such

solutions exist and can be continued analytically above

be a complex analytic

solution of (2.3). By Cauchy-Kowalevski's theorem such

solutions exist and can be continued analytically above  . With

. With  ,

let

,

let  be the

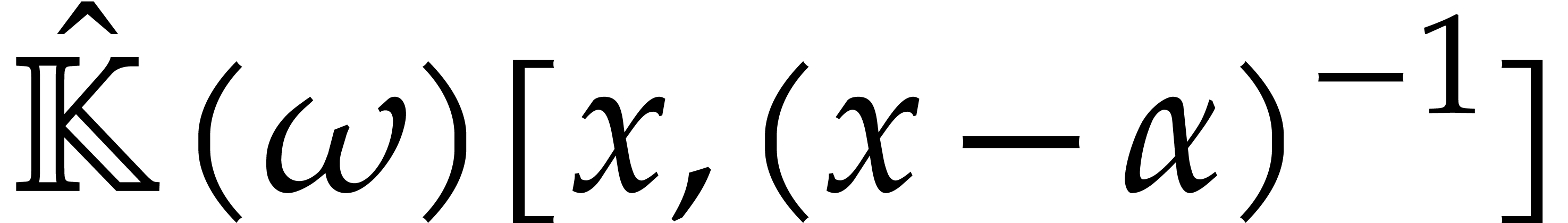

be the  -module

generated by the entries of

-module

generated by the entries of  .

Notice that

.

Notice that  is stable under both

is stable under both  and

and  . For any

. For any

with

with  and any

non-singular contour

and any

non-singular contour  in

in  between two points

between two points  , we may

consider the integral

, we may

consider the integral

which defines a function in the single variable  . It is natural to ask under which conditions

. It is natural to ask under which conditions  is a holonomic function and how to compute a

differential operator

is a holonomic function and how to compute a

differential operator  with

with  .

.

The idea of creative telescoping is to compute a differential

operator  (called the telescoper), an

element

(called the telescoper), an

element  (called the certificate), and

(called the certificate), and

, such that

, such that

Integrating over  , we then

obtain

, we then

obtain

If the contour  has the property that

has the property that  for all

for all  (where the equality is

allowed to hold at the limit if necessary), then

(where the equality is

allowed to hold at the limit if necessary), then  yields the desired annihilator with

yields the desired annihilator with  .

In general, we need to multiply

.

In general, we need to multiply  on the left with

an annihilator of

on the left with

an annihilator of  , as

operators in the skew ring

, as

operators in the skew ring  .

.

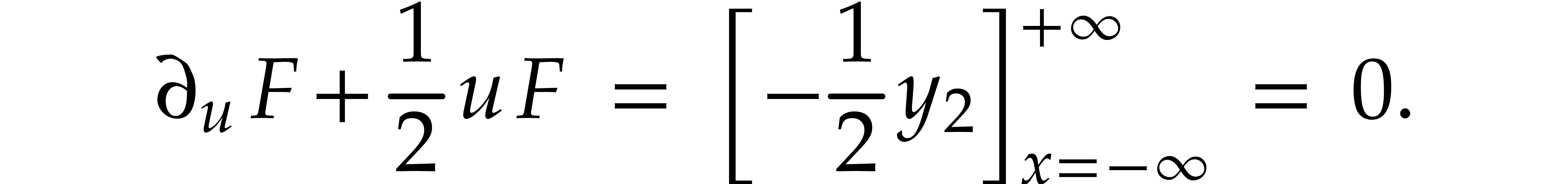

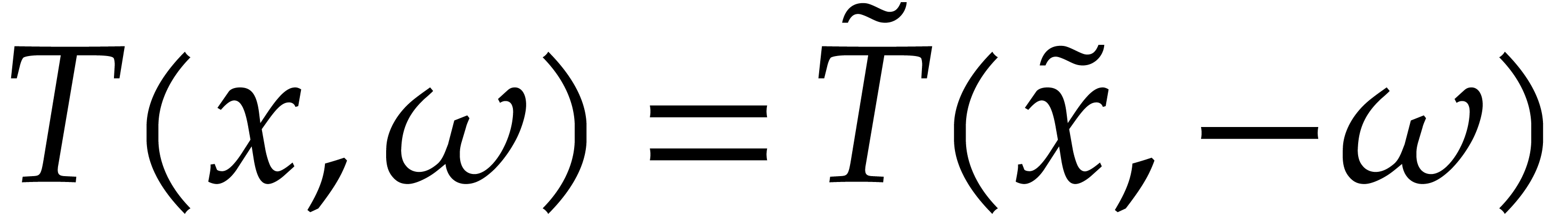

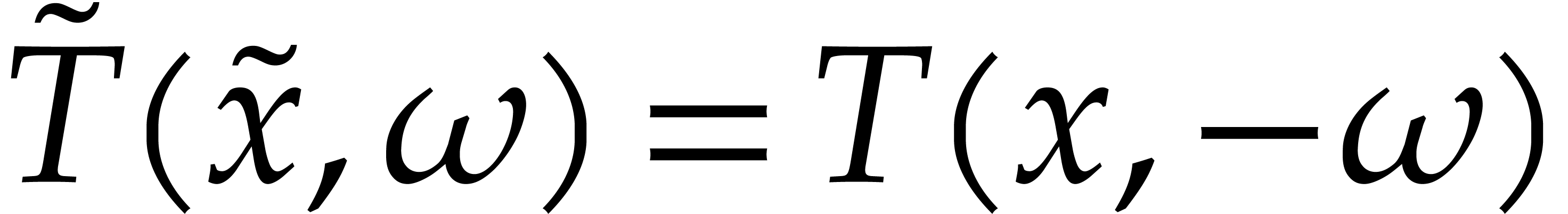

Example  as in Example 2.1, we have

as in Example 2.1, we have  . The contour

. The contour  that follows the real axis from

that follows the real axis from  to

to  is non-singular and any function in

is non-singular and any function in  vanishes at the limits of this contour (for fixed

vanishes at the limits of this contour (for fixed  ). In particular, taking

). In particular, taking  , the integral

, the integral

is well defined for all  . It

can be checked that

. It

can be checked that

whence we may take  as our telescoper and

as our telescoper and  as our certificate. Integrating over

as our certificate. Integrating over  , it follows that

, it follows that

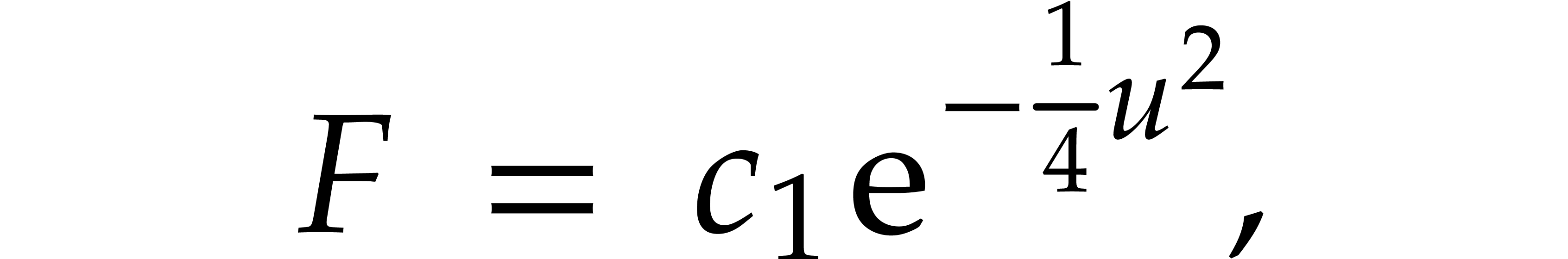

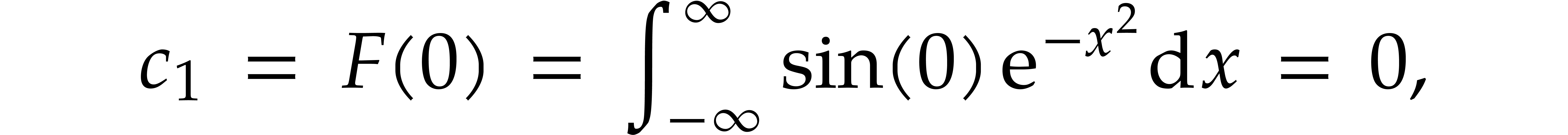

This equation admits a simple closed form solution

for some integration constant  .

In general, the computation of such integration constants is a difficult

problem that is well beyond the scope of this paper. For our particular

example, we have

.

In general, the computation of such integration constants is a difficult

problem that is well beyond the scope of this paper. For our particular

example, we have

whence  . We could have seen

this more directly by observing that the integrand

. We could have seen

this more directly by observing that the integrand  is an odd function in

is an odd function in  for all

for all  . On the other hand, a similar computation for

. On the other hand, a similar computation for

and

and

leads to

and

We have shown how relations of the form (2.6) can be used for the computation of parametric integrals (2.5). This leaves us with the question how to find such relations. Many different approaches have been proposed for this task and we refer to [12] for a historical overview. From now on we will focus on the reduction-based approach, which is fairly recent and has shown to be particularly efficient for various subclasses of holonomic functions.

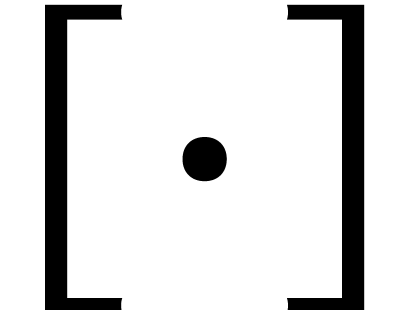

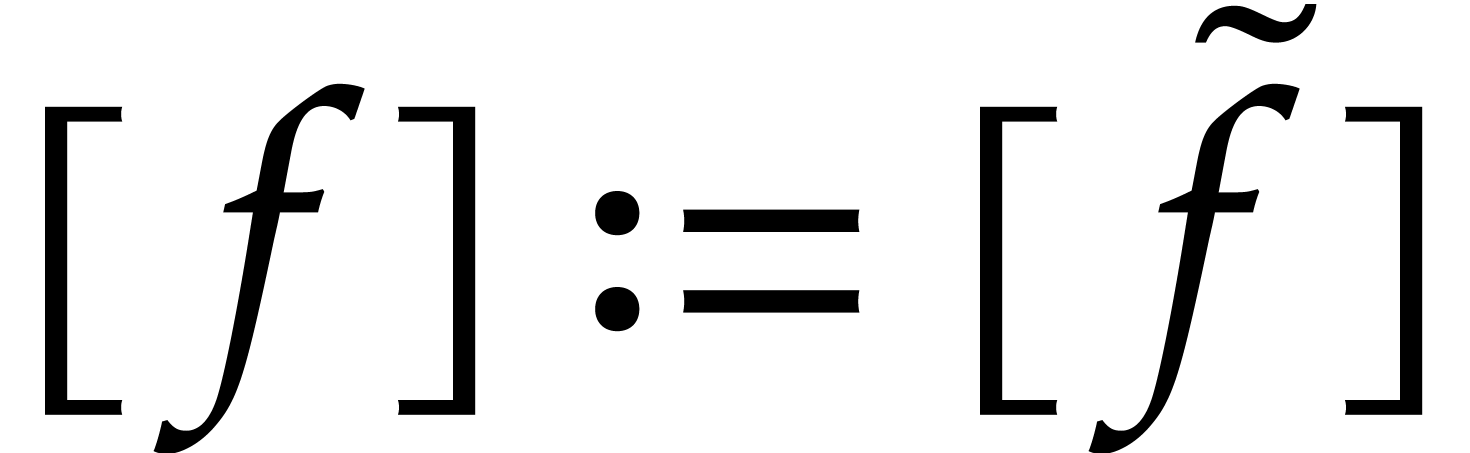

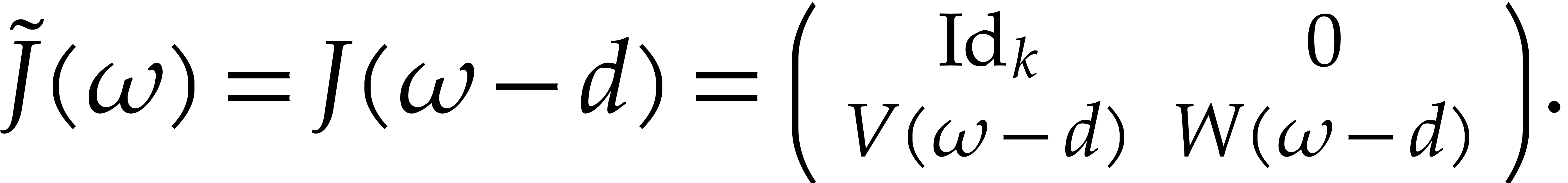

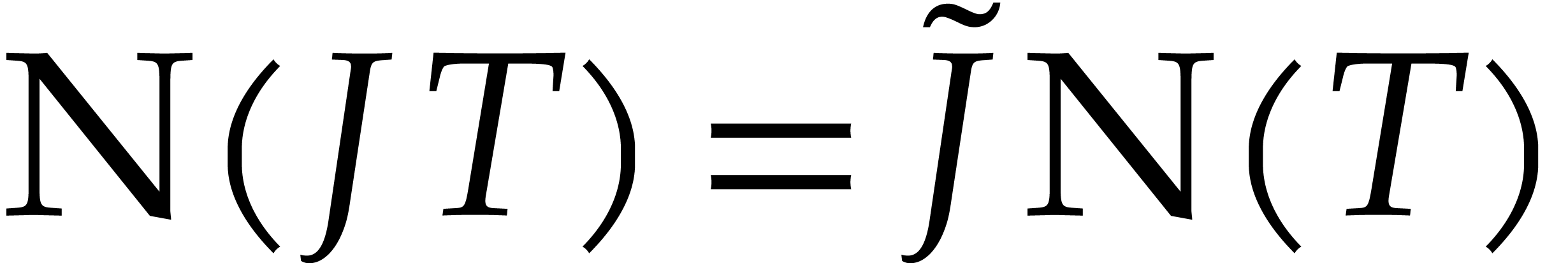

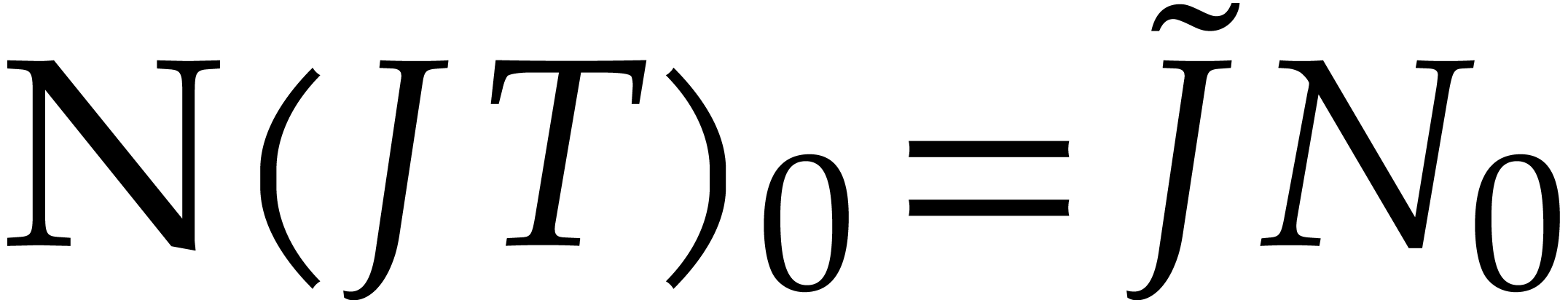

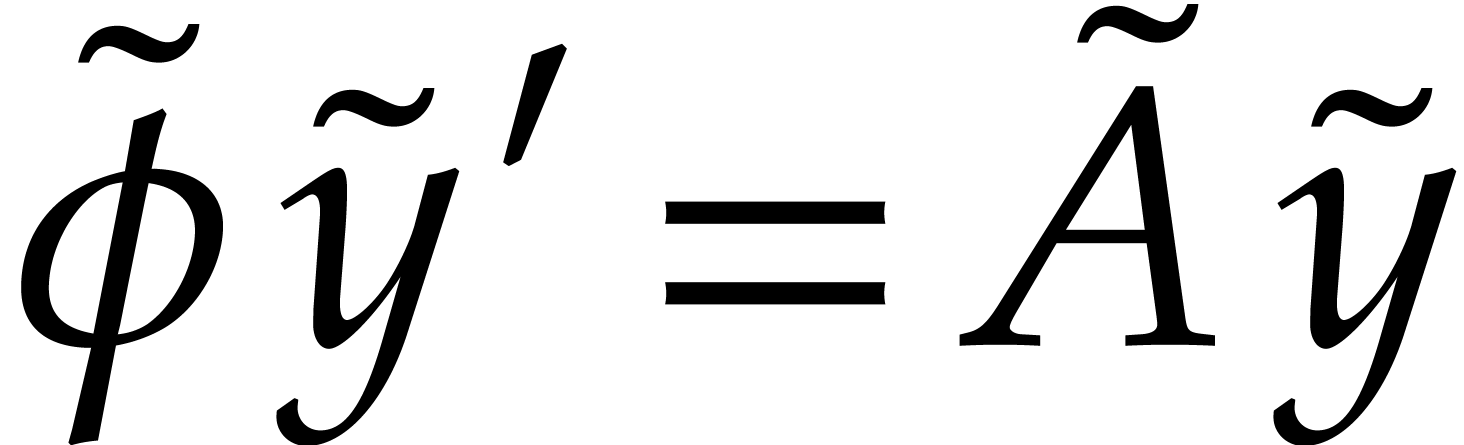

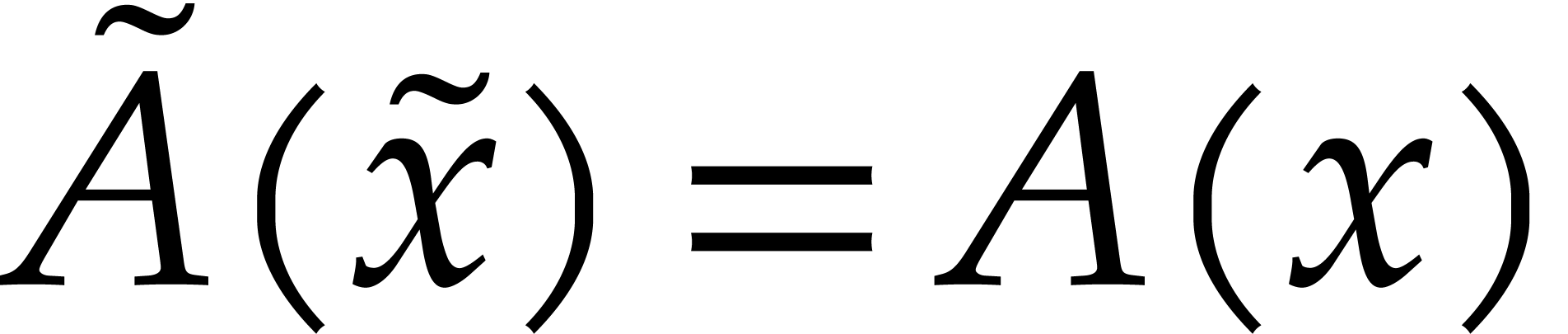

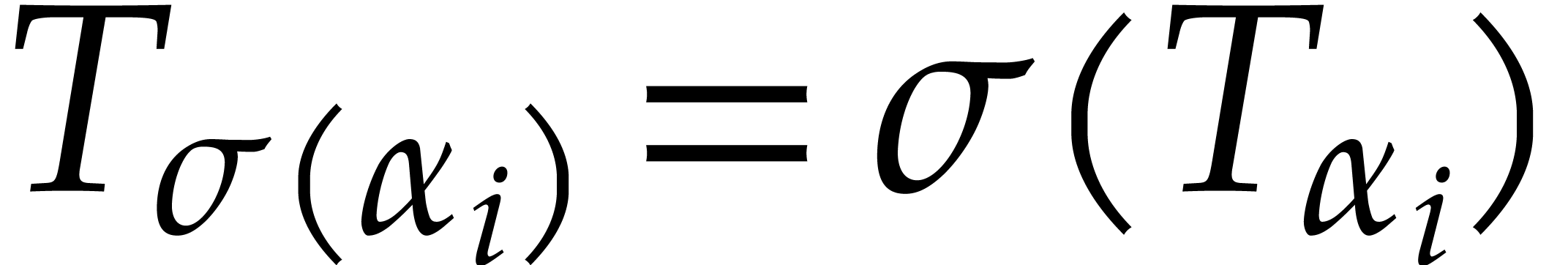

Notice that the first equation  of the system (2.3) is of the form (1.1), where we recall that

of the system (2.3) is of the form (1.1), where we recall that

. Now assume that we have a

computable confined reduction

. Now assume that we have a

computable confined reduction  .

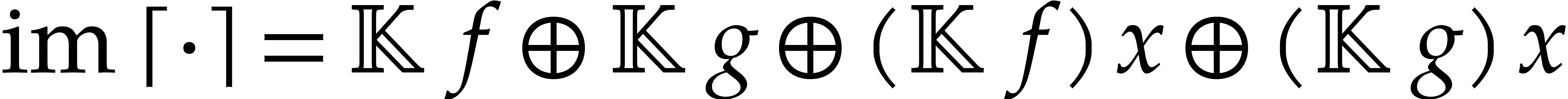

Then the functions in the sequence

.

Then the functions in the sequence  can all be

computed and they belong to a finite dimensional

can all be

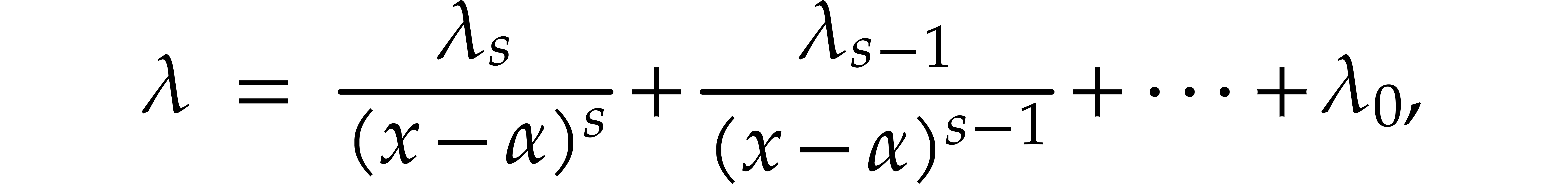

computed and they belong to a finite dimensional  -vector space

-vector space  .

Using linear algebra, this means that we can compute a relation

.

Using linear algebra, this means that we can compute a relation

|

(2.9) |

with  and

and  .

Taking

.

Taking

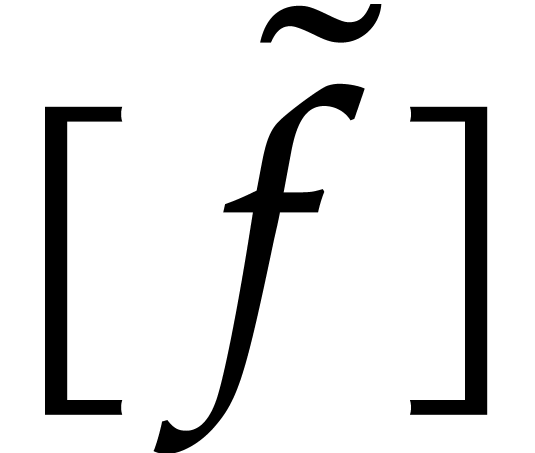

we thus obtain (2.6). If the relation (2.9)

has minimal order  and the reduction

and the reduction  is normal, then it can be shown [14,

Proposition 16] that there exist no relations of the form (2.6)

of order lower than

is normal, then it can be shown [14,

Proposition 16] that there exist no relations of the form (2.6)

of order lower than  .

.

One important property of reduction-based telescoping is that it allows

us to compute telescopers without necessarily computing the

corresponding certificates. In practice, it turns out that certificates

are often much larger than telescopers; this often explains the

efficiency of the reduction-based approach. Notice that the above

approach can easily be adapted to compute certificates as well, when

really needed: it suffices to require that the reduction procedure  also produces a

also produces a  with

with  . Given a relation (2.9)

and

. Given a relation (2.9)

and  with

with  ,

we indeed have

,

we indeed have  .

.

Example  .

Given

.

Given  , our algorithm to

compute

, our algorithm to

compute  proceeds by induction on

proceeds by induction on  . If

. If  ,

then we take

,

then we take  . Otherwise, we

may write

. Otherwise, we

may write  and

and  .

Setting

.

Setting

we have

whence

|

(2.10) |

is of the form  with

with  . By the induction hypothesis, we know how to

compute

. By the induction hypothesis, we know how to

compute  , so we can simply

take

, so we can simply

take  . It is easily verified

that

. It is easily verified

that  , again by induction on

, again by induction on

, so the reduction is

confined.

, so the reduction is

confined.

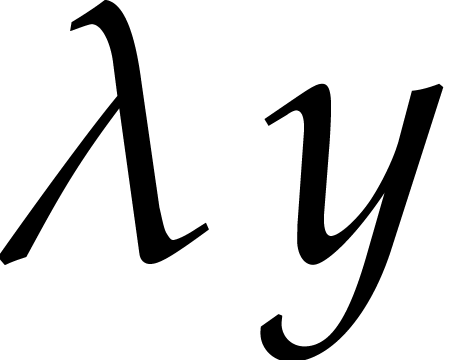

Applying our reduction to the functions  and

and  from Example 2.2, we find that

from Example 2.2, we find that

and

This leads to the desired relations (2.7) and (2.8).

Remark  is replaced by a finite number of

coordinates

is replaced by a finite number of

coordinates  and

and  satisfies an equation

satisfies an equation  with respect to each

coordinate

with respect to each

coordinate  (with suitable compatibility

constraints). Indeed, for each

(with suitable compatibility

constraints). Indeed, for each  ,

it suffices to compute the sequence

,

it suffices to compute the sequence  until we

find a relation

until we

find a relation  with

with  . For each

. For each  ,

this yields a non-trivial operator

,

this yields a non-trivial operator  with

with  .

.

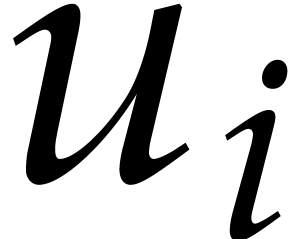

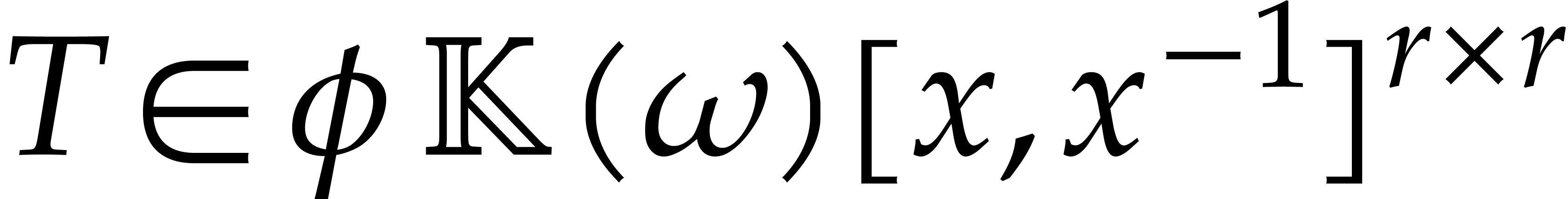

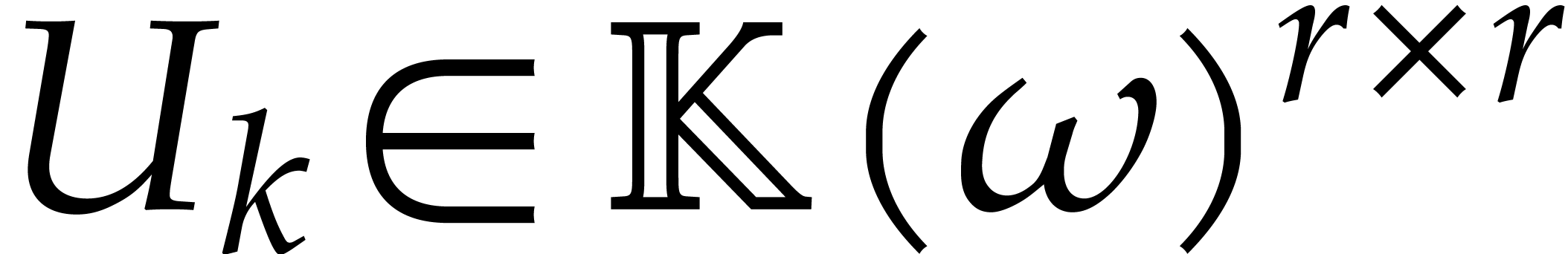

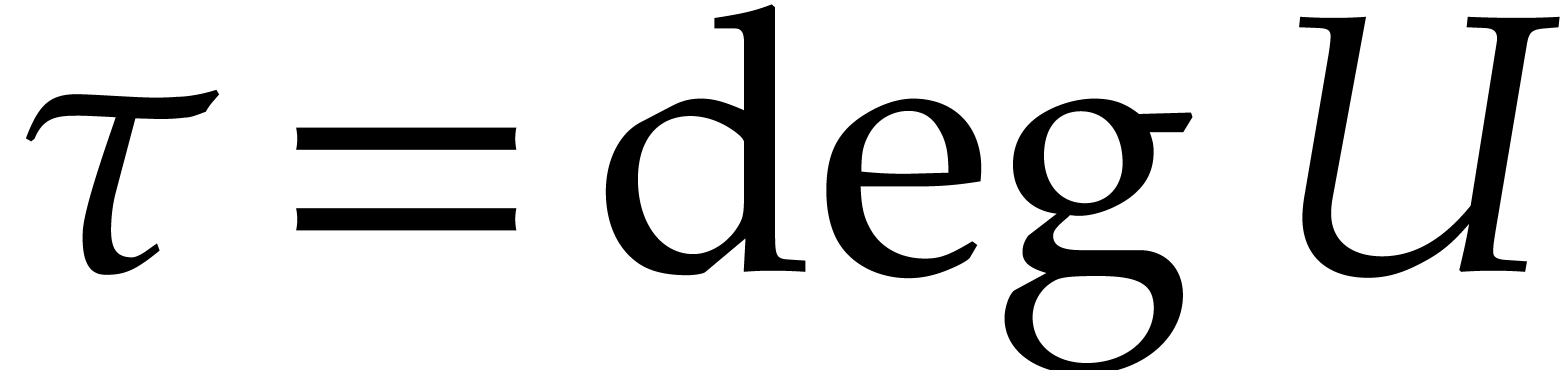

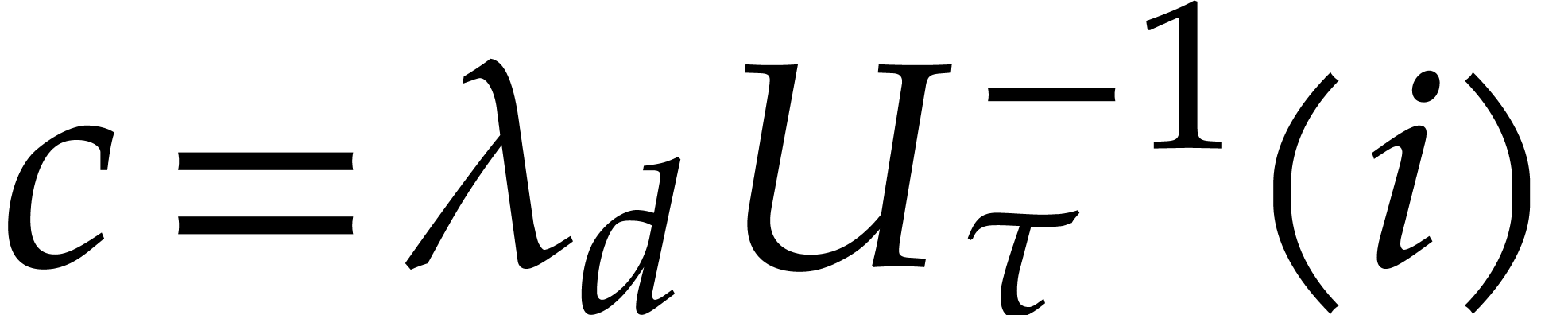

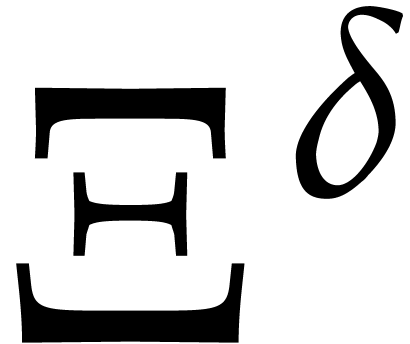

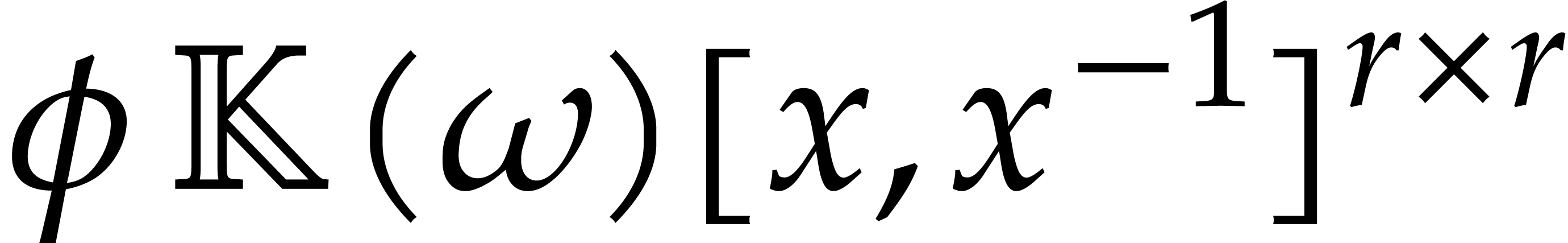

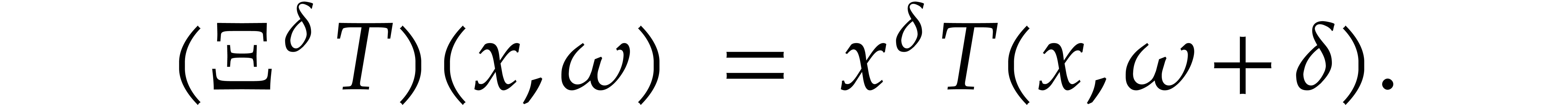

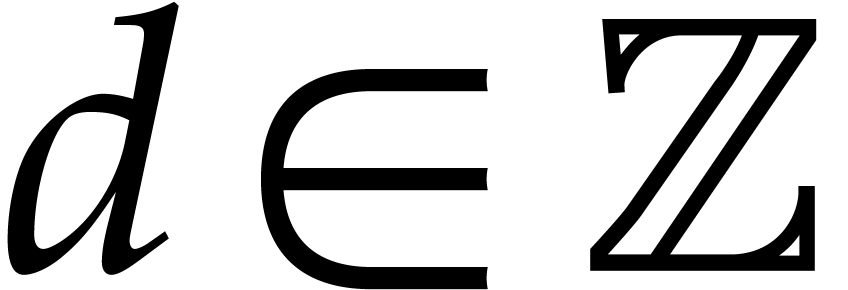

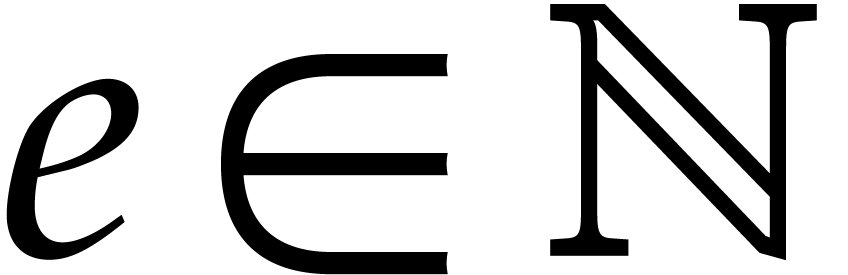

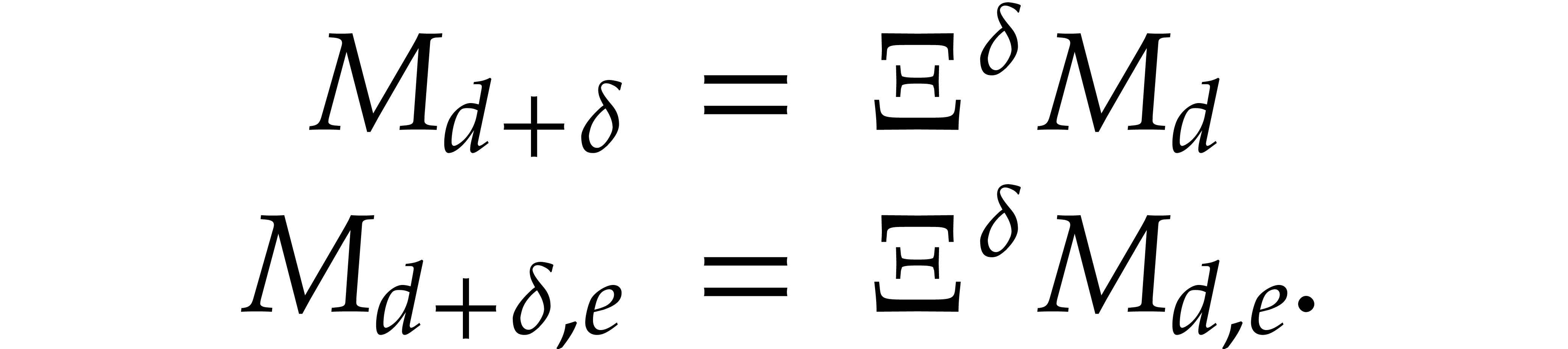

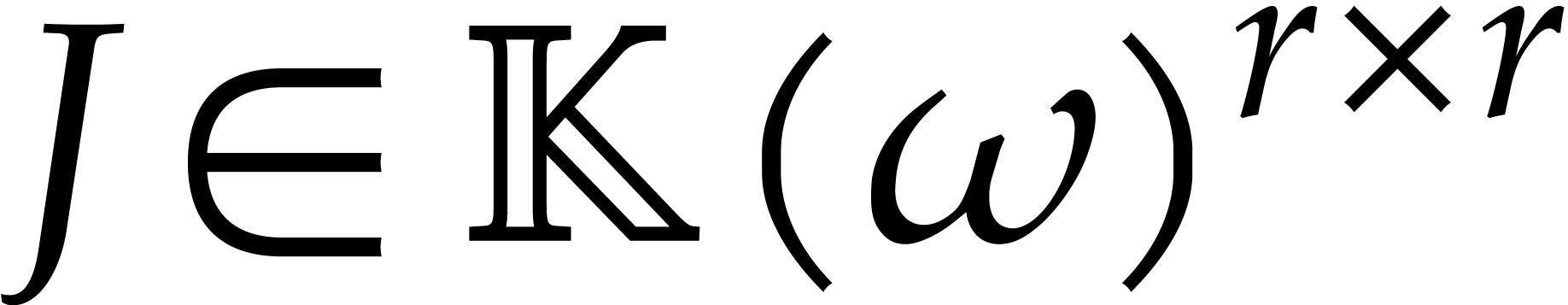

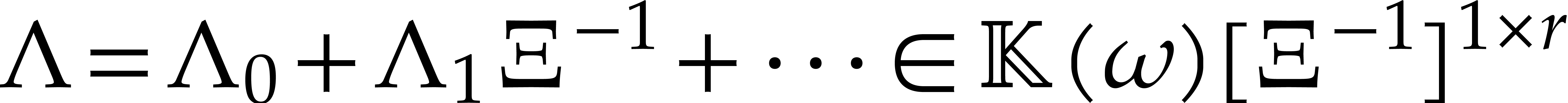

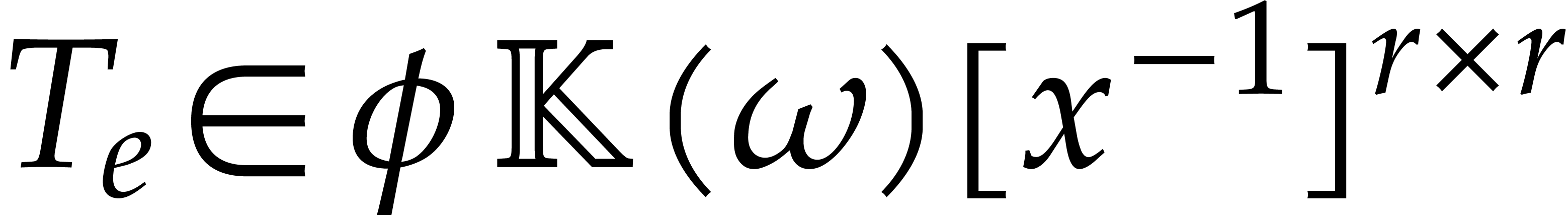

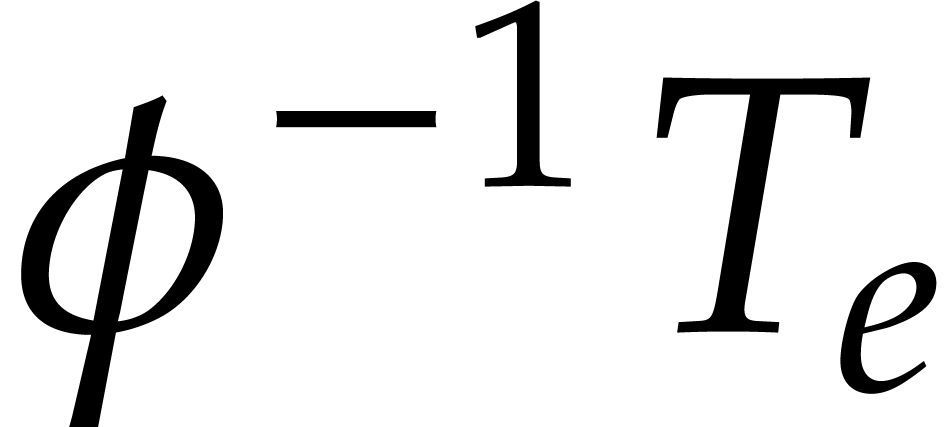

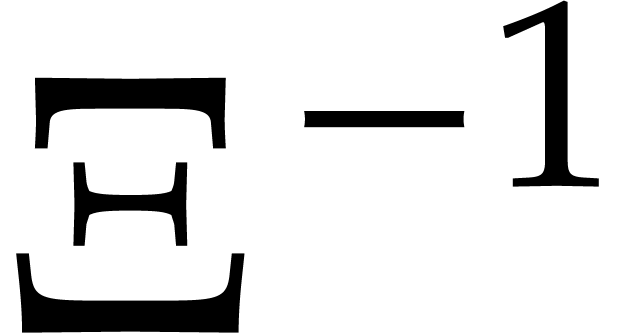

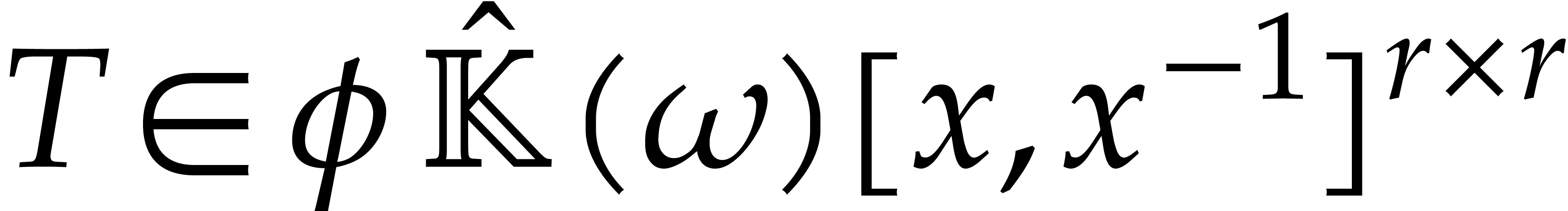

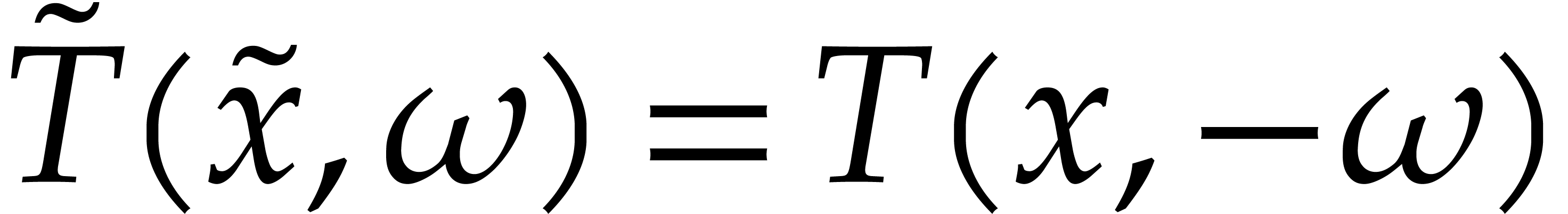

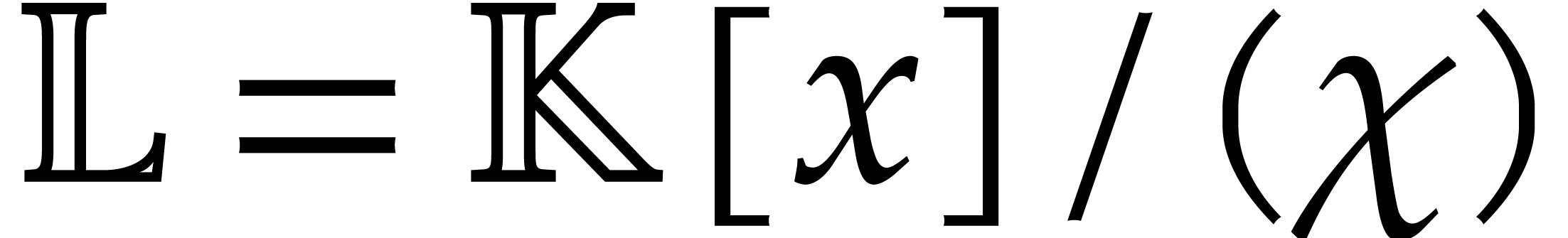

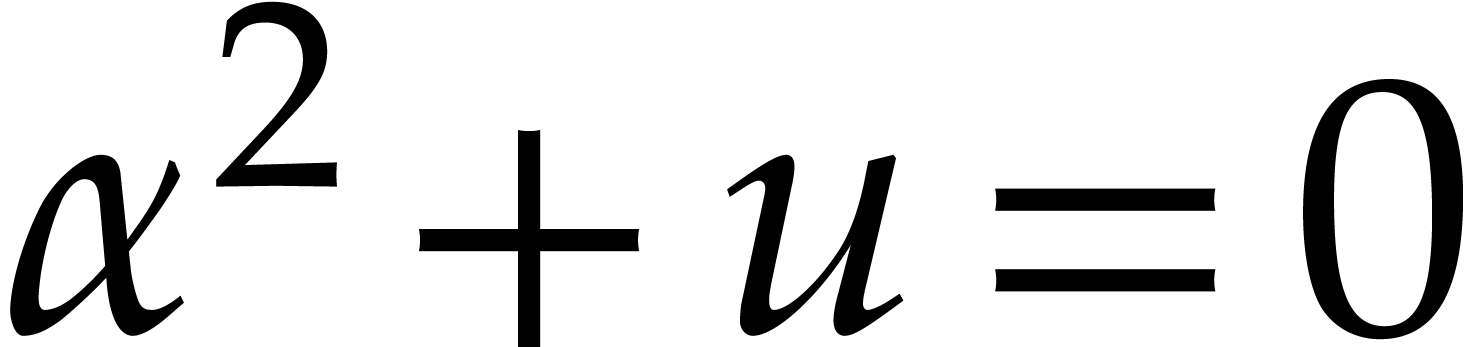

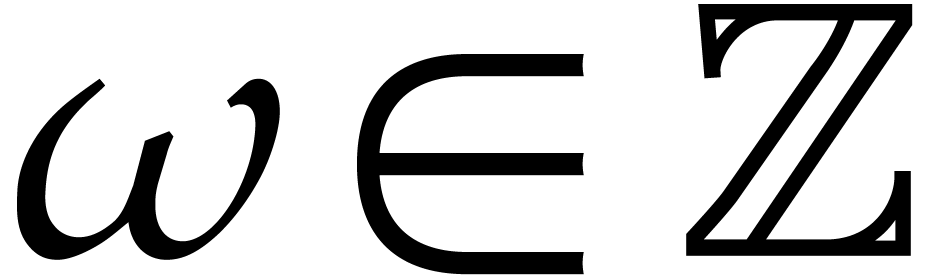

Let  , where

, where  and

and  are indeterminates. We view

are indeterminates. We view  as a parameter that takes integer values. We may regard

as a parameter that takes integer values. We may regard  as a Laurent polynomial with matrix coefficients

as a Laurent polynomial with matrix coefficients  :

:

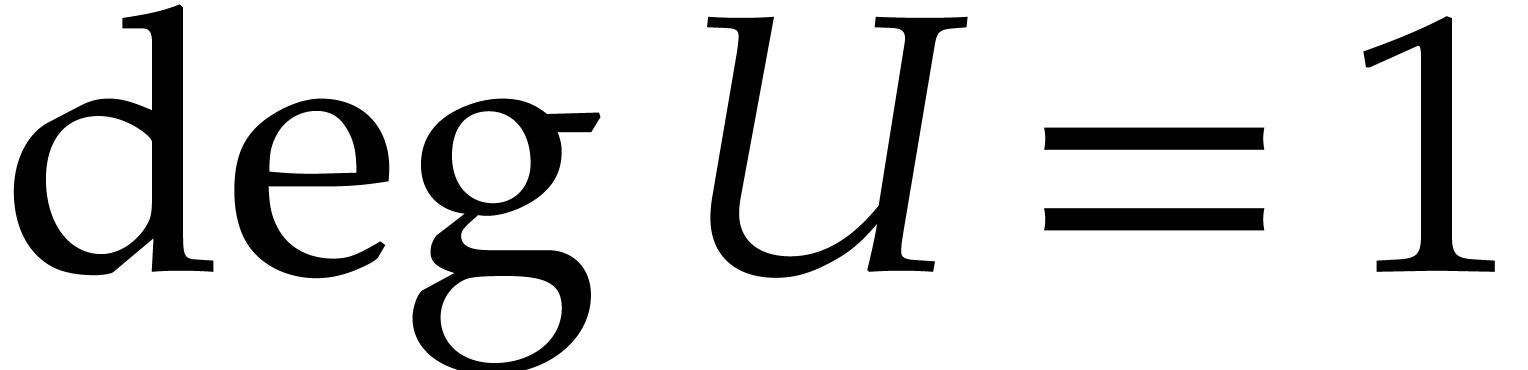

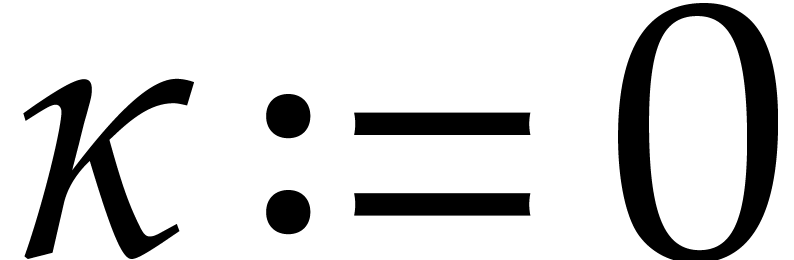

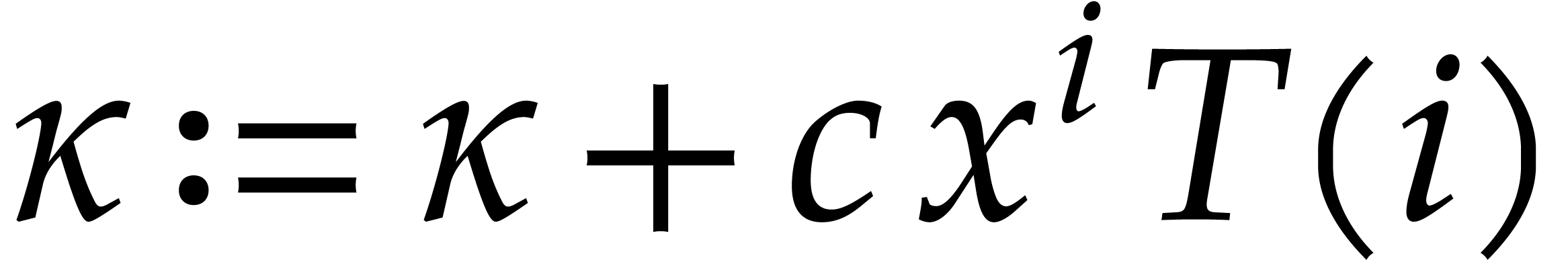

If  , then we denote

, then we denote  . Setting

. Setting

the equation (1.1) implies

for any constant matrix  . The

matrix

. The

matrix  can also be regarded as a Laurent

polynomial with matrix coefficients

can also be regarded as a Laurent

polynomial with matrix coefficients  .

We say that

.

We say that  is a head chopper for (1.1) if

is a head chopper for (1.1) if  is an invertible matrix.

is an invertible matrix.

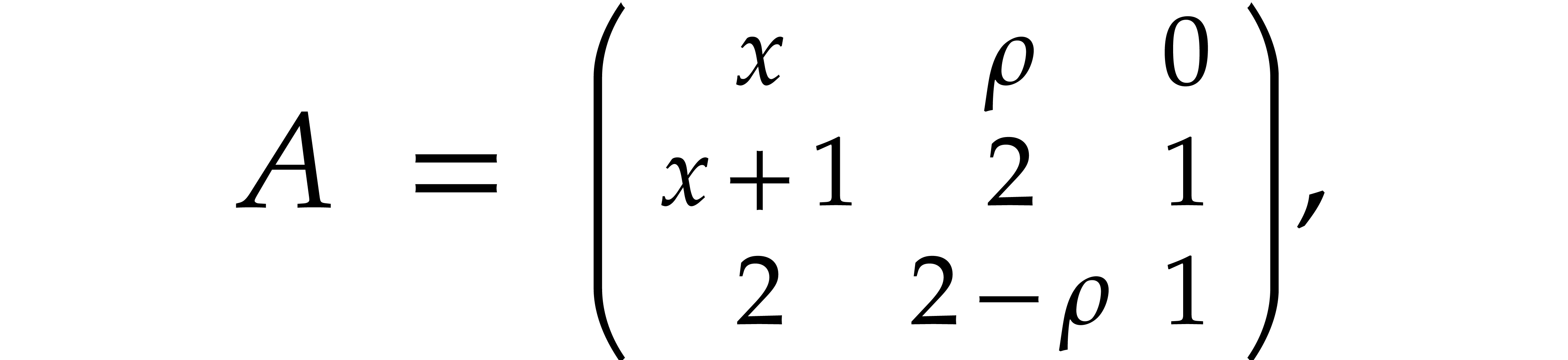

Example  and

and  as in Example 2.1, the identity matrix

as in Example 2.1, the identity matrix  is a head

chopper. Indeed, for this choice of

is a head

chopper. Indeed, for this choice of  ,

we obtain

,

we obtain

so  and

and  is invertible.

The matrix

is invertible.

The matrix  is also a head chopper, with

is also a head chopper, with

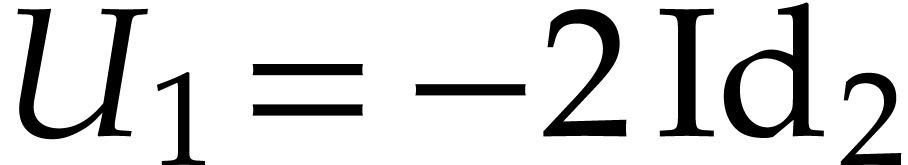

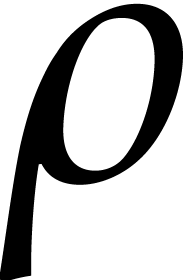

Example  and

and

for some formal parameter  .

Then we claim that

.

Then we claim that

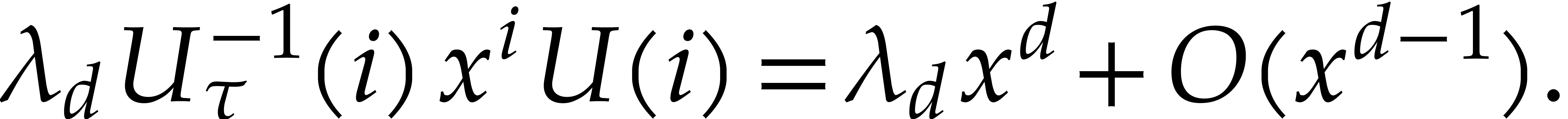

is a head chopper. Indeed, a straightforward computation yields

which shows that the leading coefficient of  as a

polynomial in

as a

polynomial in  is (formally) invertible.

is (formally) invertible.

Before studying the computation of head choppers, let us first show how

they can be used for the construction of so-called “head

reductions”, by generalizing the inductive construction from

Example 2.3. Let  be a head chopper

for (1.1) and assume in addition that

be a head chopper

for (1.1) and assume in addition that  and

and  . Given

. Given  -subvector spaces

-subvector spaces  and

and

of

of  ,

we say that a

,

we say that a  -linear map

-linear map

is a partial reduction for (1.1)

if

is a partial reduction for (1.1)

if  for all

for all  .

.

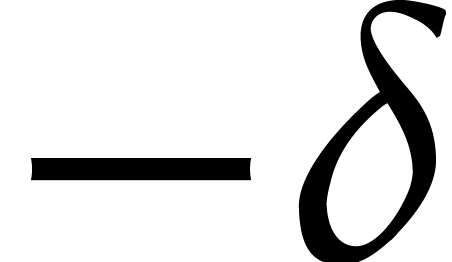

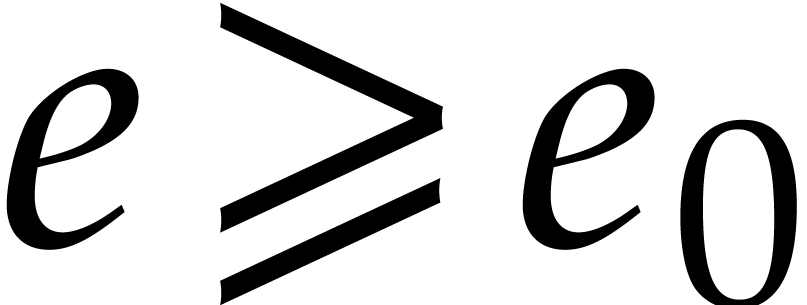

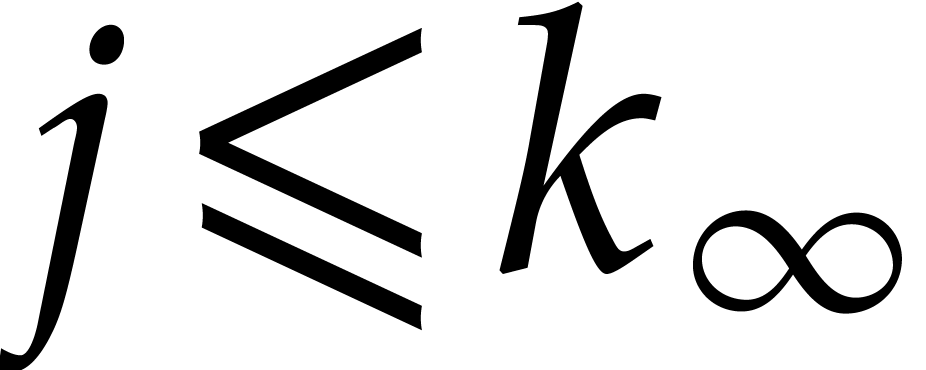

Let  . Writing

. Writing  with

with  and

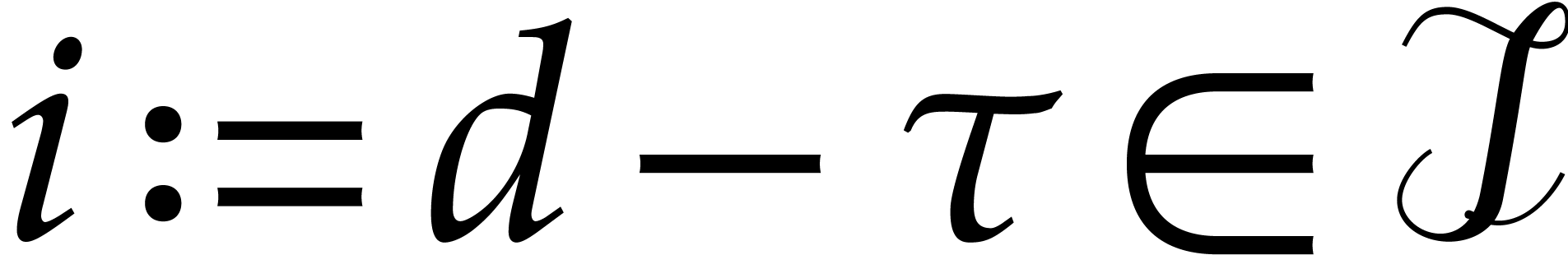

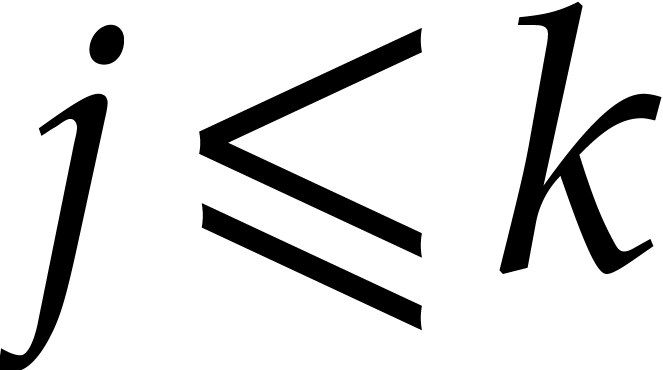

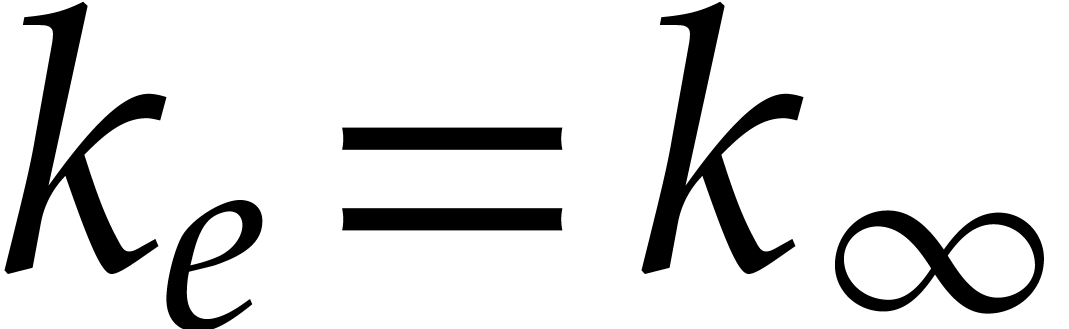

and  , we say that

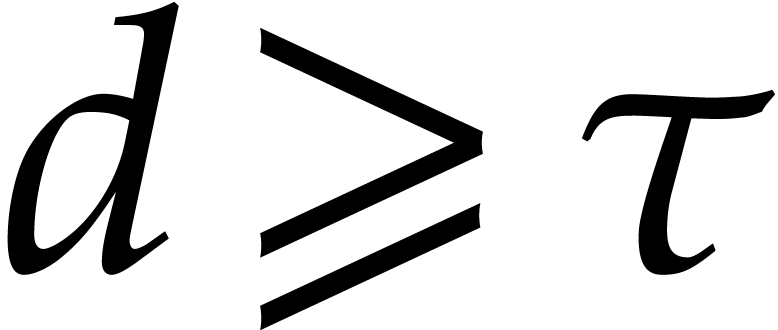

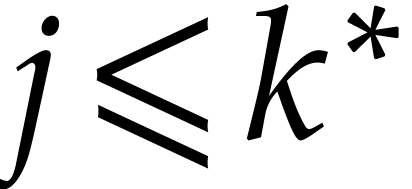

, we say that  is an

exceptional index if

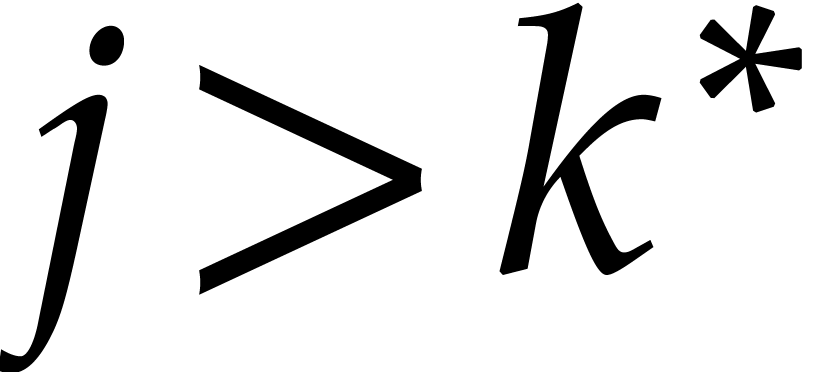

is an

exceptional index if  or

or  . Here we understand that

. Here we understand that  stands for the evaluation of

stands for the evaluation of  at

at  and similarly for

and similarly for  . We write

. We write

for the finite set of exceptional indices. If

for the finite set of exceptional indices. If

, then we notice that the

matrix

, then we notice that the

matrix  is invertible.

is invertible.

Any  can be regarded as a polynomial

can be regarded as a polynomial  in

in  . Given

. Given

, let

, let

If  and

and  ,

then recall that the matrix

,

then recall that the matrix  is invertible. We

may thus define the

is invertible. We

may thus define the  -linear

mapping

-linear

mapping  by

by

We indeed have  , since

, since

The mapping  also induces a mapping

also induces a mapping  that we will still denote by

that we will still denote by  .

Setting

.

Setting  , the relation (3.3) yields

, the relation (3.3) yields

This shows that the mapping  is a partial

reduction. If

is a partial

reduction. If  and

and  ,

then we have

,

then we have  and the identity map

and the identity map  is clearly a partial reduction as well.

is clearly a partial reduction as well.

Now we observe that compositions of partial reductions are again partial

reductions. For each  , we

thus have a partial reduction

, we

thus have a partial reduction

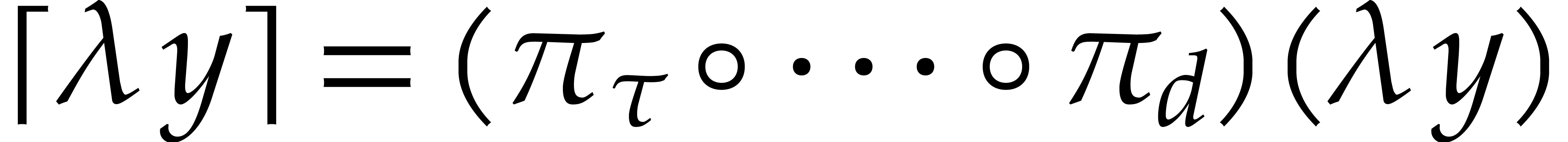

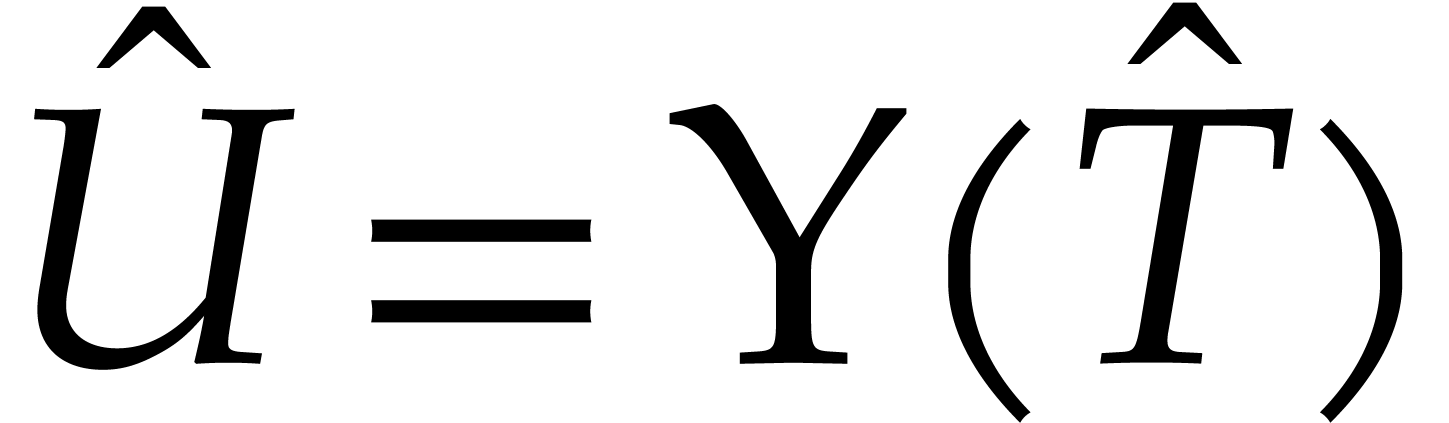

Now let  be the unique mapping with

be the unique mapping with  for all

for all  and

and  . Then

. Then  is clearly a partial

reduction as well and it admits a finite dimensional image

is clearly a partial

reduction as well and it admits a finite dimensional image  . For any

. For any  ,

we call

,

we call  the head reduction of

the head reduction of  . The following straightforward

algorithm allows us to compute head reductions:

. The following straightforward

algorithm allows us to compute head reductions:

Algorithm HeadReduce

Input:

Output: the head-reduction |

repeat

if

Let

|

Example  and

and  be as in Examples 2.1,

2.2, 2.3 and 3.1. Taking the head

chopper

be as in Examples 2.1,

2.2, 2.3 and 3.1. Taking the head

chopper  with

with  as in (3.5), we get

as in (3.5), we get

for all  and

and  .

In other words,

.

In other words,  coincides with

coincides with  from (2.10), so the head reduction procedure coincides with

the reduction procedure from Example 2.3, except that we

directly reduce any

from (2.10), so the head reduction procedure coincides with

the reduction procedure from Example 2.3, except that we

directly reduce any  with

with  to itself (we have

to itself (we have  and

and  ). The fact that we may actually reduce elements

). The fact that we may actually reduce elements

with

with  somewhat further is

due to the fact that the coefficient of

somewhat further is

due to the fact that the coefficient of  in (3.4) vanishes for

in (3.4) vanishes for  .

Indeed, this means that the matrix

.

Indeed, this means that the matrix  from (3.4) actually evaluates to a polynomial in

from (3.4) actually evaluates to a polynomial in  at

at  , so we may use it instead

of the matrix from (3.5) as a head chopper.

, so we may use it instead

of the matrix from (3.5) as a head chopper.

Example  and

and  be as in Example 3.2, with

be as in Example 3.2, with  . Taking

. Taking

and

and  as in (3.6)

and (3.7), we obtain

as in (3.6)

and (3.7), we obtain  ,

,

, and

, and

For  , we note that

, we note that

Let us show how  and

and  can

be used to compute the head-chopper of

can

be used to compute the head-chopper of  ,

where

,

where

Applying  to

to  ,

we find that

,

we find that  is maximal with

is maximal with  , so we set

, so we set

We repeat the loop for the new value of  ,

which yields

,

which yields

Repeating the loop once more, we obtain

At this point, we have  , so

we have obtained the head reduction of the original

, so

we have obtained the head reduction of the original  .

.

Example  ,

,  ,

,  , and

, and

be as in Example 3.2 and

be as in Example 3.2 and  . Taking

. Taking

we observe that  , whence any

element of

, whence any

element of  is head-reduced. Even for equations

of bounded degree and order, this means that head reduced elements

is head-reduced. Even for equations

of bounded degree and order, this means that head reduced elements  can have arbitrarily large degree.

can have arbitrarily large degree.

Remark  with

with  . Indeed, it suffices to start with

. Indeed, it suffices to start with  and accumulate

and accumulate  at the end of the

main loop.

at the end of the

main loop.

Remark  has one row. In fact, the same algorithm works

for matrices

has one row. In fact, the same algorithm works

for matrices  with an arbitrary number of rows

with an arbitrary number of rows

. This allows for the

simultaneous head reduction of several elements in

. This allows for the

simultaneous head reduction of several elements in  , something that might be interesting for the

application to creative telescoping.

, something that might be interesting for the

application to creative telescoping.

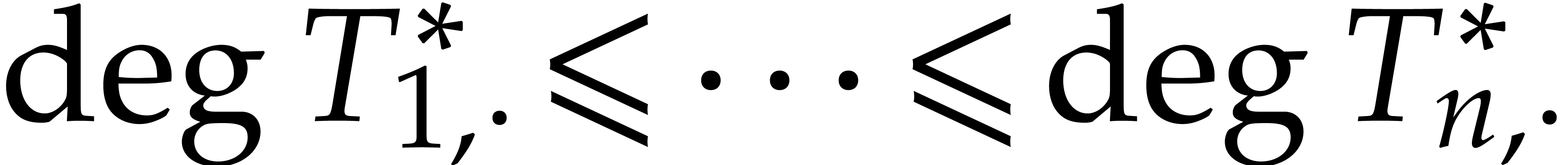

The computation of head choppers essentially boils down to linear algebra. We will rely on the concept of “row swept forms”. This notion is very similar to the more traditional row echelon forms, but there are a few differences that are illustrated in Example 4.1 below.

Let  be a matrix and denote the

be a matrix and denote the  -th row of

-th row of  by

by  . Assuming that

. Assuming that  , its leading index

, its leading index  is the smallest index

is the smallest index  with

with  . We say that

. We say that  is in

row swept form if there exists a

is in

row swept form if there exists a  such

that

such

that  and

and  for all

for all  . Notice that

. Notice that  has rank

has rank  in this case.

in this case.

An invertible matrix  such that

such that  is in row swept form will be called a row sweeper for

is in row swept form will be called a row sweeper for  . We may compute such a matrix

. We may compute such a matrix  using the routine RowSweeper below,

which is really a variant of Gaussian elimination. Whenever we apply

this routine to a matrix

using the routine RowSweeper below,

which is really a variant of Gaussian elimination. Whenever we apply

this routine to a matrix  such that the truncated

matrix

such that the truncated

matrix  with rows

with rows  is in

row swept form, we notice that these first

is in

row swept form, we notice that these first  rows

are left invariant by the row sweeping process. In other words, the

returned row sweeper

rows

are left invariant by the row sweeping process. In other words, the

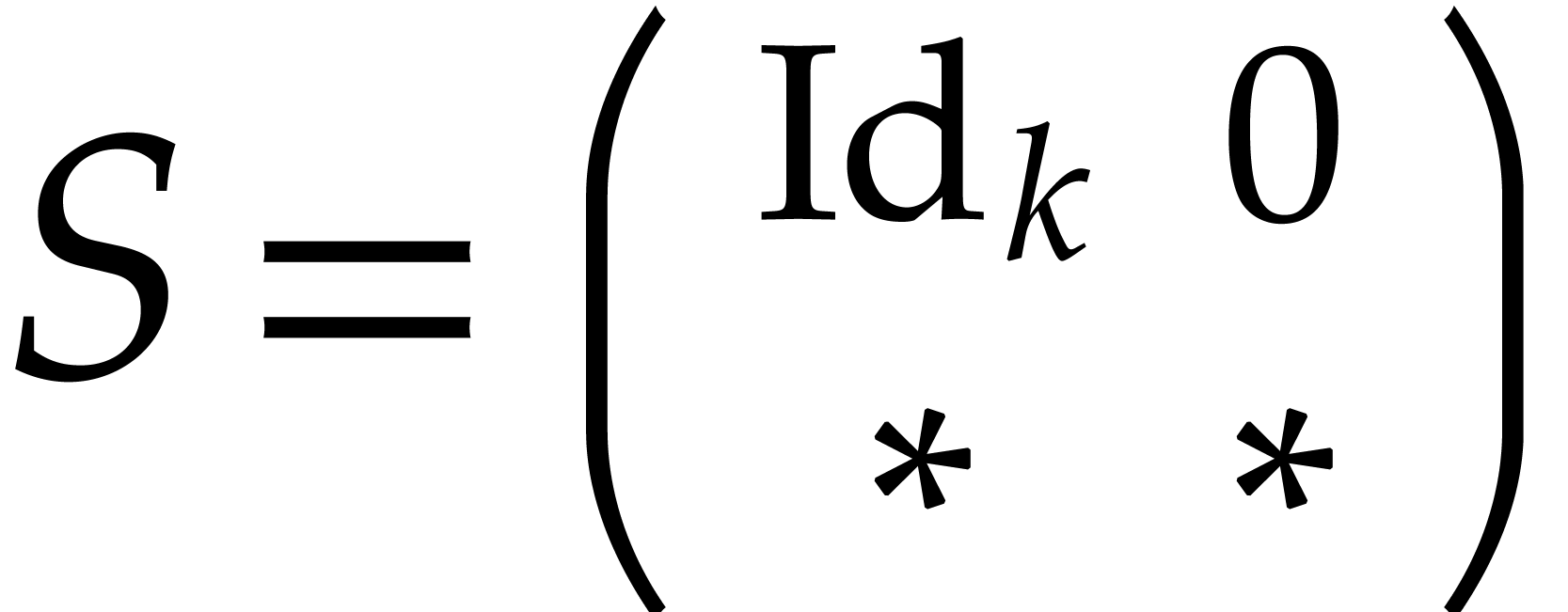

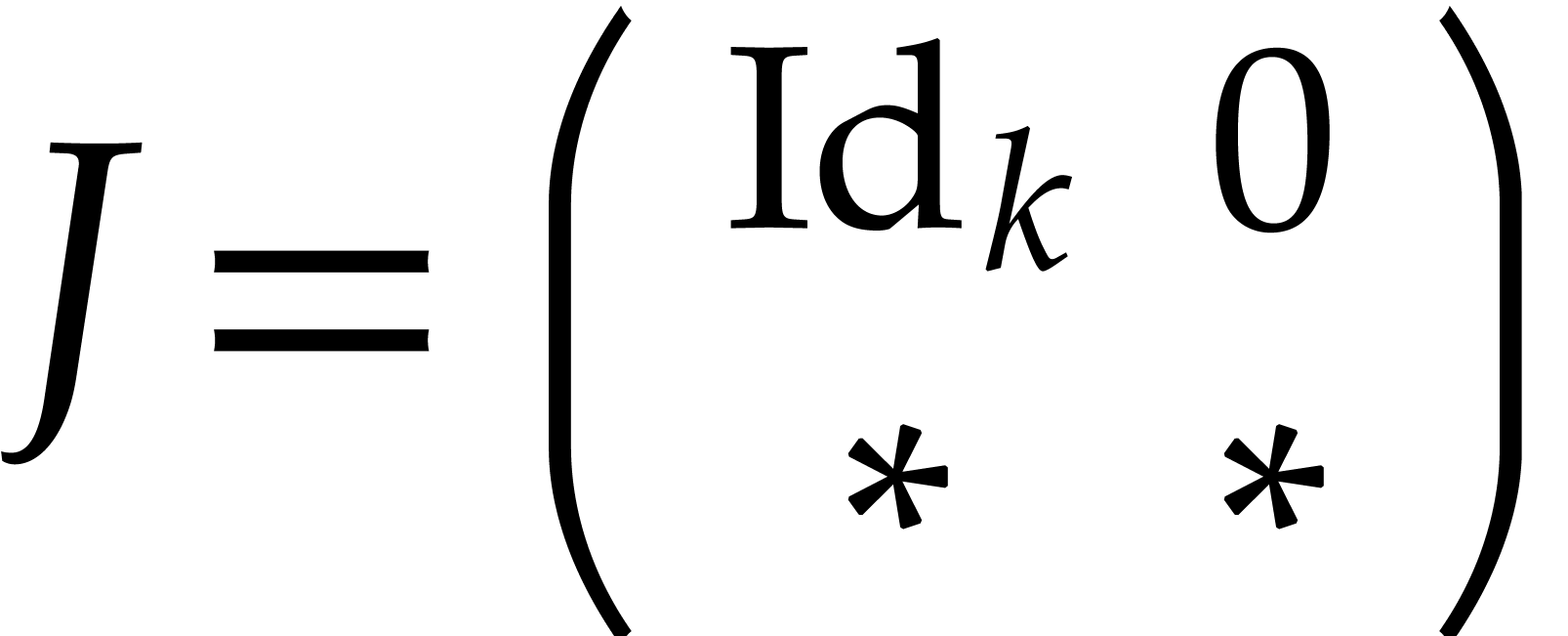

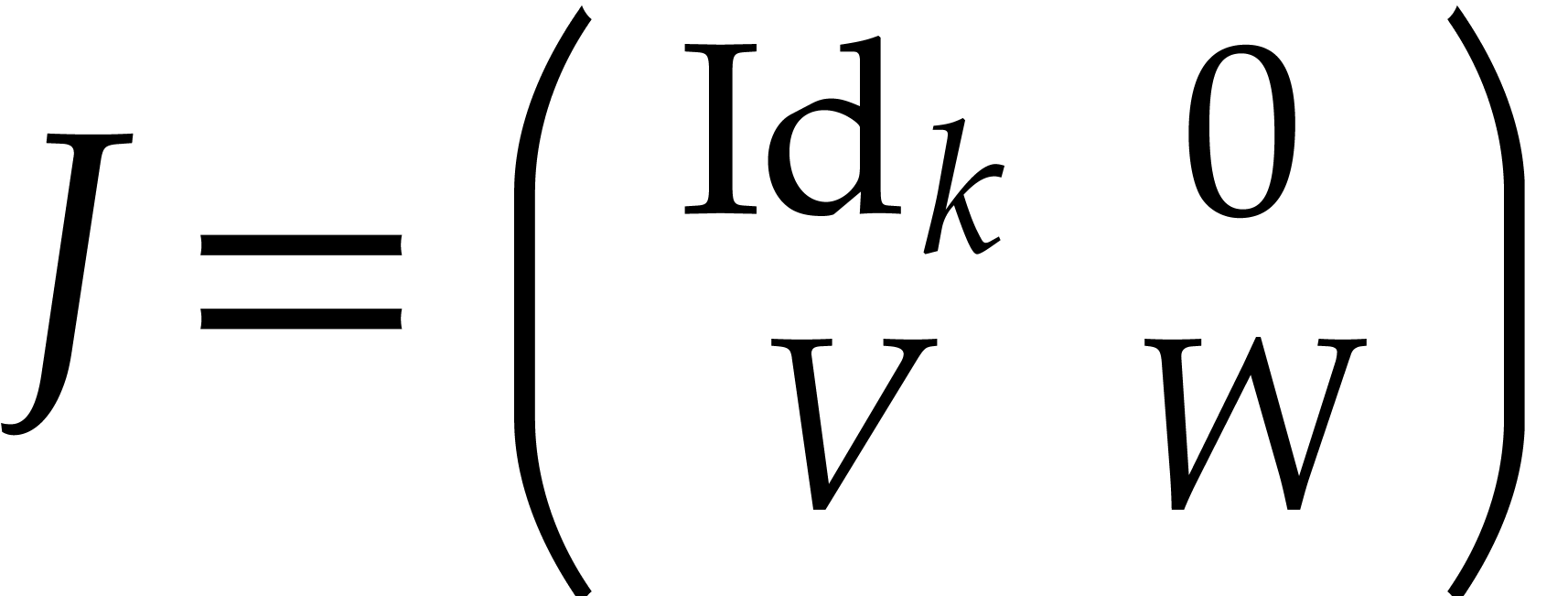

returned row sweeper  is of the form

is of the form  . If, in addition, the matrix

. If, in addition, the matrix  has rank

has rank  , then

, then  is of the form

is of the form  .

.

Algorithm RowSweeper

Input: a matrix

Output: a row sweeper |

for

if

Let

Swap the

for

return |

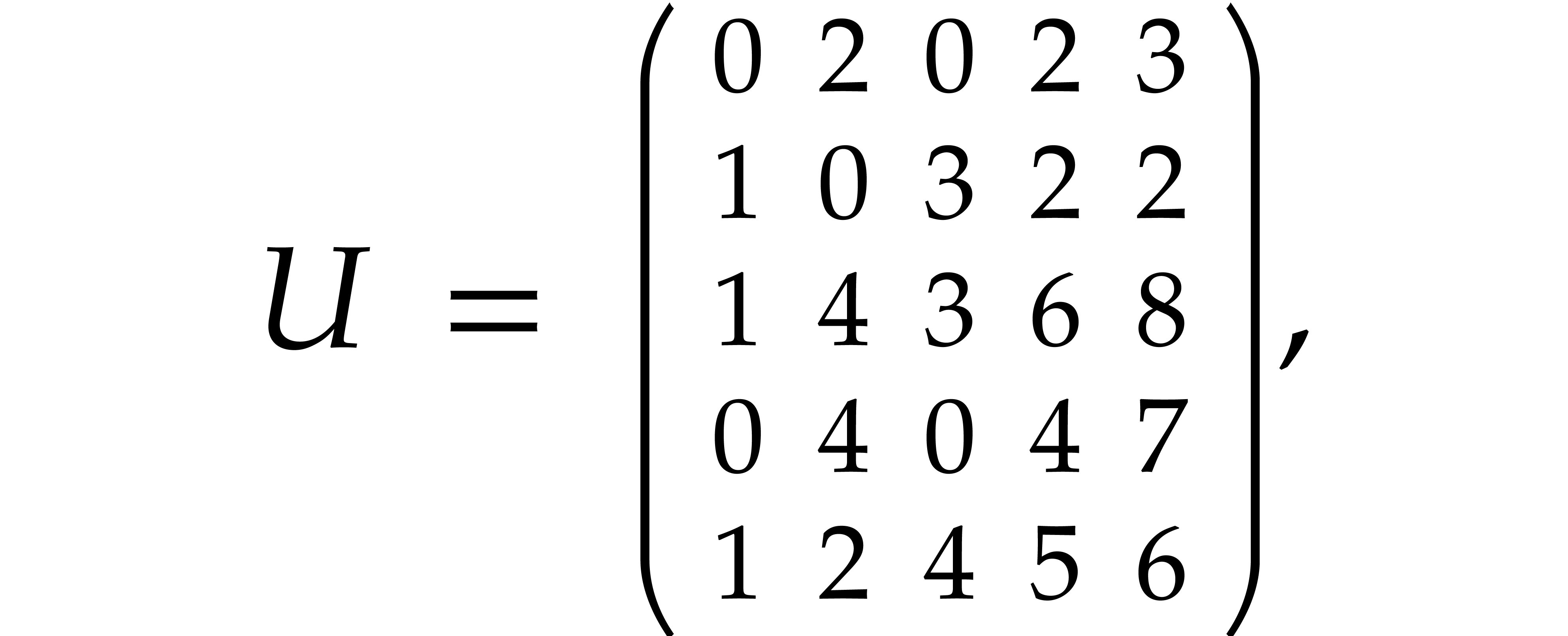

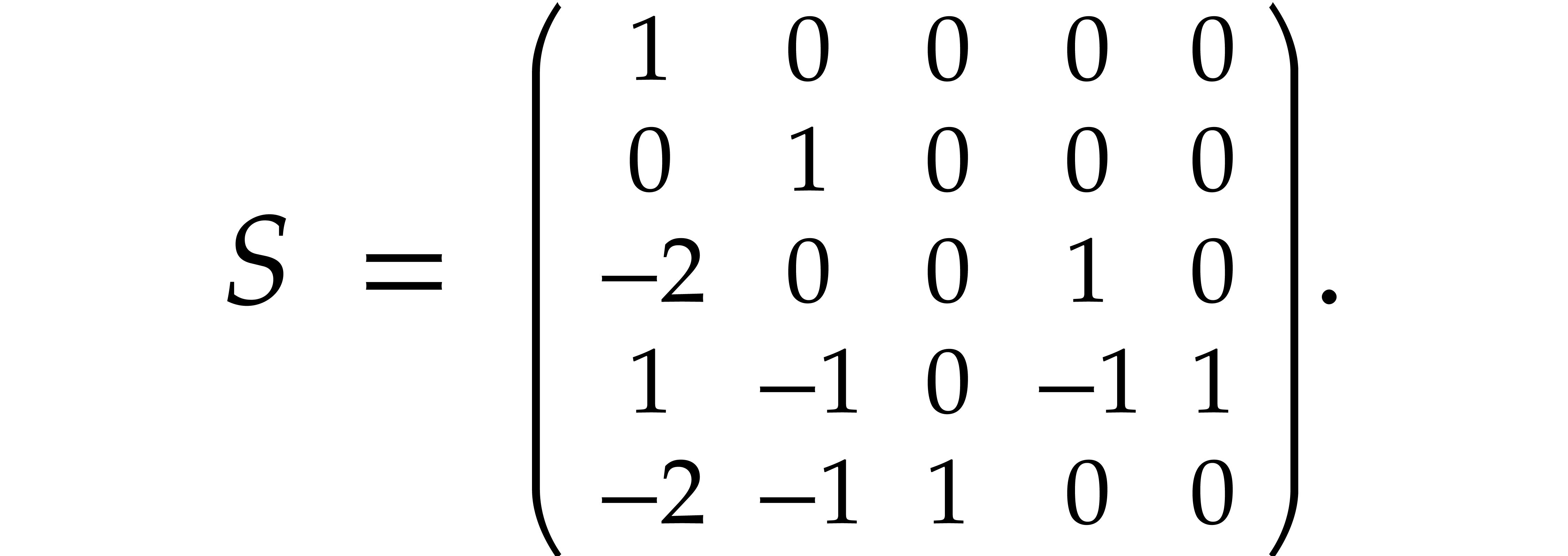

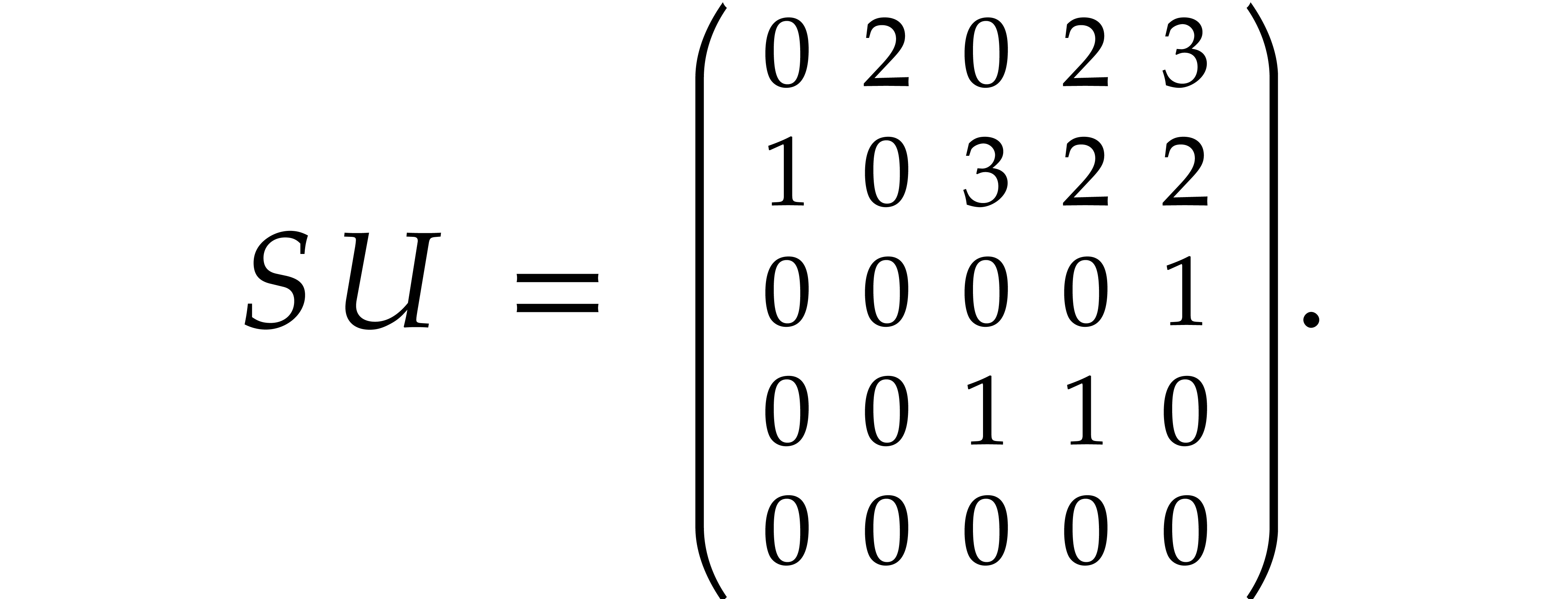

the algorithm RowSweeper produces the row sweeper

Since the two first rows of  were already in row

swept form, this matrix is indeed of the form

were already in row

swept form, this matrix is indeed of the form  . The resulting row swept form

. The resulting row swept form  for

for  is

is

The more traditional row echelon and reduced row echelon forms insist on

moving the rows  for which

for which  is minimal to the top, so the first two rows are not left invariant. The

different “normal” forms that we obtain for our example

matrix

is minimal to the top, so the first two rows are not left invariant. The

different “normal” forms that we obtain for our example

matrix  are shown below:

are shown below:

In Example 3.1 we already pointed out that head choppers

are generally not unique. Let us now study some transformations that

allow us to produce new head choppers from known ones; this will provide

us with useful insights for the general construction of head choppers.

For any  , we define the

operator

, we define the

operator  on

on  by

by

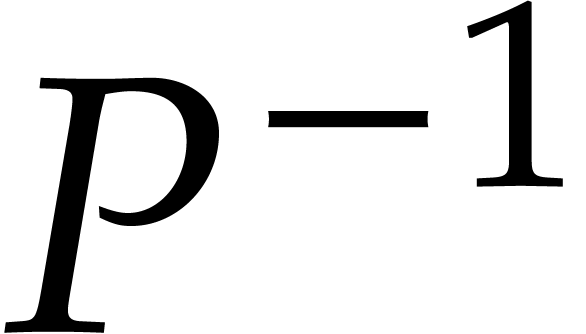

Proof. Setting  ,

,

and

and  ,

we have

,

we have

In other words,  .

.

Proof. Assume that  is a

head chopper for (1.1). Setting

is a

head chopper for (1.1). Setting  and

and

, we have

, we have  and

and  is invertible. Similarly, setting

is invertible. Similarly, setting  and

and  , we have

, we have

, whence

, whence  is invertible. The opposite directions follow by taking

is invertible. The opposite directions follow by taking  and

and  in the roles of

in the roles of  and

and

.

.

Given a head chopper  for (1.1) and

for (1.1) and

, let

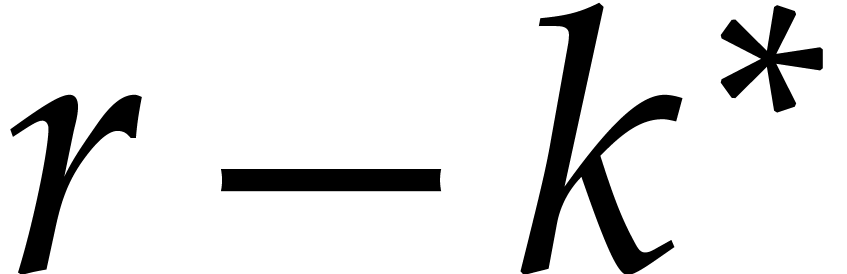

, let  be minimal such that

be minimal such that  or

or  . Then

. Then  is also maximal with

the property that

is also maximal with

the property that  and

and  . From Proposition 5.2(a) it

follows that

. From Proposition 5.2(a) it

follows that  is again a head chopper for (1.1). Without loss of generality, this allows us to make the

additional assumption that

is again a head chopper for (1.1). Without loss of generality, this allows us to make the

additional assumption that  at the beginning of

subsection 3.2.

at the beginning of

subsection 3.2.

In order to compute head choppers by induction, it will be convenient to

introduce a partial variant of this concept. First of all, we notice

that the equations (3.1–3.3) and

Proposition 5.1 generalize to the case when  , where

, where  is arbitrary.

Notice also that

is arbitrary.

Notice also that  , where

, where

. Given

. Given  and

and  , let

, let

It is easy to see that both  and

and  are

are  -modules.

-modules.

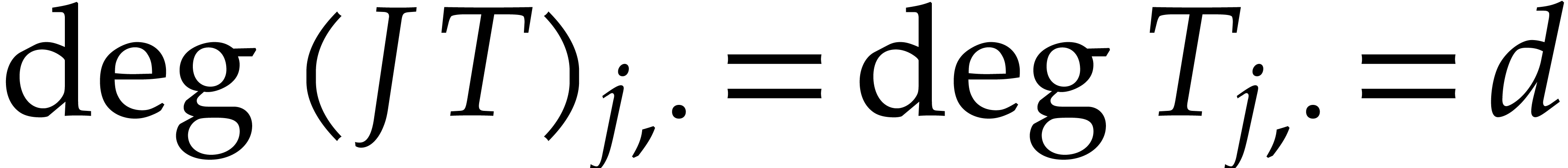

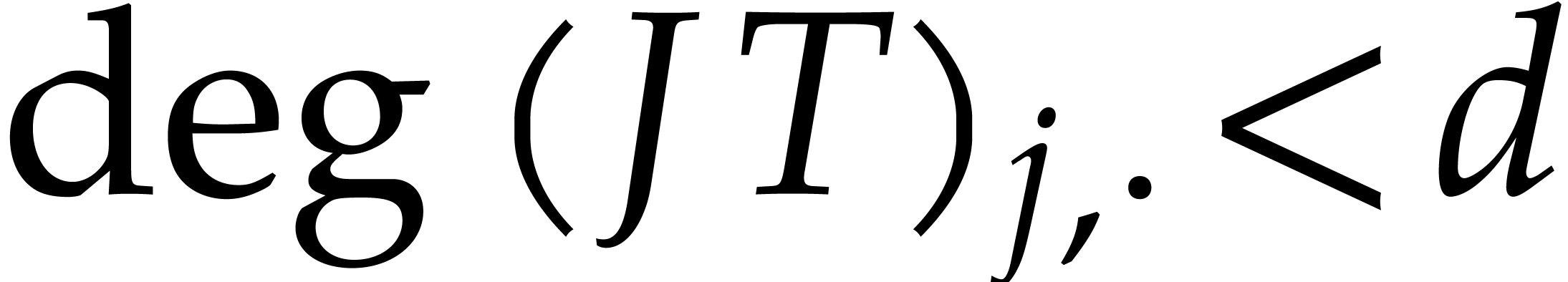

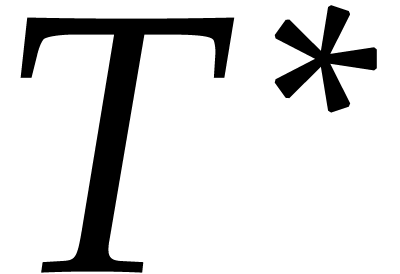

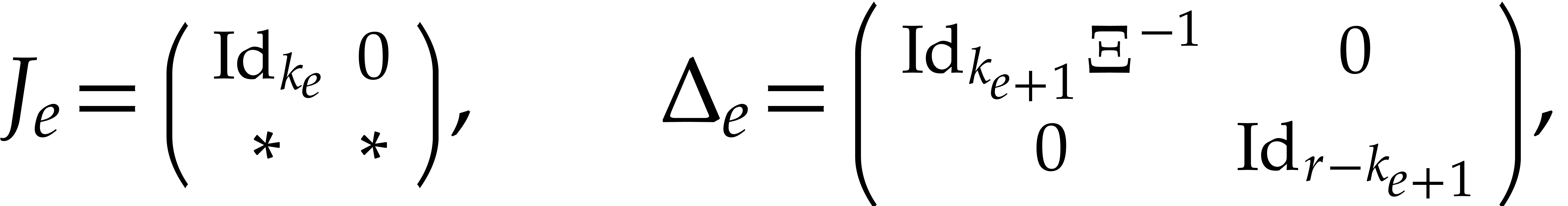

Now consider a matrix  with rows

with rows  ordered by increasing degree

ordered by increasing degree

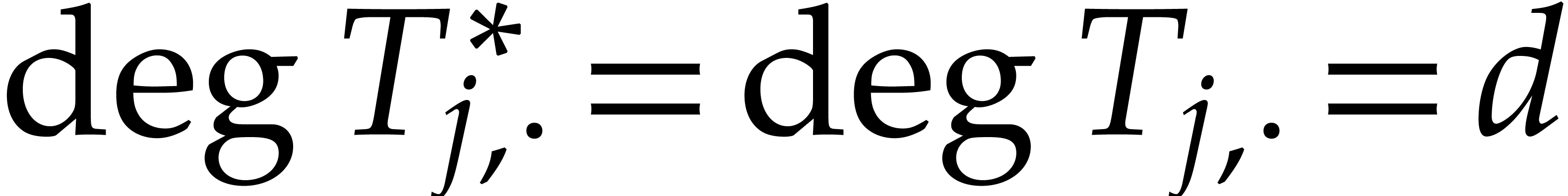

Let  , let

, let  be the matrix with rows

be the matrix with rows  , and

let

, and

let  be maximal such that

be maximal such that  . We say that

. We say that  is a

is a  -head annihilator for (1.1) if the following conditions are satisfied:

-head annihilator for (1.1) if the following conditions are satisfied:

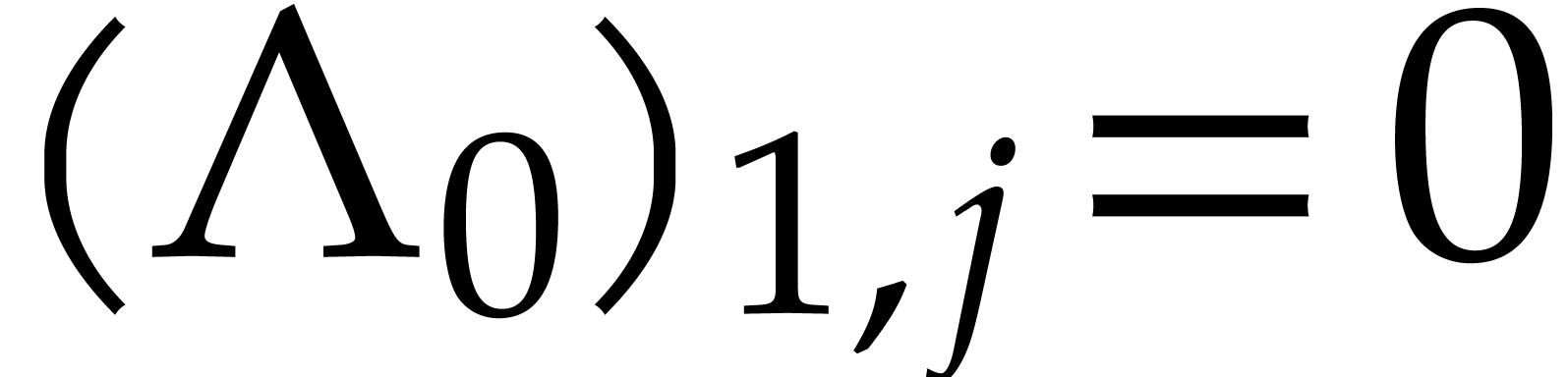

The rows of  form a basis for the

form a basis for the  -module

-module  ;

;

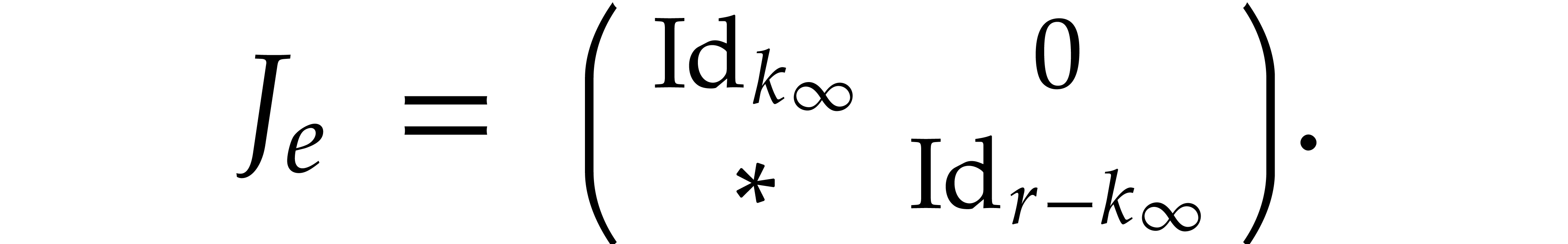

The matrix  is invertible;

is invertible;

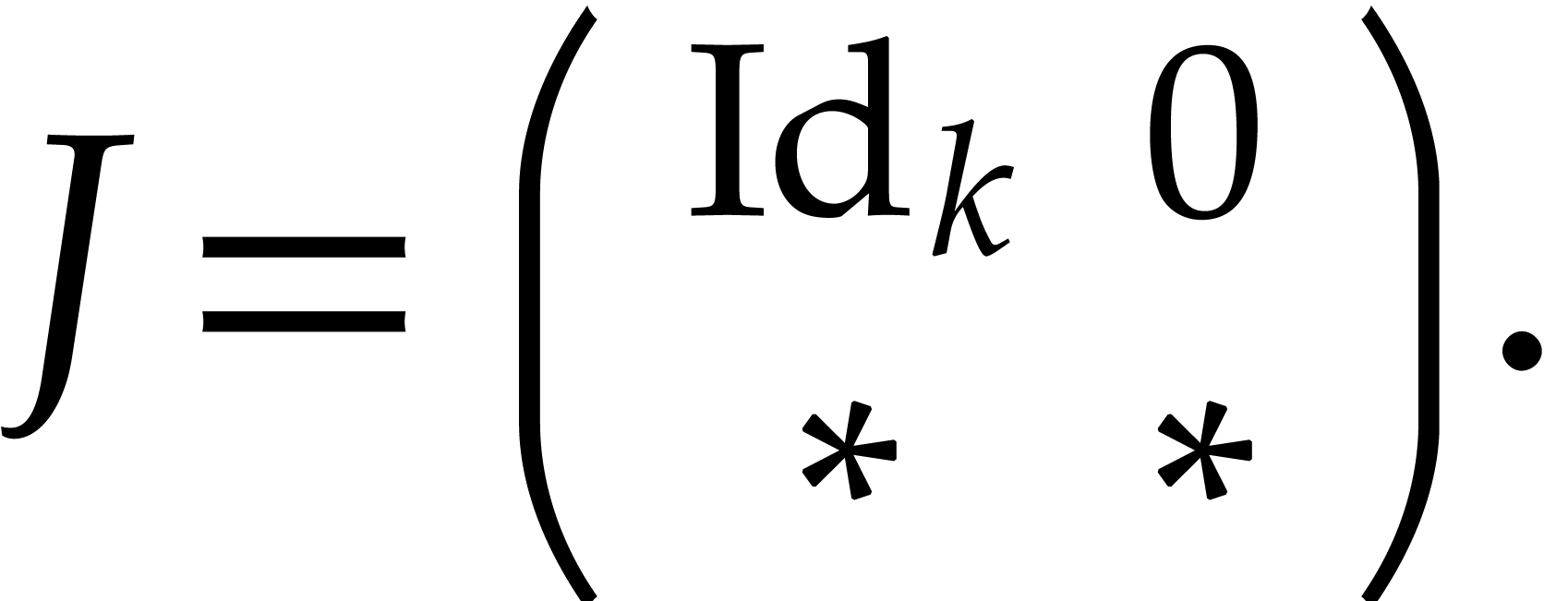

The first  rows of

rows of  are

are  -linearly

independent.

-linearly

independent.

The matrix  is obviously a

is obviously a  -head annihilator with

-head annihilator with  .

If

.

If  , then we notice that

HA3 implies that

, then we notice that

HA3 implies that  is a head

chopper for (1.1). We also have the following variant of

Proposition 5.2(a):

is a head

chopper for (1.1). We also have the following variant of

Proposition 5.2(a):

, we

have

, we

have

is a

is a  -head

annihilator if and only if

-head

annihilator if and only if  is a

is a  -head annihilator.

-head annihilator.

Using a constant linear transforation as in Proposition 5.2(b), we may now achieve the following:

be a

be a  -head annihilator for

-head annihilator for  and

and  be as in

be as in

. Then there

exists an invertible matrix

. Then there

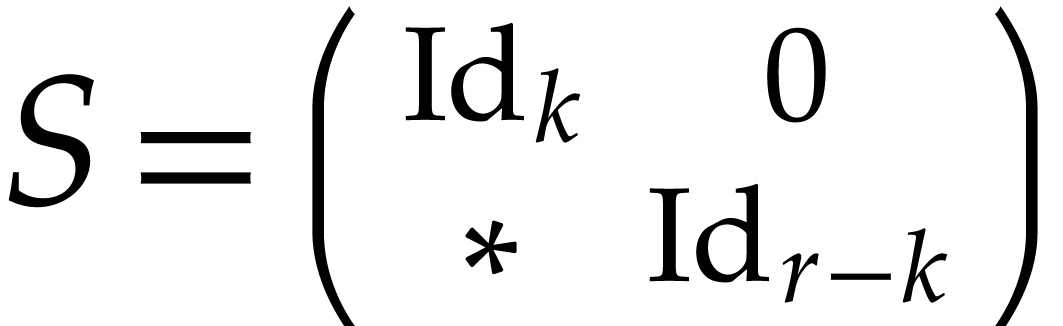

exists an invertible matrix  of the form

of the form

such that the last  rows of

rows of  vanish and such that

vanish and such that  is a

is a  -head annihilator for

-head annihilator for

Proof. Let

be the row sweeper for  as computed by the

algorithm RowSweeper from section 4. By

construction,

as computed by the

algorithm RowSweeper from section 4. By

construction,  for all

for all  , and the last

, and the last  rows of

rows of  vanish. We claim that

vanish. We claim that  for all

for all

. Indeed, if

. Indeed, if  , then this would imply that

, then this would imply that  , which contradicts HA2. From

our claim, it follows that

, which contradicts HA2. From

our claim, it follows that  and

and  is maximal with the property that

is maximal with the property that  .

Since the first

.

Since the first  rows of

rows of  and

and  coincide, the first

coincide, the first  rows of

rows of  are

are  -linearly

independent. This shows that HA3 is satisfied for

-linearly

independent. This shows that HA3 is satisfied for  . As to HA2, let

. As to HA2, let

be the invertible matrix with

be the invertible matrix with

Then we notice that  , whence

, whence

is invertible. The rows of

is invertible. The rows of  clearly form a basis for

clearly form a basis for  ,

since

,

since  is invertible.

is invertible.

As long as  is not invertible, we finally use the

following simple but non-constant linear transformation in order to

improve the rank of

is not invertible, we finally use the

following simple but non-constant linear transformation in order to

improve the rank of  :

:

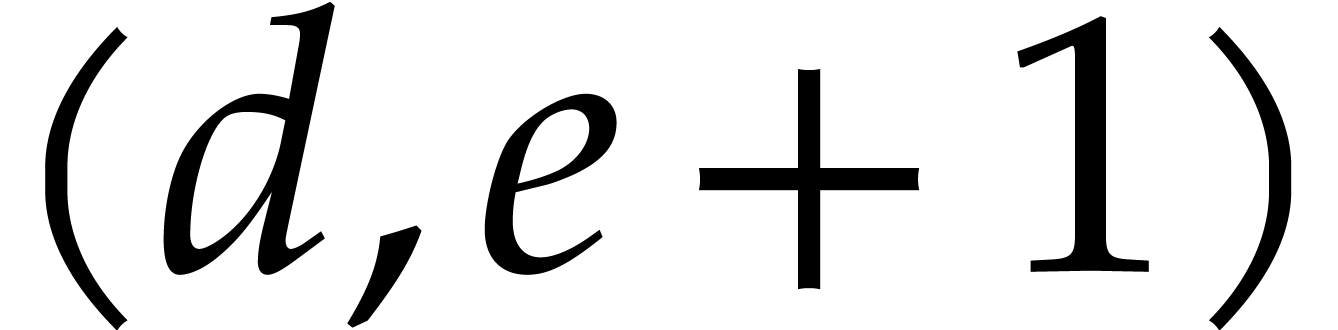

be a

be a  -head annihilator for

-head annihilator for  , let

, let  , and assume that the last

, and assume that the last  rows of

rows of  vanish. Let

vanish. Let  be

the matrix with rows

be

the matrix with rows

Then  is a

is a  -head

annihilator for

-head

annihilator for

Proof. We have  for all

for all

and

and  for all

for all  . In particular, we have

. In particular, we have  and

and  is maximal with the property that

is maximal with the property that  . Setting

. Setting  , we also observe that

, we also observe that  for

all

for

all  . Since

. Since  and the last

and the last  rows of

rows of  vanish, the first

vanish, the first  rows of both

rows of both  and

and  are

are  -linearly

independent. In other words, HA3 is satisfied for

-linearly

independent. In other words, HA3 is satisfied for  . As to HA2, we

observe that

. As to HA2, we

observe that  , whence

, whence  is invertible.

is invertible.

Let us finally show that  forms a basis for the

forms a basis for the

-module

-module  . So let

. So let  .

Then

.

Then  , so

, so  for some row matrix

for some row matrix  . Setting

. Setting

, we have

, we have  , whence

, whence  .

Since the first

.

Since the first  rows of

rows of  are

are  -linearly independent and

the last

-linearly independent and

the last  rows of

rows of  vanish,

we get

vanish,

we get  for all

for all  .

Let

.

Let  be the row vector with

be the row vector with  for

for  and

and  for

for  . By what precedes, we have

. By what precedes, we have  and

and  . Now we have

. Now we have  for

for  and

and  for

for  . In other words,

. In other words,  , as desired.

, as desired.

Propositions 5.4 and 5.5 allow us to compute

-head annihilators for (1.1) with arbitrarily large

-head annihilators for (1.1) with arbitrarily large  .

Assuming that we have

.

Assuming that we have  in HA3

for sufficiently large

in HA3

for sufficiently large  , this

yields the following algorithm for the computation of a head chopper for

(1.1):

, this

yields the following algorithm for the computation of a head chopper for

(1.1):

Algorithm HeadChopper

Input:

Ouput: a head chopper |

repeat

if

|

Example  and

and  as in

Example 3.2. We enter the loop with

as in

Example 3.2. We enter the loop with

so that  is a

is a  -head

annihilator for

-head

annihilator for

and then

Propositions 5.4 and 5.5 imply that the new

matrix  is a

is a  -head

annihilator for

-head

annihilator for

after which we set  ,

,

At this point,  is a

is a  -head annihilator for

-head annihilator for

and then  ,

,  ,

,

Applying  to both

to both  and

and

, we find the head chopper

from Example 3.2.

, we find the head chopper

from Example 3.2.

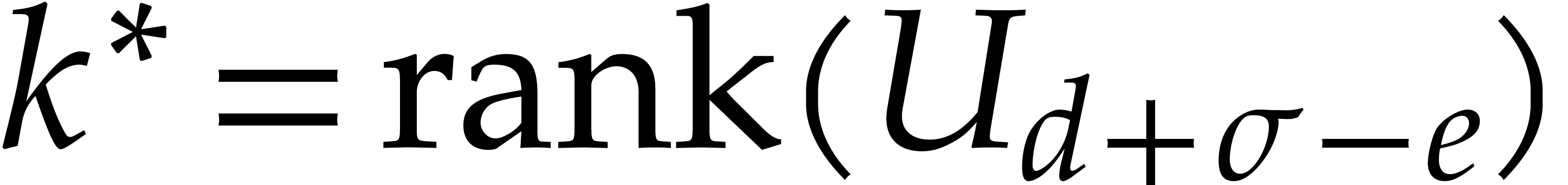

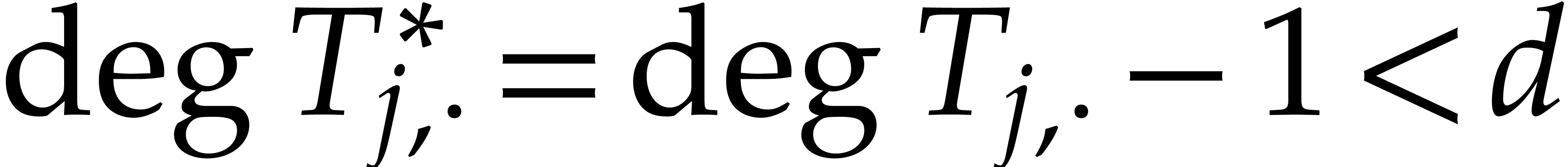

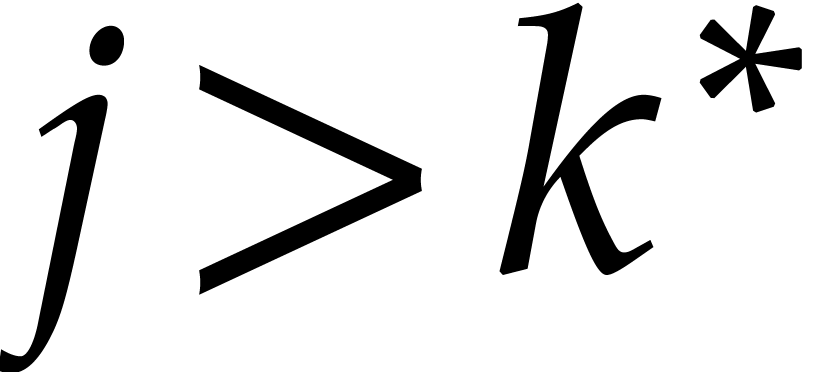

. Consider

the value of

. Consider

the value of  at the beginning of the loop and

after

at the beginning of the loop and

after  iterations. Then

iterations. Then  is a

is a  -head annihilator.

-head annihilator.

Proof. We first observe that  throughout the algorithm. Let us now prove the proposition by induction

over

throughout the algorithm. Let us now prove the proposition by induction

over  . The proposition

clearly holds for

. The proposition

clearly holds for  . Assuming

that the proposition holds for a given

. Assuming

that the proposition holds for a given  ,

let us show that it again holds at the next iteration. Consider the

values of

,

let us show that it again holds at the next iteration. Consider the

values of  and

and  at the

beginning of the loop and after

at the

beginning of the loop and after  iterations. Let

iterations. Let

be maximal such that

be maximal such that  . From the induction hypothesis, it follows that the

first

. From the induction hypothesis, it follows that the

first  rows of

rows of  are

are  -linearly independent, whence the

matrix

-linearly independent, whence the

matrix  is of the form

is of the form

Now Proposition 5.4 implies that  is

still a

is

still a  -head annihilator.

Since the last

-head annihilator.

Since the last  rows of

rows of  vanish, Proposition 5.5 also implies that

vanish, Proposition 5.5 also implies that  is a

is a  -head annihilator. This

completes the induction. Notice also that

-head annihilator. This

completes the induction. Notice also that  is

maximal with the property that

is

maximal with the property that  .

.

.

Then there exists a non-zero row matrix

.

Then there exists a non-zero row matrix  with

with

. In particular,

. In particular,  .

.

Proof. Assume that  be the value of

be the value of  at the beginning of the main loop after

at the beginning of the main loop after  iterations. Also let

iterations. Also let  and

and  be the values of

be the values of  and

and  as computed during the

as computed during the  -th

iteration.

-th

iteration.

Let  be maximal such that

be maximal such that  . Using the observation made at the end of the above

proof, we have

. Using the observation made at the end of the above

proof, we have  , so there

exist an index

, so there

exist an index  and

and  with

with

for all

for all  .

Furthermore,

.

Furthermore,

and

Moreover, for  , the row

sweeper

, the row

sweeper  is even of the form

is even of the form

By induction on  , we observe

that

, we observe

that  . For

. For  , we also have

, we also have  for all

for all

, again by induction.

Consequently,

, again by induction.

Consequently,  for all

for all  , which means that the sequence

, which means that the sequence  formally converges to a limit

formally converges to a limit  in

in  . By construction, the first

. By construction, the first  rows of

rows of  are zero, its last

are zero, its last  rows have rank

rows have rank  , and

, and  . We conclude by taking

. We conclude by taking  to be the last row of

to be the last row of  .

.

Proof. We already observed that  throughout the algorithm. If the algorithm terminates, then it follows

that

throughout the algorithm. If the algorithm terminates, then it follows

that  is indeed a head chopper for (1.1).

Assume for contradiction that the algorithm does not terminate and let

is indeed a head chopper for (1.1).

Assume for contradiction that the algorithm does not terminate and let

be such that

be such that  .

Let

.

Let  be a fundamental system of solutions to the

equation

be a fundamental system of solutions to the

equation  is some differential field extension of

is some differential field extension of  with constant field

with constant field  . From

. From

we deduce that

we deduce that  ,

whence

,

whence  . More generally,

. More generally,  whence

whence  and

and  for all

for all  . Since the space

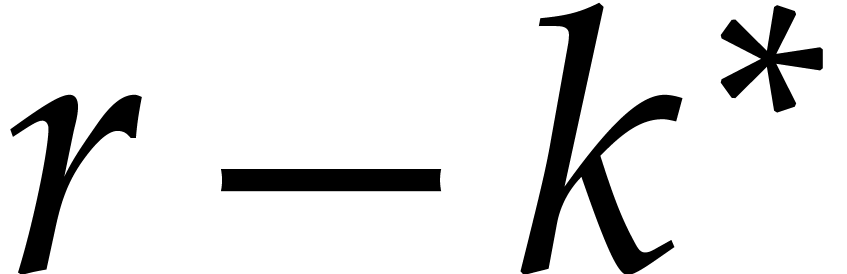

. Since the space

has dimension

has dimension  over

over  , it follows that there exists a

polynomial

, it follows that there exists a

polynomial  of degree at most

of degree at most  in

in  such that

such that  and

and  . Since

. Since  is

a fundamental system of solutions, we have

is

a fundamental system of solutions, we have  .

This contradicts the existence of an element

.

This contradicts the existence of an element  with

with  .

.

Remark

Note that one should not confuse the existence of polynomial degree

bounds for head choppers with the absence of such bounds for exceptional

indices. Indeed, Example 3.6 shows how to obtain

arbitrarily high exceptional indices  for

equations of bounded degree and order. Yet, the degrees of the

corresponding head choppers are also bounded, as shown in Example 3.2.

for

equations of bounded degree and order. Yet, the degrees of the

corresponding head choppers are also bounded, as shown in Example 3.2.

Remark  be an indeterminate and let

be an indeterminate and let  be the shift operator. Then EG-eliminations can be used to compute

normal forms for linear difference operators in

be the shift operator. Then EG-eliminations can be used to compute

normal forms for linear difference operators in  . The rank of the leading (or trailing coefficient)

of the normal form is equal to the rank of the original operator.

Abramov achieves such normal form computations by transforming the

problem into a big linear algebra problem over

. The rank of the leading (or trailing coefficient)

of the normal form is equal to the rank of the original operator.

Abramov achieves such normal form computations by transforming the

problem into a big linear algebra problem over  . Our algorithm for the computation of head choppers

is different in two ways: the operator

. Our algorithm for the computation of head choppers

is different in two ways: the operator  is not a

shift operator and we work directly over

is not a

shift operator and we work directly over  .

.

Head reduction essentially allows us to reduce the valuation in  of elements in

of elements in  via

the subtraction of elements in

via

the subtraction of elements in  .

Tail reduction aims at reducing the valuation in

.

Tail reduction aims at reducing the valuation in  in a similar way for any

in a similar way for any  in the algebraic

closure

in the algebraic

closure  of

of  .

It is well known that holonomy is preserved under compositions with

rational functions. Modulo suitable changes of variables, this allows us

to compute tail reductions using the same algorithms as in the case of

head reduction. For completeness, we will detail in this section how

this works.

.

It is well known that holonomy is preserved under compositions with

rational functions. Modulo suitable changes of variables, this allows us

to compute tail reductions using the same algorithms as in the case of

head reduction. For completeness, we will detail in this section how

this works.

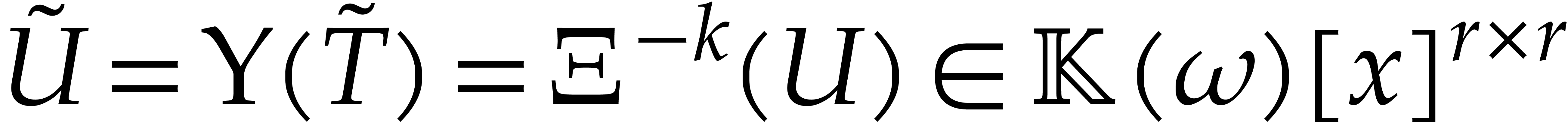

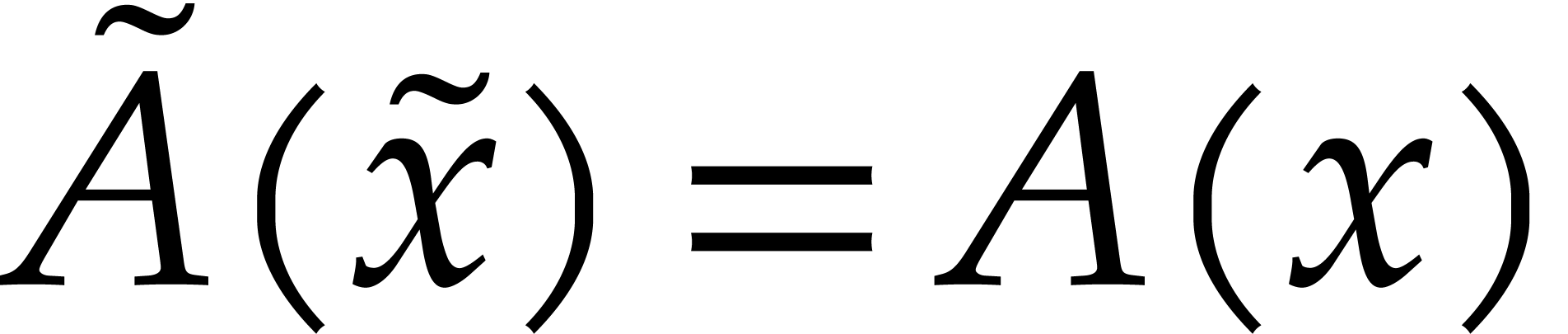

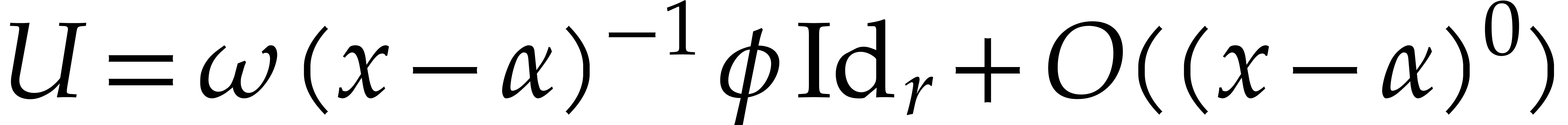

More precisely, let  ,

,  and

and  . We

may regard

. We

may regard  as a Laurent polynomial in

as a Laurent polynomial in  with matrix coefficients

with matrix coefficients  :

:

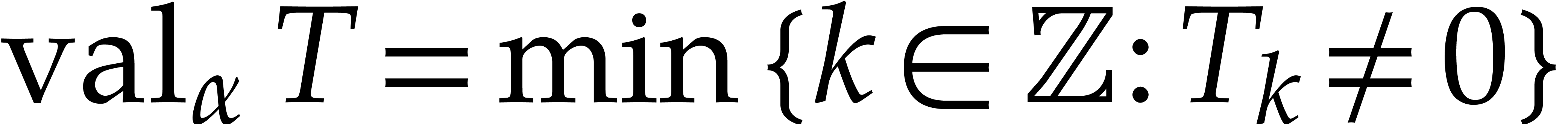

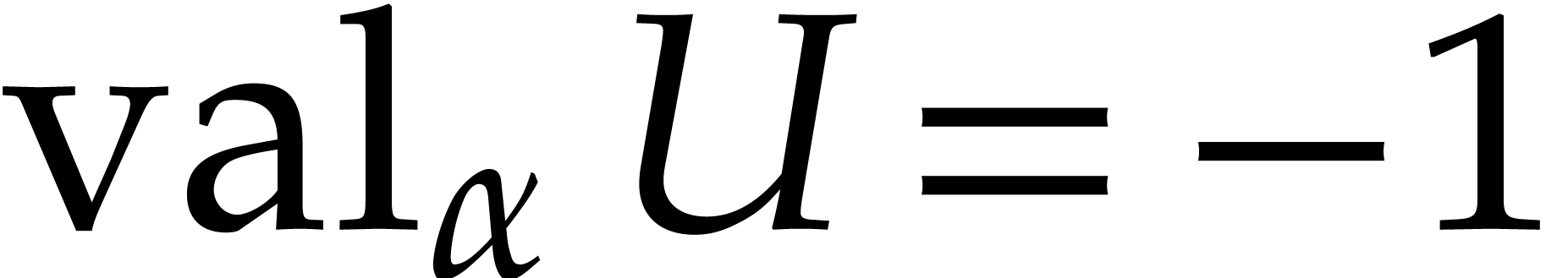

If  , then we denote its

valuation in

, then we denote its

valuation in  by

by  .

Setting

.

Setting

the equation (1.1) implies

for any matrix  . The matrix

. The matrix

can also be regarded as a Laurent polynomial

with matrix coefficients

can also be regarded as a Laurent polynomial

with matrix coefficients  . We

say that

. We

say that  is a tail chopper at

is a tail chopper at  for (1.1) if

for (1.1) if  is

an invertible matrix. In fact, it suffices to consider tail choppers at

the origin:

is

an invertible matrix. In fact, it suffices to consider tail choppers at

the origin:

, where

, where  . Define

. Define  ,

,  and

and  . Then

. Then  is a tail

chopper at

is a tail

chopper at  for

for  is a tail chopper at

is a tail chopper at  for

for  .

.

Proof. Setting  ,

we have

,

we have  . Consequently,

. Consequently,  and

and  .

.

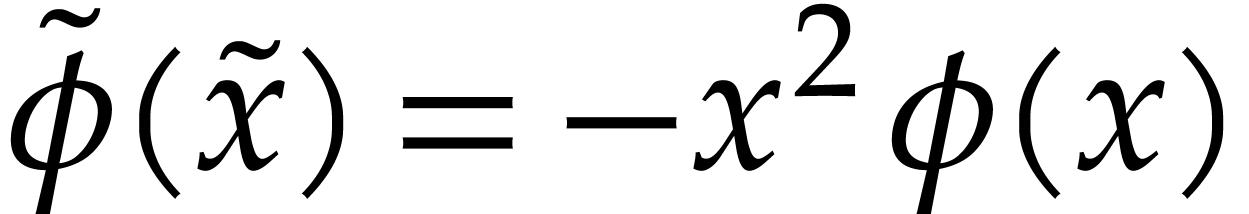

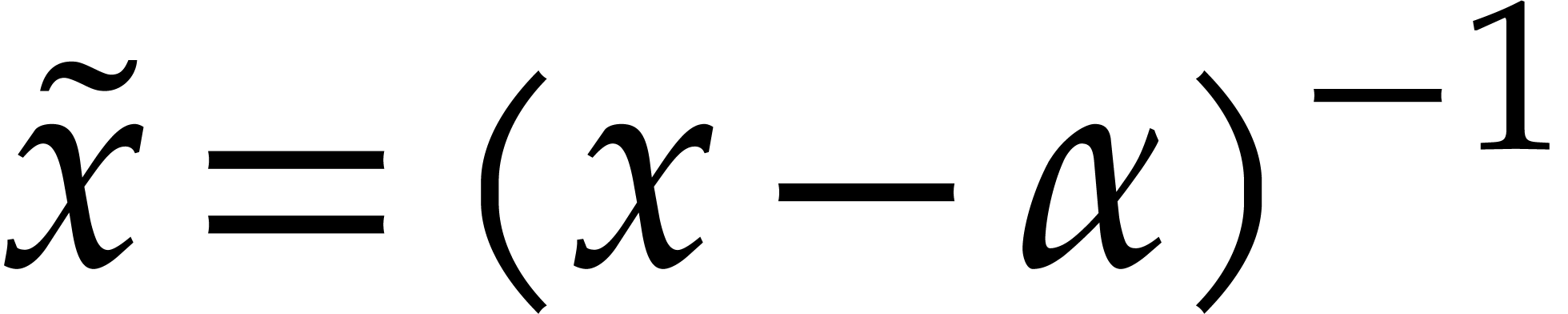

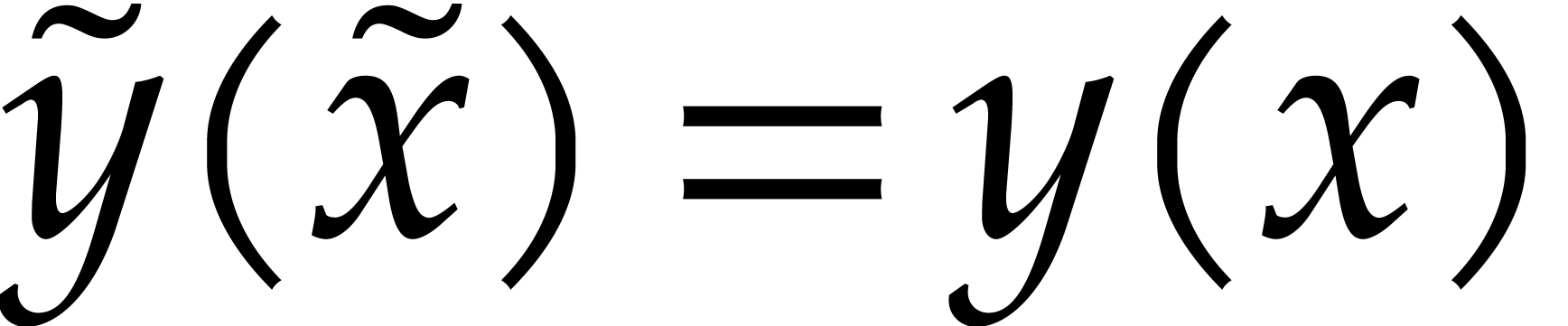

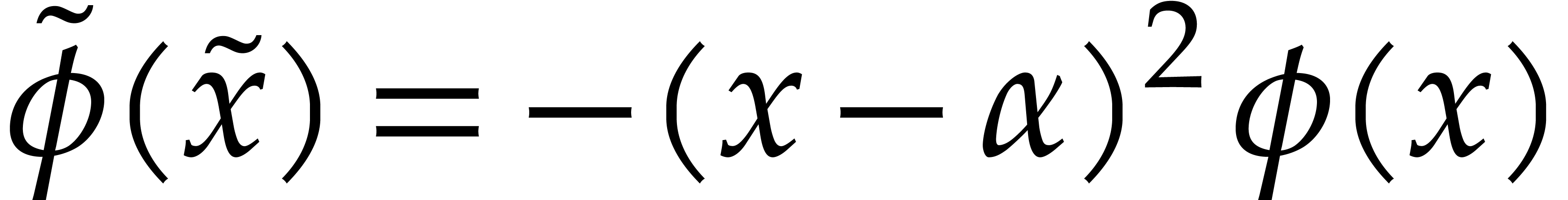

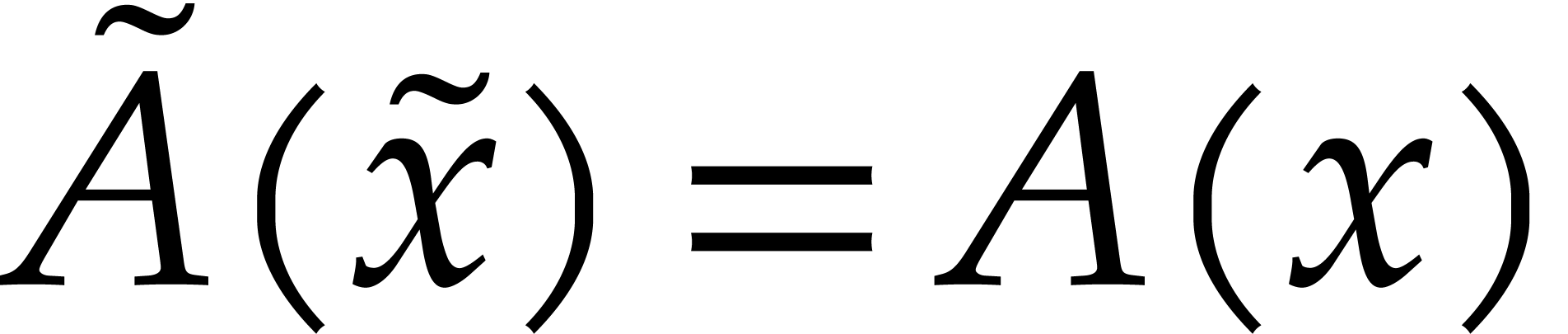

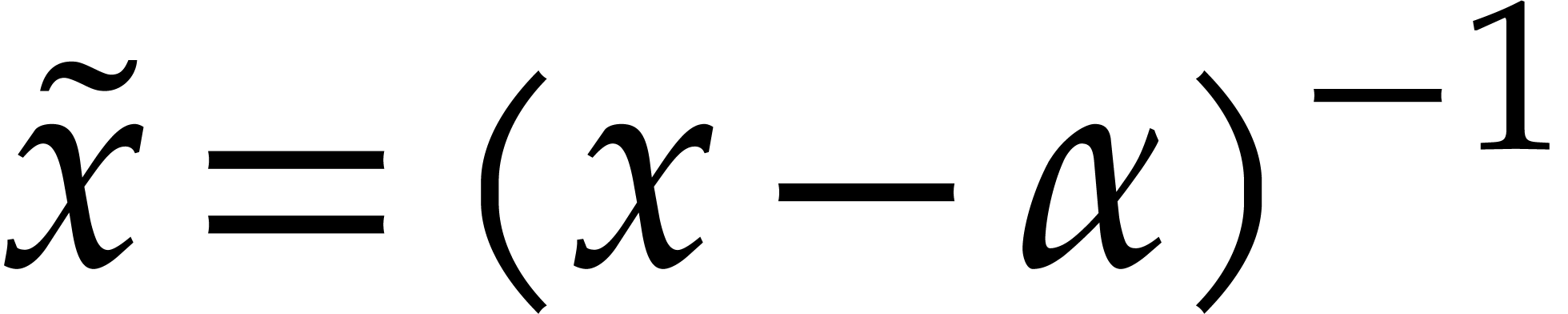

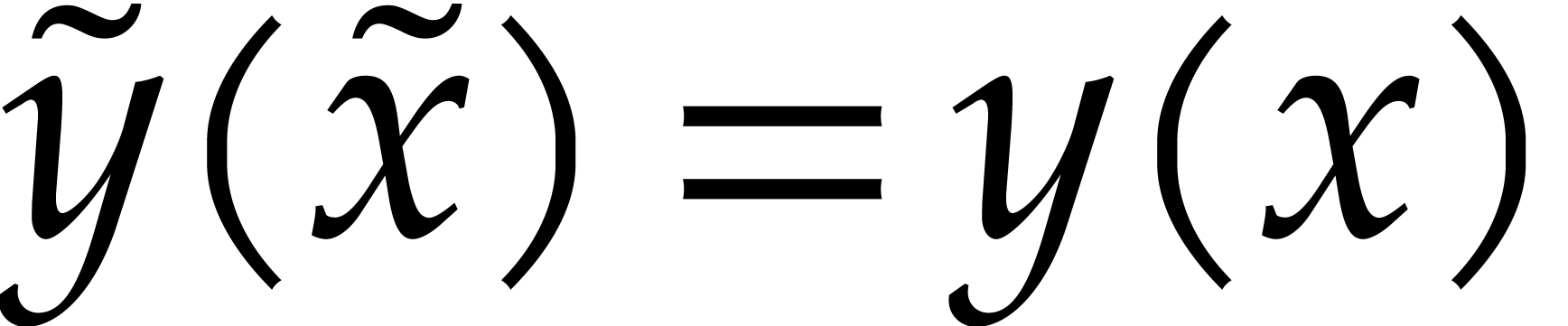

There is also a direct link between head choppers and tail choppers at

via the change of variables

via the change of variables  .

.

. Setting

. Setting  , we define

, we define  ,

,  and

and  . Then

. Then  is a tail

chopper at

is a tail

chopper at  for

for  is a head chopper for

is a head chopper for  .

.

Proof. Setting  ,

we have

,

we have

Consequently,  and

and  .

.

Finally, the matrix  is a tail chopper at almost

all points

is a tail chopper at almost

all points  :

:

be such that

be such that  .

Then

.

Then  is a tail chopper for

is a tail chopper for  .

.

Proof. If  and

and  , then (6.2) becomes

, then (6.2) becomes

for

for  .

In particular,

.

In particular,  and

and  is

invertible in

is

invertible in  .

.

Now consider a monic square-free polynomial  and

assume that we wish to compute a tail chopper for (1.1) at

a root

and

assume that we wish to compute a tail chopper for (1.1) at

a root  of

of  in

in  . First of all, we have to decide

how to conduct computations in

. First of all, we have to decide

how to conduct computations in  .

If

.

If  is irreducible, then we may simply work in

the field

is irreducible, then we may simply work in

the field  instead of

instead of  and

take

and

take  to be the residue class of

to be the residue class of  , so that

, so that  becomes a

generic formal root of

becomes a

generic formal root of  . In

general, factoring

. In

general, factoring  over

over  may be hard, so we cannot assume

may be hard, so we cannot assume  to be

irreducible. Instead, we rely on the well known technique of dynamic

evaluation [13].

to be

irreducible. Instead, we rely on the well known technique of dynamic

evaluation [13].

For convenience of the reader, let us recall that dynamic evaluation

amounts to performing all computations as if  were irreducible and

were irreducible and  were a field with an

algorithm for division. Whenever we wish to divide by a non-zero element

were a field with an

algorithm for division. Whenever we wish to divide by a non-zero element

(with

(with  )

that is not invertible, then

)

that is not invertible, then  provides us with a

non-trivial factor of

provides us with a

non-trivial factor of  . In

that case, we launch an exception and redo all computations with

. In

that case, we launch an exception and redo all computations with  or

or  in the role of

in the role of  .

.

So let  be a formal root of

be a formal root of  and define

and define  ,

,  ,

,  and

and  . Let

. Let  be a head chopper

for the equation

be a head chopper

for the equation  , as

computed using the algorithm from section 5.3. Then

, as

computed using the algorithm from section 5.3. Then  is a tail chopper at

is a tail chopper at  by

Lemmas 6.1 and 6.2.

by

Lemmas 6.1 and 6.2.

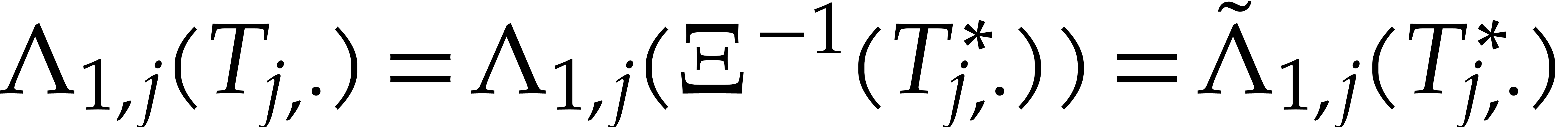

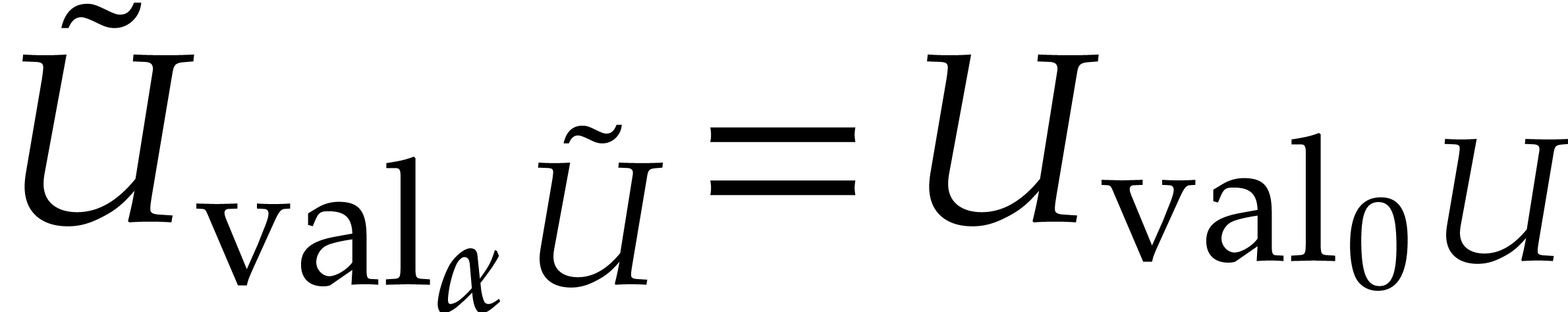

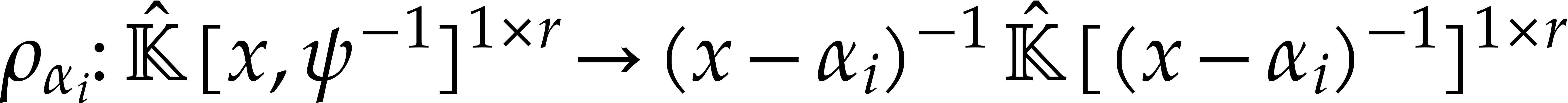

Let  be a tail chopper for (1.1) at

be a tail chopper for (1.1) at

. Let

. Let  ,

,  ,

,  and

and  be as above, so that

be as above, so that

, is a head chopper for the

equation

, is a head chopper for the

equation  . In particular,

rewriting linear combinations

. In particular,

rewriting linear combinations  with

with  as linear combinations

as linear combinations  with

with  , we may head reduce

, we may head reduce  as described in section 3.2. Let

as described in section 3.2. Let  be such that

be such that  .

Then we may rewrite

.

Then we may rewrite  as an element

as an element  of

of  . We call

. We call

the tail reduction of

the tail reduction of  at

at  and write

and write  .

.

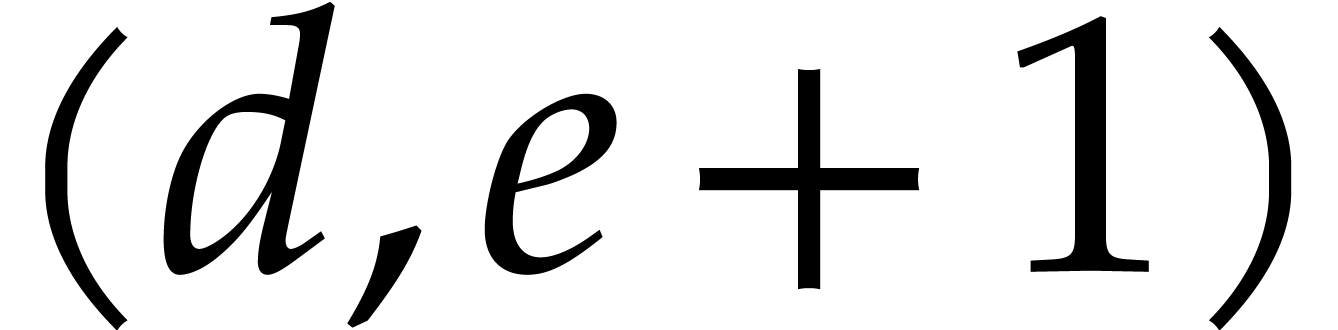

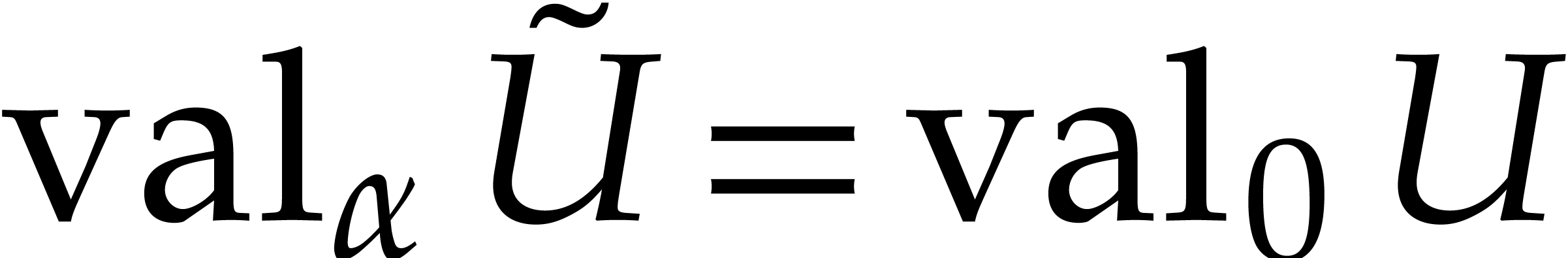

Let  be the finite set of exceptional indices for

the above head reduction and

be the finite set of exceptional indices for

the above head reduction and  .

Setting

.

Setting  and

and  ,

it can be checked that the following algorithm computes the tail

reduction at

,

it can be checked that the following algorithm computes the tail

reduction at  :

:

Algorithm TailReduce

Input:

Output: the tail reduction |

repeat

if

Let

|

Let us now study how head and tail reductions can be glued together into

a global confined reduction on  .

More generally, we consider the case when

.

More generally, we consider the case when  ,

where

,

where  is a monic square-free polynomial such

that

is a monic square-free polynomial such

that  divides

divides  for some

for some

.

.

We assume that we have computed a head chopper for (1.1)

and tail choppers  for (1.1) at each

the roots

for (1.1) at each

the roots  of

of  in

in  . In particular, we may compute the

corresponding head and tail reductions

. In particular, we may compute the

corresponding head and tail reductions

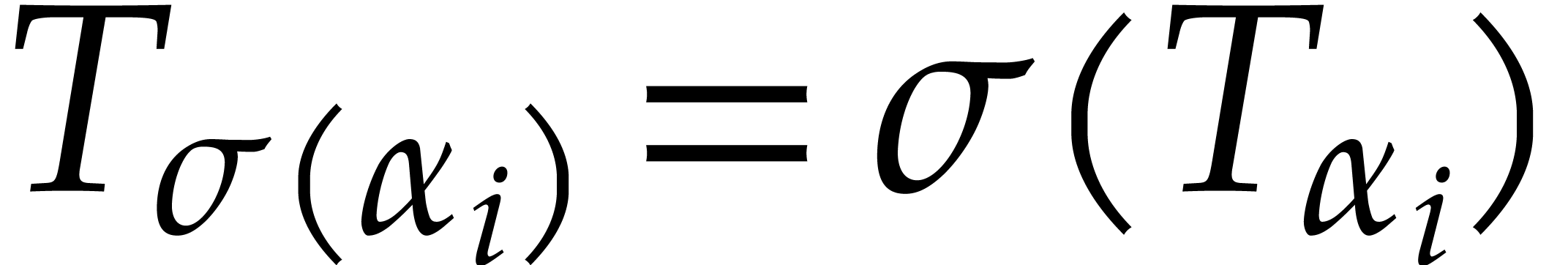

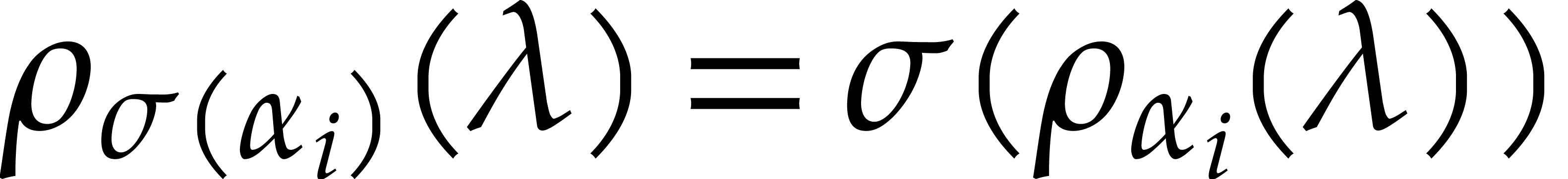

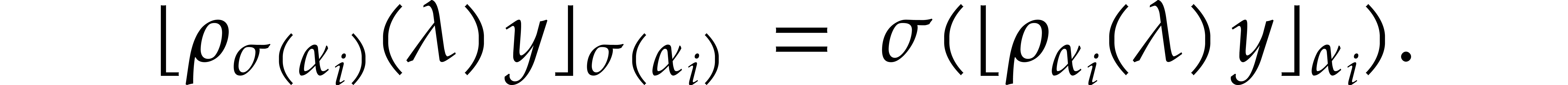

Given an element  of the Galois group of

of the Galois group of  over

over  , we

may also assume without loss of generality that the tail choppers were

chosen such that

, we

may also assume without loss of generality that the tail choppers were

chosen such that  for all

for all  (note that this is automatically the case when using the technique of

dynamic evaluation from section 6.2).

(note that this is automatically the case when using the technique of

dynamic evaluation from section 6.2).

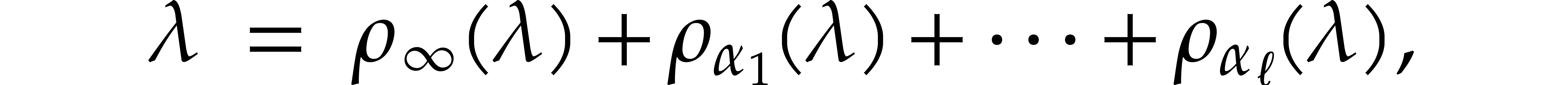

Partial fraction decomposition yields  -linear

mappings

-linear

mappings

and

with

for all  . This allows us to

define a global reduction

. This allows us to

define a global reduction  of

of  by

by

.

.

Proof. Let  be an

automorphism of

be an

automorphism of  over

over  . Then

. Then  naturally extends to

naturally extends to

by setting

by setting  for all

for all  . Given

. Given  and

and

, we have

, we have  . By our assumption that

. By our assumption that  , it follows that

, it follows that

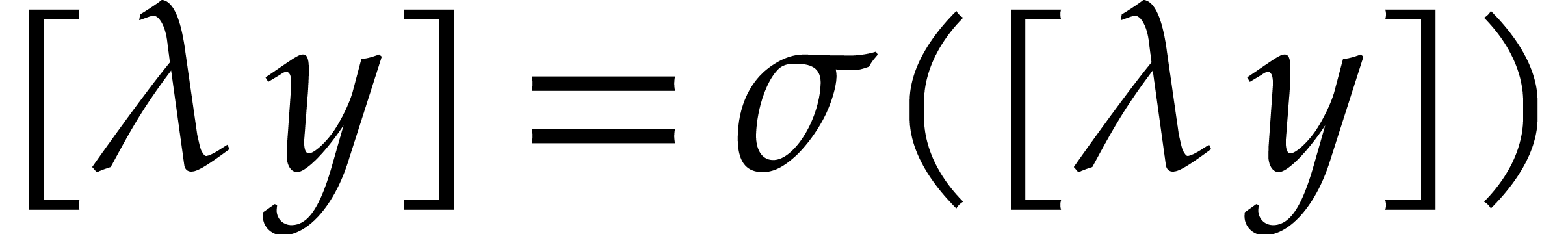

Summing over all  , we get

, we get

. Since this equality holds

for all automorphisms

. Since this equality holds

for all automorphisms  , we

conclude that

, we

conclude that  . Similarly,

given

. Similarly,

given  with

with  ,

we have

,

we have  for all automorphisms

for all automorphisms  , whence

, whence  .

This shows that

.

This shows that  . For any

. For any  in the image of the restriction of

in the image of the restriction of  to

to  , we finally observe that

, we finally observe that

, and

, and  are uniformly bounded, by construction. In other words, the reduction

are uniformly bounded, by construction. In other words, the reduction

is confined.

is confined.

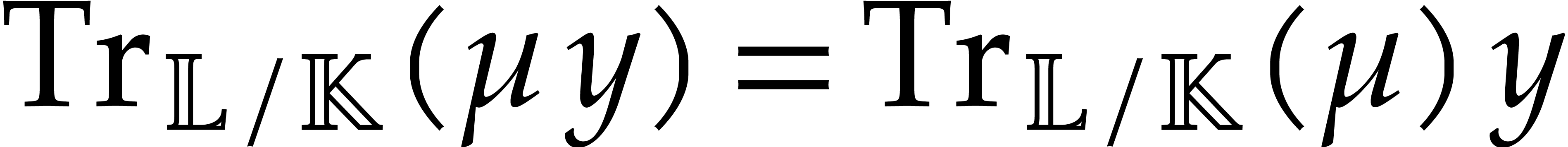

For actual implementations, one may perform the computations in

extension fields  , where

, where  is an irreducible factor of

is an irreducible factor of  (or simply a square-free factor, while relying on dynamic evaluation as

in section 6.2). Let

(or simply a square-free factor, while relying on dynamic evaluation as

in section 6.2). Let  be the roots

of such an irreducible factor

be the roots

of such an irreducible factor  and assume that we

wish to compute

and assume that we

wish to compute  for

for  . Instead of computing each

. Instead of computing each  separately, one may use the formula

separately, one may use the formula

where  is the canonical root of

is the canonical root of  in

in  and

and  for all

for all  .

.

Example  be a fixed parameter. Consider the function

be a fixed parameter. Consider the function

which satisfies the differential equation

This equation admits two singularities at  ,

where

,

where  . Any non-zero element

of

. Any non-zero element

of  is a tail chopper at

is a tail chopper at  . Taking

. Taking  as our tail

chopper, we have

as our tail

chopper, we have

for all  and

and  .

Given

.

Given

its tail reduction at  is therefore recursively

defined by

is therefore recursively

defined by

Now assume that we wish to compute the tail reduction

of

with respect to both roots  and

and  of

of  . We have

. We have

The above computation holds when considering  as

a root of the polynomial

as

a root of the polynomial  in the algebraic

closure of

in the algebraic

closure of  . Exactly the same

computation can therefore be used for the other root

. Exactly the same

computation can therefore be used for the other root  of this polynomial. The computation also holds for a generic root

of this polynomial. The computation also holds for a generic root  in the algebraic extension

in the algebraic extension  and we obtain

and we obtain

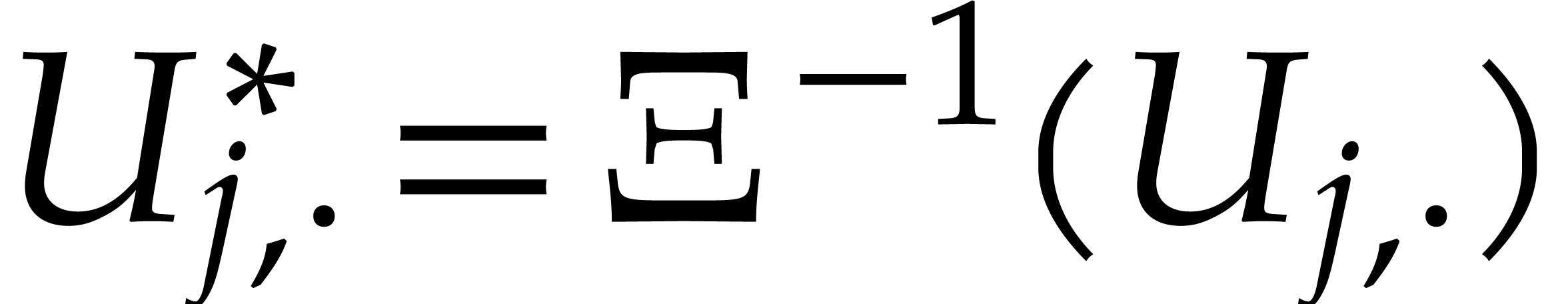

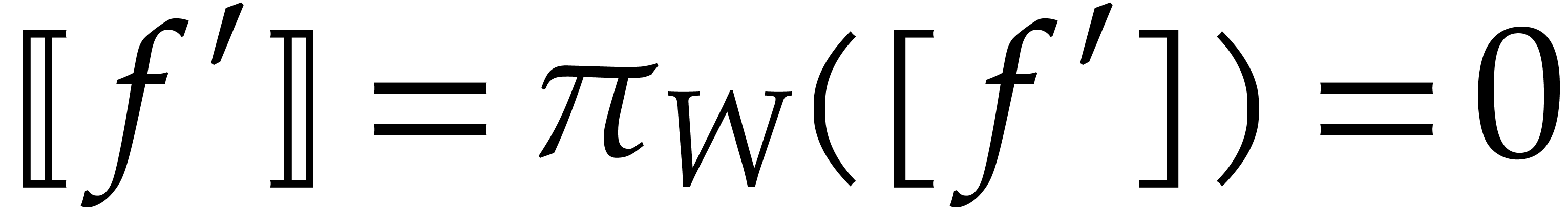

Given the confined reduction  from section 7.1, let us now give a general procedure how to turn it into a

normal confined reduction

from section 7.1, let us now give a general procedure how to turn it into a

normal confined reduction  .

For this purpose, we assume that we know a

.

For this purpose, we assume that we know a  -subvector

space

-subvector

space  of

of  with the

property that for any

with the

property that for any  with

with  , we have

, we have  .

.

Remark  ,

and

,

and  such that we can take

such that we can take

For equations (1.1) of bounded degree and size, Example 3.6 shows that  can become arbitrarily

large, whence so can the dimension of

can become arbitrarily

large, whence so can the dimension of  .

For this reason, normal confined reductions can be computationally

expensive, so it is usually preferable to rely on non-normalized

reductions. One way to compute

.

For this reason, normal confined reductions can be computationally

expensive, so it is usually preferable to rely on non-normalized

reductions. One way to compute  ,

and

,

and  was detailed in [18, sections 6

and 7], but better approaches have been proposed since [4,

19].

was detailed in [18, sections 6

and 7], but better approaches have been proposed since [4,

19].

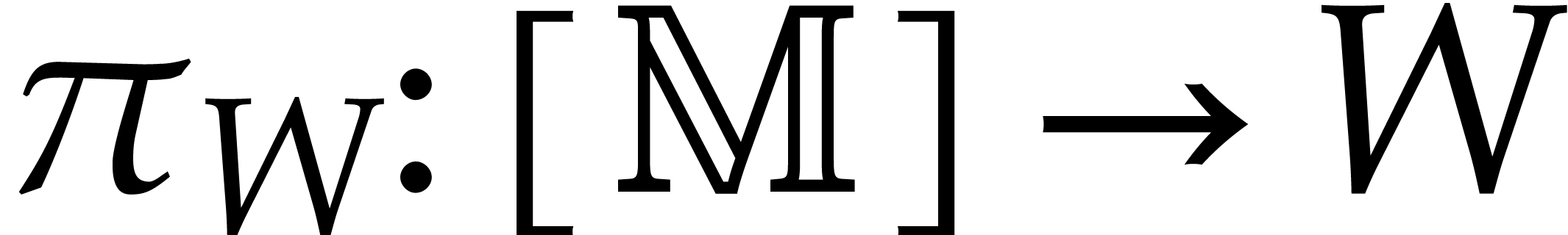

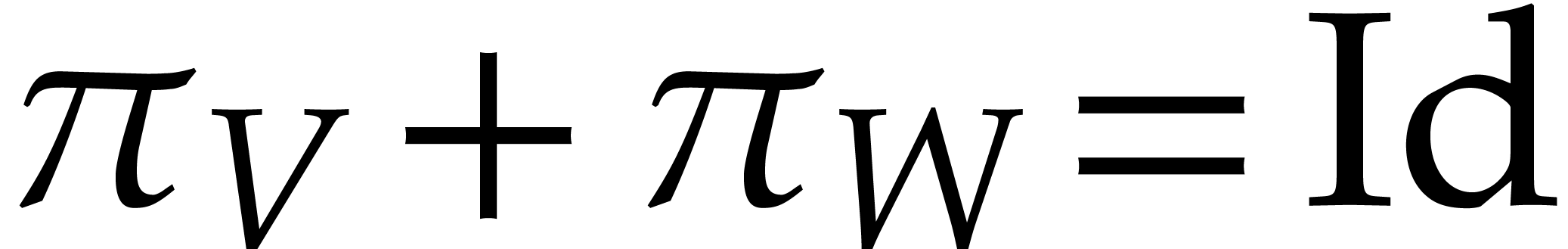

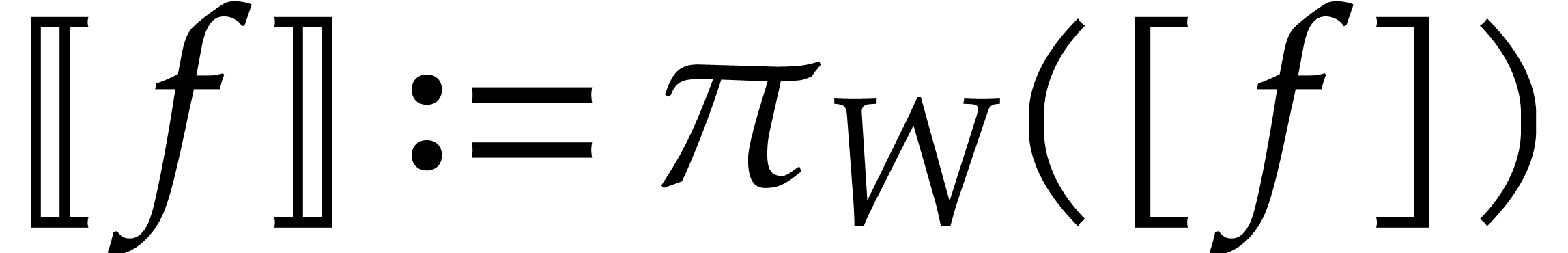

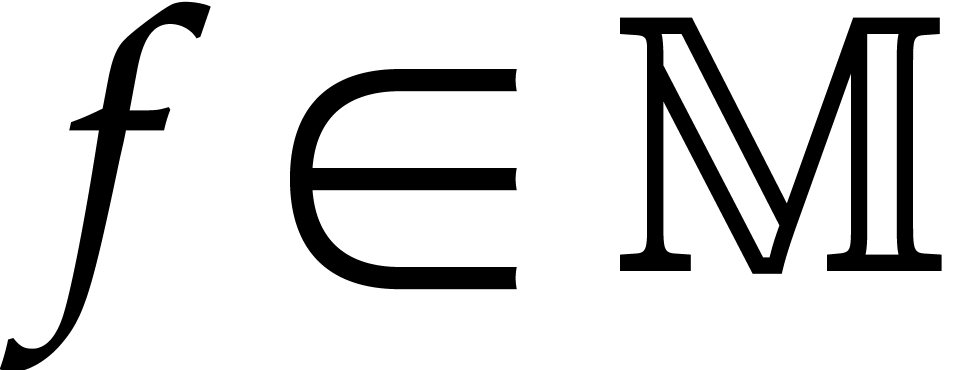

Now let  and let

and let  be a

supplement of

be a

supplement of  in

in  so that

so that

. We may compute bases of

. We may compute bases of

and

and  using

straightforward linear algebra. The canonical

using

straightforward linear algebra. The canonical  -linear projections

-linear projections  and

and  with

with  are also computable. We

claim that we may take

are also computable. We

claim that we may take  for every

for every  .

.

defines a computable

normal confined reduction on

defines a computable

normal confined reduction on  .

.

Proof. The mapping  is

clearly a computable confined reduction on

is

clearly a computable confined reduction on  .

It remains to be shown that

.

It remains to be shown that  for all

for all  . Now

. Now  ,

so

,

so  and there exists a

and there exists a  with

with  . Since

. Since  , it follows that

, it follows that  and

and

. In other words,

. In other words,  and

and  .

.

Acknowledgments. We would like to thank Pierre Lairez for a helpful remark. We also thank the second referee for pointing us to [21] and for further helpful remarks and suggestions.

S. A. Abramov. EG-eliminations. Journal of Difference Equations and Applications, 5(4–5):393–433, 1999.

A. Bostan, S. Chen, F. Chyzak, and Z. Li. Complexity of creative telescoping for bivariate rational functions. In Proc. ISSAC '10, pages 203–210. New York, NY, USA, 2010. ACM.

A. Bostan, S. Chen, F. Chyzak, Z. Li, and G. Xin. Hermite reduction and creative telescoping for hyperexponential functions. In Proc. ISSAC'13, pages 77–84. ACM, 2013.

A. Bostan, F. Chyzak, P. Lairez, and B. Salvy. Generalized Hermite reduction, creative telescoping and definite integration of differentially finite functions. In Proc. ISSAC '18, pages 95–102. New York, 2018. ACM.

A. Bostan, L. Dumont, and B. Salvy. Efficient algorithms for mixed creative telescoping. In Proc. ISSAC'16, pages 127–134. ACM, 2016.

A. Bostan, P. Lairez, and B. Salvy. Creative telescoping for rational functions using the Griffiths-Dwork method. In Proc. ISSAC'13, pages 93–100. ACM, 2013.

S. Chen. Some applications of differential-difference algebra to creative telescoping. PhD thesis, École Polytechnique, 2011.

S. Chen, M. van Hoeij, M. Kauers, and C. Koutschan. Reduction-based creative telescoping for Fuchsian D-finite functions. JSC, 85:108–127, 2018.

S. Chen, H. Huang, M. Kauers, and Z. Li. A modified Abramov-Petkovsˇek reduction and creative telescoping for hypergeometric terms. In Proc. ISSAC'15, pages 117–124. ACM, 2015.

S. Chen and M. Kauers. Some open problems related to creative telescoping. J. of Systems Science and Complexity, 30(1):154–172, 2017.

S. Chen, M. Kauers, and C. Koutschan. Reduction-based creative telescoping for algebraic functions. In Proc. ISSAC '16, pages 175–182. New York, NY, USA, 2016. ACM.

F. Chyzak. The ABC of Creative Telescoping — Algorithms, Bounds, Complexity. Habilitation, École polytechnique, 2014.

J. Della Dora, C. Dicrescenzo, and D. Duval. A new method for computing in algebraic number fields. In G. Goos and J. Hartmanis, editors, Eurocal'85 (2), volume 174 of Lect. Notes in Comp. Science, pages 321–326. Springer, 1985.

L. Dumont. Efficient algorithms for the symbolic computation of some contour integrals depending on one parameter. PhD thesis, École Polytechnique, 2016.

K. Geddes, H. Le, and Z. Li. Differential rational normal forms and a reduction algorithm for hyperexponential functions. In Proc. ISSAC'04, pages 183–190. ACM, 2004.

C. Hermite. Sur l'intégration des fractions rationnelles. Ann. Sci. École Norm. Sup. Série 2, 1:215–218, 1972.

J. van der Hoeven. Relax, but don't be too lazy. JSC, 34:479–542, 2002.

J. van der Hoeven. Constructing reductions for creative telescoping. Technical Report, HAL, 2017. http://hal.archives-ouvertes.fr/hal-01435877.

J. van der Hoeven. Creative telescoping using reductions. Technical Report, HAL, 2018. http://hal.archives-ouvertes.fr/hal-01773137.

H. Huang. New bounds for hypergeometric creative telescoping. In Proc. ISSAC'16, pages 279–286. ACM, 2016.

P. Monsky. Finiteness of de Rham cohomology. American Journal of Mathematics, 94(1):237–245, 1972.

M. Ostrogradsky. De l'integration des fractions rationelles. Bull. de la Classe Physico- Mathématique de l'Académie Imperiale des Sciences de Saint-Petersburg, IV:147–168, 1845.

D. Zeilberger. The method of creative telescoping. JSC, 11(3):195–204, 1991.