Ultimate complexity for numerical

algorithms |

|

| Preliminary version, February 11, 2020 |

|

. This article has

been written using GNU TeXmacs [15].

. This article has

been written using GNU TeXmacs [15].

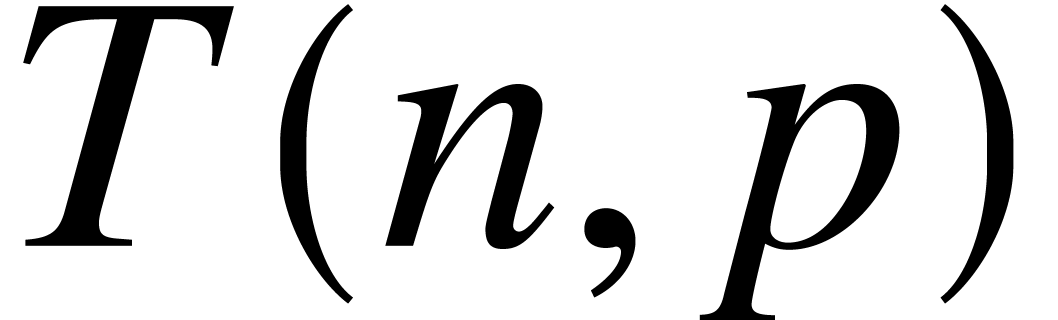

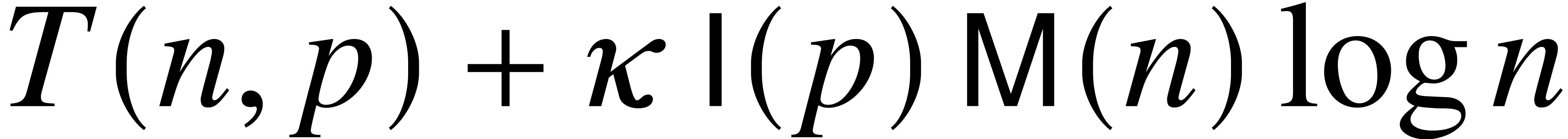

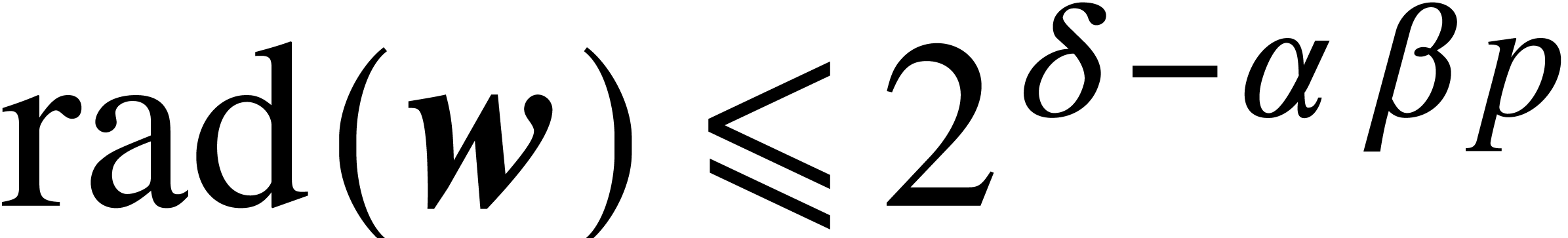

Most numerical algorithms are designed for single or double precision floating point arithmetic, and their complexity is measured in terms of the total number of floating point operations. The resolution of problems with high condition numbers (e.g. when approaching a singularity or degeneracy) may require higher working precisions, in which case it is important to take the precision into account when doing complexity analyses. In this paper, we propose a new “ultimate complexity” model, which focuses on analyzing the cost of numerical algorithms for “sufficiently large” precisions. As an example application we will present an ultimately softly linear time algorithm for modular composition of univariate polynomials. |

The total number of floating point operations is a good measure for the complexity of numerical algorithms that operate at a fixed working precision. However, for certain ill-conditioned problems it may be necessary to use higher working precisions. In order to analyze the complexity to solve such problems, it is crucial to take into account the working precision as an additional parameter.

Multiple precision algorithms are particularly important in the area of symbolic-numeric computation. Two examples of applications are polynomial factorization and lattice reduction. Analyzing the bit complexities of multiple precision algorithms is often challenging. Even in the case of a simple operation such as multiplication, there has been recent progress [11]. The complexities of other basic operations such as division, square roots, and the evaluation of elementary functions have also been widely studied; see for instance [5, 25].

For high level algorithms, sharp complexity analyses become tedious. This happens for instance in the area of “numerical algebraic geometry” when analyzing the complexity of homotopy continuation methods: condition numbers are rather intricate and worst case complexity bounds do not well reflect practical timings that can be observed with generic input data. This motivated the introduction of new average complexity models and lead to an active new area of research; see for instance [2, 7].

Studying the complexity of algorithms has various benefits. First, it is useful to predict practical running times of implementations. Secondly, it helps to determine which part of an algorithm consumes most of the time. Thirdly, for algorithms that are harder to implemented, complexity bounds are a way to estimate and compare algorithmic advances.

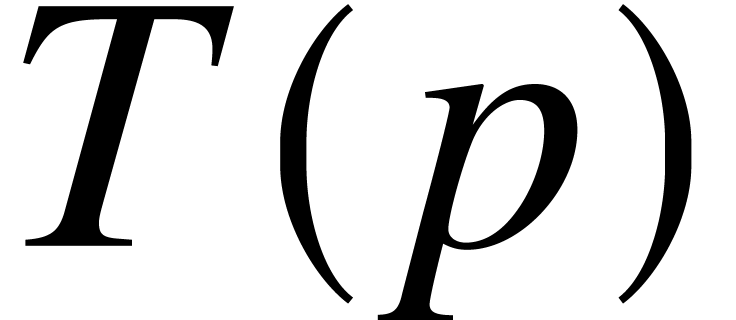

The present paper is devoted to a new “ultimate complexity”

model. It focuses on the asymptotic complexity of a numerical algorithm

when the working precision becomes sufficiently large. This means in

particular that we are in the asymptotic regime where the working

precision becomes arbitrarily large with respect to other parameters for

the algorithm, such as the degree of a polynomial or the size of a

matrix. The ultimate complexity model allows for rather simple and quick

analyses. In particular, it is easy to transfer algebraic complexity

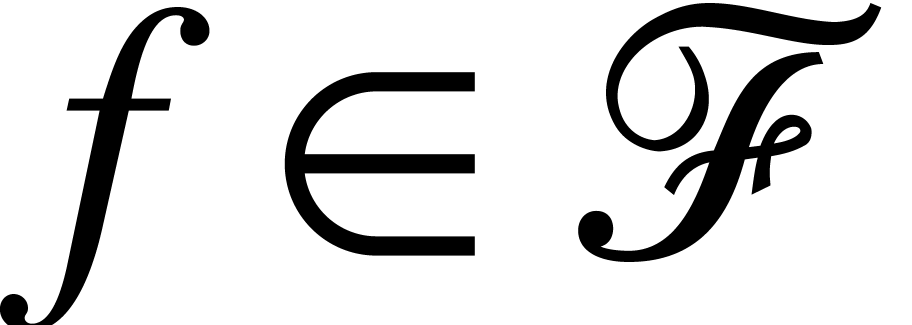

results over an abstract effective field  into

ultimate complexity results over the complex numbers. However, it does

not provide any information on how large the precision should be in

order for the asymptotic bounds to be observable in practice.

into

ultimate complexity results over the complex numbers. However, it does

not provide any information on how large the precision should be in

order for the asymptotic bounds to be observable in practice.

As a first example, let us consider the problem of multiplying two  matrices with floating point entries of bit size

matrices with floating point entries of bit size

. The usual cost is

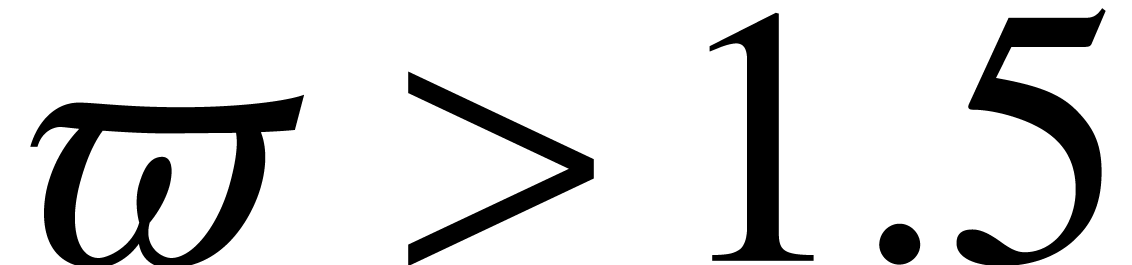

. The usual cost is  floating point operations, for some feasible exponent

floating point operations, for some feasible exponent  for matrix multiplication; in [24] it

has been shown that one may take

for matrix multiplication; in [24] it

has been shown that one may take  .

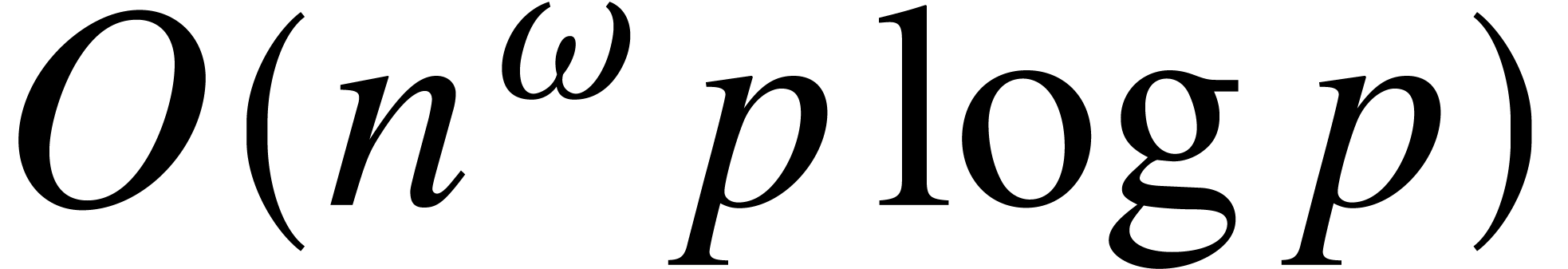

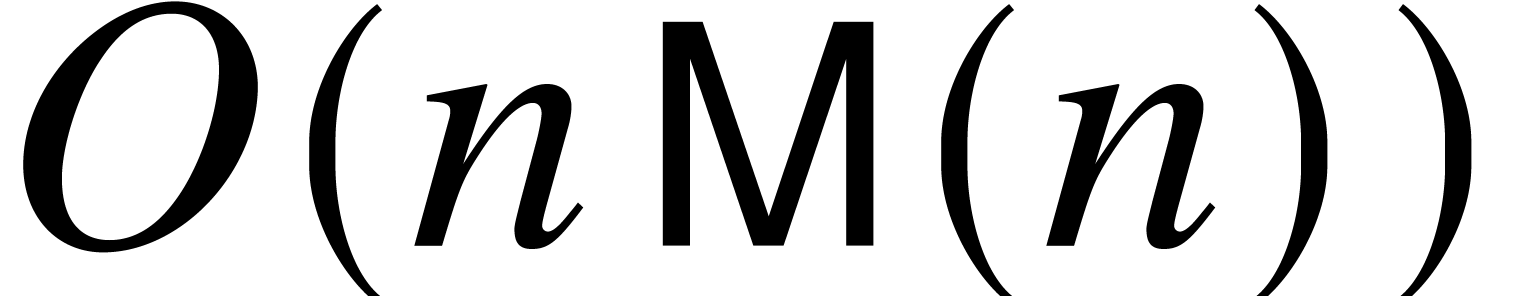

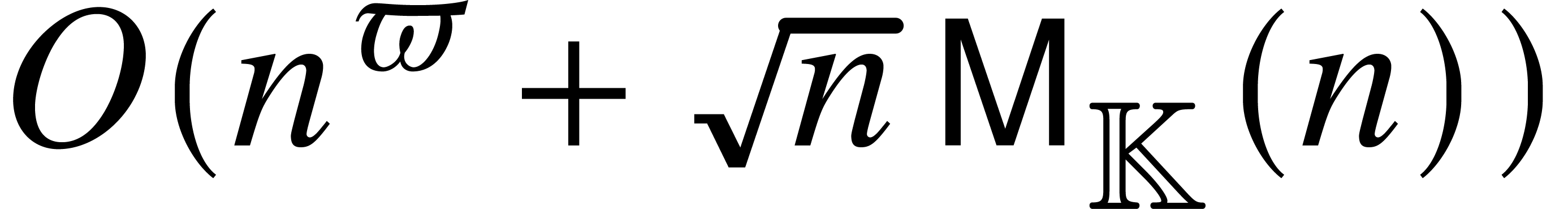

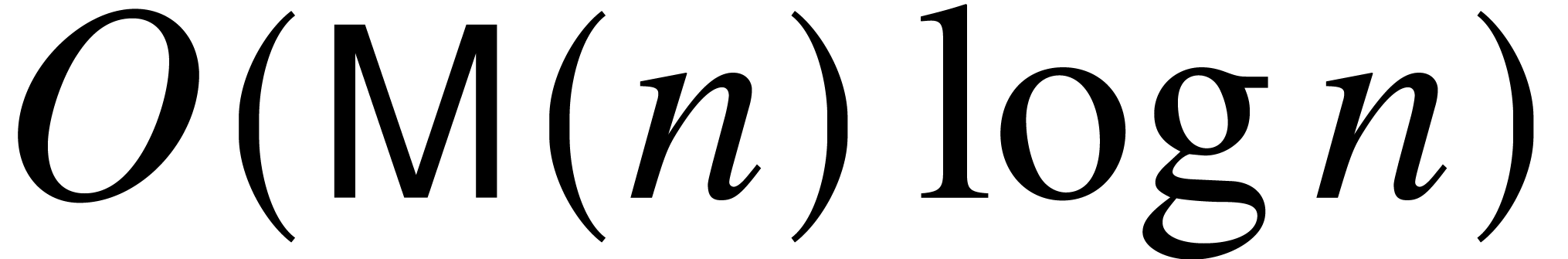

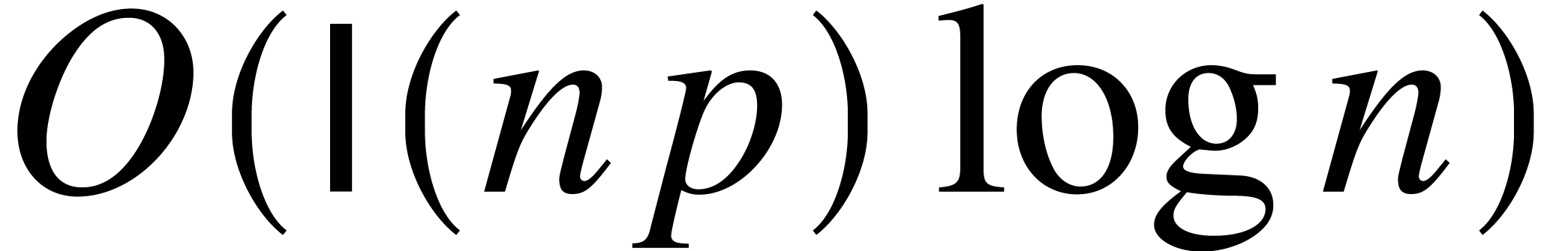

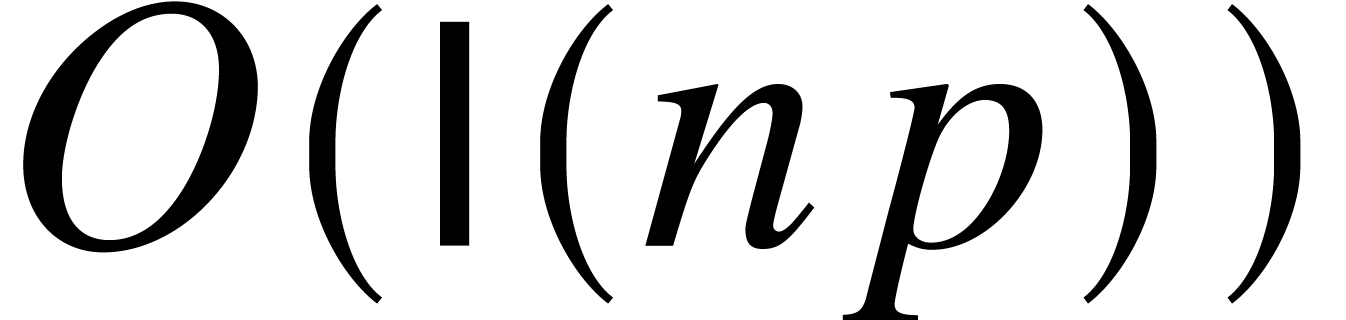

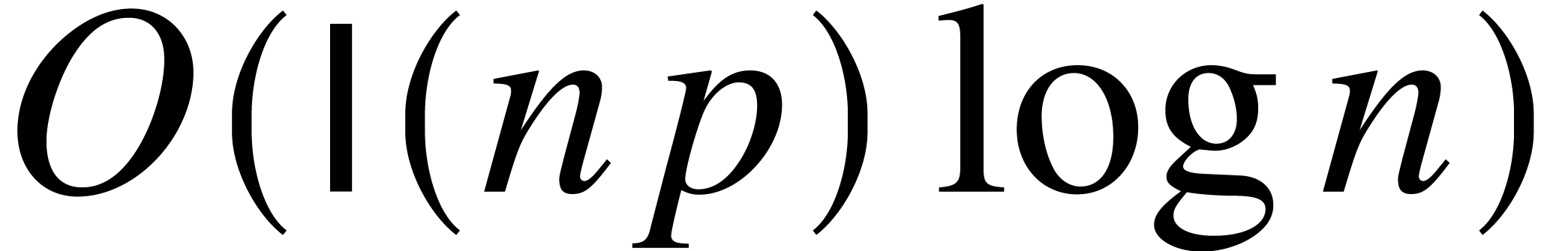

Using the above transfer principle, this leads to an ultimate bit

complexity bound of

.

Using the above transfer principle, this leads to an ultimate bit

complexity bound of  , where

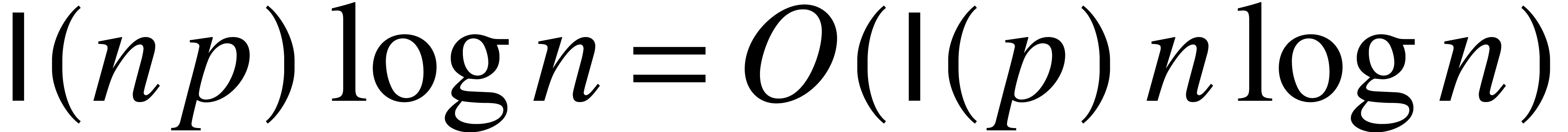

we used the recent result from [11] that

, where

we used the recent result from [11] that  -bit integers can be multiplied in time

-bit integers can be multiplied in time  . In practical applications, one is

often interested in the case when

. In practical applications, one is

often interested in the case when  ,

in which the bit-complexity rather becomes linear in

,

in which the bit-complexity rather becomes linear in  . But this is not what the ultimate complexity

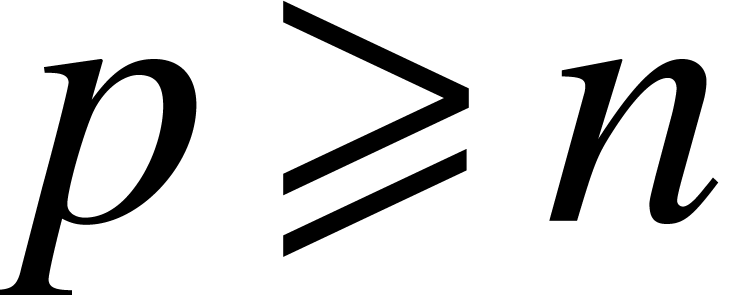

model does: it rather assumes that

. But this is not what the ultimate complexity

model does: it rather assumes that  ,

and even

,

and even  for any

for any  .

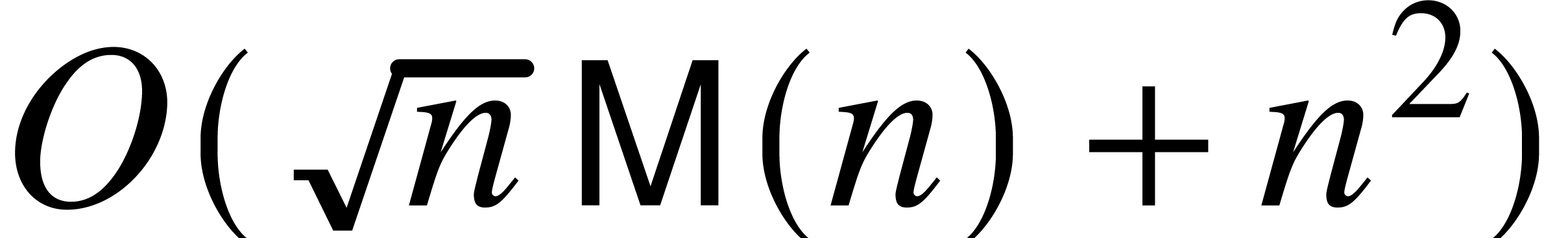

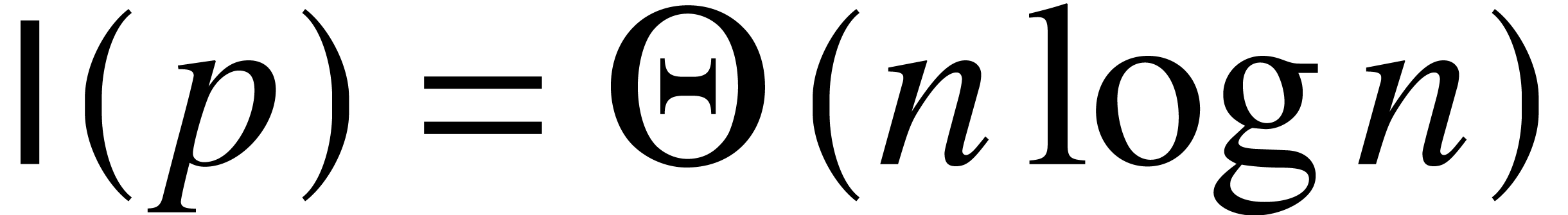

Under this assumption, we actually have the sharper ultimate bit

complexity bound

.

Under this assumption, we actually have the sharper ultimate bit

complexity bound  , which is

proved using FFT techniques [10].

, which is

proved using FFT techniques [10].

As a second example, which will be detailed in the sequel, consider the

computation of the complex roots of a separable polynomial  of degree

of degree  over the complex

numbers: once initial approximations of the roots are known with a

sufficiently large precision, the roots can be refined in softly linear

time by means of fast multi-point evaluations and Newton iterations.

This implies that the ultimate complexity is softly linear, even if a

rather slow algorithm is used to compute the initial approximations.

over the complex

numbers: once initial approximations of the roots are known with a

sufficiently large precision, the roots can be refined in softly linear

time by means of fast multi-point evaluations and Newton iterations.

This implies that the ultimate complexity is softly linear, even if a

rather slow algorithm is used to compute the initial approximations.

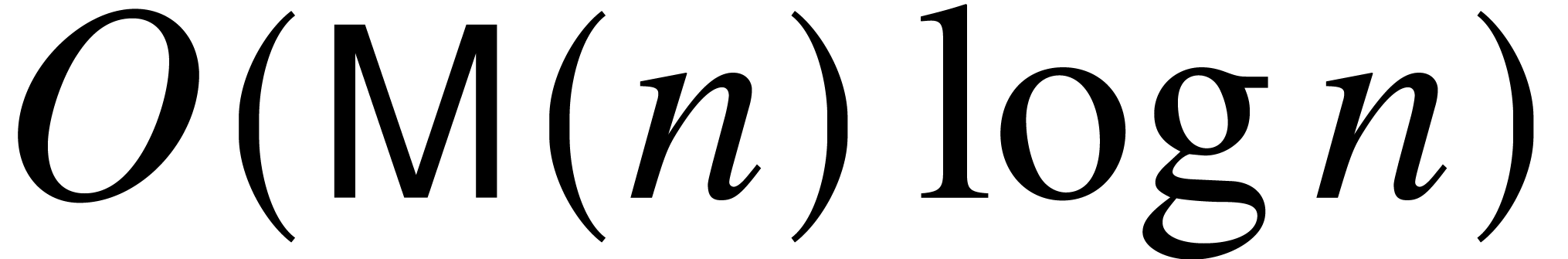

In sections 2 and 3, we recall basic results about ball arithmetic [13] and straight-line programs [6]. Section 4 is devoted to the new ultimate complexity model. In section 5 we illustrate the ultimate complexity model with modular composition. Modular composition consists in computing the composition of two univariate polynomials modulo a third one. For polynomials with coefficients in a finite field, Kedlaya and Umans proved in 2008 that the theoretical complexity for performing this task could be made arbitrarily close to linear [21, 22]. Unfortunately, beyond its major theoretical impact, this result has not led to practically faster implementations yet; see [17]. In this paper, we explore the particular case when the ground field is the field of computable complex numbers and improve previously known results in this special case.

In the bit complexity model, the running time of an algorithm is analyzed in terms of the number of bit operations that need to be performed. In this paper, we always assume that this is done on a Turing machine with a finite and sufficiently large number of tapes [28].

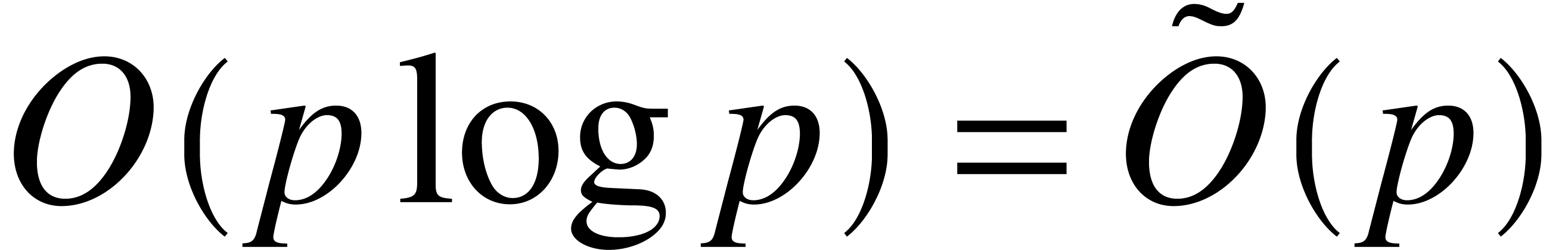

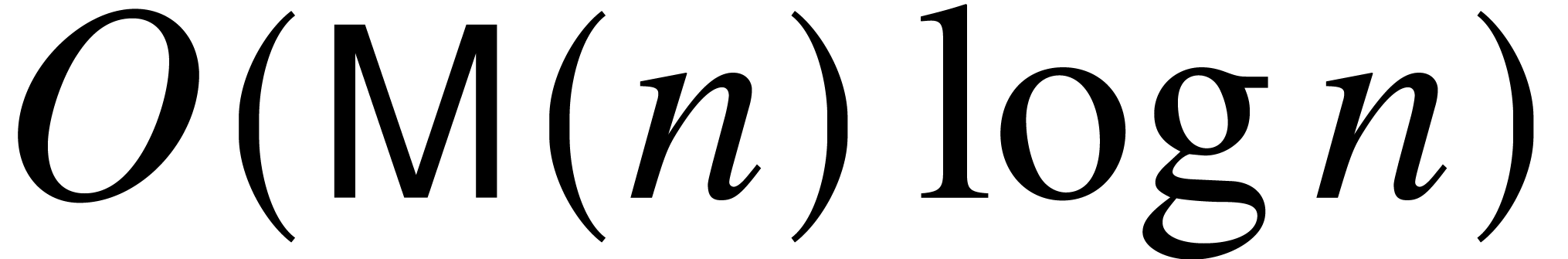

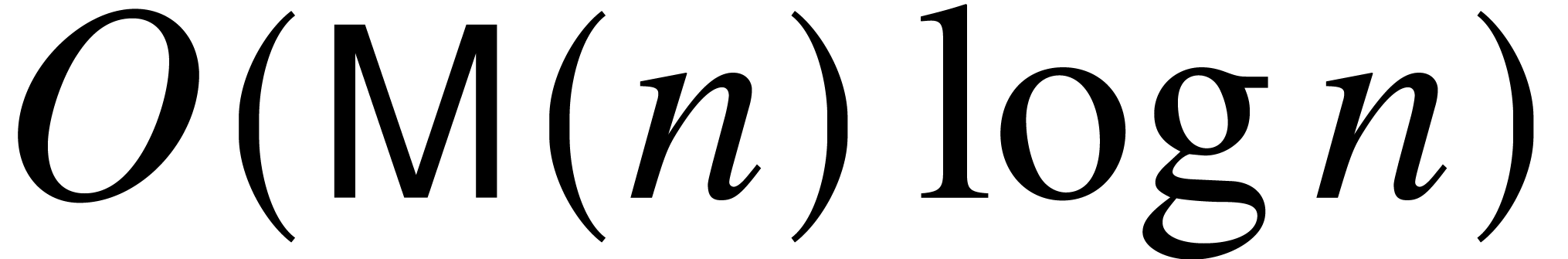

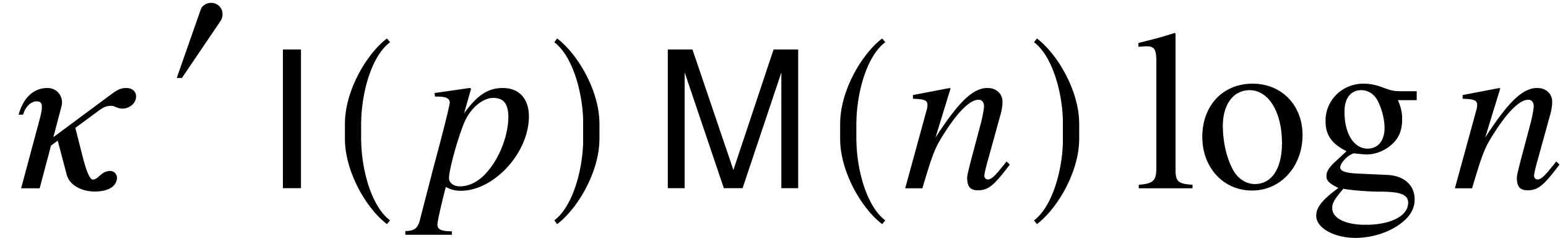

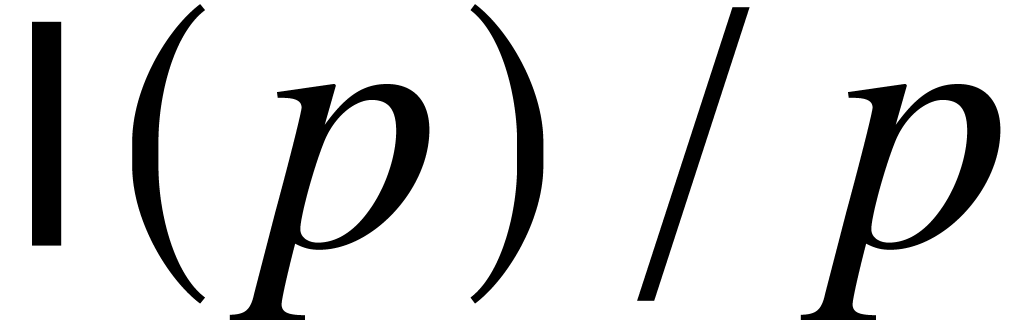

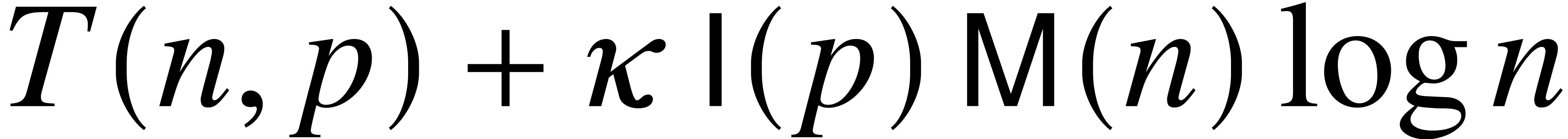

Multiplication is a central operation in both models. We write  for a function that bounds the bit-cost of an algorithm

which multiplies two integers of bit sizes at most

for a function that bounds the bit-cost of an algorithm

which multiplies two integers of bit sizes at most  , for the usual binary representation. The best

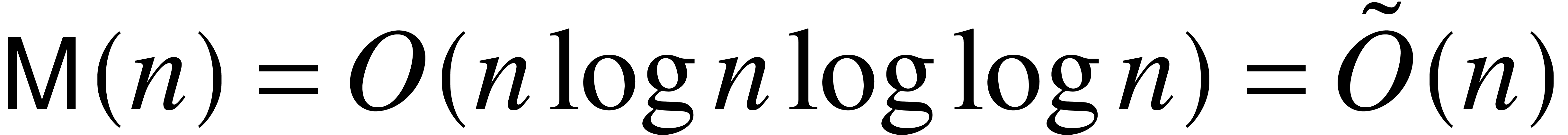

known bound [11] for

, for the usual binary representation. The best

known bound [11] for  is

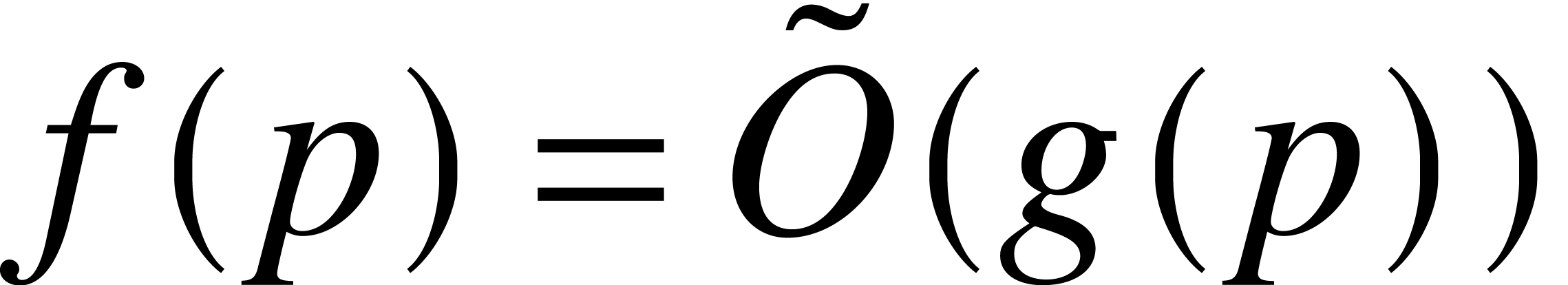

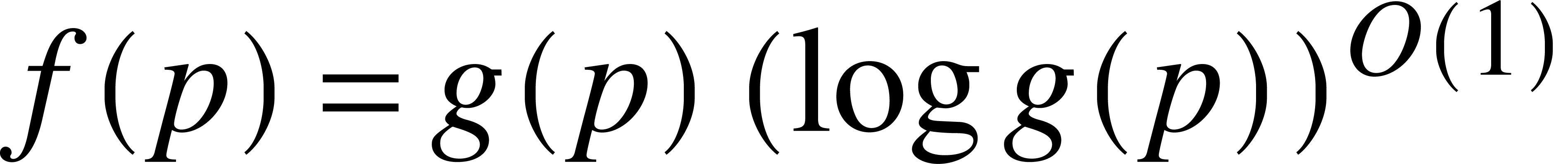

is  . Here, the soft-Oh

notation

. Here, the soft-Oh

notation  means that

means that  ; we refer the reader to [9, Chapter

25, Section 7] for technical details. We make the customary assumption

that

; we refer the reader to [9, Chapter

25, Section 7] for technical details. We make the customary assumption

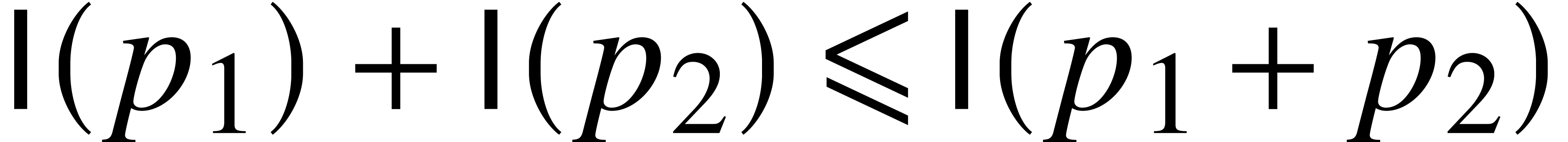

that  is non-decreasing. Notice that this

assumption implies the super-additivity of

is non-decreasing. Notice that this

assumption implies the super-additivity of  , namely

, namely  for all

for all  and

and  .

.

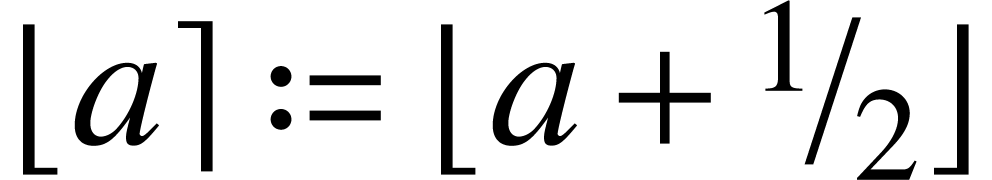

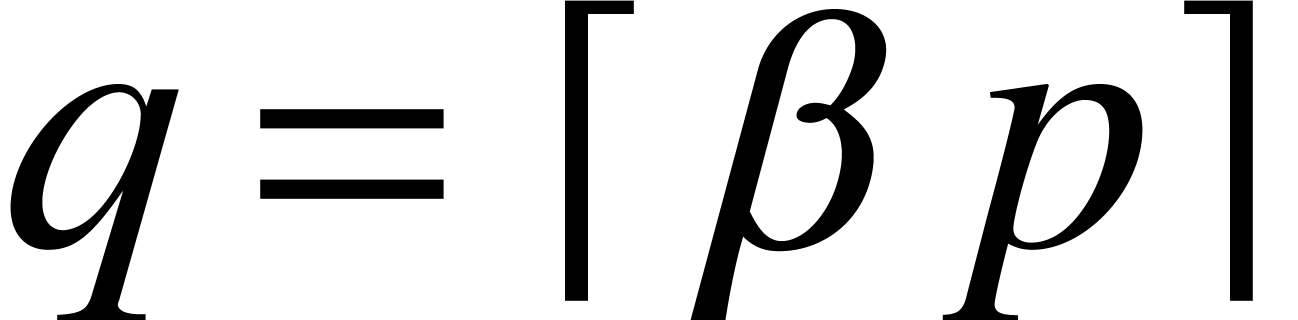

Let  be a real number, we write

be a real number, we write  for the largest integer less or equal to

for the largest integer less or equal to  and

and

for the closest integer to

for the closest integer to  .

.

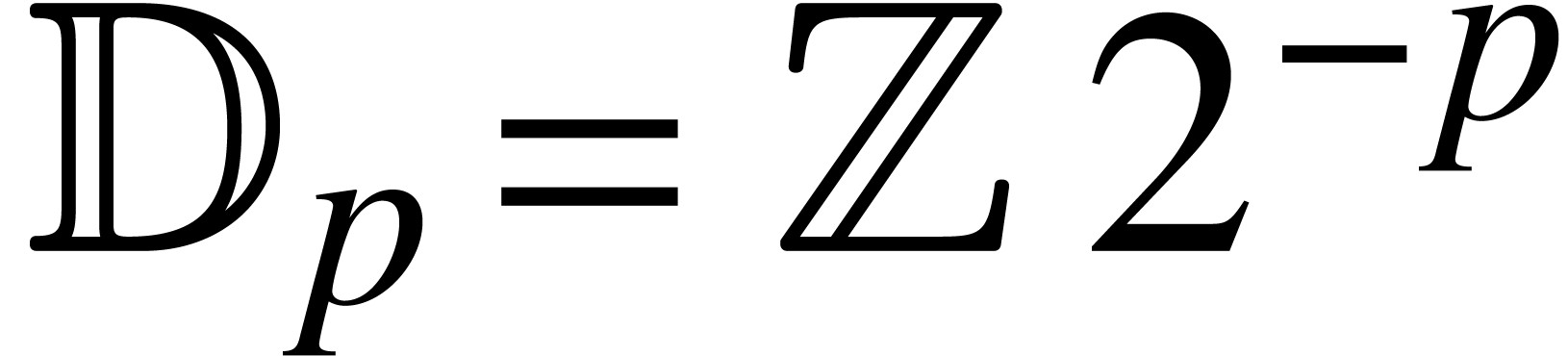

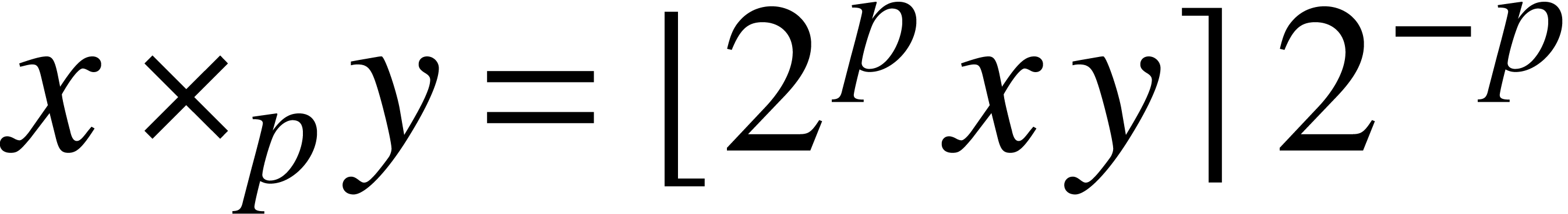

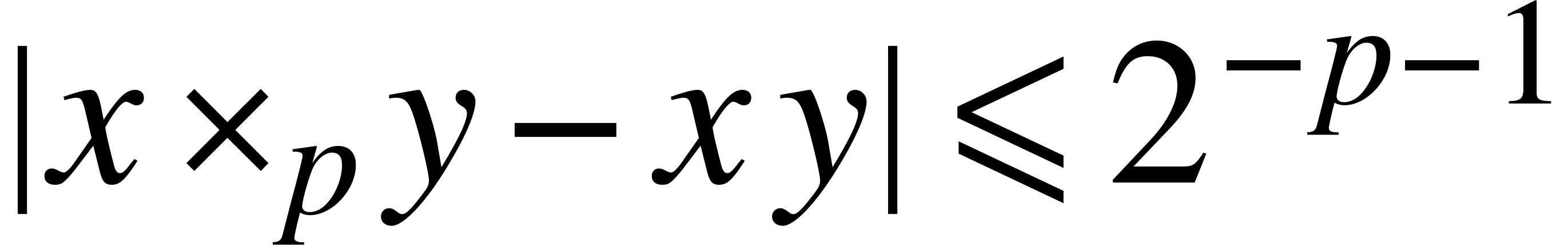

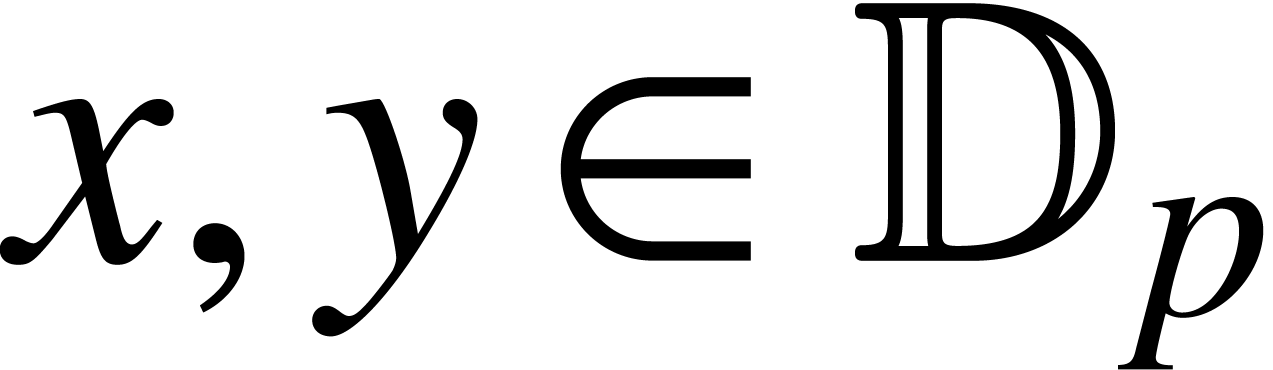

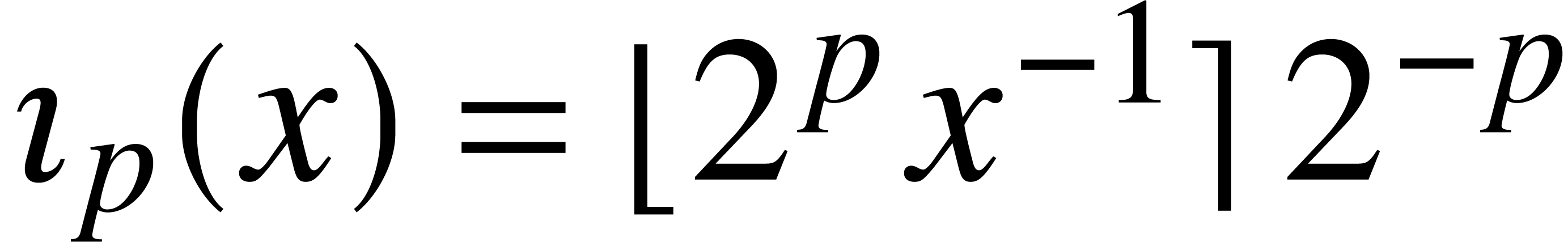

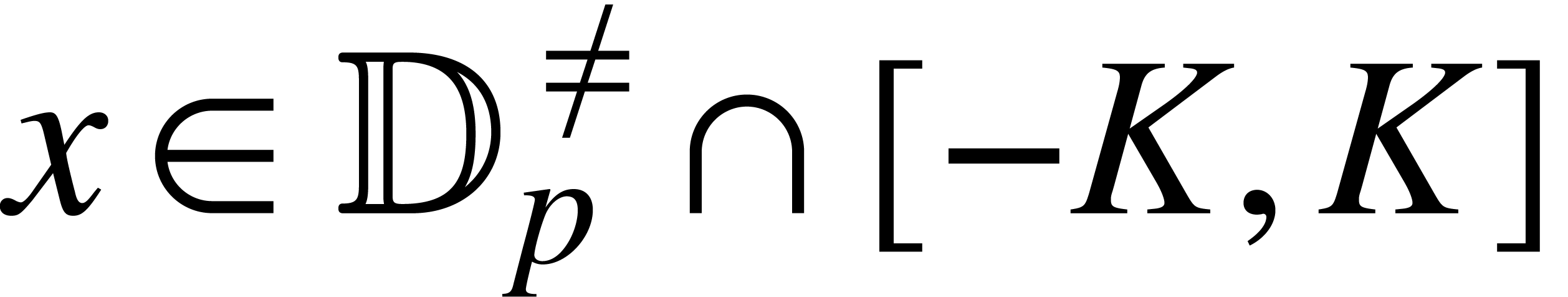

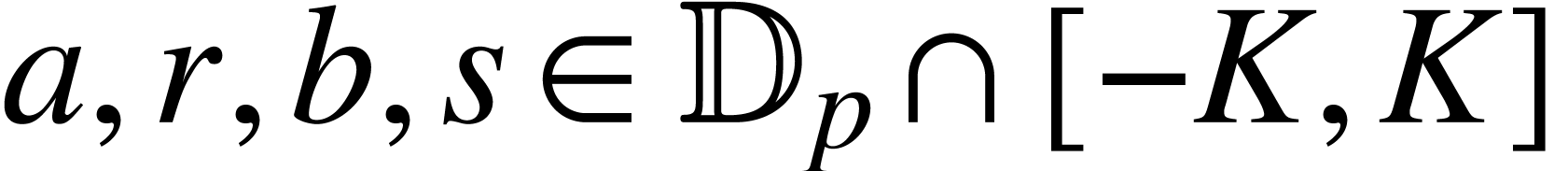

Given a precision  , we denote

by

, we denote

by  the set of fixed point numbers with

the set of fixed point numbers with

binary digits after the dot. This set

binary digits after the dot. This set  is clearly stable under addition and subtraction. We can

also define approximate multiplication

is clearly stable under addition and subtraction. We can

also define approximate multiplication  on

on  using

using  , so

, so

for all

for all  .

.

For any fixed constant  and

and  , we notice that

, we notice that  and

and

can be computed in time

can be computed in time  , whereas

, whereas  can be computed in

time

can be computed in

time  . Similarly, one may

define an approximate inversion

. Similarly, one may

define an approximate inversion  on

on  by

by  . For any

fixed constant

. For any

fixed constant  and

and  ,

we may compute

,

we may compute  in time

in time  .

.

Remark

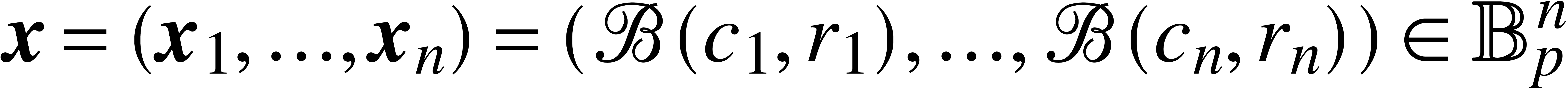

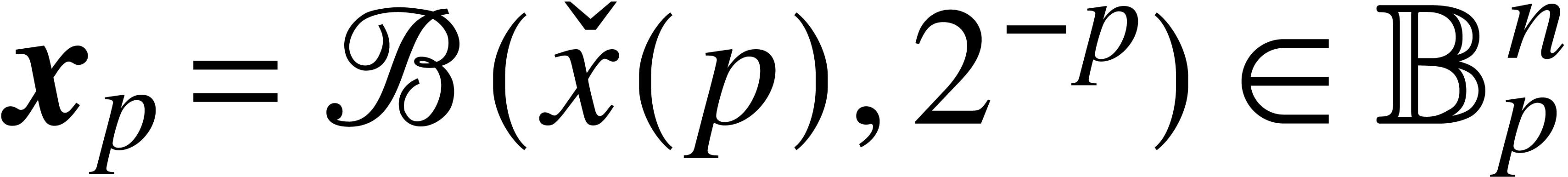

Ball arithmetic is used for providing reliable error bounds for

approximate computations. A ball is a set  with

with  and

and  .

From the computational point of view, we represent such balls by their

centers

.

From the computational point of view, we represent such balls by their

centers  and radii

and radii  .

We denote by

.

We denote by  the set of balls with centers in

the set of balls with centers in

and radii in

and radii in  .

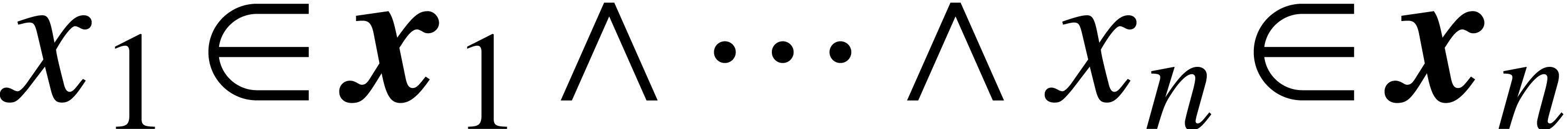

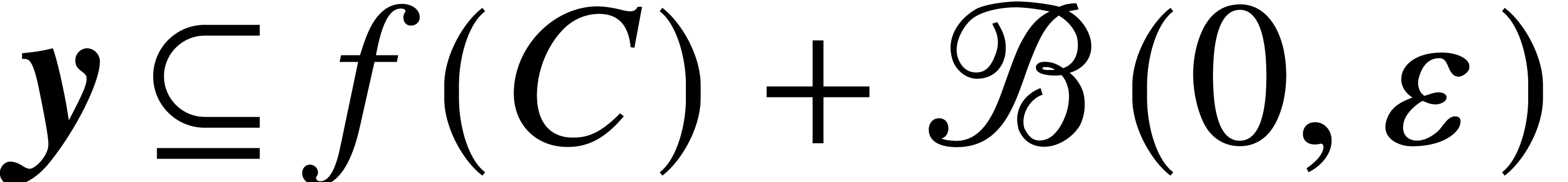

Given vectors

.

Given vectors  and

and  we

write

we

write  to mean

to mean  ,

and we also set

,

and we also set  .

.

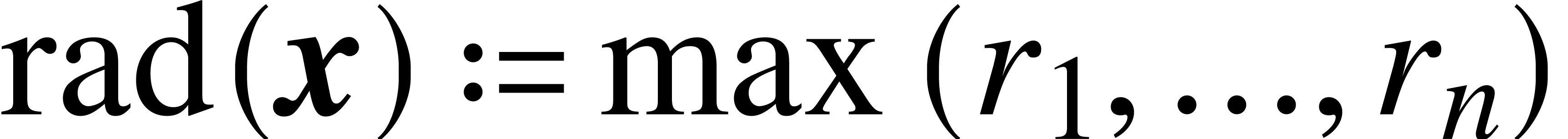

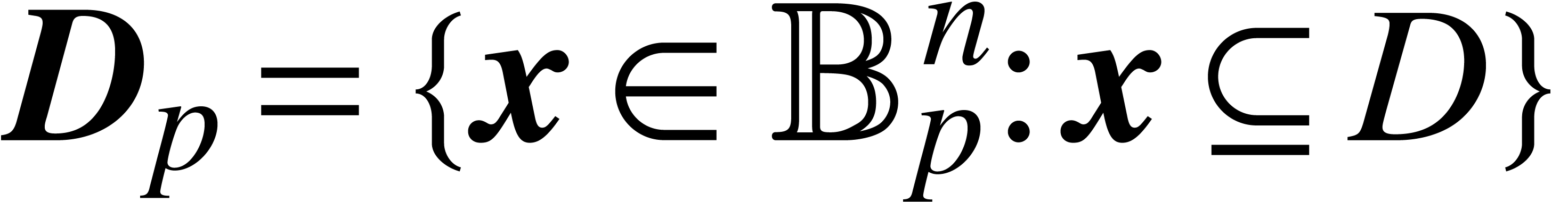

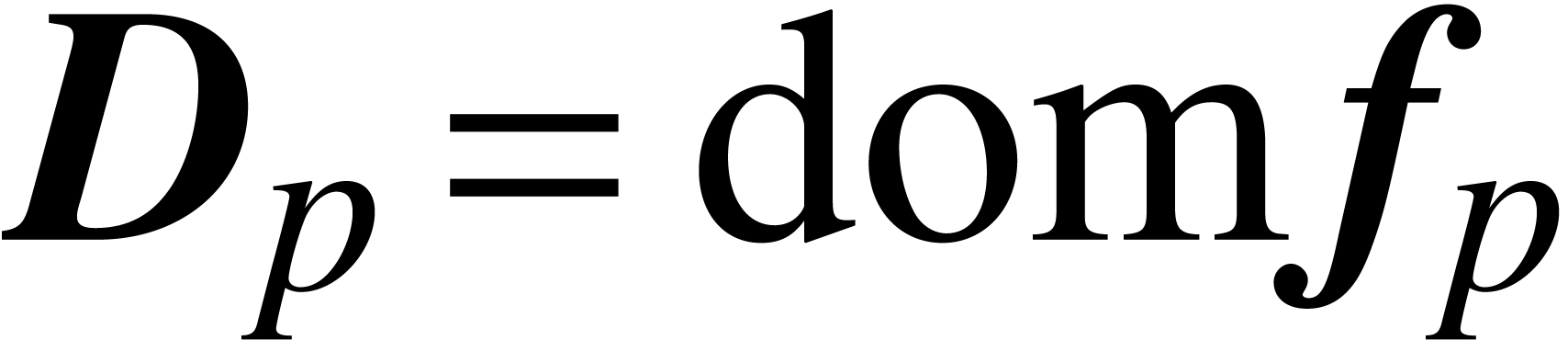

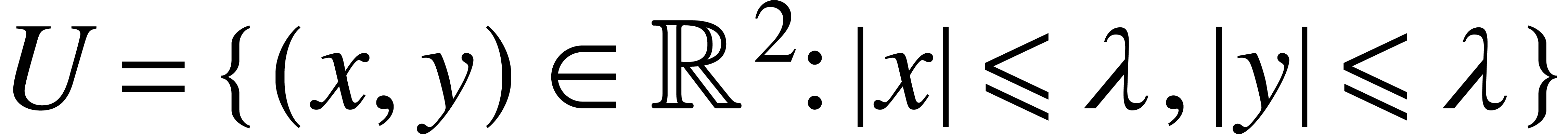

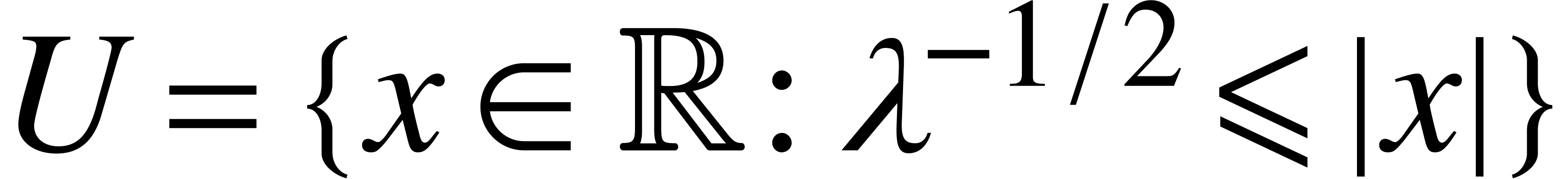

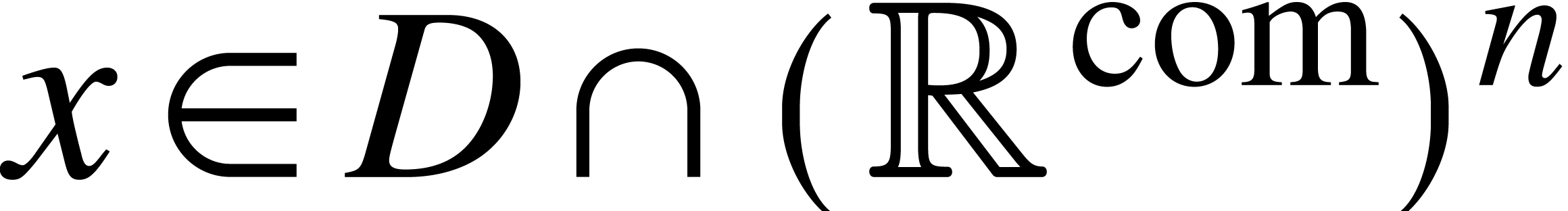

Let  be an open subset of

be an open subset of  . We say that

. We say that  is a

domain lift at precision

is a

domain lift at precision  if

if  for all

for all  . The

maximal such lift is given by

. The

maximal such lift is given by  .

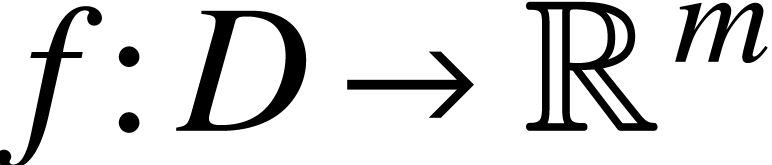

Given a function

.

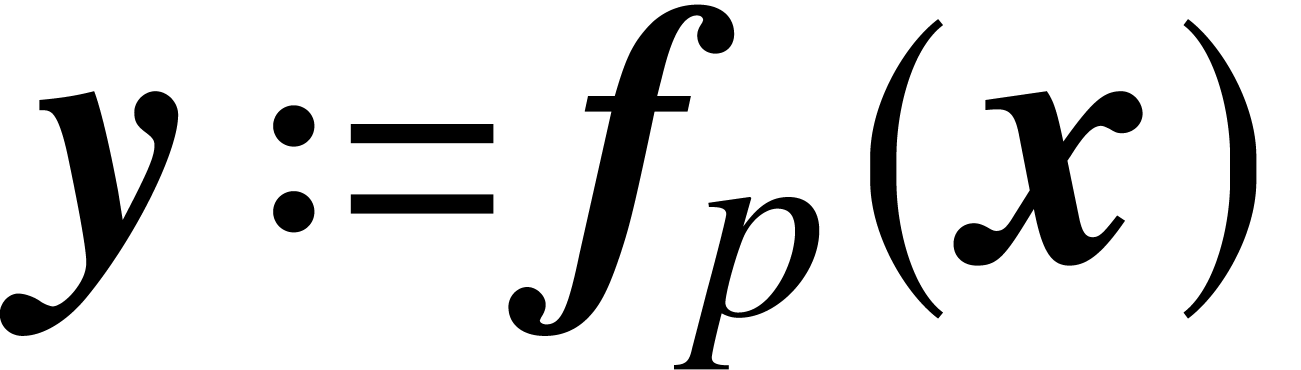

Given a function  , a ball

lift of

, a ball

lift of  at precision

at precision  is a function

is a function  , where

, where  is a domain lift of

is a domain lift of  at

precision

at

precision  , that satisfies

the inclusion property: for any

, that satisfies

the inclusion property: for any  and

and

, we have

, we have

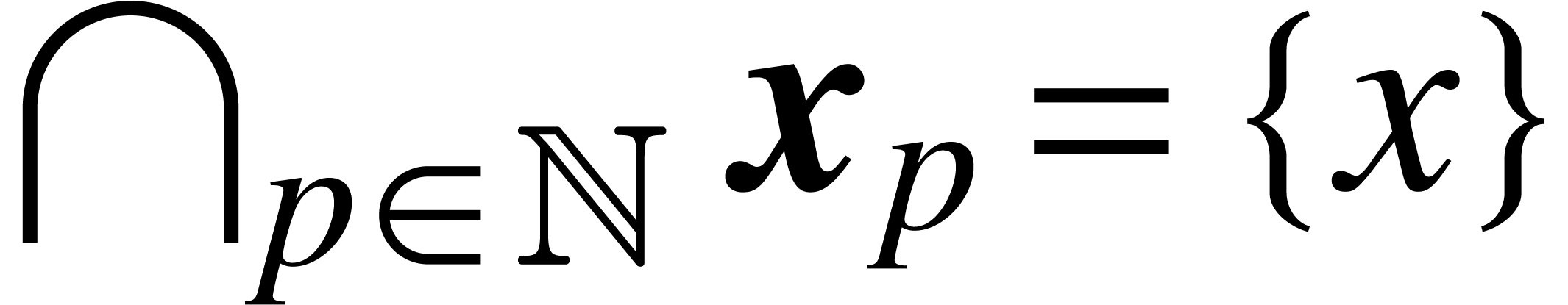

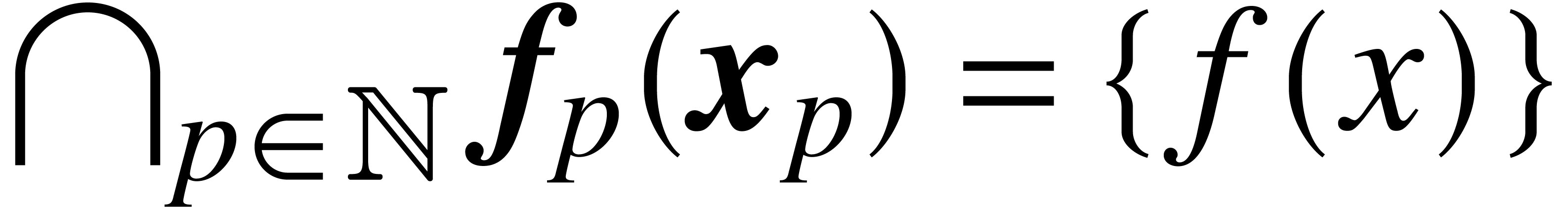

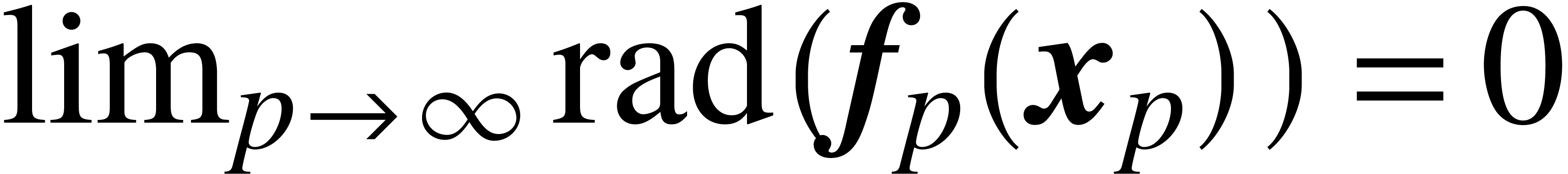

A ball lift  of

of  is a computable sequence

is a computable sequence  of ball lifts at every

precision such that for any sequence

of ball lifts at every

precision such that for any sequence  with

with  , we have

, we have

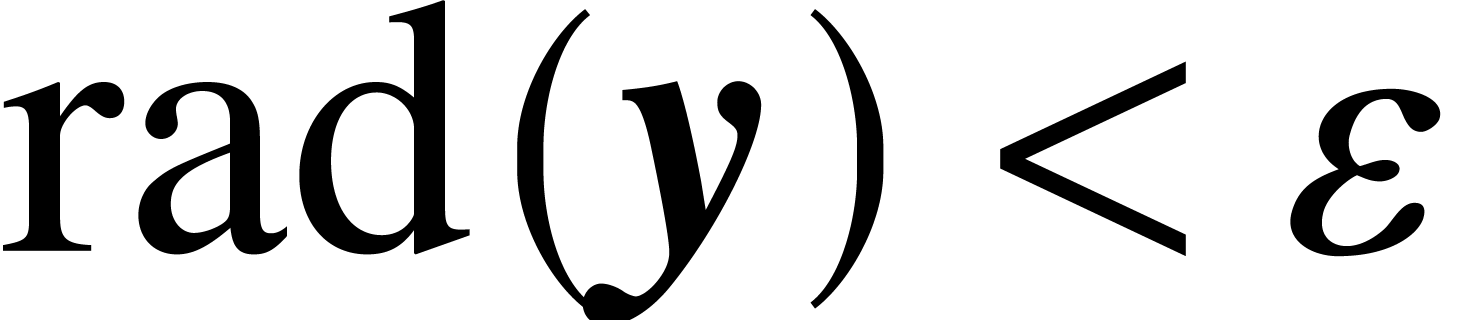

This condition implies the following:

We say that  is maximal if

is maximal if  is the maximal domain lift for each

is the maximal domain lift for each  . Notice that a function

. Notice that a function  must be continuous in order to admit a maximal ball lift.

must be continuous in order to admit a maximal ball lift.

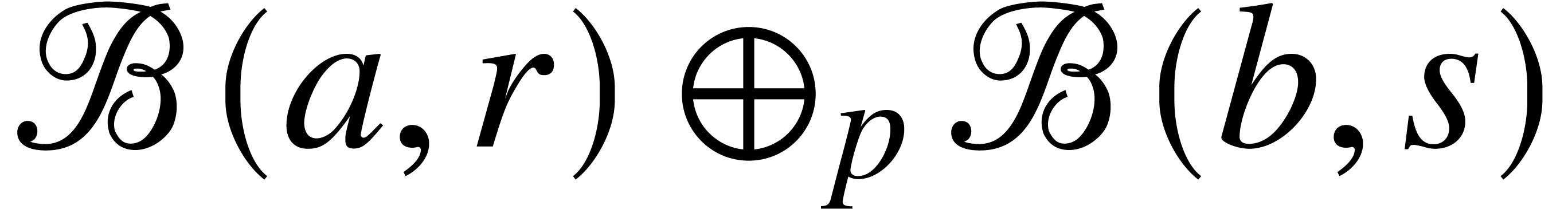

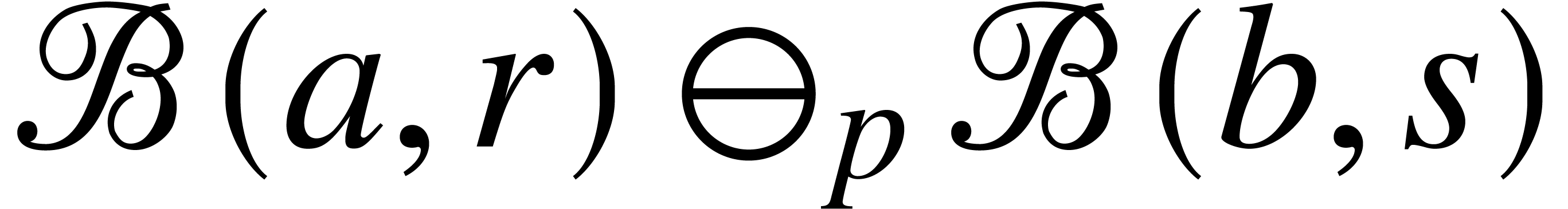

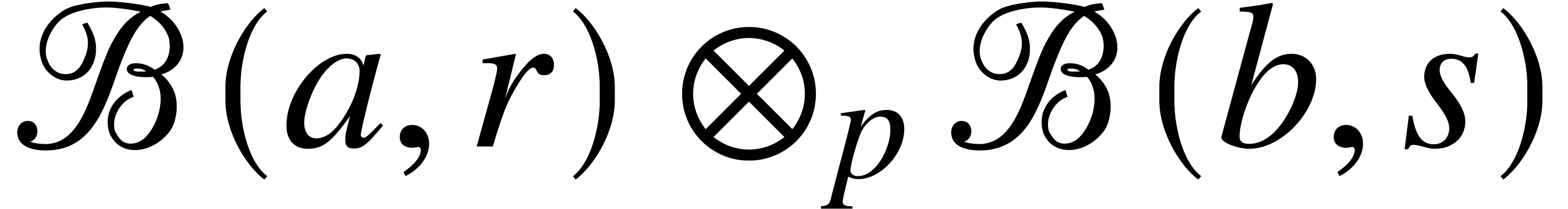

The following formulas define maximal ball lifts  ,

,  and

and  at precision

at precision  for the ring operations

for the ring operations  ,

,  and

and  :

:

The extra  in the formula for multiplication is

needed in order to counter the effect of rounding errors that might

occur in the three multiplications

in the formula for multiplication is

needed in order to counter the effect of rounding errors that might

occur in the three multiplications  ,

,

and

and  .

For

.

For  with

with  ,

the following formula also defines a maximal ball lift

,

the following formula also defines a maximal ball lift  at precision

at precision  for the inversion:

for the inversion:

For any fixed constant  and

and  , we notice that

, we notice that  ,

,

,

,  and

and

can be computed in time

can be computed in time  .

.

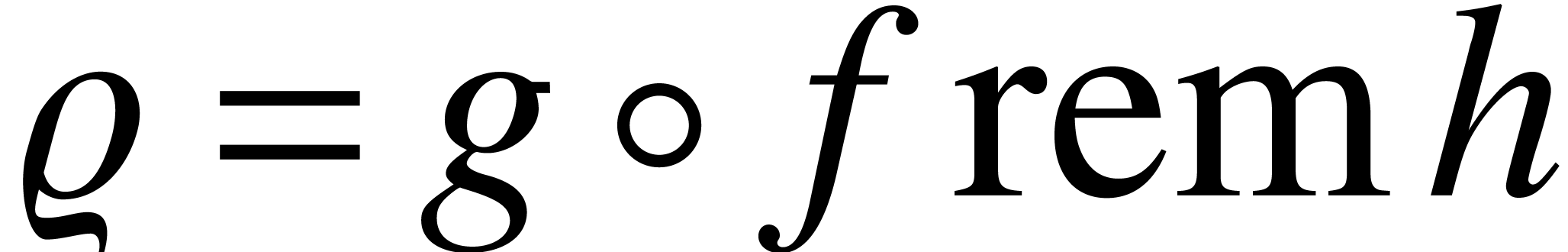

Let  be the ball lift of a function

be the ball lift of a function  with

with  . Consider

a second ball lift

. Consider

a second ball lift  of a function

of a function  with

with  . Then we

may define a ball lift

. Then we

may define a ball lift  of the composition

of the composition  as follows. For each precision

as follows. For each precision  , we take

, we take  ,

where

,

where  is the restriction of

is the restriction of  to the set

to the set  .

.

We shall use ball arithmetic for the computation of complex functions

simply through the consideration of real and

imaginary parts. This point of view is sufficient for the asymptotic

complexity analyses of the present paper. Of course, it would be more

efficient to directly compute with complex balls (i.e.

balls with a complex center and a real radius), but this would involve

approximate square roots and ensuing technicalities.

simply through the consideration of real and

imaginary parts. This point of view is sufficient for the asymptotic

complexity analyses of the present paper. Of course, it would be more

efficient to directly compute with complex balls (i.e.

balls with a complex center and a real radius), but this would involve

approximate square roots and ensuing technicalities.

Assume that we are given the ball lift  of a

function

of a

function  with

with  .

Given a subset

.

Given a subset  and constants

and constants  , we say that the ball lift

, we say that the ball lift  is

is  -Lipschitz on

-Lipschitz on

if

if

For instance, the ball lifts  and

and  of addition and subtraction are

of addition and subtraction are  -Lipschitz on

-Lipschitz on  .

Similarly, the ball lift

.

Similarly, the ball lift  of multiplication is

of multiplication is

-Lipschitz on

-Lipschitz on  (by taking

(by taking  ),

whereas the ball lift

),

whereas the ball lift  of

of  is

is  -Lipschitz on

-Lipschitz on  .

.

Given  and

and  as above, we

say that

as above, we

say that  is locally

is locally  -Lipschitz on

-Lipschitz on  if

if  is

is  -Lipschitz

on each compact subset of

-Lipschitz

on each compact subset of  .

We define

.

We define  to be

to be  -Lipschitz

(resp. locally

-Lipschitz

(resp. locally  -Lipschitz) on

-Lipschitz) on

if there exists a constant

if there exists a constant  for which

for which  is

is  -Lipschitz

(resp. locally

-Lipschitz

(resp. locally  -Lipschitz).

If

-Lipschitz).

If  is locally

is locally  -Lipschitz

on

-Lipschitz

on  , then it is not hard to

see that

, then it is not hard to

see that  is necessarily locally Lipschitz on

is necessarily locally Lipschitz on

, with Lipschitz constant

, with Lipschitz constant

. That is,

. That is,

In fact, the requirement that a computable ball lift  is

is  -Lipschitz implies that we

have a way to compute high quality error bounds. We finally define

-Lipschitz implies that we

have a way to compute high quality error bounds. We finally define  to be Lipschitz (resp. locally Lipschitz) on

to be Lipschitz (resp. locally Lipschitz) on  if there exists a constant

if there exists a constant  for which

for which  is

is  -Lipschitz

(resp. locally

-Lipschitz

(resp. locally  -Lipschitz).

-Lipschitz).

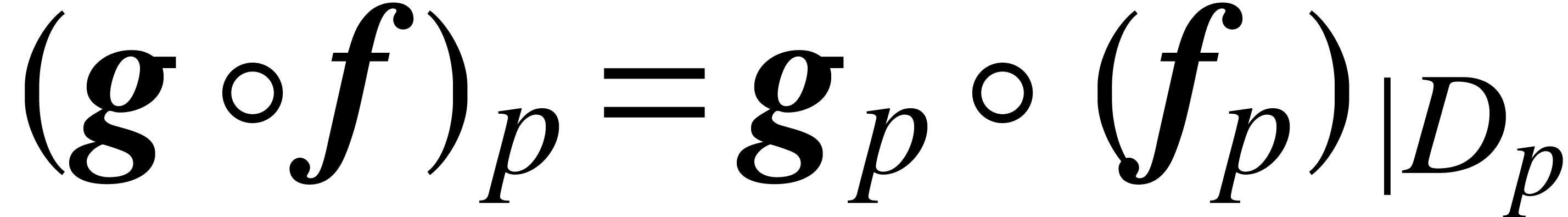

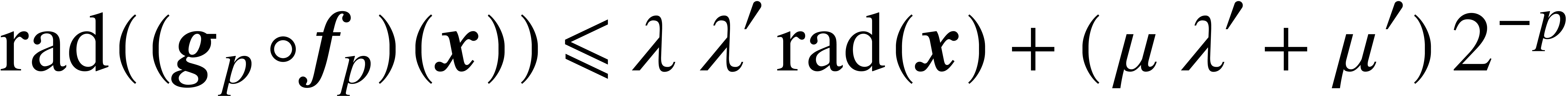

be a locally

be a locally  -Lipschitz

ball lift of

-Lipschitz

ball lift of  on an open set

on an open set  . Let

. Let  be a locally

be a locally

-Lipschitz ball lift of

-Lipschitz ball lift of

on an open set

on an open set  .

If

.

If  and

and  ,

then

,

then  is a locally

is a locally  -Lipschitz ball lift of

-Lipschitz ball lift of  on

on

.

.

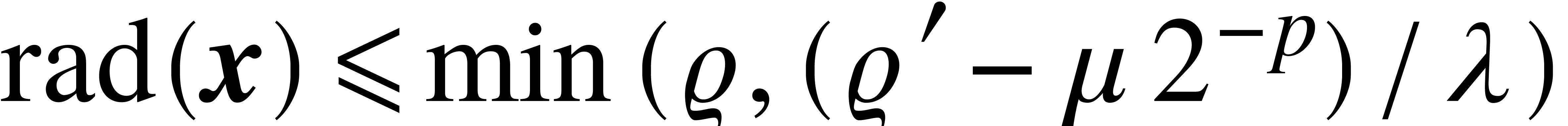

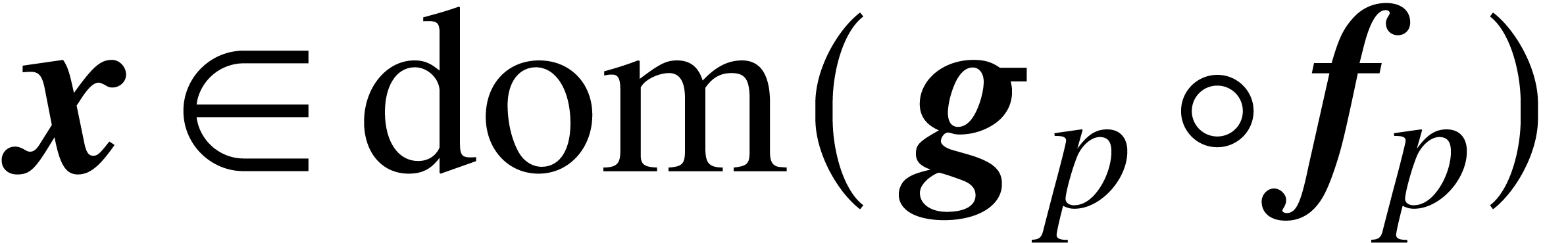

Proof. Consider a compact subset  . Since this implies

. Since this implies  to

be a compact subset of

to

be a compact subset of  , it

follows that there exists an

, it

follows that there exists an  such that

such that  . Let

. Let  ,

,

and

and  be such that for any

be such that for any

,

,  and

and

, we have

, we have

Given  with

with  and

and  , it follows that

, it follows that  satisfies

satisfies  , whence

, whence  . If we also assume that

. If we also assume that  , then it also follows that

, then it also follows that  , whence

, whence  and

and  . In other words, if

. In other words, if  and

and  , then

, then

and

and  .

.

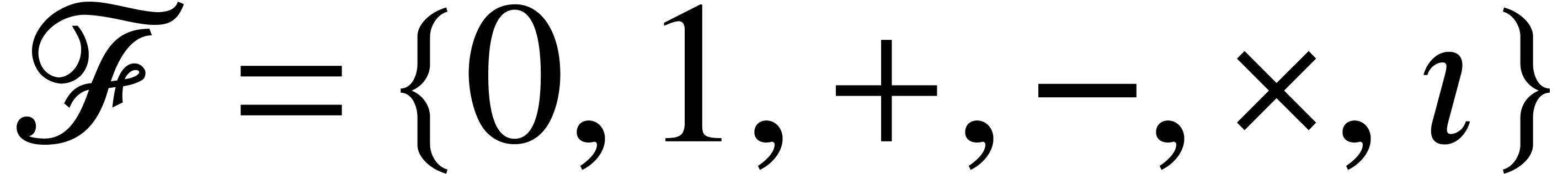

Informally speaking, a straight-line program is a sequence of programing instructions that does not contain any loop or branching. Each instruction applies a single elementary operation to constants and values stored in variables. The result of the instruction is then stored into a variable. A detailed presentation of straight-line programs can be found in the book [6]. From the mathematical point of view, a straight-line program can be regarded as a data structure that encodes of a composition of elementary operations.

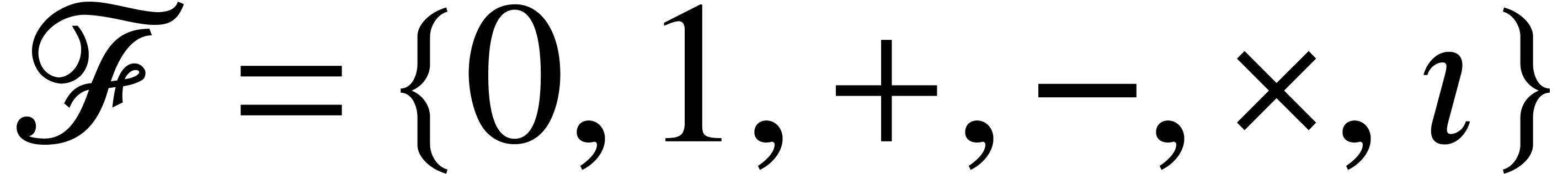

For straight-line programs over rings (and fields), elementary operations are usually the arithmetic ones: addition, subtraction, products (and inversions). Our application to modular composition in section 5 only requires these operations. However in the present numerical context, it is relevant to allow us for wider sets of elementary operations such as exponentials, logarithms, trigonometric functions, etc. The following paragraphs thus formalize straight-line programs for a wide class of numerical algorithms. We take care of definition domains and allow for changes of evaluation domains.

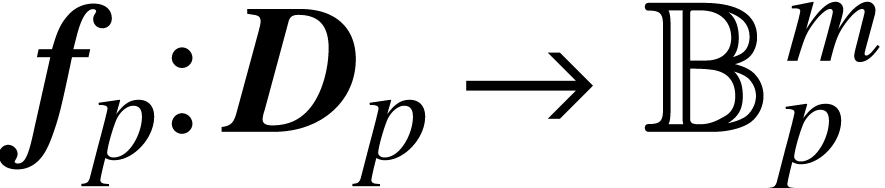

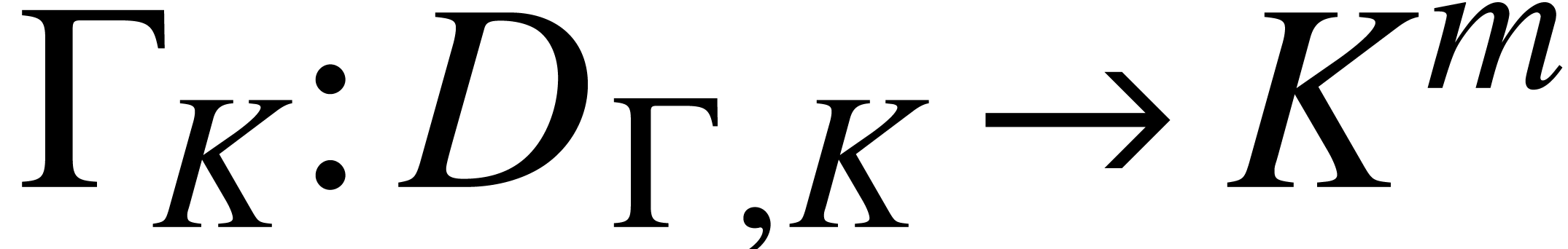

A signature is a finite or countable set of function symbols

together with an arity

together with an arity  for each

for each  . A model

for

. A model

for  is a set

is a set  together

with a function

together

with a function  with

with  for

each

for

each  . If

. If  is a topological space, then

is a topological space, then  is required to be

an open subset of

is required to be

an open subset of  . Let

. Let  be a countable and ordered set of variable symbols.

be a countable and ordered set of variable symbols.

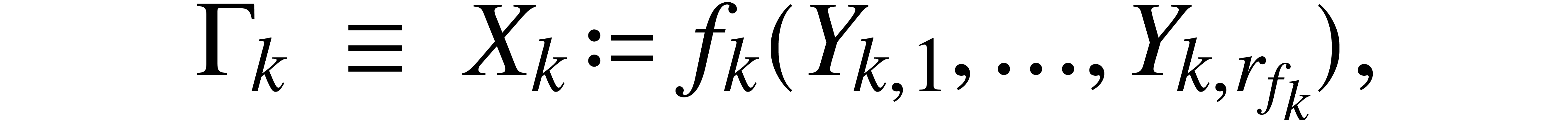

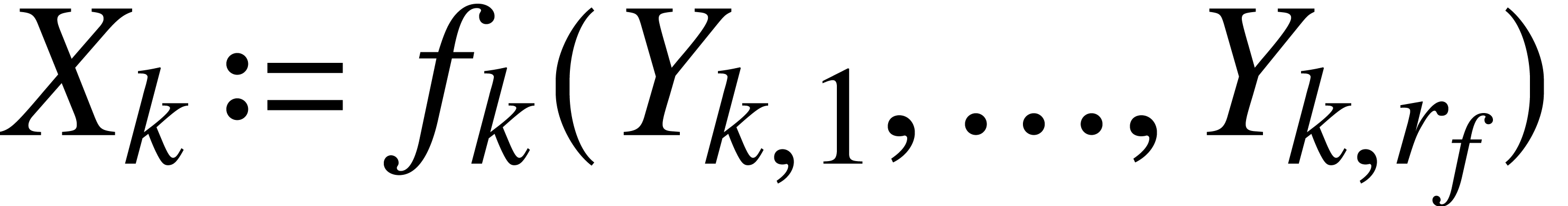

A straight-line program (SLP)  with

signature

with

signature  is a sequence

is a sequence  of instructions of the form

of instructions of the form

where  and

and  ,

together with a subset

,

together with a subset  of output

variables. Variables that appear for the first time in the sequence

in the right-hand side of an instruction are called input

variables. We denote by

of output

variables. Variables that appear for the first time in the sequence

in the right-hand side of an instruction are called input

variables. We denote by  the set of input

variables. The number

the set of input

variables. The number  is called the

length of

is called the

length of  .

.

There exist unique sequences  and

and  with

with  and

and  . Given a model

. Given a model  of

of  we can run

we can run  for inputs in

for inputs in  , provided that the arguments

, provided that the arguments  are always in the domain of

are always in the domain of  when executing the instruction

when executing the instruction  .

Let

.

Let  be the set of tuples

be the set of tuples  on which

on which  can be run. Given

can be run. Given  , let

, let  denote the value

of

denote the value

of  at the end of the program. Hence

at the end of the program. Hence  gives rise to a function

gives rise to a function  .

.

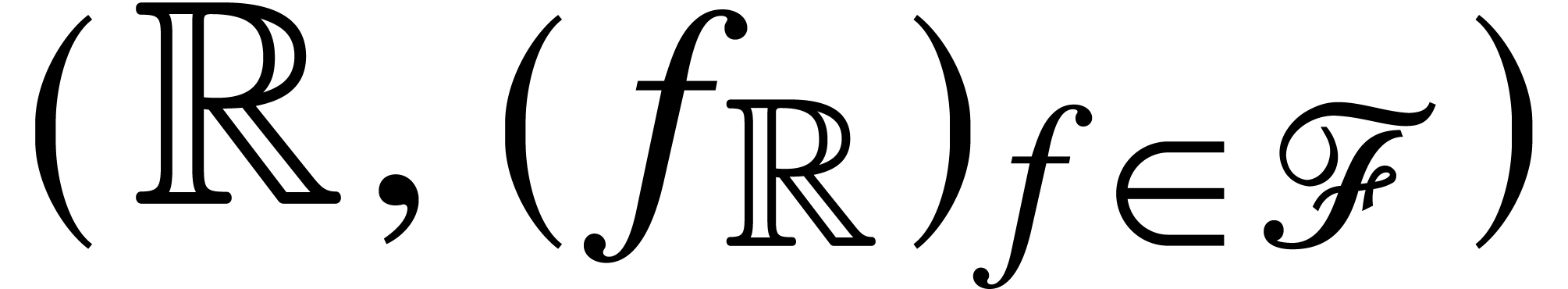

Now assume that  is a model for

is a model for  and that we are given a ball lift

and that we are given a ball lift  of

of  for each

for each  . Then

. Then

is also a model for

is also a model for  at

each precision

at

each precision  , by taking

, by taking

for each

for each  .

Consequently, any SLP

.

Consequently, any SLP  as above gives rise to

both a function

as above gives rise to

both a function  and a ball lift

and a ball lift  at each precision

at each precision  . The

sequence

. The

sequence  thus provides us with a ball lift

thus provides us with a ball lift  for

for  .

.

of

of  is Lipschitz for each

is Lipschitz for each  ,

then

,

then  is again Lipschitz.

is again Lipschitz.

Proof. For each model  of

of

, for each variable

, for each variable  and each input

and each input  ,

let

,

let  denote the value of

denote the value of  after step

after step  . We may regard

. We may regard

as a function from

as a function from  to

to

. In particular, we obtain a

computable sequence of functions

. In particular, we obtain a

computable sequence of functions  that give rise

to a ball lift

that give rise

to a ball lift  of

of  .

Let us show by induction over

.

Let us show by induction over  that

that  is Lipschitz for every

is Lipschitz for every  .

This is clear for

.

This is clear for  , so let

, so let

. If

. If  , then we have

, then we have  ;

otherwise, we have

;

otherwise, we have

In both cases, it follows from Lemma 2 that  is again a Lipschitz ball lift. We conclude by noticing

that

is again a Lipschitz ball lift. We conclude by noticing

that  .

.

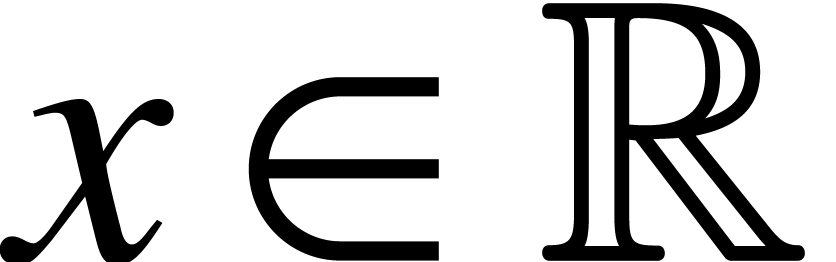

A real number  is said to be computable

if there exists an approximation algorithm

is said to be computable

if there exists an approximation algorithm  that takes

that takes  on input and produces

on input and produces  on output with

on output with  (we say that

(we say that  is a

is a  -approximation

of

-approximation

of  ). We denote by

). We denote by  the field of computable real numbers.

the field of computable real numbers.

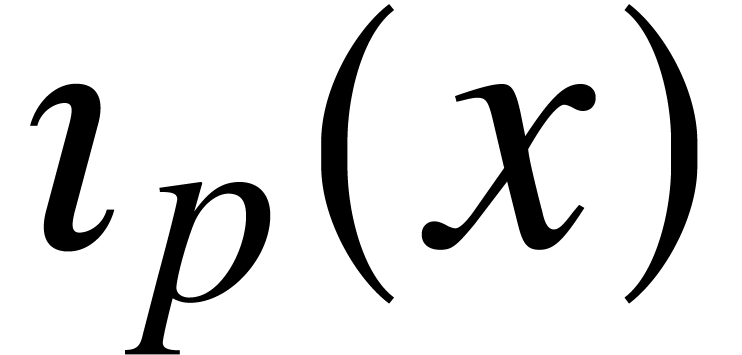

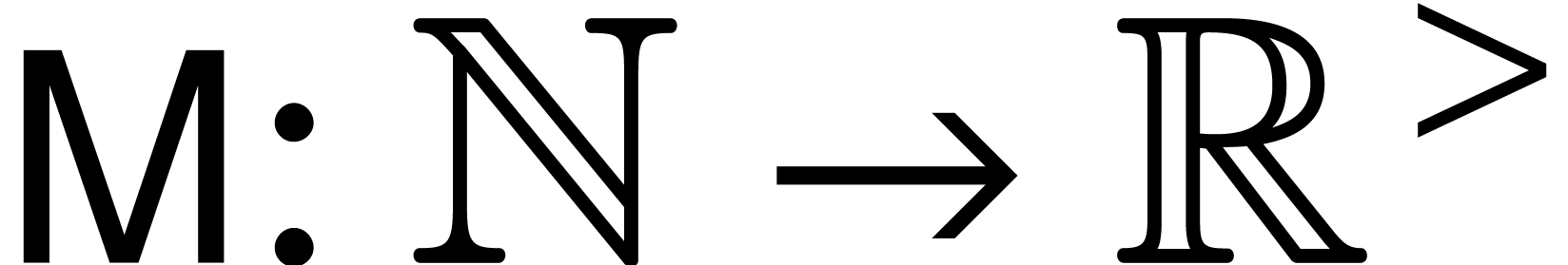

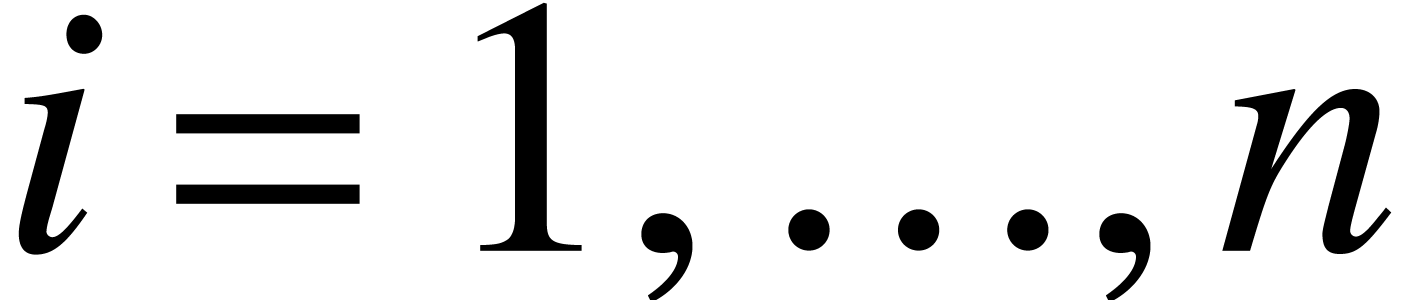

Let  be a nondecreasing function. We say that a

computable real number

be a nondecreasing function. We say that a

computable real number  has ultimate

complexity

has ultimate

complexity  if it admits an approximation

algorithm

if it admits an approximation

algorithm  that computes

that computes  in time

in time  for some fixed constant

for some fixed constant  . The fact that we allow

. The fact that we allow  to be computed in time

to be computed in time  and not

and not  is justified by the observation that the position of the “binary

dot” is somewhat arbitrary in the approximation process of a

computable number.

is justified by the observation that the position of the “binary

dot” is somewhat arbitrary in the approximation process of a

computable number.

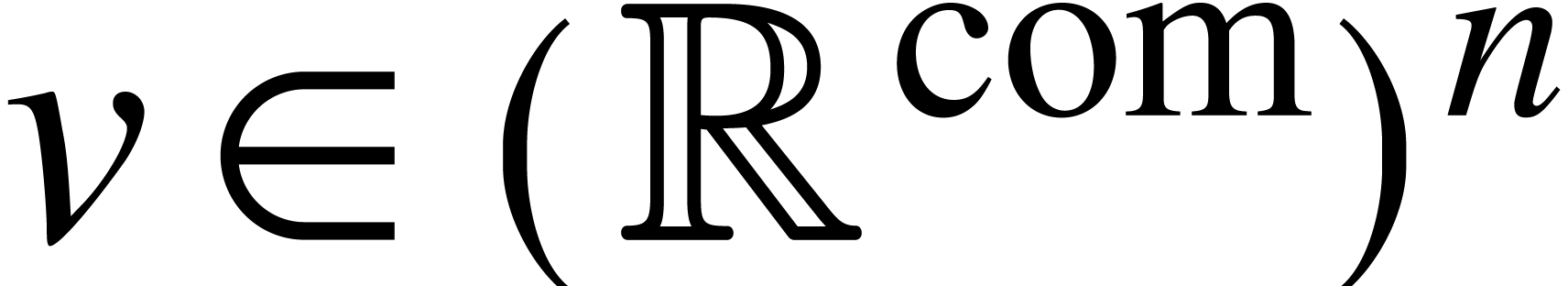

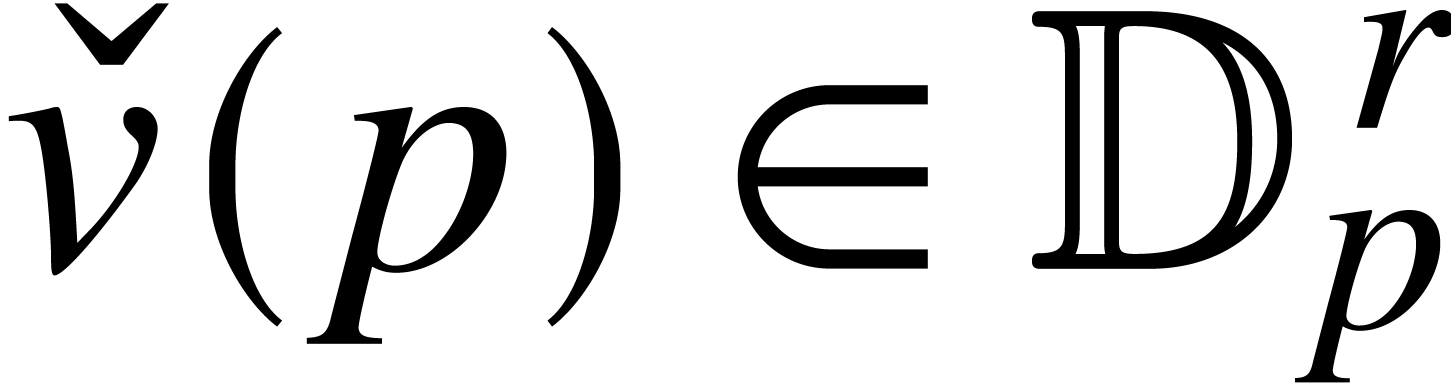

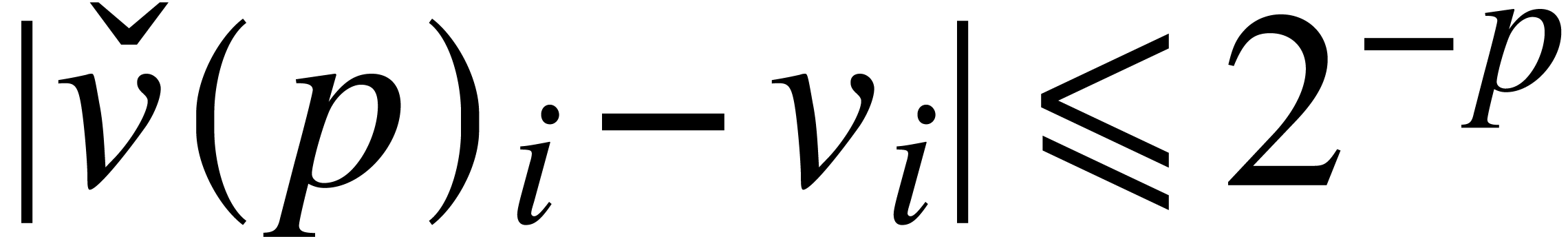

The notion of approximation algorithm generalizes to vectors with real

coefficients: given  , an

approximation algorithm for

, an

approximation algorithm for  as a whole is an

algorithm

as a whole is an

algorithm  that takes

that takes  on

input and returns

on

input and returns  on output with

on output with  for

for  . This

definition naturally extends to any other mathematical objects that can

be encoded by vectors of real numbers: complex numbers (by their real

and complex parts), polynomials and matrices (by their vectors of

coefficients), etc. The notion of ultimate complexity also extends to

any of these objects.

. This

definition naturally extends to any other mathematical objects that can

be encoded by vectors of real numbers: complex numbers (by their real

and complex parts), polynomials and matrices (by their vectors of

coefficients), etc. The notion of ultimate complexity also extends to

any of these objects.

A ball lift  is said to be computable if

there exists an algorithm for computing

is said to be computable if

there exists an algorithm for computing  for all

for all

. A computable ball lift

. A computable ball lift  of a function

of a function  with

with  allows us to compute the restriction of

allows us to compute the restriction of  to

to  : given

: given  with approximation algorithm

with approximation algorithm  ,

by taking

,

by taking  , we have

, we have  ,

,  ,

and

,

and  .

.

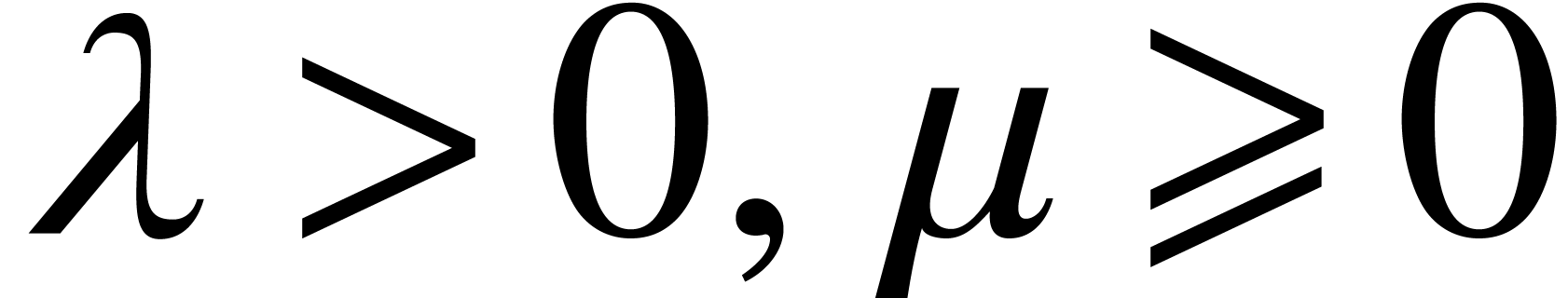

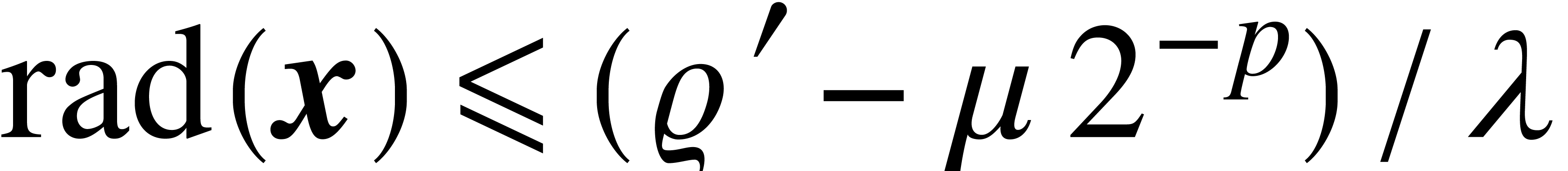

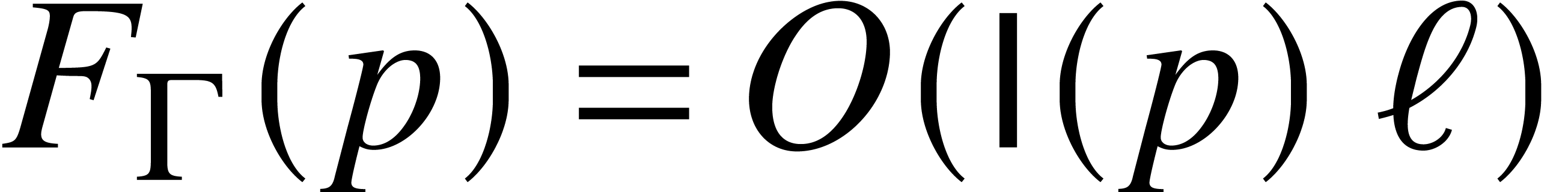

Let  be a nondecreasing function and assume that

be a nondecreasing function and assume that

is open. We say that

is open. We say that  has

ultimate complexity

has

ultimate complexity  if for every

compact set

if for every

compact set  , there exist

constants

, there exist

constants  ,

,  and

and  such that for any

such that for any  and

and  with

with  and

and  , we can compute

, we can compute  in time

in time  . For instance,

. For instance,  and

and  have ultimate complexity

have ultimate complexity

, whereas

, whereas  and

and  have ultimate complexity

have ultimate complexity  .

.

is locally

Lipschitz. If

is locally

Lipschitz. If  has ultimate complexity

has ultimate complexity  and

and  has ultimate complexity

has ultimate complexity

, then

, then  has ultimate complexity

has ultimate complexity  .

.

Proof. Let  be an

approximation algorithm for

be an

approximation algorithm for  of complexity

of complexity  , where

, where  . There exist

. There exist  and a compact

ball

and a compact

ball  around

around  with

with  and such that

and such that  is included in

is included in

for all

for all  .

There also exists a constant

.

There also exists a constant  such that

such that  can be computed in time

can be computed in time  for all

for all

. Since

. Since  is locally Lipschitz, there exists yet another constant

is locally Lipschitz, there exists yet another constant  such that

such that  for

for  .

For

.

For  and

and  ,

this shows that we may compute a

,

this shows that we may compute a  -approximation

of

-approximation

of  in time

in time  .

.

and

g are two locally Lipschitz ball lifts of

and

g are two locally Lipschitz ball lifts of  and

and  that can be composed. If

that can be composed. If

and g have respective

ultimate complexities

and g have respective

ultimate complexities  and

and  , then

, then  has ultimate

complexity

has ultimate

complexity  .

.

Proof. In a similar way as in the proof of Lemma

2, the evaluation of  for

for  with

with  and

and  boils down to the evaluation of

boils down to the evaluation of  at

at  and the evaluation of

and the evaluation of  at

at  with

with  . Modulo a

further lowering of

. Modulo a

further lowering of  and

and  if necessary, these evaluations can be done in time

if necessary, these evaluations can be done in time  and

and  for suitable

for suitable  and

sufficiently large

and

sufficiently large  .

.

is a model for the

function symbols

is a model for the

function symbols  , and that

we are given a computable ball lift

, and that

we are given a computable ball lift  of

of  for each

for each  .

For each

.

For each  , assume in

addition that

, assume in

addition that  is locally Lipschitz, and let

is locally Lipschitz, and let

be a nondecreasing function such that

be a nondecreasing function such that  has ultimate complexity

has ultimate complexity  . Let

. Let  be an SLP over

be an SLP over  whose

whose  -th

instruction

-th

instruction  writes

writes  . Then, the ball lift

. Then, the ball lift  of

of

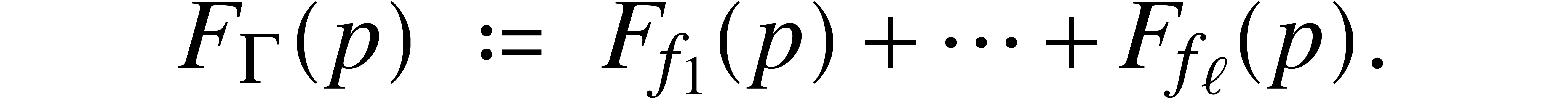

has ultimate complexity

has ultimate complexity

Proof. This is a direct consequence of

Proposition 5.

be an SLP of length

be an SLP of length  over

over  (where

(where  and

and  are naturally seen as constant functions

of arity zero). Then, there exists a ball lift

are naturally seen as constant functions

of arity zero). Then, there exists a ball lift  of

of  with ultimate complexity

with ultimate complexity  .

.

Proof. We use the ball lifts of section 2

for each  : they are locally

Lipschitz and computable with ultimate complexity

: they are locally

Lipschitz and computable with ultimate complexity  . We may thus apply the Theorem 6 to

obtain

. We may thus apply the Theorem 6 to

obtain  .

.

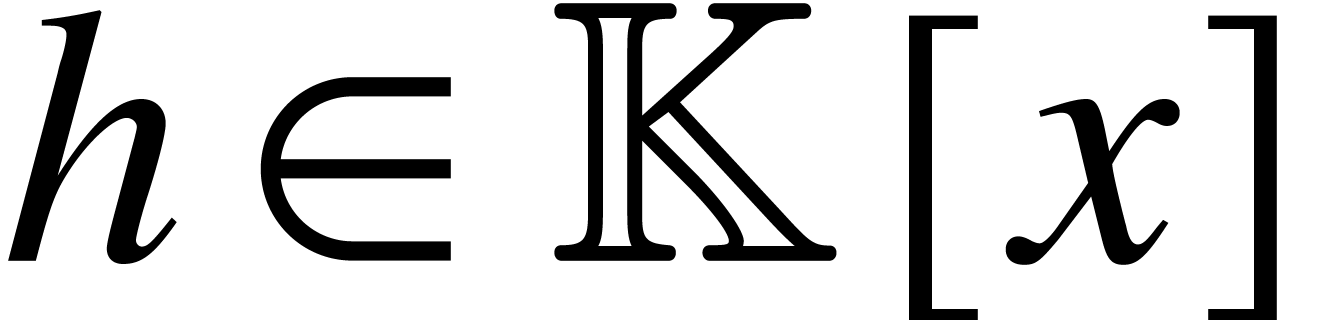

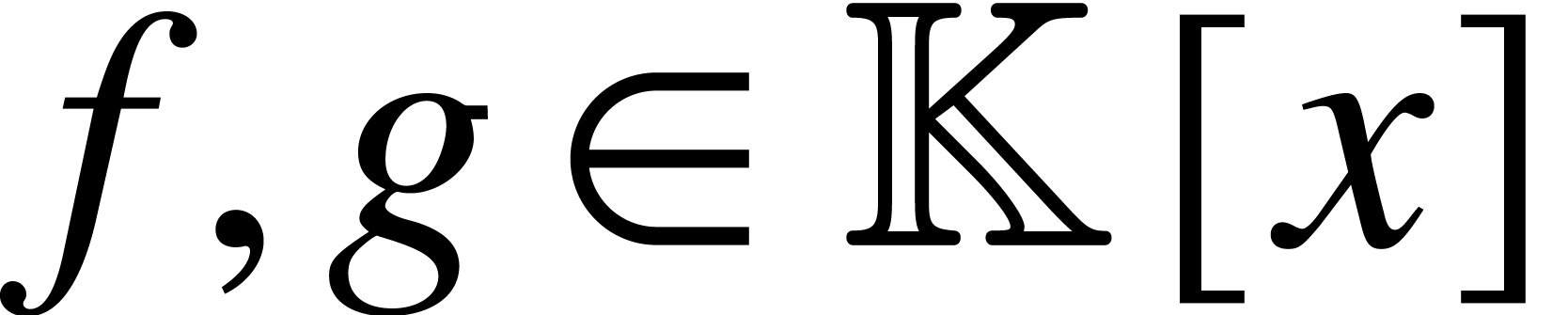

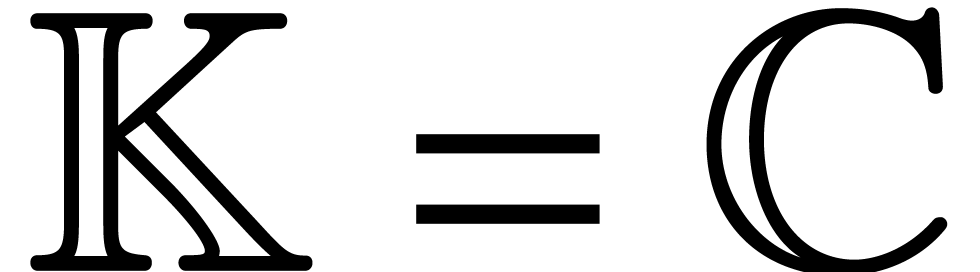

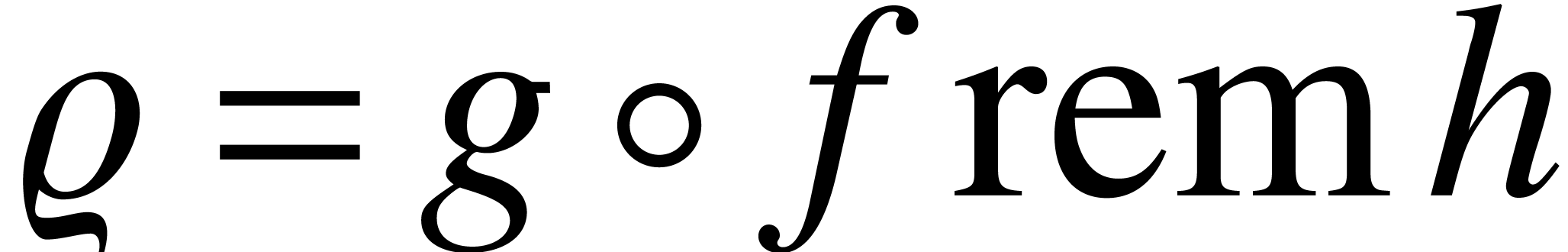

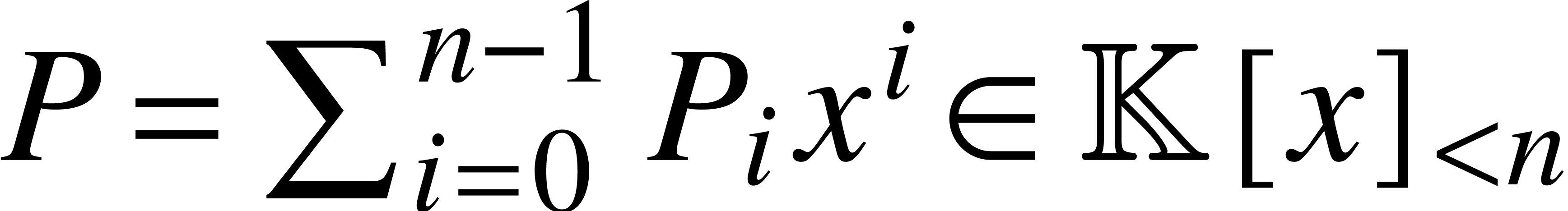

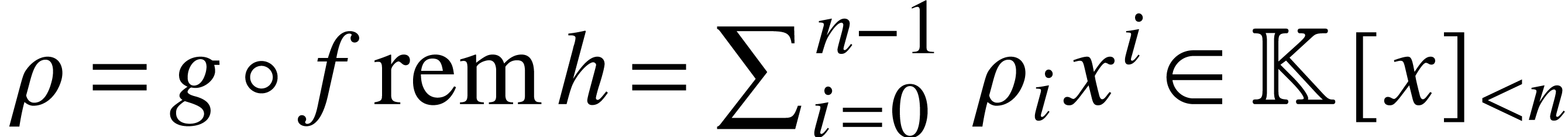

Let  be an effective field, and let

be an effective field, and let  be polynomials in

be polynomials in  .

The problem of modular composition is to compute

.

The problem of modular composition is to compute  modulo

modulo  .

Modular composition is an important problem in complexity theory because

of its applications to polynomial factorization [20–22]. It also occurs very naturally whenever one wishes to

perform polynomial computations over

.

Modular composition is an important problem in complexity theory because

of its applications to polynomial factorization [20–22]. It also occurs very naturally whenever one wishes to

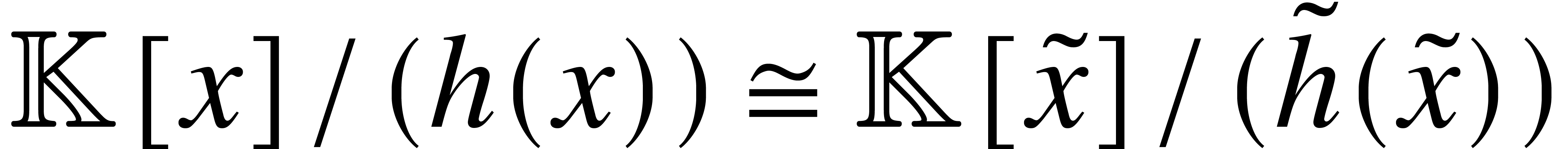

perform polynomial computations over  inside an

algebraic extension of

inside an

algebraic extension of  . In

addition, given two different representations

. In

addition, given two different representations  of

an algebraic extension of

of

an algebraic extension of  ,

the implementation of an explicit isomorphism actually boils down to

modular composition.

,

the implementation of an explicit isomorphism actually boils down to

modular composition.

Besides the bit complexity model from section 2.1, we will also need the algebraic complexity model, in which running times are analyzed in terms of the number of operations in some abstract ground ring or field [6, Chapter 4].

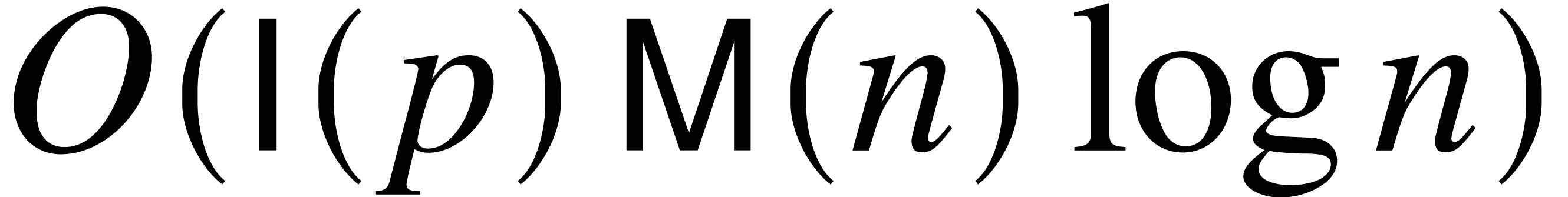

We write  for a cost function such that for any

effective field

for a cost function such that for any

effective field  and any two polynomials of

degree

and any two polynomials of

degree  in

in  ,

we can compute their product using at most

,

we can compute their product using at most  arithmetic operations in

arithmetic operations in  .

The fastest currently known algorithm [8] allows us to take

.

The fastest currently known algorithm [8] allows us to take

. As in the case of integer

multiplication, we assume that

. As in the case of integer

multiplication, we assume that  is

non-decreasing; this again implies that

is

non-decreasing; this again implies that  is

super-additive.

is

super-additive.

Given two polynomials  , we

define

, we

define  and

and  to be the

quotient and the remainder of the Euclidean division

of

to be the

quotient and the remainder of the Euclidean division

of  by

by  .

Both the quotient and the remainder can be computed using

.

Both the quotient and the remainder can be computed using  operations in

operations in  ,

if

,

if  and

and  have degrees

have degrees

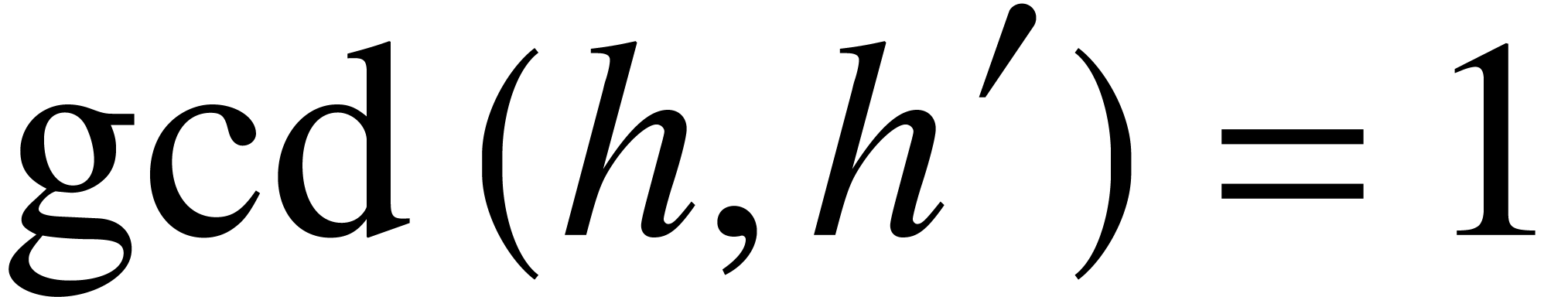

. We recall that the gcd of

two polynomials of degrees at most

. We recall that the gcd of

two polynomials of degrees at most  over

over  can be computed using

can be computed using  operations in

operations in  [9, Algorithm 11.4].

Given polynomials

[9, Algorithm 11.4].

Given polynomials  and

and  over

over  with

with  and

and  , all the remainders

, all the remainders  may be computed simultaneously in cost

may be computed simultaneously in cost  using a subproduct tree [9, Chapter 10]. The

inverse problem, called Chinese remaindering, can be solved

with a similar cost

using a subproduct tree [9, Chapter 10]. The

inverse problem, called Chinese remaindering, can be solved

with a similar cost  ,

assuming that the

,

assuming that the  are pairwise coprime. The

fastest known algorithms for these tasks can be found in [1,

3, 14].

are pairwise coprime. The

fastest known algorithms for these tasks can be found in [1,

3, 14].

Let  and

and  be polynomials

in

be polynomials

in  of respective degrees

of respective degrees  ,

,  and

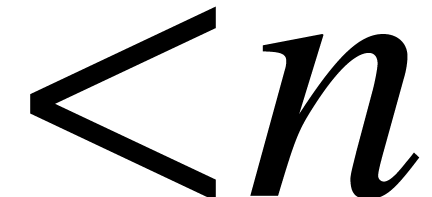

and  . The naive modular composition algorithm takes

. The naive modular composition algorithm takes  operations in

operations in  .

In 1978, Brent and Kung [4] gave an algorithm with cost

.

In 1978, Brent and Kung [4] gave an algorithm with cost

. It uses the baby-step

giant-step technique due to Paterson and Stockmeyer [29],

and even yields a sub-quadratic cost

. It uses the baby-step

giant-step technique due to Paterson and Stockmeyer [29],

and even yields a sub-quadratic cost  when using

fast linear algebra; see [19, p. 185]. The constant

when using

fast linear algebra; see [19, p. 185]. The constant  is such that a

is such that a  matrix over

matrix over

can be multiplied with another

can be multiplied with another  rectangular matrix in time

rectangular matrix in time  .

The best current bound

.

The best current bound  is due to Huang and Pan

[18, Theorem 10.1].

is due to Huang and Pan

[18, Theorem 10.1].

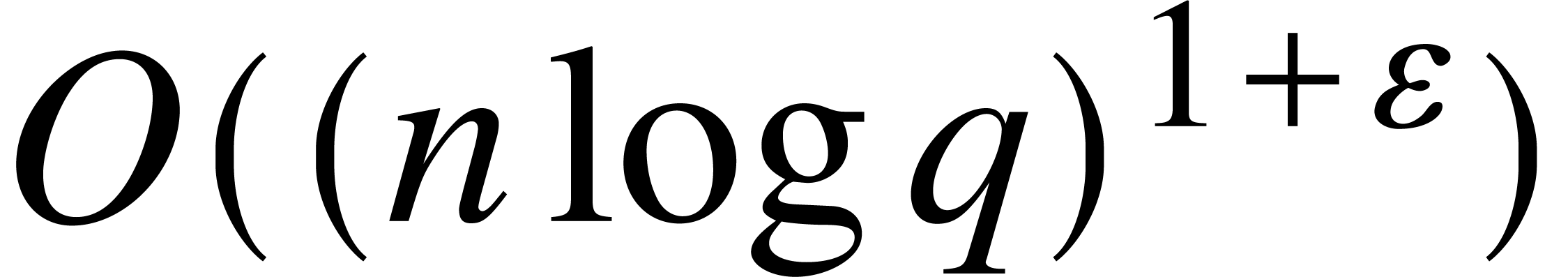

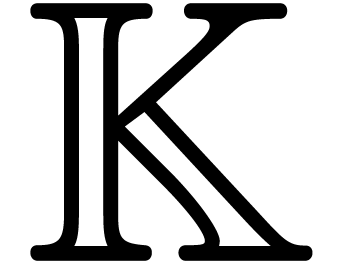

A major breakthrough has been achieved by Kedlaya and Umans [21,

22] in the case when  is the finite

field

is the finite

field  . For any positive

. For any positive

, they showed that the

composition

, they showed that the

composition  modulo

modulo  could

be computed with bit complexity

could

be computed with bit complexity  .

Unfortunately, it remains a major open problem to turn this theoretical

complexity bound into practically useful implementations.

.

Unfortunately, it remains a major open problem to turn this theoretical

complexity bound into practically useful implementations.

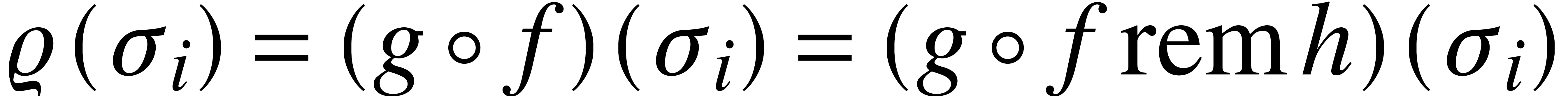

Quite surprisingly, the existing literature on modular composition does

not exploit the simple observation that composition modulo a separable

polynomial  that splits over

that splits over  can be reduced to the well known problems of multi-point evaluation and

interpolation [9, Chapter 10]. More precisely, assume that

can be reduced to the well known problems of multi-point evaluation and

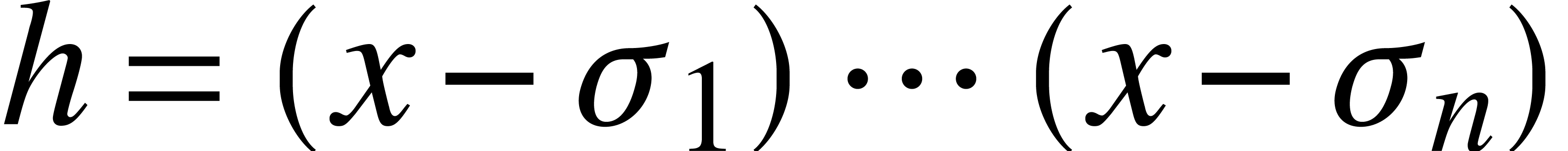

interpolation [9, Chapter 10]. More precisely, assume that

is separable, which means that

is separable, which means that  . If

. If  are of degree

are of degree

, then

, then  can be computed by evaluating

can be computed by evaluating  at

at  , by evaluating

, by evaluating  at

at

, and by interpolating the

evaluations

, and by interpolating the

evaluations  to yield

to yield  .

.

Whenever  is algebraically closed and a

factorization of

is algebraically closed and a

factorization of  is known, the latter

observation leads to a softly-optimal algorithm for composition modulo

is known, the latter

observation leads to a softly-optimal algorithm for composition modulo

. More generally, if the

computation of a factorization of

. More generally, if the

computation of a factorization of  has a

negligible or acceptable cost, then this approach leads to an efficient

method for modular composition. In this paper, we prove a precise

complexity result in the case when

has a

negligible or acceptable cost, then this approach leads to an efficient

method for modular composition. In this paper, we prove a precise

complexity result in the case when  is the field

of computable complex numbers. In a separate paper [16], we

also consider the case when

is the field

of computable complex numbers. In a separate paper [16], we

also consider the case when  is a finite field

and

is a finite field

and  has composite degree; in that case,

has composite degree; in that case,  can be factored over suitable field extensions, and

similar ideas lead to improved complexity bounds.

can be factored over suitable field extensions, and

similar ideas lead to improved complexity bounds.

In the special case of power series composition (i.e.

composition modulo  ), our

approach is similar in spirit to the analytic algorithm designed by

Ritzmann [30]; see also [12]. In order to keep

the exposition as simple as possible in this paper, we only study

composition modulo separable polynomials. By handling multiplicities

with Ritzmann's algorithm, we expect our algorithm to extend to the

general case.

), our

approach is similar in spirit to the analytic algorithm designed by

Ritzmann [30]; see also [12]. In order to keep

the exposition as simple as possible in this paper, we only study

composition modulo separable polynomials. By handling multiplicities

with Ritzmann's algorithm, we expect our algorithm to extend to the

general case.

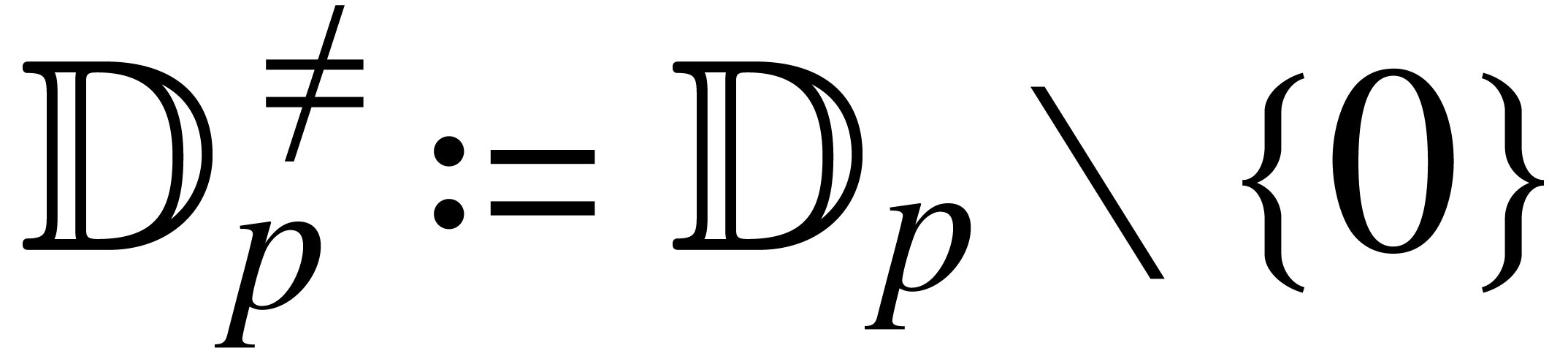

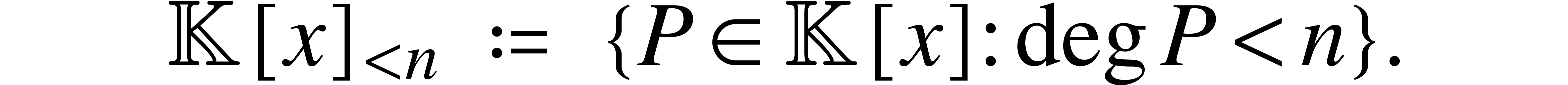

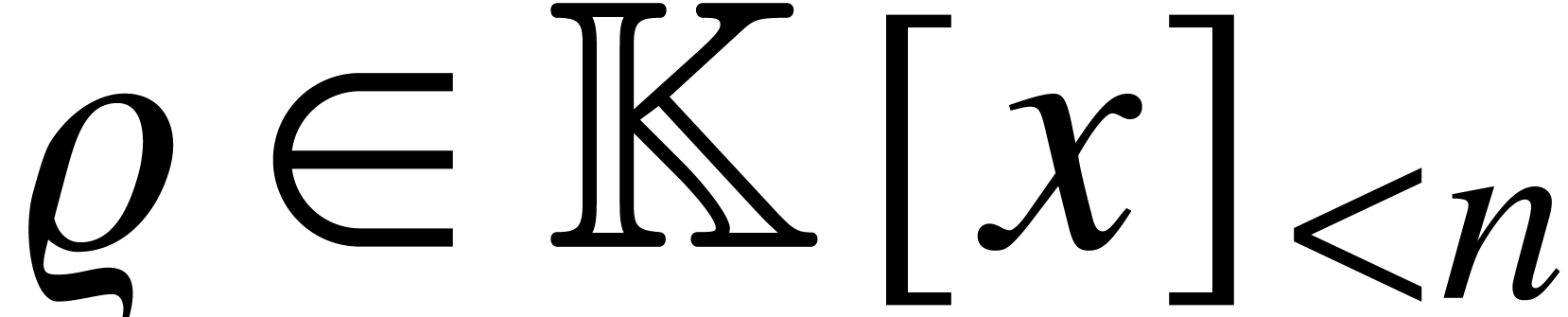

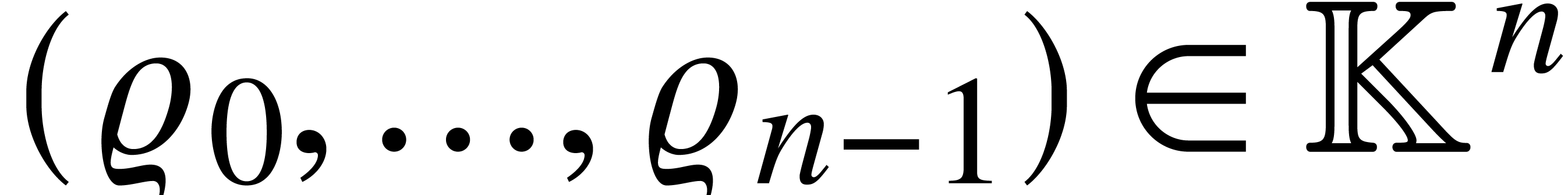

For any field  and

and  ,

we denote

,

we denote

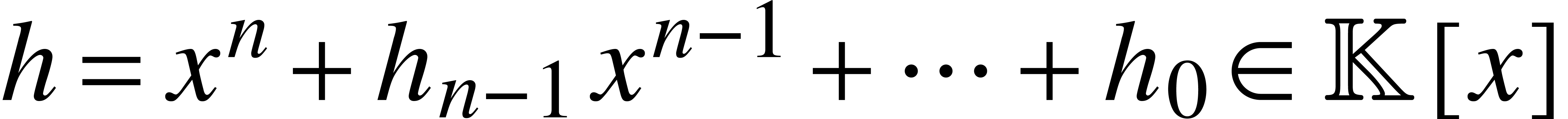

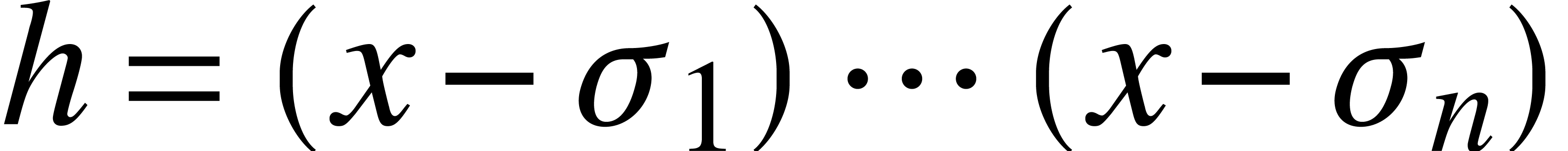

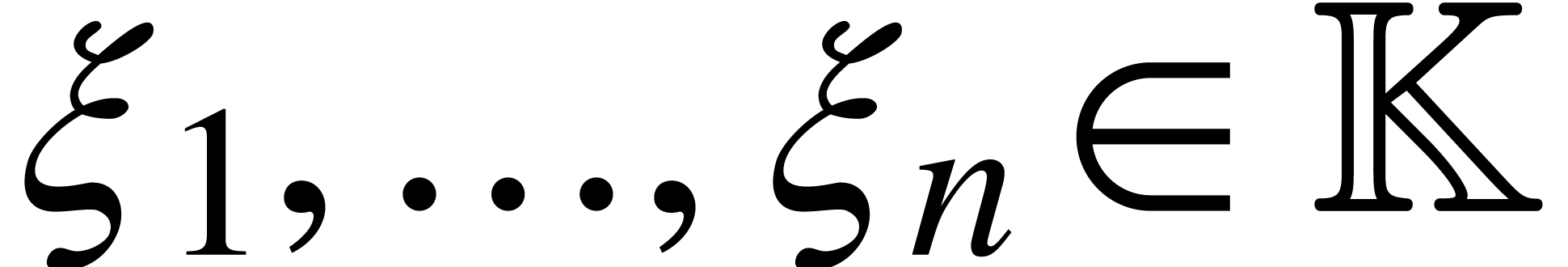

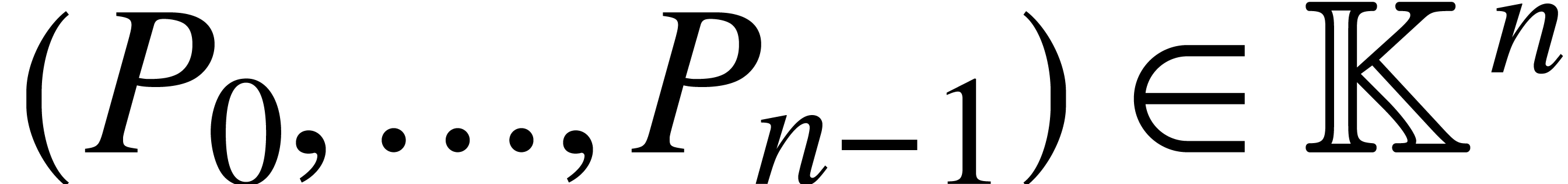

In this section,  represents an abstract

algebraically closed field of constants. Let

represents an abstract

algebraically closed field of constants. Let  be

a separable monic polynomial, so

be

a separable monic polynomial, so  admits

admits  pairwise distinct roots

pairwise distinct roots  in

in

. Then we may use the

following algorithm for composition modulo

. Then we may use the

following algorithm for composition modulo  :

:

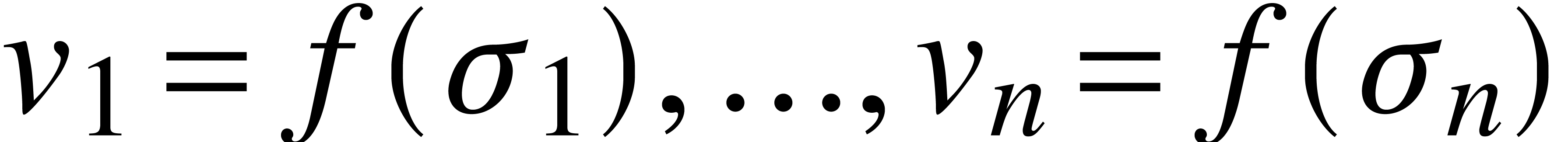

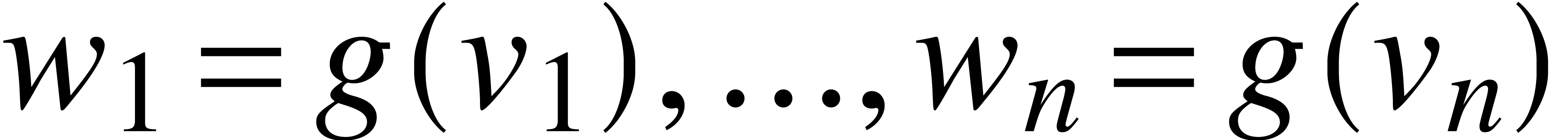

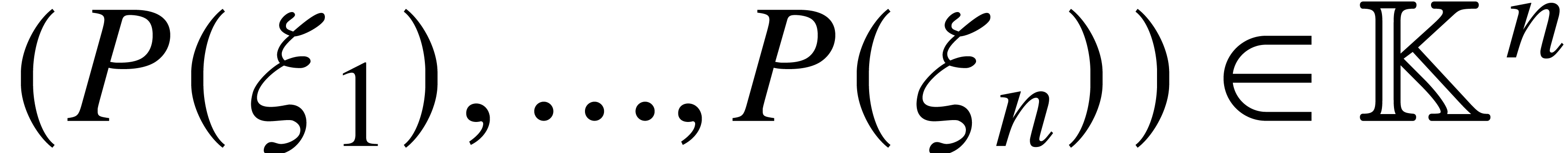

Algorithm

Compute  using fast multi-point

evaluation.

using fast multi-point

evaluation.

Compute  using fast multi-point

evaluation.

using fast multi-point

evaluation.

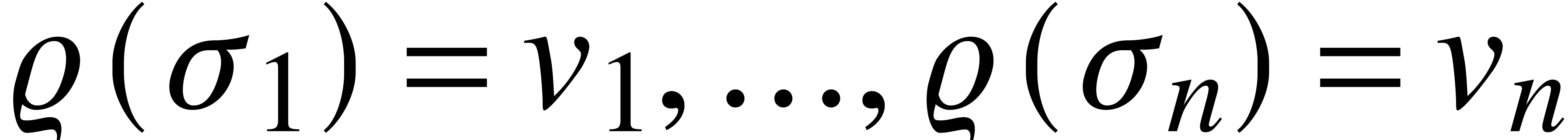

Retrieve  with

with  using fast interpolation.

using fast interpolation.

Return  .

.

operations in

operations in  .

.

Proof. By construction,  for

for  . Since

. Since  and the

and the  are pairwise distinct, it follows that

are pairwise distinct, it follows that

. This proves the correctness

of the algorithm. The complexity bound follows from the fact that steps

1, 2 and 3 take

. This proves the correctness

of the algorithm. The complexity bound follows from the fact that steps

1, 2 and 3 take  operations in

operations in  .

.

We wish to apply the theorem in the case when  . Of course, on a Turing machine, we can only

approximate complex numbers with arbitrarily high precision, and

likewise for the field operations in

. Of course, on a Turing machine, we can only

approximate complex numbers with arbitrarily high precision, and

likewise for the field operations in  .

For given numbers

.

For given numbers  and

and  , approximations at precision

, approximations at precision  for

for  ,

,  ,

,  and

and  (whenever

(whenever  ) can all be

computed in time

) can all be

computed in time  . In view of

Theorem 8, it is therefore natural to ask whether

. In view of

Theorem 8, it is therefore natural to ask whether  -bit approximations of the

coefficients of

-bit approximations of the

coefficients of  may be computed in time

may be computed in time  .

.

In the remainder of this paper we give a positive answer to a carefully

formulated version of this question. First we will prove a complexity

bound for modular composition that holds for a fixed modulus  with known roots

with known roots  and for

sufficiently large working precisions

and for

sufficiently large working precisions  .

Then we will show that the assumption that the roots

.

Then we will show that the assumption that the roots  of

of  are known is actually quite harmless for

ultimate complexity for the following reason: as soon as approximations

for

are known is actually quite harmless for

ultimate complexity for the following reason: as soon as approximations

for  are known at a sufficiently high precision,

the computation of even better approximations can be done fast using

Newton's method combined with multi-point evaluation. Since we are only

interested in the complexity for “sufficiently large working

precisions”, the computation of the initial approximations of

are known at a sufficiently high precision,

the computation of even better approximations can be done fast using

Newton's method combined with multi-point evaluation. Since we are only

interested in the complexity for “sufficiently large working

precisions”, the computation of the initial approximations of  can therefore be regarded as a precomputation of

negligible cost.

can therefore be regarded as a precomputation of

negligible cost.

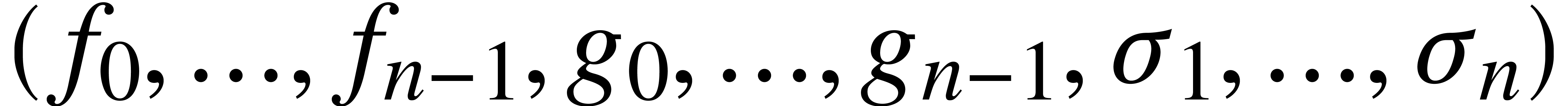

such that the following

assertion holds. Let

such that the following

assertion holds. Let  , let

, let

be pairwise distinct elements of

be pairwise distinct elements of  , and let

, and let  .

Assume that

.

Assume that  has ultimate complexity

has ultimate complexity  . Then

. Then  has ultimate complexity

has ultimate complexity  .

.

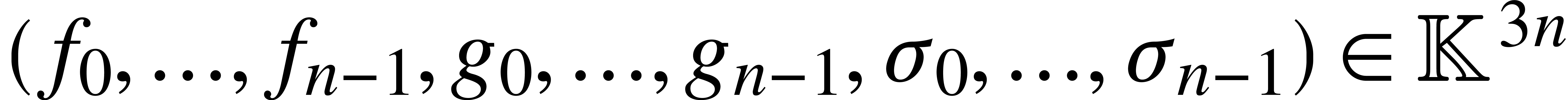

Proof. The algorithm for fast multi-point

evaluation of a polynomial  at

at  can be regarded as an SLP over

can be regarded as an SLP over  of length

of length  that takes

that takes  on input and that

produces

on input and that

produces  on output. Similarly, the algorithm for

interpolation can be regarded as an SLP over

on output. Similarly, the algorithm for

interpolation can be regarded as an SLP over  of

length

of

length  that takes

that takes  on

input and that produces

on

input and that produces  on output with

on output with  . Altogether, we may regard the

entire Algorithm 1 as an SLP

. Altogether, we may regard the

entire Algorithm 1 as an SLP  over

over

of length

of length  that takes

that takes

on input and that produces

on input and that produces  on output with

on output with  . It follows

from Corollary 7 that

. It follows

from Corollary 7 that  admits a ball

lift

admits a ball

lift  of ultimate complexity

of ultimate complexity  . The conclusion now follows from Proposition

4.

. The conclusion now follows from Proposition

4.

According to the above lemma, we notice that the time complexity for

computing  is

is  for some

constant

for some

constant  that depends on

that depends on  ,

,

, and the

, and the  .

.

such that the following

assertion holds. Let

such that the following

assertion holds. Let  be separable and monic of

degree

be separable and monic of

degree  , and denote the

roots of

, and denote the

roots of  by

by  .

If

.

If  has ultimate complexity

has ultimate complexity  , then

, then  has ultimate

complexity

has ultimate

complexity  .

.

Proof. There are many algorithms for the

certified computation of the roots of a separable complex polynomial. We

may use any of these algorithms as a “fall back” algorithm

in the case that we only need a  -approximation

of

-approximation

of  at a low precision

at a low precision  determined by

determined by  only.

only.

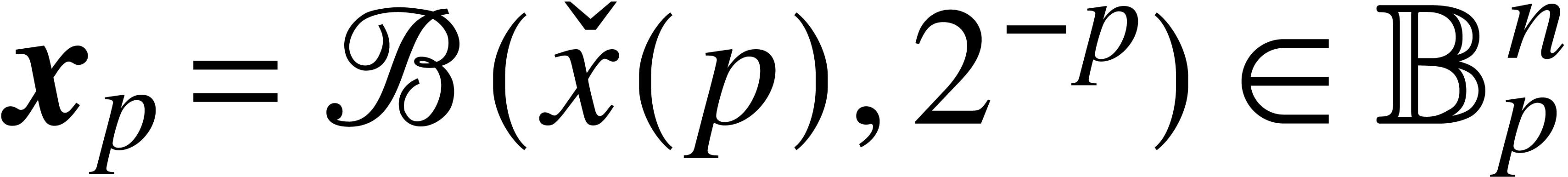

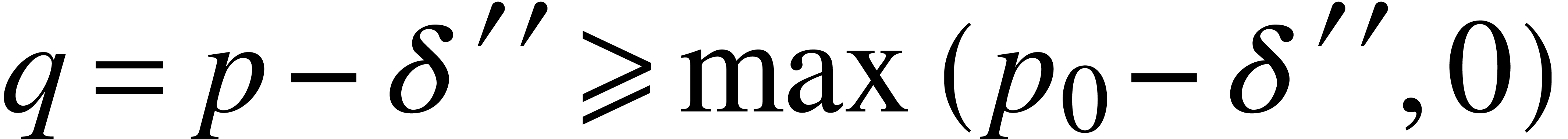

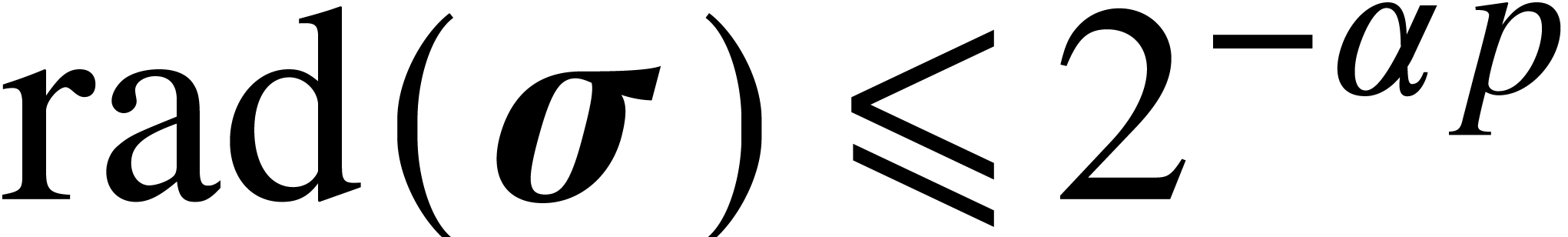

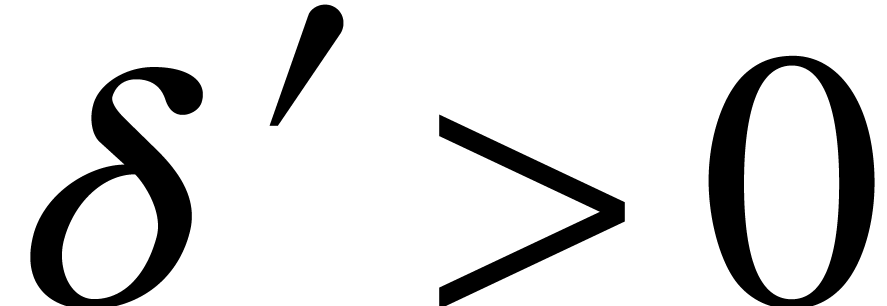

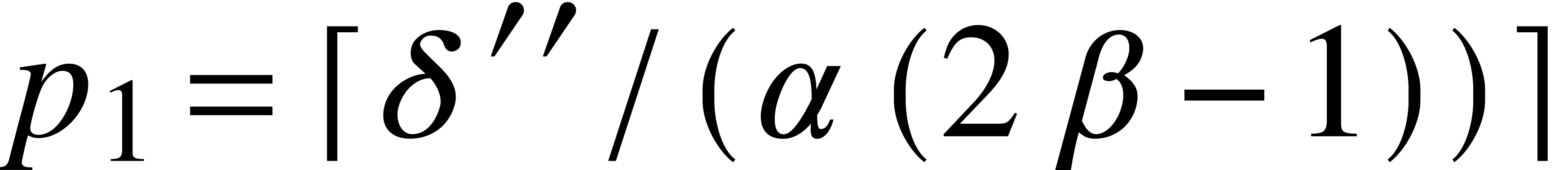

For general precisions  , we

use the following strategy in order to compute a ball

, we

use the following strategy in order to compute a ball  with

with  and

and  for some fixed

threshold

for some fixed

threshold  . For some suitable

. For some suitable

and

and  ,

we use the fall back algorithm. For

,

we use the fall back algorithm. For  and for a

second fixed constant

and for a

second fixed constant  , we

first compute a ball enclosure

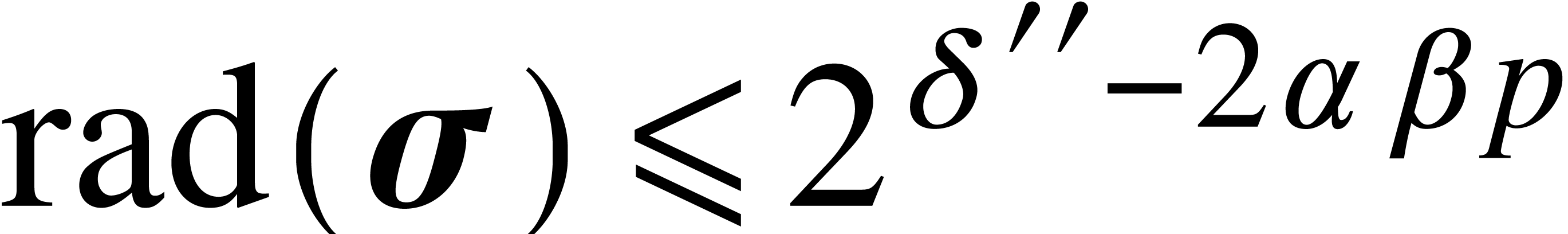

, we

first compute a ball enclosure  at the lower

precision

at the lower

precision  using a recursive application of the

method. We next compute

using a recursive application of the

method. We next compute  using a ball version of

the Newton iteration, as explained below. If this yields a ball

using a ball version of

the Newton iteration, as explained below. If this yields a ball  with acceptable radius

with acceptable radius  ,

then we are done. Otherwise, we resort to our fall-back method. Such

calls of the fall-back method only occur if the default threshold

precision

,

then we are done. Otherwise, we resort to our fall-back method. Such

calls of the fall-back method only occur if the default threshold

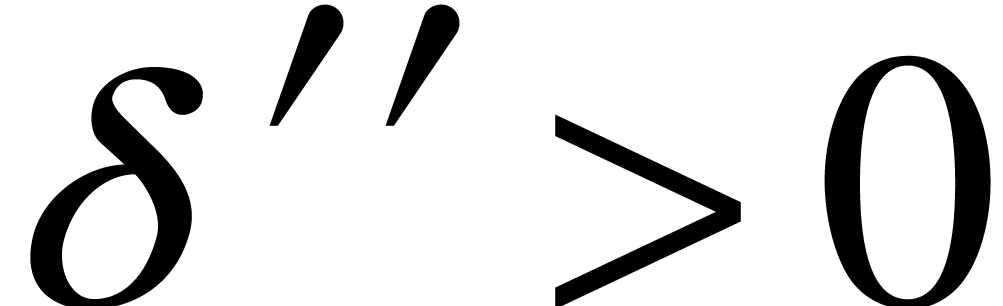

precision  was chosen too low. Nevertheless, we

will show that there exists a threshold

was chosen too low. Nevertheless, we

will show that there exists a threshold  such

that the computed

such

that the computed  by the Newton iteration always

satisfies

by the Newton iteration always

satisfies  for

for  .

.

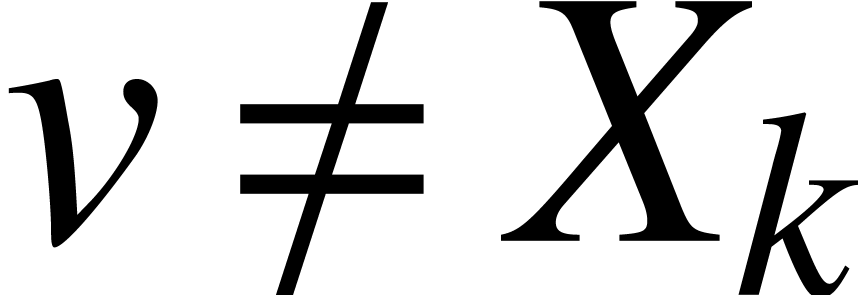

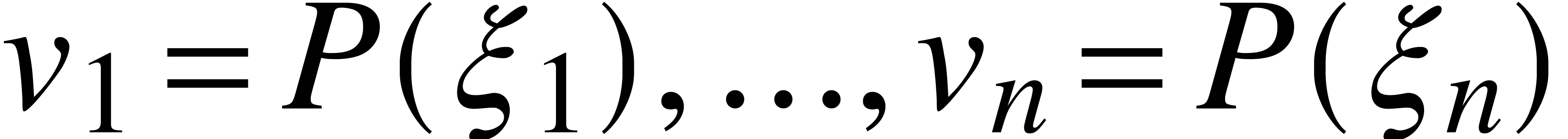

Let us detail how we perform our ball version of the Newton iteration.

Recall that  with

with  and

and

is given. We also assume that we computed once

and for all a

is given. We also assume that we computed once

and for all a  -approximation

of

-approximation

of  , in the form of a ball

polynomial

, in the form of a ball

polynomial  of radius

of radius  that contains

that contains  . Now we

evaluate

. Now we

evaluate  and

and  at each of

the points

at each of

the points  using fast multi-point evaluation.

Let us denote the results by

using fast multi-point evaluation.

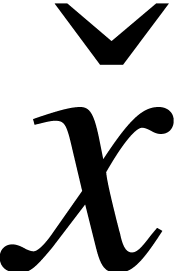

Let us denote the results by  and

and  . Let

. Let  ,

,

and

and  denote the balls

with radius zero and whose centers are the same as for

denote the balls

with radius zero and whose centers are the same as for  ,

,  and

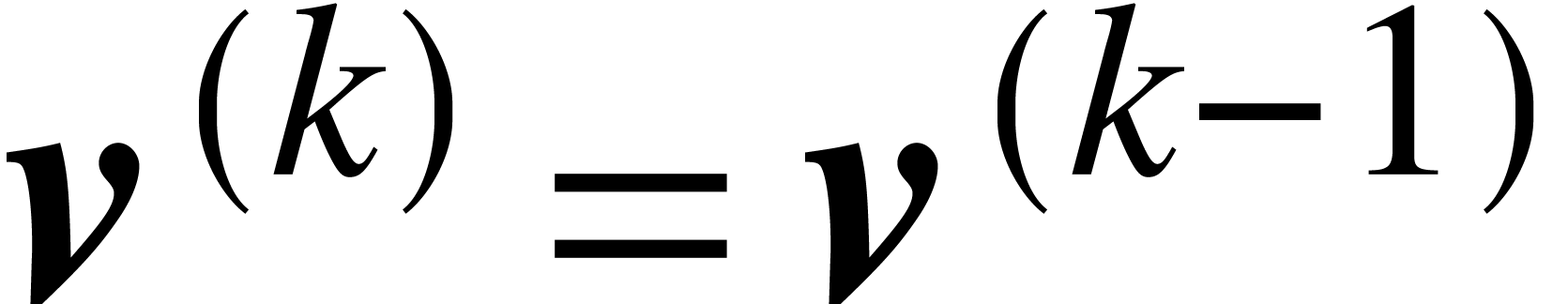

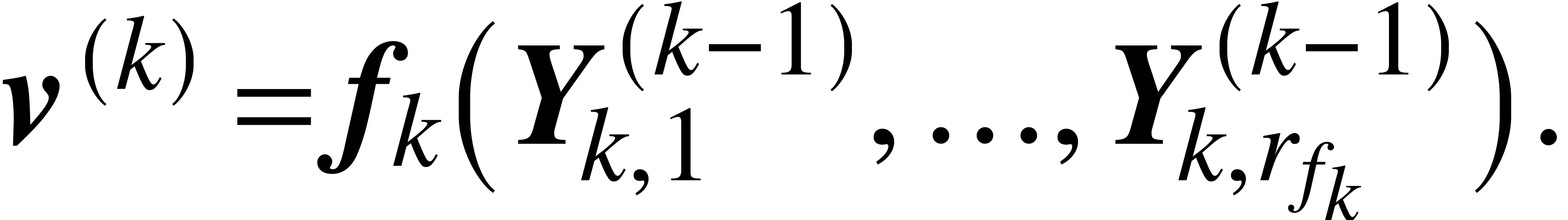

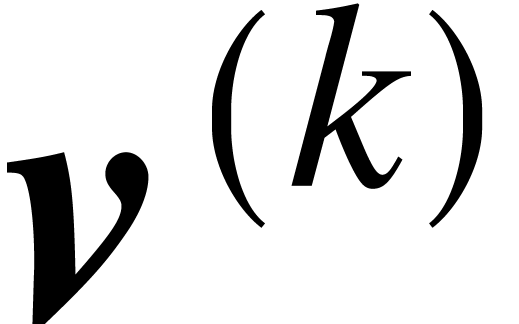

and  . Using vector notation, the Newton iteration

now becomes:

. Using vector notation, the Newton iteration

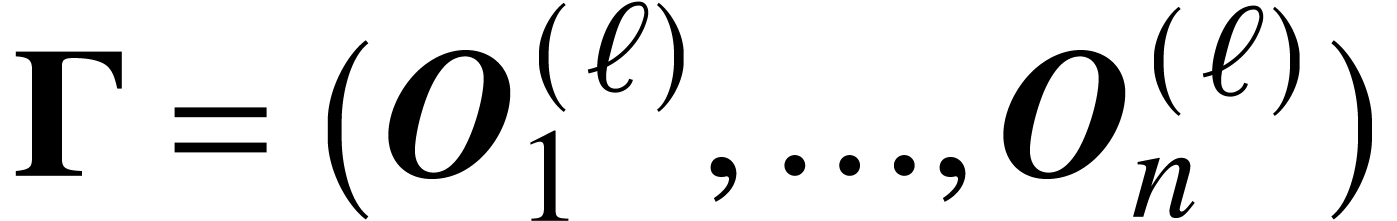

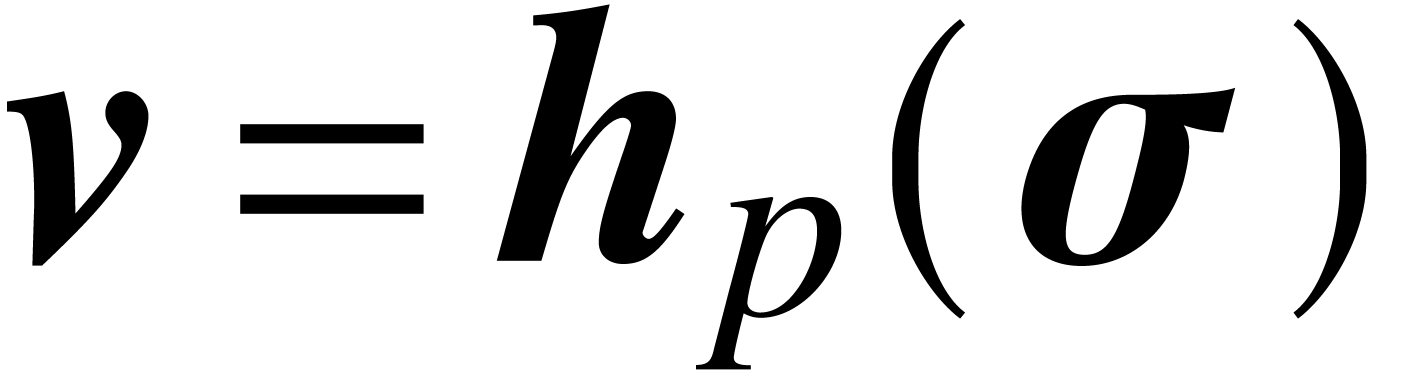

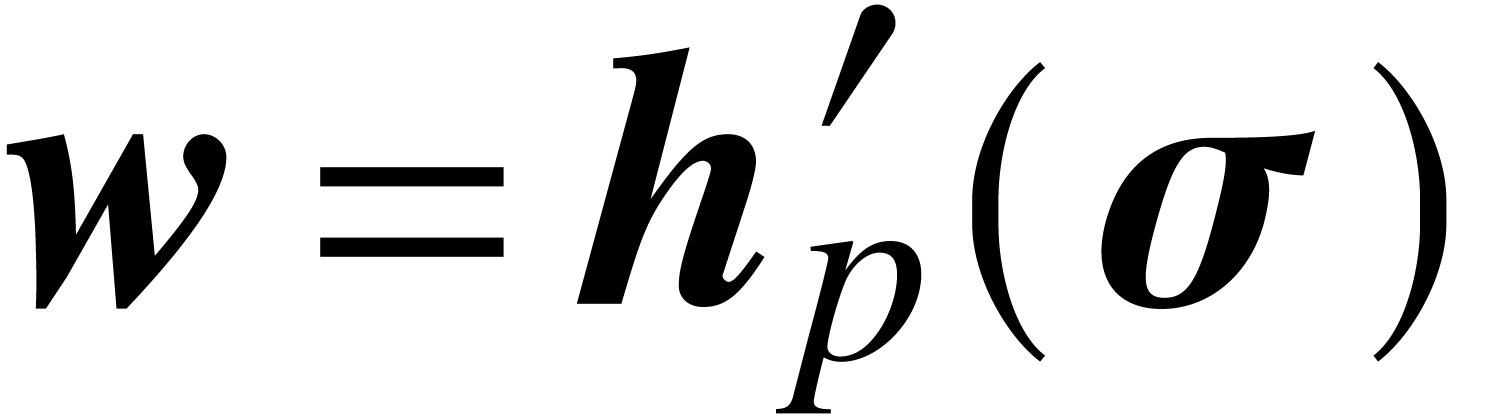

now becomes:

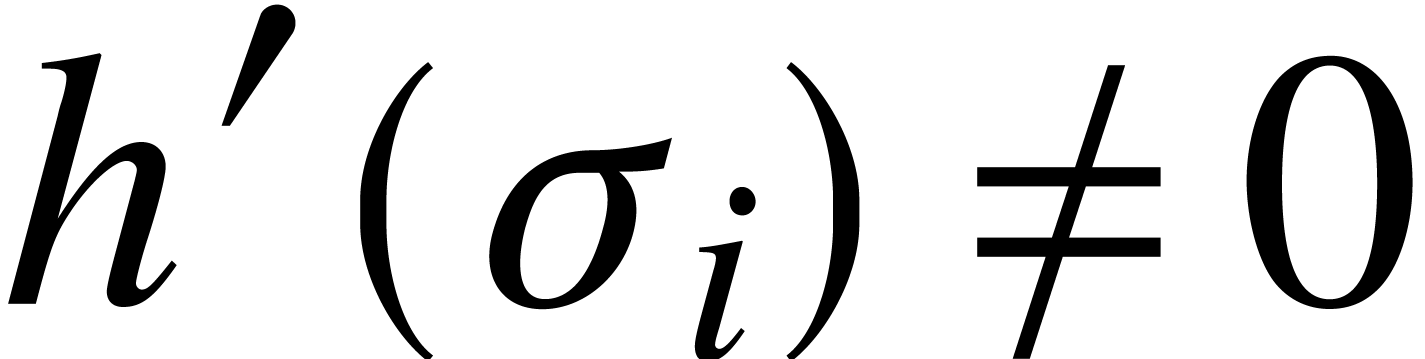

If  , then it is well-known

[23, 31] that

, then it is well-known

[23, 31] that  .

Since

.

Since  , the fact that

multi-point ball evaluation (used for

, the fact that

multi-point ball evaluation (used for  and

and  ) is locally Lipschitz implies the

existence of a constant

) is locally Lipschitz implies the

existence of a constant  with

with  and

and  . Since

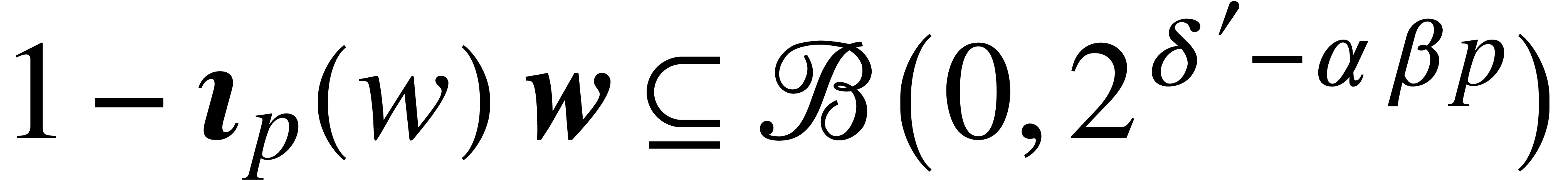

. Since  for

for  , there also exists a

constant

, there also exists a

constant  with

with  .

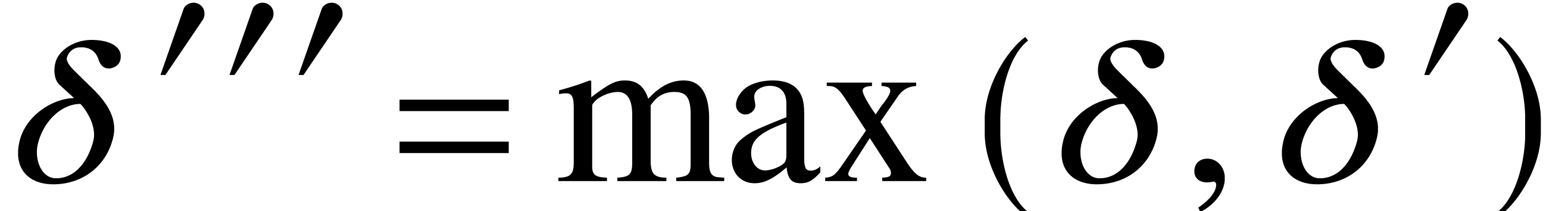

Altogether, this means that there exists a constant

.

Altogether, this means that there exists a constant  with

with  . Let

. Let  . Then for any

. Then for any  ,

the Newton iteration provides us with a

,

the Newton iteration provides us with a  with

with

.

.

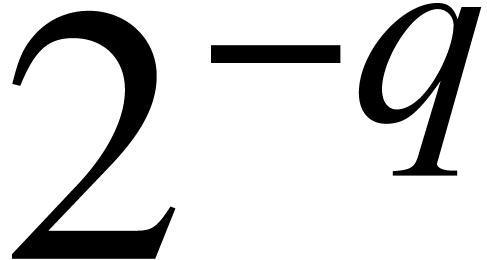

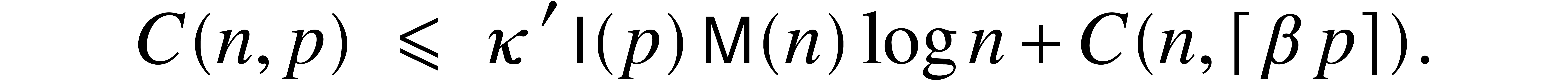

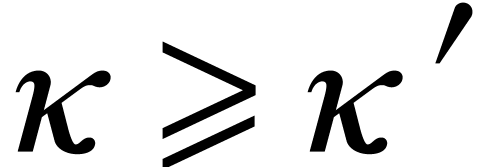

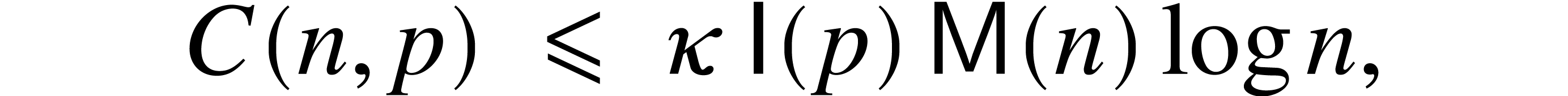

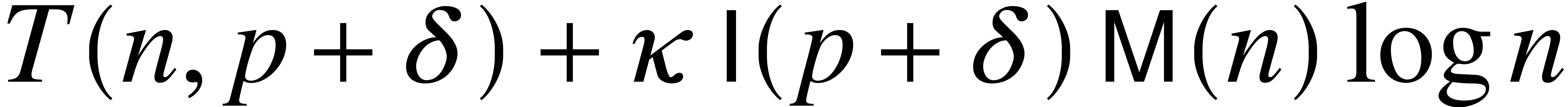

Let us now analyze the ultimate complexity  of

our algorithm. For large

of

our algorithm. For large  ,

the algorithm essentially performs two multi-point evaluations of

ultimate cost

,

the algorithm essentially performs two multi-point evaluations of

ultimate cost  for some constant

for some constant  that does not depend on

that does not depend on  , and

a recursive call. Consequently,

, and

a recursive call. Consequently,

We finally obtain an other constant  such that

such that

by summing up the geometric progression and using the fact that  is nondecreasing. The conclusion now follows from

Lemma 4.

is nondecreasing. The conclusion now follows from

Lemma 4.

Remark  at which the Newton iteration can safely be used does not need to be

known in advance. In particular, the proof does not require any a

priori knowledge about the Lipschitz constants.

at which the Newton iteration can safely be used does not need to be

known in advance. In particular, the proof does not require any a

priori knowledge about the Lipschitz constants.

such that the

following assertion holds. Let

such that the

following assertion holds. Let  and let

and let  be separable and monic of degree

be separable and monic of degree  . Assume that

. Assume that  has

ultimate complexity

has

ultimate complexity  . Then

. Then

has ultimate complexity

has ultimate complexity  .

.

Proof. This is an immediate consequence of the

combination of the two above lemmas.

One disadvantage of ultimate complexity is that it does not provide us

with any information about the precision from which the ultimate

complexity is reached. In practical applications, the input polynomials

and

and  often admit integer

or rational coefficients. In these cases, the required bit precision is

expected to be of order

often admit integer

or rational coefficients. In these cases, the required bit precision is

expected to be of order  in the worst case, where

in the worst case, where

and

and  is the largest bit

size of the coefficients: in fact, this precision allows to compute all

the complex roots of

is the largest bit

size of the coefficients: in fact, this precision allows to compute all

the complex roots of  efficiently using

algorithms from [26, 27, 32].

This precision should also be sufficient to perform the multi-point

polynomial evaluations of

efficiently using

algorithms from [26, 27, 32].

This precision should also be sufficient to perform the multi-point

polynomial evaluations of  and

and  by asymptotically fast algorithms.

by asymptotically fast algorithms.

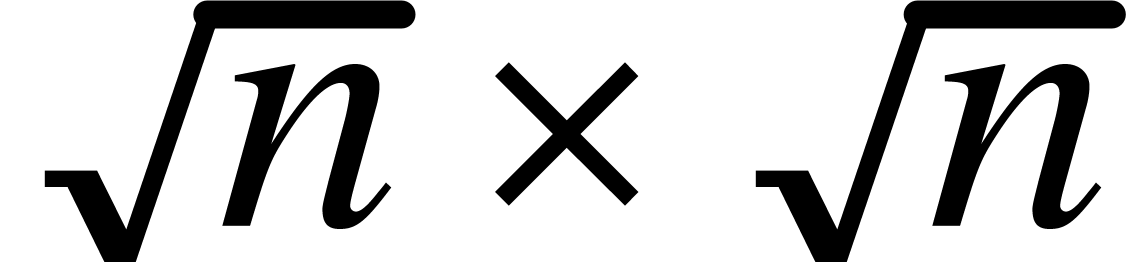

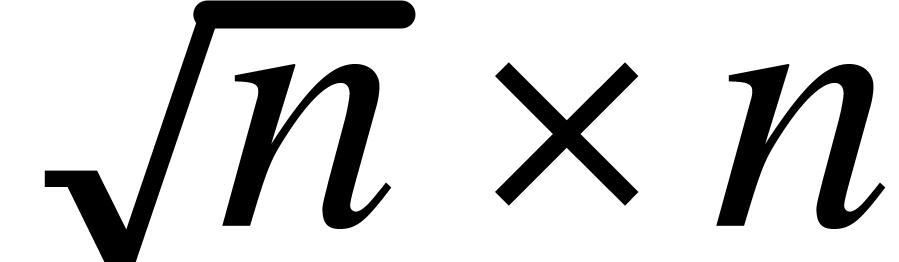

With some more work, we expect that all above bounds of the form  can be lowered to

can be lowered to  .

Notice that

.

Notice that  for

for  ,

when taking

,

when taking  . In order to

prove this stronger bound using our framework, one might add an

auxiliary operation

. In order to

prove this stronger bound using our framework, one might add an

auxiliary operation  for the product of two

polynomials of degrees

for the product of two

polynomials of degrees  to the set of signatures

to the set of signatures

. Polynomial products of this

kind can be implemented for coefficients in

. Polynomial products of this

kind can be implemented for coefficients in  with

with

using Kronecker substitution. For bounded

coefficients, this technique allows for the computation of one such

product in time

using Kronecker substitution. For bounded

coefficients, this technique allows for the computation of one such

product in time  . By using

Theorem 6, a standard complexity analysis should show that

multi-point evaluation and interpolation have ultimate complexity

. By using

Theorem 6, a standard complexity analysis should show that

multi-point evaluation and interpolation have ultimate complexity  .

.

By Theorem 12, the actual bit complexity of modular

composition is of the form  for some value of

for some value of

that depends on

that depends on  (hence

of

(hence

of  ). An interesting problem

is to get a better grip on this value

). An interesting problem

is to get a better grip on this value  ,

which mainly depends on the geometric proximity of the roots of

,

which mainly depends on the geometric proximity of the roots of  .

.

If  belong to

belong to  ,

then

,

then  and we may wish to bound

and we may wish to bound  as a function of

as a function of  and the maximum bit size

and the maximum bit size  of the coefficients of

of the coefficients of  ,

,

and

and  .

This would involve bit complexity results for root isolation [26,

27, 32], for multi-point evaluation, and for

interpolation. The overall complexity should then be compared with the

maximal size of the output, namely

.

This would involve bit complexity results for root isolation [26,

27, 32], for multi-point evaluation, and for

interpolation. The overall complexity should then be compared with the

maximal size of the output, namely  ,

which is in general much larger than the input size. Here, the ultimate

complexity model therefore offers a convenient trade-off between a fine

asymptotic complexity bound and a long technical complexity analysis.

,

which is in general much larger than the input size. Here, the ultimate

complexity model therefore offers a convenient trade-off between a fine

asymptotic complexity bound and a long technical complexity analysis.

D. Bernstein. Scaled remainder trees. Available from https://cr.yp.to/arith/scaledmod-20040820.pdf, 2004.

L. Blum, F. Cucker, M. Shub, and S. Smale. Complexity and real computation. Springer-Verlag New York, 1998.

A. Bostan, G. Lecerf, and É. Schost. Tellegen's principle into practice. In Proceedings of the 2003 International Symposium on Symbolic and Algebraic Computation, ISSAC '03, pages 37–44, New York, NY, USA, 2003. ACM.

R. P. Brent and H. T. Kung. Fast algorithms for manipulating formal power series. J. ACM, 25(4):581–595, 1978.

R. P. Brent and P. Zimmermann. Modern computer arithmetic, volume 18 of Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, 2010.

P. Bürgisser, M. Clausen, and M. A. Shokrollahi. Algebraic complexity theory, volume 315 of Grundlehren der Mathematischen Wissenschaften. Springer-Verlag, 1997.

P. Bürgisser and F. Cucker. Condition. The Geometry of Numerical Algorithms, volume 349 of Grundlehren der mathematischen Wissenschaften. Springer-Verlag Berlin Heidelberg, 2013.

D. G. Cantor and E. Kaltofen. On fast multiplication of polynomials over arbitrary algebras. Acta Infor., 28(7):693–701, 1991.

J. von zur Gathen and J. Gerhard. Modern Computer Algebra. Cambridge University Press, New York, NY, USA, 3rd edition, 2013.

D. Harvey and J. van der Hoeven. On the complexity of integer matrix multiplication. J. Symbolic Comput., 89:1–8, 2018.

D. Harvey and J. van der Hoeven. Integer

multiplication in time  .

Technical report, HAL, 2019. http://hal.archives-ouvertes.fr/hal-02070778.

.

Technical report, HAL, 2019. http://hal.archives-ouvertes.fr/hal-02070778.

J. van der Hoeven. GNU TeXmacs User Manual, 1998. Available from http://www.texmacs.org.

J. van der Hoeven. Fast composition of numeric power series. Technical Report 2008-09, Université Paris-Sud, Orsay, France, 2008.

J. van der Hoeven. Ball arithmetic. Technical report, CNRS & École polytechnique, 2011. https://hal.archives-ouvertes.fr/hal-00432152/.

J. van der Hoeven. Faster Chinese remaindering. Technical report, CNRS & École polytechnique, 2016. http://hal.archives-ouvertes.fr/hal-01403810.

J. van der Hoeven et al. GNU TeXmacs. http://www.texmacs.org, 1998.

J. van der Hoeven and G. Lecerf. Modular composition via factorization. J. Complexity, 48:36–68, 2018.

J. van der Hoeven and G. Lecerf. Fast multivariate multi-point evaluation revisited. J. Complexity, 56:101405, 2020.

Xiaohan Huang and V. Y. Pan. Fast rectangular matrix multiplication and applications. J. Complexity, 14(2):257–299, 1998.

E. Kaltofen and V. Shoup. Fast polynomial factorization over high algebraic extensions of finite fields. In Proceedings of the 1997 International Symposium on Symbolic and Algebraic Computation, ISSAC '97, pages 184–188, New York, NY, USA, 1997. ACM.

E. Kaltofen and V. Shoup. Subquadratic-time factoring of polynomials over finite fields. Math. Comp., 67(223):1179–1197, 1998.

K. S. Kedlaya and C. Umans. Fast modular composition in any characteristic. In FOCS'08: IEEE Conference on Foundations of Computer Science, pages 146–155, Washington, DC, USA, 2008. IEEE Computer Society.

K. S. Kedlaya and C. Umans. Fast polynomial factorization and modular composition. SIAM J. Comput., 40(6):1767–1802, 2011.

R. Krawczyk. Newton-Algorithmen zur Bestimmung von Nullstellen mit Fehler-schranken. Computing, 4:187–201, 1969.

F. Le Gall. Powers of tensors and fast matrix multiplication. In Proceedings of the 39th International Symposium on Symbolic and Algebraic Computation, ISSAC '14, pages 296–303, New York, NY, USA, 2014. ACM.

J.-M. Muller, N. Brunie, F. De Dinechin, C.-P. Jeannerod, M. Joldes, V. Lefèvre, G. Melquiond, N. Revol, and S. Torres. Handbook of floating-point arithmetic. Birkhäuser Basel, 2nd edition, 2018.

C. A. Neff and J. H. Reif. An efficient algorithm for the complex roots problem. J. Complexity, 12(2):81–115, 1996.

V. Y. Pan. Univariate polynomials: nearly optimal algorithms for numerical factorization and root-finding. J. Symbolic Comput., 33(5):701–733, 2002.

C. H. Papadimitriou. Computational Complexity. Addison-Wesley, 1994.

M. S. Paterson and L. J. Stockmeyer. On the number of nonscalar multiplications necessary to evaluate polynomials. SIAM J.Comput., 2(1):60–66, 1973.

P. Ritzmann. A fast numerical algorithm for the composition of power series with complex coefficients. Theoret. Comput. Sci., 44:1–16, 1986.

S. M. Rump. Kleine Fehlerschranken bei Matrixproblemen. PhD thesis, Universität Karlsruhe, 1980.

A. Schönhage. The fundamental theorem of algebra in terms of computational complexity. Technical report, Preliminary Report of Mathematisches Institut der Universität Tübingen, Germany, 1982.